Calculating multiple regression


what are the beta values of each predictor tested for

significance

in multiple linear regression what is computed

multiple R and R²

In multiple regression when all predictors are tested simultaneously..

each beta has been adjusted for every other predictor in the regression model

what does beta represent

the independent relationship between the predictor and y

in multiple linear regression, how are the relationships between multiple predictors and y tested

they are tested simultaneously with a series of matrix algebra calculations

basically, we can't do it ourselves
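As a rough illustration of the matrix algebra that software performs, the sketch below estimates the intercept and betas by ordinary least squares with NumPy. The function name `fit_multiple_regression` and the data are made up for this example; it is a minimal sketch, not a replacement for a statistical package, which also reports significance tests.

```python
import numpy as np

def fit_multiple_regression(X, y):
    """Return (intercept a, array of betas) estimated by ordinary least squares."""
    X = np.asarray(X, dtype=float)
    y = np.asarray(y, dtype=float)
    # Add a column of ones so the intercept is estimated together with the slopes.
    design = np.column_stack([np.ones(len(X)), X])
    # Least-squares solution; equivalent to (X'X)^-1 X'y when X'X is invertible.
    coef, *_ = np.linalg.lstsq(design, y, rcond=None)
    return coef[0], coef[1:]

# Made-up data generated exactly from y = 2*x1 + 3*x2 + 1 (no error term).
X = [[1, 2], [2, 1], [3, 4], [4, 3], [5, 5]]
y = [2 * x1 + 3 * x2 + 1 for x1, x2 in X]

a, betas = fit_multiple_regression(X, y)
print("intercept:", a)
print("betas:", betas)
```

Because both predictors enter the same calculation, each estimated beta is already adjusted for the other predictor.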

how is multiple linear regression best conducted

using a statistical software package

in multiple linear regression the dependent variable must be measured as

continuous or measured at interval or ratio level

multiple linear regression assumptions

1. Normal Distribution of the dependent variable

2. Linear relationship between x and y

3. Independent observations

4. Homoscedasticity

5. Interval or ratio measurement of the dependent variable (or a converted Likert scale)

Multiple Linear Regression Equation

y = β1x1 + β2x2 + β3x3 + ... + a

Multiple Linear Regression Equation components

• y = the dependent variable

• x1, x2, x3 = independent variables

• β1, β2, β3 = the slopes of the line for each predictor

• a = y intercept
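To make the components concrete, here is a small sketch that plugs values into the equation and then computes R² and multiple R from observed and predicted scores. The function names and all numbers are hypothetical. Taking multiple R as the square root of R² assumes the predictions come from a least-squares fit that includes an intercept.

```python
import math

def predict(betas, a, xs):
    """Evaluate y = β1x1 + β2x2 + ... + a for one case."""
    return sum(b * x for b, x in zip(betas, xs)) + a

def r_squared(y_obs, y_pred):
    """Proportion of variance in y accounted for by the predictions."""
    mean_y = sum(y_obs) / len(y_obs)
    ss_res = sum((o - p) ** 2 for o, p in zip(y_obs, y_pred))
    ss_tot = sum((o - mean_y) ** 2 for o in y_obs)
    return 1 - ss_res / ss_tot

# Hypothetical slopes and intercept: β1 = 2.0, β2 = 0.5, a = 1.0
y_hat = predict([2.0, 0.5], 1.0, [3, 4])  # 2.0*3 + 0.5*4 + 1.0

# Hypothetical observed and predicted values for three cases
r2 = r_squared([1, 2, 3], [1.5, 2.0, 2.5])
multiple_r = math.sqrt(r2)
print(y_hat, r2, multiple_r)
```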

what happens if the data for the predictor and dependent variable are not homoscedastic

the inferences made could be invalid

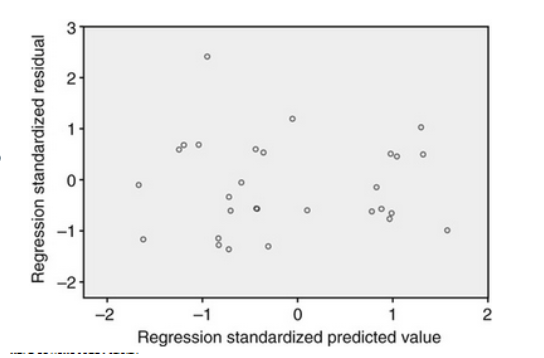

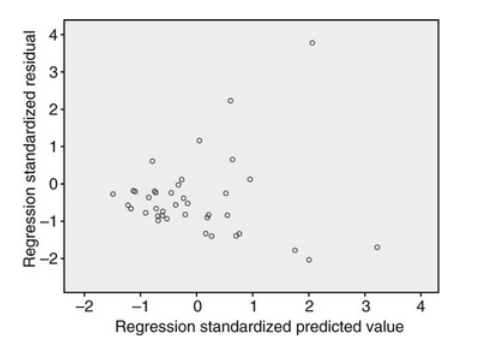

how can the homoscedasticity assumption be validated

by a visual examination of a plot of the standardized residuals (errors) against the regression standardized predicted values

when does heteroscedasticity occur

when the residuals are not evenly scattered around the line

how is Heteroscedasticity manifested

in all kinds of uneven shapes

when should a more formal test be performed

When the plot of residuals appears to deviate substantially from normal

what should homoscedasticity look like

a bird's nest

what does heteroscedasticity look like

a cone or triangle
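The plot described in these cards can be prepared as in the sketch below, which standardizes the predicted values and the residuals and then compares the residual spread in the lower and upper halves of the predicted values. The function names, the data, and the spread ratio are assumptions for illustration only. Residuals are standardized here by their sample standard deviation, a simplification of what statistical packages report, and the ratio is a crude stand-in for looking at the plot, not a formal test.

```python
import numpy as np

def standardized_plot_data(y, y_pred):
    """Return (standardized predicted values, standardized residuals)."""
    y = np.asarray(y, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    resid = y - y_pred
    z_pred = (y_pred - y_pred.mean()) / y_pred.std(ddof=1)
    z_resid = resid / resid.std(ddof=1)  # simplified standardization
    return z_pred, z_resid

def spread_ratio(z_pred, z_resid):
    """Residual SD in the upper half of predicted values divided by the lower half."""
    order = np.argsort(z_pred)
    half = len(order) // 2
    low = z_resid[order[:half]]
    high = z_resid[order[-half:]]
    return high.std(ddof=1) / low.std(ddof=1)

# Made-up cone-shaped example: residuals grow as the predicted value grows.
y_pred = np.arange(1.0, 9.0)
resid = np.array([0.1, -0.1, 0.2, -0.2, 1.0, -1.0, 2.0, -2.0])
z_pred, z_resid = standardized_plot_data(y_pred + resid, y_pred)
ratio = spread_ratio(z_pred, z_resid)
print(ratio)  # a ratio far from 1 suggests uneven scatter
```

A ratio near 1 is consistent with the evenly scattered pattern; a ratio far from 1 matches the cone or triangle shape.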

when does multicollinearity occur

when independent variables in a multiple regression equation are strongly correlated

how is multicollinearity minimized

by carefully selecting the predictors and thoroughly determining the interrelationships among predictors prior to the regression analysis

does Multicollinearity affect the predictive power

no

predictive power

the capacity of the independent variables to predict values of the dependent variable in that specific sample

what does multicollinearity cause

problems related to generalizability

If multicollinearity is present, the equation will

not have predictive validity

with multicollinearity the amount of variance explained by each variable will

be inflated

with multicollinearity what will happen when cross-validation is performed

the beta values will not remain consistent

what is the first step in examining multicollinearity

to examine the correlations among the independent variables
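This first step can be sketched as a correlation matrix of the predictors, with strongly correlated pairs flagged for a closer look. The 0.8 cutoff, the function name, and the data below are assumptions for illustration; the cards do not give a cutoff for pairwise correlations.

```python
import numpy as np

def flag_correlated_predictors(X, threshold=0.8):
    """Return index pairs of predictors whose |r| meets the (assumed) threshold."""
    r = np.corrcoef(np.asarray(X, dtype=float), rowvar=False)
    k = r.shape[0]
    return [(i, j)
            for i in range(k)
            for j in range(i + 1, k)
            if abs(r[i, j]) >= threshold]

# Made-up predictors: columns 0 and 1 rise together, column 2 does not.
X = [
    [1, 2, 5],
    [2, 4, 1],
    [3, 6, 4],
    [4, 8, 2],
    [5, 11, 3],
]
flagged = flag_correlated_predictors(X)
print(flagged)
```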

what do you have to do before conducting the regression analyses

perform multiple correlation analyses

what statistics look at multicollinearity

tolerance and VIF (variance inflation factor)

tolerance value

< 0.20 or 0.10

what does tolerance of < 0.20 or 0.10 indicate

a problem with multicollinearity

VIF (variance inflation factor) values

5 or 10 and above

what does a VIF (variance inflation factor) of 5 or 10 and above indicate

a problem with multicollinearity
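Tolerance and VIF can be computed as in the sketch below, using the standard definitions: tolerance for a predictor is 1 minus the R² obtained when that predictor is regressed on all the other predictors, and VIF is 1 divided by tolerance. The function name and data are made up, and the code assumes no predictor is a perfect linear combination of the others (tolerance would then be 0 and VIF undefined).

```python
import numpy as np

def tolerance_and_vif(X):
    """Return a list of (tolerance, VIF) pairs, one per predictor column."""
    X = np.asarray(X, dtype=float)
    n, k = X.shape
    results = []
    for j in range(k):
        target = X[:, j]
        others = np.delete(X, j, axis=1)
        # Regress predictor j on the remaining predictors (with an intercept).
        design = np.column_stack([np.ones(n), others])
        coef, *_ = np.linalg.lstsq(design, target, rcond=None)
        resid = target - design @ coef
        r2 = 1 - (resid @ resid) / np.sum((target - target.mean()) ** 2)
        tol = 1 - r2
        results.append((tol, 1 / tol))
    return results

# Made-up predictors with a weak correlation (r = -0.3).
X = np.column_stack([[1, 2, 3, 4, 5], [5, 1, 4, 2, 3]])
stats = tolerance_and_vif(X)
for tol, vif in stats:
    print(round(tol, 3), round(vif, 3))
```

With these values both predictors sit well above the tolerance cutoffs and well below the VIF cutoffs listed in the cards.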

what is developed to use categorical predictors in regression analysis

a coding system

why is a coding system developed

to represent group membership

If the variable is dichotomous the numbers

0 and 1 are used

When 3 categories are used how many dummy variables are used

2

When more than 3 categories are used

we increase the number of dummy variables

how to determine number of dummy variables

The number of dummy variables is always one less than the number of categories
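A minimal sketch of such a coding system follows: one category is chosen as the reference group and every other category gets its own 0/1 dummy variable. The function name, the category labels, and the choice of reference group are hypothetical.

```python
def dummy_code(values, reference=None):
    """Return (names of dummy variables, rows of 0/1 codes) for a categorical variable."""
    categories = sorted(set(values))
    if reference is None:
        reference = categories[0]  # assumed default: first category alphabetically
    kept = [c for c in categories if c != reference]
    # Each case gets a 1 in the column for its own category; the reference group is all zeros.
    rows = [[1 if v == c else 0 for c in kept] for v in values]
    return kept, rows

# Three categories produce two dummy variables.
names, codes = dummy_code(["low", "medium", "high", "low"], reference="low")
print(names)
print(codes)
```

Cases in the reference group are coded 0 on every dummy variable, which is why one fewer variable than the number of categories is enough.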