CDM Final

60 Terms

choice

selecting one of two (or more) options

distinct from a decision (special case of choosing what to do)

rational choice

what a normative model of choice should look like

what people should do

utility

how preferable or desirable a person finds a particular choice (subjective)

is also the numerical expression of a given person’s preference

[term] function is concave for someone who prefers the safe option (risk aversion)

![utility](https://knowt-user-attachments.s3.amazonaws.com/a8b76c53-3a6c-4752-bd91-218c2967c9e0.png)

quantification

people should be able to express any preference as a number, and that number is the utility

ex: rating something's importance on a scale from 1 to X

principle of expected utility theory

completeness

people can always state a preference for one of two outcomes, or that neither is preferred

principle of expected utility theory

transitivity

if A is preferred to B and B is preferred to C, then A is preferred to C

principle of expected utility theory

maximization

the goal of a choice should be to maximize utility

principle of expected utility theory

classical theory of rational choice

we already have knowledge of all options and their consequences

people are omniscient

we can already do every calculation to arrive at an optimal choice

expected utility

the anticipated desirability of a choice/gamble, used to compare two or more options

example: 30% probability of rain and a 70% chance it will not rain

carrying umbrella and it rains: 4

carrying umbrella and it doesn’t rain: 2

leave umbrella and it rains: -5

leave umbrella and it doesn’t rain: 5

math process ⤵

EU (carrying umbrella) → (0.3 x 4) + (0.7 x 2) → 1.2 + 1.4 = 2.6

EU (leave umbrella) → (0.3 x -5) + (0.7 x 5) → -1.5 + 3.5 = 2

for each option, multiply each outcome's utility by its probability and add the products to get that option's expected utility; then choose the option with the highest number (carry the umbrella)
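The umbrella calculation can be sketched in Python as a quick check of the arithmetic. The probabilities and utilities are the ones from the example above; the dictionary layout and the `expected_utility` helper are illustrative choices, not part of the original notes.

```python
# Probabilities and utilities from the umbrella example above.
P_RAIN = 0.3
P_NO_RAIN = 0.7

# utility[action] = (utility if it rains, utility if it doesn't rain)
utility = {
    "carrying umbrella": (4, 2),
    "leave umbrella": (-5, 5),
}


def expected_utility(u_rain, u_no_rain):
    """Weight each outcome's utility by its probability and add the products."""
    return P_RAIN * u_rain + P_NO_RAIN * u_no_rain


eu = {action: expected_utility(*u) for action, u in utility.items()}
best = max(eu, key=eu.get)

for action, value in eu.items():
    print(f"EU({action}) = {value:.1f}")  # 2.6 for carrying, 2.0 for leaving
print("choose:", best)                     # carrying umbrella
```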

bounded rationality

choice-making is bounded by human limitations

people work within these limits by making choices adaptively

especially important in natural environments

people have limited access to information and limited computational abilities

adaptiveness

choices with [this] don’t need to lead to the best possible situation, instead only need to be good enough (satisficing)

building complete knowledge is very costly (in time, energy, etc.), so expending it often isn't worth it

can explain heuristics → they are not always right, but are ‘good enough’

![adaptiveness](https://knowt-user-attachments.s3.amazonaws.com/e34cf48b-7500-4138-aba8-c61a03a13fc4.png)
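A minimal sketch of satisficing versus maximizing, assuming a hypothetical list of options with made-up values and a hypothetical aspiration level of 7. The satisficer stops at the first "good enough" option; the maximizer has to examine every option to find the best one, which is the extra cost the card describes.

```python
# Hypothetical options, in the order they are encountered, with made-up values.
options = [("A", 6), ("B", 8), ("C", 9), ("D", 7)]


def satisfice(options, aspiration):
    """Return the first option that is good enough, plus how many options were examined."""
    for examined, (name, value) in enumerate(options, start=1):
        if value >= aspiration:
            return name, examined
    return None, len(options)  # nothing met the aspiration level


def maximize(options):
    """Examine every option and return the best one, plus how many options were examined."""
    best_name, _ = max(options, key=lambda option: option[1])
    return best_name, len(options)


print(satisfice(options, aspiration=7))  # ('B', 2)
print(maximize(options))                 # ('C', 4)
```

The satisficer settles for a slightly worse option (B instead of C) after looking at only half the options.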

choice overload effect

the more choices someone has, the more difficult it is for them to make a decision

likelier to happen when . . .

items are unfamiliar

items are difficult to compare (very simplistic)

the person is under a time constraint

Iyengar & Lepper: shoppers at a store were offered free samples from a display of either 6 or 24 jams

shoppers offered 6 samples bought more than shoppers offered 24 samples

nudge theory

nudges are implicit initiatives that do not impose significant material incentives or disincentives (subsidies, taxes, fines, jail time)

ex: increasing the quantity of healthy foods at a grocery store is not a nudge because it changes the available options and thus the decision shoppers have to make

a nudge would be having healthy food near the front of the store and unhealthy food at the back of the store

libertarian paternalism

uses nudges to help people make choices that actually achieve their own goals (nudge theory)

choice architecture

the environment in which people make decisions (nudge theory)

the people who design that environment are choice architects

paternalism

implicit interference in the life of another person without their knowledge or consent (nudge theory)

the interferer believes that the interference will leave the person better off

problematic because the person interfering can be mistaken and the subject not fully informed

common in government

Gonçalves et al

group of researchers who tracked how much produce a grocery store's customers bought (nudge theory)

grouped customers from soft (<4) to hard (10+) buyers

displayed in-store ads praising hard buyers, followed by “and you?”

results ⤵

soft buyers: produce sales jumped 59%

medium buyers: jumped ~20%

hard buyers: jumped 8%

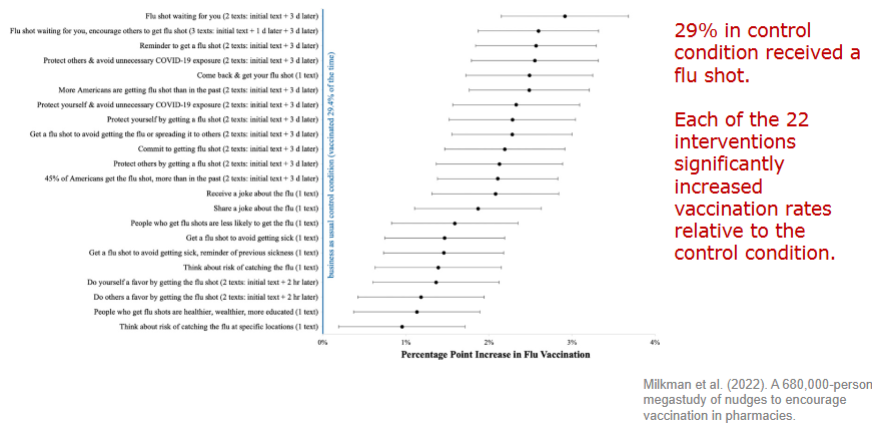

Dai et al

group of researchers who did the vaccine study (different text reminders for Walmart shoppers to get their flu vaccines)

ownership language (“flu vaccine is waiting for you!”) boosted vaccination appointments by 95%

displacement effect

people going somewhere else to do something that a nudge is telling them not to (nudge theory)

nudges can be beneficial because

. . . they make the right thing easier to do rather than making the neutral/wrong thing impossible to do (nudge theory)

criticisms of nudge theory

nudge theory has some drawbacks because of . . .

consent → the vast majority of nudges are done without the target population knowing what is happening behind the scenes

non-educative nudges are covert and/or sneaky, so they should come with a clear right to opt out

people may still be unaware of how they are influenced by nudges whether or not there is an opt-out option

profiteering → some nudges are done to promote thoughtless behavior and/or profit for oneself or a company instead of to make the right thing easier

non-educative nudges are an insult to human agency (ex: convenience, rules)

they allow people to choose in theory, but they take advantage of the fact that people don’t have the mental energy to think about their choices

factors of good nudges

good nudging should include (Thaler et al):

transparency → letting the public know who is doing the nudging and why

choice options → not manipulating the options to create a preference for a certain choice

consent → telling the public that the nudge is a nudge

putting considerable thought into how the nudge will improve public welfare

care to avoid token nudging

care that sources used are up-to-date and replicable

token nudge

a nudge intended to create an illusion of improvement as a substitute for systemic improvements

fair gamble

a gamble with an expected value of 0 (choice theory)

people avoiding these illustrates risk aversion
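A small numeric sketch of why a risk-averse person turns down a fair gamble. The coin flip, the $100 starting wealth, and the square-root utility function are all hypothetical assumptions chosen for illustration; any concave utility function gives the same qualitative result.

```python
import math

# Hypothetical fair gamble: flip a coin, win $50 or lose $50.
coin_flip = [(0.5, 50), (0.5, -50)]  # (probability, payoff) pairs
wealth = 100                          # hypothetical starting wealth
utility = math.sqrt                   # assumed concave utility function (illustrative only)

expected_value = sum(p * x for p, x in coin_flip)
eu_gamble = sum(p * utility(wealth + x) for p, x in coin_flip)
eu_decline = utility(wealth)          # keeping $100 for sure

print(expected_value)                   # 0.0 -> the gamble is fair
print(round(eu_gamble, 3), eu_decline)  # 9.659 10.0
print("decline" if eu_gamble < eu_decline else "accept")
```

The expected value is 0, but the concave curve makes the $50 loss cost more utility than the $50 gain adds, so declining has higher expected utility.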

framing effect

illustrates how the wording of a situation affects a decision that people make based on it

measured with gain framing and loss framing

is one of the most replicable effects in psych research

gain framing

situation described in terms of getting something (choice theory)

leads to risk-averse behavior

best implemented to get people to do things they perceive to have beneficial results

loss framing

situation described in terms of losing something (choice theory)

leads to risk-seeking behavior

best implemented to get people to do something they perceive to involve harmful results

risk aversion

general unwillingness to engage in activities in which one might lose something (choice theory)

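measured by the utility function (start point = $0)

the function is concave, reflecting a preference for the safe option

reflects diminishing usefulness (ex: going from $1M to $2M is more useful than going from $8M to $9M)

each additional dollar adds less utility the more money one already has (“oh there’s already a lot”)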

certainty is treated as having extra utility beyond tangible (ex: monetary) value
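The $1M to $2M versus $8M to $9M comparison can be checked with any concave utility function. This sketch assumes a square-root utility (so utility at $0 is 0, matching the start point above); the specific function is an illustrative assumption, not one given in the notes.

```python
import math


def utility(dollars):
    """Assumed concave utility: square root of wealth, so utility(0) = 0 (illustrative only)."""
    return math.sqrt(dollars)


gain_low = utility(2_000_000) - utility(1_000_000)   # going from $1M to $2M
gain_high = utility(9_000_000) - utility(8_000_000)  # going from $8M to $9M

print(round(gain_low, 2), round(gain_high, 2))  # 414.21 171.57
```

The same extra $1M adds less than half as much utility when it is added on top of $8M.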

expected utility theory

posits that, for a choice to be rational, one’s preferences must be

complete: always stating a preference for 1 of 2 outcomes or that both are equally liked/disliked

transitive: if A is preferred to B, and B is preferred to C, then A is preferred to C
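The two requirements can be written as checks on a preference relation. This is a sketch with hypothetical preferences over three options; a pair `(a, b)` in a set means "a is at least as good as b", and having both `(a, b)` and `(b, a)` would mean indifference.

```python
from itertools import product

# Hypothetical preferences over three options.
options = ["A", "B", "C"]
consistent = {("A", "A"), ("B", "B"), ("C", "C"), ("A", "B"), ("B", "C"), ("A", "C")}
cyclic = {("A", "A"), ("B", "B"), ("C", "C"), ("A", "B"), ("B", "C"), ("C", "A")}


def is_complete(options, prefers):
    """Every pair of options is ranked one way, the other way, or both (indifference)."""
    return all((a, b) in prefers or (b, a) in prefers for a, b in product(options, repeat=2))


def is_transitive(options, prefers):
    """Whenever a >= b and b >= c, a >= c must also hold."""
    return all(
        (a, c) in prefers
        for a, b, c in product(options, repeat=3)
        if (a, b) in prefers and (b, c) in prefers
    )


print(is_complete(options, consistent), is_transitive(options, consistent))  # True True
print(is_complete(options, cyclic), is_transitive(options, cyclic))          # True False
```

The cyclic preferences (A over B, B over C, C over A) are complete but not transitive, so under expected utility theory they would not count as rational.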

mental accounting

decisions about how people spend resources

influenced by where they think resources come from

sunk cost fallacy

if someone has already invested a lot into something and it doesn’t work out, then they tend to continue investing anyway because of the effort already put in

sunk costs should not factor into decisions about the future, but are included anyway for the following reasons:

socialization: “don’t waste”

not accounting for time and money the same

the error: only current and future costs should carry weight, but past (sunk) costs are weighed anyway
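A minimal sketch contrasting the rational rule with the fallacy, using hypothetical dollar amounts. `rational_continue` compares only future benefit and future cost; `sunk_cost_continue` is a made-up caricature of the bias that lets money already spent push toward continuing.

```python
def rational_continue(future_benefit: float, future_cost: float) -> bool:
    """Keep going only if what is still to be gained exceeds what is still to be spent."""
    return future_benefit > future_cost


def sunk_cost_continue(future_benefit: float, future_cost: float, sunk_cost: float) -> bool:
    """Hypothetical biased rule: past spending is (wrongly) counted as a reason to continue."""
    return future_benefit + sunk_cost > future_cost


# Hypothetical project: $800 already spent, $500 more to finish, finishing is worth $300.
sunk, remaining_cost, payoff = 800, 500, 300
print("rational:", rational_continue(payoff, remaining_cost))           # False -> stop
print("sunk-cost:", sunk_cost_continue(payoff, remaining_cost, sunk))  # True -> keep going
```

The $800 is gone whichever option is chosen, so only the rational rule's comparison ($300 gained vs $500 spent) bears on the decision.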

reference dependence

people’s decisions depend on a reference level they can use to compare options with

explains framing effects and why people can be risk-seeking in certain situations

phases of choice

in prospect theory, choices are made like this ⤵

1) framing phase

simplify the prospects to make decisions less complex

frame the outcome in terms of gains vs losses relative to a natural reference point (ex: current wealth)

2) valuation phase

evaluate each prospect and choose the one with the highest value

expected value

sum of the subjective value (U) multiplied by the decision weight (W) for each outcome

calculated by multiplying U x W for every outcome of a gamble, then adding the products

accounts for cognitive biases that can influence people’s evaluations of outcome probabilities and utilities

V function

describes how much people subjectively value each outcome, both gains and losses

the loss side of the curve is (usually) steeper than the gain side

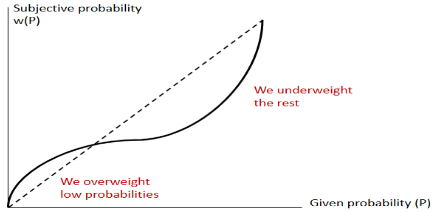

W function

describes how too much weight/importance is often given to small probabilities

conversely, people underweight moderate and large probabilities

(ex: investing in the lottery)

when probabilities are small, risk-seeking is observed for gains and risk-aversion for losses
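The V and W functions and the U x W sum can be sketched together. The functional forms and parameter values (0.88, 2.25, 0.61, 0.69) are the commonly cited estimates from Tversky and Kahneman's 1992 cumulative prospect theory work and are assumptions here, not values from these notes; the sketch also weights each outcome separately rather than using the rank-dependent cumulative version. V(x) plays the role of U on the expected value card.

```python
ALPHA = 0.88       # curvature of V for gains and losses (assumed)
LAMBDA = 2.25      # loss aversion: losses loom larger than gains (assumed)
GAMMA_GAIN = 0.61  # curvature of W for gains (assumed)
GAMMA_LOSS = 0.69  # curvature of W for losses (assumed)


def V(x: float) -> float:
    """Value function: concave for gains, convex and steeper for losses (reference point = 0)."""
    if x >= 0:
        return x ** ALPHA
    return -LAMBDA * (-x) ** ALPHA


def W(p: float, gamma: float) -> float:
    """Inverse-S weighting: overweights small p, underweights moderate and large p."""
    return p ** gamma / (p ** gamma + (1 - p) ** gamma) ** (1 / gamma)


def prospect_value(outcomes):
    """Sum of V(x) * W(p) over (probability, outcome) pairs, weighting each outcome separately."""
    total = 0.0
    for p, x in outcomes:
        gamma = GAMMA_GAIN if x >= 0 else GAMMA_LOSS
        total += V(x) * W(p, gamma)
    return total


def prefers_gamble(sure, gamble):
    return prospect_value(gamble) > prospect_value(sure)


# Each pair has equal expected value; only the frame and the probability change.
print(prefers_gamble([(1.0, 50)], [(0.5, 100)]))      # False: risk-averse for gains
print(prefers_gamble([(1.0, -50)], [(0.5, -100)]))    # True: risk-seeking for losses
print(prefers_gamble([(1.0, 10)], [(0.01, 1000)]))    # True: small-p gain (lottery)
print(prefers_gamble([(1.0, -10)], [(0.01, -1000)]))  # False: small-p loss (insurance)
```

The first two lines mirror gain framing (risk-averse) and loss framing (risk-seeking); the last two reverse that pattern for small probabilities, as the card above states.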

prospect theory vs expected utility

prospect theory can explain the endowment effect

and loss aversion → feeling losses more than equivalent gains

PT says that happiness is determined by one’s change in wealth and not the utility of wealth itself

gains and losses are not strictly seen in relation to current wealth

PT says that risk aversion depends on the frame: it appears when outcomes are framed as gains, while loss frames produce risk-seeking

Blackwell et al

measured how schemas influence learning and performance

students tested on their beliefs about intelligence:

you have a certain amount of intelligence that you cannot change (entity theory)

you can always change how intelligent you are (incremental theory)

students with entity theory thinking interpreted setbacks as “I’m not good enough”

gave up

students with incremental theory thinking attributed setbacks to not working to their potential and tried different strategies

they also performed better during the next two school years

script theory

posits that scripts are picked up over time by observation, and memories of them help people understand and expect certain things in certain situations

violations of scripts

errors (ex: being given coffee instead of a milkshake)

obstacles (ex: bus taking long break at stop, being late to class)

distractions (ex: parents calling mid-class)

violations are more interesting (and are thus likelier to be remembered) than script-consistent components

story model

posits that people construct stories of different phenomena to make sense of them

constructed by using a schema (story) to understand what has happened

in juries → knowing the information alone may not be enough to predict a juror’s (or anyone’s) behavior; the order in which the info is presented matters more

presenting evidence in temporal (sequential) order vs scrambled order (witnesses called in random order) changed how jurors constructed their stories

more jurors gave guilty verdicts when evidence was presented in temporal order than in scrambled order

cognitive consistency model

people may construct a story before all evidence is presented, then unknowingly prioritize evidence that fits their story and ignore everything else

involves confirmation bias

background knowledge heavily influences all aspects of the story, including treatment of evidence and the decision

at the end, the person will have an emerging conclusion that can be influenced by the evidence

Wason card selection task

tests how well people look for information that could falsify a rule they think is right

cards have a letter on one side and a number on the other (visible faces: A, D, 4, 7), and the player has to pick the cards to turn over to show whether the hypothesis (if a card has a vowel on one side, it has an even number on the other) is true or false

correct cards are A and 7 (D is a consonant, so the rule says nothing about it, and flipping the 4 is redundant)

modal answer (most common) is A and 4 → shows that people have difficulty reasoning with negations

the 4 card can only confirm the rule, not falsify the rule → only the 7 card can do that

shows that people are bad at knowing what information to find to falsify a rule, even when the rule is wholly cognitive
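The logic of the task can be sketched by asking, for each visible face, whether any possible hidden side could break the rule. The assumption that the hidden number is a single digit from 0 to 9 is made only for this illustration.

```python
import string


# Rule under test: "if a card has a vowel on one side, it has an even number on the other."
def rule_holds(letter: str, number: int) -> bool:
    is_vowel = letter.upper() in "AEIOU"
    return (not is_vowel) or number % 2 == 0


def can_falsify(visible: str) -> bool:
    """A card is worth turning over only if some possible hidden side would break the rule."""
    if visible.isalpha():
        # Assumption: the hidden side is a single digit 0-9.
        return any(not rule_holds(visible, n) for n in range(10))
    return any(not rule_holds(letter, int(visible)) for letter in string.ascii_uppercase)


cards = ["A", "D", "4", "7"]
print([card for card in cards if can_falsify(card)])  # ['A', '7']
```

The 4 drops out because no letter on its back can make the rule false, which is exactly why the common "A and 4" answer is a confirming rather than a falsifying choice.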

positive test strategy

tests a rule about the world by examining cases where the rule says something should happen, or where the predicted thing has already happened

person does not need the rule to be true

if a bird is a raven, then it is black → hypothesis = all ravens are black

falsifying the rule: a violation is any case that breaks the rule’s contrapositive, “if it isn’t black, it isn’t a raven,” which is logically equivalent to the rule

finding a non-black raven (ex: an albino) → falsifies the rule
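A truth-table sketch of the raven example: the rule and its contrapositive agree on every case, and the only falsifying case is a non-black raven. The `implies` helper is just the standard material conditional written out for illustration.

```python
from itertools import product


def implies(p: bool, q: bool) -> bool:
    """Material conditional: 'if p then q' is false only when p is true and q is false."""
    return (not p) or q


rows = []
for is_raven, is_black in product([True, False], repeat=2):
    rule = implies(is_raven, is_black)                    # "if it's a raven, it's black"
    contrapositive = implies(not is_black, not is_raven)  # "if it isn't black, it isn't a raven"
    rows.append((is_raven, is_black, rule, contrapositive))
    print(f"raven={is_raven}, black={is_black}: rule={rule}, contrapositive={contrapositive}")
```

Looking at black ravens (positive tests) can only ever produce rows where the rule is true; only the raven=True, black=False row can show it is false.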

negative test strategy

intentionally running through cases that rule out a given hypothesis

scientific enterprise is and should generally be centered around this kind of falsifiability (Popper)

scientists should be doing this to their theories to indirectly correct their hypotheses

theories shouldn’t be considered scientific unless it is possible to falsify them

just because a theory passes a test does not mean that it is true

no study ‘proves’ a theory; theories are supported by evidence

“either-or” statements are not falsifiable

theories should be generalized to a category so they can be falsified (ex: “all dogs bark” > “all German shepherds bark”)

ad-hoc modification

happens when a theory is modified to continue being ‘true’, or unfalsifiable

doesn't add any testable predictions or consequences to the original hypothesis

makes the new theory even less falsifiable than the original

ex: Aristotelian scholars updating the round planet hypothesis after being proven wrong → “um ackshually they’re covered by clouds”

non ad-hoc modification

adding another falsifiable theory to an already-established falsifiable theory

testing the new theory can give more evidence for the original one

for the theory to be added, there must be prior agreement among scientists about what is being tested and how it is to be tested

should not be done to give complete certainty

forensic confirmation bias

in criminal cases, prior expectations influence how case evidence is collected and interpreted

ex: Mayfield case

caused such a bad outcome that the FBI had to adopt a linear procedure, making sure examiners analyzed only the crime-scene prints first during investigations

clinical confirmation bias

when making diagnoses, doctors may first make a rough initial diagnosis and then interpret a patient’s actual symptoms in ways that confirm it

my-side bias

tendency to come up with arguments that defend one’s own position and refute the opposing position

shows up when people are told about an issue and asked which side they would support and why

bias appears even when people are told to look at both sides