CPS590 Final Exam Review (Chpt. 5-8)

1/99

There's no tags or description

Looks like no tags are added yet.

Name | Mastery | Learn | Test | Matching | Spaced |

|---|

No study sessions yet.

100 Terms

Concurrency

The ability of an operating system to execute multiple tasks simultaneously or in an overlapping matter. Host of design issues are included such as sharing/competing for resources, synchronization of jobs done by multiple processes and allocation of processor time to each process

3 Different Contexts of Concurrency

- Multiple applications: Multiprogramming was invented to allow processing time to be shared among a number of applications

- Structured applications: Extension of modular design and structured programming, i.e. 1 application can have as many as N processes/threads

- Operating System Structure: OS themselves are really just a bunch of processes and threads

Principles of Concurrency

- Interleaving and overlapping: Can be viewed as examples of concurrent processing

- Uniprocessor: The relative speed of execution of processes cannot be predicted, depends on several factors such as the activities of other processes, scheduling policies of the OS and how the OS handles interrupts

Difficulties of Concurrency

- Sharing of global resources

- Difficult for the OS to manage the resources optimally

- Difficult to locate programming errors as results aren't deterministic and reproducible

Critical Section

A section of code within a process that requires access to shared resources, and that must not be executed while another process is in a corresponding state

Mutual Exclusion

The requirement that when one process is in a critical section that accesses shared resources, no other process may be in a critical section that accesses any of those shared resources.

Race Condition

- A situation in which multiple threads or processes read and write a shared data item, and the final result depends on the relative timing of their execution

- The "loser" of the race (or the process/thread that accesses the data item last) will determine the final value of the variable

Atomic operation

A function or action implemented as a sequence of one or more instructions that appears to be indivisible; that is, no other process can see an intermediate state or interrupt the operation. The sequence of instruction is guaranteed execute as a group, or not execute at all, having no visible effect on system state. Atomicity guarantees isolation from concurrent processes

Deadlock

- A situation in which two or more processes are unable to continue execution because each is stuck waiting for one of the others to do something

- Permanent and has no efficient solution

Livelock

A situation in which two or more processes continuously change their states in response to changes in the other process(es) without doing any useful work

Starvation

A situation in which a runnable process is overlooked indefinitely by the scheduler, although it is able to proceed, it is never chosen by the processor

Process Interaction Situation 1: Processes unaware of each other

- Relationship: Competition

- Results of one process are completely independent of the action(s) of others

- Timing of process may be affected

- Potential Control Problems: Mutual Exclusion, Deadlock, Starvation

Process Interaction Situation 2: Processes are indirectly aware of each other

- Relationship: Cooperation by sharing

- Results of one process may depend on information obtained from others

- Timing of process may be affected

- Potential Control Problems: Mutual Exclusion, Deadlock, Starvation, Data coherence (values of shared memory locations may not be consistent across processes/threads)

Process Interaction Situation 3: Processes are directly aware of each other

- Relationship: Cooperation by communication

- Results of one process may depend on information obtained from others

- Timing of process may be affected

- Potential Control Problems: Deadlock, Starvation

Resource Competition

- Concurrent processes come into conflict when they are competing for use of the same resource (critical resources like I/O devices)

- When this happens, the following 3 control problems must be considered:

-> Need for Mutual Exclusion

-> Possibility for Deadlock

-> Possibility for Starvation

Requirements for Mutual Exclusion

- Only one process is allowed to be in its critical section at a time

- A process in a noncritical section must halt and not interfere with other processes

- A process can't be over delayed (no deadlock or starvation)

- A process can't be denied the request to enter its critical section IFF there are no other processes inside their critical section

- A process can only be inside of its critical section for a finite amount of time

Mutual Exclusion: Hardware Support (Disabling Interrupts)

- In a uniprocessor system, concurrent processes may only be interleaved and execution can't be overlapped

- Mutual Exclusion is guaranteed by disabling interrupts

- Disadvantages: Impossible in a multiprocessor system, efficiency of execution is worsened

Special Machine Instruction: Compare & Swap

- An atomic operation where a comparison is made between a memory value and test value, if the values are the same a swap occurs

- Code:

compare_and_swap(word, testval, newval) {

oldval = word;

if (word == testval) {

word = newval;

}

return oldval;

}

Busy Waiting/Spin Waiting

Refers to a technique in which a process can do nothing until it gets permission to enter its critical section, but continues to execute an instruction or set of instructions that test the appropriate variable to gain entrance to the critical section

Exchange Instruction

exchange(register, memory) {

temp = memory;

memory = register;

register = temp;

}

Special Machine Instructions: Advantages

- Applicable to any number of processes on either a single processor or multiple processors sharing main memory

- Simple and easy to verify

- It can be used to support multiple critical sections, each critical section can defined by its own variable

Special Machine Instructions: Disadvantages

- Busy-waiting is used, meaning while a process is waiting for access to a critical section, it continues to use processor time

- Starvation is possible when a process leaves a critical section and more than one process is waiting

- Deadlock is also possible

Mutual Exclusion: Software Approaches

- Can be implemented for concurrent processes that execute on a single-processor or a multiprocessor machine with shared memory

- We can usually assume elementary mutual exclusion at the memory access level

- Two main algorithms: Peterson's and Dekker's

Peterson's Algorithm

boolean flag[2];

int turn;

void P0() {

while (true) {

flag[0] = true;

turn = 1;

while (flag[1] && turn == 1) ;

// critical section ;

flag[0] = false;

// remainder ;

}

}

void P1() {

while (true) {

flag[1] = true;

turn = 0;

while (flag[0] && turn == 0) ;

// critical section ;

flag[1] = false;

// remainder ;

}

}

int main() {

flag[0] = false;

flag[1] = false;

parbegin(P0, P1);

}

3 OS/programming language mechanisms for concurrency

- Semaphores

- Monitors

- Message passing

Semaphore

- An integer value used for signaling among processes

- Only 3 atomic operations can be done on a semaphore:

-> Initialize or semInit(): Initializes semaphore to any non-negative value

-> Decrement or semWait(): If semaphore's value < 0, then this process will be blocked

-> Increment or semSignal(): If semaphore's value <= 0, then a process (if any) blocked by semWait() is unblocked

Consequences of using Semaphores

- There is no way to know before a process decrements a semaphore whether it will block or not

- There is no way to know which process will continue immediately on a uniprocessor system where two processes are running concurrently

- When you signal a semaphore, you don't necessarily know whether another process is waiting, so the number of unblocked processes may be zero or one

Binary Semaphore

- A semaphore who's value may only either be 0 or 1

- Cannot allow multiple processes into critical section at once

Strong/Weak Semaphores

- A queue is used to hold processes waiting on the semaphore

- Strong semaphores removes from the queue the process that has been blocked the longest (FIFO) and has no starvation

- Weak semaphores removes from the queue in no specified order and has the possibility for starvation

Mutex

- Programming flag used to grab & release an object

- Similar to a binary semaphore

- Major difference is that the process that locks the mutex (sets the value to 0) is the only process allowed to unlock the mutex (sets the value to 1)

Condition variable

A data type that is used to block a process or thread until a particular condition is true

Producer/Consumer Problem

- One or more producers are generating data and placing these in a buffer

- A single consumer is taking items out of the buffer one at a time

- Only one producer or consumer may access the buffer at any one time

- Problem: Ensure that the producer can't add data to a full buffer & consumer can't take data from an empty buffer

Producer/Consumer Problem Solution

- Let only one producer or consumer into critical section at a time (mutual exclusion)

- Issue: When consumer is exhausted, buffer waits until produces more items

- Deadlock is prevented by using general semaphores

- Code:

// program boundedbuffer

const int sizeOfBuffer = / buffer size / ;

semaphore s = 1, n = 0, e = sizeOfBuffer;

void producer() {

while (true) {

produce();

semWait(e);

semWait(s);

append();

semSignal(s);

semSignal(n);

}

}

void consumer() {

while (true) {

semWait(n);

semWait(s);

take();

semSignal(s);

semSignal(e);

consume();

}

}

int main() {

parbegin(producer(s), consumer);

}

Monitors

- Programming language construct that provides the equivalent functionality of a semaphore and easier to use

- Encapsulates variables, access procedures, and initialization code within an abstract data type

- Monitor's data can only be accessed through it access procedures and only one process may access the monitor at any given time

Monitor Synchronization

- Achieved by the use of condition variables that are contained within the monitor

- The condition variables are a special data type that are operated on with the following functions:

-> cwait(c): Suspend execution of the calling process on condition C

-> csignal(c): Resume execution of some process blocked from cwait()

Hoare Monitors

- If there is atleast one process in the condition queue, that process runs immediately when another process issues csignal() for that condition

- Two drawbacks:

-> If the process issuing the csignal() has not finished with the monitor, then 2 additional process switches are required: one to block this process, and another to resume it when the monitor free again

-> Process scheduling with signal must be reliable: when csignal() is issued, a process in the queue is activated immediately and no other process enters

Message Passing

- A means for two processes to exchange information and that may be used for synchronization

- Works in distributed systems, both shared-memory multiprocessor and uniprocessor systems

- Two functions are provided: send(destination, msg), receive(source, msg)

Message Passing Synchronization

- The receiver cannot receive a message until it has been sent one by another process

- When a receive primitive is executed, there are 2 possibilities:

-> If a message has been previously sent, the message is received and execution continues

-> If there is no waiting message the process is blocked until a message arrives or the process continues to execute, abandoning the receive primitive entirely

Blocking Send, Blocking Receive

- Both sender and receiver are blocked until message is delivered

- Sometimes referred to as a rendezvous

- Allows for tight synchronization between processes

Direct Addressing

- Send primitive includes a specific identifier of the destination process

- Receive is handled in either of the following ways:

-> Require that the process explicitly designate a sending process as this is effective for cooperating with concurrent processes

-> Do implicit addressing where the source parameter of the receive parameter of the receive primitive possesses a value returned when the receive operation has been performed

Indirect Addressing

- Messages are sent to a shared data structure consisting of queues temporarily holding messages

- Queues are commonly referred to as mailboxes, where the send process puts messages inside the mailbox and the receive process grabs messages from the mailbox

General Message Format

- Message is divided into the 2 following parts:

- Header

-> Message Type

-> Destination ID

-> Source ID

-> Message Length

-> Control Information

- Body

-> Message Content

System V Style Messages

- Only has msg type inside the header

- Uses indirect addressing

- Send blocks when msgQ is full and receive blocks when msgQ is empty

Readers/Writers Problem

- A data area is shared among many processes

-> Some processes only read from the data area (readers) and others only write to the data area (writers)

- Conditions that must be satisfied:

1. Any number of readers may simultaneously read the data area

2. Only one writer at a time may write to the data area

3. If a writer is writing to the data area, no reader may read it

Readers/Writers Problem Solution using Message Passing

- Controller gives access to shared data

- Both readers and writers sent controller request msg, controller grants access with OK reply msg and when process is done, sends finished msg

- Writers have priority

- Variable count for M.E (initialized to some value > the max num of readers)

- How it works:

-> Let max num of reads = 99 => count = 100

-> Each read: count-- (but never gets to 0)

-> Write request: count -= 100

-> If count = -10, then 10 concurrent reads and 1 write waiting

-> When count < 0: Allow all reads to finish (count++) and let writer proceed after

Resource Categories

- Reusable

-> Can be safely used by only one process at a time and is not depleted by that use

-> Ex: Processors, I/O channels, main and secondary memory, etc.

- Consumable

-> One that can be created (produced) and destroyed (consumed)

-> Ex: Interrupts, signals, messages, and information in I/O buffers

Conditions for Deadlock

- Mutual exclusion: Only one process may use a resource at a time, no process may access a resource that is already "held" by another process

- Hold-and-wait: A process may hold allocated resources while awaiting assignment of others

- No pre-emption: no resource can be forcibly removed from a process holding it

- Circular wait: A closed chain of processes exist in such a way where each process holds a resource and needs the resource held by the next process in the chain

Approaches to handle Deadlock

- Prevention: Adopt a policy that eleminates one of the conditions for deadlock

- Avoidance: Make the appropriate dynamic choices based on the current state of resource allocation

- Detection: Attempt to detect the presence of deadlock and take action to recover

Deadlock Prevention

- Design a system in such a way that the possibility of deadlock is excluded

- Two main methods:

-> Indirect: Prevent the occurrence of any one of Mutual Exclusion, Hold-and-wait or No pre-emption

-> Direct: Prevent Circular wait

- Disadvantage: Can lead to inefficient use of resources

Deadlock Indirect Condition Prevention

- Eliminate Mutual Exclusion

-> If access to a resource requires mutual exclusion then it must be supported by the OS

- Eliminate Hold-and-wait

-> Require that a process request all of its required resource at once and block the process until all requests can be granted simultaneously

- Allow pre-emption

-> If Process X holds certain resources and is denied a further request, X must release its original resources and request these original resources + the new resources (Requester is preempted)

-> OS may preempt the second process and require it to release its resources (Holder is preempted)

Deadlock Direct Condition Prevention

- Eliminate Circular Wait

-> Define a linear ordering of resource types (R1, R2, ...)

-> Processes must request in that order

-> This is now impossible for processes P & Q to do:

P Q

... ...

get A get B

get B get A

- Can be inefficient

Deadlock Avoidance

- A decision is made dynamically whether the current resource allocation request will, if granted potentially lead to deadlock

- Requires knowledge of future process resource requests

- 2 main approaches:

-> Resource Allocation Denial: Do not grant an incremental resource request if this allocation might lead to deadlock

-> Process Initiation Denial: Do not start a process if its demand might lead to deadlock

- Advantages: It is not necessary to preempt and rollback processes like in deadlock detection and it's not as restrictive as deadlock prevention

- Disadvantages: Max resource requirement for each process, no process may exit while holding resources, necessary to have fixed number of resources to allocate

Process Initiation Denial Example

- System has 21 MB of memory

- 3 Processes: P1, P2 and P3

- Max Memory Claim: P1 claims 16 MB, P2 claims 4MB and P3 claims 2MB

- First P1 requests initiation => Allowed (16 MB < 21 MB)

- After P2 requests initiation => Allowed (16 + 4 MB < 21 MB)

- Finally P3 requests initiation => Denied (16 + 4 + 2 MB > 21 MB)

Resource Allocation Denial

- Referred to as the banker's algorithm

- State of the system reflects the current allocation of resources to processes

- Safe state is one which there is some sequence of resource allocations that doesn't result in deadlock, otherwise is an unsafe state

- Grants resources or blocks process depending on state

Deadlock Detection

- Resource requests are granted whenever possible

- Least conservative method

- A deadlock detection algorithm is made whenever a process requests a resource

- Advantages: Simple algorithm, leads to early detection

- Disadvantage: Consumes alot of processor time

Recovery Strategies

- Abort all deadlocked processes

- Back up each deadlocked process to some previously defined checkpoint and restart all processes

- Successively abort & preempt processes and resources until deadlock no longer exists

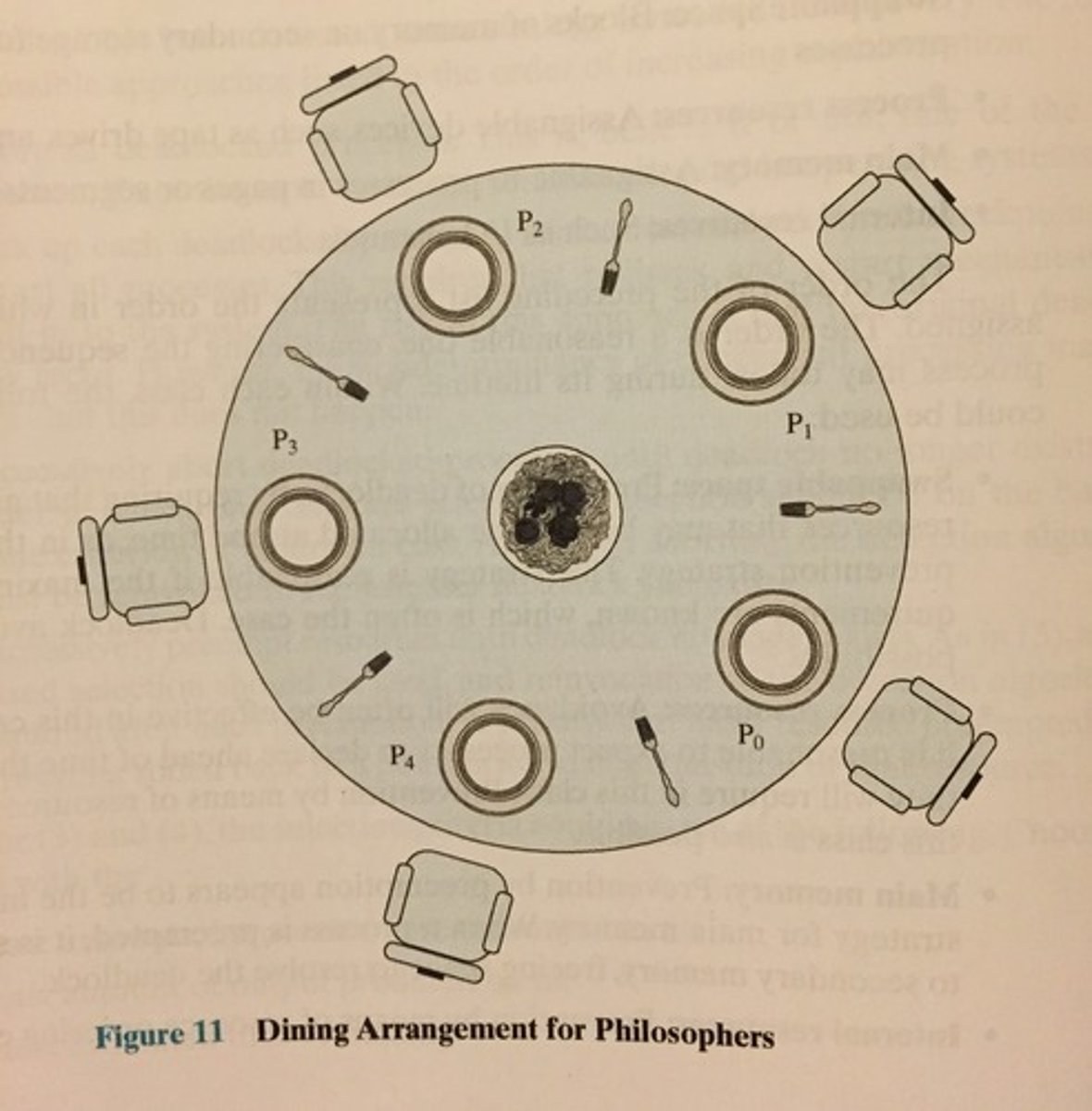

Dining Philosophers Problem

- No two philosophers can use the same fork at the same time (mutual exclusion)

- No philosopher must starve to death (avoid deadlock and starvation)

Unix Concurrency Mechanisms

- Unix provides a variety of mechanisms for IPC (interprocess communication) and synchronization settings:

-> Pipes

-> Messages

-> Shared Memory

-> Semaphores

-> Signals

Pipes

- Circular buffers allowing two processes to communicate on the producer-consumer model

- First-in first-out queue, written by one process and read by another

- Two types: Named & Unnamed

Messages

- A block of bytes with an accompanying type

- UNIX provides msgsnd and msgrcv system calls for processes to engage in message passing

- Associated with each process is a message queue which functions like a mailbox

Shared Memory

- Fastest form of IPC

- Common block of virtual memory shared by multiple processes

- Permission is read-only or read-write for a process

- Mutual exclusion must be provided by the process

Signals

- A software signal mechanism that informs a process of the occurrence of asynchronous events inside the process table

- A process may respond to a signal by:

-> Performing some default action

-> Executing a signal-handler function

-> Ignoring the signal entirely

Real-time (RT) Signals

- Only available in Linux

- Signal priority ordering is supported

- Values/data can also be sent along with the signal

Linux Kernel Concurrency Mechanism

- Everything found in UNIX (pipes, messages, shared memory, signals) plus:

-> Spinlocks

-> Barriers

-> Atomic Operations

-> Semaphores

Spinlocks

- Most common technique for protecting a critical section

- Can only be acquired by one thread at a time

- Built on an integer location in memory that is checked by each thread before it enters its critical section

- Disadvantage: Locked out threads are left in busy-waiting mode

Barriers

Enforce the order in which instructions are executed

RCU (Read-Copy-Update)

- Readers and Writers share a data structure

- Multiple readers can occur concurrently with a writer updating the data structure

- The shared resource is accessed via a pointer

- For a writer to update:

-> Creates a copy, then updates it and lastly makes pointer point to the new copy

-> Free old resource once readers are all done

Memory Management

OS must dynamically divide up Main Memory for the user program, which may or may not have multiple processes/threads

Frame

A fixed-length block of main memory

Page

- A fixed-length block of data that resides in secondary memory (such as the disk)

- A page of data may be temporarily copied into a frame of main memory

Segment

- A variable-length block of data that resides in secondary memory

- An entire segment may be temporarily copied into main memory (segmentation)

- The segment may also be divided into pages and then individually copied into main memory (combined segmentation and paging)

Memory Management Requirements

- Relocation

- Protection

- Sharing

- Logical Organization

- Physical Organization

Relocation

- Refers to the ability of a process to move around in memory

- Processes get swapped out of memory and swapped back into an entirely different memory region

- All addresses and pointers must change as well

Protection

- Each process shouldn't interfere with other process (Ex: memory read/write)

- Each process' memory references must be checked at run time

Sharing

If necessary, must allow several processes to access the same portion of main memory (like a data structure) without compromising protection

Logical Organization

- Most programs are organized into modules

-> Some modifiable (data), some not (read only, executables)

- Advantages of Modules:

-> Shared across many processes

-> Independent compilation

-> Different degrees of protection

Physical Organization

- Computer Memory is divided into 2 levels: Main memory (volatile, faster, smaller capacity) and secondary memory (permanent, slower, bigger capacity)

- Information is constantly moved between the 2 levels of memory

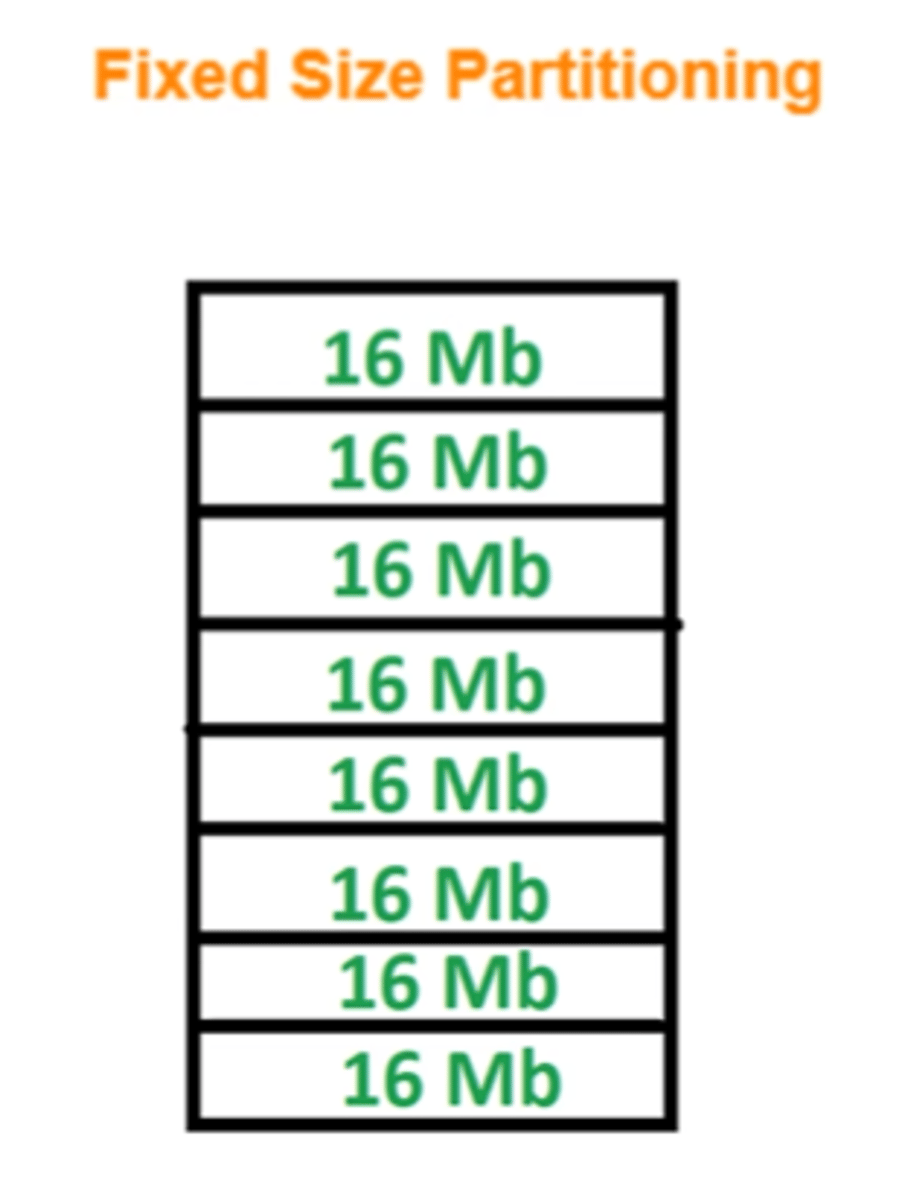

Fixed Partitioning

- Equal-size partitions

- Any process whose size is less than or equal to the partition size can be loaded into an available partition

- The OS can swap out processes if all partitions are full and no process is in the Ready/Running state

- Disadvantages: Program may be too large for partition, Internal fragmentation (program is really small in comparison to partition)

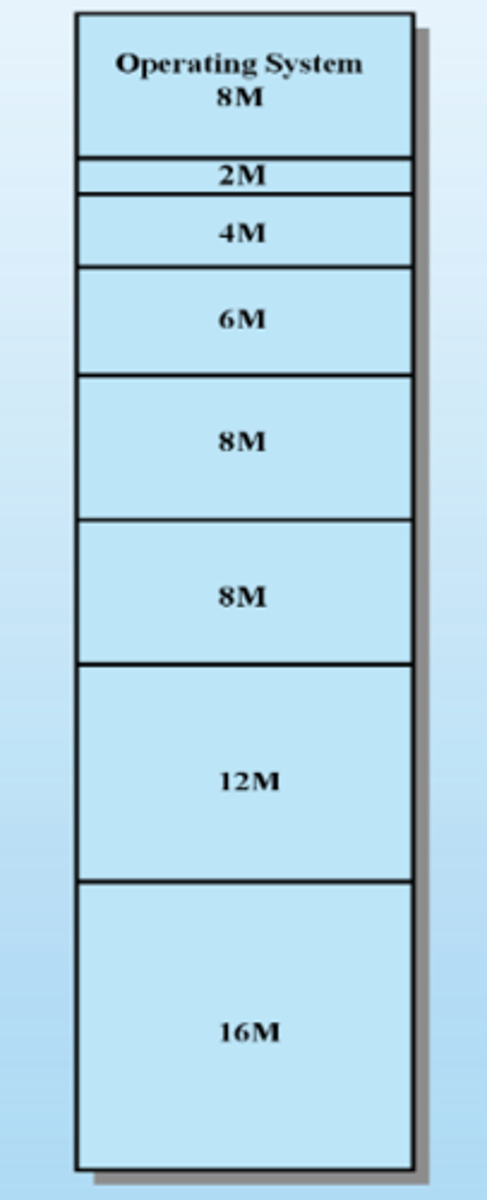

Unequal Size Partitions

- Using unequal size partitions helps lessen the problem

- Lessens internal fragmentation

- Disadvantages: The number of partitions specifies the maximum number of processes allowed in main memory, small jobs don't use the partition space efficiently

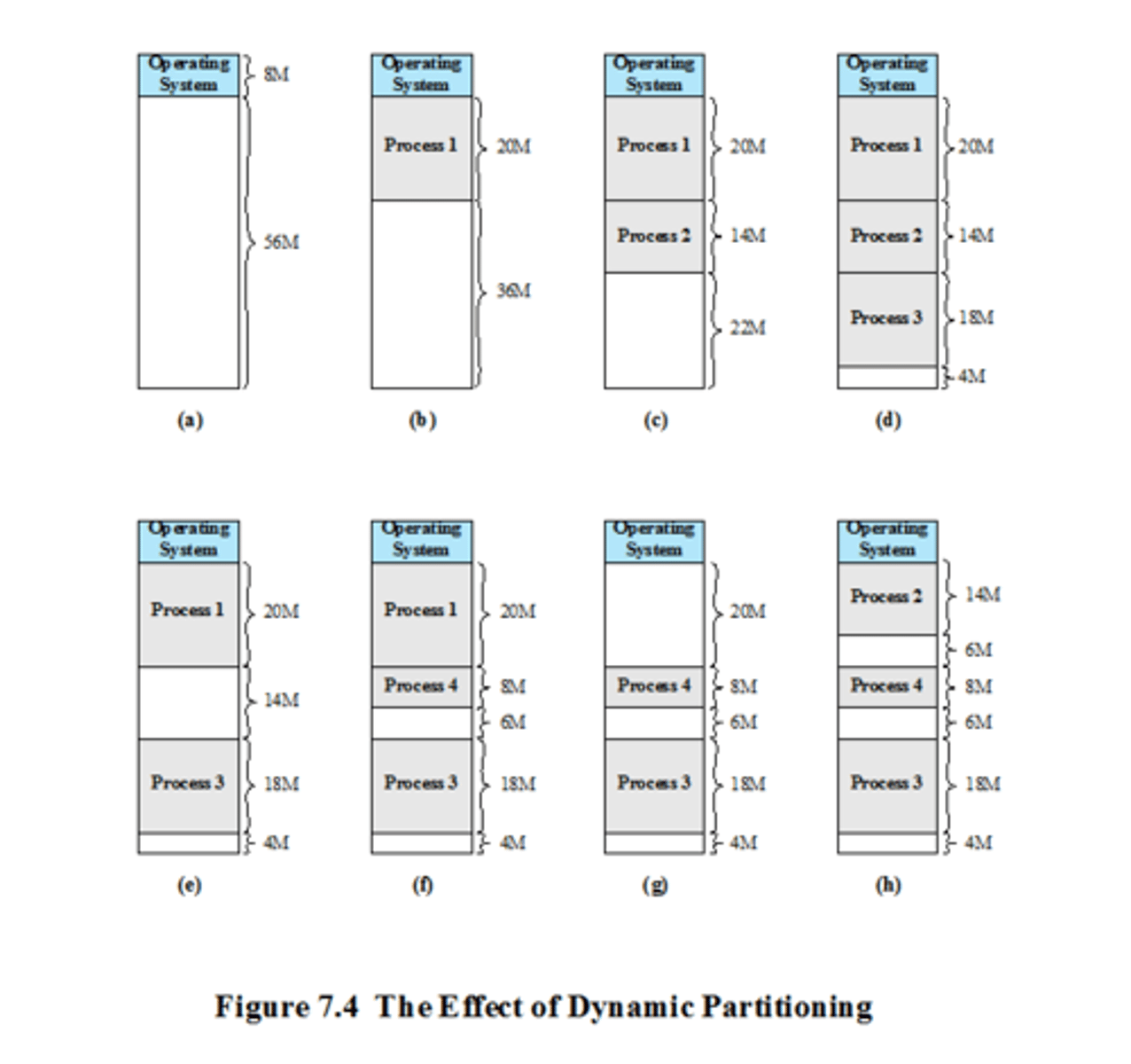

Dynamic Partitioning

- Partitions are of variable length and number

- Processes are allocated exactly as much memory as they need

- Disadvantages: External fragmentation, memory utilization worsens

- Compaction can be utilized to shift processes up in memory so that they are contiguous, however this wastes CPU time

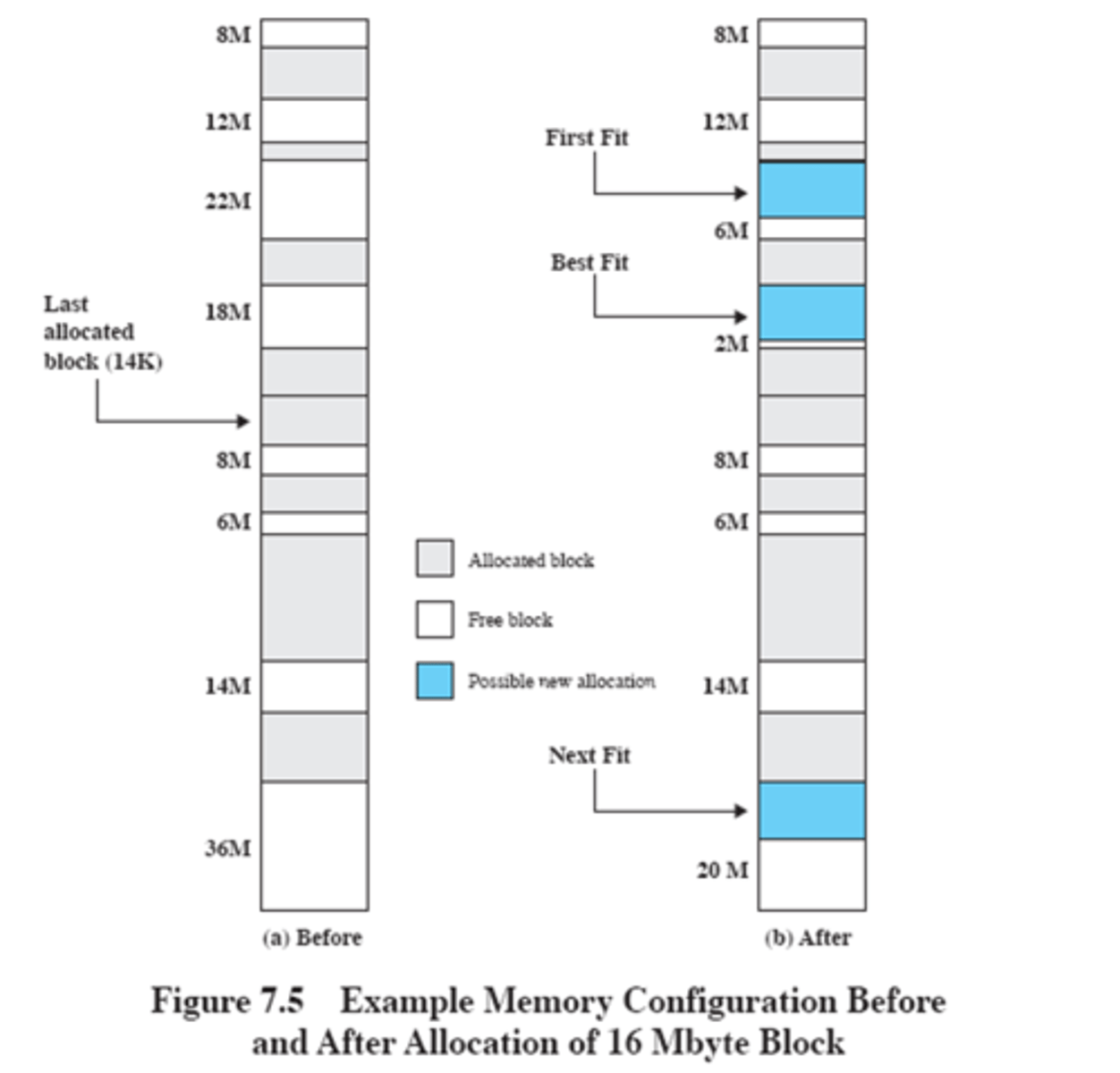

Placement Algorithms

- Best-fit: Chooses the block that is closest in size to the request

- First-fit: Scans memory from beginning to end, chooses the first available block

- Next-fit: First-fit but with a pointer that remembers the last process insertion

Addresses

- Logical: Reference to a memory location independent of the current assignment of data to memory

- Relative: Address is expressed as a location relative to some known point

- Absolute: Actual location in main memory

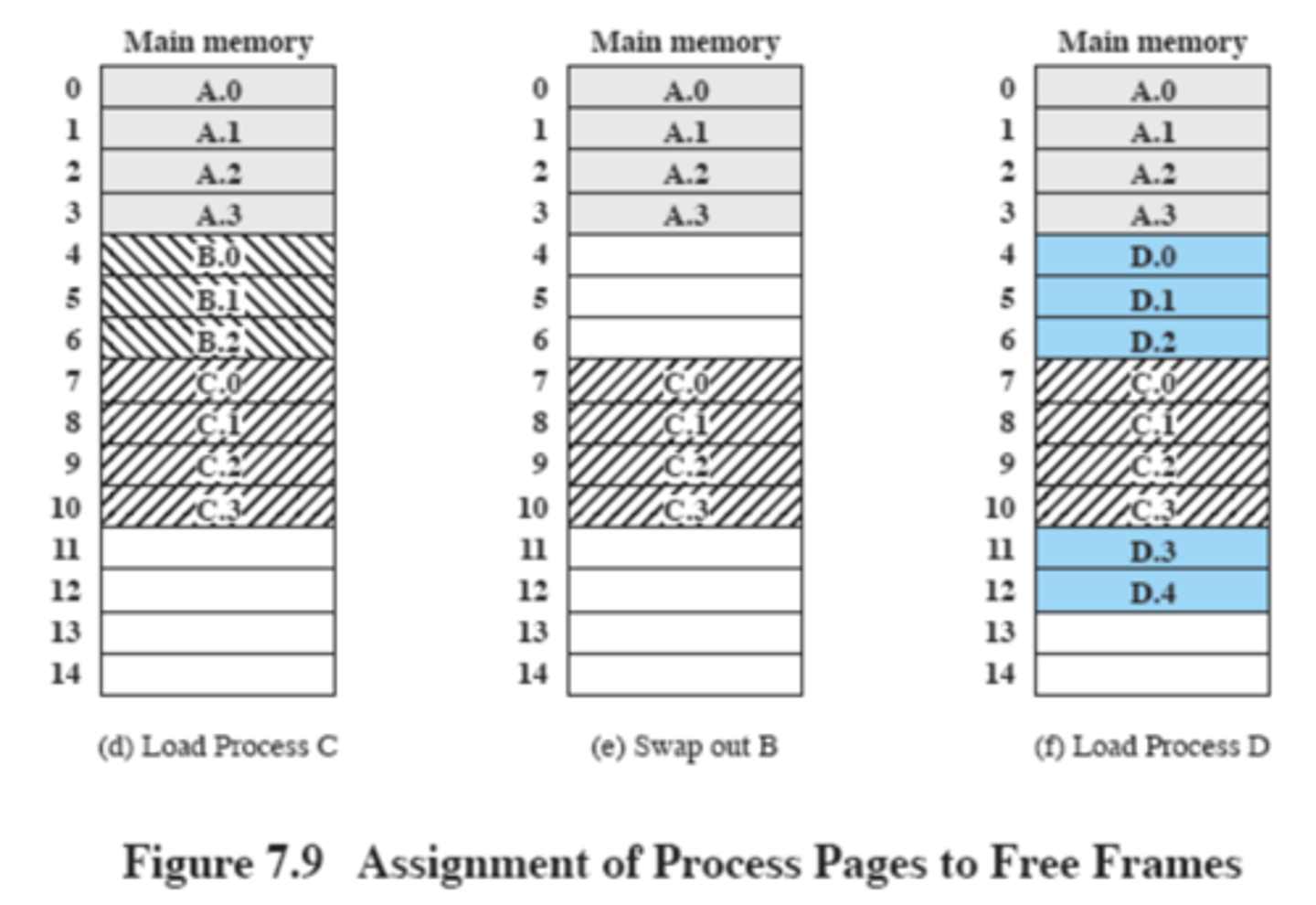

Paging

- Partition memory into equal fixed-size chunks that are relatively small

- Process is also divided into small fixed-size chunks of the same size

- Pages: Chunks of a process

- Frames: Available chunks of memory

Page Table

- Maintained by the operating system for each process

- Contains the frame location for each page in the process

- Processor must know how to access for the current process

- Used by the processor to produce a physical address from a logical address

Virtual Memory

- A storage allocation scheme in which secondary memory can be treated as though it were part of main memory

- The addresses a program may use to reference memory are distinguished from the address the memory system uses to identify physical storage sites

- Size of virtual storage is limited by the addressing scheme of the OS and amount of secondary memory, but not size of main memory

Execution of a Process

- Operating system brings into main memory a few pieces of the program (known as the resident set)

- Hardware generates an interrupt if needed logical address is not in main memory (memory access fault)

- Operating system places the process in a blocking state

Implications of Virtual Memory

- More processes may be maintained in main memory as we only load some pieces of each process

- With so many processes in main memory, it is highly likely at least one process will be in the Ready state at any given time

- A process can be executed that is larger than all of main memory

- Better processor and memory utilization

Thrashing

- A state in which the system spends most of its time swapping out process pages, rather than executing instructions

- In order to avoid this, the OS uses recent history and tries to guess which pieces will least likely be used in the near future

Principle of Locality

- Remember the concept stating program and data references within a process tend to cluster

- This can be applied to process pages, as only a few pieces of a process will be needed over a short period of time

- Avoids thrashing

Page Table Size

- Proportional to the size of a process (image)

- Ex:

- Suppose Process P in Virtual Memory occupies 4GB (2^32 bytes)

- Assume 4KB pages (2^12 bytes) and byte-level addressing

- Then P has 2^20 pages & P's Page Table has 2^20 entries (2^32 / 2^12)

- Assume each entry takes 4 bytes (2^2 bytes)

- Then P's Page Table takes 2^22 bytes (2^20 * 2^2 = 4MB)

Translation Lookaside Buffer (TLB)

- Each virtual memory reference can cause two physical memory accesses: One to fetch the page table entry and another for data

- To overcome this, we use a TLB which is a memory cache that stores recent translations of virtual addresses to physical addresses

- Speeds up memory accesses

Segmentation

- Allows programmer to view memory as consisting of multiple address spaces or segments

- Advantages: Simplifies handling growing data structures, modularity support, protection/sharing support

Combined Paging and Segmentation

- A user's address space is broken up into a number of segments

- Each segment is then broken up into a number of fixed-size pages which are equal in length to a main memory frame

- Segmentation is visible and paging is invisible to the programmer

Policies for Virtual Memory

- Fetch policy

- Placement policy

- Replacement policy

- Resident Set Management

- Cleaning policy

- Load control

Fetch Policy

- Determines when a page should be brought into main memory

- Two main types: Demand paging, prepaging

Demand Paging

- Only bring pages into main memory when a reference is made to a location on the page

- Many page faults when a process is first started

- Page faults should decrease as more and more pages are brought in according to the Principle of Locality

Prepaging

- Pages other than the one demanded by a page fault are brought in

- If pages of a process are stored contiguously in secondary memory, it is more efficient to bring a number of pages at one time

Placement Policy

- Determines where in real memory a process is to reside

- Important design issue in a segmentation system

- For NUMA systems an automatic placement strategy is desirable

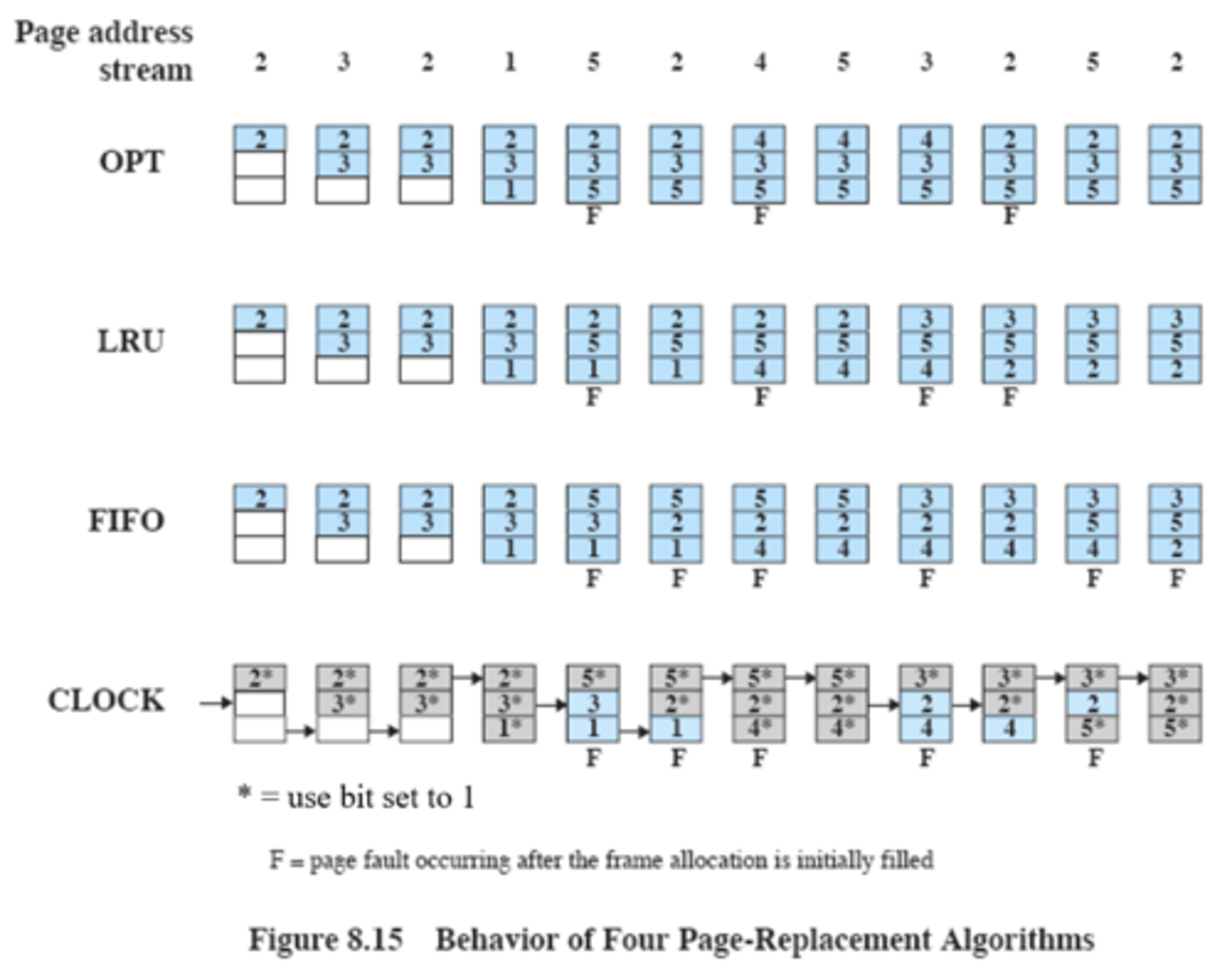

Replacement Policy

- Deals with the selection of a page in main memory to be replaced when a new page must be brought in

- Objective is to remove the page least likely to be referenced in the near future

- The more elaborate the replacement policy, the greater hardware and software overhead exists

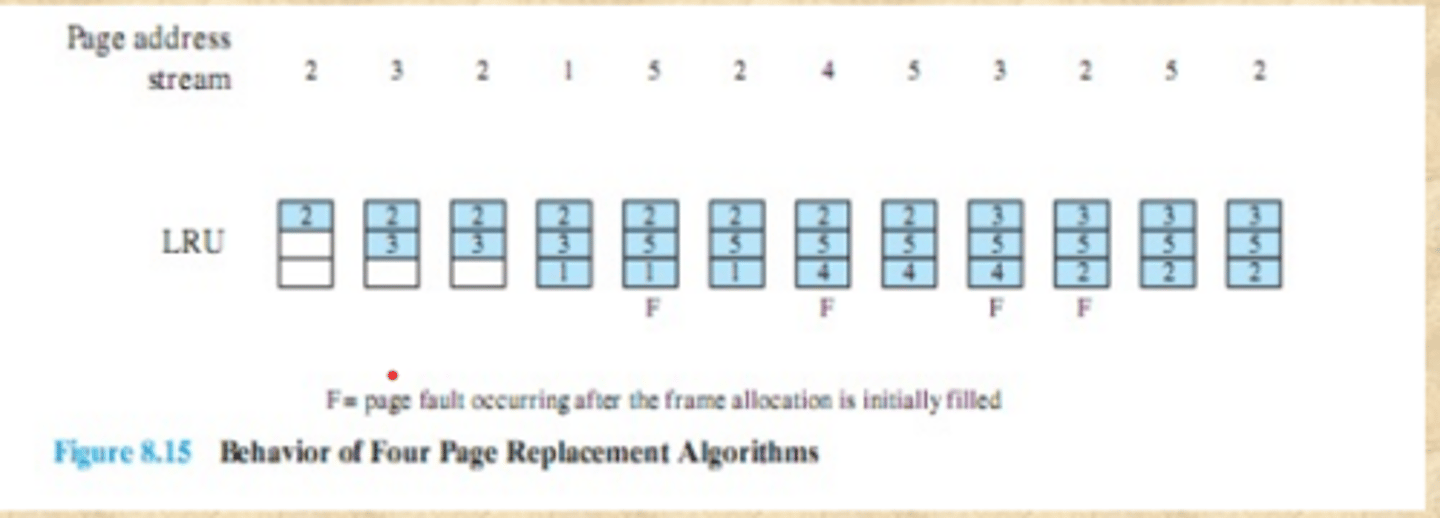

- Basic Algorithms: Least Recently Used (LRU), First-in-first-out (FIFO), Clock

Least Recently Used (LRU)

- Replaces the page that has not been referenced for the longest time

- By the principle of locality, this should be the page least likely to be referenced in the near future

- Difficult to implement, requires a lot of overhead