CHAPTER 4: DESIGN A RATE LIMITER

1/19

There's no tags or description

Looks like no tags are added yet.

Name | Mastery | Learn | Test | Matching | Spaced | Call with Kai |

|---|

No analytics yet

Send a link to your students to track their progress

20 Terms

Requirement questions

Step 1

Is it a client side rate limiter or client side?

Does the rate limiter throttle request based on IP? User ID?

How many request are we talking?

Should it be a separate service or in application code?

Do we tell users they are throttled?

Common requirements

Low latency

High throughput

Low memory usage

High fault tolerance. If there are any problems with the rate limiter (for example, a cache server goes offline), it does not affect the entire system.

Status code for too many requests

429

Benefits of build your own rate limiter

You can choose algorithm

Algorithms for rate limiting

Token bucket

Leaky bucket

Fixed window counter

Sliding window counter

Sliding window log

Considerations for token bucket algo

Bucket size (burst request allowance)

Refill rate

Bucket grouping (per endpoint per IP per server)

Issue with fixed window counter

If the burst falls between 1 windows, up to 2x the limit would be allowed

How does sliding window log work

Keep track of each request timestamp

Get count of request in last 1s on every request

How does sliding window counter work

Uses fixed window counter, but use a percentage of each window

Not 100% accurate

Issues with refilling token bucket

A lot of load to constantly incrementing token counter

Alternative to refilling token bucket

Get token count

If positive, decrement token, update token timestamp, allow request

If negative, calculate new token count based on last updated token timestamp

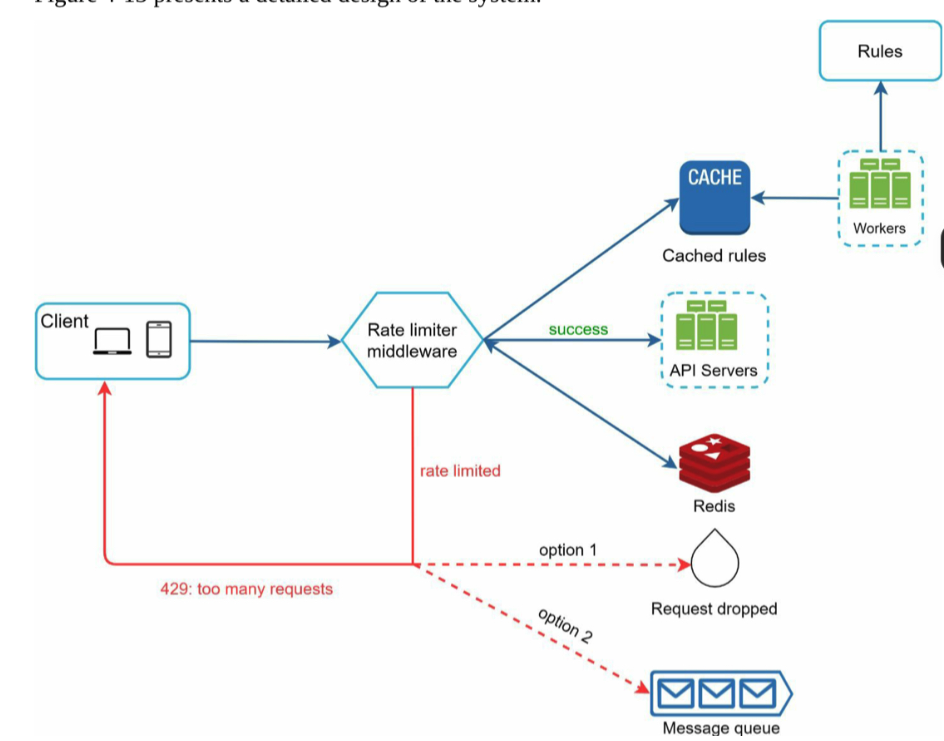

How to make rules configurable

Use YAML config file, each rule has request filter, rate limit unit and requests per unit

Cache the rules

Add worker to update cache if rule changes

HTTP Headers for rate limiting

X-Ratelimit-Limit: total number of requests allowed

X-Ratelimit-Remaining: the remaining number of allowed requests

X-Ratelimit-Retry-After

Options when request hit rate limit

Drop

Put it in message queue and retry later

What’s the race condition challenge

Client needs to calculate how many token in the bucket as well incrementing the bucket in 1 atomic action

Otherwise 2 concurrent request may not count each other

Solution to race condition challenge

Use lua script for 1 atomic action

Use sliding window algo with Sorted String Set in Redis

Components needed for rate limiter

Rate limiter service

Redis

Rule store (cache)

How to improve performance

Close to user (multiple data centers or edge servers)

Synchronize data eventual consistency

How to reduce hitting rate limit

Cache on client side

Gracefully handle rate limit

Add sufficient back off time for retry

High level diagram