selective attention


areas

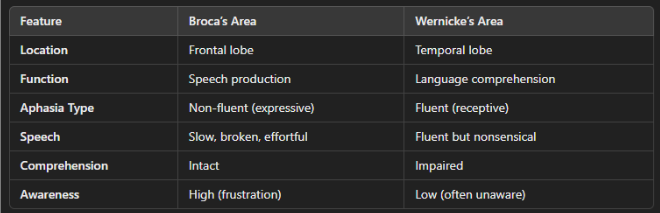

Broca’s area (located in the frontal lobe, usually in the left hemisphere)

primarily responsible for speech production and articulation

helps in forming grammatically correct sentences

coordinates motor functions needed for speaking

Wernicke’s area (located in the superior temporal gyrus, in the left hemisphere)

primarily responsible for language comprehension

helps in understanding spoken and written language

plays a role in meaningful sentence formation

evidence for a specialised structure-computing (parsing) module in the brain

Broca’s aphasia and comprehension difficulties

while Broca’s aphasia is typically associated with difficulty in speech production, some Broca’s aphasia patients also struggle with comprehension

their comprehension deficits mainly appear in syntactically complex sentences

Challenges with complex sentence structure:

Example: ‘put the small round green circle to the left of the brown square above the triangle’

This sentence requires hierarchical parsing and spatial reasoning, which Broca’s aphasia patients often find difficult

problems with reversible sentences

example

‘the dancer applauds the clown’

since either the dancer or the clown could be the subject or the object, word order and grammatical markers (like affixes or function words) are crucial for determining meaning

Broca’s aphasia patients often rely on semantic cues rather than syntax, so they may struggle to correctly interpret who is performing the action

what this tells us about Broca’s area

this supports the idea that Broca’s area is crucial not only for speech production but also for underlying syntax and sentence structure

Patients with Broca’s aphasia rely on meaning (semantics) rather than syntax, leading to difficulties in interpreting sentences where meaning depends on grammatical structure rather than word meaning

perception and attention

perception: successful perception involves making educated guesses about the world

attention: we cannot attend to everything so we attend to some things and not others. We miss a surprising amount of what goes on in the world

dividing attention between voices: the ‘cocktail party’ problem

we cannot understand/remember the contents of two concurrent spoken messages (Cherry, 1950s)

the best we can do is alternate between attending selectively to the speakers

where’s the bottleneck?

focused attention to one of two simultaneous speech messages

‘shadowing’ (ie repeating aloud) one of the messages is

successful if the messages differ in physical properties (location, voice, amplitude)

not successful if they differ only in semantic content (eg novel versus recipe, or two categories of word)

what kind of changes do participants notice in the unattended message?

physical changes (location, voice, volume, gross phonetics)

not semantic changes (eg from meaningful to meaningless, words to pseudowords)

a word repeated 35 times in the unattended message was not remembered better (in a later, unexpected recognition test) than a word heard once

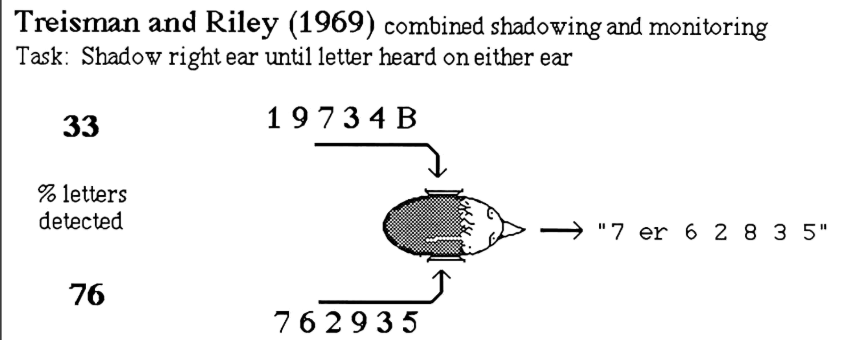

Experiments in the 1960s by Treisman, Moray, and others

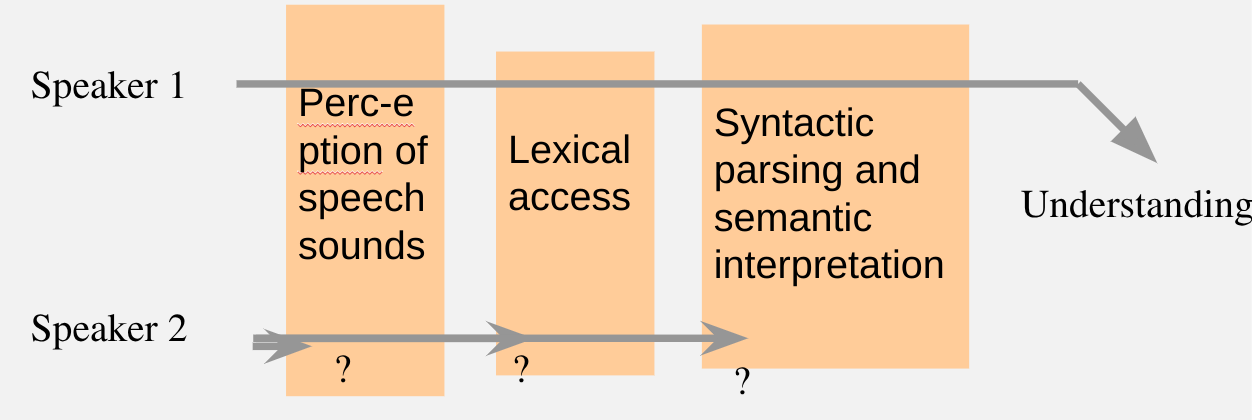

attentional selection precedes lexical identification and access to meaning?

it seems that unattended words are ‘filtered out’ early, after analysis of physical attributes, before access to identity/meaning

so, though aware of unattended speech as sounds with pitch, loudness and phonetic characteristics (in the background), we do not seem to process their identity or meaning

if required to extract identity or meaning from 2 sources, the participant has to switch the attention filter between them - a slow and effortful process:

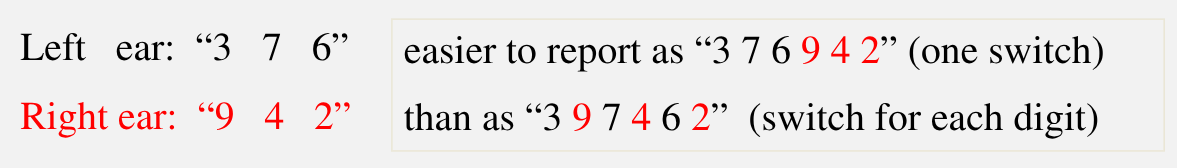

Broadbent’s (1958) dichotic split-span experiment:

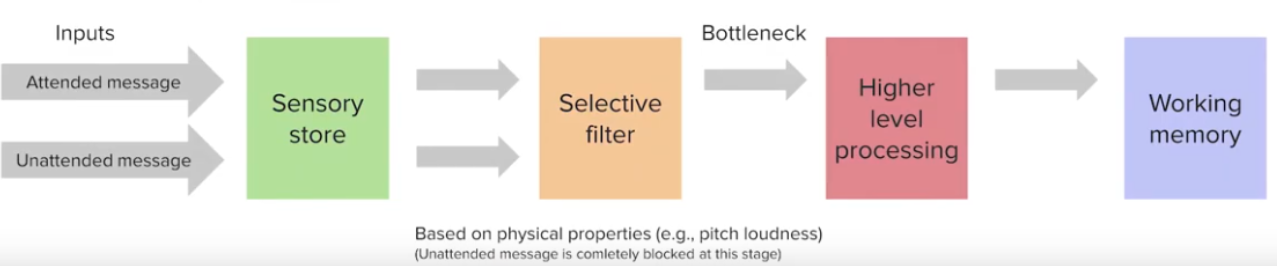

Broadbent’s (1958) ‘Filter’ model

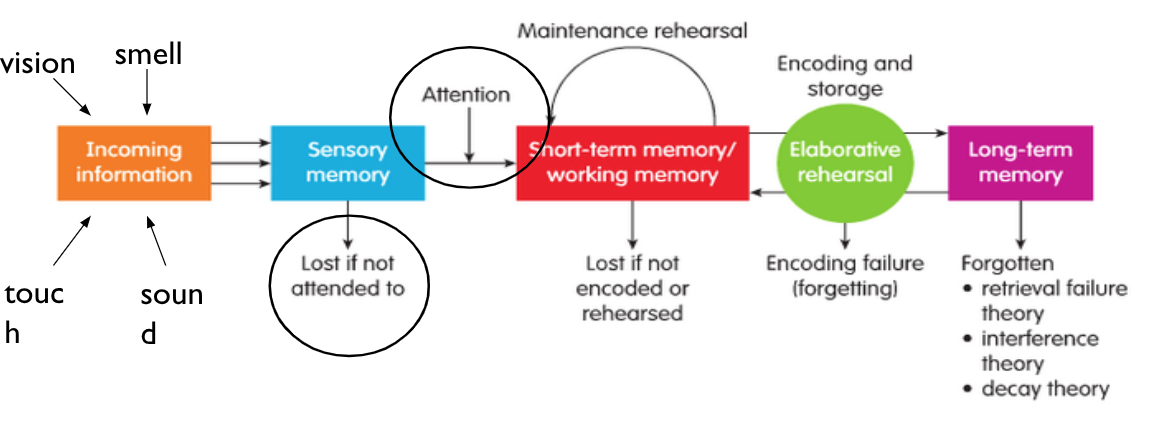

sensory features of all speech sources are processed in parallel and stored briefly in sensory memory (‘echoic memory’)

a selective ‘filter’ is directed to only one source at a time

filter is early in processing, so that only information that passes through the filter achieves

recognition (of words, objects, faces)

activation of their meaning

representation in memory (WM and LTM)

control of voluntary action

access to conscious awareness

2 additional assumptions of Broadbent’s account

filter is all-or-none

filter is an obligatory ‘structural’ bottleneck

but (it turned out) filtering is not all-or-none

examples of partial ‘breakthrough’ of meaning of unattended speech in shadowing experiments:

own name often noticed in unattended speech

interpretation of lexically ambiguous words in attended message influenced by meaning of words in unattended message

attended: they saw the port for the first time

unattended: they drank until the bottle was empty

condition a galvanic skin response (GSR) to a word, eg ‘Chicago’. Then:

GSR evoked by ‘Chicago’ in unattended message, though participant does not notice/remember ‘Chicago’

effect generalises to related city names eg New York

ie meaning has been activated, not just sound pattern detected

but GSRs are weaker to unattended than to attended names: semantic activation by unattended input is attenuated, though not blocked

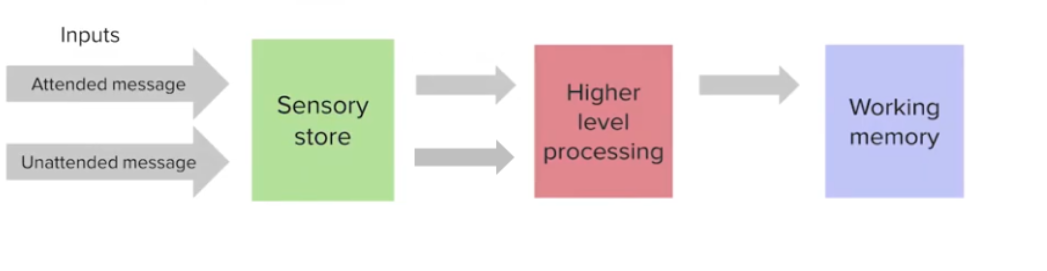

‘breakthrough’ demonstrations inspired ‘late selection’ theories

theory: both attended and unattended words are processed up to and including identification and meaning activation; relevant meanings are then picked out on the basis of permanent salience or current relevance

but late selection doesn’t explain why

selection on the basis of sensory attributes is so much more efficient than selection on the basis of meaning

GSRs to unattended probe words are weaker than to attended ones

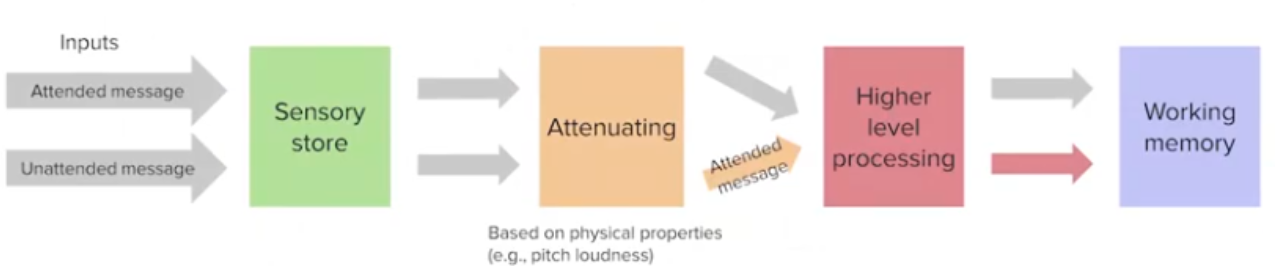

the resolution: filter-attenuation theory (Treisman, 1969)

there is an early filter but

it is not all-or-none: it attenuates (turns down) input from unattended sources (hence, with the support of top-down activation, unattended words, if salient or contextually relevant, can still activate meanings)

early filtering is an optional strategy (not a fixed structural bottleneck)
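One way to make the contrast between Broadbent’s all-or-none filter and Treisman’s attenuator concrete is a toy threshold model: the filter sets a gain on each channel, and a word’s meaning is activated only if the signal passed on reaches that word’s recognition threshold, with salient words (eg one’s own name) given a lower threshold. This is a minimal sketch under stated assumptions; the gain and threshold values and the function names are hypothetical and are not taken from either theory.

```python
"""Toy contrast of Broadbent's all-or-none filter and Treisman's attenuator.

All numbers (gains, thresholds, input strength) are hypothetical
illustration values, not parameters from either theory.
"""

INPUT_STRENGTH = 1.0  # sensory evidence for a word on either channel


def channel_gain(attended: bool, model: str) -> float:
    """Fraction of the sensory signal passed on for word recognition."""
    if attended:
        return 1.0
    if model == "broadbent":
        return 0.0  # all-or-none: the unattended channel is blocked
    if model == "treisman":
        return 0.3  # attenuated (turned down), not blocked
    raise ValueError(f"unknown model: {model}")


def word_recognised(attended: bool, threshold: float, model: str) -> bool:
    """Meaning is activated if the passed-on signal reaches the word's threshold.

    Salient or contextually primed words are modelled (by assumption)
    as having a lower recognition threshold.
    """
    return INPUT_STRENGTH * channel_gain(attended, model) >= threshold


ORDINARY_WORD = 0.8  # hypothetical threshold for an ordinary word
OWN_NAME = 0.2       # hypothetical, much lower threshold for a salient word

for model in ("broadbent", "treisman"):
    print(
        model,
        word_recognised(False, ORDINARY_WORD, model),
        word_recognised(False, OWN_NAME, model),
    )
```

With these values the attenuated channel lets the low-threshold word through but not the ordinary one, mirroring the ‘breakthrough’ pattern; under the all-or-none filter neither gets through.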

early selection is an option, not a structural bottleneck

monitoring for target word (animal name)

left ear: plate cloth day fog tent risk bear..

right ear: hat king pig groan loaf cope lint

Task: press L or R key when animal name is heard

after practice, target detection is as accurate when a target word must be detected in either ear as when it must be detected in only one

this holds unless selective understanding/repetition of one message is required

part 2 - vision

There is quite a lot of evidence that when you display an array of objects very briefly, such as in Sperling’s experiments I described previously, we can select which ones to report on the basis of their physical properties, like colour, size, etc., but not their category – e.g. letter or digit.

However, such displays are rather unrepresentative of normal vision, where we are dealing with dynamic visual events.

One difference between visual and auditory attention is that while we can’t really select a sound to attend to by pointing our ears at it, we clearly do this with vision. That is, usually when we want to attend to an object, we fixate that object so as to get its image on to the fovea, where we have high acuity and good colour discrimination.

But we can also move attention independently of fixation. For example, in some social situations we don’t want to be seen looking at someone, but we can still attend to what they are doing.

An influential way of probing this voluntary movement of visual attention within the visual field was introduced by Mike Posner. Just try a few trials of his paradigm.

the attentional ‘spotlight’ (of covert attention)

‘endogenous cueing’

probable stimulus location indicated by an arrow cue (80% valid) or not indicated (neutral cue)

participant responds as fast as possible to stimulus (maintaining central fixation)

simple RT to onset

choice ‘spatial’ RT (left/right from centre)

choice ‘symbolic’ RT (letter/digit)

all RTs faster for the expected location and slower for the unexpected location

The arrow cue enables you to shift your attention to the expected location without moving your eyes (and Posner monitored eye movements). There were also trials where an uninformative “neutral” cue was presented.

What is the consequence of shifting one’s attentional “spotlight” to a location in this covert way? On average, responses were faster to a stimulus when it occurred in the expected (and therefore attended) location, and slower to a target in the unattended location.

So the processing of stimuli in the attentional spotlight enjoys an efficiency gain, and stimuli outside the spotlight an efficiency penalty.
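A common way to quantify this gain and penalty is a cost-benefit analysis relative to the neutral-cue baseline: benefit = RT(neutral) - RT(valid) and cost = RT(invalid) - RT(neutral), so the overall validity effect is their sum. The sketch below assumes that design; the RT values are invented for illustration and are not Posner’s data.

```python
"""Cost-benefit analysis of a Posner-style cueing experiment (hypothetical RTs)."""
from statistics import mean

# Hypothetical reaction times (ms) per trial type; not Posner's data.
rts = {
    "valid":   [250, 262, 248, 255],  # target at the cued (attended) location
    "neutral": [280, 275, 284, 281],  # uninformative cue
    "invalid": [312, 305, 318, 309],  # target at the uncued location
}

means = {cond: mean(trials) for cond, trials in rts.items()}

benefit = means["neutral"] - means["valid"]  # efficiency gain inside the spotlight
cost = means["invalid"] - means["neutral"]   # penalty outside the spotlight
validity_effect = means["invalid"] - means["valid"]

print(f"benefit = {benefit:.2f} ms, cost = {cost:.2f} ms, "
      f"validity effect = {validity_effect:.2f} ms")
```

Keeping a neutral condition is what allows the validity effect to be split into a benefit and a cost, rather than reporting only the valid-invalid difference.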

In this case the orienting of attention was voluntary, driven by expectation

‘endogenous’ (voluntary, top-down) vs ‘exogenous’ (stimulus driven, bottom-up) shifts

RT is faster just after a sudden onset/change at the stimulus location

even though the onset does not predict the stimulus location

Timing of this ‘exogenous’ cueing is different from endogenous:

exogenous attraction of ‘the spotlight’ is fast

endogenous movement of ‘spotlight’ is slower

But the attentional spotlight also gets attracted involuntarily, or automatically, to an isolated and salient change somewhere in the visual field. This too was studied by Posner. Now, instead of an arrow being presented before the stimulus, one of the boxes suddenly flashed, and this was completely random with respect to which side the stimulus was on: i.e. it didn’t predict where the stimulus would be. Nevertheless he found an advantage for a stimulus presented in the box that had just flashed.

So a sudden onset or movement in the visual field (a ‘singleton’, as attention researchers call it) automatically captures attention.

This automatic, stimulus-driven (exogenous) shift of attention happens very quickly (<200 ms)

The endogenous, or voluntary shift of attention is slower: it takes maybe half a second or more to shift.

Posner's paradigm has recently been used in combination with measures of brain activation to address the question of how early in the processing of visual information this selective focusing happens

recap

endogenous cueing (voluntary, top-down)

attention is guided internally based on expectations and prior knowledge

stimulus location is indicated by a cue (eg an arrow or number)

the cue is valid 80% of the time, encouraging voluntary allocation of attention

reaction time (RT) is measured:

faster for expected locations

slower for unexpected locations

exogenous cueing (stimulus driven, bottom-up)

attention is captured automatically by a sudden visual change (eg. flash or onset of a stimulus)

even if the change does not predict the target location, RT is still faster at the cued location due to automatic attraction of attention

timing differences:

exogenous shifts occur very fast (<200ms)

endogenous shifts take several hundred milliseconds

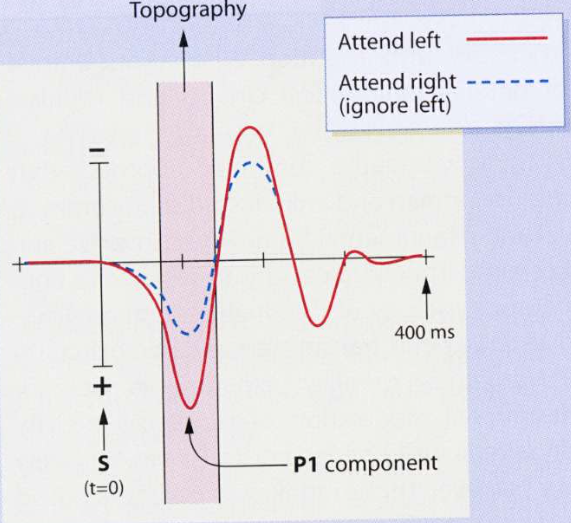

voluntary attention to a spatial locus modulates early components of the ERP in extra-striate visual cortex (Mangun et al., 1993)

the P1 component of the event-related potential (ERP) is enhanced when attention is directed to a spatial location

the graph (bottom left) shows how attention to the left (red line) enhances the P1 response compared to ignoring the left (blue dashed line)

the topographic brain maps (right side) show increased activity in the extra-striate visual cortex when attending to a stimulus

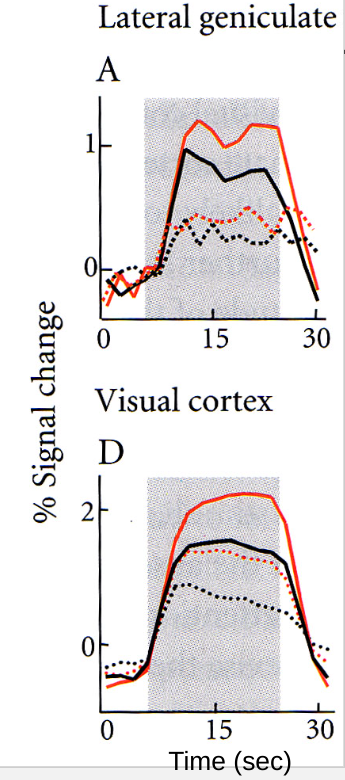

early selection in the Primary Visual Cortex and LGN

visual processing follows a hierarchical pathway:

retina → LGN → V1 → extra-striate cortex

the experiment described involved:

fixating on a central point

digits appearing at fixation (requiring counting)

high or low contrast checkerboards appearing peripherally, requiring attention shifts

early selection in primary visual cortex and even LGN

while fixation maintained on central point,

series of digits appears at fixation, and

high or low contrast checkerboards appear in left and right periphery

the participant either counts digits at fixation, or detects random luminance changes in the left (or right) checkerboard

fMRI BOLD signal in LGN/V1 voxels that respond to the checkerboard luminance change is greater with attention directed to that side (red lines) than with attention to fixation (black lines)

(continuous/dotted = high/low contrast conditions)

so: at least some selection (for regions in the visual field) occurs very early in processing

recap

attention influences early visual processing, not just higher-level cognitive stages

even at the levels of the LGN (thalamus) and V1, there is top-down modulation by attention

this suggests ‘early selection’ - meaning that attention can enhance sensory representations before higher-level processing takes place

visual selection (as for auditory attention)

it is not all-or-none - there is a gradient of enhancement/suppression across the visual field

it is an optional process - the size of the attended area is under voluntary control: ‘zooming the spotlight’

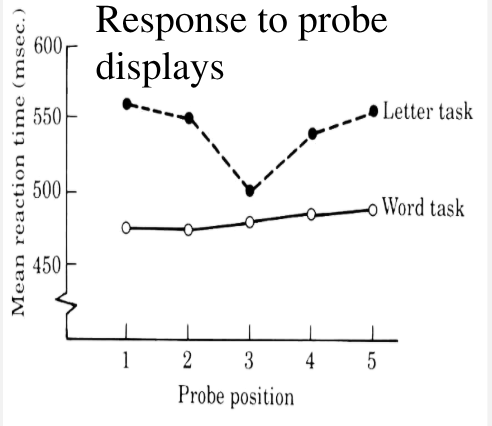

eg LaBerge (1983): on most trials, different groups of participants performed different classification tasks

participants were asked to attend either:

a central letter

the whole word (eg ‘TACTIC’ - checking if it is a real word)

occasionally, a probe display appeared, requiring classification of a single letter (eg Z vs T)

reaction time (RT) was faster for probes near the centre when attention was focused on a single letter (letter task)

RT was faster for probes across the whole word when attention was spread out to process the entire word (word task)

this suggests flexibility in attentional focus - it can be narrowly focused or broadly distributed

visual selection and zooming the attentional spotlight

visual attention is not all-or-none: it operates on a gradient, enhancing relevant information while suppressing distractions

attention is an optional process: the size of the attended area can be voluntarily adjusted, similar to ‘zooming in’ or ‘zooming out’ with a spotlight
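A toy ‘zoom lens’ can be sketched as a graded attentional weight over positions, with the width as the voluntarily adjustable parameter. The Gaussian shape, the width values and the linear mapping from weight to probe RT are all hypothetical assumptions, chosen only to reproduce the qualitative LaBerge pattern (steep RT gradient for the letter task, nearly flat for the word task).

```python
"""Toy 'zoom lens' spotlight: a graded attentional weight over letter positions."""
import math

POSITIONS = range(-2, 3)  # letter positions in a 5-letter display; 0 = centre


def attention_weight(position: int, width: float) -> float:
    """Gaussian gradient of enhancement centred on fixation.

    `width` is a hypothetical spread parameter: small = narrow focus
    (letter task), large = broad focus (word task).
    """
    return math.exp(-(position ** 2) / (2 * width ** 2))


def predicted_rt(position: int, width: float,
                 base_ms: float = 400.0, penalty_ms: float = 200.0) -> float:
    """Hypothetical linear mapping: less attentional weight -> slower probe RT."""
    return base_ms + penalty_ms * (1 - attention_weight(position, width))


for label, width in (("letter task (narrow)", 0.7), ("word task (broad)", 5.0)):
    rts = [round(predicted_rt(p, width)) for p in POSITIONS]
    print(label, rts)
```

Changing only `width` turns a sharply peaked gradient into a broad, shallow one, which is the sense in which the size of the spotlight is under voluntary control.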

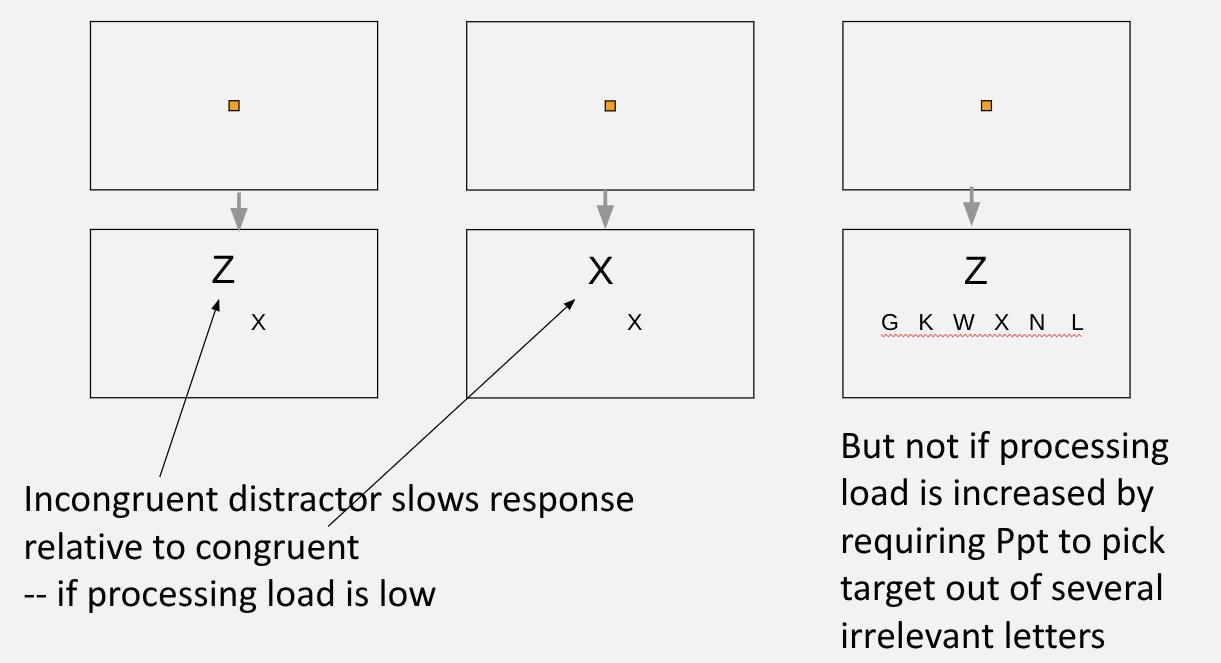

efficiency of early selection depends on processing load?

‘Flanker’ task: press the left key for a small X and the right key for a small Z on the midline; ignore the large letter above or below the midline (the distracting flanker)

early selection is more efficient under high perceptual (processing) load

if attention is fully occupied by a demanding perceptual task, irrelevant distractors have less impact

This supports the load theory of attention: when perceptual load is high, there is less leftover capacity to spill over to distractors
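The load-theory argument (usually associated with Nilli Lavie) can be sketched as spare capacity spilling over to the distractor. This is a toy model under stated assumptions: the capacity units, the linear relation between spare capacity and the flanker effect, and the 60 ms maximum are all hypothetical.

```python
"""Toy version of load theory: spare capacity spills over to distractors."""

CAPACITY = 1.0  # hypothetical total processing capacity (arbitrary units)


def spare_capacity(task_load: float) -> float:
    """Capacity left after the relevant task takes what it needs."""
    return max(0.0, CAPACITY - task_load)


def flanker_interference_ms(task_load: float, max_effect_ms: float = 60.0) -> float:
    """Hypothetical: the flanker effect grows with spare capacity, because
    leftover capacity is assumed to be spent involuntarily on the distractor."""
    return max_effect_ms * spare_capacity(task_load) / CAPACITY


print(flanker_interference_ms(0.3))  # low load: plenty of spare capacity
print(flanker_interference_ms(1.2))  # high load: the task exhausts capacity
```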

visual attention in dynamic scenes: inattentional blindness

expts by Daniel Simons required participants to attend closely to one coherent ‘stream’ of visual events on the screen, spatially overlapping with another stream

highly salient events in the unattended stream are missed by a large proportion of participants (~50% in Simons’ experiments)

hence the events of the unattended stream, though happening in a part of the visual field fixated by the participant (as later eye-tracking studies show), do not appear to be processed to the level of meaning

summary of visual attention

processing of information in the visual field to the level of recognition and meaning is highly selective and limited:

the ‘spot-light’ of visual attention can be moved voluntarily (and relatively slowly) to locations/objects of potential interest away from fixation. It is attracted automatically (and relatively fast) to local transients in the visual field (eg Posner exps). The size of the spotlight can be varied or ‘zoomed’ (eg LaBerge exp)

this selective filtering occurs early in the processing of visual information

processing of objects outside the ‘spotlight’ is relatively shallow (little evidence for object recognition, or activation of meaning) (eg. ‘inattentional blindness’ and visual search)

capacity and multitasking

the limiting factors

working memory capacity

speed of processing

attention

multi tasking and cognitive capacity

even when we do just one task, there are limits to cognitive capacity

all processes take time (eg memory retrieval, decision making)

there are limits to the input any one process (eg syntactic parsing) can handle

representational/storage capacity is limited (eg working memory)

capacity limits become even more obvious when resources must be shared between tasks ie

have to get more than one task done in a certain time

at least some tasks are time-critical (can’t wait), so we must either

try to do them simultaneously

or switch back and forth between them

examples of real life multitasking

cooking and ironing and baby-monitoring and phone answering and door bell

chef, air traffic controller, pilot, taxi-dispatcher, driver

these limitations are important

theoretically (what is the global computational architecture of the mind/brain?)

practically (efficiency, risk - ‘human error’ as a source of accidents)

multi tasking: the demands include

when we try to do the tasks simultaneously:

competition for shared resources - dual task interference

when we try to switch between tasks, the overhead includes:

set-shifting or task-switching costs

retrospective memory

prospective memory

monitoring for trigger conditions (is it time to do X?)

remembering the meaning of the ‘trigger’ (what am I supposed to do now?)

other demands on executive control:

planning, scheduling, prioritising, coordinating the two task streams

trouble-shooting, problem-solving

when things go wrong or unexpected problems arise

so (a) multitasking is not a single competence

(b) executive control processes are critical

an example: using a mobile phone while driving

use of handset while driving now illegal in UK

use of hands-free phone not illegal, but you can be prosecuted if use is concurrent with an accident

yet many people think they can drive competently while speaking on a mobile phone, especially if using a hands-free set

but they are wrong

epidemiological studies show increased accidents (relative risk similar to driving at legal limit for alcohol)

observational studies show eg. delayed braking at T-junction

experimental studies show impaired braking, detection of potential hazards etc, especially in young drivers

study on relative risk of mobile phone use and drinking - Strayer, Drews and Crouch (2006)

driver in simulator: follows a pacer car in the slow lane of a motorway for 15 minutes, tries to maintain distance; pacer car brakes occasionally

Baseline vs Alcohol (80mg/100ml) vs Casual talk on hand-held or hands-free mobile (call initiated before and terminated after measured driving)

mobile phone users: slower reactions, more tail-end collisions, slower recovery

alcohol: more aggressive driving (closer following, harder braking)

no significant differences between effects of talking on hand-held and hands-free!

further driving simulator studies show

(just) talking on hands-free mobile phone:

reduced (by ~50%) anticipatory glances to safety critical locations (eg parked lorry obscuring a zebra crossing)

reduced (by ~50%) later recognition memory of objects in driving environment

increased probability of unsafe lane change

does talking to passengers have the same effect? crash risk data suggests not.

simulator studies show that (unlike person on phone)

passengers are sensitive to the driver’s load eg stop talking, wait for reply

passengers help spot hazards, turn-offs etc

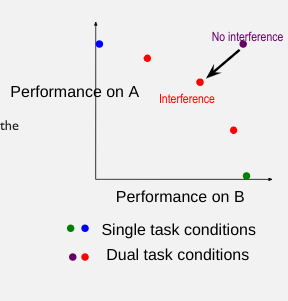

measuring dual-task interference in the lab

just two tasks, designed for measurement and manipulation

typically measures performance

on tasks A and B alone

on A and B combined

does performance on each task deteriorate when the other must also be performed?

note: must measure performance on both tasks: participant may be able to trade off performance of A and B
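The point about measuring both tasks can be made concrete by computing a proportional dual-task cost for each task separately. A minimal sketch, assuming one accuracy measure (higher is better) and one RT measure (lower is better); the scores and the function name are hypothetical.

```python
"""Dual-task costs must be computed for BOTH tasks (hypothetical scores)."""


def dual_task_cost(single: float, dual: float, higher_is_better: bool = True) -> float:
    """Proportional decrement in dual- vs single-task performance (0 = no cost)."""
    if higher_is_better:
        return (single - dual) / single
    return (dual - single) / single  # eg RTs or error counts


# Hypothetical participant: accuracy (%) on task A, RT (ms) on task B.
cost_a = dual_task_cost(single=92.0, dual=91.0)
cost_b = dual_task_cost(single=600.0, dual=780.0, higher_is_better=False)

print(f"task A cost: {cost_a:.1%}, task B cost: {cost_b:.1%}")
# Looking only at task A would suggest almost no interference;
# task B shows the participant may have traded B off to protect A.
```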

possible sources of dual-task interference

slower and less accurate performance in dual task conditions might be attributable to

competition for use of specialised domain-specific resources

parts of body (effectors, sense organs)

brain ‘modules’: specific processes/representations

competition for use of general purpose processing capacity

central processor?

pool of general-purpose processing resource?

limited capacity of executive control mechanisms that set up and manage the flow of information through the system

and/or sub-optimal control strategies

competition for domain-specific resources eg

certain cognitive tasks interfere with each other when they rely on the same specialised resources:

auditory processing: listening to two speech streams at once impairs comprehension, as both rely on speech perception mechanisms

visuo-spatial working memory: performing a spatial tracking task makes it harder to use visual imagery for memory tasks

dual-task interference occurs when tasks require the same sensory, response or central processing mechanisms.

However, if the information rate is low enough, resources may be efficiently switched between tasks to reduce interference

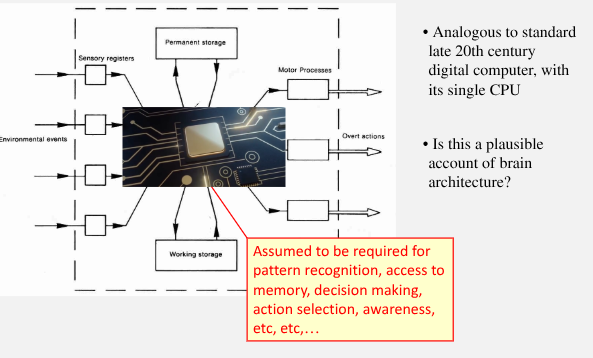

competition for a general-purpose processor?


the central processing model compares cognitive processing to a single-CPU computer:

a single processing unit is responsible for:

pattern recognition

memory access

decision-making

action selection

awareness

Posner (1978) linked this central processor to consciousness. This model suggests that any task requiring central processing competes for the same limited resource, leading to task interference

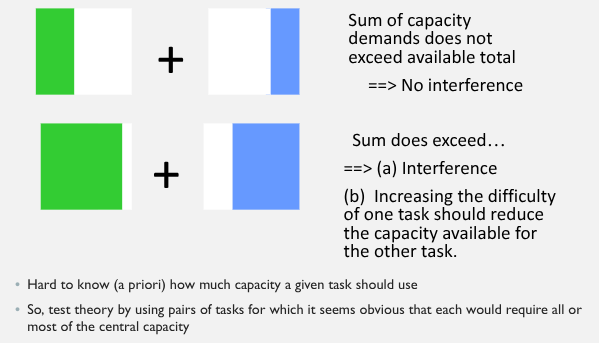

a general-purpose resource pool?

Kahneman (1975) proposed a pool of general-purpose resources that is shared among concurrent tasks (mental energy eg attention and effort)

The capacity of the general purpose resource might vary:

over people

within people, states of alertness:

level of ‘sustained attention’ (=available ‘resource’ or ‘effort’)

diminishes with boredom or fatigue

increases with time of day (apart from post lunch dip), presence of moderate stressors (eg noise, heat, but only up to a point), emotional arousal (up to a point), conscious effort

suppose there were a central processor or resource pool, whose capacity could be shared by (any) two tasks:

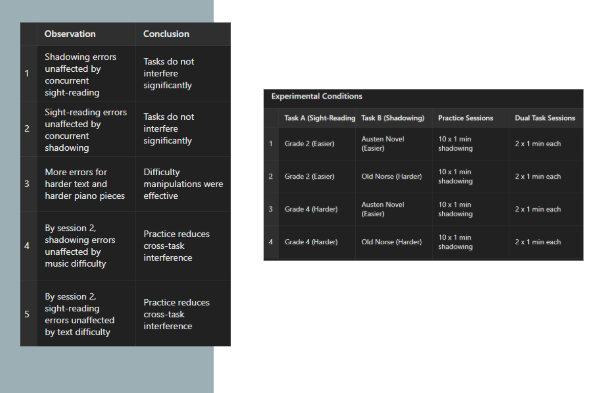
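If a single pool were shared by any two tasks, making one task harder should degrade the other as soon as their combined demand exceeds capacity. A toy version of that prediction, assuming the pool is divided in proportion to demand; the capacity, demand values and allocation rule are hypothetical.

```python
"""Prediction of a single shared resource pool, as a toy model.

Capacity, demands and the proportional-allocation rule are hypothetical.
"""

CAPACITY = 1.0


def performance(demand_a: float, demand_b: float) -> tuple[float, float]:
    """Fraction of each task's demand that is met (1.0 = as good as alone)."""
    total = demand_a + demand_b
    if total <= CAPACITY:
        return 1.0, 1.0  # enough resource for both: no interference
    # Each task is allocated CAPACITY * demand / total,
    # so both get the same fraction CAPACITY / total of what they need.
    scale = CAPACITY / total
    return scale, scale


easy_b, hard_b = 0.4, 0.8
print(performance(0.5, easy_b))  # combined demand fits in the pool
print(performance(0.5, hard_b))  # making B harder also hurts A
```

In the Allport, Antonis and Reynolds study, the analogue of raising `hard_b` (a harder piece or harder text) did not reduce performance on the other task, which is what makes that result awkward for a single-pool account.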

a case of demanding tasks combined without interference - Allport, Antonis and Reynolds (1972)

University of Reading Y3 music students (competent pianists). Tasks:

A: sight-read Grade 2 (easier) or Grade 4 (harder) piano pieces.

B: shadow prose from Austen novel (easier) or text on Old Norse (harder)

relatively little practice: 10 × 1 min shadowing (to reach criterion of 2 trials with no omissions), 2 × 1 min sight-reading, 7 × 1 min dual task

experiment: two sessions of 2 × 1 min dual task for each combination of easy and hard, and 1 min sight-reading or shadowing alone (order balanced)

the rate of shadowing and number of shadowing errors were no different with and without concurrent sight reading

concurrent shadowing also did not increase sight reading errors

more shadowing errors for harder text, and more sight-reading errors for grade 4 pieces (difficulty manipulations effective), but (by session 2):

shadowing performance not influenced by difficulty of music piece

sight-reading performance not influenced by difficulty of prose shadowed

dual-task interference challenge

write down numbers 1 to 10

now, say the alphabet from A to T

now, alternate between writing a number and saying a letter (eg write ‘1’, say ‘A’, write ‘2’, say ‘B’ etc)

how multitasking slows performance due to switching costs and dual-task interference

two more examples

evidence against a general-purpose processing bottleneck

tasks can be combined without interference:

Shaffer (1975): skilled typists can visually copy-type while shadowing prose (repeating spoken words aloud) without interference

suggests parallel processing of distinct tasks when they rely on separate cognitive resources

task difficulty doesn’t always influence performance:

North (1977): a continuous tracking task was combined with a digit recognition task (identifying/classifying numbers)

increasing difficulty of the digit task did not slow performance in the tracking task

this suggests that when tasks rely on different cognitive systems, they can operate independently

the radical claim: no central general-purpose processor/resource - Allport, 1980

Allport (1980) rejected the idea of a single ‘central processor’. Instead, tasks rely on separate, non-overlapping modules (eg. sensory modalities, motor control, memory systems)

If tasks engage different brain networks, they can be performed simultaneously with little interference

this challenges Kahneman’s (1975) general-resource theory, which posited a shared attentional pool.

but, even when tasks use completely different modules, some interference may arise due to coordination and control demands - ie as a consequence of the load on (specialised) cognitive processes

eg Allport et al: an error in one task briefly slows the other

back to driving and phone conversation

so isn’t it the case that

a - driving/navigation (visuo/spatial input →hand/foot responses) and

b - conversation (speech input ↔ meaning ↔ speech production)

require use of quite different ‘modules’?

yes and no. they use different input and output modalities, but both require construction of a ‘mental model’

(for driving: representation of route, goals, progress, meaning of road-signs, interpretation of observed events, prediction of potential hazards etc)

the construction of the mental model for driving can be interfered with by a conversation that asks the driver to think about a visuo-spatial arrangement (eg when we get to X, do you know how to find Y) or imagine movements

the importance of practice

tasks which cannot be combined without interference become easier to combine with practice.

Eg. changing gear while driving

Spelke, Hirst and Neisser (1976): after 85 hours of practice at:

reading stories (for comprehension) at the same time as writing to dictation (6 weeks)

( and then)

reading stories concurrent with writing category of spoken words (11 weeks)

some participants showed little dual-task interference

because?

practising one task automates it: reduces need for ‘executive’ control of the constituent processes

practising combining tasks develops optimal control strategies for combining that particular task pair

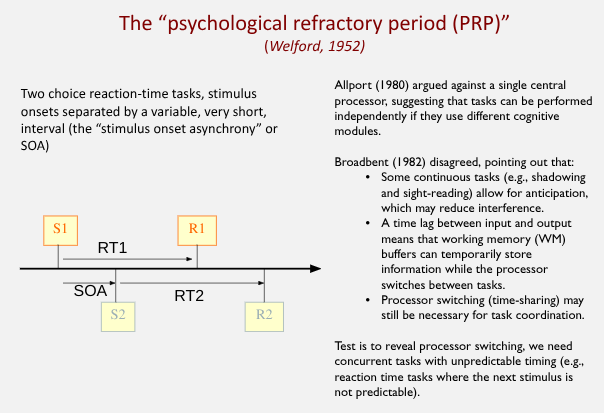

Broadbent’s objection to Allport-type experiments - Broadbent (1982)

with pairs of continuous tasks like shadowing and sight-reading there is

some predictability in the input (ie can anticipate)

a substantial lag between input and output (so there must be temporary storage in WM of input and/or output)

so, there could still be a central processor switching between the two tasks (time-sharing). While processor services one task, input/output for the other task could be stored in WM buffers

test: processor-switching should be revealed if we use concurrent tasks with very small lags between input and output, and the next stimulus unpredictable ie reaction time tasks

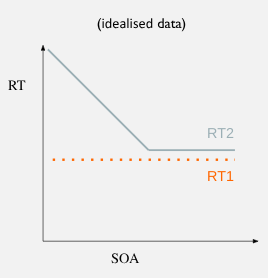

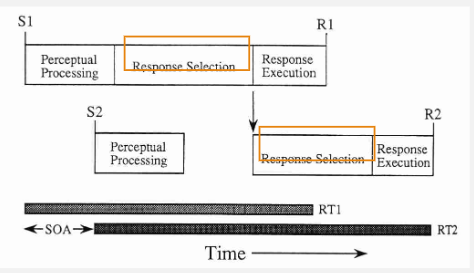

the ‘psychological refractory period (PRP)’ - Welford, 1952

PRP demonstrates a fundamental limit in multitasking, supporting the idea of a central bottleneck.

Two choice reaction-time tasks occur in quick succession.

Stimulus 1 (S1) → response 1 (R1)

after a short delay (Stimulus Onset Asynchrony, SOA), stimulus 2 (S2) → response 2 (R2)

When SOA is short:

RT1 remains stable

RT2 is delayed (bottleneck effect)

As SOA increases, RT2 speeds up because the bottleneck is cleared

Response selection is the processing bottleneck:

only one response selection process can occur at a time

if a second stimulus is identified while the first response is being selected, response selection for the second task must wait until response selection for the first task is complete

This explains why RT2 is delayed at short SOAs
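The bottleneck account can be written as a simple stage model: each task has a perceptual stage A, a response-selection stage B (the bottleneck) and a response-execution stage C, and B2 cannot start before B1 has finished. Measured from S2 onset, this gives RT2 = max(A1 + B1, SOA + A2) + B2 + C2 - SOA. A minimal sketch, with hypothetical stage durations:

```python
"""Central-bottleneck account of the PRP effect (stage durations hypothetical)."""

# Hypothetical stage durations in ms:
# A = perceptual processing, B = response selection (the bottleneck),
# C = response execution.
A1, B1, C1 = 100, 150, 80
A2, B2, C2 = 100, 150, 80


def rt1() -> int:
    """Task 1 never waits, so RT1 does not depend on SOA."""
    return A1 + B1 + C1


def rt2(soa: int) -> int:
    """RT2 measured from S2 onset; task 2 response selection cannot start
    until task 1 response selection has finished (at time A1 + B1)."""
    start_b2 = max(A1 + B1, soa + A2)  # measured from S1 onset
    return start_b2 + B2 + C2 - soa


for soa in (0, 50, 100, 150, 300, 600):
    print(soa, rt1(), rt2(soa))
```

The model reproduces the pattern in the notes: RT1 is constant, and at short SOAs RT2 falls by 1 ms for every 1 ms increase in SOA until the bottleneck is clear (here at SOA = A1 + B1 - A2), after which RT2 levels off at A2 + B2 + C2.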

conclusions

true multitasking is rarely achieved; it is usually better (and often more efficient) to focus on one task at a time

why? switching attention constantly consumes energy and drains cognitive resources

executive control is needed for coordinating, scheduling and prioritising, and these mechanisms have capacity limits; being constantly on alert leads to fatigue, burnout etc