Psych 225 Exam 1


consumer of research

Ability to tell high-quality research information

Must seek empirical evidence to test the efficacy of interventions (Ex. scared straight), with ability to evaluate the evidence behind claims and be more informed by asking right questions

Informs you of programs that do work (ex. mindfulness) for future jobs to invest in

evidence based treatments

therapies supported by research, sales analytics, teaching methods

empiricism

using evidence from the senses or from instruments that assist senses (scales, thermometers, timers, questionnaires, photographs) as the basis for conclusion

Ideas & intuitions are checked against reality to learn how the world works in ways that can be answered by systematic observation

work is verifiable by others, claims must be falsifiable

Most reliable basis for conclusions

animal attachment theories

Cupboard theory of mother-infant attachment is that a mother is valuable to a baby mammal because she is a source of food, over time the mom takes on a positive value because associated with food

Contact comfort theory is babies are attached because of the comfort of warm fur

Harlow tested this by separating food from contact comfort, building two kinds of “mothers” for his lab monkeys. The monkeys ended up choosing contact over food.

theory

set of statements that describe general principles about how variables relate to each other

Studies don’t prove theories, you cannot make generalizations about phenomena you have not observed (ex. Can’t say you proved that all ravens are black).

A single study cannot prove a theory, and a single disconfirming finding does not scrap a theory.

Instead, you say the data support or are consistent with a theory. Theories are evaluated based on the weight of evidence

types of theories

Functional theories focus on why

mechanistic theories focus on how

some theories provide organization without explanation. Different than the use of “theory” colloquially

Ex. Pavlov’s classical conditioning, Maslow’s hierarchy, Bandura’s social learning

Not all theories are formally named

how theories are used

Theories help:

-To think about phenomena by organizing them

-To help generate new research questions

-To make predictions

Researchers often consider multiple theories at once; theories can be complementary or competing

Theories don’t have to be entirely correct to be useful

hypothesis

empirically testable proposition/prediction about some fact, behavior, or relationship based on theory

stated in terms of the study design & the specific outcome the researcher will observe to test if they are correct based on specific conditions

Many researchers test theories with a series of empirical studies, each designed to test an individual hypothesis

data

set of observations which can either support or challenge the theory. When data doesn’t match the hypothesis, the theory needs to be revised or the research design must change

preregistered hypothesis

after the study is designed but before collecting any data, the researcher states publicly what the study's outcome is expected to be

replication

study being conducted again to test whether the result is consistent.

Theories are evaluated based on weight of evidence which is a collection of studies (& replications) of the same theory

falsifiability

characteristic of good theories: a theory should lead to hypotheses that, when tested, could fail to support the theory.

Some supporters treat their theory as not falsifiable (ex. proponents of therapist-facilitated communication argue that testing it shows a lack of faith in disabled people), so its efficacy can never be tested

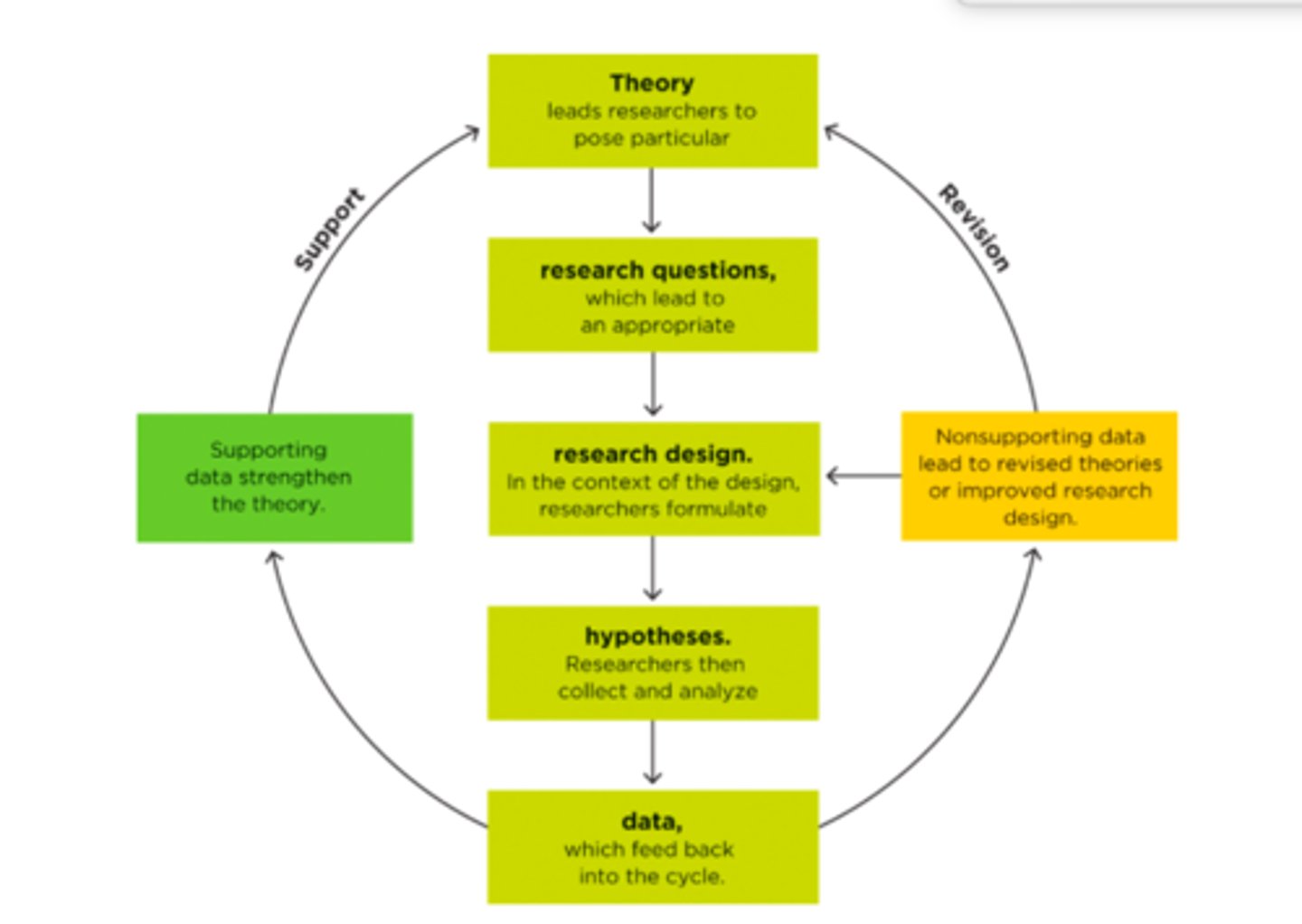

theory data cycle

1. theory

2. research questions

3. research design

4. hypothesis (then preregister)

5. data

repeat if data supports theory. If not, alter research design or theory and redo.

theories are evaluated based on the weight of evidence, a single study can't "prove" a theory

Behavioral research is probabilistic

Merton's scientific norms

-universalism

-communality

-disinterestedness

-organized skepticism

self correcting

scientific norm:

discovering your own mistaken theories and correcting them by being open to falsification and skeptically testing every assumption

universalism

scientific norm:

scientific claims are evaluated according to their merit, independent from the researcher and their background

communality

scientific norm:

scientific knowledge is created by a community and its findings belong to that community (methods, analysis, and interpretation are reviewed and critiqued by experts), so scientists are expected to be transparent and share their results with others

opinion/perspective piece

Drawing on recent empirical research, the author formulates an opinion about a controversy, an important finding, or a disagreement in a field's theoretical foundation, methodology, or application

Ex. Participatory autism research: how consultation benefits everyone

disinterestedness

scientific norm:

scientists strive to discover the truth and are not swayed by politics, profit, idealism. They are not personally invested in whether their hypothesis is supported by the data, they don't spin the story, they don't let their own beliefs bias the interpretation or reporting

organized skepticism

scientific norm:

scientists question everything, including their own theories and widely accepted ideas; they always ask to see the evidence

applied research

done with a practical problem in mind and the researchers conduct their work in a real world context

Ex. Is the school's new method of teaching working better than the old one? Is a depression treatment working?

Ex. do first responders who debrief with a counselor after a stressful decision experience less emotional distress?

basic research

to enhance the general body of knowledge rather than address a specific problem, understanding structures of systems, motivations of a depressed person, or limitations

Could be applied to real-world issues later on

Ex. what neural pathways are involved in decision-making under stress?

translational research

use of basic research to develop and test approaches to health care and treatment intervention, the bridge between basic & applied (ex. Mindfulness to study skills)

Ex. how can a smartphone app target these decision making pathways to help first responders make decisions under stress

scientific journal

come out every month and contain articles written by many researchers. Articles are peer-reviewed anonymously by other experts on the subject, and the editor then decides whether each one should be published.

They comment on the importance of the work, how it fits with existing knowledge, how convincing the results are, and how competently the research was done

Journals reject over 90% of submissions.

Scientists who find flaws in published research can publish commentaries for correction

journalism

secondhand report about the research, written by journalists interested in a study, they turn it into a news story by summarizing it for a popular general public audience

Journalists may exaggerate when summarizing, which misrepresents science in the media (ex. coverage of the Mozart effect distorted the results of the first study)

comparison group

enables us to compare what would happen both with and without the thing we are interested in (ex. Radical mastectomy to show it was unnecessary)

By not testing any comparison groups, scientists fail to follow a norm of organized skepticism by assuming their hypothesis was working instead of looking for the evidence

personal experience

Limitations:

has no comparison group (no way to see results if you had not taken action)

is confounded (difficult to isolate variables)

only seen through one lens (a researcher can see all conditions)

not probabilistic

"My kid seems less happy when she doesn't use screens"

confounds

alternative explanations for an outcome, we can't always know what caused a change

Occurs when you think one thing caused an outcome but in fact other things changed too, so you are confused about what the real cause was

research is better than experience

Ex. Study testing whether venting anger is beneficial. Those who sat quietly for 2 minutes ended up punishing Steve (the essay grader) the least. This did not support the original hypothesis that letting your anger out helps.

If you had asked someone from the punching group if it helped, they would only consider their own experience, as opposed to the researcher who sees it all. Also allowed to separate exercise and venting anger into two groups.

confederate

an actor playing a specific role for the experimenter

probabilistic

research results do not explain cases all of the time in behavioral research

Conclusions are meant to explain a certain proportion of possible cases based on patterns.

intuition biases

1) being swayed by a good story (they feel natural)

2) availability heuristic

3) present/present bias (failure to consider things we cannot see)

4) confirmation bias

5) bias blind spot

"Exercise is good for kids, so sitting in front of a screen is probably not"

must instead strive to collect disconfirming evidence

availability heuristic

bias where things that pop up easily in our mind guide our thinking (recent or memorable events come easier to mind, leading us to overestimate how often things happen)

ex. Overestimating homicide rates, the # of Muslim people in the area, or the time spent at red lights

confirmation bias

cherry picking information that supports what we already think

Ex. looking at articles that support or don't support IQ tests based on your score

bias blind spot

when we think biases do not apply to us and that we are objective. Envelops all other biases

Makes it more difficult to initiate the scientific theory data cycle because you "already know that it is correct"

Ex. people believed americans would blame the victims, even though they would not do that themselves

trusting authorities

Must ask if the authority systematically compared different conditions as a researcher would do. How do they make their decisions?

If an authority refers to research evidence in their own area of expertise, their advice might be worthy, but it might just be based on their experience or intuition, like the rest of us

"The American Academy of Pediatrics recommends reducing screen time for children"

empirical journal articles

report the original results of an empirical research study, including details about the abstract, intro, method, discussion, and results. Written for psychologists

portions of methods or results may be shown in "supplementary materials" section online

AKA "empirical report", "feature article" or "target article"

review journal articles

qualitative review/ summarize and integrate many published studies that have been done in one research topic, to draw conclusions about common trends

Might use meta-analysis (quantitative review) to combine the results of many studies and give a number that shows the magnitude (or effect size) of a relationship. Valued because every study is weighed proportionately rather than cherry-picked.

theoretical article

Describes a theory or model of a psychological process

Integrates empirical and theoretical findings to show how specific theory or model can help guide research

edited books

Collection of chapters on a common topic, each chapter is written by a different contributor. Not peer reviewed as heavily as journals, but co-authors are carefully selected to write chapters. Written for psychologists.

finding scientific sources

-PsycINFO shows articles written by author or keyword, shows if it was peer reviewed

-Google Scholar is search engine for empirical journals, but you can't limit your search term to specific fields

Stay away from "predatory" websites which publish any submission they receive even if it is wrong. Want an impact factor of >1

Must search similar subject terms and be not too broad and not too specific

paywalled source

accessed through subscription only, while open access is free to the general public

the second one supports Merton's norm of communality

components of an empirical journal article

-abstract

-introduction

-method

-results

-discussion

-references

abstract

1. concise summary of the article (250 words max) that briefly describes the research: the question it addresses, methodology, outcome, conclusions

Must be simple but not boring

introduction

2. establishes importance of the topic, nature of question being addressed, background for research (what past studies, why is it important, what theory is tested), and specific research questions or hypotheses (based on existing research)

Has review of literature that gives a sense what other psychologists think about the issue, in an objective view to all sides

Questions to ask when reading introduction

What general topic is this addressing? What have other researchers already discovered? How does this study follow from earlier research? What is new about this study compared to previous? What are the research hypotheses?

method

3. how the researcher conducted their study, subsections of participants, materials, procedure, and apparatus. Shows what participants experienced, less to do with theory.

subjects, apparatus/materials, procedure

A good one gives enough detail for study replication

questions to ask when reading methods

What are the characteristics of the participants? What apparatus was used? What did the participants actually do?

subjects & participants

part of method section:

Includes the nature of the people or animals who took part in the study. (participant is human, subject is nonhuman animals). Gives characteristics of their participants to help understand results.

Ex. age, gender, ethnicity, hospitalization history, drug use, species

More descriptive sections are used for nonlaboratory research

apparatus and materials

part of method section:

Read about tests, surveys, word lists, pictures, electronics involved in research. Should understand how the materials helped investigators address their research question

procedure

part of method section:

Details what the researchers and participants did. Should be relevant if you want to replicate the research. Some minor details in this section, you should start off by only reading the major features.

results

4. describes quantitative and qualitative results, includes statistical tests used to analyze the data, most technical, provides tables and figures that summarize results

should be comprehensible outside of numerical results (presented in English). You should see if results support or fail to confirm the hypothesis and if the participants impact the outcome.

Questions to ask when reading results

For each hypothesis: what results relate to the hypothesis and do they support it? What statistical tests did the researchers use when they analyzed the data? (ex. t test shows differences across groups, chi-square shows association between variables). In everyday English, what do the statistical tests tell you? Are those results consistent with those of earlier research? What do the figures and tables convey and do they lend support to the hypothesis?

discussion

5. summarizes the research question and methods and indicates if the results of the study supported the hypothesis, discuss the study's importance (if participants were unlike others who had been studied before, if the method was creative for this hypothesis), show how results connect to previous studies, explain unexpected findings and reasons why, potentially share alternative explanations for their data and pose questions raised by the research

notes limitations of research and how future studies can overcome that

questions to ask when reading discussion

Do the current research findings agree with previous research and with accepted theory? If there are any unexpected findings, why did they occur? Why does the researcher think their findings are important? What are the implications of this research?

reading with a purpose- empirical journals

Ask yourself: what is the argument? What is the evidence to support the argument?

Empirical articles report on data generated to test a hypothesis derived from a theory. Try skipping to the end of the introduction to learn the goals of the study, or look at the beginning of the discussion where results are summarized, then look for evidence

reading with a purpose- review articles

Ask yourself: what is the argument? What is the evidence to support the argument?

The argument of these usually presents an entire theory (ex. "Using cell phones impairs driving, even when phones are hands-free")

science in popular media

scholarly articles (Psychological Science, Child Development) report results of research after peer review and continue discussing ongoing research. Written by named scholars within a specific field, use technical language not easily understood, includes all sources

Popular articles (NY Times, CNN) summarize research of interest to the general public. Written by unnamed journalists for the public, written to be easy to understand, typically don’t include sources unless it is a link to the published article. Journalists might not describe studies accurately, or might choose flawed articles

Ex. article about horns growing, but author had conflict of interest as a chiropractor and participants were not random

disinformation

deliberate creation and sharing of information known to be false

Has made people disengaged from voting, or could lead to other harmful actions

Motives: propaganda, passion, politics, provocation (make others into emotional reactions), profit, parody (create false stories to make us laugh)

Types of disinformation can be subtle (ex. Attributing false quotes to real people or real quote in false context, manipulating photos, adding real images to false context)

plagiarism

(1) using another writer’s words or ideas without in-text citation and documentation

(2) using another writer’s exact words without quotation marks

(3) paraphrasing or summarizing someone else’s ideas using language or sentence structures that are too close to the original, even if you cited the source in parentheses.

To avoid it:

-take careful notes where you label quotations

-use own sentence structure in paraphrases

-Know what sources must be documented in text and in references

-Be careful with online material

-don't copy & paste notes

-how would you explain passage aloud

-Make sure all quotations are documented, however in APA you should paraphrase (when material is important but wording is not)

understanding journal articles

Take notes rather than highlighting. Use argument mapping to relate topics and show statements to support claims and counterarguments

characteristics of a strong hypothesis

1. Testable and falsifiable

2. Logical

(Not a random guess, Informed by previous research and theory)

3. Positive

(X is associated with Y, not X is NOT associated with Y)

4. Directional

5. Specific

peer review cycle

Those who work in the same research fields evaluate the article to test quality & significance of research. Is it logical and original?

Make recommendations to the editor to publish with revisions or reject. Not paid to review other people’s papers

Double blind process (authors and reviewers do not see each other's names)

4 cycles in psychological science

1. Theory Data Cycle

2. Basic Applied Research Cycle

3. Peer Review Cycle

4. Journal to Journalism Cycle

science

psychology is the scientific study of mind and behavior

science is the systematic study of the structure and behavior of the physical and natural world through observation and experimentation

four ways of knowing

personal experience, intuition, authority, empirical evidence

measured variable

has levels that are simply observed & recorded. In causal claims, this is the dependent variable

correlational research, to test a frequency or association claim

Ex. height, IQ, gender, depression level

found through questions. These cannot be manipulated (ex. age can't be changed)

manipulated variable

controlled independent variable, usually by assigning study participants to different levels of that variable in causal claims.

experimental research, to test a causal claim

Ex. dosage of prescription, different test atmospheres

Some variables can't be manipulated because it is unethical. Ex. childhood trauma

Some variables can be both measured or manipulated. Ex. choosing children who already take music lessons, or assigning some children to take music lessons

terms for association claims

predictor variable (on X axis, similar to independent variable)

criterion/outcome variable (on Y axis, similar to dependent variable)

construct / conceptual definition

a careful, theoretical definition of the abstract construct, the name of the concept being studied, which is used to create the operational definition about how to measure the variable.

Ex "a person's cognitive reliance on caffeine"

Ex. "happiness" conceptual definition was subjective well-being, which was operationalized by reporting personal 1-7 happiness

operationalize

when testing hypotheses with empirical research, you do this to show how the construct is measured or manipulated for a study. Sometimes this is simple, but other times it is difficult to see (ex. personality traits)

Ex. to test coffee consumption, you can have ppl tell an interviewer the amount of coffee they drink, or you can have them use an app to record what they drink over time

operationalize example

conceptual variable: stress

Can be operationalized by:

-self report measure (participant report anxiety)

-physiological measure (concentration of cortisol in saliva)

-behavioral measure (knee tapping or constant eye movement)

-clinical measure (evaluation by trained professional)

claim

an argument someone is trying to make (can be based on personal experience, text evidence, rhetoric). Researchers make claims about theories based on data, and journalists make claims about studies read in empirical journals

-frequency

-association

-causal

Not all claims are based on research. Could be anecdotes in popular media

association claims

Argues that one level of a variable is likely to be associated with a particular level of another variable. They correlate, meaning that when one variable changes, the other tends to change. Does not make perfect predictions, but makes estimates more accurate. Makes a claim about at least two variables. PROBABILISTIC

Positive, negative, or 0 associations through scatterplots. Measured, not manipulated

Language: associate, link, correlate, predict, tie to, be at risk for, more likely to, prefers

Ex. “speech delays could be linked to mobile devices” “countries with more butter are happier”

"what factors predict X?" "Is X linked to Y?"
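A positive, negative, or zero association can be summarized with the correlation coefficient r. The following is a minimal sketch of that calculation; the function name `pearson_r` and the small data sets are made up for illustration and do not come from any study in these notes.

```python
import math

def pearson_r(xs, ys):
    """Pearson correlation between two equal-length lists of numbers."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Sum of cross-products of deviations from each mean
    cross = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    # Sums of squared deviations for each variable
    ss_x = sum((x - mean_x) ** 2 for x in xs)
    ss_y = sum((y - mean_y) ** 2 for y in ys)
    return cross / math.sqrt(ss_x * ss_y)

# Hypothetical data: three patterns of association
hours = [1, 2, 3, 4]
print("positive:", pearson_r(hours, [2, 4, 6, 8]))
print("negative:", pearson_r(hours, [8, 6, 4, 2]))
print("zero:", pearson_r(hours, [1, -1, -1, 1]))
```

Both variables here are simply measured, which is why r alone supports an association claim and not a causal one.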

frequency claims

Describe a particular rate or degree of a single variable. Easily identified because they only focus on one variable. Among large surveys

Some reports give a list of single variable results, all of which are frequency claims. These reports don’t attempt to correlate variables, they simply state %’s

Ex. “39% of teens …” “Screen time for kids more than doubles”

causal claims

Argues one variable is responsible for changing the other. Must have two variables. Could be based on zero association that reports a lack of cause (ex. Vaccines do not cause autism)

Language: cause, enhance, affect, decrease, change, exacerbates, promotes, distracts, adds. More active and forceful

May contain tentative language: “could, may, seem, suggest, potentially, sometimes”. Advice is also considered causal because it implies that if you do X, then Y will happen

Ex. “family meals curb eating disorders” “pretending to be batman helps kids stay on task”

validity

whether the operationalization is measuring what it is supposed to --> the appropriateness of a conclusion

valid claim is reasonable, accurate, and justifiable

-construct

-external

-statistical

-internal

construct validity

how well a conceptual variable is operationalized. How well a study measured or manipulated a variable. Each variable must be measured reliably (consistent across repeated testings), and different levels of a variable must accurately correspond to true differences

If you conclude one variable was measured poorly, you cannot trust the study's conclusions

Ex. concerning construct validity is if people in Marshmallow experiment say they don't like marshmallows anyway

Ex. Watching cell phone usage at intersections or looking at a data collector on the phone probably gives better results than just asking people if they text and drive

Ex. "people who multitask are the worst at it" --> need to know how they define success

Ex. "adding school recess prevents behavioral problems" --> need to know did you add 10 or 40 minutes, what do you consider behavioral

external validity

how well the results of a study generalize or represent people besides those in the original study for frequency claims. Can it apply to other populations, context, places.

follows generalizability - how did the researchers choose the study’s participants, and how well do those participants represent the intended population? Which people were chosen to survey, how were they chosen?

How well does the link hold in other contexts, times, places (like the real world)? How representative are the manipulations and measures?

statistical validity

the extent to which a study’s statistical conclusions are precise, reasonable, and replicable for frequency claims. Improves with multiple estimates/studies for an estimate of the value of true population.

Has the study been repeated? How precise are estimates? What is likelihood this was found by chance?

Measured through a point estimate (%) for strength of claim, and the precision (MOE or confidence interval) How well do the numbers support the claim?

studies with smaller samples have less precise (wider) CI
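The link between sample size and precision can be made concrete. This is a sketch only: it assumes the common large-sample approximation for a proportion (z = 1.96 for 95%), and the 39% figure and sample sizes are hypothetical.

```python
import math

def margin_of_error(p_hat, n, z=1.96):
    """Approximate margin of error for a sample proportion (z=1.96 for 95%)."""
    return z * math.sqrt(p_hat * (1 - p_hat) / n)

def confidence_interval(p_hat, n, z=1.96):
    """Point estimate plus or minus the margin of error."""
    moe = margin_of_error(p_hat, n, z)
    return (p_hat - moe, p_hat + moe)

# Hypothetical poll result (39%) at two hypothetical sample sizes
for n in (100, 1000):
    low, high = confidence_interval(0.39, n)
    print(f"n={n}: CI = [{low:.3f}, {high:.3f}]")
```

The same point estimate comes with a wider interval at n=100 than at n=1000, which is the sense in which smaller samples are less precise.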

Criteria for causation

1. Covariance - ex. As A changes, B changes

2. Temporal precedence means the method was designed so that the causal variable clearly comes first in time (ex. A comes before B). By manipulating a variable, you ensure that it comes first.

3. Internal validity is ability to eliminate alternative explanations for the association. When researchers manipulate a variable, they can control for alternative explanations.

experiment

psychologists manipulate the variable they think is the cause (independent variable) and they measure the variable they think is the effect (dependent) to support a claim

Ex. Being in batman condition showed persisting longer with boring task if they self-distanced

Must use random assignment to ensure all participant groups are as similar as possible (increases internal validity)
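Random assignment can be sketched in a few lines. The participant labels, the condition names, and the `randomly_assign` helper are all hypothetical; the point is only that chance, not the researcher or the participant, decides who lands in which group.

```python
import random

def randomly_assign(participants, conditions, seed=None):
    """Shuffle participants, then deal them into conditions in turn."""
    rng = random.Random(seed)  # seed is optional, used here for a repeatable demo
    shuffled = list(participants)
    rng.shuffle(shuffled)
    groups = {condition: [] for condition in conditions}
    for i, person in enumerate(shuffled):
        # Dealing in rotation keeps group sizes as equal as possible
        groups[conditions[i % len(conditions)]].append(person)
    return groups

# Hypothetical participants and conditions
participants = [f"child_{i}" for i in range(1, 13)]
groups = randomly_assign(participants, ["batman", "control"], seed=225)
for condition, members in groups.items():
    print(condition, members)
```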

covariance

are the variables in a causal claim systematically related? Is a change in one variable reliably associated with a change in the other?

causation example: Why is there a correlation between per capita consumption of margarine and decreasing divorce rate?

could be based on economic explanation (when things are more expensive, the economic strain on relationships leads to divorce)

In order to prove causation, must put people in different groups and control their margarine consumption, and see that when you change margarine consumption, there is a change that comes after in their likelihood to get divorced

increasing internal validity

Use random assignment of participants to levels of a variable:

Reduces possibility that participants in different groups just happen to show differences by chance

Helps to ensure that participants in different groups are similar in every other way

Are alternative explanations sufficiently ruled out by the study's design?

incorrect causal claim

"Eating family dinners reduces eating disorders." This study showed covariance, however the researchers measured both variables at the same time so temporal precedence is not shown (eating disorders can cause embarrassment about eating in front of others, so the disorder might have caused the reduced family meals).

Also cannot show internal validity because this was not an experiment so there were many other alternative explanations (ex. academically high achieving girls are too busy to eat and are more susceptible)

prioritizing validities

External validity is not always possible to achieve, and it might not be the priority for research. Might instead want to show internal validity focused on making each trial group equivalent. Future research could confirm that this works for other groups in different locations.

Polling research can be expensive, so they might have to use a short survey which sacrifices construct validity in order to achieve external validity.

Cohen's d

shows effect size in terms of how far apart the two means are, in standard deviation units.

The larger the effect size, the less overlap between the two experimental groups. If effect size is 0, there is full overlap.

d = (M1 - M2) / Sd pooled

d of 0.8 is strong, 0.5 is moderate, and 0.2 small (dependent on context)

a d of 0.72 means the avg score of group A is 0.72 stdev higher than average of group B
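The formula above can be worked through in code. This is a minimal sketch assuming the usual pooled standard deviation (sample variances weighted by degrees of freedom); the scores are made-up numbers.

```python
import statistics

def cohens_d(group_a, group_b):
    """Difference between two group means in pooled standard deviation units."""
    n_a, n_b = len(group_a), len(group_b)
    var_a = statistics.variance(group_a)  # sample variance (n - 1 denominator)
    var_b = statistics.variance(group_b)
    # Pool the variances, weighting each by its degrees of freedom
    pooled_var = ((n_a - 1) * var_a + (n_b - 1) * var_b) / (n_a + n_b - 2)
    pooled_sd = pooled_var ** 0.5
    return (statistics.mean(group_a) - statistics.mean(group_b)) / pooled_sd

# Hypothetical scores for two experimental groups
group_a = [2, 4, 6, 8]
group_b = [1, 3, 5, 7]
print(f"d = {cohens_d(group_a, group_b):.2f}")
```

Swapping the order of the groups flips the sign of d but not its size.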

r to determine relationship strength

shows relationship between two quantitative variables, while d is used when one variable is categorical. r can only range between 1 and -1, but d can be outside of that range.

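As a rough rule of thumb for small correlations, d ≈ 2r (the exact conversion for two equal-sized groups is d = 2r / sqrt(1 - r^2))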
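The doubling rule of thumb can be compared against the standard conversion d = 2r / sqrt(1 - r^2), which assumes two equal-sized groups. The sketch below uses a hypothetical helper, `r_to_d`, and shows that the shortcut holds for small r and drifts as r grows.

```python
import math

def r_to_d(r):
    """Convert r to Cohen's d, assuming two equal-sized groups."""
    return 2 * r / math.sqrt(1 - r ** 2)

# Compare the shortcut (2r) with the exact conversion
for r in (0.05, 0.1, 0.3, 0.5):
    print(f"r = {r:.2f}: 2r = {2 * r:.2f}, exact d = {r_to_d(r):.2f}")
```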

effect size

The larger an effect size is, the more important the result seems to be. But, a small effect size is not always unimportant

Ex. r=0.05 for being friendly and positive outcome, which when applied all over could have a great impact

in some cases, it is easy to evaluate strength (ex. written notes group test score is 8 points higher) without effect size

most useful when we are not familiar with the scale the variable is measured through (ex. self esteem units). Helpful to compare to effect size from another study that we know

inferential statistics

techniques that use data from a sample to estimate what is happening with the population

estimation & Precision

-point estimates

-confidence interval

null hypothesis significance

-p value

estimation & precision

estimates a value you don't know, and gives a range of precision around your estimate

-large samples

-population value is unknown

-sample data is unbiased

uses confidence interval which contains the true population level a certain % of the time

point estimates are a single initial estimate based on sample data of the true value in the population (might be a % or difference of means (effect size) or relationship of variables (r) )

steps of estimation & precision

1. State a research question "how much" or "to what extent"

2. Design a study, operationalize the question in terms of manipulated or measured variables

3. Collect data and compute point estimate and CI

4. Interpret results in context of your research question

5. Reconduct the study and meta-analyze the results
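Step 3 above can be sketched for a difference between two group means. Assumptions to note: the critical value 1.96 is a large-sample approximation (small samples would call for a larger t-based value), and the scores and the `mean_difference_ci` helper are hypothetical.

```python
import math
import statistics

def mean_difference_ci(group_a, group_b, critical=1.96):
    """Point estimate and approximate 95% CI for a difference in means."""
    diff = statistics.mean(group_a) - statistics.mean(group_b)  # point estimate
    # Standard error of the difference between two independent means
    se = math.sqrt(statistics.variance(group_a) / len(group_a)
                   + statistics.variance(group_b) / len(group_b))
    moe = critical * se  # margin of error
    return diff, (diff - moe, diff + moe)

# Hypothetical test scores for two note-taking groups
written = [78, 85, 90, 72, 88, 81, 79, 93]
typed = [70, 75, 82, 68, 80, 74, 77, 71]
diff, (low, high) = mean_difference_ci(written, typed)
print(f"point estimate = {diff:.1f}, CI = [{low:.1f}, {high:.1f}]")
```

A smaller standard error shrinks the margin of error, so the interval narrows around the same point estimate.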

point estimate for beta (multiple regression)

Beta represents the relationship between a predictor variable and dependent variable, controlling for other variables.

Ex. beta for viewing sexual content on TV and teen pregnancy rate was controlled for age, grades, & ethnicity. Beta = 0.44. 95% CI for this would be [0.23, 0.63]. Because 0 is far outside the CI, the data supports some level of relationship, however we are uncertain how strong it is because CI is compatible with both weak and strong relationships

NHST Procedure

1. Assume there is no effect (population difference = 0)

2. Collect data & calculate result

3. Calculate the probability of getting a result of that magnitude, or even more extreme, if the null is true

4. Decide whether to reject or retain (fail to reject) the null hypothesis
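The logic of step 3 can be illustrated with a permutation test. This is a different technique from the t test these notes rely on, swapped in because it makes "if the null is true" concrete: group labels are shuffled many times and we count how often a difference at least as large as the observed one appears. The data and function name are hypothetical.

```python
import random
import statistics

def permutation_p_value(group_a, group_b, n_shuffles=5000, seed=0):
    """Share of label shuffles giving a mean difference at least as extreme."""
    rng = random.Random(seed)
    observed = abs(statistics.mean(group_a) - statistics.mean(group_b))
    pooled = list(group_a) + list(group_b)
    n_a = len(group_a)
    extreme = 0
    for _ in range(n_shuffles):
        # Under the null, group labels are arbitrary, so shuffle them
        rng.shuffle(pooled)
        diff = abs(statistics.mean(pooled[:n_a]) - statistics.mean(pooled[n_a:]))
        if diff >= observed:
            extreme += 1
    return extreme / n_shuffles

# Hypothetical scores from two conditions
treatment = [12, 15, 14, 16, 13, 17]
control = [10, 11, 9, 12, 10, 11]
p = permutation_p_value(treatment, control)
print("p =", p, "-> reject the null" if p < 0.05 else "-> retain the null")
```

As with any p value, this says nothing about the size of the effect, so an effect size should be reported alongside it.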

null hypothesis significance testing

-binary in terms of yes or no for significance

-Computes a test statistic (t, r, or F) and compares it to a null

-Reject when p value < 0.05, but this doesn't tell you about magnitude

-Wants large sample to reject the null hypothesis

-t value for sample is compared to kinds of t we would get if null was true. If t from sample is large (usually > 2), we can reject null

-When standard error is small, t statistic is larger, making it easier to reject

-Type I (5% chance of saying a result did not come from null when it really did) or Type II error

-Remember to check the effect size because even if something is statistically significant, it might be small in practical terms

-Remember that finding a nonsignificant result does not mean "the null hypothesis is true"

new statistics

-Uses results from a sample to make an estimation about population value

-Computes a point estimate (d, r, or difference score) and creates CI

-Reject the null when 0 is not in the CI

-Wants large sample for a narrower CI to reject the null

-t value is part of MOE

-When standard error is small, precision of estimate is better and CI is narrower

-Possible error is alpha: the CI misses the true population value 5% of the time

-Remember that CIs from each study will slightly vary

open science process

Pre Registered: red check

Open data: blue bars

Open materials: orange box

marshmallow experiment

rational choice theory states individuals use rational calculations to make choices & outcomes aligned with own personal objectives

Question: How do kids use social information to make adaptive decisions about waiting?

Hypothesis: kids who interact with the reliable researcher will wait longer than kids who interact with an unreliable researcher

SUPPORTED

conceptual variable: delay of gratification

operational variable: ability to wait 2 minutes

alternative hypothesis NOT SUPPORTED

Type of claim practice:

1. "At times, children play with the impossible"

2. "Anxiety risk tied to social media use"

3. "Binge drinking linked to sleep disruption"

4. "Loneliness makes you cold"

5. "Collaboration gives a memory lift to elders"

6. "Active learning boosts retention"

Causation

1. F

2. A

3. A

4. C

5. C

6. C