Research Methods (IB Psych)

34 Terms

Definition of Experiment

Research method used to investigate cause-and-effect relationships between variables, by manipulating an independent variable to measure the effect on the dependent variable.

Types of Experiments

Lab: Conducted in a controlled environment where the researcher manipulates the IV and controls extraneous variables.

Field: Conducted in a natural, real-world setting, but the researcher still manipulates the IV.

Natural: The IV occurs naturally (not manipulated by the researcher) and is studied in either a lab or real-world setting.

Evaluation of Experiments

Strength: The only method that can test cause and effect, because the IV is manipulated while extraneous variables are controlled.

Weakness: If they are heavily controlled, they can be artificial and lack ecological validity.

Definition of Survey

Self-report method that uses written questions to obtain information about participants. Questionnaires can include open questions (descriptive, qualitative) and closed questions (quantitative, such as Likert scales).

Types of Survey Questions

Open: open-ended questions whose answers are usually descriptive and qualitative (such as short-answer or essay-style responses).

Closed: closed-ended questions whose answers are generally numerical and quantitative (such as Likert scales or true/false items).

Evaluation of Surveys

Strength: Results are often generalizable because surveys can reach large samples.

Weakness: Their validity can be questionable as it is hard to check if answers are true.

Definition of Correlations

Used to examine the relationship between two or more variables to determine if they are associated. Can’t establish a causal relationship as they don’t actually manipulate variables, but can establish a link between two variables.

Types of Correlation

Positive correlation: as one variable increases, so does the other.

Negative correlation: as one variable increases, the other decreases.

Zero correlation: no relationship exists between the variables.
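The three types can be told apart by the sign and size of a correlation coefficient. Below is a minimal sketch that computes Pearson's r by hand. The function name `pearson_r` and all of the data are made up for illustration, and Pearson's r is only one of several coefficients that could be used.

```python
import math

def pearson_r(xs, ys):
    """Pearson correlation coefficient for two equal-length lists of numbers."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Sum of products of deviations (numerator) and sums of squared deviations.
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)

# Hypothetical data, invented for illustration only.
hours_studied = [1, 2, 3, 4, 5]
test_score = [52, 58, 65, 70, 79]   # rises as hours rise
errors_made = [9, 8, 6, 5, 2]       # falls as hours rise
shoe_size = [40, 38, 42, 38, 40]    # unrelated to hours

r_pos = pearson_r(hours_studied, test_score)    # close to +1: positive
r_neg = pearson_r(hours_studied, errors_made)   # close to -1: negative
r_zero = pearson_r(hours_studied, shoe_size)    # about 0: zero correlation
```

A value near +1 or -1 shows a strong association, but it still says nothing about which variable, if either, causes the other.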

Evaluation of Correlations

Strength: They are quantitative so can be considered reliable.

Weakness: They do not establish cause-and-effect.

Definition of Interviews

Self-report method that uses verbal questions to obtain information about participants.

Types of Interviews

Structured: all questions are pre-planned.

Unstructured: only the first question is pre-planned.

Semi-structured: questions are pre-planned but they are flexible.

Evaluation of Interviews

Strength: They are qualitative, so in-depth and valid.

Weakness: They can be influenced by interviewer bias.

Definition of Observation

Research method that obtains information by watching participants.

Types of Observation

Controlled: situation is set up.

Uncontrolled: real-life situation.

Covert: undercover (participant is unaware they are being watched).

Overt: in the open (participant is aware they are being watched).

Participant: observer takes part in action.

Non-participant: observer watches from afar.

Structured: has behavior categories and records specific behavior.

Unstructured: records everything.

Evaluation of Observations

Strength: they are valid as they study what people do instead of what people say they’ll do.

Weakness: They are not reliable as behaviors can be misinterpreted.

Definition of Case Study

In-depth study of an individual or small group, utilizing various methods of data collection (triangulation).

Types of Value in Case Studies

Intrinsic Value: they can help the participant.

Extrinsic Value: they can help build new theories.

Evaluation of Case Studies

Strength: they are valid as they collect a lot of data.

Weakness: they are not generalizable as the sample is always unique and small.

Definition of Internal Validity

The extent to which a study can confidently demonstrate a cause-and-effect relationship between the independent variable and the dependent variable, without being influenced by confounding variables or bias.

Definition of External Validity

The extent to which the results of a study can be generalized beyond the specific conditions of the experiment — to other people, settings, times, and measures.

Threats to Internal Validity

Participant bias: variables about the participant that may affect the results of the study.

Demand Characteristics: things about the study that may give away the aim, making participants behave differently.

Reactivity: participants behaving differently when they know they’re being watched.

Placebo effect: people believing something works/doesn’t work just because they were told so. (Nocebo effect: people believing something will hurt them because they were told so.)

Social desirability bias: people behaving or answering in a certain way so that others will view them favorably.

Carryover effects: occur in repeated measures designs, where taking part in one condition affects performance in the next (practice: participants improve with repetition; interference: information from a previous condition interferes; fatigue: participants tire after too many conditions).

Types of External Validity

Ecological validity is whether the situation of the experiment was “natural” or “realistic.”

Population validity refers to whether the sample used is representative of the population from which it was drawn.

Definition of Construct Validity

How well a test or study actually measures the psychological concept (the “construct”) it claims to measure.

Types of Experimental Design

Independent Measures: each participant takes part in one condition.

Repeated Measures: each participant takes part in all conditions.

Matched Pairs: participants are matched on certain characteristics, and then each member of a pair takes part in a different condition.

Random Allocation

When participants are randomly assigned to a condition of the study to avoid participant bias.
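Random allocation can be sketched in a few lines of code: shuffle the participant list, then deal participants into conditions in turn. This is only an illustration. The function name `randomly_allocate`, the participant IDs, and the condition labels are all assumptions, and the fixed seed is there just to make the example repeatable.

```python
import random

def randomly_allocate(participants, conditions, seed=None):
    """Shuffle participants, then deal them into the conditions in turn."""
    rng = random.Random(seed)
    shuffled = list(participants)   # copy so the original list is untouched
    rng.shuffle(shuffled)
    groups = {condition: [] for condition in conditions}
    for i, participant in enumerate(shuffled):
        groups[conditions[i % len(conditions)]].append(participant)
    return groups

# Hypothetical participant IDs P1..P20.
participants = [f"P{i}" for i in range(1, 21)]
groups = randomly_allocate(participants, ["experimental", "control"], seed=1)
```

Because chance alone decides who ends up in which group, participant characteristics should be spread roughly evenly across conditions.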

Counterbalancing

Where half of the participant group experience condition A then condition B, while the other half experience condition B then condition A, to avoid carryover effects.
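A minimal sketch of counterbalancing for two conditions is shown below. The function name `counterbalance` and the participant IDs are hypothetical, and shuffling before the split is an added assumption so that the order each person receives is decided by chance.

```python
import random

def counterbalance(participants, seed=None):
    """Give half the participants the order A then B, the other half B then A."""
    rng = random.Random(seed)
    shuffled = list(participants)
    rng.shuffle(shuffled)           # assumption: order is assigned randomly
    half = len(shuffled) // 2
    orders = {}
    for participant in shuffled[:half]:
        orders[participant] = ("A", "B")
    for participant in shuffled[half:]:
        orders[participant] = ("B", "A")
    return orders

# Hypothetical participant IDs P1..P10.
orders = counterbalance([f"P{i}" for i in range(1, 11)], seed=3)
ab_first = [p for p, order in orders.items() if order == ("A", "B")]
ba_first = [p for p, order in orders.items() if order == ("B", "A")]
```

Practice and fatigue still occur, but they now affect both conditions equally instead of always favoring or harming the second one.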

Representation Generalizability

Selection Bias

Participants self-select into the study (e.g., volunteers with strong opinions).

The researcher intentionally or unintentionally chooses a certain type of person.

Certain groups are excluded from the sample due to how the participants are recruited.

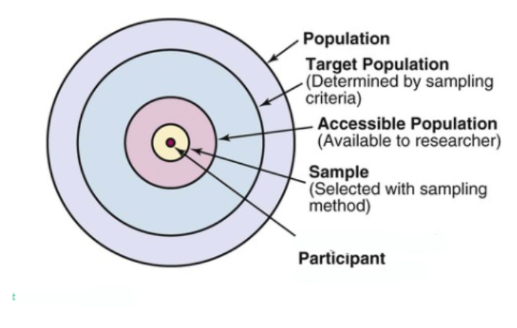

Self-Selected/Volunteer Sampling

Participants recruit themselves into the study.

Advantage: quick, convenient, accessible.

Disadvantage: often biased, low external validity.

Opportunity Sampling

Researcher recruits whoever is readily available at a certain place and time.

Advantage: quick, convenient, accessible.

Disadvantage: often biased, low external validity.

Random Sampling

Researcher picks the sample randomly; each member of the target population has an equal chance of being selected.

Advantage: high external validity, controls for selection bias.

Disadvantage: requires a full list of the target population, which is often not accessible; it is time-consuming and often impractical.
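As a rough illustration, random sampling amounts to drawing names from the full list of the target population (the sampling frame) without replacement. The function name `simple_random_sample` and the student list below are invented for the example.

```python
import random

def simple_random_sample(sampling_frame, n, seed=None):
    """Draw n members without replacement; every member is equally likely."""
    rng = random.Random(seed)
    return rng.sample(sampling_frame, n)

# Hypothetical sampling frame: a complete list of 200 students.
sampling_frame = [f"student_{i:03d}" for i in range(1, 201)]
sample = simple_random_sample(sampling_frame, 20, seed=42)
```

The sketch also makes the disadvantage visible: without the complete `sampling_frame` list there is nothing to draw from.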

Systematic Sampling

Participants are selected from the target population at a fixed interval, for example every 10th or 20th person on a list or register.

Advantage: simplicity, cost-effectiveness, and ability to provide a uniform representation of the population.

Disadvantage: potential for bias if the population has a periodic pattern and the need for a complete and accurate sampling frame.
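Selecting every kth person from a register can be sketched as a list slice. The function name `systematic_sample` and the register are hypothetical, and choosing a random starting point within the first k names is a common convention assumed here, not something the card specifies.

```python
import random

def systematic_sample(sampling_frame, k, seed=None):
    """Pick every k-th member of the list, starting from a random offset."""
    rng = random.Random(seed)
    start = rng.randrange(k)        # assumption: random start in 0..k-1
    return sampling_frame[start::k]

# Hypothetical register of 200 people, numbered in list order.
register = list(range(1, 201))
sample = systematic_sample(register, 10, seed=7)
gaps = [b - a for a, b in zip(sample, sample[1:])]
```

If the register itself repeats in a pattern with the same period as k (for example, every 10th entry is a class representative), the sample will be biased.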

Stratified Sampling

A probability sample where participants are divided into groups based on different characteristics and the sample is chosen from each group randomly.

Advantage: high external validity.

Disadvantage: complex and time-consuming.
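A minimal sketch of stratified sampling follows: group the population by a characteristic, then sample randomly within each group. The function name `stratified_sample`, the year-group data, and the choice to take the same fraction from every stratum (proportional allocation) are assumptions made for illustration.

```python
import random
from collections import defaultdict

def stratified_sample(population, stratum_of, fraction, seed=None):
    """Group people into strata, then randomly sample a fraction of each."""
    rng = random.Random(seed)
    strata = defaultdict(list)
    for person in population:
        strata[stratum_of(person)].append(person)
    sample = []
    for members in strata.values():
        n = round(len(members) * fraction)   # assumption: proportional allocation
        sample.extend(rng.sample(members, n))
    return sample

# Hypothetical population: 60 students in Y12 and 40 in Y13.
population = [("Y12", i) for i in range(60)] + [("Y13", i) for i in range(40)]
sample = stratified_sample(population, lambda person: person[0], 0.1, seed=0)
y12_count = sum(1 for person in sample if person[0] == "Y12")
y13_count = sum(1 for person in sample if person[0] == "Y13")
```

The sample keeps the 60:40 split of the population, which is why this method tends to give high population validity.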

Ethical Considerations

Consent (informed)

Anonymity

Right to Withdraw

Deception

Undue stress and harm

Debriefing