Chapter 14 - Virtual Machines


167 Terms

Traditionally, a PC or server hosted a single...

OS for running applications

Virtualization technology allows a PC or server to simultaneously run...

more than one OS or more than one session of the same OS

In this case, the system is said to host a number of

virtual machines

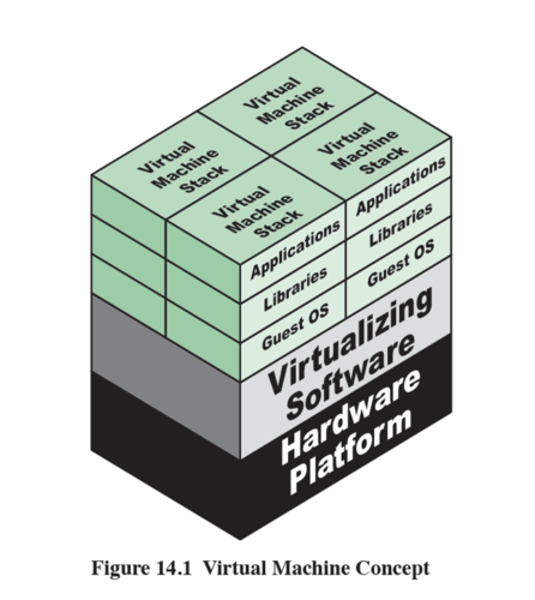

Virtual Machine Concept

see diagram

Virtualization was used during the 1970s in

IBM's mainframe systems

Virtualization became mainstream in the early

2000s, when it became commercially available on x86 servers

One application, one server

easier to support and administer

As hardware improved, servers became

underutilized, and each one required power, cooling, and maintenance

VMs relieved the stress of

underutilized servers

The software for virtualization is called a

virtual machine monitor, or VMM, or hypervisor

It acts as a layer between the hardware and the VMs to

act as a resource broker

Hypervisor allows multiple VMs to safely...

coexist on a single physical host

Each VM has its own OS, which can be...

same or different from host OS

Consolidation Ratio

Number of VMs that can run on a host

Today there are more virtual servers than

physical servers

Original hypervisors provided ratios from

4:1 up to 12:1
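The consolidation ratio turns directly into a host count. Here is a minimal arithmetic sketch; the function name `hosts_needed` and the figure of 100 workloads are illustrative assumptions, not values from the chapter.

```python
import math


def hosts_needed(workloads: int, ratio: int) -> int:
    """Physical hosts required if each host runs `ratio` VMs (a ratio of `ratio`:1)."""
    if workloads < 0 or ratio < 1:
        raise ValueError("workloads must be >= 0 and ratio must be >= 1")
    # Round up: a partly filled host is still a whole physical machine.
    return math.ceil(workloads / ratio)


if __name__ == "__main__":
    for ratio in (4, 12):
        print(f"{ratio}:1 -> {hosts_needed(100, ratio)} hosts for 100 workloads")
```

With 100 one-application servers to consolidate, a 4:1 ratio needs 25 hosts and a 12:1 ratio needs 9.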

Reasons for Virtualization

•Legacy hardware: run old applications on modern hardware

•Rapid deployment: physical server may take weeks, VM may take minutes

•Versatility: run many kinds of applications on one server

•Consolidation: replace many physical servers with one

•Aggregating: combine multiple resources into one virtual resource, such as storage

•Dynamics: new VM can easily be allocated, such as for load-balancing

•Ease of management: easy to deploy new VM for testing software

•Increased availability: VMs on a failed host can quickly be restarted on a new host

Consolidation

replace many physical servers with one

Legacy hardware

run old applications on modern hardware

rapid deployment

physical server may take weeks, VM may take minutes

versatility

run many kinds of applications on one server

aggregating

combine multiple resources into one virtual resource, such as storage

dynamics

new VM can easily be allocated, such as for load-balancing

ease of management

easy to deploy new VM for testing software

increased availability

VMs on a failed host can quickly be restarted on a new host

Virtualization is a form of

abstraction

Just as an OS abstracts disk I/O commands from the user, virtualization abstracts...

physical hardware from VMs it supports

The virtual machine monitor or

hypervisor provides abstraction

The VM Monitor acts as a broker, or...

traffic cop, acting as a proxy for the VMs as they request resources from the host

A VM is configured with

some number of processors, some amount of RAM, storage resources, and network connectivity

It can then be powered on like a physical server...

loaded with an OS and utilized like a physical server

It is limited to seeing only the

resources it has been configured to see

One physical host may support

many VMs
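The broker role can be pictured as an admission check. This is a toy sketch only: the `Host` and `VMRequest` classes are hypothetical, and it assumes no overcommitment, whereas real hypervisors often allocate more virtual resources than physically exist.

```python
from dataclasses import dataclass, field


@dataclass
class VMRequest:
    name: str
    vcpus: int
    ram_gb: int


@dataclass
class Host:
    cpus: int
    ram_gb: int
    vms: list = field(default_factory=list)

    def admit(self, vm: VMRequest) -> bool:
        """Place the VM only if the host still has enough free CPU and RAM."""
        used_cpus = sum(v.vcpus for v in self.vms)
        used_ram = sum(v.ram_gb for v in self.vms)
        if used_cpus + vm.vcpus > self.cpus or used_ram + vm.ram_gb > self.ram_gb:
            return False  # request denied: the host is full
        self.vms.append(vm)
        return True


if __name__ == "__main__":
    host = Host(cpus=8, ram_gb=32)
    for req in (VMRequest("web", 4, 16), VMRequest("db", 4, 16), VMRequest("test", 1, 1)):
        print(req.name, "admitted" if host.admit(req) else "rejected")
```

Each admitted VM sees only the vCPUs and RAM in its own request, never the host totals.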

The hypervisor facilitates I/O from the...

VM to the host and back again to the correct VM

Privileged instructions must be caught and handled by

the hypervisor
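A toy model of catching privileged instructions, often called trap-and-emulate. The instruction labels and the `ToyHypervisor` class are illustrative assumptions; a real hypervisor relies on CPU traps rather than a Python loop.

```python
# Labels standing in for privileged instructions (illustrative only).
PRIVILEGED = {"HLT", "OUT", "WRITE_PAGE_TABLE"}


class ToyHypervisor:
    def __init__(self):
        self.trapped = []  # (vm name, instruction) pairs handled by the hypervisor

    def run(self, vm_name, instructions):
        """Return the instructions that ran directly; trap the privileged ones."""
        ran_directly = []
        for ins in instructions:
            if ins in PRIVILEGED:
                # The hypervisor handles it on the guest's behalf.
                self.trapped.append((vm_name, ins))
            else:
                ran_directly.append(ins)
        return ran_directly


if __name__ == "__main__":
    hv = ToyHypervisor()
    print(hv.run("vm1", ["ADD", "OUT", "MOV", "HLT"]))
    print(hv.trapped)
```

The extra work done for every trapped instruction is one source of the performance loss hypervisors can introduce.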

Performance loss can occur with

hypervisors

A VM instance is defined

in files

A configuration file defines the number of

virtual processors (vCPUs), amount of memory, I/O device access, and network connectivity
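A sketch of what such a configuration file might hold. The field names and the JSON layout are assumptions made for illustration; each hypervisor product has its own real file format.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class VMConfig:
    name: str
    vcpus: int                                     # number of virtual processors
    memory_mb: int                                 # amount of memory
    disks: list = field(default_factory=list)      # files in the host's file system
    networks: list = field(default_factory=list)   # network connectivity


def to_json(cfg: VMConfig) -> str:
    """Serialize the configuration so it can be stored or copied as a file."""
    return json.dumps(asdict(cfg), indent=2)


def from_json(text: str) -> VMConfig:
    """Rebuild the configuration, for example on a new host after migration."""
    return VMConfig(**json.loads(text))


if __name__ == "__main__":
    cfg = VMConfig("web01", vcpus=2, memory_mb=4096,
                   disks=["/var/lib/vms/web01.img"], networks=["bridge0"])
    print(to_json(cfg))
```

Because the whole definition is plain data, copying it is enough to back up the VM's settings or stamp out new VMs from a template.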

The storage the VM sees may just be files in the

physical file system

When the VM is booted

additional files for logging, paging, and other functions are created

These files may be copied to

back up the VM or migrate it to a new host

A new VM may also be created quickly from

a template that defines hardware and software settings for a specific case

execution management of VMs

scheduling, memory management, context switching, etc

device emulation and access control

emulating devices required by VMs, mediating access to host devices

execution of privileged operations

performed by the hypervisor on behalf of guest OSs, rather than run directly on host hardware

management of VMs (lifecycle management)

configuration of VMs and controlling VM states (start, pause, stop)

administration

hypervisor platform and software admin activities

Hypervisor functions

- Execution management of VMs

- Device emulation and access control

- Execution of privileged operations by hypervisor for guest VMs

- Management of VMs (also called VM lifecycle management)

- Administration of hypervisor platform and hypervisor software.

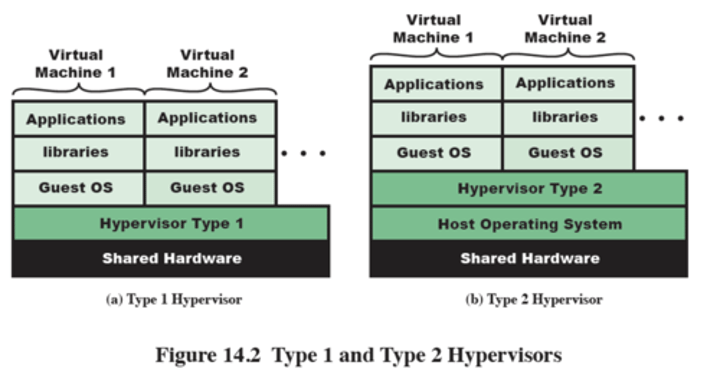

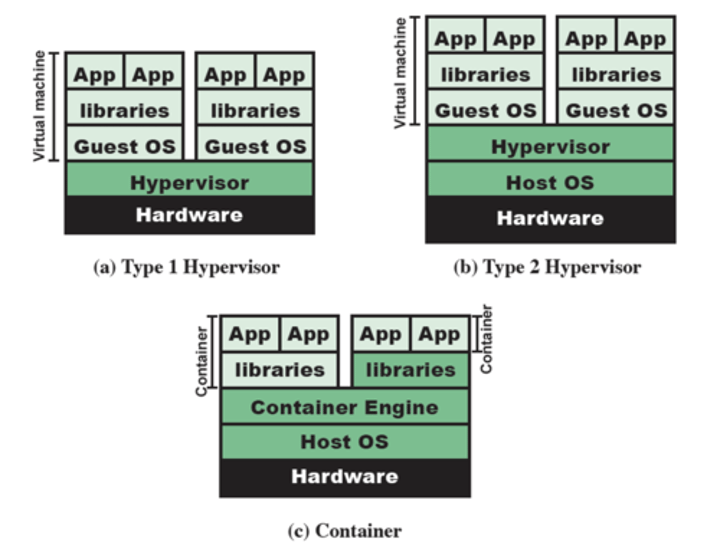

Type 1 Hypervisor

1. runs directly on host hardware much like an OS

2. directly controls host resources

ex: VMware ESXi, Microsoft Hyper-V, Xen

Type 2 Hypervisor

1. Hypervisor runs on host's OS

2. relies on host OS for hardware interactions

ex: VMware Workstation, Oracle VM VirtualBox

Hypervisor Type 1 and 2

see diagram

Type 1 performs

better than Type 2

Type 1 is more ______ than Type 2

secure

Type 2 can run on

a system being used for other things, like a user's workstation

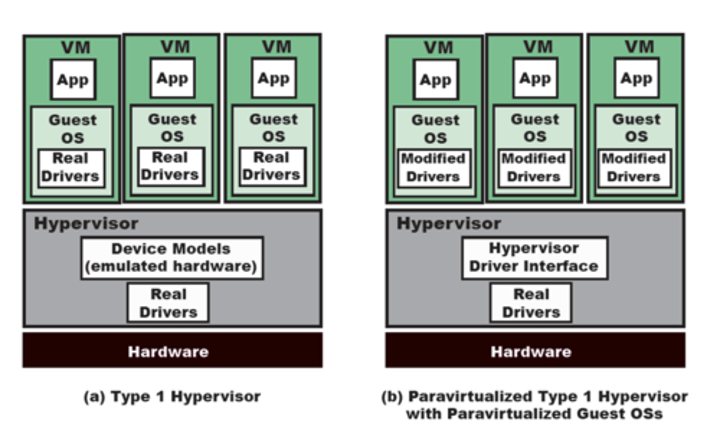

Paravirtualization is a software-assisted

virtualization technique

The OS is modified so that calls to the hardware are

replaced with calls to the hypervisor

This is faster with less overhead

but requires a modified OS

Paravirtualization support has been offered in

Linux since 2008

Paravirtualization diagram

see diagram

Both AMD and Intel processors provide support for

hypervisors

AMD-V and Intel VT-x provide hardware-assisted

virtualization extensions for the hypervisor to use

Intel processors offer extra instructions called

VMX (Virtual Machine Extensions)

Hypervisors can use these instructions rather than

performing these functions in code. OS does not require modification in this case
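On Linux, these extensions show up as CPU flags in `/proc/cpuinfo`: `vmx` for Intel VT-x and `svm` for AMD-V. A minimal sketch of checking for them follows; the helper name `virtualization_support` is an assumption, and the check says nothing about whether the feature is enabled in firmware.

```python
from pathlib import Path
from typing import Optional


def virtualization_support(cpuinfo_text: str) -> Optional[str]:
    """Return the extension advertised in /proc/cpuinfo-style text, if any."""
    for line in cpuinfo_text.splitlines():
        key, _, value = line.partition(":")
        if key.strip() == "flags":
            flags = set(value.split())
            if "vmx" in flags:
                return "Intel VT-x"
            if "svm" in flags:
                return "AMD-V"
    return None


if __name__ == "__main__":
    cpuinfo = Path("/proc/cpuinfo")
    if cpuinfo.exists():  # Linux only
        print(virtualization_support(cpuinfo.read_text()) or "no vmx/svm flag found")
    else:
        print("/proc/cpuinfo is not available on this system")
```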

A virtual appliance consists of applications and an OS distributed as

a virtual machine image

A virtual appliance is independent of hypervisor or

processor architecture

A virtual appliance can run on either a

type 1 or type 2 hypervisor

deploying a virtual appliance is easier than

installing an OS, installing the apps, configuring, and setting it up

besides application use, a security virtual appliance (SVA) is a

security tool that monitors and protects the other VMs

SVAs can monitor the state of the VM including

registers, memory, and I/O devices as well as network traffic, through a special API of the hypervisor.

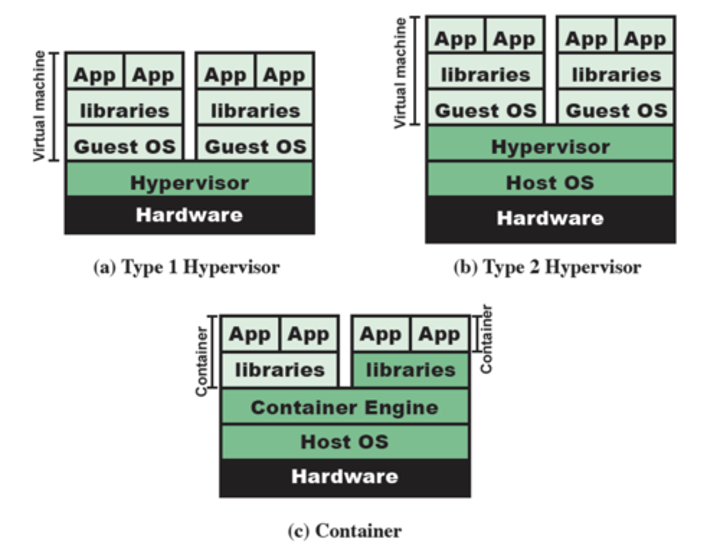

Another approach to virtualization is

container virtualization

Software running on top of the host OS

kernel provides an isolated execution environment

Unlike hypervisor VMs, containers do not aim

to emulate physical servers

Instead, all containerized applications on a host share a

common OS kernel

This eliminates the need for

a separate guest OS for each application, which greatly reduces overhead.

Much of container technology

was developed for Linux

In 2007, the Linux process API was extended to permit the

containerization of the user environment

Originally called process containers

the name later became control groups (cgroups)

normally all processes are descendants of the

init process forming a single process hierarchy

control groups allow for multiple process

hierarchies in a single OS

the hierarchy is associated with

system resources at configuration time

Control groups provide

resource limiting, prioritization, accounting, control

resource limiting

limit how much memory is usable

prioritization

some groups can get a larger share of CPU or disk I/O

accounting

can be used for billing purposes

control

groups of processes can be frozen or stopped and restarted
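In the cgroup v2 interface, these features are exposed as files inside a group's directory: `memory.max` (resource limiting), `cpu.weight` (prioritization), and `cgroup.freeze` (control). The sketch below writes those files under a chosen root. The helper names are assumptions, and the demo uses a temporary directory as a stand-in; on a real system the root is typically `/sys/fs/cgroup`, writes need sufficient privileges, and the controllers must be enabled for the parent group.

```python
import tempfile
from pathlib import Path


def configure_group(root, name, memory_max_bytes, cpu_weight=100):
    """Create a group directory and set its memory limit and CPU weight."""
    group = Path(root) / name
    group.mkdir(parents=True, exist_ok=True)
    (group / "memory.max").write_text(f"{memory_max_bytes}\n")  # resource limiting
    (group / "cpu.weight").write_text(f"{cpu_weight}\n")        # prioritization
    return group


def set_frozen(group, frozen):
    """Freeze or thaw every process in the group (control)."""
    (Path(group) / "cgroup.freeze").write_text("1\n" if frozen else "0\n")


if __name__ == "__main__":
    # Stand-in directory so the demo runs without root or a real cgroup mount.
    with tempfile.TemporaryDirectory() as fake_root:
        g = configure_group(fake_root, "demo", 256 * 1024 * 1024, cpu_weight=200)
        set_frozen(g, True)
        for f in sorted(g.iterdir()):
            print(f.name, "=", f.read_text().strip())
```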

For containers, only a small container

engine is needed

it sets up each container as an

isolated instance by requesting resources from the OS

each container application then

directly uses the resources of the host OS

Container lifecycle

setup, configuration, management

setup

enabling Linux kernel container support and installing the tools and utilities needed to create the container environment

Configuration

specify IP address, root file system, and allowed devices

Management

startup, shutdown, migration

In a VM environment, a process executes inside

a guest virtual machine

An I/O request is sent through the guest OS to an

emulated device the guest OS sees

The hypervisor sends it through to the host OS

which sends it to the physical device

By contrast, an I/O request in a container environment is routed through

kernel control group indirection to the physical device.
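The two I/O paths described on these cards can be laid side by side. This is only a restatement of the steps as data, with a hypothetical `hops` helper to count the handoffs; it does not measure real latency.

```python
# Stages an I/O request passes through, as described on the cards above.
VM_PATH = [
    "process",
    "guest OS",
    "emulated device",
    "hypervisor",
    "host OS",
    "physical device",
]
CONTAINER_PATH = [
    "process",
    "kernel control group indirection",
    "physical device",
]


def hops(path):
    """Number of handoffs between consecutive stages."""
    return len(path) - 1


if __name__ == "__main__":
    print("VM:", " -> ".join(VM_PATH), f"({hops(VM_PATH)} hops)")
    print("Container:", " -> ".join(CONTAINER_PATH), f"({hops(CONTAINER_PATH)} hops)")
```

The shorter container path is one reason container application performance is close to native.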

Data Flow for I/O operation via Hypervisor and Container

see diagram

Container Advantages

1. By sharing the OS kernel, a system may run many containers, compared to the limited number of VMs and guest OSs in a hypervisor environment

2. Application performance is close to native system performance

Container Disadvantages

- Container applications are only portable across systems with the same OS kernel and virtualization support features.

- An app for a different OS than the host is not supported.

- May be less secure if there are vulnerabilities in the host OS.

Container File System

Each Container sees its own isolated file system