W6: Generalization & Overfitting

Understanding Generalization

How well a model performs on new, unseen data after training.

Identifies patterns instead of just memorizing training examples.

Build models that make accurate predictions on future data, not just past data.

Important because real-world data changes over time.

A model that generalizes poorly may work perfectly on training data but fail in real-world applications.

Measured by comparing the model's accuracy on training data vs test data.

A large drop in accuracy from training data to test data signals overfitting.

More training data helps improve generalization, but only up to a point.
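
The train-versus-test comparison above can be sketched with a toy model that memorizes its training set. This is only an illustration under stated assumptions: the 1-nearest-neighbor "memorizer", the synthetic data, and the 20% label noise are all made up for the example and do not come from the original notes.

```python
import random

def nearest_label(x, points):
    """Return the label of the training point closest to x (1-nearest-neighbor)."""
    return min(points, key=lambda p: abs(p[0] - x))[1]

def accuracy(data, train):
    """Fraction of (x, label) pairs in data that the memorizer labels correctly."""
    hits = sum(nearest_label(x, train) == y for x, y in data)
    return hits / len(data)

def make_data(n, rng, noise=0.2):
    """Assumed true rule: label is 1 when x > 0.5; a `noise` fraction is flipped."""
    data = []
    for _ in range(n):
        x = rng.random()
        y = int(x > 0.5)
        if rng.random() < noise:
            y = 1 - y
        data.append((x, y))
    return data

rng = random.Random(0)
train, test = make_data(200, rng), make_data(200, rng)

train_acc = accuracy(train, train)  # each training point finds itself
test_acc = accuracy(test, train)    # unseen points inherit memorized noise
gap = train_acc - test_acc
print(f"train={train_acc:.2f} test={test_acc:.2f} gap={gap:.2f}")
```

Because the memorizer reproduces every noisy training label, its training accuracy is perfect while its test accuracy is noticeably lower; that gap is the signal described above.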

Overfitting

Happens when a machine learning model learns the training data too well, including noise and random details. Causes the model to perform well on training data but poorly on new data.

Occurs when a model is too complex for the training data, is trained for too long, or has too many features compared to examples (the "curse of dimensionality").

Underfitting

Model is too simple to learn patterns in the data, leading to poor performance on both training and test data.

Common example is using a linear model for a complex, nonlinear problem. No matter how much data you add, the model cannot capture the real relationship.

Easy to spot because the model performs poorly overall.

Fixing it requires using a more complex model or adding relevant features, but this must be balanced to avoid overfitting.

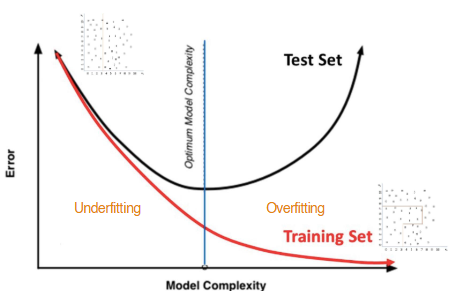

Bias-Variance Tradeoff

Goal is to balance bias and variance for a model that generalizes well to new data. Visualized as a U-shaped curve:

Increasing model complexity reduces bias (less underfitting).

But it increases variance (risk of overfitting).

The optimal model sits at the point where total error is minimized. Finding this balance is key to building effective machine learning models.
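
The U-shaped curve is usually explained through the standard decomposition of expected squared error. The symbols here are notational assumptions, not from the original notes: f is the true function, \hat{f} the fitted model, and \sigma^2 the variance of the irreducible noise.

```latex
\mathbb{E}\big[(y - \hat{f}(x))^2\big]
  = \underbrace{\big(\mathbb{E}[\hat{f}(x)] - f(x)\big)^2}_{\text{bias}^2}
  + \underbrace{\mathbb{E}\big[(\hat{f}(x) - \mathbb{E}[\hat{f}(x)])^2\big]}_{\text{variance}}
  + \sigma^2
```

As complexity grows, the bias term tends to shrink while the variance term tends to grow, so their sum is typically lowest somewhere in between.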

Bias

Error from oversimplifying the model → leads to underfitting (missing important patterns).

Variance

Sensitivity to training data → leads to overfitting (capturing noise).

Techniques to Prevent Overfitting

Techniques to simplify the model and improve generalization:

Regularization

Early Stopping

Feature Selection & Dimensionality Reduction

Regularization

Adds a penalty to large parameter values to control complexity.

L1 (Lasso): Shrinks some parameters to zero (feature selection).

L2 (Ridge): Reduces parameter magnitude without eliminating them.
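
The difference between the two penalties can be seen on a single coefficient. The sketch below assumes the simplest possible setting (one coefficient, squared-error loss around an unregularized value w), where the penalized minimizers have known closed forms; the function names and the example weights are hypothetical.

```python
def lasso_shrink(w, lam):
    """L1: minimizer of 0.5*(v - w)**2 + lam*abs(v) (soft-thresholding)."""
    if w > lam:
        return w - lam
    if w < -lam:
        return w + lam
    return 0.0  # small coefficients are set exactly to zero

def ridge_shrink(w, lam):
    """L2: minimizer of 0.5*(v - w)**2 + 0.5*lam*v**2 (proportional shrinkage)."""
    return w / (1.0 + lam)

weights = [3.0, -0.4, 0.1, -2.0]  # hypothetical unregularized coefficients
lam = 0.5                         # hypothetical penalty strength

l1 = [lasso_shrink(w, lam) for w in weights]
l2 = [ridge_shrink(w, lam) for w in weights]
print("L1:", l1)
print("L2:", l2)
```

In this setting L1 zeroes out the two small coefficients, while L2 shrinks every coefficient toward zero without eliminating any.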

Early Stopping

Stops training when validation performance stops improving or starts to worsen, preventing the model from memorizing the training data.
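
A minimal sketch of the stopping rule, assuming validation loss is recorded once per epoch and that training halts after a fixed number of epochs without improvement. The `patience` parameter and the loss values are illustrative assumptions, not part of the original notes.

```python
def early_stopping_epoch(val_losses, patience=2):
    """Return the index of the best epoch seen before stopping.

    Stops once the validation loss has failed to improve for
    `patience` consecutive epochs.
    """
    best_epoch, best_loss, waited = 0, float("inf"), 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss:
            best_epoch, best_loss, waited = epoch, loss, 0
        else:
            waited += 1
            if waited >= patience:
                break  # validation loss has stalled; stop training
    return best_epoch

# Hypothetical validation losses: improving, then getting worse.
val_losses = [0.90, 0.70, 0.55, 0.50, 0.52, 0.56, 0.40]
best = early_stopping_epoch(val_losses, patience=2)
print("restore weights from epoch", best)
```

With a patience of 2, training stops before the later dip at the last epoch is ever seen; a larger patience trades extra training time for the chance to get past such plateaus.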

Feature Selection & Dimensionality Reduction

Reduces input features to focus on the most relevant data.

Filter methods: Rank features using statistical measures.

Wrapper methods: Test feature subsets based on model performance.

Embedded methods: Select features during training.
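
As one possible illustration of a filter method, the sketch below ranks features by the absolute Pearson correlation with the target. The choice of correlation as the statistical measure, the feature names, and the data are all assumptions made for the example.

```python
from statistics import mean

def pearson(xs, ys):
    """Pearson correlation between two equal-length numeric lists."""
    mx, my = mean(xs), mean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = sum((x - mx) ** 2 for x in xs) ** 0.5
    sy = sum((y - my) ** 2 for y in ys) ** 0.5
    return cov / (sx * sy) if sx and sy else 0.0

def rank_features(features, target):
    """Filter method: order feature names by |correlation| with the target."""
    scores = {name: abs(pearson(col, target)) for name, col in features.items()}
    return sorted(scores, key=scores.get, reverse=True)

# Hypothetical dataset: "size" drives the target, "noise" is unrelated.
features = {
    "size":  [1, 2, 3, 4, 5, 6],
    "noise": [5, 1, 4, 2, 6, 3],
    "age":   [6, 5, 4, 3, 2, 2],
}
target = [2, 4, 6, 8, 10, 12]

ranking = rank_features(features, target)
print(ranking)
```

A filter like this never trains the model, which is what separates it from wrapper methods (which score subsets by model performance) and embedded methods (which select during training).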

Random Forest

Protects against overfitting by using multiple independently trained trees, making it robust and reliable with minimal tuning.

Prevents overfitting using three key techniques:

Bagging (Bootstrap Aggregating)

Feature Randomization

Out-of-Bag (OOB) Estimation

Bagging (Bootstrap Aggregating)

Each decision tree is trained on a bootstrap sample (a random sample of the training data drawn with replacement), ensuring diversity among the trees.

Some data points appear multiple times in a tree’s training set, while others are left out.

Predictions from multiple trees are averaged (for regression) or voted on (for classification). Because the errors of individual trees tend to cancel out, the model focuses on real patterns rather than noise, reducing overfitting.
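
The sampling and aggregation steps can be sketched without any tree code. The helper names and the toy predictions below are hypothetical; a real Random Forest would fit one decision tree per bootstrap sample.

```python
import random
from collections import Counter

def bootstrap_sample(data, rng):
    """Draw len(data) items with replacement, as bagging does for each tree."""
    return [rng.choice(data) for _ in data]

def majority_vote(predictions):
    """Classification: return the most common predicted label."""
    return Counter(predictions).most_common(1)[0][0]

def average(predictions):
    """Regression: return the mean of the trees' predictions."""
    return sum(predictions) / len(predictions)

rng = random.Random(42)
data = list(range(10))
sample = bootstrap_sample(data, rng)
repeated = sorted(x for x, c in Counter(sample).items() if c > 1)
left_out = sorted(set(data) - set(sample))
print("sample:", sample, "repeated:", repeated, "left out:", left_out)

# Hypothetical outputs from five trees (classification) and three trees (regression).
vote = majority_vote(["fraud", "ok", "ok", "fraud", "ok"])
mean_pred = average([2.0, 3.0, 4.0])
print(vote, mean_pred)
```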

Feature Randomization

Instead of considering all features at each split, Random Forest selects a random subset, forcing trees to explore different feature combinations.

Prevents the model from relying too heavily on specific features, leading to better generalization.

Typically, sqrt(n) features are used for classification and n/3 for regression, where n is the total number of features.
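
A small sketch of the rule of thumb above. Rounding down and forcing at least one feature are assumptions of this example; libraries differ in their exact defaults and rounding.

```python
import math
import random

def n_split_features(n_features, task):
    """Rule-of-thumb number of features tried at each split (at least 1)."""
    if task == "classification":
        return max(1, math.isqrt(n_features))  # floor of sqrt(n)
    if task == "regression":
        return max(1, n_features // 3)         # floor of n/3
    raise ValueError(f"unknown task: {task}")

def candidate_features(n_features, task, rng):
    """Pick the random subset of feature indices considered at one split."""
    k = n_split_features(n_features, task)
    return sorted(rng.sample(range(n_features), k))

rng = random.Random(0)
subset = candidate_features(16, "classification", rng)
print("features considered at this split:", subset)
```

A fresh subset is drawn at every split, so different trees (and different nodes in the same tree) end up relying on different features.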

Out-of-Bag (OOB) Estimation

Since about one-third of the data is left out of each bootstrap sample, these unused data points act as a built-in validation set.

By evaluating model performance on these out-of-bag samples, Random Forest provides a nearly unbiased estimate of its accuracy without needing a separate validation set, helping detect overfitting early.
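
The "about one-third" figure can be checked directly: the chance that a given row is missed by all n draws of a bootstrap sample is (1 - 1/n)^n, which approaches 1/e (roughly 0.37) as n grows. The sketch below compares that formula with one simulated bootstrap sample; the sample size and seed are arbitrary.

```python
import random

def oob_fraction(n, rng):
    """Fraction of n rows that never appear in one bootstrap sample of size n."""
    drawn = {rng.randrange(n) for _ in range(n)}
    return 1 - len(drawn) / n

def expected_oob_fraction(n):
    """Probability that a given row is missed by all n draws."""
    return (1 - 1 / n) ** n

rng = random.Random(1)
n = 10_000
empirical = oob_fraction(n, rng)
expected = expected_oob_fraction(n)
print(f"empirical={empirical:.3f} expected={expected:.3f}")
```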

XGBoost: Advanced Regularization for Better Generalization

XGBoost builds trees sequentially, with each new tree correcting the errors of the previous ones. This can deliver better performance, but it needs careful tuning of its regularization to generalize well.

Prevents overfitting using three key techniques:

Regularization

Tree Pruning

Learning Rate (Shrinkage)

These mechanisms make it a powerful and reliable model, known for strong generalization across many machine learning tasks.

Regularization

Unlike standard gradient boosting, XGBoost adds both L1 (Lasso) and L2 (Ridge) penalty terms to its objective function.

L1 pushes some weights to exactly zero, making the model more sparse, while L2 shrinks weights so that no single part of the model dominates.

These terms ensure a balance between fitting the data and maintaining generalization.
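
Written out, the regularized objective has the following general form. The notation is an assumption added for illustration: l is the training loss, f_k is the k-th tree, T is its number of leaves, and w_j are its leaf weights; \gamma, \lambda, and \alpha correspond to the library's `gamma`, `lambda`, and `alpha` parameters.

```latex
\text{Obj} = \sum_{i} l\big(y_i, \hat{y}_i\big) + \sum_{k} \Omega(f_k),
\qquad
\Omega(f) = \gamma T + \tfrac{1}{2}\lambda \sum_{j=1}^{T} w_j^2 + \alpha \sum_{j=1}^{T} |w_j|
```

The first sum rewards fitting the data; the second charges for every extra leaf and for large leaf weights.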

Tree Pruning

Instead of allowing trees to grow indefinitely, XGBoost prunes them when additional splits provide little benefit.

This "grow and prune" strategy removes unnecessary branches, keeping the model simple and reducing overfitting.

Pruning decisions are guided by the regularized objective function, ensuring only valuable splits are kept.
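
A sketch of how the regularized objective can guide a pruning decision, using the split-gain formula from the XGBoost formulation with L2 regularization only. The function names and the gradient/Hessian sums below are hypothetical; `lam` and `gamma` stand in for the L2 strength and the per-leaf cost.

```python
def leaf_score(g, h, lam):
    """Structure score of a leaf with gradient sum g and Hessian sum h."""
    return g * g / (h + lam)

def split_gain(g_left, h_left, g_right, h_right, lam=1.0, gamma=0.0):
    """Regularized gain of a split; gamma is the cost of adding one more leaf."""
    parent = leaf_score(g_left + g_right, h_left + h_right, lam)
    children = leaf_score(g_left, h_left, lam) + leaf_score(g_right, h_right, lam)
    return 0.5 * (children - parent) - gamma

def keep_split(g_left, h_left, g_right, h_right, lam=1.0, gamma=0.0):
    """Keep a split only if its regularized gain is positive; otherwise prune it."""
    return split_gain(g_left, h_left, g_right, h_right, lam, gamma) > 0

# Hypothetical split whose two children pull in opposite directions.
stats = (-4.0, 3.0, 4.0, 3.0)
print(split_gain(*stats, lam=1.0, gamma=0.0))  # gain with no leaf penalty
print(keep_split(*stats, lam=1.0, gamma=5.0))  # same split under a high leaf cost
```

Raising `gamma` makes every additional leaf more expensive, so the same split that is worth keeping at `gamma=0` gets pruned once the leaf cost exceeds its benefit.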

Learning Rate (Shrinkage)

XGBoost reduces the impact of each tree by applying a small learning rate (typically 0.01 to 0.3).

This slows down learning, preventing the model from memorizing noise.

While a lower learning rate requires more trees for optimal performance, it results in better generalization and stability.
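
The trade-off between learning rate and number of trees shows up even in a deliberately simplified setting. The sketch below assumes a single target value and an idealized "tree" that predicts the remaining residual exactly, with only a `learning_rate` fraction of it added each round; none of this is XGBoost's actual implementation.

```python
def boost_constant(target, learning_rate, n_rounds):
    """Toy boosting on one value: each round adds learning_rate * residual."""
    prediction = 0.0
    history = []
    for _ in range(n_rounds):
        residual = target - prediction
        prediction += learning_rate * residual
        history.append(prediction)
    return history

def rounds_needed(learning_rate, tolerance=0.01, target=10.0):
    """Rounds until the prediction is within `tolerance` (relative) of the target."""
    prediction, rounds = 0.0, 0
    while abs(target - prediction) > tolerance * abs(target):
        prediction += learning_rate * (target - prediction)
        rounds += 1
    return rounds

print(boost_constant(10.0, 0.3, 5))
print(rounds_needed(0.3), rounds_needed(0.1))
```

Each round leaves a fraction (1 - learning_rate) of the previous residual, so a smaller rate takes smaller steps and needs more rounds to reach the same fit. In practice those smaller steps are what keep any single tree from locking in noise.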

Improving Generalization

To improve model generalization, focus on three main areas: data preparation, algorithm choice, and training strategies.

Cross-validation

Feature Engineering

Ensemble Methods

By following these practices, you can make your model more reliable on new, unseen data.

Cross-validation

Instead of a single train-test split, use cross-validation (like k-fold) to assess model performance across multiple data partitions.

This helps catch overfitting early and gives a more reliable estimate of how the model will perform on new data.
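
A minimal sketch of how k-fold partitions the data, assuming no shuffling and contiguous folds (real pipelines usually shuffle first, and often stratify by class). The function name is hypothetical.

```python
def k_fold_indices(n_samples, k):
    """Yield (train_idx, val_idx) pairs; each sample is validated exactly once."""
    indices = list(range(n_samples))
    # Spread any remainder over the first folds so sizes differ by at most 1.
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        val = indices[start:start + size]
        train = indices[:start] + indices[start + size:]
        yield train, val
        start += size

folds = list(k_fold_indices(10, 3))
for train_idx, val_idx in folds:
    print("train:", train_idx, "validate:", val_idx)
```

The model is trained and scored once per fold, and the k scores are averaged; a large spread between folds is itself a warning sign of unstable generalization.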

Feature Engineering

Transform raw data into meaningful features by adding new ones, removing irrelevant ones, and handling issues like multicollinearity.

This helps the model focus on the important patterns and improves generalization.

Ensemble Methods

Combine multiple models (e.g., Random Forest and XGBoost) to create a stronger system.

Stacking and blending can help by reducing individual model biases and improving overall performance.

Real-World Applications and Case Studies

Generalization and overfitting have key impacts in real-world applications, especially when using Random Forest and XGBoost. Here are a few examples:

Financial Fraud Detection

Healthcare Predictive Models

E-commerce Recommendation Systems

In all these cases, ensuring good generalization helps avoid overfitting and keeps models effective over time.

Financial Fraud Detection

Fraud models need to generalize to new fraud patterns.

Random Forest works well here because it can handle imbalanced data (fraud cases are rare) and resists overfitting.

It can only detect the fraud patterns present in its training data, so companies regularly retrain models as fraud methods change.

Healthcare Predictive Models

Models often struggle to generalize across diverse patient populations.

XGBoost helps by using regularization to focus on stable patterns and avoid irrelevant correlations.

These models also use domain knowledge and stratified sampling to ensure they work for different patient groups.

E-commerce Recommendation Systems

These systems need to generalize from past purchase data to predict future preferences.

Overfitting leads to outdated recommendations.

A hybrid approach using both Random Forest and XGBoost captures complex patterns and handles categorical data.

Ongoing testing and validation keep recommendations relevant.