quantitative research designs


reasons for quantitative research designs

What are the components of a quant design?

– Survey

– Population and Sample

– Instruments and measures

› Data analysis and interpretation

– Correlation ≠ Causation

› Validity and Generalizability

criteria for inferring causality

– 1. Time sequence (The cause must precede the effect in time.)

– 2. Correlation (Changes in the cause are accompanied by changes in the effect.)

– 3. Ruling out alternative explanations

4. Strength of correlation

5. Consistency in replication

6. Plausibility and coherence

internal validity

refers to how well an experiment is done, especially whether it avoids confounding (more than one possible independent variable [cause] acting at the same time). The less chance for confounding in a study, the higher its internal validity is.

› Ruling out alternative explanations pertains to the internal validity of a study

internal validity regarding causal inference

– Internal validity – the confidence we have that the results accurately depict whether one variable is or is not the cause of the other

– External validity – refers to the extent to which we can generalize the findings

more on variable and internal validity

Extraneous variables are variables that may compete with the independent variable in explaining the outcome of a study.

› A confounding variable is an extraneous variable that does indeed influence the dependent variable.

› A confounding variable systematically varies with or influences the independent variable and also influences the dependent variable.

› Researchers must always worry about extraneous variables when they make conclusions about cause and effect.

threats to internal validity

1. history

2. maturation or the passage of time

3. testing

4. instrumentation changes

5. statistical regression

6. selection biases

history

Did some unanticipated event occur while the experiment was in progress and did these events affect the dependent variable?

maturation

changes in DV due to normal developmental processes

instrument changes

did any change occur during the study in the way the DV was measured

statistical regression

An effect that results from the tendency of subjects selected on the basis of extreme scores to regress toward the mean on subsequent tests.
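A minimal simulation sketch of this threat, assuming a hypothetical model in which each observed score is a stable true score plus random error (the means, spreads, and the 10% selection rule are illustrative assumptions, not values from the notes). No intervention is applied, yet the group selected for extreme first-test scores lands closer to the overall mean on the retest:

```python
import random
from statistics import mean

def simulate_regression_to_mean(n=10_000, top_fraction=0.10, seed=0):
    """Return (first_test_mean, retest_mean) for the top scorers on test 1."""
    rng = random.Random(seed)
    # Assumed model: observed score = stable true score + random error.
    true_scores = [rng.gauss(50, 10) for _ in range(n)]
    test1 = [t + rng.gauss(0, 10) for t in true_scores]
    test2 = [t + rng.gauss(0, 10) for t in true_scores]

    # Select subjects on the basis of extreme (high) scores on the first test.
    n_selected = int(n * top_fraction)
    selected = sorted(range(n), key=lambda i: test1[i], reverse=True)[:n_selected]

    return mean(test1[i] for i in selected), mean(test2[i] for i in selected)

first, retest = simulate_regression_to_mean()
print(f"extreme group, test 1 mean: {first:.1f}")
print(f"extreme group, retest mean: {retest:.1f}")
```

Without a comparison group selected the same way, this drop could be mistaken for a treatment effect.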

selection

selecting participants for the various groups in the study. Are the groups equivalent at the beginning of the study?

› Were subjects self-selected into experimental and comparison groups? This could affect the dependent variable.

additional threats

Measurement Bias

› People who supply the ratings of the groups should not know the experimental status of the participants

research reactivity

› Biasing comments by researchers

› Experimental demand characteristics and experimenter expectancies

› Obtrusive observation

› Novelty and disruption effects

› Placebo effects

experimental mortality, what is attrition?

participants will drop out of an experiment before it is completed, affecting statistical comparisons and conclusions

to minimize attrition

1. provide reimbursement to participants

2. avoid intervention or research procedures that disappoint or frustrate

3. utilize tracking methods

two divisions of external validity

population validity

ecological validity

population validity

how representative is the sample of the population

how widely does the finding apply

ecological validity

Ecological validity is present to the degree that a result generalizes across settings. Types include:

– Interaction effect of testing

– Reactive effects of experimental arrangements

– Multiple-treatment interference

– Experimenter effects

threats to external validity

Interaction effect of testing: Pre-testing interacts with the experimental treatment and causes some effect such that the results will not generalize to an untested population.

Hawthorne effect

An effect that arises simply because subjects know they are participating in an experiment and are experiencing its novelty.

multiple treatment interference

When the same subjects receive two or more treatments as in a repeated measures design, there may be a carryover effect between treatments such that the results cannot be generalized to single treatments

designs (two types)

pre-experimental and experimental

quantitative vs qualitative approaches to research

› Quantitative: Testing objective theories by examining the relationships among variables

› Qualitative: Exploring and understanding the meaning individuals or groups ascribe to a social or human problem

› Mixed-Methods: Collect Quant and Qual

pre-experimental pilot studies

Some have an exploratory or descriptive purpose and can have considerable value despite having a low degree of internal validity

– Despite their value as pilot studies, pre-experimental designs rank low on the evidence-based practice research hierarchy because of their negligible degree of internal validity

One-group pre-test-posttest design

establishes both correlation and time order

– This design assesses the dependent variable before and after the stimulus (intervention) is introduced; notation: O1 X O2

this design doesn’t account for factors other than the IV that may have caused change

Post-test only design

X O

O

assesses the DV after the stimulus is introduced for one group, while also assessing the DV for a second group that may not be comparable and was not exposed to the IV

Experimental Designs

try to control for threats to internal validity by randomly assigning research participants to experimental and control groups.

Next, they introduce one category of the independent variable to the experimental group, while withholding it from the control group

– Then, they compare the extent to which the experimental and control groups differ on the dependent variable

pre-test post-test control group design

R O1 X O2

R O1 O2

The R stands for random assignment of participants to either group; O1 represents pretests, and O2 represents posttests; X represents the tested intervention
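One common way to summarize this design is to compare the two groups' mean change from O1 to O2. The sketch below assumes that gain-score approach (other analyses are possible); the function name and the scores are made up for illustration:

```python
from statistics import mean

def gain_difference(exp_pre, exp_post, ctrl_pre, ctrl_post):
    """Experimental group's mean pre-to-post change minus the control group's."""
    exp_gain = mean(exp_post) - mean(exp_pre)
    ctrl_gain = mean(ctrl_post) - mean(ctrl_pre)
    return exp_gain - ctrl_gain

# Made-up scores for illustration only.
exp_o1, exp_o2 = [10, 12, 14], [16, 18, 20]    # R O1 X O2
ctrl_o1, ctrl_o2 = [11, 12, 13], [12, 13, 14]  # R O1   O2
effect = gain_difference(exp_o1, exp_o2, ctrl_o1, ctrl_o2)
print(effect)
```

Because both groups share history, maturation, and testing, subtracting the control group's gain removes the change those threats would produce on their own.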

Post test only control group

R X O

R O

might opt for if pretest has an impact

random assignment

Solomon Four-group design

R O1 X O2

R O1 O2

R X O2

R O2

Highly regarded but rarely used. Used when the researcher wants to know the amount of pre/post-test change but is worried about testing effects. Logistically difficult.

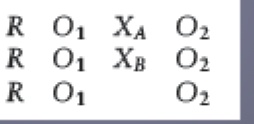

alternative treatment design with pretest

compare effectiveness of two alternative treatments, with pretest.

dismantling studies

similar type of design can also determine which components of the intervention may or may not be necessary to achieve its effects

– The first row represents components A and B

– The second row represents treatment A only; the third row represents treatment B only

– The fourth row represents the control group

Randomization (random assignment) is not the same as random sampling

participants to be randomly assigned are individuals who voluntarily agreed to participate in the experiment, which limits the external validity

In randomization, research participants who make up the study population are randomly divided into two samples
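A minimal sketch of random assignment, assuming a simple shuffle-and-split procedure; the function name and participant IDs are hypothetical. Note that the input is the list of volunteers already in the study, not a random sample drawn from a wider population:

```python
import random

def randomly_assign(participants, seed=None):
    """Shuffle the participants and split them into two groups of (nearly) equal size."""
    rng = random.Random(seed)
    shuffled = list(participants)
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    return shuffled[:half], shuffled[half:]

volunteers = [f"P{i:02d}" for i in range(1, 11)]  # hypothetical participant IDs
experimental, control = randomly_assign(volunteers, seed=42)
print("experimental:", experimental)
print("control:     ", control)
```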

matching

Although randomization is the best way to avoid bias in assigning research participants to groups, it does not guarantee full comparability

– One way to improve the chances of obtaining comparable groups is to combine randomization with matching:

– Pairs of participants are matched on the basis of their similarities on one or more variables

– One member of the pair is assigned to the experimental group, and one to the control group
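The combination of matching and randomization can be sketched as follows, assuming a single numeric matching variable and pairing participants who are adjacent when ranked on it. The function name and pretest values are hypothetical, and this is only one of several ways to form pairs:

```python
import random

def matched_random_assignment(scores, seed=None):
    """scores: dict mapping participant ID -> value on the matching variable."""
    rng = random.Random(seed)
    ranked = sorted(scores, key=scores.get)
    experimental, control = [], []
    # Neighbors in the ranking form a matched pair; with an odd number of
    # participants, the last one is left unassigned.
    for i in range(0, len(ranked) - 1, 2):
        pair = [ranked[i], ranked[i + 1]]
        rng.shuffle(pair)  # chance decides which member gets the intervention
        experimental.append(pair[0])
        control.append(pair[1])
    return experimental, control

pretest = {"A": 12, "B": 30, "C": 13, "D": 29, "E": 21, "F": 20}  # made-up values
experimental, control = matched_random_assignment(pretest, seed=1)
print("experimental:", experimental)
print("control:     ", control)
```

Here the pairs are (A, C), (F, E), and (D, B), so each group receives one low, one middle, and one high scorer regardless of how the coin flips fall.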

testing

A pre-test may sensitize participants in unanticipated ways, and their performance on the post-test may be due to the pre-test, not to the treatment, or, more likely, an interaction of the pre-test and treatment.

› Example: In an experiment in which performance on a logical reasoning test is the dependent variable, a pre-test cues the subjects about the post-test.

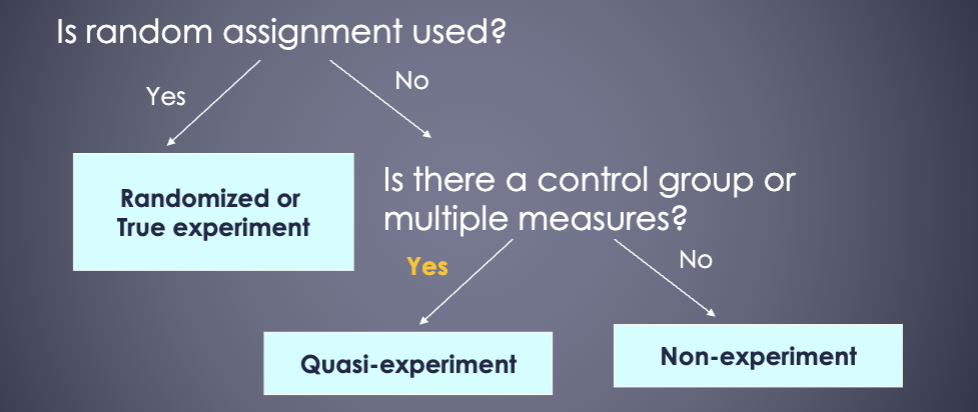

Quasi-experimental designs

If random assignment is not used, but there is a control group or multiple measures, it is a quasi experiment

when do they help

when control groups and randomization are not feasible, for example if withholding services is unethical

the nonequivalent groups design

The most frequently used in social research

› Try to select groups that are as similar as possible to compare the treated one with the comparison one

– e.g. two comparable classrooms or schools

› Cannot be sure whether the groups are comparable?

– Require a pretest and posttest

Nonequivalent comparison groups design

These can be used when we are unable to randomly assign participants to groups, but can find an existing group that appears similar to the experimental group, and can be compared to it

ways to strengthen the internal validity of the nonequivalent comparison groups design

multiple pretests

switching replication (administering the treatment to the comparison group after the first posttest)

regression discontinuity design

A useful method for determining whether a program or treatment is effective

› Participants are assigned to program or comparison groups based on a cutoff score on a pretest

treatment can be given to those most in need
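A rough sketch of the logic, under several assumptions not stated in the notes: participants scoring below the cutoff receive the program, the pretest-posttest relationship is linear on each side of the cutoff, and the effect is read as the gap between the two fitted lines at the cutoff. The data are simulated with a built-in effect of 5 points, and all names are hypothetical:

```python
import random

def fit_line(xs, ys):
    """Ordinary least-squares slope and intercept for a single predictor."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    slope = sxy / sxx
    return slope, my - slope * mx

def rd_estimate(pretest, posttest, cutoff):
    """Gap between the two fitted lines at the cutoff (program minus comparison)."""
    program = [(x, y) for x, y in zip(pretest, posttest) if x < cutoff]
    comparison = [(x, y) for x, y in zip(pretest, posttest) if x >= cutoff]
    slope_p, icpt_p = fit_line(*zip(*program))
    slope_c, icpt_c = fit_line(*zip(*comparison))
    return (icpt_p + slope_p * cutoff) - (icpt_c + slope_c * cutoff)

# Simulated data: the +5 program effect is an assumption built into the example.
rng = random.Random(0)
pre = [rng.uniform(0, 100) for _ in range(2000)]
post = [10 + 0.8 * x + (5 if x < 50 else 0) + rng.gauss(0, 2) for x in pre]
estimate = rd_estimate(pre, post, cutoff=50)
print(f"estimated effect at the cutoff: {estimate:.2f}")
```

A jump in the fitted lines exactly at the cutoff is hard to attribute to anything other than the program, since the assignment rule is fully known.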

simple time-series designs

Emphasize the use of multiple posttests

– The simple interrupted time-series design does not require a comparison group; notation:

O O O O O O X O O O O O O
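One simple way to read such a series is to project the pre-intervention trend forward and ask how far the later observations depart from it. The sketch below assumes a linear pre-intervention trend and equally spaced observations; the function name and data are made up for illustration:

```python
def its_departure(pre, post):
    """Mean gap between observed posttests and the projected pre-intervention trend."""
    n = len(pre)
    times = list(range(n))
    mt, mp = sum(times) / n, sum(pre) / n
    # Least-squares line through the observations taken before X.
    slope = sum((t - mt) * (y - mp) for t, y in zip(times, pre)) / sum((t - mt) ** 2 for t in times)
    intercept = mp - slope * mt
    # Where the series would be after X if the old trend had simply continued.
    projected = [intercept + slope * (n + k) for k in range(len(post))]
    return sum(o - p for o, p in zip(post, projected)) / len(post)

# Made-up series: six observations before X and six after.
before = [20, 21, 22, 23, 24, 25]
after = [30, 31, 32, 33, 34, 35]
gap = its_departure(before, after)
print(gap)
```

The multiple pretests are what allow maturation or an ongoing trend to be separated from a shift that coincides with the intervention.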

multiple time series designs

greater internal validity,

an experimental group and a nonequivalent comparison group are measured at multiple points in time before and after an intervention

cross-sectional studies

A cross-sectional study examines a phenomenon by taking a cross section of it at one point in time

– Cross-sectional studies may have exploratory, descriptive or explanatory purpose

case control studies

A case control design study compares groups of cases that have had contrasting outcomes and collects retrospective data about past differences that might explain the difference in outcomes

– Can be fraught with problems that limit what can be inferred or generalized

› Memories could be faulty

› Recall bias

practical pitfalls in carrying out experiments and quasi experiments

are as follows

fidelity of the intervention

Refers to the degree to which the intervention delivered to clients was delivered as intended

contamination of the control condition

Even if the intervention is delivered as intended, the control condition can be contaminated if control group and experimental group members interact

resistance to the case assignment protocol

Practitioners tend to believe their services are effective, so may not be committed to adhering to the research protocol, believing they know what is best for the client

client recruitment and retention

Recruiting a sufficient number can be difficult when research must rely on referrals of clients from outside the agency

strengths of quasi experimental design

useful in generating results/trends for social sciences

easily integrated with individual case studies

reduces the time and resources required for experimentation

weaknesses

lacks randomization

doesn’t explain pre-existing factors and influences outside of experiment

serious limitations in terms of validity

ongoing debate

Recruiting a sufficient number can be difficult when research must rely on referrals of clients from outside the agency