Process Management: Synchronisation

5.0(1)

5.0(1)

Card Sorting

1/89

Earn XP

Study Analytics

Name | Mastery | Learn | Test | Matching | Spaced |

|---|

No study sessions yet.

90 Terms

1

New cards

how do cooperating processes share data?

* share data indirectly through access to files or messages

* directly sharing data eg. sharing memory

* directly sharing data eg. sharing memory

2

New cards

when can processes and threads be interrupted?

* processes and threads can execute parallel or concurrently and could be interrupted at any time especially when completing the execution

* this would cause the CPU to execute instructions of another process leaving the first one incomplete

* this would cause the CPU to execute instructions of another process leaving the first one incomplete

3

New cards

what can go wrong when processes share data?

* concurrent access to shared data may lead to data inconsistency eg. the money problem e.g. 2 people trying to deposit money to the same account at the same time, when account is updated it may not show the correct amount

* causes the critical section problem

* causes the critical section problem

4

New cards

what do we need to do to prevent data inconsistency between processes?

need to use mechanisms to ensure the execution order of cooperating processes allows the data to stay consistent

5

New cards

what mechanism to we use to solve the critical section problem?

Peterson's solution

6

New cards

what is a race condition?

When several processes access and manipulate the same data (e.g. shared variable) concurrently and the outcome of the execution depends on the particular order in which the access takes place.

7

New cards

how do we guard against the race condition?

synchronise the processes to ensure that only one process at a time can manipulate the variable counter

8

New cards

what is required of the system for the critical section problem?

* There is a system of n processes {P0, P1, ..., Pn−1}.

* Each process has a critical section segment of code.

* In the critical segment the process may be changing common variables, updating tables, writing files, etc.

* When one process is executing in its critical section, no other process is allowed to execute in its critical section as this could conflict

* Each process has a critical section segment of code.

* In the critical segment the process may be changing common variables, updating tables, writing files, etc.

* When one process is executing in its critical section, no other process is allowed to execute in its critical section as this could conflict

9

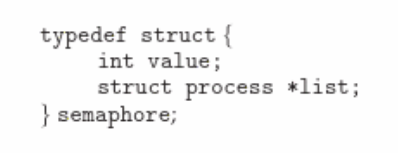

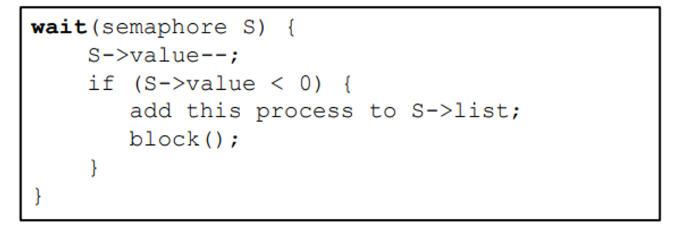

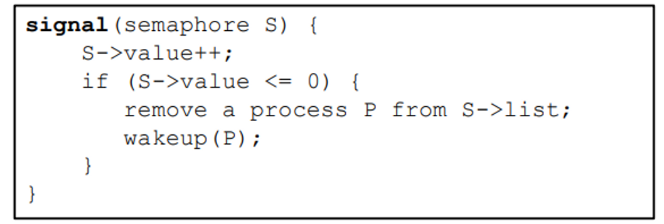

New cards

what is the critical section problem?

* how do we design a protocol that processes can use to co-operate and ensure no two processes are executing in their critical sections at the same time

* each process needs to request permission to enter it’s critical section

* each process needs to request permission to enter it’s critical section

10

New cards

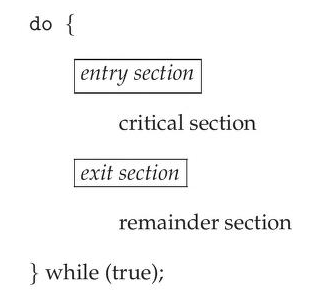

what is the general **code structure** of a process?

entry section

critical section

exit section

remainder section

critical section

exit section

remainder section

11

New cards

what is the entry section?

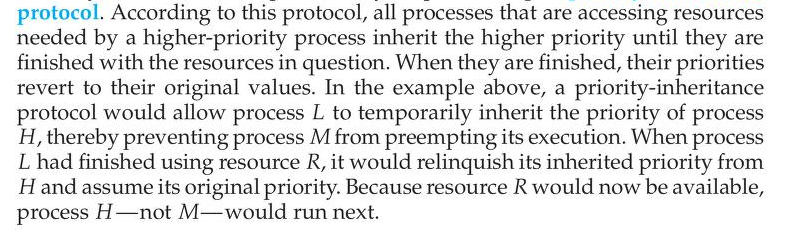

code that requests permission to enter the critical section

12

New cards

what is the critical section?

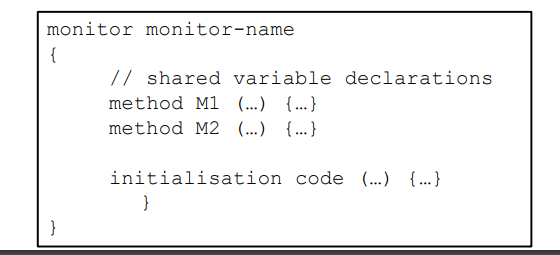

the protected code segment

13

New cards

what is the exit section?

notifies the system that the process is exiting its critical section

14

New cards

what is the remainder code?

the rest of the processes code

15

New cards

what are the 3 requirements of a solution to the critical section problem?

mutual exclusion

progress

bounded waiting

\

many peanut butters

progress

bounded waiting

\

many peanut butters

16

New cards

what is the mutual exclusion requirement?

If process Pi is executing in its critical section, then no other processes can be executing in their critical sections.

17

New cards

what is the progress requirement?

If no process is executing in its critical section and there exist some processes that wish to enter their critical sections, then only those processes that are not executing in their remainder sections can participate in deciding which will enter its critical section next, and this selection cannot be postponed indefinitely.

18

New cards

what is the bounded waiting requirement?

Between the time a process has made a request to enter its critical section and before that request is granted, a bound must exist on the number of times that **other** processes are allowed to enter their critical section

19

New cards

how can the critical section problem occur in operating systems?

* many kernel-mode processes can be active at one time

* the kernel code (code implementing the os) is subject to race conditions

* e.g. kernel data structure has a list of files and multiple processes want to open files simultaneously

* the kernel code (code implementing the os) is subject to race conditions

* e.g. kernel data structure has a list of files and multiple processes want to open files simultaneously

20

New cards

what are the 2 approaches to handle critical sections in operating systems?

pre-emptive kernels

non pre-emptive kernels

non pre-emptive kernels

21

New cards

what is a pre-emptive kernel?

allows process to be pre-emptive while running in kernel mode. more responsive as less risk that a kernel mode process will run really long

22

New cards

what is a non pre-emptive kernel?

does not allow a process to be pre-emptive while running in kernel mode. kernel mode process will run until it exits. free from race conditions

23

New cards

what are the limitations of Peterson’s solution?

can’t guarantee it will work on all architectures because of the way modern computers perform machine language instructions like load() and store()

24

New cards

why is the Peterson’s solution used?

Good algorithmic description of solving the critical section problem.

25

New cards

what is the Peterson’s solution?

* Restricted to two processes, Pi and Pj, that alternate executions between their critical and remainder sections.

* j = 1-i

* required the two processes share two data items

* j = 1-i

* required the two processes share two data items

26

New cards

what data items do processes share in the Peterson’s solution?

int turn

boolean flag\[2\]

boolean flag\[2\]

27

New cards

what is the turn variable?

indicates whose turn it is to enter its critical section, e.g., if turn == 0 then Pi is allowed to enter critical section

28

New cards

what is the flag\[2\] variable?

indicates if a process is ready to enter its critical section, e.g., if flag\[i\]== true then Pi is ready to enter critical section

29

New cards

how does the code for Peterson’s solution work for the 2 processes?

* when process is ready it sets its flag variable and turn variable is set to other process

* if the flag\[process\] = true and the turn = process then it means that the process is ready to enter and it is allowed to enter. *[ but has to experience bust wait to get to this ]*

* after critical section executes then the flag\[process\] variable set to false to show that the process is not ready to enter critical section anymore

* if the flag\[process\] = true and the turn = process then it means that the process is ready to enter and it is allowed to enter. *[ but has to experience bust wait to get to this ]*

* after critical section executes then the flag\[process\] variable set to false to show that the process is not ready to enter critical section anymore

![* when process is ready it sets its flag variable and turn variable is set to other process

* if the flag\[process\] = true and the turn = process then it means that the process is ready to enter and it is allowed to enter. *[ but has to experience bust wait to get to this ]*

* after critical section executes then the flag\[process\] variable set to false to show that the process is not ready to enter critical section anymore](https://knowt-user-attachments.s3.amazonaws.com/d5a48d4913534ec8a57bd9c616096eaa.jpeg)

30

New cards

does Peterson’s solution meet the critical-section solution requirements?

yes

31

New cards

how is the mutual exclusion requirement met?

* P, enters its critical section only if it’s flag is true and turn is the correct value

* in order for both process to be in their critical section then flag\[0\] == flag \[1\] == true and turn = 1 and turn = 0 which is not possible as turn value would be over written and can’t be 2 values at once

* in order for both process to be in their critical section then flag\[0\] == flag \[1\] == true and turn = 1 and turn = 0 which is not possible as turn value would be over written and can’t be 2 values at once

32

New cards

how is the progress requirement met?

P0 sets turn to 1 and vice versa and the values are changed when the process is in its entry section (flag variable changed) , not its remainder section

33

New cards

how is the bounded waiting requirement met?

because of the alternation in the turn value no process waits more than one tern to executed

34

New cards

what are the 3 software based solutions to solve the critical section problem?

* mutex locks

* semaphores

* monitors

* semaphores

* monitors

35

New cards

why are software solutions favoured over hardware solutions?

hardware based solutions are complicated and inaccessible to application programmers so that’s why OS designers design software tools

36

New cards

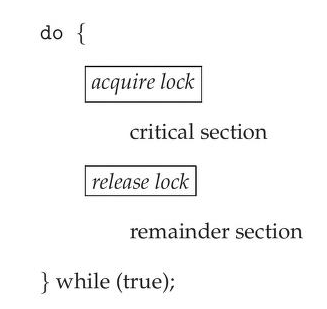

what are mutual exclusion/ mutex locks?

* the simplest tool to solve the critical section problem

* the critical section is protected with a lock, only the process who has access to the lock has access to execute their critical section

* the critical section is protected with a lock, only the process who has access to the lock has access to execute their critical section

37

New cards

what variables and methods are used for mutex locks?

* a boolean variable \[available\] is used to indicate if the lock is available or not

* acquire() = getting the lock

* release() = releasing the lock

* acquire() = getting the lock

* release() = releasing the lock

38

New cards

what is the code solution using mutex locks?

39

New cards

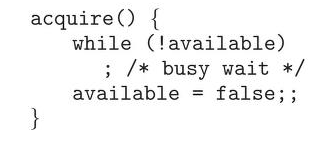

what is the acquire() method?

* if the lock is available the process calls aquire() and succeeds and the lock is made unavailable.

* processes are blocked from using acquire() on unavailable locks with a busy wait until the lock is released

* processes are blocked from using acquire() on unavailable locks with a busy wait until the lock is released

40

New cards

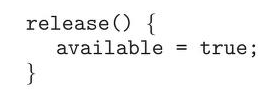

what is the release() method?

when a process is done executing their critical section they can release the lock and set available to true so that other processes can acquire it.

41

New cards

how must acquire() and release() be called?

calls to acquire() and release() must be atomic and usually implemented via hardware atomic instructions - atomic means that if one method is altering the value then the other method cannot

42

New cards

what is the main disadvantage of mutex locks?

* requires busy waiting where others process ready to enter CS ==must loop and call acquire() until the lock is released==

* this wastes CPU cycles that could be used productively by other processes

* this wastes CPU cycles that could be used productively by other processes

43

New cards

what is the mutex lock called when it requires a busy wait?

spinlock

44

New cards

what are the advantages of spinlocks?

* no context switch is required

* good when locks only need to be held for a short time

* good for multiprocessor systems where one thread can spin and the other can execute CS

* good when locks only need to be held for a short time

* good for multiprocessor systems where one thread can spin and the other can execute CS

45

New cards

how do mutex locks compare to semaphores?

more sophisticated than mutex locks to synchronise processes

46

New cards

what is a semaphore?

* a semaphore is an `int s` that is only accessed through initialisation, or 2 atomic operations (wait() and signal() which cannot run at same time and cannot be interrupted

* when one method changes the value of a semaphore no other process can simultaneously modify the same semaphore value

* when one method changes the value of a semaphore no other process can simultaneously modify the same semaphore value

47

New cards

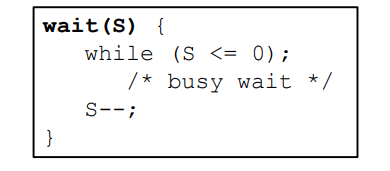

what is the wait() method?

wait() while semaphore is less than or equal to 0, wait. after waiting the process will decrement the semaphore and show that waiting is finished

48

New cards

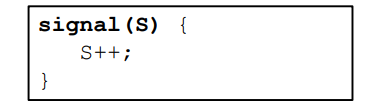

what is the signal() method?

signal() increment value of the semaphore, shows that the previous process has finished

49

New cards

what are the 2 types of semaphores an OS can distinguish between?

binary semaphore

counting semaphore

counting semaphore

50

New cards

what is a binary semaphore?

binary semaphore - int value that can only range between 0 and 1 (similar to mutex lock - used on systems that do not provide mutex locks)

51

New cards

what is a counting semaphore?

counting semaphore - int value that can range over unrestricted domain, to control access to a given resource consisting of a finite number of instances

52

New cards

how does a counting semaphore work to control access to a resource consisting of a finite number of instances?

* semaphore is initialised to the number of resources available

* each process that wants to use the resource performs the wait() operation on the semaphore which makes the semaphore -= 1

* when a process is finished with the resource it calls signal() which makes the semaphore += 1

* when semaphore = 0, means that all resources are being used. any other processes that want to use the resource will be blocked until the count > 0

* each process that wants to use the resource performs the wait() operation on the semaphore which makes the semaphore -= 1

* when a process is finished with the resource it calls signal() which makes the semaphore += 1

* when semaphore = 0, means that all resources are being used. any other processes that want to use the resource will be blocked until the count > 0

53

New cards

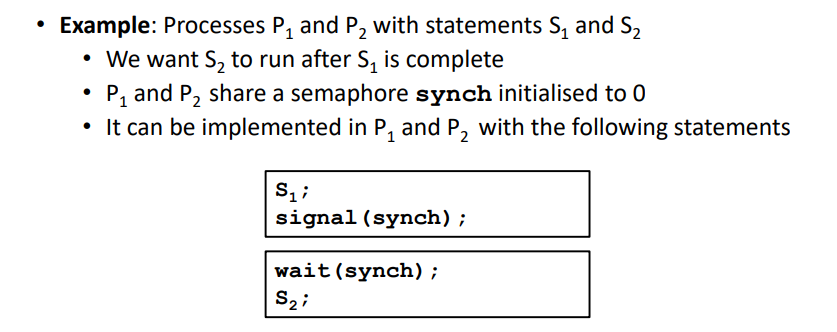

explain how this example works

* whilst s1 is executing sync is still 0

* since synch is already 0 s2 will have to stay in wait() and can only leave wait() once s1 has issued signal() to increment synch > 0

* classic definition of a semaphore

* since synch is already 0 s2 will have to stay in wait() and can only leave wait() once s1 has issued signal() to increment synch > 0

* classic definition of a semaphore

54

New cards

what is the classic definition of a semaphore?

the value of a semaphore can never be < 0

55

New cards

what do we do to avoid semaphores suffering from busy waiting like mutex locks did?

* change the definition of the semaphore

* modify the wait() and signal() methods

* semaphore can block the process when it isn’t available to free CPU time

* semaphore can wake up the process when it is available

* (process want to access the semaphores themsleves)

* modify the wait() and signal() methods

* semaphore can block the process when it isn’t available to free CPU time

* semaphore can wake up the process when it is available

* (process want to access the semaphores themsleves)

56

New cards

how does the semaphore implementation change to prevent busy waiting?

* each semaphore has a int value and a list of (waiting) processes

* a wait(), adds a process to the waiting list

* a singal(), removes a process form the waiting list to awaken it

* a wait(), adds a process to the waiting list

* a singal(), removes a process form the waiting list to awaken it

57

New cards

how does the wait() method change to prevent busy waiting?

* decrements the semaphore

* if the semaphore value is negative it means that there’s no resources available so

* places the process into a waiting queue associated with the semaphore

* executes `sleep()/ block()` to suspend the process

* switching the process state = ***waiting***

* control is passed to CPU scheduler which selects another process to execute

* if the semaphore value is negative it means that there’s no resources available so

* places the process into a waiting queue associated with the semaphore

* executes `sleep()/ block()` to suspend the process

* switching the process state = ***waiting***

* control is passed to CPU scheduler which selects another process to execute

58

New cards

how does the signal() method change to prevent busy waiting?

* semaphore value is incremented

* if semaphore is ≤ 0 means that a resource is available and a process can be selected for execution

* process removed from semaphore list

* process is restarted using the `wakeup()`

* process state changes from ***waiting → ready***

* process is placed in the ready queue

* depending on the CPU scheduling algorithm the cpu may or may not be switched from currently running process to the new, ready process

* if semaphore is ≤ 0 means that a resource is available and a process can be selected for execution

* process removed from semaphore list

* process is restarted using the `wakeup()`

* process state changes from ***waiting → ready***

* process is placed in the ready queue

* depending on the CPU scheduling algorithm the cpu may or may not be switched from currently running process to the new, ready process

59

New cards

is the semaphore used to prevent busy waiting a classic definition of a semaphore?

* no, the value can be negative

* magnitude of semaphore = number of processes waiting on the semaphore

* magnitude of semaphore = number of processes waiting on the semaphore

60

New cards

how must wait() and signal be called()?

* executed atomically so that no 2 processes can execute wait and signal operations on the same semaphore at the same time.

* for single CPU core, use interrupts to inhibit the time that wait() and signal() operations are executed

* for multiprocessor, interrupts disabled and can use spinlocks or compareandswap() operations

* for single CPU core, use interrupts to inhibit the time that wait() and signal() operations are executed

* for multiprocessor, interrupts disabled and can use spinlocks or compareandswap() operations

61

New cards

have we eliminated the busy wait with the updated wait() and signal() method?

* no, only moved the busy wait from the entry section to the critical section, and limited busy wait to only the critical sections of the wait() and signal() operations which are short.

* since the critical sections are shot it means its almost never occupied so busy wait occurs rarely

* since the critical sections are shot it means its almost never occupied so busy wait occurs rarely

62

New cards

what are the disadvantages of semaphores with waiting queues?

can result in deadlock

63

New cards

what is deadlock?

when 2 or more processes are waiting indefinitely for an event that can be caused only by one of the waiting processes

64

New cards

what event do processes wait for in deadlock when using semaphores?

process is waiting for the other process to execute signal()

65

New cards

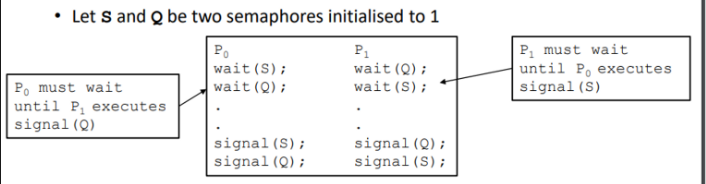

explain an example of semaphore deadlock

* P0 executes wait(S)

* P1 executes wait(Q)

* P0 must wait until P1 executes signal(Q)

* P1 must wait until P0 executes signal(S)

* P1 executes wait(Q)

* P0 must wait until P1 executes signal(Q)

* P1 must wait until P0 executes signal(S)

66

New cards

what other problems are related to deadlock?

starvation

67

New cards

what is starvation?

* indefinite blocking

* a process waits indefinitely within the semaphore and may never be removed from the semaphore queue

* a process waits indefinitely within the semaphore and may never be removed from the semaphore queue

68

New cards

how can starvation occur?

if we remove processes from the semaphore queue in LIFO, should be removed in FIFO

69

New cards

what is priority inversion?

* a scheduling problem when a lower-priority process holds a lock and edits kernel data that’s needed by a higher-priority process to modify the same data

* the higher-priority process has to wait for the lower-priority process and the lower process affects how long the higher process has to wait

* the higher-priority process has to wait for the lower-priority process and the lower process affects how long the higher process has to wait

70

New cards

what type of systems does priority inversion occur?

systems with 2 or more priorities

71

New cards

how is priority inversion solved?

priority-inheritance protocol

72

New cards

what is priority inheritance protocol?

73

New cards

what is an abstract data type ?

encapsulates data with a set of functions to operate on the data, that are independent of any specific implementation of the ADT

74

New cards

what is a monitor?

* an ADT provided by programming languages that provides convenient and effective mechanism for process synchronisation (high level abstraction)

* includes a set of programmer defined operations that have mutual exclusion (ensure only one process within the monitor can be active at a time)

* includes a set of programmer defined operations that have mutual exclusion (ensure only one process within the monitor can be active at a time)

75

New cards

how does a monitor prevent concurrency problems?

* only one process can access the monitor at a time and the internal monitor variables are only accessible by the functions within the monitor

* the programmer doesn’t need to code the synchronisation constraint explicitly unless they want to define a conditional variable to suit a specific synchronisation scheme

* the programmer doesn’t need to code the synchronisation constraint explicitly unless they want to define a conditional variable to suit a specific synchronisation scheme

76

New cards

how effective is a monitor?

not powerful enough on its own to model synchronisation schemes but can add detail e.g. if multiple threads want to access the data MySharedData, then have MySharedData as a monitor and use mutex locks to determined when a thread can access it

77

New cards

what are 2 examples of classic synchronisation problems?

* bounded buffer (producer-consumer)

* dining philosophers

* dining philosophers

78

New cards

what is the bounded buffer (producer consumer problem)?

* Producer produces information, the information is consumed by consumer

* They share a data structure (e.g. a buffer) that has a maximum size

* both processes can update the counter variable so have the ability to update counter in an uncontrolled manner

* running both processes (producer and consumer) in parallel or concurrently can increase efficiently eg. producer may populate the buffer in a faster rate than consumer consumes items.

* They share a data structure (e.g. a buffer) that has a maximum size

* both processes can update the counter variable so have the ability to update counter in an uncontrolled manner

* running both processes (producer and consumer) in parallel or concurrently can increase efficiently eg. producer may populate the buffer in a faster rate than consumer consumes items.

79

New cards

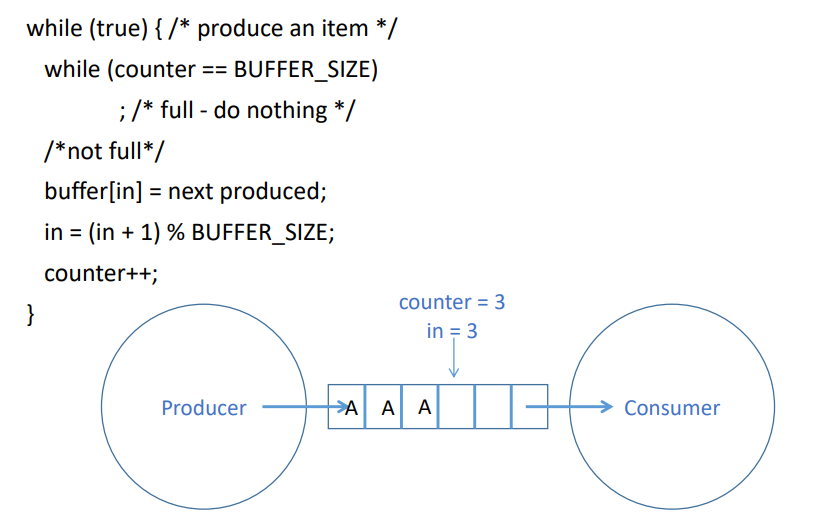

what is the code for the producer side?

80

New cards

explain the variables used in the producer side

* Buffer is a circular list with max size BUFFER_SIZE

* “in” keeps track of the position in the list

* “counter” keeps track of how many things in the list

* “in” keeps track of the position in the list

* “counter” keeps track of how many things in the list

81

New cards

how does the producer side work?

* If the buffer is full (counter = BUFFER_SIZE) then do nothing (busy waiting)

* Producer produces something (say a character) and puts it in position buffer\[in\]

* Move the pointer to the next position

* in = (in + 1) % BUFFER_SIZE;

* i.e. if in is 3 and BUFFER_SIZE is 6, the above would be 4 = (3 + 1) % 6 (convenient way of limiting in)

* Add one to the counter to keep track on how many things are in the buffer (counter++)

* Producer produces something (say a character) and puts it in position buffer\[in\]

* Move the pointer to the next position

* in = (in + 1) % BUFFER_SIZE;

* i.e. if in is 3 and BUFFER_SIZE is 6, the above would be 4 = (3 + 1) % 6 (convenient way of limiting in)

* Add one to the counter to keep track on how many things are in the buffer (counter++)

82

New cards

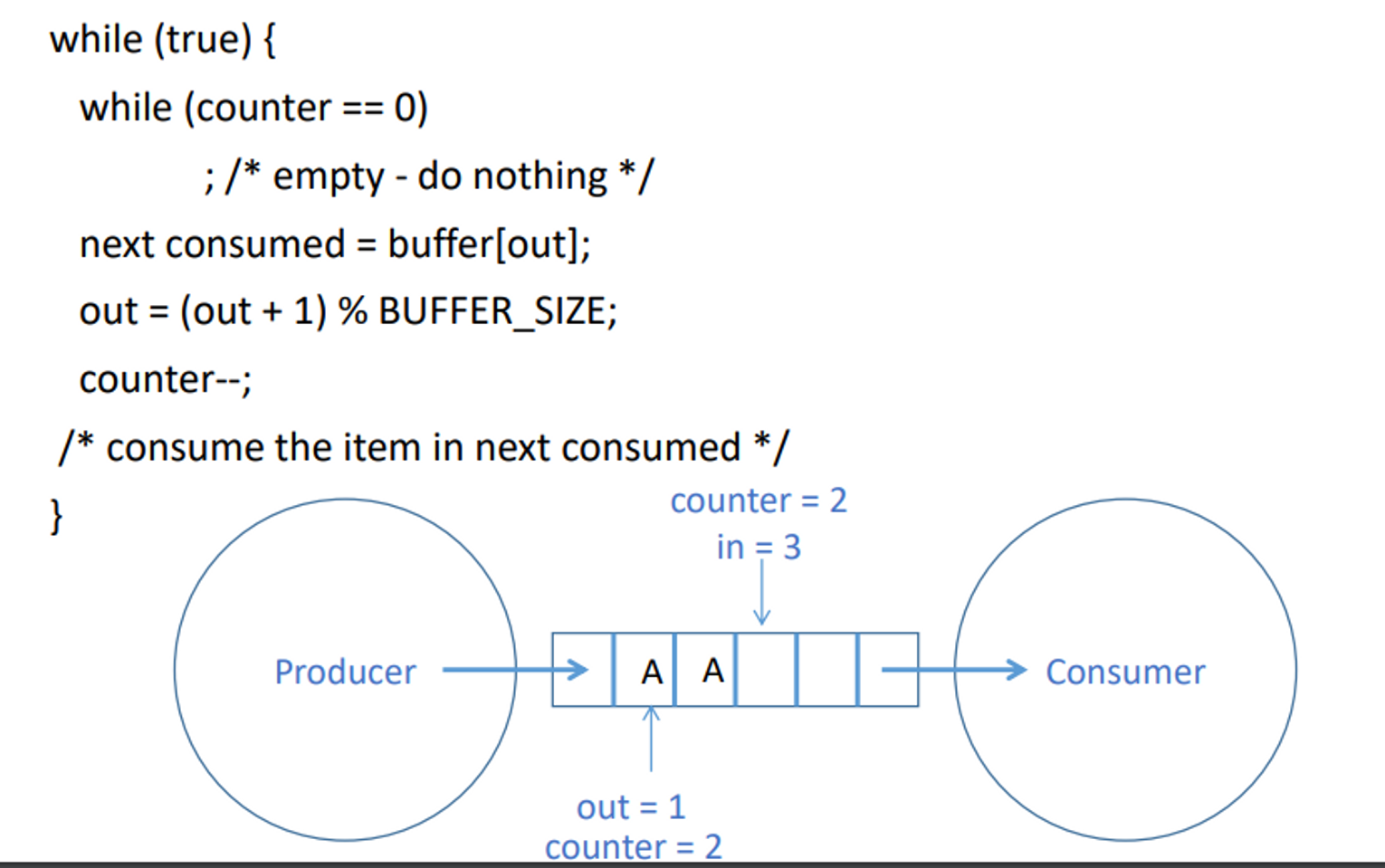

what is the code for the consumer side?

83

New cards

explain the variables used in the consumer side

* Buffer is a circular list with max size BUFFER_SIZE

* “out” keeps track of the position in the list

* “counter” keeps track of how many things in the list

* “out” keeps track of the position in the list

* “counter” keeps track of how many things in the list

84

New cards

how does the consumer side work?

* If the buffer is empty (counter = 0) then do nothing (busy waiting)

* Consumer consumes something (whatever is in the buffer) from position buffer\[out\]

* Move the pointer to the next position

* out = (out + 1) % BUFFER_SIZE;

* i.e. if out is 0 and BUFFER_SIZE is 6, the above would be 1 = (0 + 1) % 6 (convenient way of limiting out)

* Subtract one from the counter to keep track on how many things are in the buffer (counter--)

* Consumer consumes something (whatever is in the buffer) from position buffer\[out\]

* Move the pointer to the next position

* out = (out + 1) % BUFFER_SIZE;

* i.e. if out is 0 and BUFFER_SIZE is 6, the above would be 1 = (0 + 1) % 6 (convenient way of limiting out)

* Subtract one from the counter to keep track on how many things are in the buffer (counter--)

85

New cards

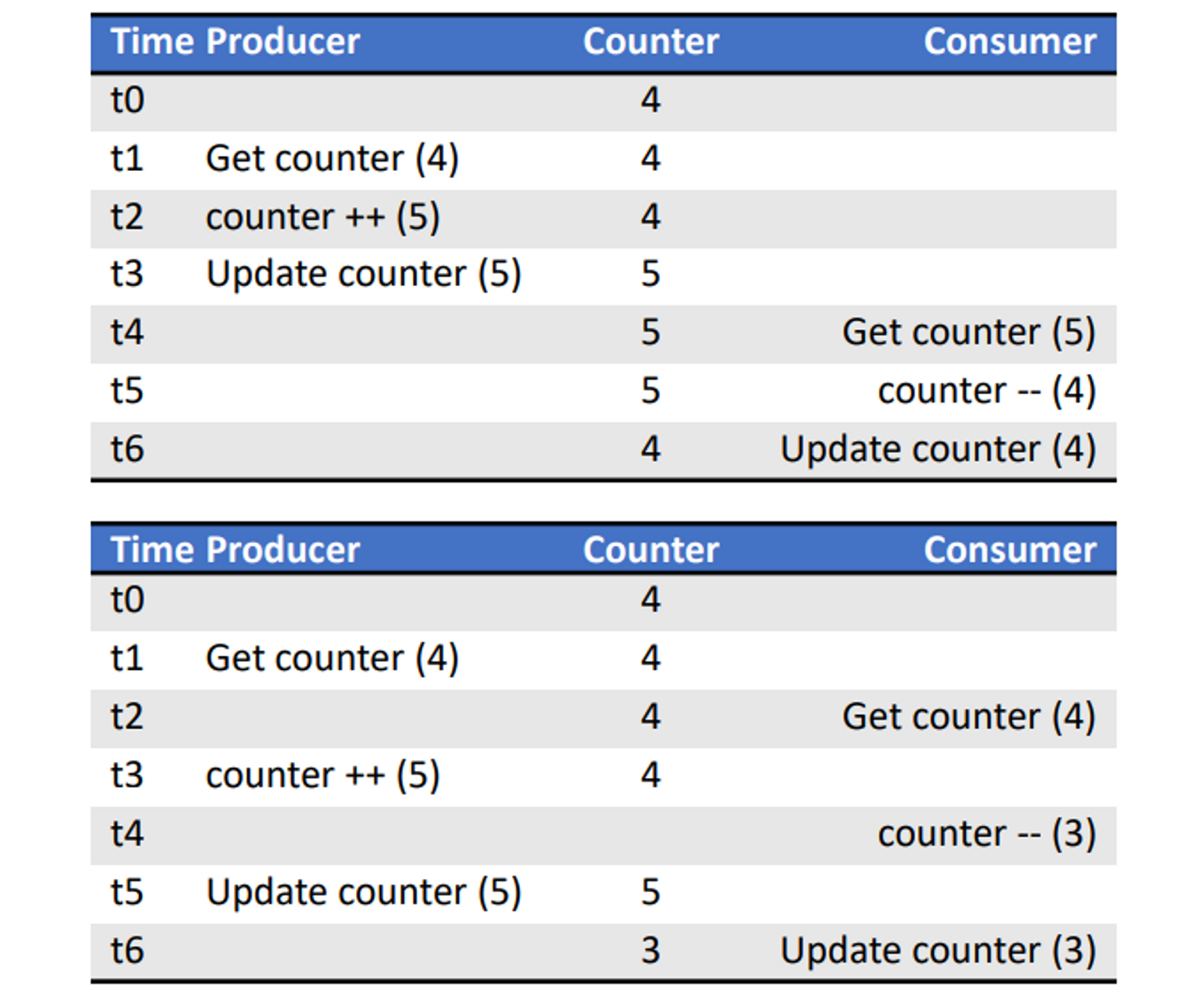

what is an example of the producer and consumer conflicting with the counter variable?

top example - producer executes fully first and then consumer executes fully, sequential

\

bottom example - producer and consumer execute concurrently and counter variable is modified incorrectly, overwritten

\

bottom example - producer and consumer execute concurrently and counter variable is modified incorrectly, overwritten

86

New cards

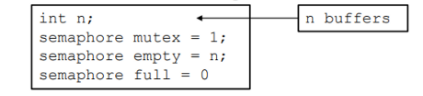

what is the solution to the bounded buffer problem?

* producer and consumer share multiple data structures

* instead of sharing a single circular list, we have a pool consisting of n buffers, each capable of holding only 1 item.

* a mutex semaphore is used to provide mutual exclusion access to the buffer pool

* 2 separate semaphores are used, one to count the number of empty buffers and one to count the number of full buffers

* instead of sharing a single circular list, we have a pool consisting of n buffers, each capable of holding only 1 item.

* a mutex semaphore is used to provide mutual exclusion access to the buffer pool

* 2 separate semaphores are used, one to count the number of empty buffers and one to count the number of full buffers

87

New cards

what are the INITIAL values of the shared data structures for the bounded buffer solution?

88

New cards

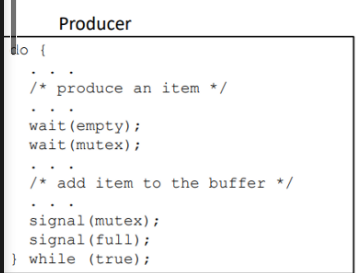

what is the producer code for the solution?

89

New cards

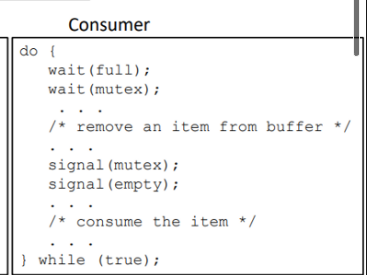

what is the consumer code for the solution?

90

New cards

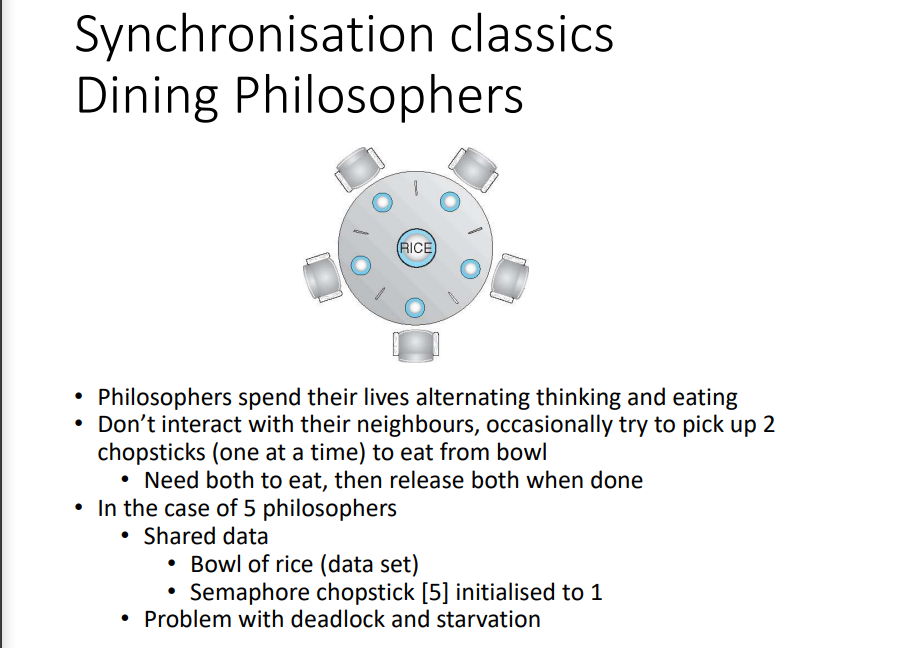

what is the dining philosophers synchronisation problem?