Option B Modelling and Simulation


Modelling

The process of creating a computer model of a system to allow for recording of relevant data and processing the data based on accurate rules to produce useful output.

- Allows us to try and forecast information

Advantages of Spreadsheets for Mathematical Modelling

Allows formulas to be created and copied for large amounts of data

Allows values to be looked up from lookup tables and data to be recorded and updated without calculations having to be redone.

Simulation

A process of varying the inputs and/or rules of a mathematical model to observe the effects.

Visualisations

Graphical representations of the outputs of computer models or simulations. Whether 2D or 3D, they can communicate complex data sets in a way that the audience understands quickly and easily, e.g. a large number of temperature and location measurements can be grouped and plotted on a map.

The process of taking a mathematical representation stored in memory and generating an image from it.

Limitations of Computer Models

Computer models are designed by humans and so are limited by the designer selecting the correct data. They are also limited by computer resources such as memory, secondary storage and processor time, e.g. a model of global climate would require huge amounts of memory to store the many variables required.

It is not possible to know all of the variables available

Processing power and storage capacity are not limitless

Models always involve assumptions

Models only represent aspects which we are aware of, understand and can quantify.

A model could have a bug leading to false results.

Human - incompleteness, choice, assumption

Grouping for Collections of Data Items

Data in a model should be grouped to allow for clarity e.g. a model of atmospheric measurements taken around the world could be grouped by the date taken and organised on one row of a model. Grouping of data could also be used to find trends in data and see the correlations.

Test Case to Evaluate a Model

Used to identify if a model is functioning correctly. It specifies the inputs and expected outputs of a model. The actual outputs of the model are then compared with the expected outputs to identify any problems with the model.

Effectiveness of Test Case

- They need to cover the full range of possible inputs to a model, including deleted and added inputs (test with normal, extreme and erroneous data).

- Check the model fully, not partially.
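A test case of the kind described above can be sketched in Python. This is only an illustration: the `ticket_price` model, its age bands and its prices are hypothetical and not part of the original notes. Each test case pairs an input with an expected output, and the actual output is compared against it using normal, extreme (boundary) and erroneous data.

```python
def ticket_price(age):
    """Hypothetical model rule: the price depends on the age band."""
    if not isinstance(age, int) or age < 0:
        raise ValueError("age must be a non-negative integer")
    if age < 12:
        return 5.0
    if age >= 65:
        return 7.0
    return 10.0


# Each test case pairs an input with the expected output.
test_cases = [
    (30, 10.0),  # normal data
    (11, 5.0),   # extreme data: just below the child/adult boundary
    (12, 10.0),  # extreme data: exactly on the boundary
    (65, 7.0),   # extreme data: first senior age
]


def run_tests(model, cases):
    """Return every case whose actual output differs from the expected output."""
    failures = []
    for given, expected in cases:
        actual = model(given)
        if actual != expected:
            failures.append((given, expected, actual))
    return failures


failures = run_tests(ticket_price, test_cases)

# Erroneous data: the model should reject it rather than return a price.
try:
    ticket_price(-3)
    rejected_bad_input = False
except ValueError:
    rejected_bad_input = True

print("failures:", failures, "| erroneous input rejected:", rejected_bad_input)
```

An empty `failures` list means every actual output matched its expected output.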

Discuss the Correctness of a Model

How well the model reproduces actual data observed in the real world system. The more accurately outputs of the model reflect the actual observed phenomena, the more correct it is.

Abstraction for Modelling

The process of modelling involves creating abstractions of other systems that focus on and include the relevant details of a system such as inputs, outputs and rules but do not include every detail about the system.

Rules that Process Data

- mathematical formulae

- pseudocode

- algorithms

- tables of inputs and output values

- detailed English description

Difference between a Model and a Simulation

A model represents a real life system using mathematical formulas and algorithms.

Meanwhile, a simulation uses models to study the system by inserting different inputs and varying the rules and analysing the outputs.
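The distinction can be illustrated with a minimal Python sketch. The compound-growth rule and all names and figures here (`population_model`, `simulate`, the starting population of 1000) are assumptions chosen for illustration. The model is the rule itself; the simulation runs that rule repeatedly while varying one input and compares the outputs.

```python
def population_model(initial, growth_rate, years):
    """Model: a rule (compound growth) that turns inputs into an output."""
    return initial * (1 + growth_rate) ** years


def simulate(growth_rates, initial=1000, years=10):
    """Simulation: run the model repeatedly while varying one input."""
    return {rate: round(population_model(initial, rate, years))
            for rate in growth_rates}


results = simulate([0.00, 0.01, 0.02])
for rate, population in results.items():
    print(f"growth {rate:.0%}: population after 10 years = {population}")
```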

Software and Hardware Needed for a Simulation

It depends on the simulation. For example, a flight simulator needs:

Software required:

Image processing software, 3D rendering software

Hardware required:

Powerful CPU / Graphic Processing Unit, large amount of memory

Genetic Algorithms

A method for solving both constrained and unconstrained optimization problems based on a natural selection process that mimics biological evolution. The algorithm repeatedly modifies a population of individual solutions.

[Sample IB Response]

An initial population set is chosen randomly/pseudo randomly;

A fitness function is applied to each member of the population;

The fittest members are selected for the next stage;

Genetic operators are applied such as crossover / mutation;

The process is repeated until an acceptable level of fitness is found / a plateau is reached / a maximum number of iterations has been reached;
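The steps above can be sketched on a toy problem. This is a minimal illustration, not a reference implementation: the problem (evolving a list of bits until every bit is 1), the population size, the mutation rate and the use of elitism are all assumptions made for the example.

```python
import random

GENOME_LENGTH = 20


def fitness(individual):
    """Fitness function: the number of 1 bits in the individual."""
    return sum(individual)


def crossover(parent_a, parent_b, rng):
    """Single-point crossover: head of one parent, tail of the other."""
    point = rng.randrange(1, GENOME_LENGTH)
    return parent_a[:point] + parent_b[point:]


def mutate(individual, rng, rate=0.05):
    """Flip each bit with a small probability."""
    return [1 - bit if rng.random() < rate else bit for bit in individual]


def evolve(population_size=30, max_generations=200, seed=0):
    rng = random.Random(seed)
    # Step 1: choose an initial population pseudo-randomly.
    population = [[rng.randint(0, 1) for _ in range(GENOME_LENGTH)]
                  for _ in range(population_size)]
    for generation in range(max_generations):
        # Step 2: apply the fitness function to every member and rank them.
        population.sort(key=fitness, reverse=True)
        # Step 5: stop once an acceptable level of fitness is found.
        if fitness(population[0]) == GENOME_LENGTH:
            break
        # Step 3: select the fittest half as parents.
        parents = population[:population_size // 2]
        # Step 4: apply crossover and mutation; keep the best member unchanged.
        children = [population[0]]
        while len(children) < population_size:
            parent_a, parent_b = rng.sample(parents, 2)
            children.append(mutate(crossover(parent_a, parent_b, rng), rng))
        population = children
    return max(population, key=fitness), generation


best, generations_used = evolve()
print("best fitness:", fitness(best), "after generation", generations_used)
```

The loop also stops when `max_generations` is reached, which corresponds to the iteration limit in the last step.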

Advantages and Disadvantages of Simulations

Advantages:

- Dangerous situations can be simulated safely

- Expensive disasters can be simulated relatively cheaply

- Very rare occurrences can be simulated frequently

Disadvantages:

- May not be realistic enough for some purposes

Visualisation

Producing graphs or animations based on computer data, to help understand and analyse it.

Uses of 2D Visualisation

Block diagram to visualise amounts of money by making sizes relative to the amount of money.

MRI (2D visualisation)

How often words appear in a text

Used to represent data in a clearer and more easily understandable format.

Memory Needs of 2D Visualisation

For simple static graphics, memory requirements are similar to those of a graphic image. Interactive 2D visualisations involve several images and code, which occupy more space.

3D Visualisation Uses

- Climate change modelling

- Modelling the dispersion of gases

- Traffic

- Human population

Wire Framing

A visual representation of a 3D object in computer graphics in which only vertices and lines define the shape of the object.

Ray Tracing

A technique in computer graphics for generating an image by tracing the path of light through pixels in an image plane and simulating the effects of its encounters with virtual objects.
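One small part of this technique, testing whether a single ray meets a virtual object, can be sketched as follows. The scene is an assumption made for illustration (a camera at the origin and one sphere), and a full ray tracer would go on to compute colour, shadows and reflections at the hit point.

```python
import math


def ray_hits_sphere(origin, direction, center, radius):
    """Return the distance along the ray to the nearest hit, or None on a miss.

    Solves |origin + t * direction - center|^2 = radius^2 for t.
    Simplification: only the nearer intersection is considered, so a ray
    that starts inside the sphere is reported as a miss.
    """
    oc = [o - c for o, c in zip(origin, center)]
    a = sum(d * d for d in direction)
    b = 2.0 * sum(d * o for d, o in zip(direction, oc))
    c = sum(o * o for o in oc) - radius * radius
    discriminant = b * b - 4 * a * c
    if discriminant < 0:
        return None  # the ray misses the sphere entirely
    t = (-b - math.sqrt(discriminant)) / (2 * a)
    return t if t > 0 else None


camera = (0.0, 0.0, 0.0)
sphere_center, sphere_radius = (0.0, 0.0, 3.0), 1.0

# One ray per pixel of a tiny 5x5 image plane at z = 1.
for row in range(5):
    line = ""
    for col in range(5):
        direction = ((col - 2) * 0.2, (2 - row) * 0.2, 1.0)
        hit = ray_hits_sphere(camera, direction, sphere_center, sphere_radius)
        line += "#" if hit is not None else "."
    print(line)
```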

Lighting

Plays an important role in how we perceive 3D objects. When rendering a 3D model, the location, intensity and number of light sources are modelled to calculate the brightness of each pixel in a rendered shape.

Key Frames

In animation and filmmaking, it is a drawing which defines the starting and ending points of any smooth transition.
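Software can generate the frames between two key frames automatically. The sketch below assumes the simplest approach, linear interpolation of a 2D position; the function names and coordinates are illustrative only.

```python
def interpolate(start, end, t):
    """Position a fraction t (0.0 to 1.0) of the way from start to end."""
    return tuple(s + (e - s) * t for s, e in zip(start, end))


def in_between_frames(start, end, frame_count):
    """Return frame_count positions, including both key frames."""
    return [interpolate(start, end, i / (frame_count - 1))
            for i in range(frame_count)]


# Two key frames: the object starts at (0, 0) and ends at (10, 4).
frames = in_between_frames((0.0, 0.0), (10.0, 4.0), 5)
for number, position in enumerate(frames):
    print(f"frame {number}: {position}")
```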

Texture Mapping

Wrapping a texture map around a 3D object in order to make it appear more realistic

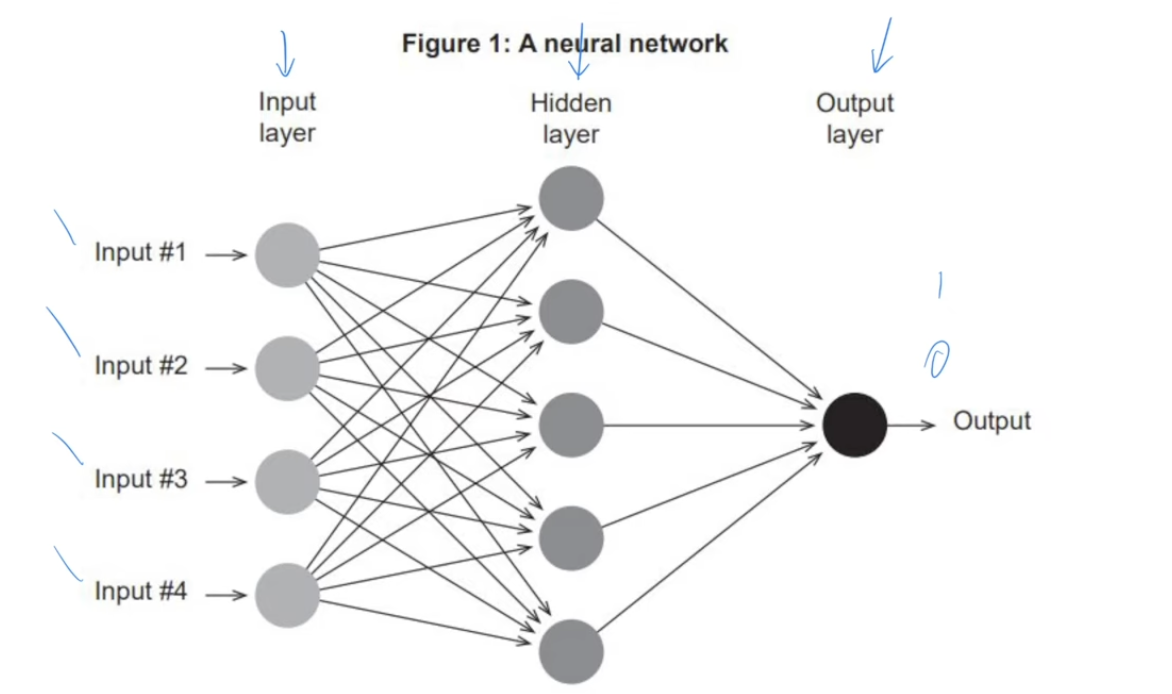

Structure of Neural networks

Have 3 different layers:

Input Layers - Responsible for accepting data, either for training purposes or to make a prediction

Hidden Layers - Responsible for deciding what the output is for a given input; where the “training” occurs

Output Layers - Outputs the final prediction

Speech Recognition

The process of turning digitised audio of spoken words into text.

Optical Character Recognition (OCR)

The mechanical or electronic conversion of images of typed, handwritten or printed text into machine-encoded text. This usually involves supervised learning, in which the system is trained on labelled images of characters.

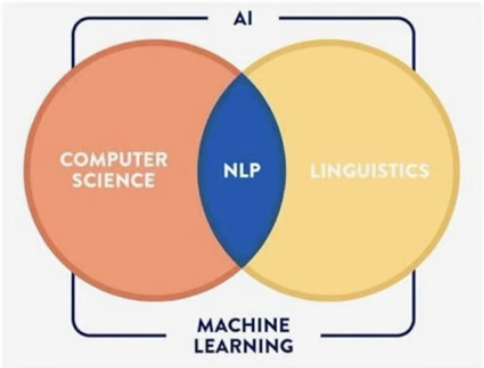

Natural Language Processing

A field of computer science, artificial intelligence, and computational linguistics, that enables computers to understand, interpret, and generate human language.

It combines computational linguistics (rule-based modelling of human language) with statistical, machine learning and deep learning models.

It is used to automate and enhance the processing of vast amounts of language data for various applications, such as translation services, sentiment analysis, customer service automation, and information extraction

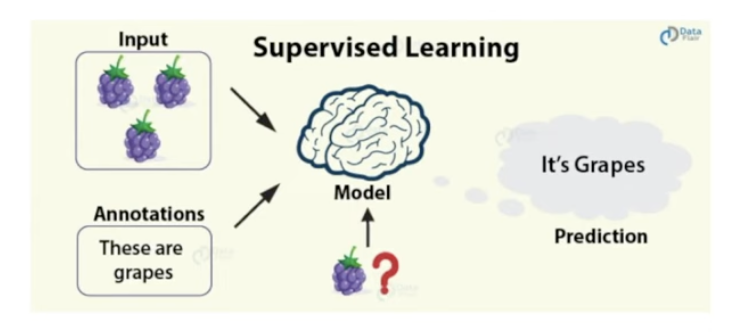

Supervised Learning

Type of machine learning in which the desired output is known. Therefore the output will be checked against the predetermined output and adjusted accordingly to analyse the next inputs better.

[IB Response]

When the outcome related to a given input is already known

The neural network (“learner”) can recognize objects and name them based on labels already given and “learned”

![image](https://knowt-user-attachments.s3.amazonaws.com/95c28aff-eeb2-469d-8424-4f92f18fbdba.png)

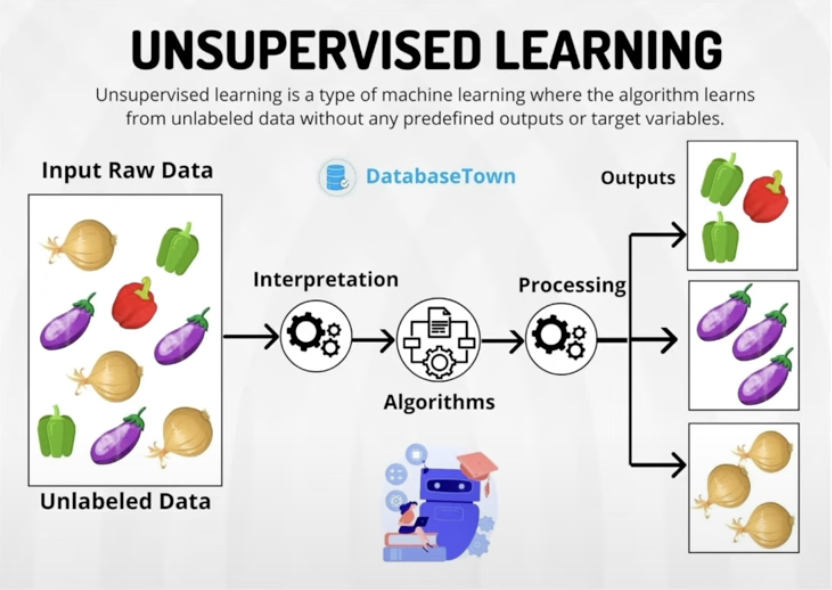

Unsupervised Learning

Type of machine learning in which there is no predetermined output. Seeks to identify unknown patterns within sets of data.

[IB RESPONSE]

No examples of desired outcome are given with input data to help with learning

The “learner” (machine learning algorithm) must deduce its own solution through classification

Key Structures of Natural Language

- noun

- verb

- syntax

- semantics

Noun

A word that names a person, place, thing or idea; often the subject or object of a sentence

Verb

The action word in a sentence

Syntax

The rules which define how a language is structured

Semantics

The study of the meaning of language

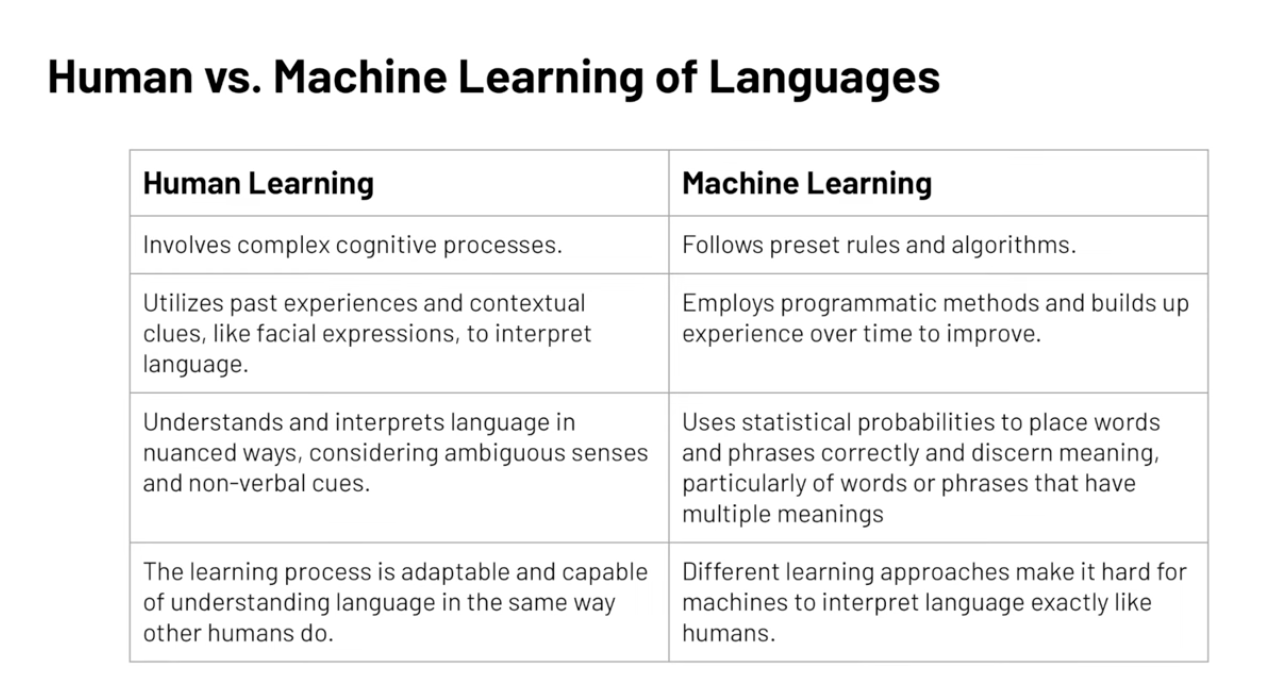

Difference between Human and Machine Learning

People learn through experience that subjectively relates words to past experience to give words their meanings. This is cognitive learning.

Machines must be programmed with algorithms that can develop into solutions to problems, e.g. genetic algorithms and neural networks. The vast amount of data now available via the World Wide Web allows machine learning algorithms to statistically analyse huge amounts of data to develop complex probabilistic models and suggest the most likely solutions.

Cognitive Learning

The acquisition of mental information, whether by observing events, by watching others, or through language

Heuristics

Allowing someone to discover something for themselves.

Probabilities

Some natural language processing software uses the probabilities of certain word combinations to try to process and interpret natural language sentences.
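One simple way to estimate such probabilities is to count which word follows which in example text (a "bigram" model). The sketch below is an assumption-laden illustration: the three-sentence corpus and the function names are made up, and real systems use far larger data sets and more sophisticated models.

```python
from collections import Counter, defaultdict


def train_bigrams(sentences):
    """Count how often each word is followed by each other word."""
    following = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.lower().split()
        for current, next_word in zip(words, words[1:]):
            following[current][next_word] += 1
    return following


def next_word_probability(following, current, candidate):
    """Estimate P(candidate | current) from the counts."""
    total = sum(following[current].values())
    return following[current][candidate] / total if total else 0.0


corpus = [
    "the cat sat on the mat",
    "the cat ate the fish",
    "the dog sat on the rug",
]
model = train_bigrams(corpus)
p_cat = next_word_probability(model, "the", "cat")
print(f"P(cat | the) = {p_cat:.2f}")
```

Here "the" is followed by another word six times in the corpus, and twice that word is "cat", so the estimate is 2/6.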

Evolution of Modern Machine Translators

Early machine translators would only translate individual words in a dictionary.

Next, they would analyse grammar and dependencies.

Now, they are able to translate whole phrases using probabilities and computer learning to develop models of commonly used phrases.

Role of Chatbots

Algorithms that are available for people to "chat" with online. Because they are open for anyone to "chat" to, the algorithms can be tested a large number of times by many different people and can collect large amounts of data on actual human natural language. This data can then be analysed to identify common structures and phrases in use in natural language.

Latest Advances in Natural Language Processing

Algorithms that can interpret and action spoken commands, such as Apple's Siri on its smartphones and tablets. Also used to "mine" social media texts to gather data on health and finances, and to identify sentiment and emotion toward products and services (to be used for marketing purposes).

Difficulties in Machine Language Translation

Although words can be recognised they could have many meanings.

Natural language does have syntax (rules) but they are not strictly applied e.g. the same words can be nouns or verbs.

Words in the same language can have very different meanings based on location e.g. fanny, fag etc.

Evaluate the advantages and disadvantages to society of the rapid and sophisticated analysis of information on social networks.

Important developments and views get published quickly; Such as in dangerous situations (war, earthquake etc);

A lot of the information is very subjective or politically undesirable; Sources easily traced can lead people into dangerous situations...;

Overall international boundaries could be opened leading to a safer world; But it depends on who uses the information...;

Distinguish between the structures of natural language that can be learnt by robots and those that cannot.

A robot can be programmed to apply rules/syntax to natural language;

A robot can be programmed to recognise certain vocabulary;

A robot would not be able to work out repetition of the same word with different meanings;

Discuss the differences between human and machine learning in relation to natural language processing.

Human cognitive learning/complex thought process include past experience of the ways in which words are used / ambient senses / context such as facial expressions when words are used etc;

Machine learning involves following preset rules combined with heuristics - building up experience over time and using probabilities to place words/phrases correctly.

These different approaches to learning make it difficult for machines to use/interpret language in the same way as humans;

Research into cognitive learning/human thinking could mean that (given time), machines could be taught natural language;

Neural Networks

Simplified version of the human brain. They are designed to allow us to identify categories or outcomes, make sense of different types of information, or recognize patterns in data. These networks improve by learning from tasks or examples over time.

Sigmoid

Part of a Hidden Layer. Outputs between 0 and 1, useful for probabilities

ReLU

Part of a Hidden Layer. Passes positive values through unchanged and outputs zero for negative values, which is cheap to compute
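The two activation functions above can be written in a few lines. This is a minimal sketch using only the standard library; neural network libraries provide their own vectorised versions.

```python
import math


def sigmoid(x):
    """Squash any real number into the range 0 to 1.

    Note: math.exp overflows for very large negative x, so this simple
    form is only suitable for moderately sized inputs.
    """
    return 1.0 / (1.0 + math.exp(-x))


def relu(x):
    """Rectified Linear Unit: zero for negative inputs, unchanged otherwise."""
    return max(0.0, x)


for value in (-5.0, 0.0, 5.0):
    print(f"x = {value:+.1f}  sigmoid = {sigmoid(value):.3f}  relu = {relu(value):.1f}")
```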

Weights

Part of a Hidden Layer. Parameters that adjust the strength of input signals between neurons in different layers of a neural network. These are critical for learning because they change during training to improve the network’s predictions
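The effect of weights can be seen in a single artificial neuron. In this sketch, the neuron multiplies each input by its weight, adds the results and applies a sigmoid. The `bias` term and all the numbers are assumptions added for illustration.

```python
import math


def neuron_output(inputs, weights, bias):
    """Weighted sum of the inputs plus a bias, passed through a sigmoid."""
    total = sum(i * w for i, w in zip(inputs, weights)) + bias
    return 1.0 / (1.0 + math.exp(-total))


inputs = [1.0, 0.5]

# The same inputs give a different output once a weight has been adjusted,
# which is what training does to improve predictions.
before = neuron_output(inputs, weights=[0.2, -0.4], bias=0.0)
after = neuron_output(inputs, weights=[0.8, -0.4], bias=0.0)
print(f"before: {before:.3f}  after: {after:.3f}")
```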

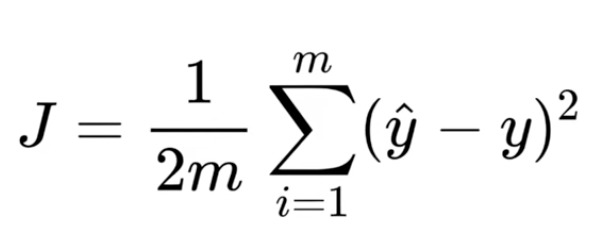

Cost Function (Fitness Function)

Measures the difference between a neural network’s predictions and the actual target data. A guide for the network’s learning process, with the aim to minimize this function across training iterations. Lower values of this function indicate a more accurate model and better performance on the given task
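One common cost function is the mean squared error. The notes do not name a specific function, so its use here is an assumption. The sketch compares two sets of predictions against the same targets.

```python
def mean_squared_error(predictions, targets):
    """Average of the squared differences between predictions and targets."""
    if len(predictions) != len(targets):
        raise ValueError("predictions and targets must have the same length")
    return sum((p - t) ** 2 for p, t in zip(predictions, targets)) / len(targets)


targets = [1.0, 0.0, 1.0]
poor = mean_squared_error([0.5, 0.5, 0.5], targets)   # every guess is off by 0.5
good = mean_squared_error([1.0, 0.0, 1.0], targets)   # every guess is exact
print("poor model cost:", poor, "| good model cost:", good)
```

The lower value belongs to the more accurate set of predictions.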

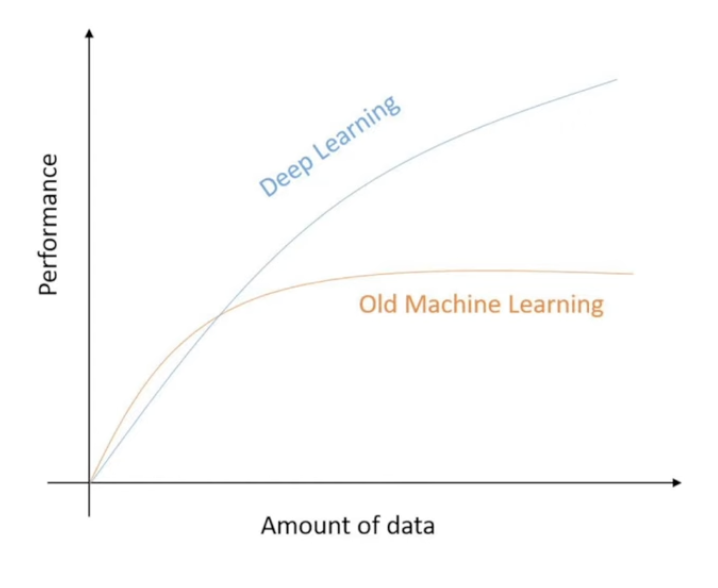

Performance Factors of Neural Networks

Increase the number of inputs

Increase the number of hidden layers

Applications of Neural Networks

Image Recognition

Optical Character Recognition (OCR)

Predictive Text

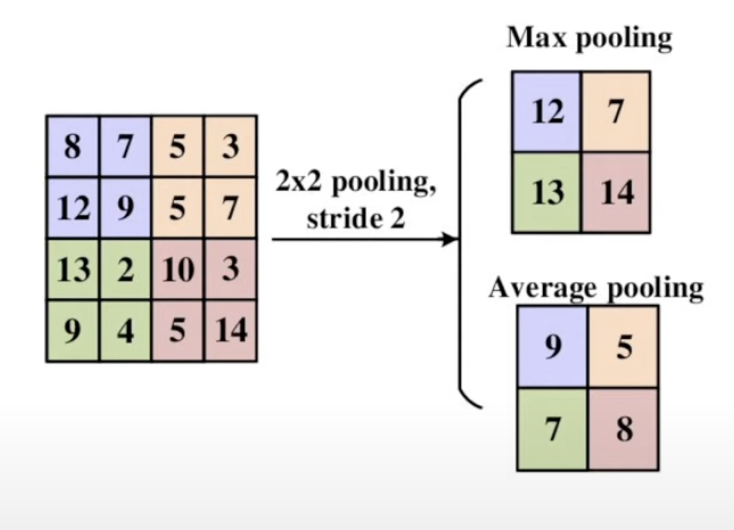

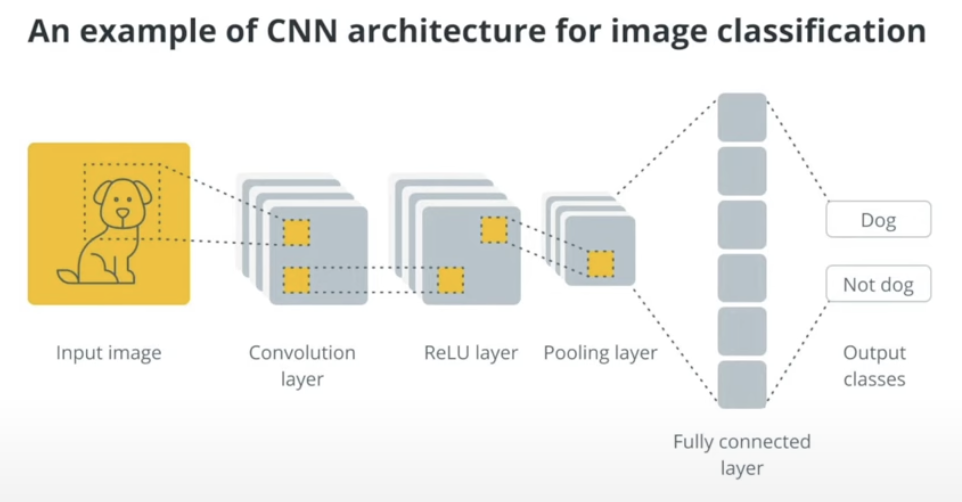

Pooling Layers

Used in neural networks to reduce the dimensions of the data they process. They work by summarizing the features detected in the input data, typically in an image or a feature map created from an image. Result represents an image using less data, decreasing the computation load
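A minimal sketch of one common form, 2x2 max pooling, is shown below. The feature map values are made up, and other pooling types (such as average pooling) summarise each block differently.

```python
def max_pool_2x2(feature_map):
    """Keep the largest value from each non-overlapping 2x2 block.

    Any leftover odd row or column at the edge is dropped.
    """
    rows, cols = len(feature_map), len(feature_map[0])
    pooled = []
    for r in range(0, rows - 1, 2):
        pooled_row = []
        for c in range(0, cols - 1, 2):
            block = [feature_map[r][c], feature_map[r][c + 1],
                     feature_map[r + 1][c], feature_map[r + 1][c + 1]]
            pooled_row.append(max(block))
        pooled.append(pooled_row)
    return pooled


feature_map = [
    [1, 3, 2, 0],
    [4, 6, 1, 1],
    [0, 2, 9, 5],
    [1, 1, 3, 7],
]
pooled = max_pool_2x2(feature_map)
print(pooled)  # [[6, 2], [2, 9]]
```

The 4x4 input (16 values) becomes a 2x2 output (4 values), which is the reduction in data described above.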

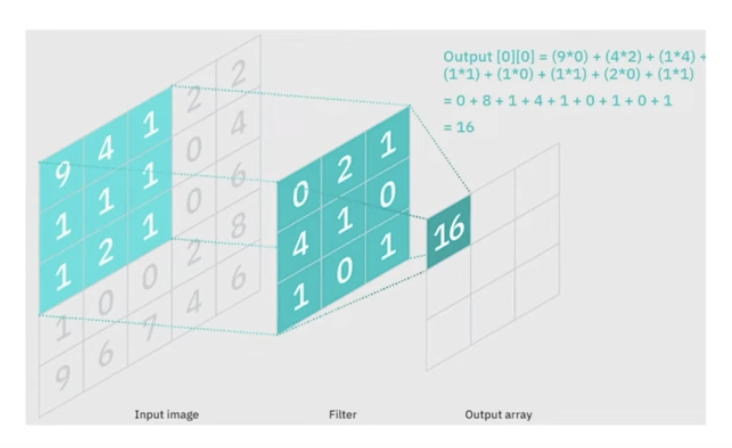

Convolution

A mathematical operation used mainly in Convolutional Neural Networks (CNNs) for image processing. It involves using a small matrix of numbers (kernel or filter), that moves across an image to analyze specific areas.
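The sliding-kernel operation can be sketched in plain Python. The image and the vertical-edge kernel below are illustrative assumptions. As in most CNN libraries, the kernel is applied without flipping it, which is strictly a cross-correlation.

```python
def convolve2d(image, kernel):
    """Slide a square kernel over the image with no padding.

    Each output value is the sum of the element-wise products of the kernel
    and the image patch beneath it.
    """
    k = len(kernel)
    out_rows = len(image) - k + 1
    out_cols = len(image[0]) - k + 1
    output = []
    for r in range(out_rows):
        row = []
        for c in range(out_cols):
            total = sum(image[r + i][c + j] * kernel[i][j]
                        for i in range(k) for j in range(k))
            row.append(total)
        output.append(row)
    return output


# A dark region (0) on the left and a bright region (1) on the right.
image = [
    [0, 0, 0, 1, 1],
    [0, 0, 0, 1, 1],
    [0, 0, 0, 1, 1],
    [0, 0, 0, 1, 1],
]
# Kernel that responds where brightness increases from left to right.
vertical_edge = [
    [-1, 0, 1],
    [-1, 0, 1],
    [-1, 0, 1],
]
feature_map = convolve2d(image, vertical_edge)
print(feature_map)  # [[0, 3, 3], [0, 3, 3]]
```

The output is zero over the uniform dark area and positive where the kernel overlaps the dark-to-bright edge.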

Why use convolution?

Feature detection: helps the neural network detect important features in the text, i.e. the shape of letters and numbers. This is crucial because different fonts and handwriting can vary significantly, and picking out these details helps the system recognize the characters accurately.

Robustness: The OCR system becomes more robust to variations in size, orientation, and distortion of the text by focusing on features rather than the whole image. This means it can still recognize text effectively even if the image quality is not perfect.

Efficiency: Reduces the amount of information the network needs to process by extracting only the important features from the image, making the network faster and less computationally expensive to run.

Convolution Layers

Often used in the OCR/image recognition process. Consists of a layer of “neurons” that don’t take part in the learning process, but simply perform convolutions

Network Architecture: Diagram

An example of CNN architecture for image classification

Feedforward

Data passing forward through a network, from input to output. In a CNN, the data passes through a convolution layer, a ReLU activation layer and pooling operations.

Learning Rate

A hyperparameter that controls how much the weights of a neural network are adjusted at each training step

Hyperparameters

Mathematical variables that control the operation of a neural network and might need to be changed to optimize its operation and accuracy.

The Process of Supervised Learning

[IB Response]

Uses a (training) set of input data and known response values to compare the model’s output with actual values from the past which will give an error in the estimate;

The model is then changed to minimize this error;

This process is iterated until a sufficiently accurate model is produced, which can then be used to make predictions/classifications;
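The process above can be sketched with the simplest possible model, a single weight in `prediction = weight * input`. The training data, the learning rate, the error tolerance and the use of gradient descent are all assumptions chosen for this illustration.

```python
def train(inputs, targets, learning_rate=0.01, max_epochs=1000, tolerance=1e-6):
    """Fit prediction = weight * x by repeatedly reducing the mean squared error."""
    weight = 0.0
    for epoch in range(max_epochs):
        # Compare the model's output with the known response values.
        predictions = [weight * x for x in inputs]
        error = sum((p - t) ** 2 for p, t in zip(predictions, targets)) / len(inputs)
        # Stop once the model is sufficiently accurate.
        if error < tolerance:
            break
        # Change the model in the direction that reduces the error.
        gradient = sum(2 * (p - t) * x
                       for p, t, x in zip(predictions, targets, inputs)) / len(inputs)
        weight -= learning_rate * gradient
    return weight, error


# Training set: inputs with known (labelled) outputs that follow y = 2x.
inputs = [1.0, 2.0, 3.0, 4.0]
targets = [2.0, 4.0, 6.0, 8.0]

weight, error = train(inputs, targets)
print(f"learned weight: {weight:.3f}, final error: {error:.2e}")
print(f"prediction for input 5: {weight * 5:.2f}")
```

`learning_rate` and `max_epochs` are examples of hyperparameters: they are set before training rather than learned from the data.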

Advantages and Disadvantages of Supervised Learning

Advantages:

High Accuracy: Models can achieve high levels of accuracy if trained on sufficient and well-labeled data

Easier to understand and interpret: Models, especially simpler ones like linear regressions, are often more interpretable, making them easier to troubleshoot and understand

Clear Objectives: The objectives are clearly defined, and the model is trained to achieve specific tasks based on labeled examples

Disadvantages:

Requires labeled data: Needs a large amount of labeled data, which can be costly and time-consuming to prepare

Limited to known problems: Can only be applied to problems where the output categories or results are known beforehand

Sensitivity to data quality: The performance heavily depends on the quality of the data; poor data can lead to poor model performance

Advantages and Disadvantages of Unsupervised Learning

Advantages:

Discovering hidden patterns: Excels at identifying hidden structures and patterns in data without preexisting labels

No need for labeled data: Does not require labeled data, making it suitable for situations where data labeling is impractical or impossible

Flexible and exploratory: Allows for exploratory data analysis to find unforeseen relationships without the need for predefined hypotheses or models

Disadvantages:

Less accurate predictions: Without labeled outcomes to guide the learning process, predictions and interpretations can be less accurate than in supervised learning

More complex to understand and interpret: The models and their results are often more difficult to interpret, as there’s no straightforward metric like prediction accuracy for evaluation

Lack of objective evaluation criteria: Without predefined labels, it’s challenging to objectively evaluate the model’s performance or the significance of the findings

3 Main Types of Machine Learning Algorithms

Supervised

Unsupervised

Reinforcement

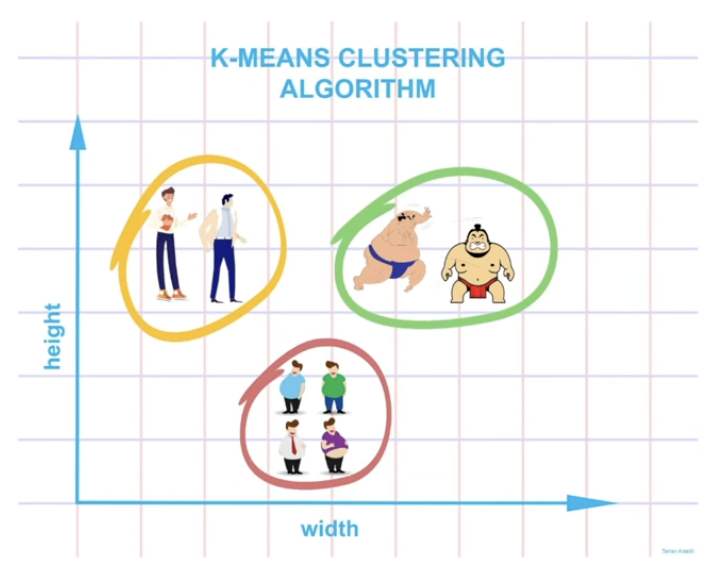

Cluster Analysis

A technique that uses unsupervised learning to group sets of objects so that objects in the same group (called a cluster) are more similar to each other than to those in other groups.

The primary goal is to discover natural groupings within the data, to identify patterns and structures that may not be immediately apparent
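One widely used clustering technique is k-means. The notes do not name a specific algorithm, so its use here is an assumption. The sketch below groups one-dimensional values around two starting centroids that were picked arbitrarily for the example.

```python
def k_means_1d(values, centroids, max_iterations=100):
    """Group values around the nearest centroid, then move each centroid
    to the mean of its group, repeating until nothing changes."""
    centroids = list(centroids)
    clusters = [[] for _ in centroids]
    for _ in range(max_iterations):
        clusters = [[] for _ in centroids]
        for value in values:
            nearest = min(range(len(centroids)),
                          key=lambda i: abs(value - centroids[i]))
            clusters[nearest].append(value)
        # An empty cluster keeps its previous centroid.
        new_centroids = [sum(c) / len(c) if c else centroids[i]
                         for i, c in enumerate(clusters)]
        if new_centroids == centroids:
            break
        centroids = new_centroids
    return clusters, centroids


# No labels are supplied: the two groups emerge from the data alone.
values = [1.0, 1.5, 2.0, 9.0, 10.0, 11.0]
clusters, centroids = k_means_1d(values, centroids=[0.0, 5.0])
print("clusters:", clusters)
print("centroids:", centroids)
```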