Lecture 12: Olfaction and Taste and Multisensory Perception


Smell is the only sense

that doesn’t go through the thalamus

When we sleep, the thalamus (a major control hub) shuts down the relay of our other senses

Therefore, when we sleep our sense of smell still works

Odours

Olfactory sensations

Chemical compounds that are

Volatile

Evaporates easily at normal temperatures.

Small

Hydrophobic

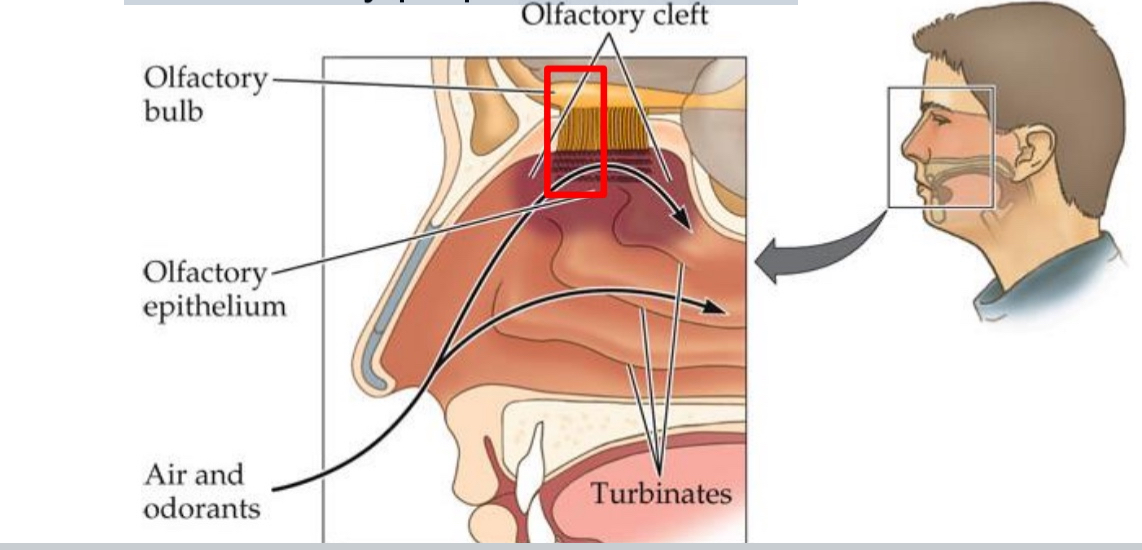

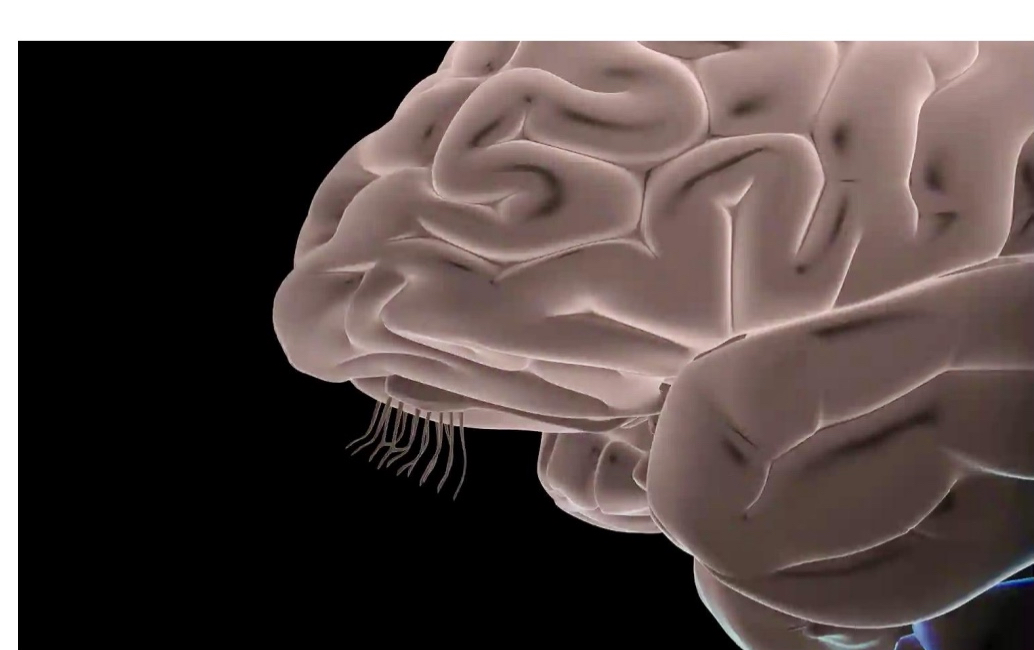

The human olfactory apparatus

Nose: Small ridges, nostrils (nasal cycle)

The nose's primary purpose is breathing; smelling comes second

The nostrils usually differ in size (airflow), and this changes throughout the day (nasal cycle) → each nostril is better at picking up different chemicals

Sniffing

olfactory cleft, olfactory epithelium

Secondary purpose of the nose

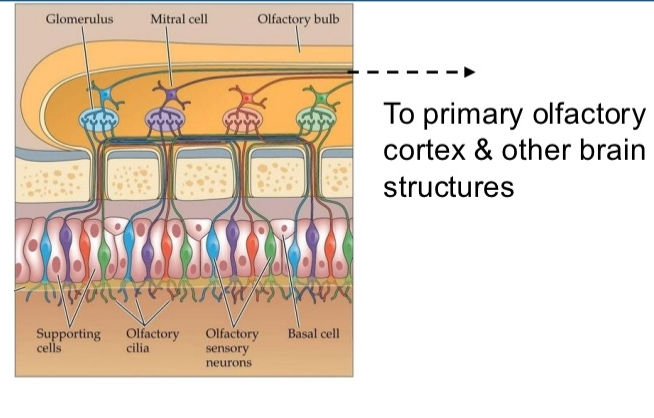

The olfactory epithelium

the “retina” of the nose contains 3 types of cells:

Supporting cells

Basal cells (precursors to…)

Olfactory sensory neurons (OSNs)—cilia protruding into mucus covering olfactory epithelium

Olfactory receptors (ORs): Interact with odorants ➔ action potentials along…

Olfactory nerve (cranial nerve I; thin axons, slow)

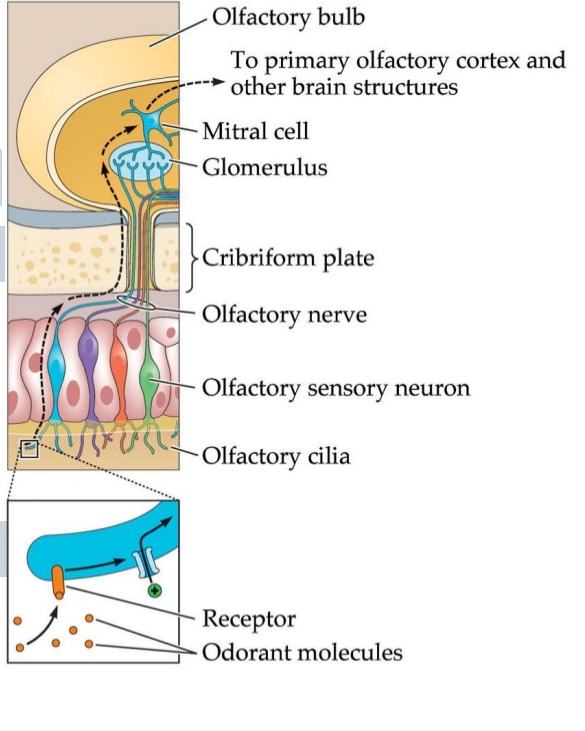

Olfactory receptors (ORs):

Located in the olfactory epithelium of the nose.

Function: Detect odorant molecules that bind to them.

This binding triggers a signal cascade, leading to depolarization and the generation of action potentials.

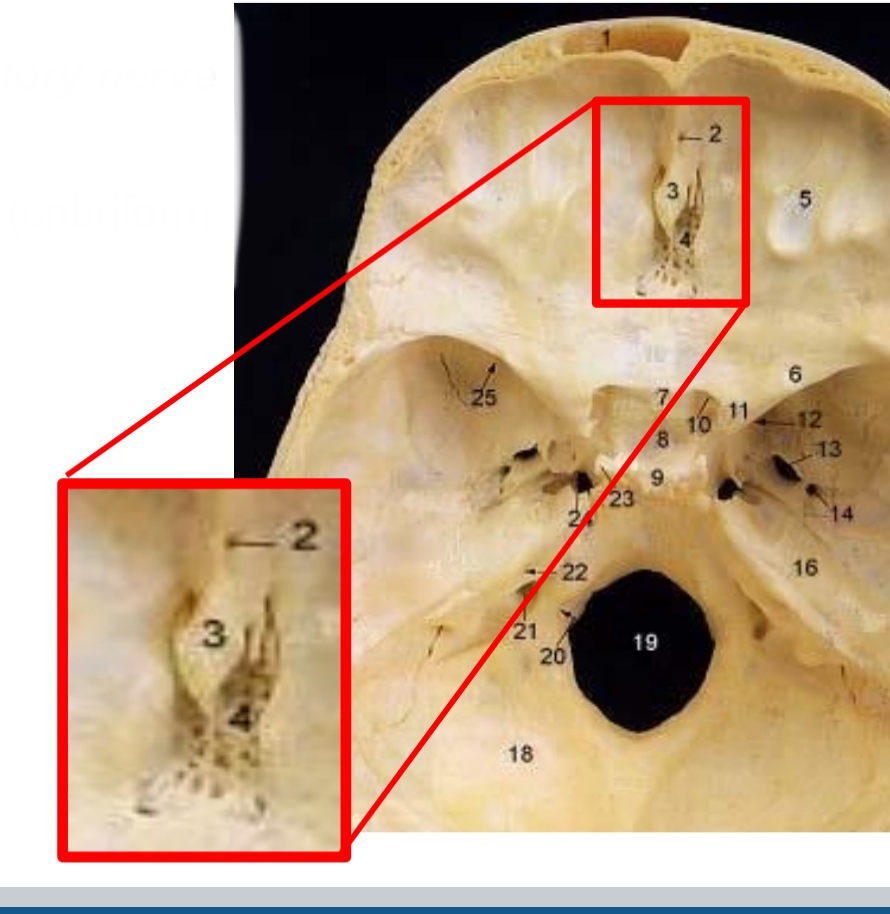

The OSN axons carrying these action potentials pass through the cribriform plate (a porous part of the skull).

The axons of these olfactory receptor neurons bundle together to form the olfactory nerve (Cranial Nerve I).

The signal continues to the olfactory bulb, where initial processing occurs.

1:1 ratio (one gene holds the building plan for one receptor type)

How do odorant molecules trigger neural signals in olfaction?

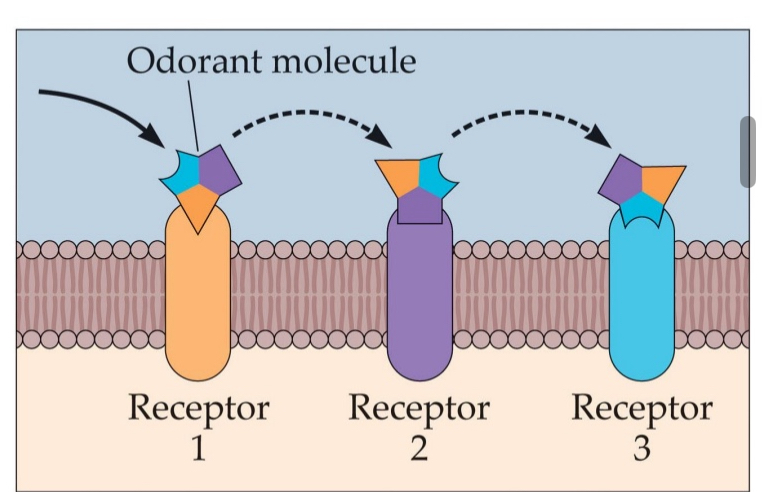

Odorant molecules bind to G-protein-coupled olfactory receptors located on olfactory sensory neurons.

This activates a signal transduction cascade, leading to the opening of ion channels.

Sodium (Na⁺) ions enter the cell, causing depolarization of the neuron.

If depolarization reaches threshold, it triggers an action potential, sending the signal to the brain.
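The chain above ends in an all-or-none decision: depolarization either reaches threshold or it doesn't. A toy sketch of that step, assuming illustrative textbook-style values (-70 mV resting, -55 mV threshold) and a hypothetical fixed depolarization per activated receptor; real transduction involves amplification and more than one ion current.

```python
# Toy model of the last step of olfactory transduction.
# All numbers are illustrative assumptions, not measurements.
RESTING_MV = -70.0            # assumed resting membrane potential
THRESHOLD_MV = -55.0          # assumed firing threshold
MV_PER_ACTIVE_RECEPTOR = 0.5  # hypothetical depolarization per activated receptor


def membrane_potential(active_receptors: int) -> float:
    """Membrane potential after Na+ influx caused by the activated receptors."""
    return RESTING_MV + active_receptors * MV_PER_ACTIVE_RECEPTOR


def fires(active_receptors: int) -> bool:
    """An action potential is triggered only if depolarization reaches threshold."""
    return membrane_potential(active_receptors) >= THRESHOLD_MV


for n in (10, 20, 30, 40):
    print(n, membrane_potential(n), fires(n))
```

With these made-up numbers the neuron depolarizes but stays silent below 30 activated receptors, and fires at 30 or more.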

Depolarization

when the inside of the cell becomes less negative (more positive) compared to the outside.

Olfactory nerve

cranial nerve I; thin axons, slow

All the hairs on the toothbrush collectively

Cilia are stimulated by odorants (not by mechanical shearing forces), which makes the olfactory system a chemical sense

Lesions to olfactory nerve cause

anosmia

anosmia

Damage like electric razor (like shaving the olfactory nerve)

Head trauma (cribriform plate)

Infections → swelling of the nerve

Olfactory loss can cause great suffering:

Sense of flavour lost: all food will taste bland

Sensing danger: you won’t smell smoke if you forget to turn off the stove

Quite common

Early symptom of Alzheimer’s, Parkinson’s

Anosmia and parosmia due to COVID-19

Covid-19 in October 2020

Lost sense of smell (and taste)

After 2 months of recovery, everything smelled like ashtrays

Early 2021: smelling and tasting rotting flesh

Since August 2021: can barely eat, social isolation

Pinching nose helps

Sign of regeneration

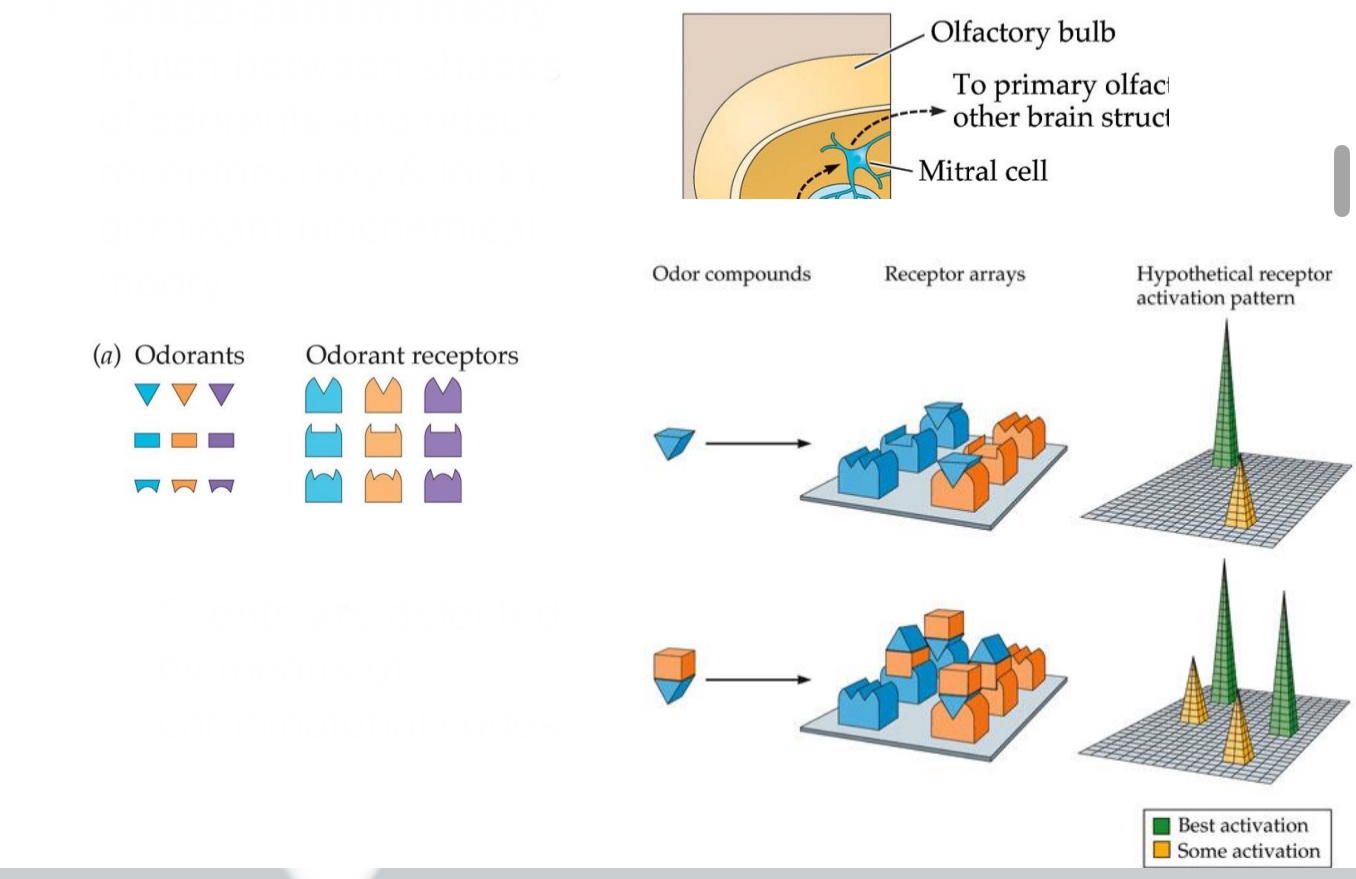

The olfactory bulb:

Ipsilateral projection

Glomeruli: spherical structures in which OSNs synapse with mitral cells and tufted cells

Chemo-topography: glomeruli are sorted according to ORs

Glomeruli:

spherical structures in which OSNs synapse with mitral cells and tufted cells

Spherical structures where olfactory sensory neuron (OSN) axons synapse with two types of output neurons:

Mitral cells

Tufted cells

Each glomerulus receives input from OSNs expressing the same type of olfactory receptor (OR).

Chemo-topography:

glomeruli are sorted according to ORs

Refers to the spatial organization of glomeruli in the olfactory bulb.

Glomeruli are sorted based on the type of OR they receive input from.

This creates a chemical map of odor information, where different odors activate distinct regions.

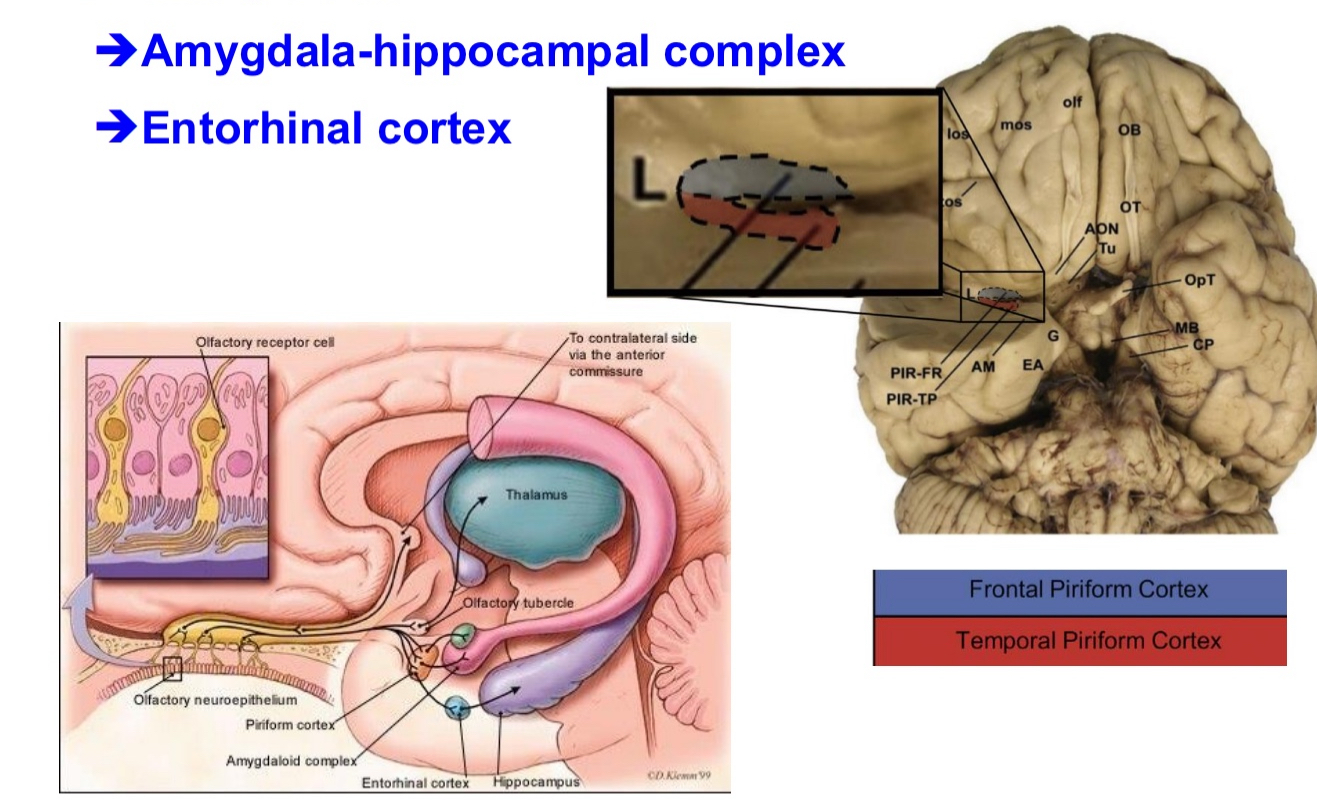

Olfactory cortex

After odor information is processed in the olfactory bulb, it travels directly to several brain regions without first going through the thalamus (which is unusual for sensory systems).

➔Amygdala-hippocampal complex

➔Entorhinal cortex

Amygdala-Hippocampal Complex

Involved in emotional and memory-related processing of odors.

Explains why smells can trigger strong memories or emotional responses.

Entorhinal Cortex

A gateway to the hippocampus.

Important for forming olfactory-related memories and spatial context.

The genetic basis of olfactory receptors:

1 gene for one receptor (1:1 ratio)

~1000 different olfactory receptor genes, each codes for single type of OR

Pseudogenes: dormant, don’t produce proteins (i.e., ORs), 20% in dogs, 60-70% in humans

Trade-off between vision and olfaction?

Pseudogenes:

dormant, don’t produce proteins (i.e., ORs), 20% in dogs, 60-70% in humans

Multisensory perception

a feel of scent:

Odorants can stimulate somatosensory system (touch, pain, temperature receptors)

These sensations are mediated by the trigeminal nerve (cranial nerve V)

Also see taste!

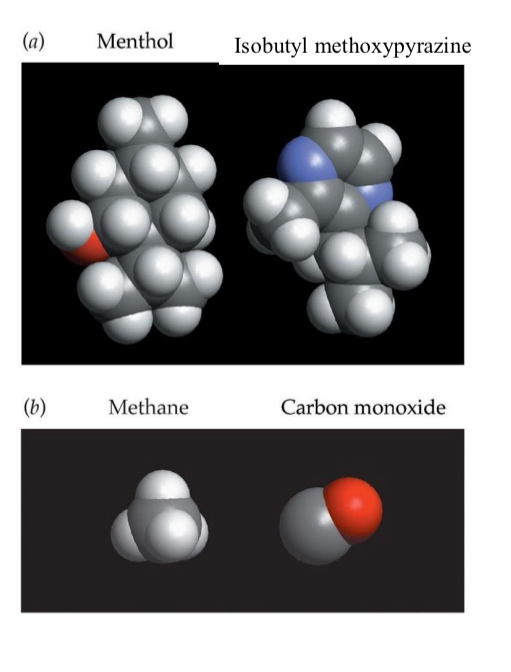

Shape-pattern theory:

Match between shapes of odorants and odour receptors (key & lock); dominant biochemical theory

Odorant receptors are the lock and odorants are the key that triggers the G-protein-coupled cascade

Scents are detected by means of combinatorial codes

A single odorant can activate multiple receptors.

A specific pattern of receptor activation leads to the perception of a particular smell

Each binding can trigger action potentials; some odorant-receptor pairings are stronger (a better fit) than others

We perceive odours based on distributed patterns of receptor activation.

Each odorant can bind to multiple types of olfactory receptors, not just one.

Each receptor can respond to many different odorants, depending on their shape.

This means that a single scent creates a unique combination (or "pattern") of activated receptors.

The brain decodes this pattern across the olfactory system to recognize the specific odour — similar to how letters form words.

This supports the Shape-Pattern Theory and explains how we can detect thousands of different smells with a limited number of receptor types.
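The pattern idea can be sketched in a few lines. This is a toy model, not real receptor data: the receptor names (OR_A to OR_D), the odorants, and the response strengths are all hypothetical, and "identification" is done by picking the stored pattern closest to the observed one.

```python
# Toy sketch of a combinatorial (pattern) code.
# Receptor names, odorants and response strengths are hypothetical.
RECEPTORS = ["OR_A", "OR_B", "OR_C", "OR_D"]

# Each odorant activates several receptors, some more strongly (better fit) than others.
RESPONSES = {
    "odorant_1": {"OR_A": 0.9, "OR_B": 0.4},
    "odorant_2": {"OR_B": 0.8, "OR_C": 0.6, "OR_D": 0.2},
    "odorant_3": {"OR_A": 0.3, "OR_D": 0.9},
}


def pattern(odorant):
    """Activation of every receptor type for one odorant (0.0 = no response)."""
    return tuple(RESPONSES[odorant].get(r, 0.0) for r in RECEPTORS)


def identify(observed):
    """Return the known odorant whose pattern is closest (squared distance) to the observed one."""
    def distance(name):
        return sum((a - b) ** 2 for a, b in zip(pattern(name), observed))
    return min(RESPONSES, key=distance)


print(pattern("odorant_2"))                 # (0.0, 0.8, 0.6, 0.2)
print(identify((0.85, 0.45, 0.0, 0.05)))    # odorant_1
```

OR_B responds to two different odorants, so no single receptor identifies a smell; only the whole pattern does. Even if each receptor were just on or off, N receptor types would allow 2**N distinct patterns, which is why a few hundred receptor types can support a very large number of discriminable smells.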

The importance of patterns:

We detect a multitude of scents based on ‘only’ 300-400 olfactory receptors … how?

We can detect the pattern of activity across various receptor types

Similar to metamers in colour vision: phenyl ethyl alcohol = rose

Same receptors might create same response patterns to different molecules

More _____ than there are _______

Odorants, receptors

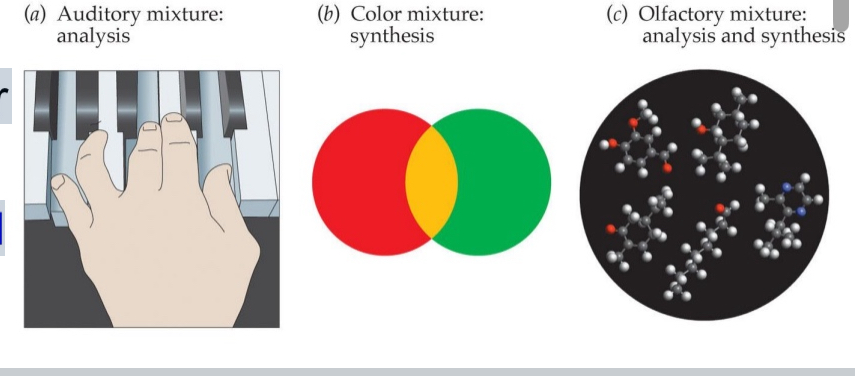

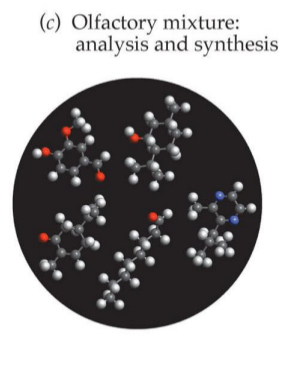

Odour mixtures:

We rarely smell “pure odorants,” rather we smell mixtures

Chocolate smell is based on: ~600 odorants even though we have 300 - 400 receptors

Just as in vision we have only 3 cone types yet can perceive a huge range of colours

Olfaction is rather synthetic but can be trained

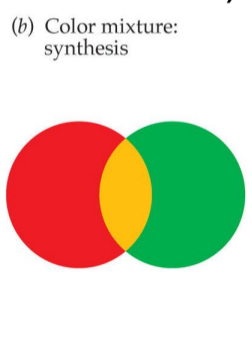

How do we process the components in a mixture of odorants?

Two possibilities:

Analysis (audition: hi/low pitched tones)

Synthesis (metamers in colour vision)

Analysis

audition: hi/low pitched tones

We detect and identify individual components of a mixture.

Like in audition, where you can hear both a high and low-pitched tone at the same time.

Example: Smelling mint and lemon and recognizing both distinctly.

Synthesis

metamers in colour vision

We perceive the mixture as a new, unified scent, not the individual components.

Like in color vision, where combining wavelengths (e.g., red + green) creates the perception of a new color (e.g., yellow).

Odor mixtures can form olfactory metamers — different combinations that smell the same.
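A toy sketch of why synthesis produces metamers, assuming (as a simplification) that a mixture's receptor pattern is just the sum of its components' patterns; real mixtures can also suppress or enhance each other. All names and values are hypothetical.

```python
# Toy sketch of olfactory metamers under an assumed linear-summation rule.
# Molecule names and receptor responses are hypothetical.
RECEPTORS = ["OR_A", "OR_B", "OR_C"]
RESPONSES = {
    "m1": (0.6, 0.2, 0.0),
    "m2": (0.0, 0.2, 0.4),
    "m3": (0.6, 0.0, 0.0),
    "m4": (0.0, 0.4, 0.4),
}


def mixture_pattern(components):
    """Sum the receptor activations of all components in a mixture."""
    totals = [0.0] * len(RECEPTORS)
    for name in components:
        for i, value in enumerate(RESPONSES[name]):
            totals[i] += value
    # Round to hide tiny floating-point differences.
    return tuple(round(t, 6) for t in totals)


mix_1 = mixture_pattern(["m1", "m2"])
mix_2 = mixture_pattern(["m3", "m4"])
print(mix_1, mix_2, mix_1 == mix_2)  # (0.6, 0.4, 0.4) (0.6, 0.4, 0.4) True
```

The two mixtures share no component, yet produce the identical pattern, so a brain that only reads the pattern perceives them as the same smell.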

Olfactory mixture

Analysis and synthesis

Without training, we perceive odour mixtures synthetically (synthesis)

With training, we can learn to pick out the individual components (analysis)

Olfactory detection thresholds:

Depend on several factors, e.g., length of carbon chains (vanilla!)

Certain chemical properties of an odorant make us better at detecting it

Women:

lower smell thresholds than men; thresholds vary with the menstrual cycle but not with pregnancy

Older claim: professionals (e.g., perfumers, wine tasters) can distinguish up to 100,000 odors

This turned out to be a large underestimate:

New research suggests that humans can distinguish at least 1 trillion olfactory stimuli

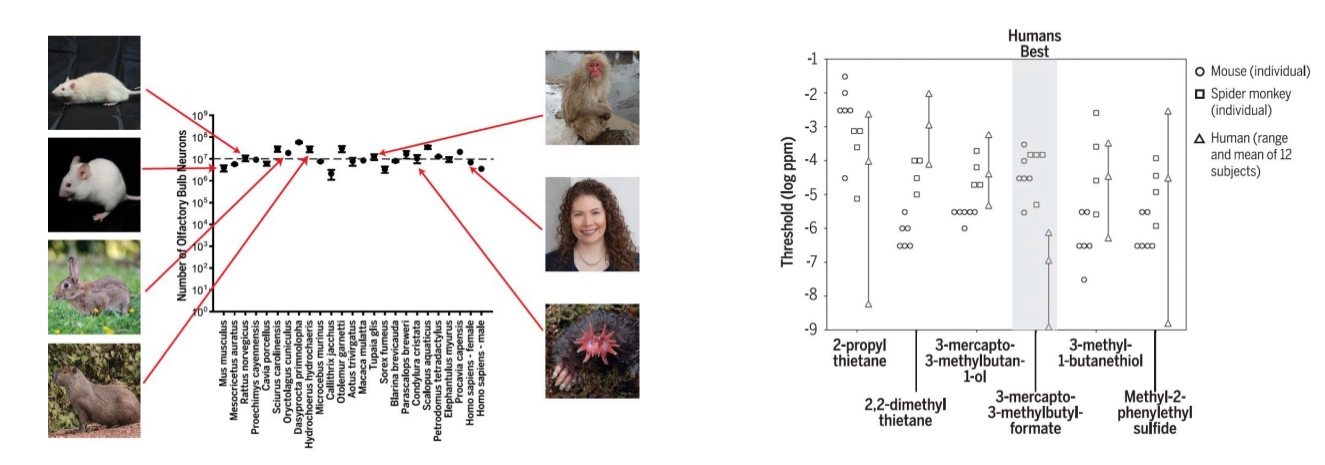

Common smelling myth

humans have a poor sense of smell compared to other animals, like dogs

Correction: Humans don’t have poor smell compared to other species

Olfactory bulb only small relative to brain

The olfactory bulb is only small relative to overall brain size, not in absolute terms

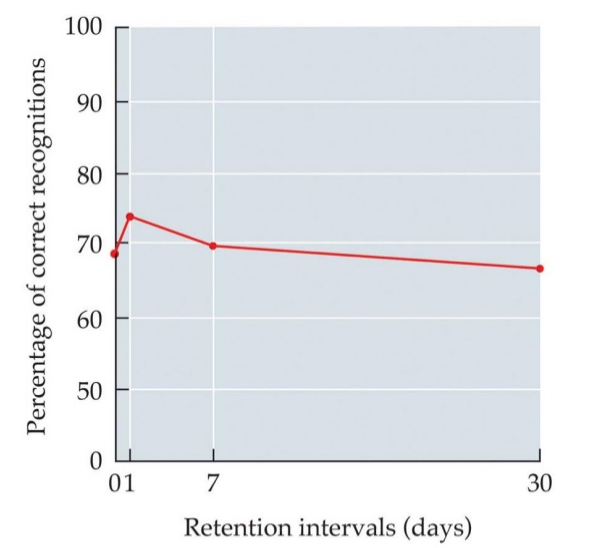

Recognition: Smell and memory

Smell does not enhance memory but we have a good memory for smell

Durability:

Our recognition of smells is durable even after several days, months, or years.

Identification:

smell and language

Attaching verbal label to smell is not easy (few words for smell)

“Tip-of-the-nose phenomenon”

Possible disconnect between language and smell (left vs. right hemisphere)?

An example

Patrick Süskind’s Perfume: The Story of a Murderer

“Tip-of-the-nose phenomenon”

Similar to the “tip-of-the-tongue” phenomenon in language.

Happens when you recognize an odour as familiar, but can’t name or identify it.

Common because olfaction is weakly linked to language:

Odour perception is processed in limbic regions (emotion & memory),

but not strongly connected to language areas in the brain.

This might be because language is processed mainly in the left hemisphere, whereas smell is processed more in the right

This highlights the difficulty of verbalizing smells, even if we’ve experienced them many times before.

Patrick Süskind’s Perfume: The Story of a Murderer

Grenouille’s (main character) inability to describe smells reflects the weak connection between smell and language (tip-of-the-nose phenomenon). The story also explores how scent can manipulate perception, identity, and social behavior.

Adaptation:

Sense of smell – change detector

E.g., walking into bakery, plumber, perfume store

Odors bind to G protein-coupled receptors that indirectly open Na+ channels

Receptor adaptation: Continuous stimulation; GPCRs bury themselves inside cell

Intermittent stimulation: e.g., smell at IKEA

Cross adaptation: reduced detection of odor after exposure to odors that stimulate the same ORs.

Olfactory Adaptation

The sense of smell acts as a change detector — it’s highly sensitive to new odors, but adapts quickly to continuous stimulation.

Types of Adaptation:

Receptor Adaptation

Intermittent Stimulation

Cross-Adaptation

Receptor Adaptation

Mechanism: Odorants bind to G-protein-coupled receptors (GPCRs), which indirectly open Na⁺ channels, causing depolarization.

With continuous stimulation, GPCRs may internalize (bury themselves inside the cell), reducing response.

➤ Example: You stop noticing the smell of a bakery or perfume store after a few minutes.

Coffee beans are used to de-adapt the nose (in perfume stores); a plumber might just sniff into their sleeve

Intermittent Stimulation

If odor is not constant, adaptation is less likely.

➤ Example: Smelling something again when walking into another section of IKEA.

Cross-Adaptation

Exposure to one odor can reduce sensitivity to another odor that uses the same olfactory receptors.

➤ Example: Smelling one floral scent may reduce your ability to detect another floral scent shortly after.
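The three types of adaptation can be illustrated with one toy model. Assumptions: sensitivity halves for every minute a receptor is stimulated and recovers halfway toward full sensitivity for every minute without the odor; the receptor names, odorants and rates are hypothetical.

```python
# Toy sketch of receptor adaptation, intermittent stimulation and cross-adaptation.
# Receptor names, odorants and rates are hypothetical.
ADAPT_RATE = 0.5    # assumed fraction of sensitivity lost per minute of exposure
RECOVER_RATE = 0.5  # assumed fraction of lost sensitivity regained per minute of rest

# Which receptors each odorant activates; "rose" and "lily" share OR_B.
USES = {"rose": {"OR_A", "OR_B"}, "lily": {"OR_B"}, "lemon": {"OR_C"}}


def fresh_nose():
    return {"OR_A": 1.0, "OR_B": 1.0, "OR_C": 1.0}


def expose(sens, odorant, minutes):
    """Continuous stimulation lowers the sensitivity of the receptors used."""
    for _ in range(minutes):
        for r in USES[odorant]:
            sens[r] *= 1.0 - ADAPT_RATE


def rest(sens, minutes):
    """Without the odor, every receptor recovers toward full sensitivity."""
    for _ in range(minutes):
        for r in sens:
            sens[r] += (1.0 - sens[r]) * RECOVER_RATE


def perceived(sens, odorant):
    """Perceived intensity = mean sensitivity of the receptors the odorant uses."""
    used = USES[odorant]
    return sum(sens[r] for r in used) / len(used)


# Receptor adaptation + cross-adaptation: 3 minutes of rose.
nose = fresh_nose()
expose(nose, "rose", 3)
print(perceived(nose, "rose"), perceived(nose, "lily"), perceived(nose, "lemon"))

# Intermittent stimulation: the same 3 minutes of rose, with 1-minute breaks in between.
nose2 = fresh_nose()
for i in range(3):
    if i:
        rest(nose2, 1)
    expose(nose2, "rose", 1)
print(perceived(nose2, "rose"))
```

After 3 minutes of rose, rose is perceived at 0.125 of full strength; lily is equally reduced even though it was never smelled (cross-adaptation through the shared OR_B), while lemon is unaffected. With breaks between exposures, rose stays at 0.34375, so intermittent stimulation causes less adaptation.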

Cognitive (and other kinds of) habituation:

After long-term exposure to an odorant, a person’s ability to detect it diminishes significantly. This effect can last even after the odor is gone.

Example: After returning from vacation, you notice your house has a smell you hadn’t detected before — a sign you had habituated to it previously.

Long-term (not necessarily cognitive) mechanisms

Longer term receptor adaptation

Prolonged exposure leads to desensitization at the receptor level.

Olfactory receptors bury themselves in the membrane of the olfactory sensory neurons → a more permanent, long-term effect

Odorant molecules may be absorbed into bloodstream causing adaptation to continue

Molecules may enter the bloodstream, maintaining internal exposure and continued adaptation.

Ex. Eat garlic: its odorant molecules enter the bloodstream and reach the olfactory epithelium from the inside, so your olfactory receptors stay adapted to the smell of garlic. You can’t smell the garlic, but people around you can

Cognitive-emotional factors

Odors believed to be harmful won’t adapt

Odors perceived as harmful or dangerous often do not habituate, due to emotional and attentional salience.

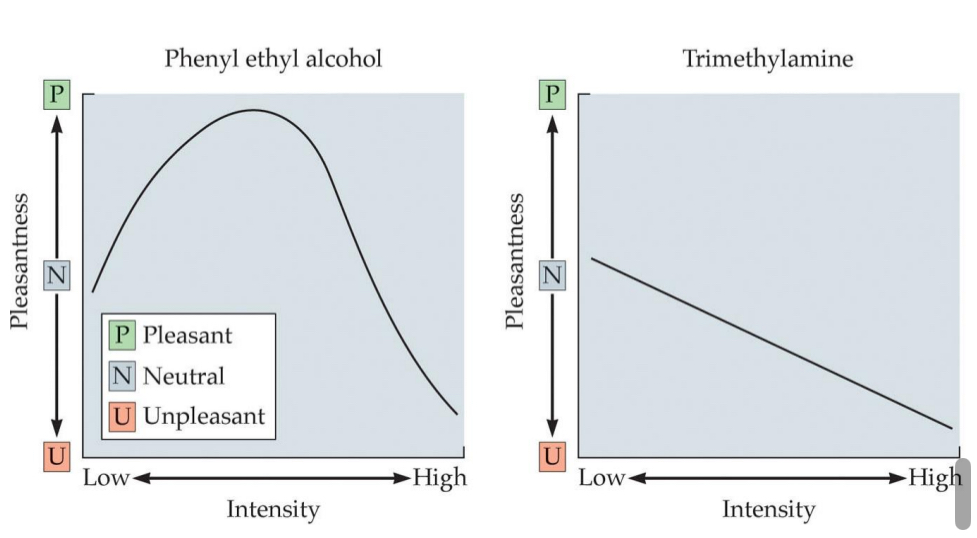

Odor hedonics:

The liking dimension of odor perception; typically measured with scales pertaining to an odorant’s perceived pleasantness, familiarity, and intensity

We tend to like odors we’ve

smelled many times before (nurture!)

Influence of intensity

Babies like slight smell of poo

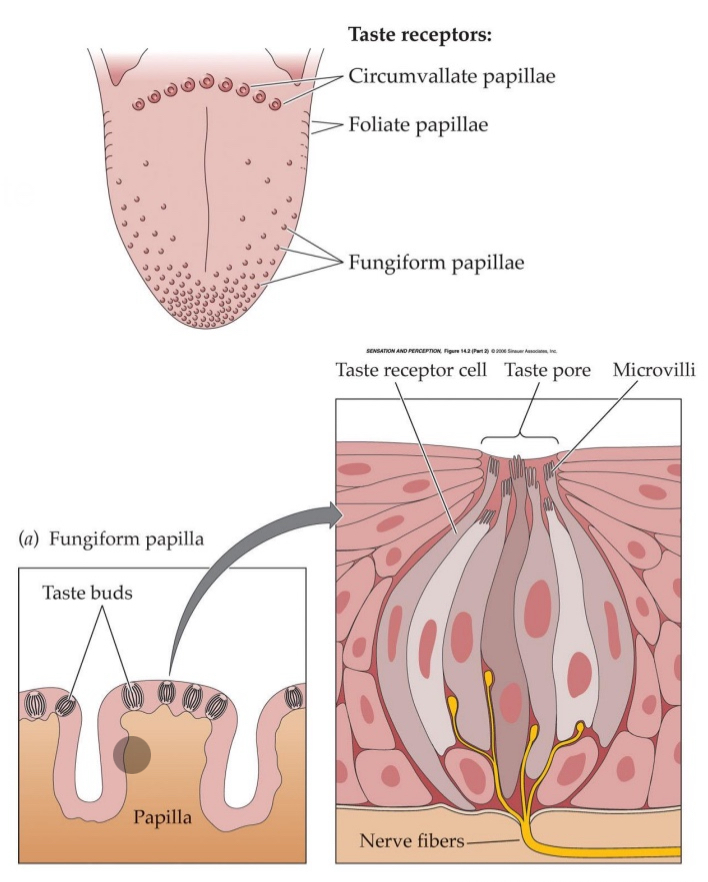

Taste buds

Create neural signals conveyed to brain by taste nerves

Embedded in structures: Papillae (bumps on tongue)

Each taste bud contains taste receptor cells

Information is sent to brain via cranial nerves

Cats use tongue to brush fur

What are the bumps on the tongue

papillae

Each taste bud contains

taste receptor cells

Tastants

chemical substances that, when dissolved in saliva, stimulate taste receptor cells, triggering the sensation of taste

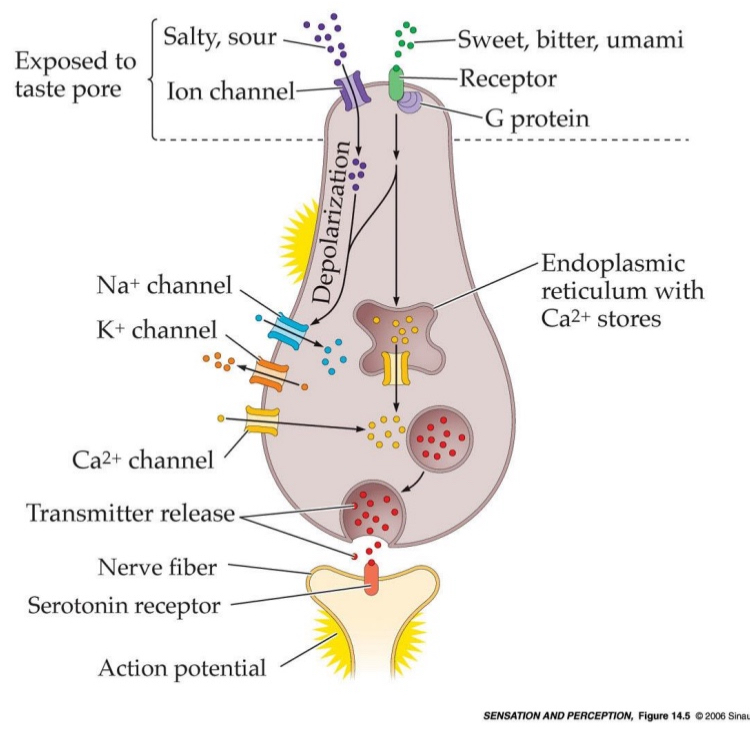

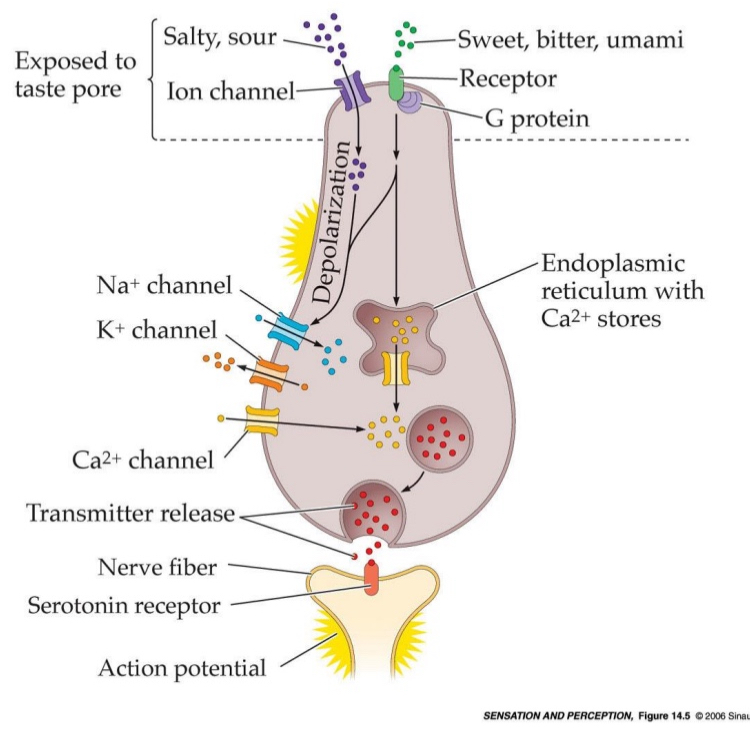

2 types of tastants

Channels for salty & sour

G protein-coupled receptors

Ion channel tastants

used for salty and sour

Tastants enter ion channels on the taste receptor cells.

Salty: Na⁺ ions flow through sodium channels.

Sour: H⁺ ions (from acids) affect proton-sensitive channels.

Ion enters the cell → depolarization opens voltage-gated sodium channels → calcium enters the cell and potassium leaves → neurotransmitter is released into the synaptic cleft

G protein-coupled receptor tastants

used for sweet, bitter, and umami

Tastants bind to G protein-coupled receptors (GPCRs) on the membrane.

This activates a signal cascade inside the cell (a series of biochemical events that follow receptor activation, like a chain reaction), leading to depolarization, influx of Ca²⁺, and neurotransmitter release into the synaptic cleft

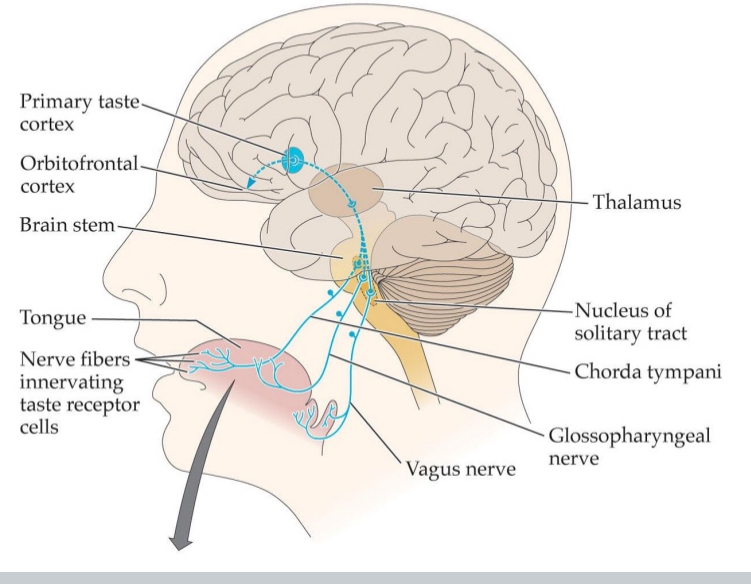

Central nervous system

Facial nerve/chorda tympani (VII), glossopharyngeal nerve (IX), vagus nerve (X)

Gustatory information travels through medulla and thalamus to cortex

Primary cortical processing area for taste: Insular cortex

Orbitofrontal cortex: Receives projections from insular cortex

Some orbitofrontal neurons are multimodal

Primary cortical processing area for taste:

Insular cortex

Orbitofrontal cortex:

Receives projections from insular cortex

Taste Pathway to the Central Nervous System

Taste receptor cells on the tongue detect tastants.

Signals are carried to the brain via three cranial nerves:

Facial nerve (VII) – via chorda tympani

Glossopharyngeal nerve (IX)

Vagus nerve (X)

These nerves send signals to the medulla (brainstem).

From the medulla, information is relayed to the thalamus (the sensory relay station).

Then it goes to the primary taste area: the insular cortex

From the insular cortex, taste info is sent to the orbitofrontal cortex (OFC).

The orbitofrontal cortex integrates taste with smell, vision, and touch – it contains multimodal neurons.

Salty:

A salt is made up of two ions: a cation and an anion

Sodium saccharin is a salt, yet we perceive it as sweet because its anion (saccharin) tastes sweet

Ability to perceive salt & liking: not static

Gestational experiences may affect liking for saltiness (if the mother eats a lot of salt during pregnancy, the baby might like salt more as it grows up)

Sour:

Acidic substances

At high concentrations, acids will damage both external and internal body tissues

Bitter:

Quinine: prototypically bitter-tasting substance

Cannot distinguish between tastes of different bitter compounds

Bitterness often signals poison

Bitter sensitivity is affected by hormone levels in women and intensifies during pregnancy

Rejecting bitter foods helps protect the embryo

Sweet:

Evoked by sugars

Many different sugars that taste sweet

Increase appetite and artificial sweeteners

Umami

umai = ‘delicious’, mi = taste

Savouriness; pleasant "brothy" or "meaty" taste of glutamate

Tomatoes, shellfish, cured meats, cheese, green tea

MSG (monosodium glutamate) → artificial way of increasing taste of umami

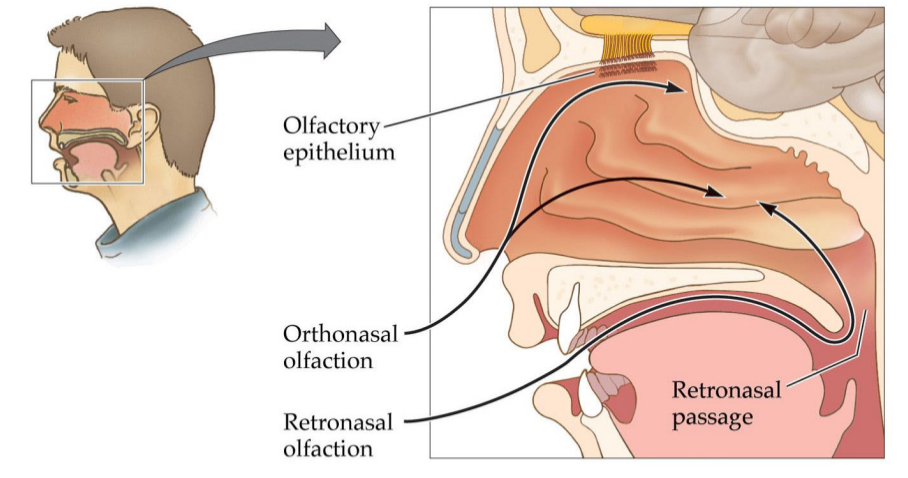

Retronasal olfactory sensations:

Flavour

Olfaction in the presence of taste & somatosensation

Olfactory sensation is processed differently in the cortex depending on taste & somatosensory info

Flavour is impoverished with a stuffy nose or when we pinch our nose

Odours can reach the olfactory epithelium by two routes:

Through nostrils when sniffing → sense of smell

Odours travel through back of mouth going up → perceived as flavour

Flavour

Flavour = Taste + Smell + Texture + Temperature + Sight + Sound

The "full experience" of food

When you pinch your nose, you block retronasal olfaction, so:

You lose the flavour

What happens when we cannot perceive taste but can still perceive smell?

Patient: Damaged taste, normal olfaction—could smell lasagna, but had no flavor

Brain processes odors differently, depending on whether they come from nostrils or mouth

Food industry:

Sugar to intensify sensation of fruit juice

Manipulates texture

Senses on the tongue determine whether we perceive flavour or not.

They provide evidence that there is food in your mouth → we perceive its flavour

But they also determine where we perceive flavour.

When you eat an apple you don’t experience that you have an apple in your nose.

Can you sense flavour if you can’t smell?

Flavour is reduced

With no taste but smell:

You smell the fruity aroma but won’t register the sweetness—it might feel like you’re chewing on scented water.

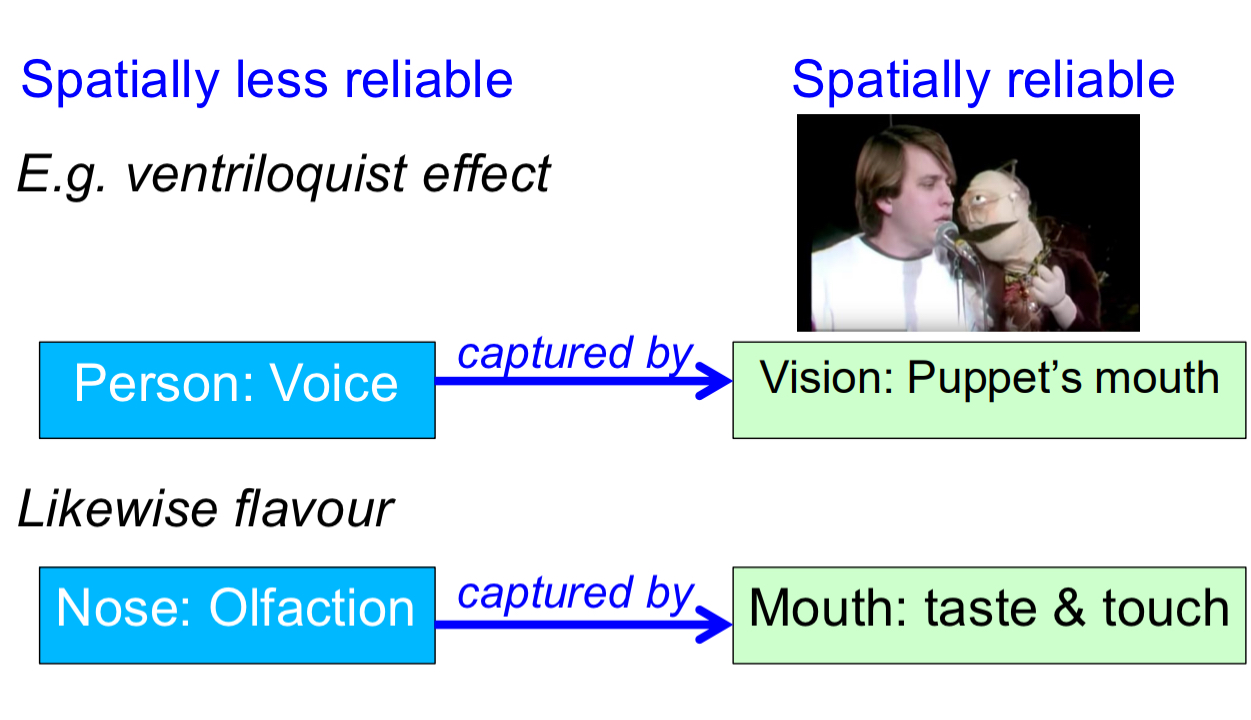

Perceptual capture:

dominance of one sense over other modalities in creating a percept (e.g., about location)

Spatially less reliable vs spatially reliable

Example

When you eat an apple, the smell of the apple comes from retronasal olfaction (odor molecules travel from the mouth to the nose).

However, you perceive the apple as being in your mouth, not your nose, even though the smell is detected in the nose.

This happens because the sense of touch and taste (from the mouth) capture the percept — they dominate the sense of smell, assigning the location of the flavor experience to the mouth.

Spatially less reliable: nose and olfaction. Spatially reliable is the mouth and taste and touch

Example

Ventriloquist effect: the spatially less reliable cue is the person's voice; the spatially reliable cue is seeing the puppet’s mouth move
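The lecture describes capture qualitatively. A common quantitative model (not stated in the lecture; treat it as an assumption here) is reliability-weighted averaging, also called maximum-likelihood cue combination: each sense's location estimate is weighted by its reliability, 1/variance. The locations and variances below are hypothetical.

```python
# Reliability-weighted cue combination (an assumed standard model, not from the lecture).
# Each cue is (estimated location in degrees, variance); lower variance = more reliable.


def combine(cues):
    """Return the reliability-weighted location and the variance of the combined estimate."""
    weights = [1.0 / variance for _, variance in cues]
    total = sum(weights)
    location = sum(w * loc for w, (loc, _) in zip(weights, cues)) / total
    return location, 1.0 / total


# Ventriloquist: the voice is heard at 10 deg (imprecise audition),
# the puppet's mouth is seen at 0 deg (precise vision). Values are hypothetical.
voice = (10.0, 16.0)
puppet_mouth = (0.0, 1.0)
location, variance = combine([voice, puppet_mouth])
print(f"perceived voice location: {location:.2f} deg, variance {variance:.2f}")
```

Vision gets 16 times the weight of audition here, so the voice is "captured" by the puppet's mouth (about 0.59 deg instead of 10 deg); the combined estimate is also more precise (variance below 1) than either cue alone.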

Why combining/integrating sensory sources?

Combined alarm systems (jungle, darkness, braking)

Someone honks at you: you hear it but can’t tell exactly where it’s coming from, so you use vision to identify the danger

Object identification: information from different modalities support each other

This is why babies put lots of things in their mouths: the mouth has detailed somatosensation → multisensory experience

Visual-auditory localization (twilight, peripheral visual field, occlusion, cluttered scenes)

When it’s getting dark, vision is not great, so you’ll depend on other senses like hearing.

Reliable info for control of locomotion

Tells body we are upright, so we don’t fall over.

Coherence of the world: object unity

Almost all we sense and perceive is

Multisensory

Multisensory integration:

redundant information

2 or more sensations about a single event in the outside world

e.g., vision as well as audition.

‘What the eye sees’ + ‘what the ear hears’

2 senses, and both are sensing the same thing

Car driving by → event

Eyes see the car

Ears hear the car

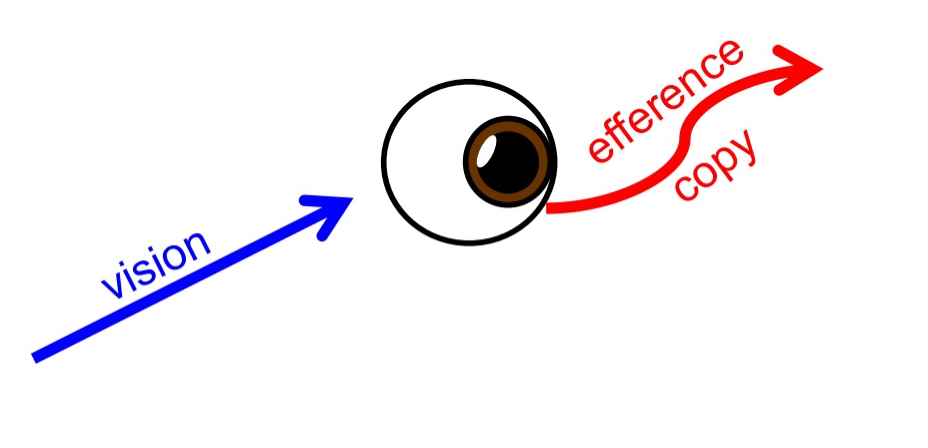

When doing smooth pursuit with eyes

Because your eyes are moving at the same speed as the object, the image of the object remains stable on your fovea.

As a result, the object (e.g., the car) appears not to move relative to your gaze — it looks stationary, even though it's actually moving in the world.

So when I do smooth pursuit of a car, I need an efference copy (a copy of the motor command sent to the eye muscles)

But the efference copy alone can’t sense the car; it only reports how the eyes are moving

Vision and the efference copy must be combined to perceive motion
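This combination can be sketched as a simple sum: perceived object motion = motion of the image on the retina + eye motion reported by the efference copy. This is a simplified version of the classic efference-copy idea, assuming one axis of motion and noise-free signals; the velocities are hypothetical.

```python
# Simplified 1-D sketch of motion perception during eye movements
# (velocities in deg/s; positive = rightward). The additive rule is an assumption.


def perceived_velocity(retinal_velocity: float, eye_velocity_copy: float) -> float:
    """Object motion in the world = motion on the retina + eye motion (from the efference copy)."""
    return retinal_velocity + eye_velocity_copy


# Smooth pursuit of a car moving at 20 deg/s: its image stays on the fovea.
print(perceived_velocity(0.0, 20.0))     # car: 20.0 -> seen as moving
# The stationary background slides across the retina in the opposite direction.
print(perceived_velocity(-20.0, 20.0))   # background: 0.0 -> seen as still
# Vision alone (efference copy ignored): the pursued car would look stationary.
print(perceived_velocity(0.0, 0.0))      # 0.0
```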

Multisensory combination:

non-redundant information

Sensing the movements/ the spatial orientation of a sensor (e.g., sensing eye or head movements)

one or more senses …

E.g., ‘what happens to the eye’ + ‘what the eye sees’

different types of information, but not always from different sensory organs

multisensory integration or combination: lip reading + listening

multisensory integration

The McGurk effect exploits this integration

McGurk effect

when a person watches a video of someone saying "ga" while simultaneously hearing the audio of "ba". Instead of hearing "ba" or "ga", the listener often perceives a third sound, "da".

Exception to lip reading

multisensory integration or combination: ventriloquist effect

Tricks multisensory integration

See and hear talking

multisensory integration or combination: hearing with the head turned

Multisensory combination

1. Auditory Input (from your ears)

Your ears detect the direction of the sound based on things like:

Time difference between the two ears

Intensity difference

But this only tells you where the sound is relative to your head.

2. Proprioception (from your muscles and joints)

Proprioception tells your brain where your head is pointing in space.

It comes from sensors in your neck muscles and joints.
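Hearing with the head turned can be sketched as adding two non-redundant signals: the sound's direction relative to the head (from audition) and the head's rotation relative to the body (from neck proprioception). The sign convention and the angles are assumptions for illustration.

```python
# Combining head-relative sound direction (audition) with head orientation (proprioception).
# Angles in degrees, 0 = straight ahead, positive = to the right. Conventions are assumed.


def wrap(angle: float) -> float:
    """Map any angle into the range [-180, 180)."""
    return (angle + 180.0) % 360.0 - 180.0


def sound_direction_re_body(azimuth_re_head: float, head_yaw: float) -> float:
    """Audition gives direction relative to the head; proprioception gives head yaw on the body."""
    return wrap(azimuth_re_head + head_yaw)


print(sound_direction_re_body(0.0, 40.0))    # 40.0: straight ahead of a head turned 40 deg right
print(sound_direction_re_body(30.0, -40.0))  # -10.0: slightly left of the body's midline
print(sound_direction_re_body(170.0, 30.0))  # -160.0: wraps around behind
```

Neither signal alone is enough: the ears give the first argument, the neck gives the second, and only their combination says where the sound is relative to the body.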

multisensory integration or combination: looking at a shape & touching it

multisensory integration

Same info, different senses

multisensory integration or combination: Pinocchio illusion

multisensory combination

Vibrating an arm muscle gives a (false) signal about the arm's posture; because the fingers are touching the nose, the brain infers that the nose is getting longer

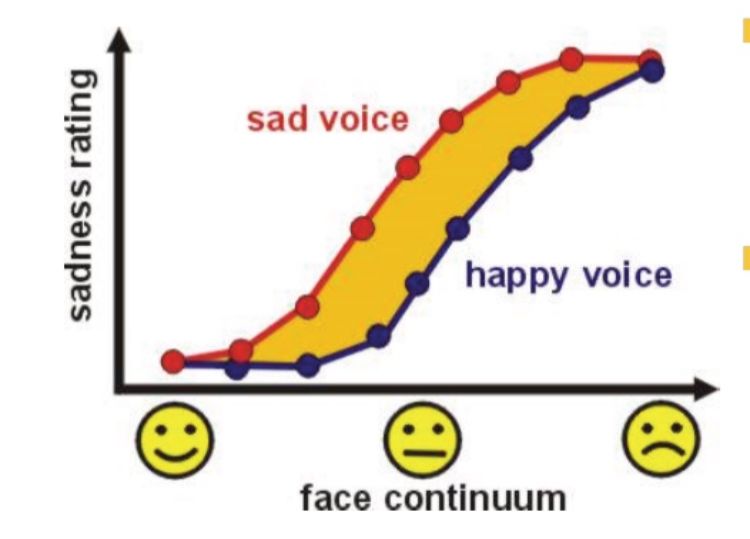

sound and light to read emotion

Observers asked to rate the sadness of a series of faces with different expressions between happy and sad

Sadness ratings follow a psychometric function: from low to high for happy to sad faces

Integrating the face display with a sad or happy voice shifts the psychometric curve to higher or lower sadness ratings

For a neutral face you are forced to choose happy or sad, so you answer "happy" about 50% of the time and "sad" about 50% of the time.

When you hear a sad voice, you are more likely to say that it’s a sad face

When you hear a happy voice, you are more likely to say that it’s a happy face
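The curve shift can be sketched with a logistic psychometric function. The lecture does not specify the function's form; the logistic shape, midpoint, slope and size of the voice effect below are all hypothetical.

```python
import math

# Logistic psychometric function for "sad" judgments of happy-to-sad face morphs.
# morph: 0.0 = clearly happy face, 1.0 = clearly sad face.
# midpoint, slope and the size of the voice shift are hypothetical values.


def p_sad(morph: float, voice_shift: float = 0.0,
          midpoint: float = 0.5, slope: float = 0.1) -> float:
    """Probability of answering 'sad'. A sad voice (shift > 0) moves the curve left,
    a happy voice (shift < 0) moves it right."""
    return 1.0 / (1.0 + math.exp(-(morph - (midpoint - voice_shift)) / slope))


for voice, shift in (("no voice", 0.0), ("sad voice", 0.1), ("happy voice", -0.1)):
    print(f"{voice}: P(sad | neutral face) = {p_sad(0.5, shift):.2f}")
```

With these values a neutral face is called sad 50% of the time with no voice, about 73% of the time with a sad voice and about 27% with a happy voice.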

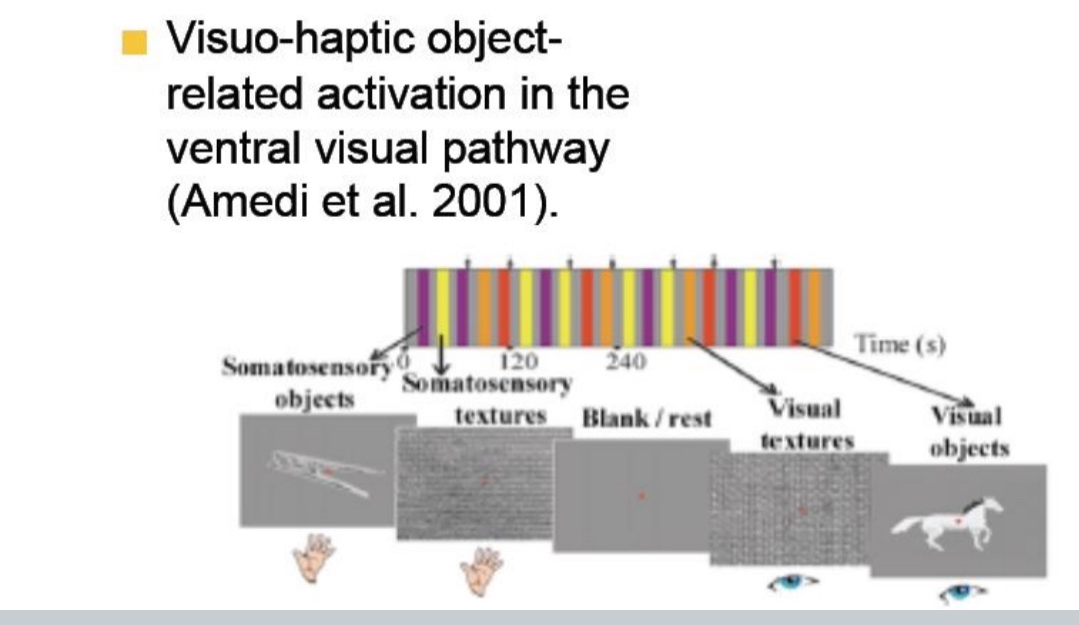

Light and touch for object perception

Amedi et al. (2001) showed that the ventral visual pathway, especially the lateral occipital complex (LOC), is activated not only by visual object recognition but also when objects are explored through touch (haptics).

This suggests that object representations in the brain are modality-independent and shared across vision and touch.

Multisensory neurons

Single cell recordings in cat superior colliculus

Multimodal receptive fields

Bimodal neurons in premotor cortex respond to light and touch.

Their visual receptive fields move with the hand, not the eye

i.e., hand-centred visual RFs

What are bimodal neurons in the premotor cortex, and how do their receptive fields behave?

Bimodal neurons in the premotor cortex respond to both touch and visual stimuli. Their tactile RFs respond to touch on specific body parts (e.g., the hand), and their visual RFs respond to objects near those body parts. These visual RFs are hand-centered, meaning they move with the hand rather than staying fixed in eye-centered space.

Top Diagram

A neuron receiving inputs from visual, auditory, and somatosensory (touch) sources.

This means it integrates information across different sensory modalities.

This happens in areas like the superior colliculus (in cats) and premotor cortex (in monkeys/humans)

Bottom Diagrams

The neuron has:

A tactile receptive field (RF) — responds to touch on the hand (blue zone).

A visual receptive field — responds to visual stimuli near the same hand (pink area).

But here’s the key insight:

The visual RF is hand-centered — it moves with the hand, not the eyes.
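A toy comparison of a hand-centred RF with an ordinary eye-centred one. Positions, RF size and the eye-centred RF's offset from fixation are hypothetical; the point is only which body part each RF is anchored to.

```python
import math

# Toy comparison of a hand-centred and an eye-centred visual receptive field (RF).
# Positions are (x, y) in cm on a tabletop; RF size and offsets are hypothetical.
RF_RADIUS = 10.0


def hand_centred_response(stimulus, hand, gaze):
    """Fires if the stimulus is near the hand; gaze is deliberately ignored."""
    return math.dist(stimulus, hand) <= RF_RADIUS


def eye_centred_response(stimulus, hand, gaze, offset=(0.0, -20.0)):
    """Fires if the stimulus is at a fixed offset from the fixation point; hand is ignored."""
    centre = (gaze[0] + offset[0], gaze[1] + offset[1])
    return math.dist(stimulus, centre) <= RF_RADIUS


stimulus = (0.0, 0.0)
for label, hand, gaze in (
    ("start", (2.0, 0.0), (0.0, 20.0)),
    ("eyes move", (2.0, 0.0), (50.0, 20.0)),
    ("hand moves", (40.0, 0.0), (0.0, 20.0)),
):
    print(label, hand_centred_response(stimulus, hand, gaze),
          eye_centred_response(stimulus, hand, gaze))
```

Moving the eyes leaves the hand-centred neuron's response unchanged, while moving the hand changes it; the eye-centred neuron behaves the other way round.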

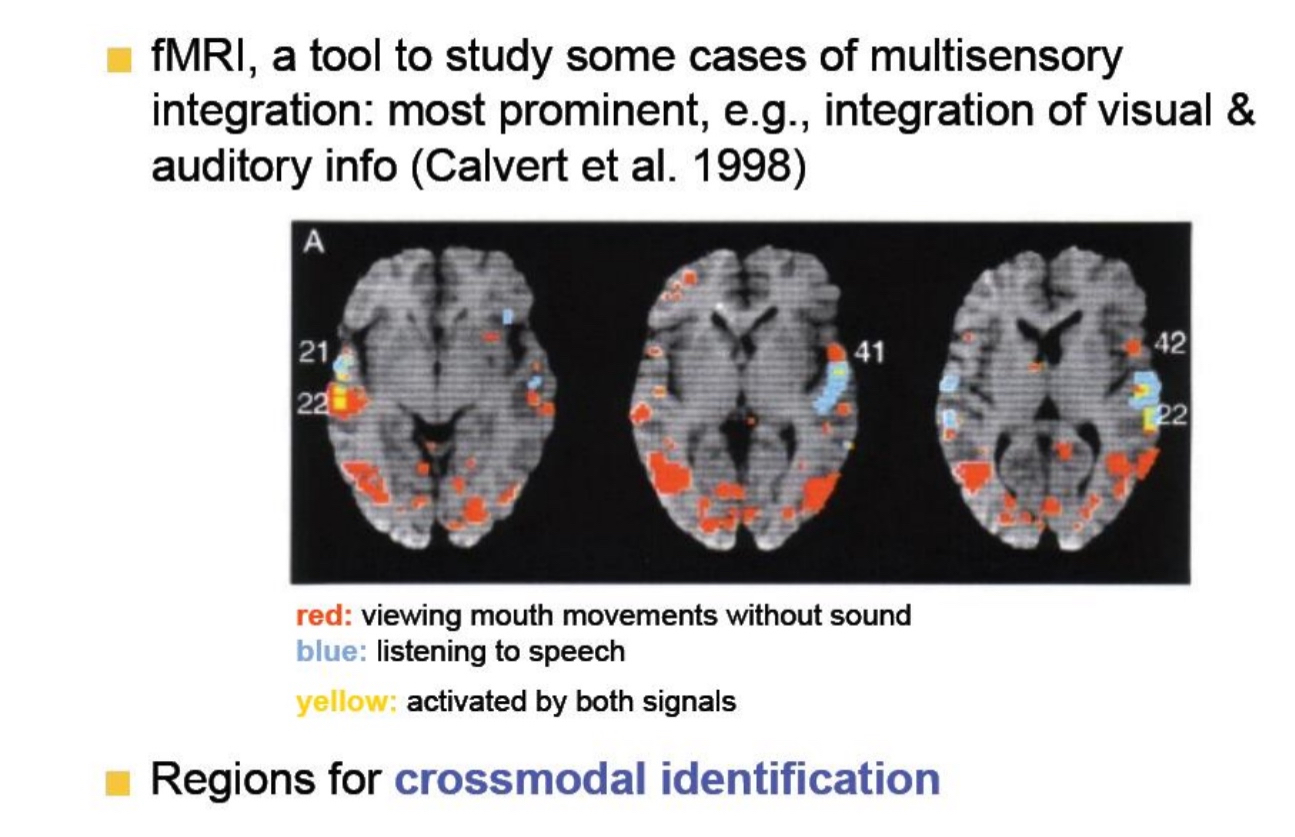

Calvert et al., 1998 - fMRI Study

This section is showing how fMRI (a brain imaging tool) helps scientists study how visual and auditory information are integrated in the brain.

Red = Brain regions activated when people view silent mouth movements (visual only).

LOC

FFA

Blue = Brain regions activated when people hear speech (auditory only)

Auditory cortex in the left hemisphere

Yellow = Brain regions activated by both—where visual and auditory info come together = multisensory integration.

These yellow areas are key for crossmodal identification, which means:

Recognizing something (like speech) by combining information from multiple senses (e.g., lip movements + sound).

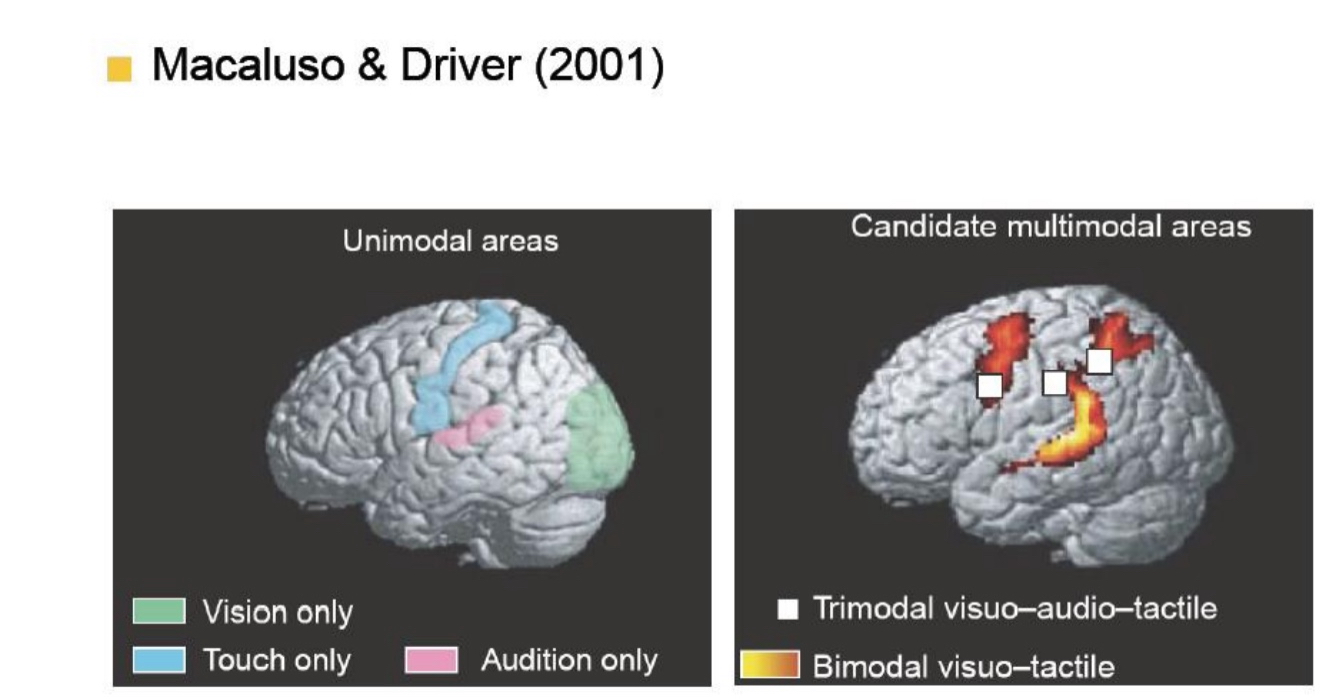

Macaluso & Driver, 2001

This shows different types of brain areas:

Left Image – “Unimodal areas”:

These are areas that only process one sense:

Green = Vision only: areas V1, V2, V3

Blue = Touch only

Pink = Audition (hearing) only

Right Image – “Candidate multimodal areas”:

These are brain areas involved in integrating multiple senses: respond no matter what sense you get. Neurons that have receptive fields for more than just one modality.

White squares = Areas that respond to three senses: vision + hearing + touch (trimodal)

Yellow-orange = Areas that respond to two senses: vision + touch (bimodal)

Point: your brain doesn’t treat senses as totally separate. It has specific regions that combine them, especially when you need to make sense of the world (like understanding speech from both sound and lip movements).

Unimodal area

Will respond when you have a multisensory stimulus

If see a light in darkness, but hear a click in the same direction, the visual cortex will respond more strongly

But that doesn’t make it a multisensory area because if you don’t have vision, it won’t respond

Visual areas can respond more strongly if you have more than just vision, but won’t respond at all in the absence of vision

Body schema: Conceptualization

Body image: lexical-semantic knowledge of the body and its functions

Ex. You have 2 hands, 2 eyes

Body structure description: knowledge of spatial relationships

Hand is connected to lower arm, connected to upper arm then shoulder

Body schema: dynamic online-representation of the body in space from somatosensory afferences

Clear sense of what is our body and where it is, posture

Body schema:

dynamic online-representation of the body in space from somatosensory afferences

Clear sense of what is our body and where it is, posture

Manipulation of body schema through vision

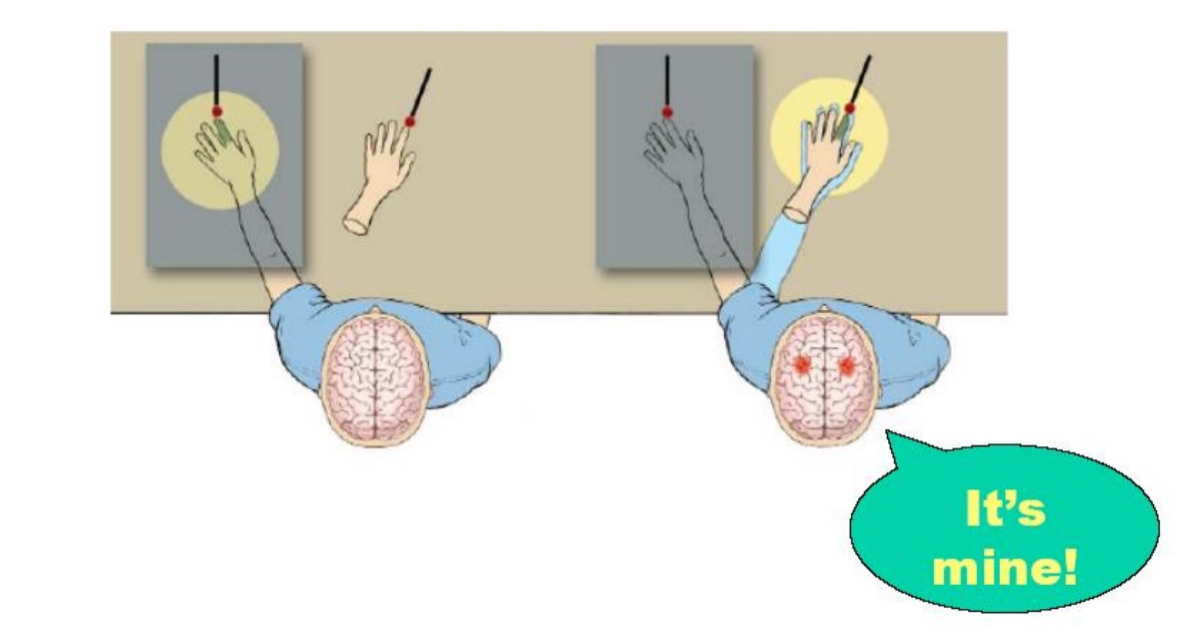

Rubber hand illusion

Rubber hand illusion

is a psychological experiment where a fake rubber hand is placed in front of a person while their real hand is hidden.

Both the fake hand and the real hand are stroked simultaneously and in the same way. After a short time, many people start to feel as if the rubber hand is their own.

This happens because the brain combines:

Visual input (seeing the rubber hand being touched),

Tactile input (feeling touch on the real hand), and

Proprioception (awareness of where your limbs are).

When these inputs are synchronized, the brain becomes confused and re-maps the sense of ownership onto the rubber hand

It shows how multisensory integration shapes our sense of body ownership, and how the brain can be tricked into adopting external objects as part of the self when sensory signals line up. It also highlights the brain’s flexibility and dependence on vision for body perception.

The illusion doesn’t work if the touch is not synchronized — timing matters.

It’s often used to study body image, phantom limb pain, and even conditions like schizophrenia.

Brain areas involved include the premotor cortex and posterior parietal cortex, which are involved in mapping the body and integrating sensory signals.

Manipulation of body schema through vision

Out of body illusion/experience

Out of body illusion/experience

is a lab-induced version of an Out-of-Body Experience (OBE) where a person feels as if their conscious self is located outside their physical body — often watching themselves from behind or above.

This illusion can be created experimentally by:

Placing a camera behind the participant,

Showing them a live video feed of their own back through a headset,

And synchronously stroking both the person's chest (which they feel) and the virtual chest (which they see).

When the brain integrates mismatched visual, tactile, and proprioceptive signals, people may identify with the virtual body instead of their own.

What it reveals:

The sense of self-location and body ownership is constructed by the brain from multisensory input (vision, touch, balance, proprioception).

The brain areas involved include the temporoparietal junction (TPJ), which plays a key role in integrating body-related signals and in self-other distinction

Out-of-body experiences (OBEs) definition:

sensations in which consciousness becomes detached from the body, taking up a remote viewing position

Occur e.g. in near-death experiences; independent of culture (nothing to do with religious beliefs)

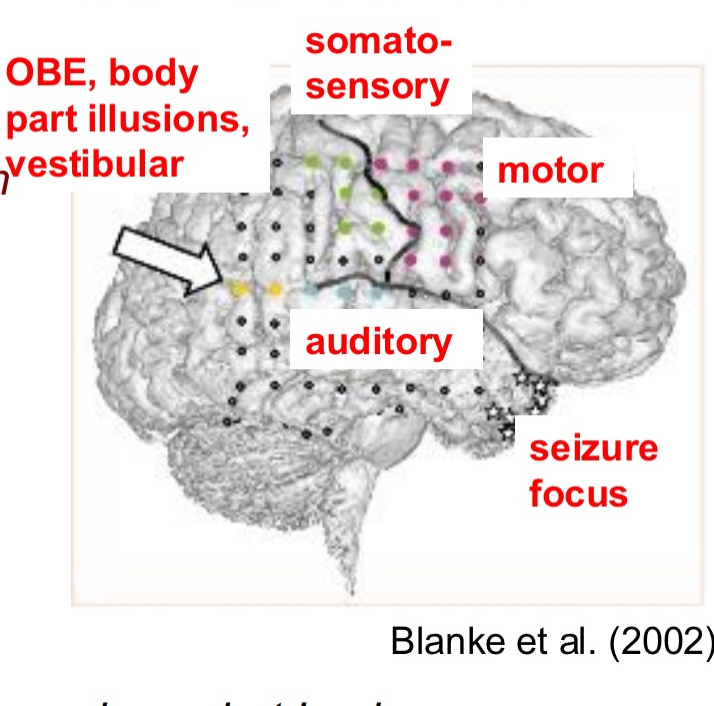

A woman had seizures that could not be controlled with medication, so doctors decided to remove part of her brain to stop them

They had to make sure the part being removed was not crucial to her quality of life.

The surgeon opened the skull while the patient was awake (local anesthesia) and placed a grid of electrodes (coloured dots) on the brain.

Each electrode was then stimulated separately to see how the patient responded

Stimulating the electrodes coloured:

blue → auditory illusions

Green → felt she was being touched, somatosensory illusions

Purple → movements from person, motor

Yellow → out of body experiences, body part illusions, vestibular (angular gyrus was stimulated)

Vestibular: sinking into the bed, falling

“I see myself lying in bed, from above, but I only see my legs and lower trunk”