Current cog Lecture 6


Taxonomy II: Sources / Directing of attention

Stimulus-driven attention: Attention drawn to salient stimulus

“Salience-driven”; “Bottom-up”; “Exogenous”; “Involuntary”; “Automatic”; “Feedforward-based”

Goal-driven attention: Attention initiated by current behavioural requirements

“Top-down”; “Endogenous”; “Voluntary”; “Controlled”; “Feedback-based”

Experience-driven attention: Attention driven by learned context or value

“Selection history”; “Reward”; “Priming”; “Automatic”

In the real world

We often know what we want, just not where it is

We activate some representation of the goal, or target object

We scan around until we find a match

Especially helpful when target object does not “pop out”

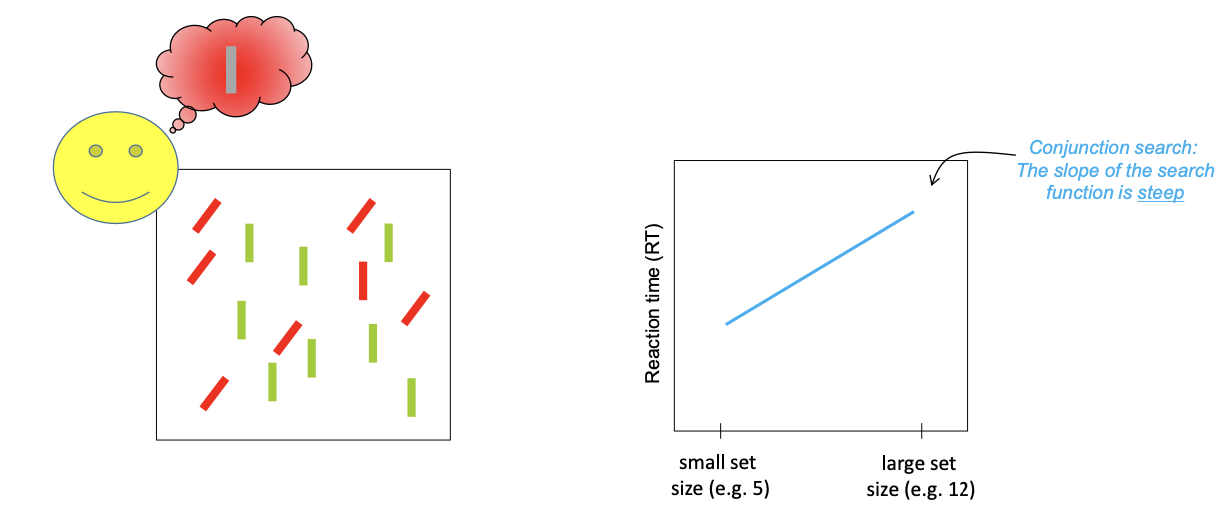

In the lab: Goal-driven visual search

Task: Find the red vertical bar

You probably try to limit search to the red items, looking for a vertical one

Slower, more serial than single feature search

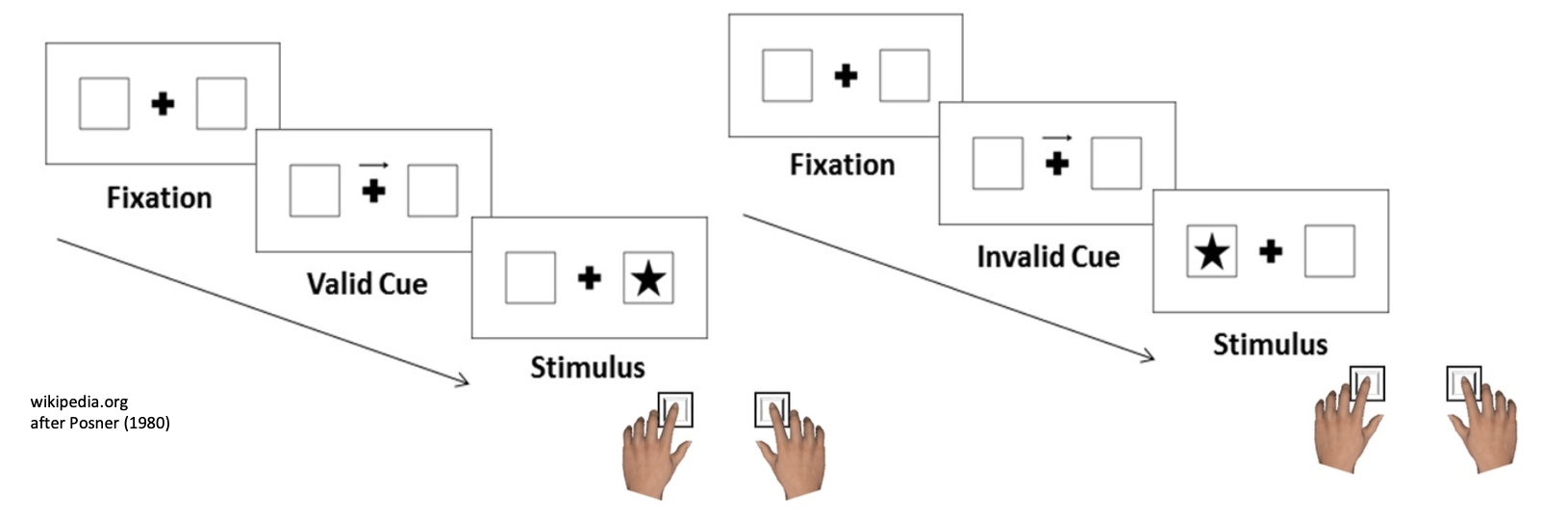

Goal-driven attention to space: Spatial cueing

We can deliberately direct our attention in space (independent of the eyes!)

Task: Keep eyes on central fixation cross. Attend to location pointed out by arrow cue.

Respond to target stimulus appearing.

Conditions: Target appears in cued location (valid cue, most of the time, e.g. 80%), or in other location (invalid cue, rare, e.g. 20%)

Primary measure: Manual RT

Results: Cue validity effect: Faster response after valid cues, slower after invalid cues → Covert attention

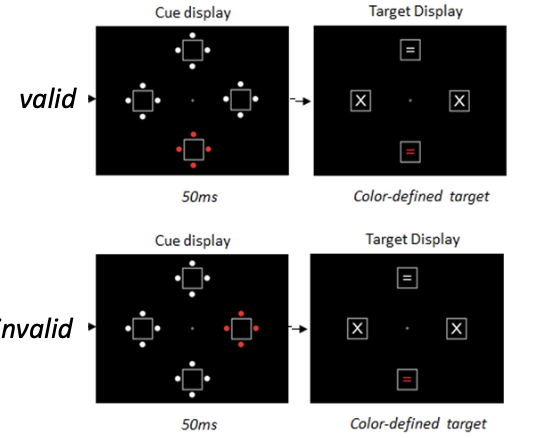
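The cue validity effect can be made concrete as a difference of mean RTs. A minimal sketch, assuming made-up RTs in ms and an 80/20 valid/invalid split; the function name and the data are illustrative, not taken from the study:

```python
from statistics import mean

def cue_validity_effect(trials):
    """Mean RT after invalid cues minus mean RT after valid cues (ms)."""
    valid = [rt for cue, rt in trials if cue == "valid"]
    invalid = [rt for cue, rt in trials if cue == "invalid"]
    return mean(invalid) - mean(valid)

# Hypothetical data: 8 valid trials (80%) and 2 invalid trials (20%).
trials = [
    ("valid", 300), ("valid", 310), ("valid", 290), ("valid", 305),
    ("valid", 295), ("valid", 300), ("valid", 310), ("valid", 290),
    ("invalid", 350), ("invalid", 340),
]

effect = cue_validity_effect(trials)
print(f"Cue validity effect: {effect} ms")
```

A positive value means responses were slower after invalid cues, which is the pattern taken as evidence for covert attention.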

Goal-driven attention to features: Contingent Capture

We can deliberately look for certain features and not others, e.g. red.

Task: Look for a red X or = sign in the final display – ignore anything else

A cue display appears just before; one of the cues matches the color of what you’re looking for (i.e. red)

Conditions: The cue indicates the target position (valid cue, 25% of trials), or not (invalid cues, 75%)

Primary measure: manual RT

Results: Cue validity effect

Conclusion: When actively looking for a feature (here red), attention is inadvertently caught by that feature: Contingent capture

But is this because observers are deliberately looking for red, or because red is salient?

We need a control condition: Look for a single white onset target instead

Same task: Determine if X or =

Same spatial validity manipulation of red cue

If capture is goal-driven, then the red cue should produce no cueing effect here

Results: No cue validity effect

Conclusion holds!

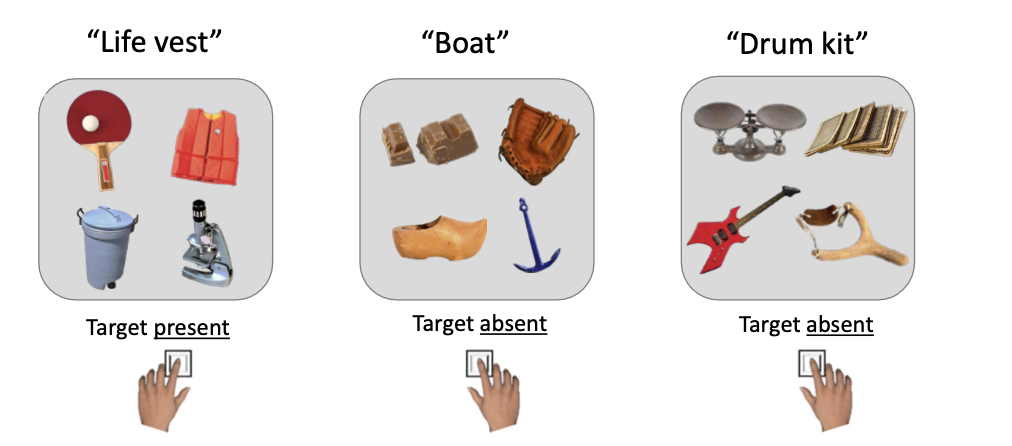

Goal-driven attention to objects and their meaning

Task: Visual search for a target object

Conditions: There can be visually related and semantically (meaning) related distractors

Primary measure: Eye fixations as a function of time

Results: People’s attention is drawn to both visual and semantic distractors, but visual distractors seemed to be more salient

Goal-driven attention is flexible and task-dependent

Pioneering work by Yarbus (1967)

People fixated eyes differently on pictures depending on what task they got before → Top-down goals determine exploration

Study: Stimulus-driven attention vs Goal-driven attention

Task: make an eye movement to left-tilted item, and ignore the right-tilted item

Two main conditions: target can be the more salient one, or the less salient one (and we also varied eccentricity)

Primary measure: Probability of the eye movement going to the salient item

Results: While stimulus-driven attention is fast and short-lived, goal-driven attention is slower and longer-lasting

When attention was stimulus-driven, people quickly looked at the more salient item — but this effect didn’t last long.

When attention was goal-driven, it took longer to focus, but the attention stayed longer and was more stable.

Attention versus expectation

Top-down attention

about the relevance of a stimulus

selects a stimulus

enhances the signal

flexible, adaptive

Top-down expectation (prediction)

about the likelihood of a stimulus

selects an interpretation

suppresses the expected signal (predictive coding)

learned, engrained

Comparison

Stimulus-driven

Driven by physical feature contrast

“Pops out” in parallel

Involuntary, automatic, little control

Early, rapid, and short-lived

Feedforward

Goal-driven

Driven by goal: space, physical feature, object, or more abstract properties like meaning

Helps selection especially when target cannot be detected in parallel

Voluntary, controlled

Relatively late and slow

Feedback (as we will see next)

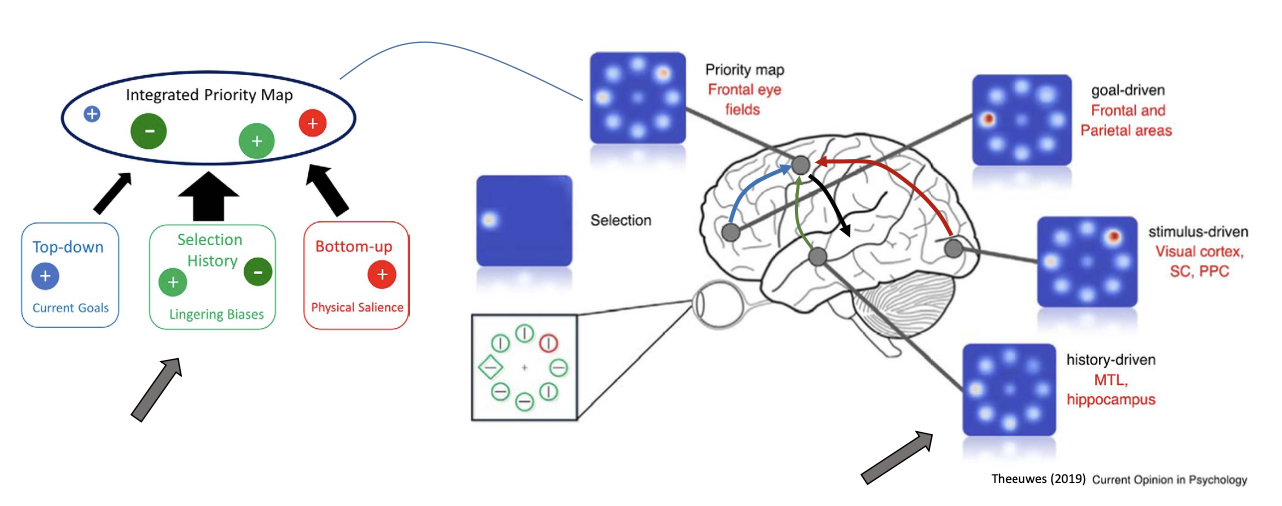

A Relevance Model

Guided Search model (Wolfe, 1990 onwards)

Feature maps (as in saliency models)

Top-down bias by increasing weights on relevant features

Bottom-up: feature maps (like in saliency models) that show what stands out in the image.

Top-down: our goals or expectations, which boost the weight of relevant features (e.g. if you’re looking for something red, red features get more weight).

Combines into top-down relevance map (here “activation map”)

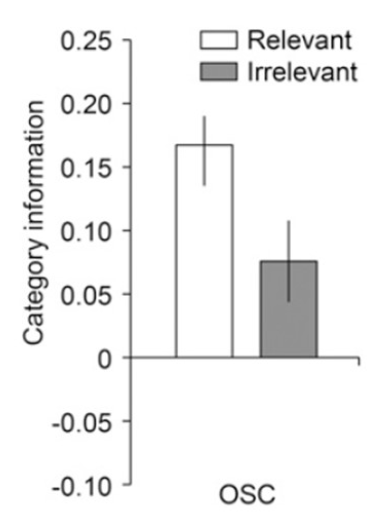
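The weighting idea can be sketched in a few lines. This is a simplified illustration, not Wolfe's actual model: feature maps are reduced to binary "feature present" maps, and the item set and weight values are assumptions chosen to mirror the red vertical bar search:

```python
def activation_map(items, weights):
    """Sum the top-down weights of every weighted feature each item has."""
    return [
        sum((w for feature, w in weights.items() if feature in item), 0.0)
        for item in items
    ]

# Hypothetical conjunction display: one feature set per item.
items = [
    {"red", "horizontal"},
    {"green", "vertical"},
    {"red", "vertical"},      # the target
    {"green", "horizontal"},
]

# Looking for a red vertical bar: boost "red" and "vertical".
weights = {"red": 1.0, "vertical": 1.0}

act = activation_map(items, weights)
best = max(range(len(items)), key=act.__getitem__)
print(act, "-> attend item", best)
```

Items sharing one target feature receive partial activation, so they remain candidates; only the item with both features reaches the peak of the activation map.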

Evidence for weighting: fMRI multivoxel pattern analysis

Task: Attend to humans or vehicles

Some voxels are more active for some categories than for others, while other voxels are less active

Hence different categories of objects come with different voxel patterns

Conversely: Once we know the patterns for a particular person, we can reconstruct what they are seeing, or attending!
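The decoding logic can be illustrated with a correlation-based template match. A minimal sketch, assuming four voxels and invented activation values; real MVPA uses many voxels, cross-validation, and often other classifiers:

```python
from math import sqrt

def pearson(a, b):
    """Pearson correlation between two equally long voxel patterns."""
    ma, mb = sum(a) / len(a), sum(b) / len(b)
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    sa = sqrt(sum((x - ma) ** 2 for x in a))
    sb = sqrt(sum((y - mb) ** 2 for y in b))
    return cov / (sa * sb)

def decode(pattern, templates):
    """Return the category whose template correlates best with the pattern."""
    return max(templates, key=lambda label: pearson(pattern, templates[label]))

# Hypothetical average voxel patterns learned per category.
templates = {
    "humans":   [2.0, 0.5, 1.5, 0.2],
    "vehicles": [0.4, 1.8, 0.3, 1.6],
}

new_pattern = [1.7, 0.6, 1.2, 0.5]   # hypothetical pattern from a new trial
print(decode(new_pattern, templates))
```

The same procedure applies whether the pattern comes from seeing a category or from attending to it, which is what makes it usable as a readout of attention.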

Results:

The same for space: fMRI

First, map out space for each of four locations with retinotopic mapping

Task: Monitor each of the locations in turn for a brief flip of the line

Result: Enhancement of location-related brain activity

The same for space: EEG

Attend to the left or attend to the right

Early components in the event-related potential (P1, N1, P2, N2) are enhanced for attended stimuli

So attention somehow enhances the neural representation of the attended information. How?

→ Attention results in increased firing rate of relevant neurons

→ Attention changes receptive field tuning

Receptive field tuning

Look at these three objects, and imagine three V4 neurons, each sensitive to one of them

Their receptive fields will overlap

Plus neurons are mutually inhibiting: lateral inhibition

As a consequence, neurons compete heavily for representing “their” object!

Solution: Attention biases this competition, and the receptive field effectively shrinks
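One way to picture biased competition is divisive normalization, where each neuron's response is its own drive divided by the pooled drive of all competitors. This is an assumed formalization for illustration, not the model from the lecture; the gain values and `sigma` are arbitrary:

```python
def compete(drives, gains, sigma=0.1):
    """Each response = own weighted drive / (sigma + pooled weighted drive)."""
    weighted = [g * d for g, d in zip(gains, drives)]
    total = sigma + sum(weighted)
    return [w / total for w in weighted]

# Three V4 neurons, each driven equally by "its" object.
drives = [1.0, 1.0, 1.0]

neutral = compete(drives, gains=[1.0, 1.0, 1.0])   # no attention
attended = compete(drives, gains=[3.0, 1.0, 1.0])  # attend object 0

print("neutral: ", [round(r, 2) for r in neutral])
print("attended:", [round(r, 2) for r in attended])
```

With attention, the attended neuron's share rises and its competitors' shares fall, so the population response behaves as if only the attended object were inside the receptive field.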

Reasons for attention

“Capacity limits” or “Resource limits”

But these have limited explanatory value

We need to make explicit what the limiting factors are

Competition for receptive field representation is one such limit

Areas driving the feedback

Posterior parietal areas: top-down switching of attention, whether between locations or between features

Task: Attend Left/Right, or Attend Red/Green, and report the motion direction

Manipulation: Change task between location and color tasks, or both

→ general purpose fronto-parietal attention network

(A ventral network driving stimulus-driven selection)

A dorsal network driving goal-driven selection

With considerable overlap → priority map which combines bottom-up and top-down information

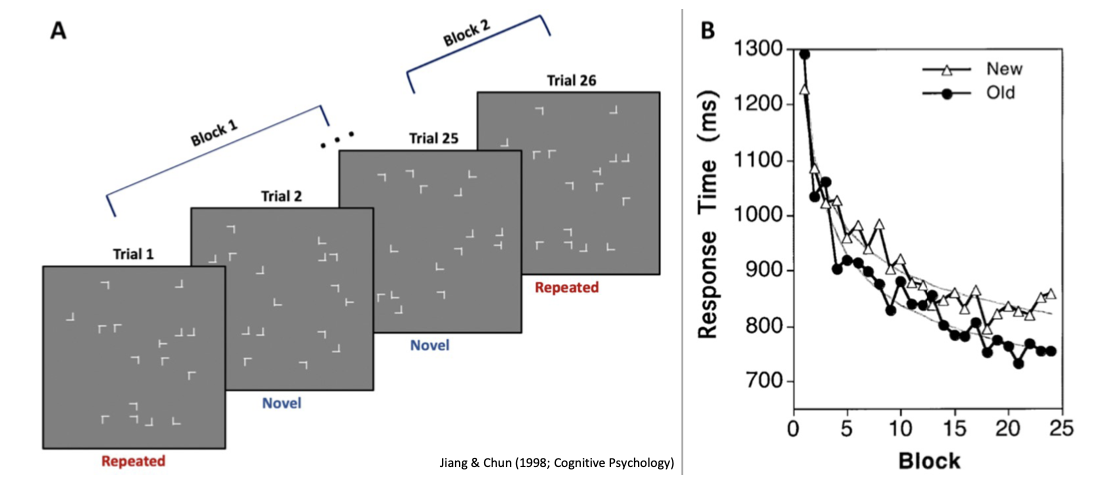

Repetition effects: Contextual cueing

Task: Find the T and indicate its rotation

Conditions: some displays are repeated in the next block

Result: Repetition leads to faster detection

Even though people are unaware of the repetition! → Implicit context effect

Does not occur for amnesics with hippocampal damage

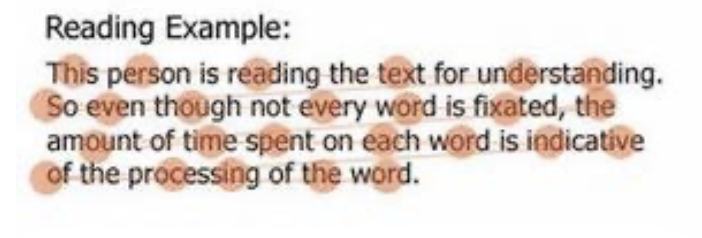

Other context effects: Reading

High-frequency and predictable words are often not fixated

Context effect: Scene knowledge

Scene context strongly guides where we look

When given a task: E.g. look for a pan

But even under free viewing there are strong biases

“Places of interest”

Center bias

Faster recognition with age

Semantic and syntactic violations demand extra attention

So experience influences selection

Repetition effects: Intertrial priming

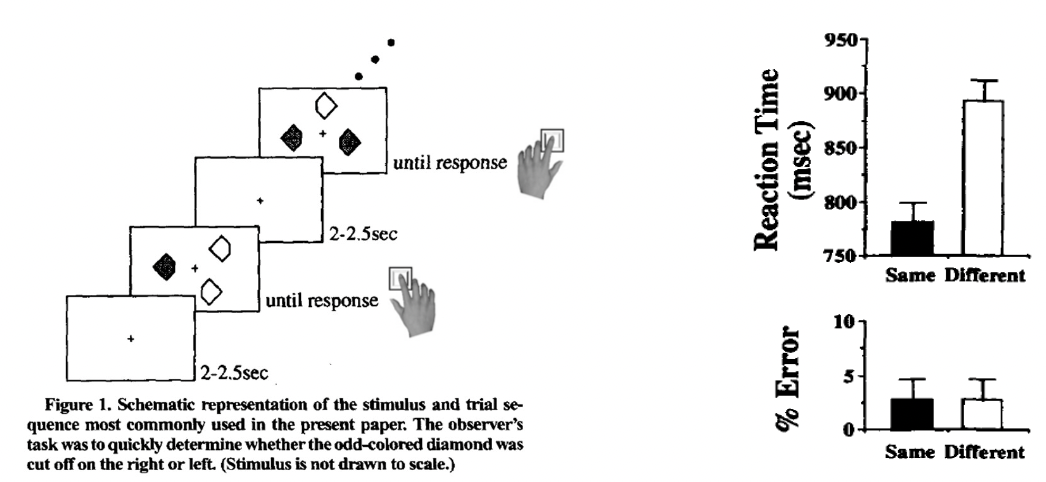

Task: Find the uniquely colored diamond, determine which side is cut off

Conditions: color scheme repeats, or switches from one trial to next

Results: Faster with repetition, but slower if the color scheme continuously flips around

Is this automatic or under voluntary control?

Conclusion: Observers are strongly driven by what they did previously, with little control

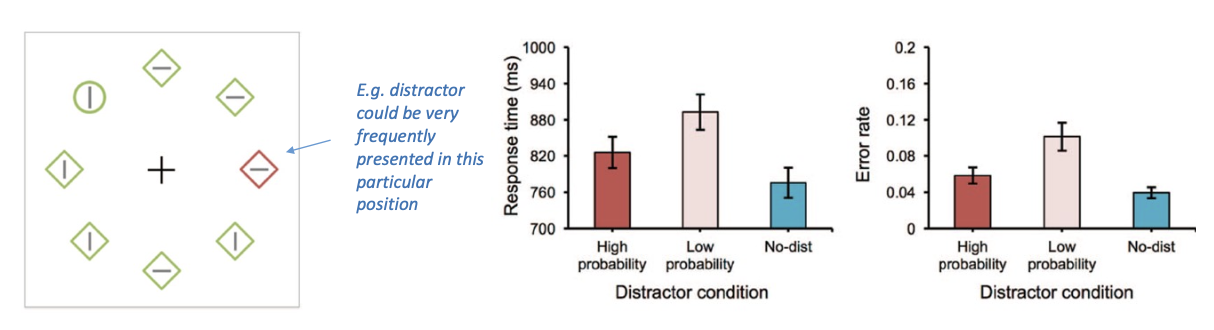

Repetition effects: Distractor suppression

Can you prevent capture?

Same design, stimuli & task as Theeuwes (1992)

Two conditions: Distractor at any position (random, low probability) vs. distractor most frequently at one particular position (high probability)

Results: Frequent distractor location suppressed or avoided

Conclusion: Some control possible with extensive repetition

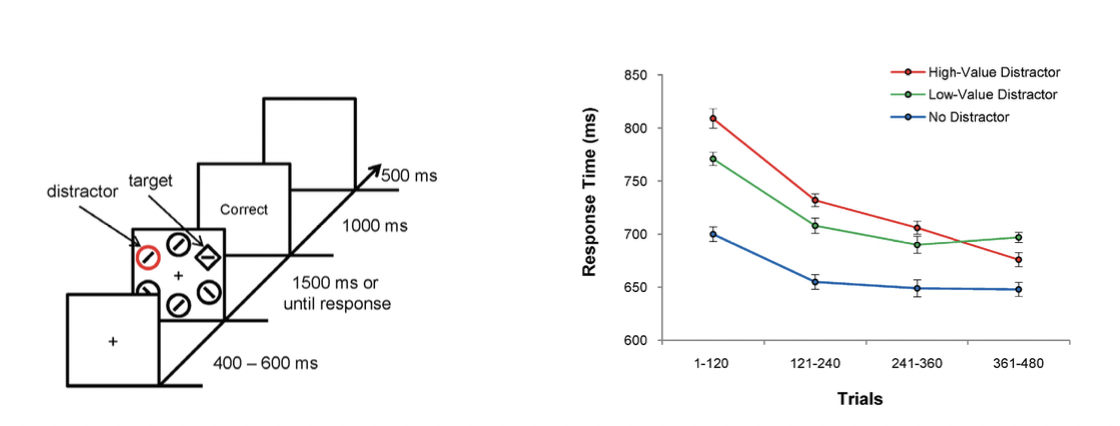

Value-driven attention: Effects of rewards

Two phases: Training phase and test phase

Training phase task: Find red or green target, respond to line inside

Conditions: One target associated with high monetary reward, other with low reward

Test phase task: Find diamond, respond to line inside. Ignore anything else

Conditions: Distractor previously associated with high versus low reward, or no distractor

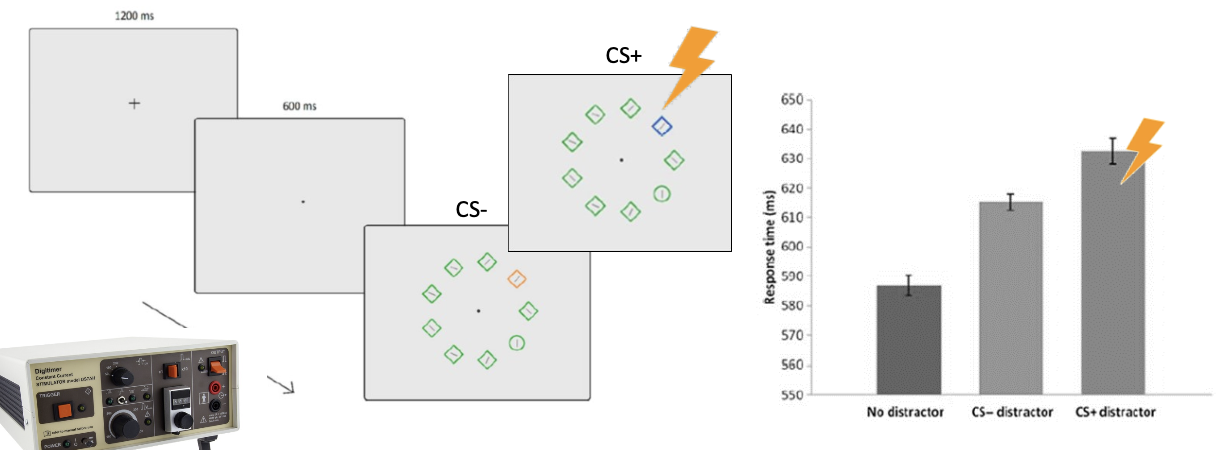

Value-driven attention: Effects of punishment

Task: Find deviant shape, ignore distractors

One distractor associated with electric shock (CS+), the other not (CS-)

The complete priority map

Stimulus-driven, goal-driven, and experience-driven influences interact to create an overall priority map.

Frontal Eye Fields may be one candidate to represent this overall priority map
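The interaction of the three sources can be sketched as a weighted sum over locations. This is an illustrative assumption, not a claim about how the brain combines them; the map values, the equal weights, and the use of a negative history value for a suppressed location are all hypothetical:

```python
def priority_map(salience, relevance, history, weights=(1.0, 1.0, 1.0)):
    """Combine stimulus-, goal-, and experience-driven maps per location."""
    ws, wg, wh = weights
    return [ws * s + wg * g + wh * h
            for s, g, h in zip(salience, relevance, history)]

salience  = [0.9, 0.2, 0.2, 0.2]   # location 0 holds a salient distractor
relevance = [0.0, 0.0, 1.0, 0.0]   # location 2 matches the current goal
history   = [-0.5, 0.0, 0.0, 0.0]  # location 0 often held distractors before

pm = priority_map(salience, relevance, history)
selected = max(range(len(pm)), key=pm.__getitem__)
print("attend location", selected)

# With salience alone, the distractor location would win instead.
bottom_up_only = priority_map(salience, [0.0] * 4, [0.0] * 4)
captured = max(range(4), key=bottom_up_only.__getitem__)
print("salience only: attend location", captured)
```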