Current cog Lecture 5


Attention

Selection of the sensory input

Unattended → unaware, inattentional blindness

Looking is not attending

Conversely: Attention improves perception

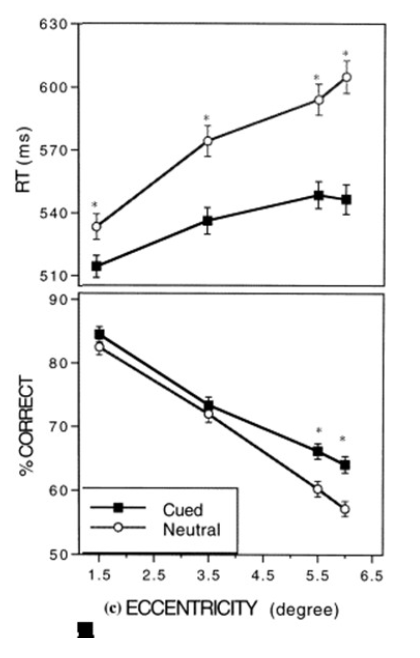

Example from spatial cueing paradigm

Fixate on middle dot. A cue then draws attention to a location.

Task: discriminate the target (appearing at cued or non-cued location)

Primary measures: RT (ms) and accuracy (% correct)

Conclusion: Responses were faster (lower RT) and more accurate for targets at the cued location

Reason for attention

Inattentional blindness: shows we cannot process everything up to the same level of awareness

Brain has insufficient capacity or mental resource to process all sensory information

Must make a selection (hence often referred to as selective attention)

Information processing has a bottleneck: From parallel to serial

Taxonomy I: Targets of attention

Attention comes with a sense of direction. To what?

Internal attention: Own thoughts, memories, and action plans (intentions)

External attention: Sensory input

Different modalities

Space

Time

Features

Objects

Taxonomy II: Sources of attention

What is then directing attention?

Stimulus-driven attention: Attention drawn to salient stimulus

“Salience-driven”; “Bottom-up”; “Exogenous”; “Involuntary”; “Automatic”; “Feedforward-based”

Goal-driven attention: Attention initiated by current behavioural requirements

“Top-down”; “Endogenous”; “Voluntary”; “Controlled”; “Feedback-based”

Experience-driven attention: Attention driven by learned context or value

“Selection history”; “Reward”; “Priming”; “Automatic”

Example from the lab: Spatial cueing

Task: Search for a target letter (e.g. “R” or “B”), press button accordingly

Stimulus-driven cue (peripheral onset)

Goal-driven cue (central arrow): may tell you where the target is

Cue is either valid (target appears at indicated position) or invalid (target appears at other position)

Results:

Stimulus-driven is automatic

Goal-driven is controlled
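
The valid/invalid comparison above is usually summarised as a validity (cueing) effect: mean RT on invalid trials minus mean RT on valid trials. The sketch below is only an illustration; the trial format, the function name `validity_effect`, and all numbers are made up and are not data from the lecture.

```python
from statistics import mean

def validity_effect(trials):
    """Mean RT on invalid trials minus mean RT on valid trials (correct trials only)."""
    valid = [t["rt_ms"] for t in trials if t["valid"] and t["correct"]]
    invalid = [t["rt_ms"] for t in trials if not t["valid"] and t["correct"]]
    return mean(invalid) - mean(valid)

# Hypothetical trials, for illustration only.
trials = [
    {"valid": True, "rt_ms": 420, "correct": True},
    {"valid": True, "rt_ms": 440, "correct": True},
    {"valid": False, "rt_ms": 480, "correct": True},
    {"valid": False, "rt_ms": 500, "correct": True},
    {"valid": False, "rt_ms": 900, "correct": False},  # error trial, excluded from RT
]

# A positive value means responses were slower after invalid cues.
print(validity_effect(trials))
```

A positive effect indicates that attention was at the cued location when the target appeared.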

Other taxonomies

Selective attention versus divided attention

Transient attention versus sustained attention

Attention in the real world

What are the characteristics here that attract attention?

Attention is “caught”: Attentional capture

Attention driven by some feature contrast

Attention driven to a location

In the lab: Color saliency

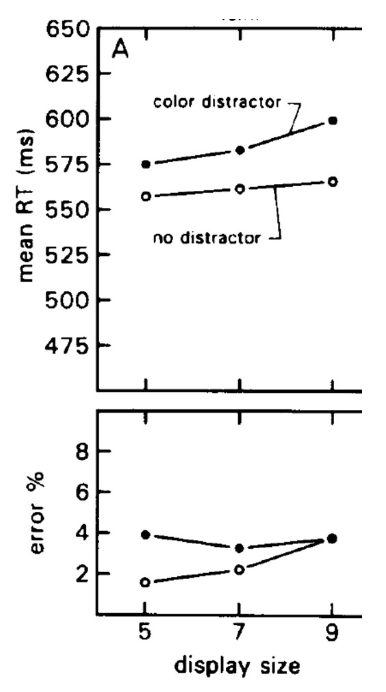

Classic study from Theeuwes (1992)

Task: Look for the deviant shape and ignore the deviant color; report the orientation of the little line segment inside it (horizontal/vertical) as fast as you can

Primary measure: Reaction time (RT)

Secondary measure: Response accuracy (% error)

Two important conditions: No salient distractor vs. Salient distractor

Conclusion: Salient distractor interferes, so must have captured attention

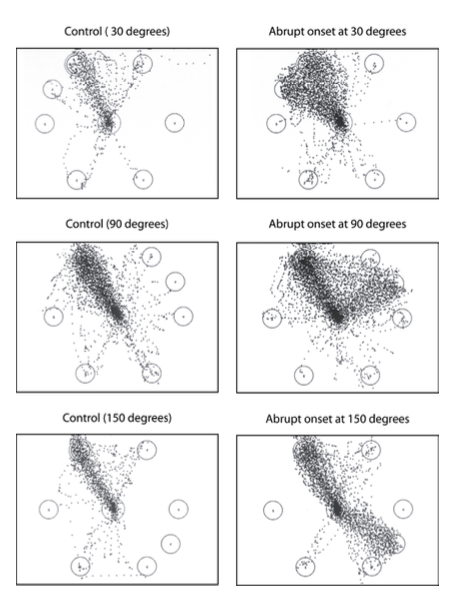

Follow-up study looking at eye movements (Theeuwes et al., 2003)

Same design, stimuli & task

Primary measures: First saccade direction, fixation duration

Conclusion: The salient distractor captures not only attention but also, briefly, the eyes

In the lab: EEG

Some event-related potentials (ERPs) in the EEG signal reflect attention

Most prominent is the N2pc

Posterior, contralateral, negative component, lateralized (left or right), around 250 ms after stimulus onset

There also exists a positive potential, reflecting suppression, called the Pd

Applying this EEG technique to saliency (Hickey et al., 2006; JOCN)

Same design, stimuli & task as Theeuwes (1992)

Primary measure: Attention-related contralateral negative component, called N2pc

Results: N2pc contralateral to target, but also contralateral to salient distractor

Conclusion: distractor attracts attention
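
Because the N2pc is a contralateral negativity, it is commonly quantified as a difference wave: the signal contralateral to the item of interest minus the ipsilateral signal, averaged over a window around 250 ms. The following is a minimal sketch under that assumption; the function names, the 200 to 300 ms window, and the amplitudes (in microvolts) are hypothetical.

```python
def difference_wave(contra, ipsi):
    """Contralateral minus ipsilateral amplitude at each time point."""
    return [c - i for c, i in zip(contra, ipsi)]

def mean_in_window(wave, times_ms, start_ms, end_ms):
    """Average amplitude of a wave within [start_ms, end_ms]."""
    values = [v for v, t in zip(wave, times_ms) if start_ms <= t <= end_ms]
    return sum(values) / len(values)

# Hypothetical posterior-electrode amplitudes, for illustration only.
times_ms = [150, 200, 250, 300, 350]
contra = [1.0, 0.0, -1.5, -0.5, 0.5]
ipsi = [1.0, 0.5, 0.0, 0.0, 0.5]

wave = difference_wave(contra, ipsi)
n2pc = mean_in_window(wave, times_ms, 200, 300)
print(wave, n2pc)  # a negative mean is what an N2pc looks like
```

Running the same computation with "contralateral" defined relative to the distractor instead of the target is how a distractor-related N2pc would show up.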

In the lab: Abrupt onset saliency

Grey items change to red, except one. Letters appear inside.

Task: Make an eye movement to the grey object and report its letter (C or reverse C); ignore the abrupt onset of a new object

Primary measures: RT; accuracy of first saccade (% to target)

Result: ~40% of saccades directed towards irrelevant onset

Secondary measures:

Saccadic latency

Saccadic trajectory

Conclusion: Onsets capture attention automatically; Occurs fast & early

In the lab: Orientation saliency

Grid of orientations, of which two items deviate; Vary orientation of background items, so that one of the items is more salient than the other

Task: make an eye movement to left-tilted item, and ignore the right-tilted item

Primary measure: saccadic accuracy (% towards the target, versus % towards salient item)

Two main conditions: target can be the more salient one, or the less salient one (and we also varied eccentricity)

Conclusion: Saliency influences attention early in time

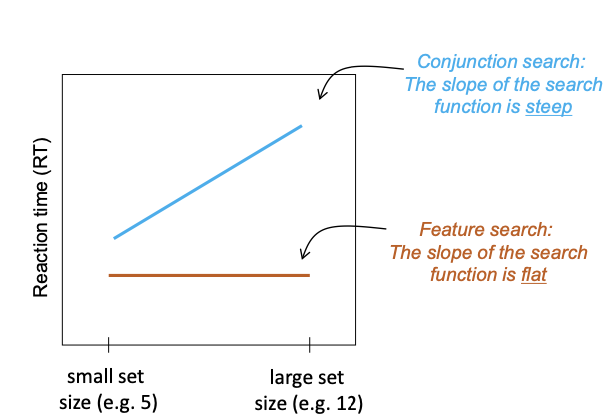

In the lab: Visual search

Task: Find the unique object (target)

Condition 1: Here defined by unique feature: “feature search”

Important: Vary the set size (= number of items in the display; also called display size)

Primary measure: RT as a function of set size, referred to as search slope

Results: Detection does not suffer from additional items, slope is flat

Condition 2: Here defined by combination of features: “conjunction search”

Results: Detection suffers from additional items, slope is steep

Conclusions:

Salient feature contrasts are detected in parallel across the visual field: before the bottleneck

Nonsalient contrasts require serial scanning: bottleneck
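
The search slope is the slope of a straight line fitted to mean RT as a function of set size, in ms per item. Here is a small sketch using ordinary least squares; the function name `search_slope` and the RT values are invented for illustration.

```python
def search_slope(set_sizes, mean_rts):
    """Least-squares slope of mean RT (ms) against set size (items)."""
    n = len(set_sizes)
    mean_x = sum(set_sizes) / n
    mean_y = sum(mean_rts) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(set_sizes, mean_rts))
    sxx = sum((x - mean_x) ** 2 for x in set_sizes)
    return sxy / sxx

# Hypothetical mean RTs, for illustration only.
set_sizes = [4, 8, 16]
feature_rts = [500, 502, 501]       # barely changes with set size
conjunction_rts = [600, 700, 900]   # grows with every added item

print(search_slope(set_sizes, feature_rts))      # close to 0 ms/item (flat)
print(search_slope(set_sizes, conjunction_rts))  # 25 ms/item (steep)
```

A slope near zero is the signature of parallel detection; a clearly positive slope is what serial scanning predicts.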

Control over capture

Can you prevent capture?

Same design, stimuli & task as Theeuwes (1992)

Two conditions: Distractor at any position (random, low probability) vs. distractor most frequently at one particular position (high probability)

Results: Frequent distractor location suppressed or avoided

Conclusion: Some control possible with extensive repetition

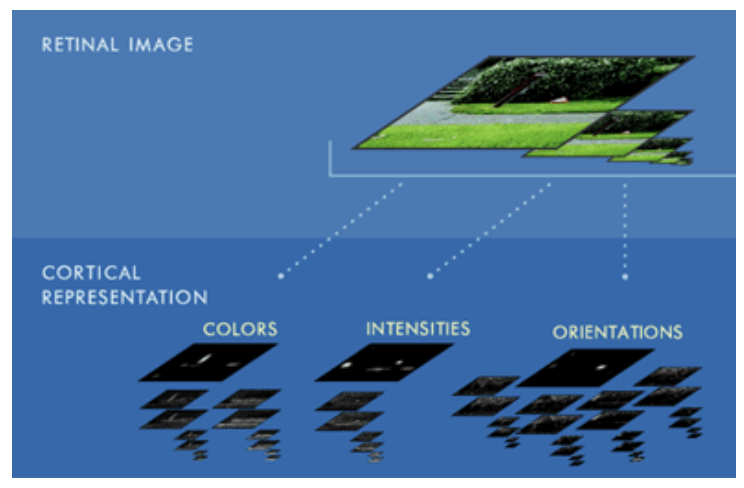

Feature maps

Different areas have different visual functions

Even for basic physical features like intensity, orientation, motion, color

We can represent this schematically in maps like this, called feature maps

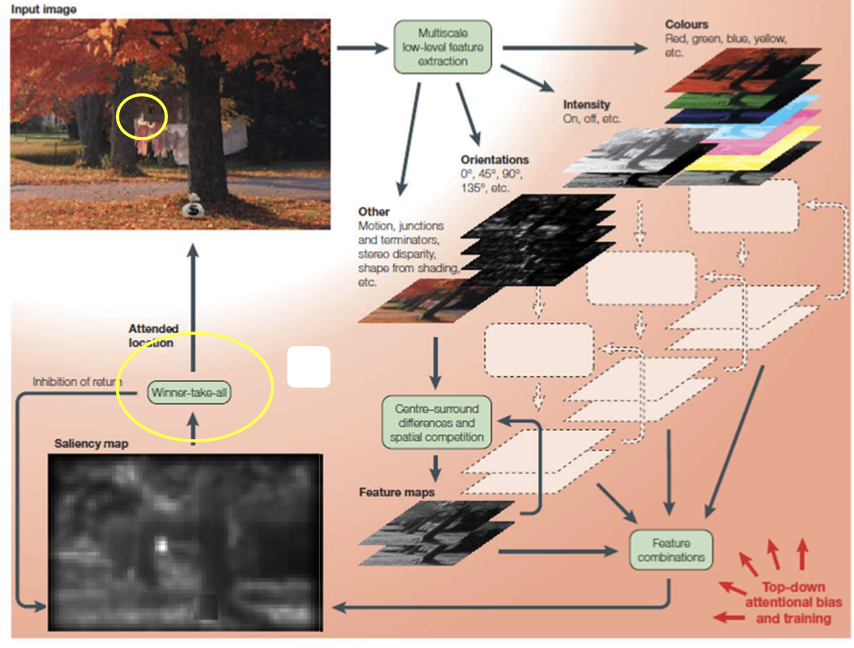

A Saliency Model

Itti & Koch (2000) built a computer model that does the same

How? → use image filters

Feature values are then combined into an overall saliency map

Activity in the saliency map represents the relative saliency of each location

A next step is called winner-take-all, to make the model actually choose the most salient location

It then makes an “eye movement” towards it

Finally, the location is suppressed, and the cycle repeats
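
The cycle described above (combine feature maps, winner-take-all, "eye movement", suppression, repeat) can be sketched in a few lines. This is a toy version, not the published implementation: it assumes the feature maps are already given as small grids (so the image-filtering step is skipped), combines them by a simple average after rescaling, and uses made-up values. All function names are hypothetical.

```python
def normalize(fmap):
    """Rescale one feature map to the range 0..1 so maps are comparable."""
    lo = min(min(row) for row in fmap)
    hi = max(max(row) for row in fmap)
    if hi == lo:
        return [[0.0 for _ in row] for row in fmap]
    return [[(v - lo) / (hi - lo) for v in row] for row in fmap]

def combine(feature_maps):
    """Average the normalized feature maps into one saliency map."""
    normed = [normalize(m) for m in feature_maps]
    rows, cols = len(normed[0]), len(normed[0][0])
    return [[sum(m[r][c] for m in normed) / len(normed) for c in range(cols)]
            for r in range(rows)]

def winner_take_all(saliency):
    """Return the (row, col) of the most salient location."""
    cells = [(r, c) for r in range(len(saliency)) for c in range(len(saliency[0]))]
    return max(cells, key=lambda rc: saliency[rc[0]][rc[1]])

def scanpath(feature_maps, n_fixations):
    """Pick the winner, 'look' at it, suppress it, and repeat."""
    saliency = combine(feature_maps)
    path = []
    for _ in range(n_fixations):
        r, c = winner_take_all(saliency)
        path.append((r, c))
        saliency[r][c] = float("-inf")  # suppression: never pick it again here
    return path

# Hypothetical 2x3 feature maps, for illustration only.
color_map = [[1, 1, 9], [1, 1, 1]]
orientation_map = [[0, 0, 4], [8, 0, 0]]
print(scanpath([color_map, orientation_map], 2))
```

In this example the top-right location wins first because it stands out in both maps; after it is suppressed, the location that stands out only in orientation wins next.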

Where is this saliency map?

Complex network of brain areas thought to be involved

A more ventral system that detects the salient features (what)

A more dorsal system that flags important locations and triggers motor response (where)

fMRI work from Corbetta & Shulman (early 2000s)

A ventral network driving stimulus-driven selection

A dorsal network driving goal-driven selection

But consider overlap

Alternative models of saliency: The Information Theoretic approach

Do not need to transmit what is known, only what is not known

Let’s apply these principles to an image

We can build these principles into a computational model, where salient = maximal information value

And then compare it to human eye movements
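
One way to make "salient = maximal information value" concrete is Shannon self-information: a feature value that is rare in the image has low probability p and therefore high information, -log2(p). The sketch below is a strong simplification, assuming each location carries a single discrete feature label and that probabilities are estimated by counting over the whole image; the function name and the tiny "image" are hypothetical.

```python
from collections import Counter
from math import log2

def self_information_map(image):
    """Information (in bits) of each location's feature value: -log2(p)."""
    counts = Counter(value for row in image for value in row)
    total = sum(counts.values())
    return [[-log2(counts[value] / total) for value in row] for row in image]

# Hypothetical image: seven green items and one red item.
image = [
    ["g", "g", "g", "g"],
    ["g", "r", "g", "g"],
]

info = self_information_map(image)
print(info[1][1])  # the rare red item: 3.0 bits
print(info[0][0])  # a common green item: well below 1 bit
```

The rare item carries the most information, so this kind of model marks it as the most salient location.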

Alternative models of saliency: The Bayesian Surprise approach

Yes, we can predict part of an image from another image part (Bruce & Tsotsos, 2009)

Better is to predict from the observer’s expectations

Example: Watching TV

From a Shannon information perspective, white snow should continually attract attention, since it is maximally unpredictable

Bayesian approach: Update our observer model from “I’m watching CNN” to “I’m watching snow”

After that the snow loses its surprise value

Under this model: salient = largest update of our beliefs

Results: Predicts eye movements even better
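
The TV example can be played through numerically. A common way to formalise "largest update of our beliefs" is the Kullback-Leibler divergence between the observer's beliefs after and before each observation; that choice, the two hypotheses, and every probability below are assumptions made for illustration.

```python
from math import log2

def bayes_update(prior, likelihood):
    """Posterior over hypotheses after one observation (Bayes' rule)."""
    unnormalized = {h: prior[h] * likelihood[h] for h in prior}
    total = sum(unnormalized.values())
    return {h: p / total for h, p in unnormalized.items()}

def surprise(prior, posterior):
    """KL divergence (bits) from prior to posterior: size of the belief update."""
    return sum(q * log2(q / prior[h]) for h, q in posterior.items() if q > 0)

def surprise_over_time(prior, likelihood, n_frames):
    """Surprise produced by each of n_frames identical observations."""
    belief = dict(prior)
    values = []
    for _ in range(n_frames):
        posterior = bayes_update(belief, likelihood)
        values.append(surprise(belief, posterior))
        belief = posterior  # today's posterior is tomorrow's prior
    return values

# Hypothetical numbers: the observer starts out almost sure it is watching CNN.
prior = {"CNN": 0.99, "snow": 0.01}
# Assumed probability of seeing a noisy frame under each hypothesis.
likelihood = {"CNN": 0.01, "snow": 0.9}

values = surprise_over_time(prior, likelihood, 4)
print(values)  # largest for the first snowy frame, then shrinking
```

The first snowy frame forces a large belief update; once the observer believes "I'm watching snow", further snow changes almost nothing and its surprise drops towards zero.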