PSYC 365 Midterm 2


(guest lecture) STUDY 1: Identifying patterns of cognitive-affective processing in bipolar and unipolar depression.

AIM

investigate if and how reward sensitivity, facial emotion judgement, and self-referential processing differ between acutely depressed people with MDD and BSD, as well as healthy controls.

HYPOTHESES

Reward sensitivity: MDD < BSD < control

Facial emotion recognition: MDD better than BSD

self-referential processing: MDD and BSD will perform equally, but better than the control

aka, recall more negative than positive traits compared to control group

CONCLUSION

nature of depressive episodes differs in BSD and MDD

more anticipatory reward sensitivity and positive self-referential encoding in BSD than MDD (BSD > MDD)

(guest lecture) STUDY 1: methods

3 groups: MDD, BSD, control

MDD and BSD: primary DSM-5 diagnosis based on MINI

control: no past or present psychiatric disorder

all participants required to complete the MINI (assesses the 17 most common mental health disorders), and 2 additional clinical assessments (MADRS, YMRS)

tasks: 3 behavioural tasks

monetary incentive delay task

facial emotion labelling task

self-referential encoding and memory task

STUDY 1: monetary incentive delay task

participants play towards a real draw and real money

participants have to press space bar as quickly as possible after seeing a smiley face; if respond quickly enough, will get tickets

participants asked how excited they felt after being told how many tickets they were playing for (eg, if press space bar quickly, you will get 10 tickets)

measuring anticipatory reward sensitivity

STUDY 1: reward sensitivity

ability to detect and derive pleasure

anticipatory reward sensitivity

consummatory reward sensitivity

patterns of differential neural activation in MDD vs BSD

but, don’t know precisely how they differentiate

STUDY 1: facial emotion judgement

ability to detect and differentiate different facial emotion expressions

people who have MDD are better than people with BSD at identifying facial expressions

BSD requires more intense expressions (less ambiguity) for accurate identification

STUDY 1: self-referential processing

types of cognitions we have about ourselves

eg, positive, negative, memory biases

MDD and BSD: associate themselves with negative instead of positive traits

but no direct comparison between conditions

overall limitation: few direct comparisons between aspects of cognitive-affective processing in people with MDD vs BSD

studies and comparisons have been conducted with people who are not acutely depressed

euthymic phase: not actively experiencing depressive symptoms

results

individuals with MDD had significantly lower anticipatory reward sensitivity than BSD and control groups

BSD no different from controls in anticipatory sensitivity (MDD < BSD = control)

STUDY 1: facial emotion labelling task

classifying if a face is happy or sad

shift point: where on continuum someone shifted from identifying face as happy or sad

measuring if they shifted too early, late, or accurately in the middle

slope: rate at which response shifted

results

no significant difference between all 3 groups

MDD = BSD = control
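
A minimal sketch of how a shift point and slope could be read off labelling data, assuming a logistic response curve over a happy-to-sad morph continuum. The morph levels, the response proportions, and the function names are hypothetical; the notes do not say how the study estimated these two parameters.

```python
import math

def logistic(x, shift, slope):
    """Probability of labelling a face 'sad' at morph level x (0 = happy, 1 = sad)."""
    return 1.0 / (1.0 + math.exp(-slope * (x - shift)))

def fit_shift_and_slope(levels, p_sad):
    """Least-squares line through the logit-transformed proportions.

    logit(p) = slope * (x - shift), so the gradient of the fitted line is
    the slope and its zero crossing is the shift point.
    """
    logits = [math.log(p / (1.0 - p)) for p in p_sad]
    n = len(levels)
    mean_x = sum(levels) / n
    mean_y = sum(logits) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(levels, logits))
    sxx = sum((x - mean_x) ** 2 for x in levels)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    shift = -intercept / slope
    return shift, slope

# Hypothetical proportions of "sad" responses, generated from a known curve.
levels = [0.1, 0.3, 0.5, 0.7, 0.9]
p_sad = [logistic(x, 0.5, 10.0) for x in levels]
shift, slope = fit_shift_and_slope(levels, p_sad)
```

In this sketch, a shift point below 0.5 would mean the participant starts calling faces sad earlier on the continuum, and a larger slope would mean a more abrupt switch between labels.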

STUDY 1: self-referential processing task

shown a series of words, either positive or negative

positive: honest, responsible, exciting, etc.

negative: unkind, stupid, dumb

participants had to categorize which words they believed most accurately described them

memory task: asked what words they could remember

results

MDD and BSD endorsed a similar number of negative words

MDD endorsed significantly fewer positive traits than BSD

BSD endorsed significantly fewer positive traits than control

MDD and BSD did not differ significantly for number of negative traits or negative self-referential memory bias

negative traits: MDD = BSD > control

positive traits: MDD < BSD < control

(guest lecture) STUDY 2: defining cognitive-affective processing subgroups in MDD and BSD

PRIOR KNOWLEDGE:

differences in MDD vs BSD cognitive-affective processing among currently depressed individuals

people with BSD ascribed more positive traits to themselves compared to those with MDD and controls

greater anticipatory reward sensitivity for BSD compared to MDD

heterogeneity

how well does this map onto diagnostic status

prev. research has explored clusters of cognitive-affective processing across the mood spectrum, including participants who are not acutely depressed

among those studies, they looked primarily at facial recognition tasks

difficult to quantify heterogeneity when just looking at group

AIM:

identify data-driven subgroups based on cognitive-affective processing amongst acutely depressed individuals with MDD and BSD

STUDY 2: methods

3 groups

MDD

BSD

control group

2 tasks

monetary incentive delay task

self-referential encoding and memory task

analyses

k-means clustering: identify clusters/sub-groups

one-way ANOVA: assess cluster differences in task performance as well as clinical (MADRS score) and demographic variables

chi-square: assess proportion of diagnoses across clusters

STUDY 2 results: identifying clusters results

identifying clusters/sub-groups (k-means clustering)

CLUSTER 1

lower reward anticipation

higher negative encoding

lower positive encoding

more negative memory bias

CLUSTER 2

higher reward anticipation

lower negative encoding

higher positive encoding

less negative memory bias
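
A minimal sketch of k-means clustering with two clusters, to show how subgroups like the ones above can fall out of task scores. The scores are made-up standardized values (reward anticipation, positive encoding, negative encoding), the starting centroids are hand-picked, and the function name is hypothetical; none of this is the study's data or code.

```python
def kmeans(points, centroids, n_iter=20):
    """Lloyd's algorithm: assign each point to its nearest centroid,
    then move each centroid to the mean of its assigned points."""
    labels = []
    for _ in range(n_iter):
        clusters = [[] for _ in centroids]
        labels = []
        for p in points:
            dists = [sum((a - b) ** 2 for a, b in zip(p, c)) for c in centroids]
            idx = dists.index(min(dists))
            labels.append(idx)
            clusters[idx].append(p)
        new_centroids = [
            tuple(sum(dim) / len(cl) for dim in zip(*cl)) if cl else c
            for cl, c in zip(clusters, centroids)
        ]
        if new_centroids == centroids:  # assignments have stabilised
            break
        centroids = new_centroids
    return labels, centroids

# Hypothetical z-scores: (reward anticipation, positive encoding, negative encoding)
scores = [
    (-1.0, -0.8, 0.9), (-0.7, -1.1, 1.2), (-1.2, -0.6, 0.7),
    (0.9, 1.0, -0.8), (1.1, 0.7, -1.0), (0.8, 1.2, -0.9),
]
labels, centroids = kmeans(scores, centroids=[scores[0], scores[3]])
```

Here the first three participants land in one cluster (low reward anticipation, low positive encoding, high negative encoding) and the last three in the other, mirroring the CLUSTER 1 / CLUSTER 2 profiles only because the toy data were built that way.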

STUDY 2 results: assessing cluster differences

testing significance of the differences between CLUSTER 1 and CLUSTER 2 (one-way ANOVA)

Group 1: negative low-rewarders (NLR)

lower anticipatory reward sensitivity and lower positive self-referential encoding

higher negative self-referential encoding and negative memory bias

Group 2: positive high-rewarders (PHR)

MADRS score amongst patients did not differ significantly between the two groups
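
A minimal sketch of the F statistic behind a one-way ANOVA, which compares variance between clusters to variance within clusters. The scores are invented for illustration and the function name is hypothetical. Getting a p-value additionally requires the F distribution (for example via `scipy.stats.f_oneway`), which this stdlib-only sketch leaves out.

```python
def one_way_anova_f(*groups):
    """Return (F, df_between, df_within) for two or more groups of scores."""
    n_total = sum(len(g) for g in groups)
    grand_mean = sum(sum(g) for g in groups) / n_total
    means = [sum(g) / len(g) for g in groups]
    # Between-group sum of squares: how far each group mean is from the grand mean.
    ss_between = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, means))
    # Within-group sum of squares: spread of scores around their own group mean.
    ss_within = sum(sum((x - m) ** 2 for x in g) for g, m in zip(groups, means))
    df_between = len(groups) - 1
    df_within = n_total - len(groups)
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within

# Hypothetical task scores for two clusters.
cluster_1 = [2.0, 3.0, 4.0]
cluster_2 = [6.0, 7.0, 8.0]
f_stat, df_b, df_w = one_way_anova_f(cluster_1, cluster_2)
```

A large F means the cluster means differ by more than the scatter inside each cluster would suggest.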

STUDY 2 results: assessing proportions results

more MDD patients were NLRs

no significant difference in BSD patients who were NLRs or PHRs

more HC participants were PHRs
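
A minimal sketch of the chi-square statistic for a diagnosis-by-cluster table. The counts are invented to echo the qualitative pattern above and are not the study's numbers; the function name is hypothetical. In practice a library routine such as `scipy.stats.chi2_contingency` would also return the p-value.

```python
def chi_square_statistic(table):
    """Return (chi-square statistic, degrees of freedom) for a contingency table."""
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    total = sum(row_totals)
    stat = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            # Expected count if diagnosis and cluster were independent.
            expected = row_totals[i] * col_totals[j] / total
            stat += (observed - expected) ** 2 / expected
    dof = (len(table) - 1) * (len(table[0]) - 1)
    return stat, dof

# Hypothetical counts; rows = MDD, BSD, HC; columns = NLR, PHR.
counts = [
    [30, 10],
    [20, 20],
    [10, 30],
]
chi2, dof = chi_square_statistic(counts)
```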

STUDY 2: conclusions and implications

NLR and PHR represent distinct cognitive-affective processing subgroups

there is heterogeneity in cognitive-affective processes across the mood spectrum

some patients (MDD, BSD) cluster together with most healthy controls

personalized treatment approaches are important

(guest lecture) STUDY 3: PPCS

PPCS: persistent post-concussion symptoms

more than 30 million people sustain a concussion each year

minority (18-31%) have persistent symptoms (PPCS) which last months or even years

depression, irritability, confusion, etc.

PPCS has a significant impact on overall wellbeing and quality of life

why some people develop PPCS (and others don’t):

injury-related characteristics

GCS score

duration of PTA

significant imaging findings

psychosocial factors

pre injury mental health concerns

anxiety sensitivity

low social support

(study 3) fear-avoidance model of concussions

historically, people were prescribed “dark room” treatment after having a concussion

staying in a dark room until concussion symptoms go away

post-concussion symptoms: light sensitivity

catastrophizing: think light sensitivity is an indication brain is irreparably damaged (rather than understanding it as a symptom of a concussion)

fear-avoidance behaviour: engaging in behaviours to reduce chance of experiencing these symptoms (eg, staying in a dark room)

disuse, deconditioning, depressive symptoms: does not engage in typical activities (eg, using light in room)

feeds into experiencing more concussive symptoms

because person has been in the dark for so long, will experience heightened light sensitivity when they emerge: feeds into cycle


(STUDY 3) role of attentional bias

attentional bias could be a cognitive process which underlies the maladaptive thought patterns and behaviours in the fear-avoidance model

in the fear-avoidance model of chronic pain, associations between fear-avoidance model constructs and attentional biases have been identified

no studies have yet to explore the connection between attentional biases and fear avoidance model constructs in PPCS

observed relationship between attentional bias to threat and:

post-concussion like symptoms

fear-avoidance behaviours

symptom severity

pain catastrophizing

catastrophizing could also affect attentional biases to threat

attentional biases

facilitation (attentional orienting)

how our attention is captured by various stimuli

we are wired to pay more attention to and respond quicker to certain things (eg, we’ll notice a bear before a ladybug)

attentional avoidance

attention allocated elsewhere (sky instead of bear)

disengaging attention

more difficult to disengage attention from threatening symptoms

PPCS may orient attention to post-concussive like symptoms

measuring attentional biases

experimental tasks

poor reliability

emotional stroop task

dot probe tasks

spatial cueing tasks

visual search tasks

used in study

gaze/eye tracking tasks

attentional blink tasks

(guest lecture) STUDY 3a: investigating attentional biases and the fear-avoidance model in adults with persistent post-concussion symptoms

AIMS:

establish whether attentional biases exist in PPCS

a) using attentional blink tasks, investigate if attentional biases (difficulty disengaging from pain-related stimuli) exist in individuals with PPCS

b) using movement eye-tracking tasks, investigate if attentional biases (preferential looking towards symptom-relevant stimuli) exist in individuals with PPCS

HYPOTHESES

participants with PPCS will have greater difficulty disengaging attention from pain faces than from neutral faces, compared to participants who have recovered from their concussion

participants with PPCS will demonstrate longer dwell time on symptom relevant images (images expressing general and illness threats) compared to those who have recovered from their concussion

(guest lecture) STUDY 3b: investigating attentional biases and the fear-avoidance model in adults with persistent post-concussion symptoms

AIMS:

describe correlations between attentional biases and fear-avoidance model constructs (eg, fear-avoidance behaviour, pain catastrophizing, increased post-concussion symptoms)

a) describe correlations between attentional biases as measured on the attentional blink task (difficulty disengaging from pain-related stimuli) and fear-avoidance model constructs

b) describe correlations between attentional biases as measured on the gaze-time tasks (preferential looking towards symptom relevant stimuli) and fear-avoidance model constructs

HYPOTHESES:

Participants who demonstrate greater attentional biases in difficulty disengaging attention from pain-related stimuli will also report greater severity of fear-avoidance model constructs

Participants who spend more time fixating attention on symptom-relevant stimuli will also report greater severity of the fear-avoidance model constructs

STUDY 3(a+b): methods

2 groups - recruited through SFU research pool

persistent post-concussion symptoms group (PPCS)

2 or more symptoms with moderate severity or higher

recovered group

1 or fewer symptoms with moderate severity or higher

sustained self-reported concussion at least one month prior

methods

questionnaires (step 1)

attentional bias experimental tasks

attentional blink task

dwell/gaze-time task
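
The grouping rule above (2 or more symptoms at moderate severity or higher for PPCS, 1 or fewer for recovered) can be sketched as a small function. The numeric severity scale and the value standing for "moderate" are assumptions for illustration; the notes do not name the questionnaire or its scoring.

```python
MODERATE = 3  # assumed code for "moderate" on a hypothetical 0-4 severity scale

def classify_participant(symptom_ratings, moderate=MODERATE):
    """Return 'PPCS' if 2+ symptoms are rated moderate or higher, else 'recovered'."""
    n_moderate_or_higher = sum(1 for rating in symptom_ratings if rating >= moderate)
    return "PPCS" if n_moderate_or_higher >= 2 else "recovered"

# Hypothetical ratings, one number per symptom.
group_a = classify_participant([0, 3, 4, 1])  # two symptoms at moderate or above
group_b = classify_participant([0, 3, 1, 2])  # only one symptom at moderate or above
```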

STUDY 3: attentional blink task

participants have to identify 2 targets

Target 1 (T1): pain or neutral face

Target 2 (T2): bird, flower, or furniture

distractors: scrambled images of objects between targets

lag: distance between images (lag by 3 and 7)

results

neutral faces

significant main effect of lag on accuracy of detecting the T2 image when the T1 image was neutral

no differences between the PPCS and recovered group in difficulty disengaging attention from neutral faces

attentional blink phenomenon in both PPCS and recovered group, when seeing neutral faces

no difference in attentional blink between the groups

pain faces

attentional blink phenomenon in both PPCS and recovered group, when seeing pain faces

did not see difference in attentional blink between 2 groups in difficulty disengaging attention from pain faces

PPCS sample did not demonstrate attentional biases toward symptom relevant stimuli

STUDY 3: gaze-time task

simulates eye-tracking tasks with mouse movements

side by side images with overlay

neutral (spool of thread, q-tips)

general threat (forest fire, tornado)

concussion threat (MRI, basketball hitting someone in the face)

shown either

neutral - neutral

general threat - neutral

concussion threat - neutral

measurement: how long spent viewing each image

results

correlations between experimental task performance and fear-avoidance model constructs

weak positive correlation between anxiety and time spent viewing concussion images

no correlations between attentional biases to pain stimuli and fear avoidance model constructs were identified

no significant group x image type interaction

STUDY 3: severity analysis

DID NOT REVEAL that grouping participants by fear avoidance behaviour instead of symptom persistence impacted results

attentional blink task

no significant group x lag interaction for the neutral images

no significant group x lag interaction for the pain images

gaze-time task

no significant group x image type interaction

STUDY 3: why PPCS participants did not demonstrate attentional biases towards symptom relevant stimuli

possible explanations

attentional blink task may not be sensitive enough to detect group differences

the concussion images we chose may not be threatening enough

sample consisted of participants removed from injury and did not endorse high levels of FAM constructs

pain faces may not have been fully representative of symptom relevant stimuli in the attentional blink task

may be more complex attention engagement and avoidance processes at play

limitations

experimental tasks and stimuli — no pre-existing concussion threat image stimuli

robustness of emotional variation of attentional blink task

participants characteristics (undergrads, non-treatment seeking, mostly sports-related concussions)

power (significantly underpowered for correlational analyses)

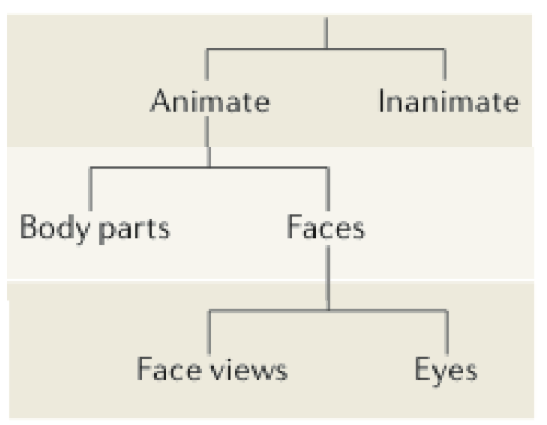

inferotemporal cortex

IT

important for object categorization

sensitive to semantic meaning

object categorization levels – ventral visual stream

superordinate

animate vs inanimate

basic

face vs house

subordinate

categorizing type

type of face (man); type of house (mansion)

exemplar

specific

president; white house

encoding approaches

divisions and hierarchies in the ventral visual cortex (IT)

the larger the category, the more brain area is devoted to it

smaller category, smaller amount of brain area

superordinate

min. 1cm

more abstract

basic

big component parts, ranging from few mm to cm

more concrete

subordinate

complex features

max 1 mm. and distributed

classifying objects

not enough to simply recognize what we see; we also have to make sense of it

this involves classifying things, whether animate or inanimate

ventral temporal cortex and activation

left side activates more to animate

right side activates more to inanimate

categorizing bugs, birds, and mammals

previous research:

functional landmarks for broad categories (e.g., animate vs inanimate)

less known about finer distinctions (different classes of animal)

looked at patterns of voxels (RSA) to find similarity structures at the level of biological class
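
A minimal sketch of the core step in representational similarity analysis (RSA): build a dissimilarity matrix from voxel patterns using 1 minus the Pearson correlation, then check whether same-class stimuli are closer than different-class stimuli. The voxel values, stimulus names, and function names are hypothetical.

```python
import math

def pearson(a, b):
    """Pearson correlation between two equal-length activation patterns."""
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    return cov / math.sqrt(var_a * var_b)

def dissimilarity_matrix(patterns):
    """Map each pair of stimuli to 1 - r (0 = identical pattern, 2 = opposite)."""
    return {
        (a, b): 1.0 - pearson(patterns[a], patterns[b])
        for a in patterns
        for b in patterns
    }

# Hypothetical activation across four voxels for each stimulus.
patterns = {
    "bird_1": [1.0, 2.0, 3.0, 4.0],
    "bird_2": [1.1, 2.1, 2.9, 4.2],
    "bug_1": [4.0, 3.0, 2.0, 1.0],
    "bug_2": [4.2, 2.8, 2.1, 0.9],
}
rdm = dissimilarity_matrix(patterns)
```

If a region's patterns follow biological class, within-class entries such as `rdm[("bird_1", "bird_2")]` should be smaller than between-class entries such as `rdm[("bird_1", "bug_1")]`.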

fMRI experimental design

simple recognition memory task

6 different series of same class animals (3 birds, 3 bugs)

shown class of animal and asked if it was similar to what they were shown

control measure to ensure participants were paying attention

had to match which stimuli were similar

RSA results

ventral stream (LOC & IT)

activation mapped onto biological class structure

stimuli represented in a way that matched behavioural similarity ratings

conclusion

human neuroimaging reveals a hierarchical category structure that mirrors biological class structure in the ventral visual stream

lateral-medial organization

using encoding to assess brain mapping in the LOC

question: how does abstract representation of the continuum from bug to primate map onto brain space? is there a seamless transition?

findings: brain map for category differences between primate vs bugs looks similar to brain region classification of animate vs inanimate

lateral-medial organization

animal categories are represented in the LOC, ranging from medial to lateral

this is one of many dimensions of representing objects

using behavioural judgements as a target, researchers found semantic structures are reflected strongly throughout the LOC

learned from fMRI – encoding approach

responses to object parts, and then whole objects, as we move along the occipital cortex, from the EV to the LOC

more invariant processing and more category specificity as we move along the ventral stream

learned from fMRI – decoding approach

shows continuum of finer-grained categories

in the ventral stream: matches semantic judgements of animal class

in LOC: organized along a spectrum of inanimate (not like me) to very animate (like me)

perception

the brain’s best guess of what you’re hearing changes what you hear

but the stimulus itself does not change

eg, videos where at first you hear one sentence, but then upon being told the actual phrase, you hear it differently the second time

hallucinations can be thought of as uncontrolled perception

consciousness

less to do with pure intelligence, more to do with our experience as living and breathing

problems with perception

one cause, many signals (S1, S2, S3)

many causes, one signal (S1, S1*, S1**)

bottom-up processing of perception

feedforward feature based model (V1 → V2 → LOC → IT)

signal or information gets passed from one node to the next

“bottom up” processing involves mostly feed-forward processes as information gets passed from the V1 forward

populations of neurons respond to features of an object at an increasingly large scale and higher levels of abstraction

BUT

detected patterns of lower-level features can be interpreted in multiple different ways

need further information to decide between hypotheses of perception: why choose one over the other, when both account for lower-level features

thus: brain needs additional top-down information (information the brain generates and applies to the world — one’s expectation of what they will see)

von Helmholtz and predictive coding models

the brain is seen as a prediction machine

perception is just unconscious inference

we infer the cause of a sensation via its effects on us

inference: idea or conclusion drawn from evidence or reasoning

an educated guess

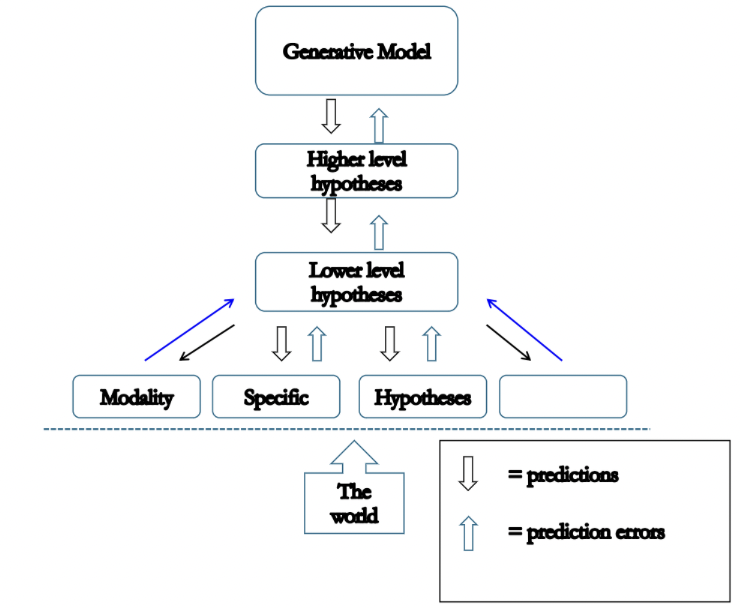

predictive coding model

generative model: our general understanding of the world around us (eg, there are no bears on campus)

higher level hypotheses: there are no bears on campus, so that brown blob must be a tree stump

lower level hypotheses: if we accept the hypothesis the blob is a bear, we would expect movement and sound/if the blob is a stump, there will be no movement

interrogating information presented by the higher level hypotheses

modality specific hypotheses: if we expect to see movement, our cortices responsible for registering movement will become more sensitive

if we expect to see face and eyes, the part of the visual cortex sensitive to eyes will be activated

prediction errors feed back to lower-level hypotheses

errors from lower-level hypotheses will feed back to higher-level hypotheses

WHAT WE ARE SEEING INFORMS OUR GUESS AT WHAT WE ARE PERCEIVING

E.G., if we expect to see a tree stump but then see movement, our higher-level hypothesis will change so we believe we are seeing a bear

*all information feeds back to generative models: if we were wrong and it was a bear, not a stump, we will know in future encounters what to look for with bears, and be able to make more accurate predictions*

winning hypothesis will match contents of perception
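
The bear-versus-stump example can be sketched as a single Bayesian update, one common way to formalize "unconscious inference". This is a simplification: it has no hierarchy or separate error units, and every probability below is an invented illustration value.

```python
def update_beliefs(prior, likelihood):
    """Bayes' rule: posterior is proportional to prior times likelihood of the evidence."""
    unnormalised = {h: prior[h] * likelihood[h] for h in prior}
    total = sum(unnormalised.values())
    return {h: value / total for h, value in unnormalised.items()}

# Generative model (assumed numbers): bears are very rare on campus.
prior = {"bear": 0.01, "stump": 0.99}

# Assumed probability of seeing movement under each hypothesis.
likelihood_of_movement = {"bear": 0.9, "stump": 0.001}

# The brown blob moves, which the "stump" hypothesis predicts poorly.
posterior = update_beliefs(prior, likelihood_of_movement)
winning_hypothesis = max(posterior, key=posterior.get)
```

Before the movement, "stump" wins because of the prior; after it, "bear" becomes the winning hypothesis because the evidence is far more likely under that explanation.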

Egner et al.

Big Picture Question

Do predictive coding models explain visual object recognition better than classic hierarchical feature-based models?

Examined by taking advantage of what we know about category selective voxels in the fusiform face area (FFA) and parahippocampal place area (PPA)

Research Question

Does BOLD activity in the FFA reflect responses to expectation and surprise? Or does it only reflect face features?

General hypothesis

Following the predictive coding model, FFA activity will be an “additive function” of expectation and surprise

Alternative hypothesis

there will always be more FFA activation to faces; expectation and surprise will not matter

visual perception: predictive coding

perception is inference

2 processing units at every level of visual hierarchy

representation (“conditional probability” or face expectation)

error (“mismatch between predictions and bottom-up evidence” or face surprise)

visual perception: feature detection

visual neurons just respond to features of an object

eg, FFA neurons respond to face features such as eyes, facial configuration, etc.

inference

a conclusion based on reasoning from the data; we don’t perceive the world directly

instead, we guess and then test our best guess

Egner et al. participants

young college students with normal or corrected to normal vision

N = 16

Egner et al. experimental design

fMRI study

some faces were upright, some were inverted

FFA sensitive to right-side up faces

view either faces or houses

each picture has a coloured border – colour is predictive of the type of accompanying stimulus

green: high face expectation

yellow: medium expectation

blue: low expectation

FFA activates more for faces; PPA activates more for houses

Egner et al. variables

independent variables

target vs non-target (upright vs inverted face)

stimulus probability (% of time for faces vs houses)

stimulus feature (house v face)

dependent variables

reaction time

BOLD response in FFA

BOLD response in PPA

Egner et al. predicted results

A – predictive coding

face expectation: expect higher FFA activation for high face expectation (than medium or low face expectation)

face surprise: higher FFA activation when have low expectation to see face (compared to medium or high expectation)

predictive coding: high FFA activation for faces in all 3 conditions

activation for faces: high > medium > low

FFA activity is additive

B – feature detection

higher FFA activation for face, compared to house, regardless of condition

same degree of activation for all conditions

Egner et al. specific hypotheses

predictive coding hypothesis: FFA responses to face and houses should be most different when face expectation is low

under low expectations, there is a lot more surprise if you see a face. no surprise if you see a house

thus, large activation is strictly due to surprise

feature detection hypothesis: FFA responses to face will always be greater than activation to houses, regardless of the expectation level

FFA just likes the features of faces; doesn’t care about level of expectation

thus, same activation across conditions
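
A toy sketch of the two competing hypotheses, assuming FFA activity under predictive coding is a weighted sum of face expectation and face surprise. The cue probabilities, response values, and function names are assumptions for illustration, and the default 1:2 weighting follows the ratio mentioned in these notes rather than any fitted model.

```python
def predictive_coding_ffa(p_face, stimulus_is_face, w_expectation=1.0, w_surprise=2.0):
    """FFA response = weighted face expectation + weighted face surprise."""
    expectation = p_face
    # Face surprise only arises when a face actually appears; a house produces none.
    surprise = (1.0 - p_face) if stimulus_is_face else 0.0
    return w_expectation * expectation + w_surprise * surprise

def feature_detection_ffa(stimulus_is_face, face_response=1.0, house_response=0.2):
    """FFA response depends only on the stimulus, never on expectation."""
    return face_response if stimulus_is_face else house_response

# Assumed probability of a face under each border colour / expectation level.
cue_probabilities = {"low": 0.25, "medium": 0.50, "high": 0.75}

pc_difference = {
    level: predictive_coding_ffa(p, True) - predictive_coding_ffa(p, False)
    for level, p in cue_probabilities.items()
}
fd_difference = {
    level: feature_detection_ffa(True) - feature_detection_ffa(False)
    for level in cue_probabilities
}
```

In this sketch the face-minus-house difference shrinks as face expectation rises under predictive coding, while it stays flat under feature detection, which is the contrast the two hypotheses turn on.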

Egner et al. results

main effect: faster to identify inverted faces than houses

no difference in reaction time

fMRI results

FFA activity looks most similar to predictive coding model’s hypothesis

greater FFA responses for face than houses in low and medium conditions, but statistically indistinguishable in the high expectation condition

supports prediction of greater differences between faces and houses in low vs high expectation conditions

predictive coding model predicted a 1:2 ratio, where surprise contributed 2x as much as expectation

results do not fit with the feature detection model

additional attentive models

feature + attention

additive: baseline shift model

FFA activation is enhanced or repressed depending on expectation of faces for faces AND houses

multiplicative: contrast-gain model

FFA activation is enhanced or suppressed depending on expectation of faces for faces

Egner et al. reading question

Why did Egner et al. also analyze fMRI data in the PPA, as a test of whether the FFA results were generalized?

to make sure this effect is generalizing to other brain regions

without this finding, we cannot be certain the FFA is unique for coding

results were mirrored: pattern they saw in the FFA (for face expectation and surprise) they also saw in the PPA (for house expectation and surprise)

Egner et al. conclusions

pitted 2 views of how visual object recognition works in the ventral stream

feature detection model

bottom up

neurons respond to features that match preferred stimulus

feedforward flow of sensory information

hierarchical

feature focussed

predictive coding model

representation units code expected features

error units code mismatch between expected signal and actual signal from the world

expectations/prediction

based on memory/experience

pattern of results in the FFA was consistent with a response that added expectation and surprise, and with the predictions of computational models based on predictive coding

conclusion:

predictive coding models describe the process of visual inference better than feature detection models

encoding prediction and error is a general characteristic of how the brain works

Egner et al. critiques

need to know more about relative contributions of prediction and error units

might be an interaction with attention if that was relevant to the task

don’t know how much the BOLD response reflects top-down vs bottom-up inputs

doesn’t take into account both PC and feature detection models, or a model that encompasses both

the brain’s job is to minimize prediction error

predictive coding models

top-down processes

your representation or model of the world

generates prediction at every level of the visual hierarchy

tries to ‘explain away’ sensory signal

bottom-up signal

only prediction error gets passed forward (not actual signal)

propagated upward, based on match between model and sensory information

research on attention

many forms of attention, research is about studying different flavours of attention

selective attention

sustained attention

researchers agree: attention is limited

inattentional blindness

failure to see fairly major changes to a visual scene when you’re attending to something else

eg, gorilla video

asked to count how many basketball passes were made, then miss the gorilla walking through the scene

an example of selective attention

change blindness

failure to see gradual changes when they are not the centre of focal attention

eg, curtain changing colour

important concept for understanding sustained attention

forms of attention

overt vs. covert attention

selective attention

identifying targets

classic model: top-down and bottom-up

attentional sets in top-down attention

dorsal and ventral attention networks (DAN and VAN)

sustained attention

*top-down and bottom-up in attention not the same as in object recognition

general concepts, which can be applied in different ways to different cognitive processes + underlying brain systems

**dorsal and ventral attention systems not the same as dorsal and ventral visual streams

dorsal and ventral refer to directions

overt attention

with the eyes

the eye moves to focus on the object of attention

staring right at someone

gaze is focused and flexible

gaze and focus are aligned

attention is guided by the eyes

covert attention

with the mind

attend to an area of space, but the eye does not move

object of attention is in your peripheral vision

gaze is fixed on a specific point

gaze and focus are not aligned

attention is guided by the mind

selective attention

turning looking into seeing

allows you to filter and focus on relevant stimuli

the ability to discern important background information

can be overt or covert

helps in identifying targets

target importance is based on goal relevance, temporal relevance, and salience factors

why selective attention:

millions of bits of information hit our retina, and only a couple hundred bits reach parts of the ventral visual stream which are involved in high level object recognition

study of selective attention: studying how we filter the visual stream down to seeing what’s important

distinctness of targets is determined by two attentional systems

selective attention cannot be passive

a combination of sensing something and attending to it

factors which determine importance in selective attention

relevance to goals

looking for keys, so only paying attention to things that are shiny, small, moving

grabbiness

hearing sirens and seeing bright flashing lights outside window

pulls attention away from prior search

identifying targets

subsection of selective attention

we often use our attention to identify something we’re looking for

eg, keys on a crowded desk

in lab experiments: use visual experiments to create similar situations

results used to identify different attentional systems involved in selective attention

tasks: identifying an O in a grid of X

identifying the red X among black Xs

identifying the red O among red N and green O and N

selective attention systems

top-down attentional systems

bottom-up attentional systems

top-down attentional systems

controlled

deliberate (do it on purpose)

conscious

goal-directed (task-based: for a specific reason)

involves maintaining an attentional set

maintaining a mental template about what matters

neurons and regions relevant to the mental template then fire up in anticipation

neurons and regions tuned to other distracting information are suppressed

important for deliberately focussing attention on what is relevant

process is conscious and obeys our will

we engage in top-down attention often

requires the use of an attentional set

bottom-up attentional system

automatic – feature based

involuntary, serves as a response

can feel against our will, since may not help us with our current goals

captures attention, contrary to our current goals

eg, flashing lights outside window grab your attention while you’re searching for your keys

feature-based

dependent on low level features: colour, motion, brightness, pitch, loudness

sirens, sudden motions, bright flashes of colour would all catch our attention

!sudden changes!

gradual change doesn’t capture our attention in this way

indicative of evolutionary advantages

captures attention without requiring attentional set

attentional sets

part of top-down attentional system processing –– task based

mental templates that allow us to selectively attend to a certain category of stimulus before it appears

involves mentally holding features or locations of the object you’re expecting

eg, keys

hold a mental template of features of the key (shiny), so that you pay attention to things that match the template and ignore things that don’t

this is an attentional set

MVPA experiments show that representations of targets could be decoded before an image is presented

suggests the brain keeps an attentional set active for the feature of those targets in advance

example of attentional sets

locating Waldo from his striped red and white shirt

mental template used to help identify an object

helps separate and engage with stimuli categories that are relevant to our goals

operates by identifying shared features between the template and stimuli

can consist of locations, features, or associated features

cued by FEF and IPS activation

dorsal attention network (DAN)

top-down processing

includes the frontal eye fields (FEF) and intraparietal sulcus (IPS)

can engage when planning

maps to regions of space or distinct features

attention mostly modulated by VAN and DAN

both VAN and DAN communicate with each other to modulate V1 (primary visual cortex)

ventral attention network (VAN)

bottom-up processing

includes:

VFC: ventral frontal cortex

TPJ: temporoparietal junction

involved in responses

attention mostly modulated by VAN and DAN

both VAN and DAN communicate with each other to modulate V1 (primary visual cortex)

biased competition

a neural mechanism for selective attention

activation of neurons tuned to task-relevant stimuli

used to create the attentional set

target neurons will preemptively fire

competing neurons will experience suppression

helps prime and regulate activity

occurs primarily in the DAN
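
A toy numerical illustration of biased competition, offered only as a sketch: the firing rates, the gain, and the suppression factor are made-up values chosen for illustration, not figures from the lecture, and `bias_competition` is a hypothetical helper name.

```python
# Toy illustration of biased competition (all numbers are hypothetical).
def bias_competition(baseline_rates, target, gain=1.5, suppression=0.5):
    """Boost the unit tuned to the attended target and suppress its competitors."""
    biased = {}
    for feature, rate in baseline_rates.items():
        if feature == target:
            # neurons tuned to the task-relevant feature fire more
            biased[feature] = rate * gain
        else:
            # competing neurons are suppressed
            biased[feature] = rate * suppression
    return biased


# Searching for keys: the attentional set favours "shiny".
baseline = {"shiny": 10.0, "red": 10.0, "moving": 10.0}
biased = bias_competition(baseline, target="shiny")
winner = max(biased, key=biased.get)
```

Before the bias is applied, all three features are equally active; afterwards the "shiny" unit wins the competition, which is the sense in which the attentional set primes the system.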

sustained attention

staying on task, even when boring

used when we are required to attend to low attentional/mundane stimuli for long durations

typically measured with SART (sustained attention to response task)

individual differences associated with ADHD, impulsivity

performance can serve as a marker for ADHD and impulsivity

difficulties with sustained attention

easily distracted by non-relevant stimuli

often forgetful in daily activities

difficulty sustaining attention during activities

difficulty following instructions

failure to complete tasks

less attentive to details and making excessive errors

avoidance of activities that require sustained mental effort

motivation

the impulse to approach or avoid something that’s rewarding or punishing

the urge toward action

emotion

the physiological sensations of emotional arousal and subjective feelings that go with these sensations

the subjective experience

circuits respond to major life-challenging events

basic emotional brain systems are conserved across many different species, particularly in mammals

subcortical circuits respond to an event (a loved one’s death, threat to life)

circuits are centred on the brain stem, amygdala, and basal ganglia

circuits and various cortical regions exchange information to organize behavioural responses

for every reaction, there’s an action

someone dies, you seek social comfort

information exchanged with the fronto-parietal systems

important for higher-order conscious cognition

eg, planning, remembering, ruminating

sensitivities of relevant sensory systems are changed

systems responsible for dealing with the events at hand

main focus: emotional guidance of attention

could apply to auditory attention, etc (not just visual)

visual cortex: DAN influences/modulates activity

emotional circuits do similar things as DAN: tune sensory cortices to what is motivationally or emotionally relevant

the best understood and most reliable circuits are deemed “Grade A Blue-Ribbon” emotional systems

grade A blue-ribbon emotional systems

evolutionarily conserved in a wide variety of animals

positive incentives

social loss

rage

fearing punishment

blue ribbon: positive incentives

food, water, warmth, sex, social contact

desire, hope, and anticipation lead to reward seeking

short term: manifests as immediate desire for a reward

long term: manifests as hope for the future

approach behaviour

blue ribbon: social loss

loneliness, grief, and separation distress lead to panic

also: loss of social reward, social distress (eg, rejected from social group)

withdrawal (withdrawing from the social situation) or approach (sending an apologetic text to the group, motivated by panic) behaviours

blue ribbon: rage

body surface irritation, restraint, and frustration

hate, anger, and indignation lead to rage

approach behaviour (when angry, want to act on the emotion and ‘deal’ with the situation)

blue ribbon: fearing punishment

pain and threat of destruction

anxiety, alarm, and foreboding feelings lead to fear

avoidance behaviour (running or hiding from something)

amygdala (emotion and motivation)

hub for tagging emotional and motivational relevance in the necessary brain systems

amygdala is hooked up to other brain regions

amygdala acts as a hub in other networks

an important connective node

interconnected with many other regions (visual, auditory, sensory)

hooked into other sensory systems

amygdala has a key role in tagging what’s biologically important and guiding attention and memory

biologically relevant things grab our attention because they are relevant to our well-being

amygdala routes information to other nodes in the network

amygdala tells us to pay attention to certain things and act appropriately

amygdala has a key role in solving this problem: what is important, and what is to be done about it?

involved in a wide variety of emotional systems; tags emotionally salient things in our environment and routes information to other brain regions, enhancing our attention, learning, and memory

Inman paper

ultimately Inman tells us about the multifaceted influence of the amygdalae on behaviour

the amygdala influences emotional memory and emotional perception

subjective emotional perception: frontal cortex

autonomic physiology: hypothalamus

mood state: ventral striatum

declarative memory: hippocampus, perirhinal cortex

remembering something that happened to us because it was emotionally relevant

facial emotion recognition: temporal visual cortex

amygdala & emotionally arousing systems

numerous studies have found that amygdala activity and visual cortex activity are greater for emotionally arousing images

amygdala has links to all areas of the ventral visual stream

amygdala sends information to every region of the ventral visual stream, like DAN and VAN

the amygdala is part of top-down visual processes

the amygdala biases our visual cortex in certain ways

biases what we are attentive to

the amygdala is key for tuning attention to what is emotionally relevant in our environment based on experience

attentional bias (amygdala)

when emotionally or motivationally relevant information captures our attention more readily than neutral information

what information is considered relevant can differ between people

eg, depressed people may be biased toward attending to negative things (which reinforces their idea that the world sucks; vicious cycle)

some categories are nearly universally relevant: food, attractive people, dangerous animals (bears, snakes)

amygdala’s role in attentional bias

the amygdala is important in tuning our attention to what’s emotionally relevant

sifts significant from the mundane

emotionally salient

things that pop out because of emotional relevance – stimuli that capture our attention and stand out from their surroundings because of their emotional relevance

eg, universally relevant categories

this is evidence of our emotional biases at play

patient SP

lost amygdala later in life

bilateral amygdala lesions – due to severe epilepsy

one amygdala was surgically removed, the other damaged by seizures

described as funny, likeable, average IQ

no seizures post-surgery, able to hold a job

so no drastic personality changes with the loss of amygdala

however: impaired emotional attention

could not detect emotionally relevant words in the same way we do

amygdala necessary for emotionally-guided attention, but not for feeling emotion

attentional blink

when you fail to see a second target stimulus in a stream of stimuli, when it comes too soon after a first target stimulus

too soon operationalized as within half a second

target stimulus = told to remember it

theories for attentional blink

resources are still occupied with processing the first stimulus

resources don’t become available to process the second stimulus until the first stimulus is fully processed (which takes about half a second)

thus, much harder for the second target to reach awareness; it is as though the mind blinks
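
A minimal sketch of the timing rule above, assuming the blink window is treated as a hard cutoff at roughly 500 ms (in practice it is a gradual effect). The function name and the example onset times are hypothetical.

```python
# Approximate blink window from the notes: "about half a second".
BLINK_WINDOW_MS = 500


def t2_in_blink_window(t1_onset_ms, t2_onset_ms, window_ms=BLINK_WINDOW_MS):
    """Return True if the second target follows the first closely enough
    that an attentional blink would be expected."""
    lag_ms = t2_onset_ms - t1_onset_ms
    if lag_ms <= 0:
        raise ValueError("T2 must appear after T1")
    return lag_ms <= window_ms


early_trial = t2_in_blink_window(t1_onset_ms=0, t2_onset_ms=300)  # T2 soon after T1
late_trial = t2_in_blink_window(t1_onset_ms=0, t2_onset_ms=800)   # T2 well after T1
```

Here `early_trial` is `True` (a blink is expected) and `late_trial` is `False` (resources have been freed up by the time T2 appears).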

emotional sparing

reduced attentional blink when the second target stimulus (T2) is high in emotional arousal

a measure of attentional bias

emotional attentional set guides attention to things that are emotionally relevant

SP emotional attention EXPERIMENT

task: identify the following, which are nestled within a series of words shown for 1/10th of a second each

T1: series of numerals in green

T2: word in green, which is either neutral or emotionally arousing

manipulation:

early: T2 comes within 500 milliseconds of T1

expect participants to experience attentional blink

late: T2 comes after 500 milliseconds

do not expect an attentional blink

results:

control group

early manipulation

accuracy for neutral words: 30%

accuracy for emotional words: 60%

SP

early manipulation

accuracy for neutral AND emotional words: 20%

late manipulation

improved accuracy, but no significant difference between emotional and neutral words

SP cannot detect emotionally relevant words in the same way as controls

visual similarity control

manipulated so targets would stand out to greater or lesser degrees

SP and controls showed attentional blink sparing for words that were visually easier to perceive

confirms that SP does not have generally poor perception

manipulating visual similarity gets at bottom-up attentional processes

ventral attentional network (VAN) is responsible

SP has intact emotional experience:

SP and controls rated emotional state over 30 days

no difference in positive or negative emotional experience

conclusion:

the amygdala influences selective attention for emotional relevance, but not perceptual salience

the amygdala is necessary for emotionally-guided attention, but not for feeling emotion
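
The early-condition results above can be summarized as an emotional sparing score (accuracy for emotional T2 words minus accuracy for neutral T2 words). This is a sketch using only the approximate percentages recorded in these notes; the `emotional_sparing` helper and the difference-score measure are illustrative assumptions, not necessarily the analysis used in the original study.

```python
# Early-condition T2 accuracy (%) as recorded in the notes above.
early_accuracy_pct = {
    "controls": {"neutral": 30, "emotional": 60},
    "SP": {"neutral": 20, "emotional": 20},
}


def emotional_sparing(accuracy):
    """Sparing score: how much better emotional words are detected than neutral ones."""
    return accuracy["emotional"] - accuracy["neutral"]


sparing = {group: emotional_sparing(acc) for group, acc in early_accuracy_pct.items()}
for group, score in sparing.items():
    print(f"{group}: emotional sparing = {score} percentage points")
```

Controls show a 30-point advantage for emotional words, while SP shows none, which is the pattern behind the conclusion that the amygdala is needed for emotionally guided attention.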

attentional blink sparing

attentional blink sparing is shaped by experience

the amygdala is key for emotional sparing

eg: plane crash survivors

more likely to see related words

showed blink sparing for words related to the experience; controls did not

eg: soldiers

soldiers with PTSD more likely to perceive combat related words

less likely to rapidly regulate fear system response

MEG results: in PTSD groups, combat words that were identified caused the visual cortex to fire up for a period of time

fear systems

emotions

anxiety

alarm

foreboding

cognition/behaviour

greater attention

greater memory

freeze, fight, or flight (flee)

fear (definition)

when you face an identifiable threat in the near future

comes and goes

anxiety (definition)

threatening things that could (have the potential to) happen over time

fear system in overdrive

response to threat

we have limited attentional resources and limited physical resources

in the presence of a strong threat, we pull our attentional and physical resources together and use them at full capacity to minimize the probability of bodily destruction

amygdala sends signals to the autonomic nervous system (ANS)

amygdala sends signals to the hypothalamus, the ANS kicks in, our heart rate increases

blood pressure rises, breathing quickens, stress hormones (adrenaline, cortisol) are released

blood flows away from the heart and towards limbs, like arms and legs – prepares them for action

anxiety activates our stress response to a lesser degree (even when the threat isn’t right in front of us)

a stress response happening every day, when it isn’t required, can be hard on both our physical and mental health

chronic stress can increase inflammation, which is associated with many diseases

symptom of the fear system in overdrive is an attentional bias to threat

eg, shown a photo of a stick, an anxious person might see a snake

we are in a wave of an epidemic of anxiety and depression

attentional bias to threat

anxiety creates an attentional bias for threat

when shown faces with different emotions, an anxious person is more likely to look at the angry face first

for anxiety and PTSD, attentional bias to threat is extreme

amygdala systems in overdrive harm more than help

anxiety can become debilitating and chronic

seeing threat everywhere comes at the expense of safety and reward – attention is a limited resource

avoidance - flight behaviour

avoidance is a flight behaviour

anxious people: amygdala is more sensitive to threat than reward

this leads to elevated physiological response, sensory processing, memory, and rumination

perception of a threatening thing is followed by avoidance (disengagement of attention)

people avoid attending to the thing they find threatening

BUT: this creates less opportunity to learn that these situations are not actually threatening

eg, find social situations threatening so avoid parties, but not being exposed to more social situations decreases a person’s opportunity to realize the situation isn’t threatening at all

attention - threatening stimuli

attention is captured by threatening stimuli

creates feedback loops, which cause and maintain clinical levels of anxiety

perceive threatening things, then the amygdala ramps up other systems

person then becomes more likely to remember and ruminate on the threatening things

this tunes attentional system more towards ‘threatening things’ in the future

how to reduce feedback loops (anxiety)

taking a step back

taking a deep breath

down-regulating the parts of the brain that are driving the nervous system’s sensory response

approaching threatening stimuli in increments (eg, holding a spider)