AWS Certified Security - Specialty

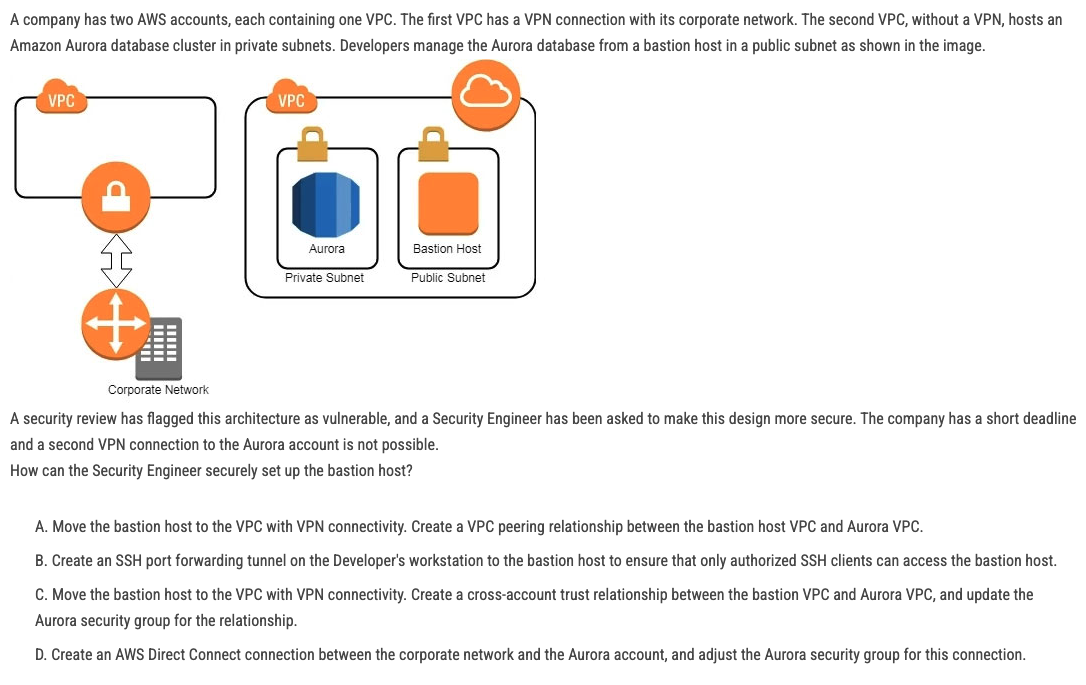

1/508

Earn XP

Description and Tags

Updated Feb 2025


509 Terms

The Security team believes that a former employee may have gained unauthorized access to AWS resources sometime in the past 3 months by using an identified access key.

What approach would enable the Security team to find out what the former employee may have done within AWS?

A. Use the AWS CloudTrail console to search for user activity.

B. Use the Amazon CloudWatch Logs console to filter CloudTrail data by user.

C. Use AWS Config to see what actions were taken by the user.

D. Use Amazon Athena to query CloudTrail logs stored in Amazon S3.

The correct approach to determine what actions a former employee may have taken within AWS using an identified access key is:

D. Use Amazon Athena to query CloudTrail logs stored in Amazon S3.

### Explanation:

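- AWS CloudTrail records API calls made in your AWS account, including the identity (and access key) that made each call, the service, and the action performed. When a trail is configured, CloudTrail delivers these logs to an Amazon S3 bucket, where they are kept for as long as the bucket retains them.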

- Amazon Athena is a serverless query service that allows you to analyze data directly from Amazon S3 using standard SQL. By querying the CloudTrail logs stored in S3, you can filter for the specific access key and identify all actions performed by the former employee during the specified time frame.
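Athena performs this filtering in SQL directly over the log files in S3. The same logic can be sketched locally in Python against CloudTrail's JSON log format, where each log file holds a `Records` array and each record carries `eventTime`, `eventSource`, `eventName`, and `userIdentity.accessKeyId`. This is a minimal sketch, assuming the log files have already been downloaded and decompressed; the function name `events_for_key` and the sample key IDs are hypothetical. In Athena, the equivalent is a `WHERE` clause on the `useridentity.accesskeyid` field, assuming a table created with the CloudTrail schema from the Athena documentation.

```python
import json
from datetime import datetime, timedelta, timezone


def parse_event_time(value: str) -> datetime:
    # CloudTrail timestamps look like "2024-11-02T14:05:31Z" (UTC).
    return datetime.strptime(value, "%Y-%m-%dT%H:%M:%SZ").replace(tzinfo=timezone.utc)


def events_for_key(log_documents, access_key_id, since):
    """Return (eventTime, eventSource, eventName) tuples for one access key.

    log_documents: iterable of JSON strings, each the body of one CloudTrail log file.
    since: timezone-aware datetime marking the start of the search window.
    """
    matches = []
    for document in log_documents:
        for record in json.loads(document).get("Records", []):
            identity = record.get("userIdentity", {})
            if identity.get("accessKeyId") != access_key_id:
                continue
            if parse_event_time(record["eventTime"]) >= since:
                matches.append((record["eventTime"], record["eventSource"], record["eventName"]))
    # ISO 8601 strings in one fixed format sort chronologically.
    return sorted(matches)


if __name__ == "__main__":
    sample = json.dumps({"Records": [
        {"eventTime": "2024-11-02T14:05:31Z", "eventSource": "s3.amazonaws.com",
         "eventName": "GetObject", "userIdentity": {"accessKeyId": "AKIAEXAMPLEKEY"}},
        {"eventTime": "2024-11-02T14:06:00Z", "eventSource": "iam.amazonaws.com",
         "eventName": "ListUsers", "userIdentity": {"accessKeyId": "AKIAOTHERKEY"}},
    ]})
    # Search the 90 days before the (hypothetical) investigation date.
    window_start = datetime(2024, 11, 30, tzinfo=timezone.utc) - timedelta(days=90)
    for event in events_for_key([sample], "AKIAEXAMPLEKEY", window_start):
        print(event)
```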

### Why not the other options?

- A. Use the AWS CloudTrail console to search for user activity: The CloudTrail console's Event history can filter by access key, but it only covers the last 90 days of management events, so a 3-month window sits at its limit, and it is not practical for analyzing large result sets. Athena is better suited for this task.

- B. Use the Amazon CloudWatch Logs console to filter CloudTrail data by user: CloudWatch Logs contains CloudTrail data only if the trail was explicitly configured to deliver events there, and even then, filtering for a specific access key over three months is less efficient than querying the logs stored in S3.

- C. Use AWS Config to see what actions were taken by the user: AWS Config tracks resource configuration changes, not API calls or user activity. It is not suitable for this use case.

By using Amazon Athena to query CloudTrail logs, the Security team can efficiently identify all actions performed by the former employee using the identified access key.

A company is storing data in Amazon S3 Glacier. The security engineer implemented a new vault lock policy for 10TB of data and called initiate-vault-lock operation 12 hours ago. The audit team identified a typo in the policy that is allowing unintended access to the vault.

What is the MOST cost-effective way to correct this?

A. Call the abort-vault-lock operation. Update the policy. Call the initiate-vault-lock operation again.

B. Copy the vault data to a new S3 bucket. Delete the vault. Create a new vault with the data.

C. Update the policy to keep the vault lock in place.

D. Update the policy. Call initiate-vault-lock operation again to apply the new policy.

The **MOST cost-effective way** to correct the typo in the vault lock policy is:

**A. Call the abort-vault-lock operation. Update the policy. Call the initiate-vault-lock operation again.**

### Explanation:

- **Vault Lock Policy**: Calling initiate-vault-lock attaches the policy to the vault and puts the lock in the **InProgress** state, returning a lock ID. The lock stays in this state for 24 hours; if complete-vault-lock is not called within that window, the lock expires and the policy is removed.

- **Abort Vault Lock**: Because only 12 hours have passed, the lock is still InProgress, so the abort-vault-lock operation can cancel it. The engineer can then correct the typo and call initiate-vault-lock again with the fixed policy.

- **Cost-Effectiveness**: This approach avoids the need to copy or delete data, which would incur additional storage and transfer costs.
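The lock lifecycle described above can be modeled as a small state machine. This is a toy Python model of the documented behavior, not the Amazon S3 Glacier API: the class `VaultLockModel` and its methods are hypothetical stand-ins for the `initiate-vault-lock`, `abort-vault-lock`, and `complete-vault-lock` operations, and the model ignores the 24-hour expiry.

```python
class VaultLockError(Exception):
    """Raised when an operation is not allowed in the current lock state."""


class VaultLockModel:
    """Toy model of a Glacier vault lock: Unlocked -> InProgress -> Locked."""

    def __init__(self):
        self.state = "Unlocked"
        self.policy = None

    def initiate(self, policy: str) -> None:
        # A new lock policy can only be initiated when no lock is in progress or complete.
        if self.state != "Unlocked":
            raise VaultLockError(f"cannot initiate while {self.state}")
        self.policy = policy
        self.state = "InProgress"

    def abort(self) -> None:
        # Aborting is only possible before the lock has been completed.
        if self.state != "InProgress":
            raise VaultLockError(f"cannot abort while {self.state}")
        self.policy = None
        self.state = "Unlocked"

    def complete(self) -> None:
        # Completing the lock makes the policy immutable.
        if self.state != "InProgress":
            raise VaultLockError(f"cannot complete while {self.state}")
        self.state = "Locked"


vault = VaultLockModel()
vault.initiate("policy-with-typo")
try:
    vault.initiate("fixed-policy")  # option D: re-initiating without aborting fails
except VaultLockError as err:
    print("Option D fails:", err)
vault.abort()                       # option A: abort, fix the policy, re-initiate
vault.initiate("fixed-policy")
vault.complete()
print(vault.state, vault.policy)
```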

### Why not the other options?

- **B. Copy the vault data to a new S3 bucket. Delete the vault. Create a new vault with the data**: This approach is **not cost-effective** because it requires retrieving 10TB from Glacier and copying it to a new bucket, which incurs retrieval, request, and storage costs. It is also time-consuming.

- **C. Update the policy to keep the vault lock in place**: While the lock is InProgress, the attached policy cannot be edited in place; it can only be aborted. After complete-vault-lock is called, the policy becomes immutable. Either way, updating the policy directly is not possible.

- **D. Update the policy. Call initiate-vault-lock operation again to apply the new policy**: Calling initiate-vault-lock while a lock is already InProgress fails; the current lock must first be aborted. This option skips that step.

By aborting the current vault lock, updating the policy, and re-initiating the lock, the security engineer can correct the typo in the most cost-effective and efficient manner.

A company wants to control access to its AWS resources by using identities and groups that are defined in its existing Microsoft Active Directory.

What must the company create in its AWS account to map permissions for AWS services to Active Directory user attributes?

A. AWS IAM groups

B. AWS IAM users

C. AWS IAM roles

D. AWS IAM access keys

The correct answer is:

C. AWS IAM roles

### Explanation:

To control access to AWS resources using identities and groups defined in an existing Microsoft Active Directory, the company must use AWS IAM roles together with identity federation, either SAML 2.0 federation (for example, through Active Directory Federation Services) or AWS Directory Service (AWS Managed Microsoft AD or AD Connector). Here's how it works:

1. Directory Integration: The company connects its existing Active Directory to AWS, either by registering its SAML identity provider in IAM or by setting up AWS Directory Service. This allows Active Directory users and groups to be recognized in the AWS environment.

2. IAM Roles: The company creates IAM roles and attaches permissions policies to them. Each role's trust policy trusts the identity provider, and the identity provider (or directory) maps Active Directory groups or user attributes to specific roles.

3. Federation: When Active Directory users sign in, they assume the IAM roles that correspond to their Active Directory group memberships or user attributes and receive temporary credentials scoped to the permissions defined in those roles.
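As an illustration of the IAM role step, a role that federated Active Directory users assume through SAML 2.0 typically has a trust policy like the one built below. This is a sketch assuming SAML federation (for example, through AD FS); the account ID `111122223333`, the provider name `ExampleADFS`, and the helper name are placeholders. The mapping from AD groups to this role is configured in the identity provider's claim rules, not in the policy itself.

```python
import json


def saml_role_trust_policy(account_id: str, provider_name: str) -> dict:
    """Trust policy letting users federated through a SAML identity provider assume the role."""
    provider_arn = f"arn:aws:iam::{account_id}:saml-provider/{provider_name}"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Principal": {"Federated": provider_arn},
                "Action": "sts:AssumeRoleWithSAML",
                # Only accept SAML assertions addressed to the AWS sign-in endpoint.
                "Condition": {"StringEquals": {"SAML:aud": "https://signin.aws.amazon.com/saml"}},
            }
        ],
    }


print(json.dumps(saml_role_trust_policy("111122223333", "ExampleADFS"), indent=2))
```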

### Why not the other options?

- A. AWS IAM groups: IAM groups are used to manage permissions for IAM users within AWS, not for integrating with external identity providers like Active Directory.

- B. AWS IAM users: IAM users are created and managed directly in AWS, not mapped to external identities like Active Directory users.

- D. AWS IAM access keys: Access keys are used for programmatic access to AWS resources and are not related to integrating with Active Directory.

By using IAM roles, the company can map permissions for AWS services to Active Directory user attributes, enabling seamless access control based on existing directory identities.

A company has contracted with a third party to audit several AWS accounts. To enable the audit, cross-account IAM roles have been created in each account targeted for audit. The Auditor is having trouble accessing some of the accounts.

Which of the following may be causing this problem? (Choose three.)

A. The external ID used by the Auditor is missing or incorrect.

B. The Auditor is using the incorrect password.

C. The Auditor has not been granted sts:AssumeRole for the role in the destination account.

D. The Amazon EC2 role used by the Auditor must be set to the destination account role.

E. The secret key used by the Auditor is missing or incorrect.

F. The role ARN used by the Auditor is missing or incorrect.

The correct answers are:

**A. The external ID used by the Auditor is missing or incorrect.**

**C. The Auditor has not been granted sts:AssumeRole for the role in the destination account.**

**F. The role ARN used by the Auditor is missing or incorrect.**

### Explanation:

When using cross-account IAM roles, the following issues could prevent the Auditor from accessing the target accounts:

1. **A. The external ID used by the Auditor is missing or incorrect**:

- An **external ID** is an optional value that the role owner can require in the role's trust policy (through the sts:ExternalId condition key) to prevent the "confused deputy" problem. If the trust policy requires an external ID and the Auditor omits it or supplies the wrong value, the AssumeRole call fails.

2. **C. The Auditor has not been granted sts:AssumeRole for the role in the destination account**:

- Cross-account access needs permission on both sides. The role's trust policy in the destination account must allow the Auditor's account or principal to assume the role, and the Auditor's own identity-based policy must allow sts:AssumeRole on that role's ARN. If either side is missing or misconfigured, the Auditor cannot assume the role.

3. **F. The role ARN used by the Auditor is missing or incorrect**:

- The **role ARN** (Amazon Resource Name) uniquely identifies the IAM role in the destination account. If the Auditor provides an incorrect or missing role ARN, the AssumeRole operation will fail.
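The three failure points can be seen together in a sketch of the destination role's trust policy and the parameters the Auditor sends. This is a simplified local check, not how AWS STS actually evaluates policies: the account IDs, the role name `AuditRole`, the external ID value, and the helper `would_assume_succeed` are hypothetical. The request keys `RoleArn`, `RoleSessionName`, and `ExternalId` match the parameters of the STS `AssumeRole` API.

```python
def audit_trust_policy(auditor_account: str, external_id: str) -> dict:
    """Trust policy in the audited account: trust the auditor's account, require an external ID."""
    return {
        "Version": "2012-10-17",
        "Statement": [{
            "Effect": "Allow",
            "Principal": {"AWS": f"arn:aws:iam::{auditor_account}:root"},
            "Action": "sts:AssumeRole",
            "Condition": {"StringEquals": {"sts:ExternalId": external_id}},
        }],
    }


def would_assume_succeed(request: dict, role_arn: str, trust_policy: dict,
                         caller_account: str, caller_allowed_arns: set) -> bool:
    """Hypothetical, simplified check covering options A, C, and F."""
    stmt = trust_policy["Statement"][0]
    if request.get("RoleArn") != role_arn:                                  # F: wrong or missing role ARN
        return False
    if role_arn not in caller_allowed_arns:                                 # C: caller lacks sts:AssumeRole
        return False
    if stmt["Principal"]["AWS"] != f"arn:aws:iam::{caller_account}:root":   # C: role does not trust caller
        return False
    expected_id = stmt["Condition"]["StringEquals"]["sts:ExternalId"]
    return request.get("ExternalId") == expected_id                         # A: wrong or missing external ID


ROLE_ARN = "arn:aws:iam::444455556666:role/AuditRole"
policy = audit_trust_policy("111122223333", "example-external-id")
request = {"RoleArn": ROLE_ARN, "RoleSessionName": "audit", "ExternalId": "example-external-id"}
print(would_assume_succeed(request, ROLE_ARN, policy, "111122223333", {ROLE_ARN}))
```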

### Why not the other options?

- **B. The Auditor is using the incorrect password**:

- Passwords are not part of the AssumeRole call; the Auditor obtains temporary security credentials from AWS STS instead. In addition, a wrong password would lock the Auditor out of every account, not just some of them.

- **D. The Amazon EC2 role used by the Auditor must be set to the destination account role**:

- This is irrelevant. The Auditor is not using an EC2 instance role; they are assuming a cross-account IAM role.

- **E. The secret key used by the Auditor is missing or incorrect**:

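- The Auditor does need valid credentials from their own account to call the AssumeRole API, but the same credentials are used for every target account. If the secret key were missing or incorrect, every AssumeRole call would fail, not just the calls to some of the accounts.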

By addressing the external ID, sts:AssumeRole permissions, and role ARN, the Auditor should be able to successfully assume the cross-account IAM role and access the target accounts.

Compliance requirements state that all communications between company on-premises hosts and EC2 instances be encrypted in transit. Hosts use custom proprietary protocols for their communication, and EC2 instances need to be fronted by a load balancer for increased availability.

Which of the following solutions will meet these requirements?

A. Offload SSL termination onto an SSL listener on a Classic Load Balancer, and use a TCP connection between the load balancer and the EC2 instances.

B. Route all traffic through a TCP listener on a Classic Load Balancer, and terminate the TLS connection on the EC2 instances.

C. Create an HTTPS listener using an Application Load Balancer, and route all of the communication through that load balancer.

D. Offload SSL termination onto an SSL listener using an Application Load Balancer, and re-spawn an SSL connection between the load balancer and the EC2 instances.

The correct answer is:

B. Route all traffic through a TCP listener on a Classic Load Balancer, and terminate the TLS connection on the EC2 instances.

### Explanation:

Two constraints drive the answer: the hosts use custom proprietary protocols, and the traffic must stay encrypted all the way from the on-premises hosts to the EC2 instances. Here's why option B is the best choice:

1. Custom Proprietary Protocols:

- An Application Load Balancer (ALB) supports only HTTP and HTTPS listeners, so it cannot carry a custom non-HTTP protocol. A Classic Load Balancer can forward raw TCP traffic regardless of the application protocol running on top of it.

2. TCP Pass-Through:

- With a TCP listener, the load balancer forwards the encrypted byte stream without decrypting it, while still spreading connections across healthy instances. This satisfies the availability requirement.

3. TLS Termination on the EC2 Instances:

- Each instance terminates TLS itself, so the traffic is never in plaintext between the on-premises hosts and the instances. This satisfies the encryption-in-transit requirement.

### Why not the other options?

- A. Offload SSL termination onto an SSL listener on a Classic Load Balancer, and use a TCP connection between the load balancer and the EC2 instances:

- The SSL listener decrypts the traffic at the load balancer, and the plain TCP connection to the EC2 instances then carries it unencrypted. That segment violates the requirement.

- C. Create an HTTPS listener using an Application Load Balancer, and route all of the communication through that load balancer:

- An HTTPS listener only understands HTTP traffic, so it cannot carry the custom proprietary protocols.

- D. Offload SSL termination onto an SSL listener using an Application Load Balancer, and re-spawn an SSL connection between the load balancer and the EC2 instances:

- ALBs do not offer SSL (TLS over TCP) listeners; they support only HTTP and HTTPS, so this design cannot work for a non-HTTP protocol.

By passing TCP traffic through a Classic Load Balancer and terminating TLS on the EC2 instances, the solution keeps the proprietary traffic encrypted end to end while still gaining the availability benefits of a load balancer.
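Whichever load balancer design is chosen, keeping the last hop encrypted means the EC2 instance itself must terminate TLS for the proprietary protocol. A minimal sketch using Python's standard `ssl` and `socket` modules follows; the certificate paths, the port, and the echo-style `handle_client` are hypothetical placeholders, and a real service would plug its own protocol into `handle_client`.

```python
import socket
import ssl


def make_server_context(certfile=None, keyfile=None) -> ssl.SSLContext:
    """Server-side TLS context that refuses anything older than TLS 1.2."""
    context = ssl.SSLContext(ssl.PROTOCOL_TLS_SERVER)
    context.minimum_version = ssl.TLSVersion.TLSv1_2
    if certfile:
        context.load_cert_chain(certfile, keyfile)
    return context


def handle_client(tls_conn: ssl.SSLSocket) -> None:
    # Placeholder for the custom proprietary protocol: echo one message back.
    data = tls_conn.recv(4096)
    tls_conn.sendall(data)


def serve(port: int, certfile: str, keyfile: str) -> None:
    context = make_server_context(certfile, keyfile)
    with socket.create_server(("", port)) as listener:
        while True:
            raw_conn, _addr = listener.accept()
            try:
                # The TLS handshake happens here, on the instance itself.
                with context.wrap_socket(raw_conn, server_side=True) as tls_conn:
                    handle_client(tls_conn)
            except ssl.SSLError:
                raw_conn.close()  # failed handshake: drop this client and keep serving
```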

An application is currently secured using network access control lists and security groups. Web servers are located in public subnets behind an Application Load Balancer (ALB); application servers are located in private subnets.

How can edge security be enhanced to safeguard the Amazon EC2 instances against attack? (Choose two.)

A. Configure the application's EC2 instances to use NAT gateways for all inbound traffic.

B. Move the web servers to private subnets without public IP addresses.

C. Configure AWS WAF to provide DDoS attack protection for the ALB.

D. Require all inbound network traffic to route through a bastion host in the private subnet.

E. Require all inbound and outbound network traffic to route through an AWS Direct Connect connection.

The correct answers are:

B. Move the web servers to private subnets without public IP addresses.

C. Configure AWS WAF to provide DDoS attack protection for the ALB.

### Explanation:

To enhance edge security for the application and safeguard the EC2 instances against attacks, the following measures are recommended:

1. B. Move the web servers to private subnets without public IP addresses:

- By moving the web servers to private subnets, they are no longer directly exposed to the internet. All inbound traffic must pass through the Application Load Balancer (ALB), which acts as a single point of entry and provides an additional layer of security. This reduces the attack surface and minimizes the risk of direct attacks on the web servers.

2. C. Configure AWS WAF to provide DDoS attack protection for the ALB:

- AWS WAF (Web Application Firewall) can be associated with the ALB to inspect HTTP/HTTPS requests and block common web exploits, such as SQL injection and cross-site scripting (XSS). Its rate-based rules can also block clients that send excessive requests, which helps mitigate application-layer (HTTP flood) DDoS attacks, while network- and transport-layer DDoS protection for the ALB comes from AWS Shield Standard, which is applied automatically. Together, these enhance the security of the application at the edge.
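A rate-based rule is the AWS WAF feature most directly aimed at request floods. The sketch below builds one rule in the shape used by the AWS WAFv2 API (`RateBasedStatement`, `Action`, `VisibilityConfig`); the rule name, priority, and limit of 2,000 requests per client IP are illustrative assumptions, and the rule would still need to be added to a web ACL associated with the ALB.

```python
import json


def rate_limit_rule(name: str, priority: int, limit: int) -> dict:
    """One WAFv2 rule that blocks client IPs exceeding `limit` requests per evaluation window."""
    return {
        "Name": name,
        "Priority": priority,
        "Statement": {
            "RateBasedStatement": {
                "Limit": limit,
                "AggregateKeyType": "IP",  # count requests per client IP address
            }
        },
        "Action": {"Block": {}},
        "VisibilityConfig": {
            "SampledRequestsEnabled": True,
            "CloudWatchMetricsEnabled": True,
            "MetricName": name,
        },
    }


print(json.dumps(rate_limit_rule("limit-per-ip", 0, 2000), indent=2))
```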

### Why not the other options?

- A. Configure the application's EC2 instances to use NAT gateways for all inbound traffic:

- NAT gateways are used for outbound internet access for instances in private subnets, not for inbound traffic. This option is not relevant for enhancing edge security.

- D. Require all inbound network traffic to route through a bastion host in the private subnet:

- A bastion host is used for secure access to instances in private subnets, typically for administrative purposes. It is not suitable for routing all inbound application traffic, as it would create a bottleneck and is not designed for this use case.

- E. Require all inbound and outbound network traffic to route through an AWS Direct Connect connection:

- AWS Direct Connect is used for establishing a dedicated network connection between on-premises infrastructure and AWS. It is not a security measure for protecting against attacks on web applications.

By moving the web servers to private subnets and configuring AWS WAF for the ALB, the application's edge security is significantly enhanced, protecting the EC2 instances from potential attacks.

A Security Administrator is restricting the capabilities of company root user accounts. The company uses AWS Organizations and has enabled it for all feature sets, including consolidated billing. The top-level account is used for billing and administrative purposes, not for operational AWS resource purposes.

How can the Administrator restrict usage of member root user accounts across the organization?

A. Disable the use of the root user account at the organizational root. Enable multi-factor authentication of the root user account for each organizational member account.

B. Configure IAM user policies to restrict root account capabilities for each Organizations member account.

C. Create an organizational unit (OU) in Organizations with a service control policy that controls usage of the root user. Add all operational accounts to the new OU.

D. Configure AWS CloudTrail to integrate with Amazon CloudWatch Logs and then create a metric filter for RootAccountUsage.

The correct answer is:

C. Create an organizational unit (OU) in Organizations with a service control policy that controls usage of the root user. Add all operational accounts to the new OU.

### Explanation:

To restrict the usage of root user accounts across the organization, the Security Administrator can use AWS Organizations and Service Control Policies (SCPs). Here's how this solution works:

1. Create an Organizational Unit (OU):

- The Administrator creates a new OU within AWS Organizations to group all operational accounts.

2. Create a Service Control Policy (SCP):

- The Administrator creates an SCP that restricts what the root user can do. For example, the SCP can deny actions whenever the calling principal is the account's root user, which is matched with the aws:PrincipalArn condition key.

3. Attach the SCP to the OU:

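- The SCP is attached to the OU, and every member account in the OU inherits it, so the root users of those accounts are restricted according to the SCP. SCPs never apply to the management account, which fits this scenario because the top-level account is used only for billing and administration.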

4. Add Operational Accounts to the OU:

- All operational accounts are moved into the new OU, ensuring that the SCP applies to them.
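The SCP from step 2 can be sketched as a policy that denies every action when the caller is an account's root user. This follows the common pattern of matching the root user with the `aws:PrincipalArn` condition key; the statement ID is a placeholder, the policy is built in Python only for illustration, and a real deployment might deny a narrower set of actions.

```python
import json

DENY_ROOT_SCP = {
    "Version": "2012-10-17",
    "Statement": [
        {
            "Sid": "DenyRootUser",
            "Effect": "Deny",
            "Action": "*",
            "Resource": "*",
            # Matches the root user of whichever member account makes the call.
            "Condition": {"StringLike": {"aws:PrincipalArn": "arn:aws:iam::*:root"}},
        }
    ],
}

print(json.dumps(DENY_ROOT_SCP, indent=2))
```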

### Why not the other options?

- A. Disable the use of the root user account at the organizational root. Enable multi-factor authentication of the root user account for each organizational member account:

- Disabling the root user account at the organizational root is not possible, as the root user is always available for emergency access. Enabling MFA is a good security practice but does not restrict the capabilities of the root user.

- B. Configure IAM user policies to restrict root account capabilities for each Organizations member account:

- IAM policies cannot be applied to the root user account. They only apply to IAM users, groups, and roles.

- D. Configure AWS CloudTrail to integrate with Amazon CloudWatch Logs and then create a metric filter for RootAccountUsage:

- While this approach can help monitor and detect root user activity, it does not restrict or control the usage of the root user account.

By using SCPs in AWS Organizations, the Administrator can effectively restrict the capabilities of root user accounts across the organization, ensuring better security and compliance.

A Systems Engineer has been tasked with configuring outbound mail through Simple Email Service (SES) and requires compliance with current TLS standards.

The mail application should be configured to connect to which of the following endpoints and corresponding ports?

A. email.us-east-1.amazonaws.com over port 8080

B. email-pop3.us-east-1.amazonaws.com over port 995

C. email-smtp.us-east-1.amazonaws.com over port 587

D. email-imap.us-east-1.amazonaws.com over port 993

The correct answer is:

C. email-smtp.us-east-1.amazonaws.com over port 587

### Explanation:

To configure outbound mail through Amazon Simple Email Service (SES) while ensuring compliance with current TLS standards, the mail application must connect to the SMTP endpoint provided by SES. Here's why option C is correct:

1. SMTP Endpoint:

- SES provides an SMTP endpoint for sending emails. The endpoint format is email-smtp.<region>.amazonaws.com, where <region> is the AWS region (e.g., us-east-1).

2. Port 587:

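- Port 587 is the standard port for SMTP submission with STARTTLS: the session starts unencrypted and is upgraded to TLS before credentials or message content are sent. This is the recommended port for secure email submission; SES also accepts STARTTLS on ports 25 and 2587, and TLS Wrapper connections on ports 465 and 2465.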

3. TLS Compliance:

- Using port 587 with STARTTLS ensures that the mail application complies with current TLS standards for secure communication.
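The connection described above can be sketched with Python's standard `smtplib`: connect to the SES SMTP endpoint on port 587, upgrade the session with STARTTLS, then authenticate with SES SMTP credentials. The sender, recipient, and credential values are hypothetical placeholders; SES SMTP credentials are separate from regular AWS access keys, and the sender address must be verified in SES.

```python
import smtplib
import ssl
from email.message import EmailMessage

SES_SMTP_HOST = "email-smtp.us-east-1.amazonaws.com"
SES_SMTP_PORT = 587  # SMTP submission; the session is upgraded with STARTTLS


def build_message(sender: str, recipient: str, subject: str, body: str) -> EmailMessage:
    msg = EmailMessage()
    msg["From"] = sender
    msg["To"] = recipient
    msg["Subject"] = subject
    msg.set_content(body)
    return msg


def send_via_ses(msg: EmailMessage, smtp_user: str, smtp_password: str) -> None:
    # Verify the server certificate against the system trust store and require TLS 1.2+.
    context = ssl.create_default_context()
    context.minimum_version = ssl.TLSVersion.TLSv1_2
    with smtplib.SMTP(SES_SMTP_HOST, SES_SMTP_PORT, timeout=30) as server:
        server.starttls(context=context)  # upgrade to TLS before sending credentials
        server.login(smtp_user, smtp_password)
        server.send_message(msg)
```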

### Why not the other options?

- A. email.us-east-1.amazonaws.com over port 8080:

- This is not a valid SES endpoint or port. SES does not use port 8080 for email transmission.

- B. email-pop3.us-east-1.amazonaws.com over port 995:

- POP3 (Post Office Protocol) is used for retrieving emails, not sending them. Port 995 is for POP3 over SSL/TLS, which is unrelated to outbound mail through SES.

- D. email-imap.us-east-1.amazonaws.com over port 993:

- IMAP (Internet Message Access Protocol) is used for retrieving emails, not sending them. Port 993 is for IMAP over SSL/TLS, which is unrelated to outbound mail through SES.

By configuring the mail application to connect to email-smtp.us-east-1.amazonaws.com over port 587, the Systems Engineer ensures secure and compliant outbound email transmission through Amazon SES.

A threat assessment has identified a risk whereby an internal employee could exfiltrate sensitive data from a production host running inside AWS (Account 1). The threat was documented as follows:

Threat description: A malicious actor could upload sensitive data from Server X by configuring credentials for an AWS account (Account 2) they control and uploading data to an Amazon S3 bucket within their control.

Server X has outbound internet access configured via a proxy server. Legitimate access to S3 is required so that the application can upload encrypted files to an S3 bucket. Server X is currently using an IAM instance role. The proxy server is not able to inspect any of the server communication due to TLS encryption.

Which of the following options will mitigate the threat? (Choose two.)

A. Bypass the proxy and use an S3 VPC endpoint with a policy that whitelists only certain S3 buckets within Account 1.

B. Block outbound access to public S3 endpoints on the proxy server.

C. Configure Network ACLs on Server X to deny access to S3 endpoints.

D. Modify the S3 bucket policy for the legitimate bucket to allow access only from the public IP addresses associated with the application server.

E. Remove the IAM instance role from the application server and save API access keys in a trusted and encrypted application config file.

The correct answers are:

A. Bypass the proxy and use an S3 VPC endpoint with a policy that whitelists only certain S3 buckets within Account 1.

B. Block outbound access to public S3 endpoints on the proxy server.

### Explanation:

To mitigate the threat of a malicious actor exfiltrating sensitive data from Server X to an S3 bucket in Account 2, the following measures should be taken:

1. A. Bypass the proxy and use an S3 VPC endpoint with a policy that whitelists only certain S3 buckets within Account 1:

- By using an S3 VPC endpoint, traffic between Server X and S3 stays on the AWS network instead of going through the proxy and the public internet. On its own, an endpoint can reach any bucket, so it is the endpoint policy that blocks exfiltration to external S3 buckets.

- A VPC endpoint policy can be configured to allow access only to specific S3 buckets within Account 1, preventing Server X from uploading data to unauthorized buckets.

2. B. Block outbound access to public S3 endpoints on the proxy server:

- Blocking outbound access to public S3 endpoints on the proxy closes the path the malicious actor would otherwise use to reach S3 buckets in Account 2. Combined with option A, the only remaining route to S3 is the VPC endpoint, whose policy allows only the approved buckets.
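A VPC endpoint policy of the kind described in option A might look like the one built below. The bucket name `example-account1-uploads` and the helper name are hypothetical; the policy allows only object uploads and reads on the listed buckets, so requests through the endpoint to any other bucket, including one in Account 2, are not allowed.

```python
import json


def s3_endpoint_policy(bucket_names):
    """Gateway endpoint policy that only permits object access to the listed buckets."""
    resources = [f"arn:aws:s3:::{name}/*" for name in bucket_names]
    return {
        "Version": "2012-10-17",
        "Statement": [{
            "Sid": "AllowOnlyAccount1Buckets",
            "Effect": "Allow",
            "Principal": "*",
            "Action": ["s3:PutObject", "s3:GetObject"],
            # Anything not listed here is implicitly denied through the endpoint.
            "Resource": resources,
        }],
    }


print(json.dumps(s3_endpoint_policy(["example-account1-uploads"]), indent=2))
```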

### Why not the other options?

- C. Configure Network ACLs on Server X to deny access to S3 endpoints:

- Network ACLs are applied to subnets, not individual servers, and they can only match IP address ranges. Denying the S3 address ranges would also block the application's legitimate uploads, and an ACL rule cannot distinguish a bucket in Account 1 from one in Account 2.

- D. Modify the S3 bucket policy for the legitimate bucket to allow access only from the public IP addresses associated with the application server:

- A bucket policy controls access only to the bucket it is attached to. Restricting the legitimate bucket does nothing to stop Server X from uploading to a different bucket in Account 2 with credentials the malicious actor controls.

- E. Remove the IAM instance role from the application server and save API access keys in a trusted and encrypted application config file:

- This approach increases security risks because storing static access keys in a config file is less secure than using IAM roles. It also does not prevent the malicious actor from using their own credentials to upload data to Account 2.

By using an S3 VPC endpoint with a restrictive policy and blocking outbound access to public S3 endpoints, the threat of data exfiltration is effectively mitigated while maintaining legitimate access to S3.

A company will store sensitive documents in three Amazon S3 buckets based on a data classification scheme of Sensitive, Confidential, and Restricted. The security solution must meet all of the following requirements:

✑ Each object must be encrypted using a unique key.

✑ Items that are stored in the Restricted bucket require two-factor authentication for decryption.

✑ AWS KMS must automatically rotate encryption keys annually.

Which of the following meets these requirements?

A. Create a Customer Master Key (CMK) for each data classification type, and enable the rotation of it annually. For the Restricted CMK, define the MFA policy within the key policy. Use S3 SSE-KMS to encrypt the objects.

B. Create a CMK grant for each data classification type with EnableKeyRotation and MultiFactorAuthPresent set to true. S3 can then use the grants to encrypt each object with a unique CMK.

C. Create a CMK for each data classification type, and within the CMK policy, enable rotation of it annually, and define the MFA policy. S3 can then create DEK grants to uniquely encrypt each object within the S3 bucket.

D. Create a CMK with unique imported key material for each data classification type, and rotate them annually. For the Restricted key material, define the MFA policy in the key policy. Use S3 SSE-KMS to encrypt the objects.

The correct answer is:

A. Create a Customer Master Key (CMK) for each data classification type, and enable the rotation of it annually. For the "Restricted" CMK, define the MFA policy within the key policy. Use S3 SSE-KMS to encrypt the objects.

### Explanation:

This solution meets all the requirements as follows:

1. Each object must be encrypted using a unique key:

- When using S3 Server-Side Encryption with AWS KMS (SSE-KMS), each object is encrypted with a unique data encryption key (DEK), which is itself encrypted by the Customer Master Key (CMK). This ensures that each object has a unique encryption key.

2. Items in the "Restricted" bucket require two-factor authentication for decryption:

- The MFA policy can be defined within the key policy of the CMK used for the "Restricted" bucket. This ensures that MFA is required for decryption operations involving this CMK.

3. AWS KMS must automatically rotate encryption keys annually:

- AWS KMS supports automatic key rotation for CMKs. By enabling this feature, the CMKs will be rotated annually, ensuring compliance with the requirement.
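The MFA requirement from point 2 is usually written as a key policy statement that denies `kms:Decrypt` when the caller did not authenticate with MFA. The sketch below builds such a statement for the "Restricted" key; the account ID, statement IDs, and helper name are hypothetical, and because S3 calls KMS on the requester's behalf with SSE-KMS, the policy should be tested to confirm it behaves as intended for downloads. Automatic rotation is enabled separately on the key (for example, with the KMS `EnableKeyRotation` operation), not inside the key policy.

```python
import json


def require_mfa_for_decrypt_statement() -> dict:
    """Key policy statement: deny decryption unless the caller authenticated with MFA."""
    return {
        "Sid": "DenyDecryptWithoutMFA",
        "Effect": "Deny",
        "Principal": {"AWS": "*"},
        "Action": "kms:Decrypt",
        "Resource": "*",  # in a key policy, "*" refers to this KMS key
        # BoolIfExists also denies requests where the MFA key is absent (e.g. long-term access keys).
        "Condition": {"BoolIfExists": {"aws:MultiFactorAuthPresent": "false"}},
    }


restricted_key_policy = {
    "Version": "2012-10-17",
    "Statement": [
        {   # Keep the account able to administer the key.
            "Sid": "EnableRootAccountPermissions",
            "Effect": "Allow",
            "Principal": {"AWS": "arn:aws:iam::111122223333:root"},
            "Action": "kms:*",
            "Resource": "*",
        },
        require_mfa_for_decrypt_statement(),
    ],
}

print(json.dumps(restricted_key_policy, indent=2))
```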

### Why not the other options?

- B. Create a CMK grant for each data classification type with EnableKeyRotation and MultiFactorAuthPresent set to true. S3 can then use the grants to encrypt each object with a unique CMK:

- CMK grants are used to delegate permissions to use a CMK, but they do not enable automatic key rotation or MFA policies. This option does not meet the requirements.

- C. Create a CMK for each data classification type, and within the CMK policy, enable rotation of it annually, and define the MFA policy. S3 can then create DEK grants to uniquely encrypt each object within the S3 bucket:

- While this option mentions enabling key rotation and defining an MFA policy, the use of "DEK grants" is incorrect. S3 SSE-KMS automatically handles the creation of unique data encryption keys (DEKs) for each object, so no additional grants are needed.

- D. Create a CMK with unique imported key material for each data classification type, and rotate them annually. For the "Restricted" key material, define the MFA policy in the key policy. Use S3 SSE-KMS to encrypt the objects:

- AWS KMS does not support automatic key rotation for keys with imported key material; that material must be rotated manually. This option therefore fails the requirement that AWS KMS rotate the keys automatically, and it adds the operational burden of generating and importing key material without any benefit over option A.

By creating a CMK for each data classification type, enabling automatic key rotation, defining an MFA policy for the "Restricted" CMK, and using S3 SSE-KMS, the solution meets all the requirements effectively.

An organization wants to deploy a three-tier web application whereby the application servers run on Amazon EC2 instances. These EC2 instances need access to credentials that they will use to authenticate their SQL connections to an Amazon RDS DB instance. Also, AWS Lambda functions must issue queries to the RDS database by using the same database credentials.

The credentials must be stored so that the EC2 instances and the Lambda functions can access them. No other access is allowed. The access logs must record when the credentials were accessed and by whom.

What should the Security Engineer do to meet these requirements?

A. Store the database credentials in AWS Key Management Service (AWS KMS). Create an IAM role with access to AWS KMS by using the EC2 and Lambda service principals in the role's trust policy. Add the role to an EC2 instance profile. Attach the instance profile to the EC2 instances. Set up Lambda to use the new role for execution.

B. Store the database credentials in AWS KMS. Create an IAM role with access to KMS by using the EC2 and Lambda service principals in the role's trust policy. Add the role to an EC2 instance profile. Attach the instance profile to the EC2 instances and the Lambda function.

C. Store the database credentials in AWS Secrets Manager. Create an IAM role with access to Secrets Manager by using the EC2 and Lambda service principals in the role's trust policy. Add the role to an EC2 instance profile. Attach the instance profile to the EC2 instances and the Lambda function.

D. Store the database credentials in AWS Secrets Manager. Create an IAM role with access to Secrets Manager by using the EC2 and Lambda service principals in the role's trust policy. Add the role to an EC2 instance profile. Attach the instance profile to the EC2 instances. Set up Lambda to use the new role for execution.

The correct answer is:

D. Store the database credentials in AWS Secrets Manager. Create an IAM role with access to Secrets Manager by using the EC2 and Lambda service principals in the role's trust policy. Add the role to an EC2 instance profile. Attach the instance profile to the EC2 instances. Set up Lambda to use the new role for execution.

### Explanation:

This solution meets all the requirements as follows:

1. Store the database credentials in AWS Secrets Manager:

- AWS Secrets Manager is designed to securely store and manage sensitive information, such as database credentials. It provides automatic rotation, access control, and auditing capabilities.

2. Create an IAM role with access to Secrets Manager:

- An IAM role is created with permissions to access the secrets stored in Secrets Manager. The role's trust policy allows both EC2 and Lambda service principals to assume the role.

3. Add the role to an EC2 instance profile and attach it to the EC2 instances:

- The IAM role is added to an EC2 instance profile, which is then attached to the EC2 instances. This allows the EC2 instances to assume the role and access the credentials.

4. Set up Lambda to use the new role for execution:

- The Lambda function is configured to use the same IAM role for execution, allowing it to access the credentials stored in Secrets Manager.

5. Access logs and auditing:

- AWS CloudTrail records Secrets Manager API calls such as GetSecretValue, including when the credentials were accessed and by which principal. This meets the requirement for access logging.
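The role in this answer needs two policy documents: a trust policy naming both service principals, and a permissions policy for the secret. The sketch below writes them as Python dictionaries that can be serialized with json.dumps. The account ID, Region, and secret name are hypothetical placeholders. If the secret is encrypted with a customer managed KMS key, the role would also need kms:Decrypt on that key.

```python
import json

# Hypothetical identifiers; replace with real values.
ACCOUNT_ID = "111122223333"
REGION = "us-east-1"
SECRET_NAME = "prod/app/db-credentials"

# Trust policy: lets EC2 (through the instance profile) and Lambda
# (as the function's execution role) both assume the same role.
trust_policy = {
    "Version": "2012-10-17",
    "Statement": [
        {
            "Effect": "Allow",
            "Principal": {"Service": ["ec2.amazonaws.com", "lambda.amazonaws.com"]},
            "Action": "sts:AssumeRole",
        }
    ],
}

# Permissions policy: read access to this one secret only.
# Secrets Manager appends a random 6-character suffix to secret ARNs,
# which the trailing "-??????" wildcard matches.
permissions_policy = {
    "Version": "2012-10-17",
    "Statement": [
        {
            "Effect": "Allow",
            "Action": "secretsmanager:GetSecretValue",
            "Resource": f"arn:aws:secretsmanager:{REGION}:{ACCOUNT_ID}:secret:{SECRET_NAME}-??????",
        }
    ],
}

if __name__ == "__main__":
    print(json.dumps(trust_policy, indent=2))
    print(json.dumps(permissions_policy, indent=2))
```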

### Why not the other options?

- A. Store the database credentials in AWS Key Management Service (AWS KMS). Create an IAM role with access to AWS KMS by using the EC2 and Lambda service principals in the role's trust policy. Add the role to an EC2 instance profile. Attach the instance profile to the EC2 instances. Set up Lambda to use the new role for execution:

- AWS KMS is used for encryption keys, not for storing credentials. This option does not meet the requirement for storing and managing database credentials.

- B. Store the database credentials in AWS KMS. Create an IAM role with access to KMS by using the EC2 and Lambda service principals in the role's trust policy. Add the role to an EC2 instance profile. Attach the instance profile to the EC2 instances and the Lambda function:

- Similar to option A, AWS KMS is not suitable for storing credentials. Additionally, attaching an instance profile to a Lambda function is not valid, as Lambda functions use execution roles.

- C. Store the database credentials in AWS Secrets Manager. Create an IAM role with access to Secrets Manager by using the EC2 and Lambda service principals in the role's trust policy. Add the role to an EC2 instance profile. Attach the instance profile to the EC2 instances and the Lambda function:

- This option incorrectly suggests attaching an instance profile to a Lambda function. Lambda functions use execution roles, not instance profiles.

By using AWS Secrets Manager to store the credentials and configuring IAM roles for both EC2 instances and Lambda functions, the Security Engineer meets all the requirements effectively.

A company has a customer master key (CMK) with imported key materials. Company policy requires that all encryption keys must be rotated every year.

What can be done to implement the above policy?

A. Enable automatic key rotation annually for the CMK.

B. Use AWS Command Line Interface to create an AWS Lambda function to rotate the existing CMK annually.

C. Import new key material to the existing CMK and manually rotate the CMK.

D. Create a new CMK, import new key material to it, and point the key alias to the new CMK.

The correct answer is:

D. Create a new CMK, import new key material to it, and point the key alias to the new CMK.

### Explanation:

For Customer Master Keys (CMKs) with imported key material, automatic key rotation is not supported. Therefore, to comply with the company's policy of rotating encryption keys annually, the following steps must be taken:

1. Create a new CMK:

- A new CMK is created in AWS KMS to replace the existing one.

2. Import new key material to the new CMK:

- New key material is imported into the new CMK. This ensures that the new key is used for encryption and decryption operations.

3. Point the key alias to the new CMK:

- The key alias (a friendly name for the CMK) is updated to point to the new CMK, so applications that reference the alias do not need to be modified: new encryption requests automatically use the new CMK. The old CMK must remain enabled, because data encrypted under it can still only be decrypted with it.
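The three steps above can be sketched with boto3-style KMS calls (create_key, get_parameters_for_import, import_key_material, update_alias). This is a minimal sketch under stated assumptions: `kms` is anything that behaves like a boto3 KMS client, and `wrap_key_material` is a hypothetical caller-supplied function that generates new key material and encrypts it with the wrapping public key that KMS returns. Generating and wrapping the material is outside this sketch.

```python
from typing import Any, Callable


def rotate_imported_key(
    kms: Any,
    alias_name: str,
    wrap_key_material: Callable[[bytes], bytes],
) -> str:
    """Create a new CMK with newly imported material and repoint the alias.

    Returns the new key ID. `wrap_key_material` is hypothetical: it must
    return new key material encrypted with the supplied public key.
    """
    # 1. Create a new CMK with no key material (origin EXTERNAL).
    key_id = kms.create_key(
        Origin="EXTERNAL",
        Description=f"Annual rotation target for {alias_name}",
    )["KeyMetadata"]["KeyId"]

    # 2. Get the wrapping public key and import token, then import new material.
    params = kms.get_parameters_for_import(
        KeyId=key_id,
        WrappingAlgorithm="RSAES_OAEP_SHA_256",
        WrappingKeySpec="RSA_2048",
    )
    kms.import_key_material(
        KeyId=key_id,
        ImportToken=params["ImportToken"],
        EncryptedKeyMaterial=wrap_key_material(params["PublicKey"]),
        ExpirationModel="KEY_MATERIAL_DOES_NOT_EXPIRE",
    )

    # 3. Repoint the alias; the old CMK stays enabled to decrypt old data.
    kms.update_alias(AliasName=alias_name, TargetKeyId=key_id)
    return key_id
```

For example, `rotate_imported_key(boto3.client("kms"), "alias/app-key", my_wrapper)` could be run once a year, where the alias name and `my_wrapper` are placeholders.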

### Why not the other options?

- A. Enable automatic key rotation annually for the CMK:

- Automatic key rotation is not supported for CMKs with imported key material. This option is invalid.

- B. Use AWS Command Line Interface to create an AWS Lambda function to rotate the existing CMK annually:

- The existing CMK cannot be rotated in place, because its imported key material cannot be replaced. A Lambda function could automate creating a new CMK and updating the alias (the procedure in option D), but that adds complexity the annual policy does not require. Manually creating a new CMK and updating the alias is simpler.

- C. Import new key material to the existing CMK and manually rotate the CMK:

- AWS KMS allows only the same key material to be reimported into an existing CMK (for example, after it expires or is deleted), so new key material cannot be imported into it. Reimporting identical material does not rotate anything, and manually rotating a CMK with imported material in practice means creating a new CMK, which is option D.

By creating a new CMK, importing new key material, and updating the alias, the company can effectively rotate the encryption key annually while complying with its policy.

A water utility company uses a number of Amazon EC2 instances to manage updates to a fleet of 2,000 Internet of Things (IoT) field devices that monitor water quality. These devices each have unique access credentials.

An operational safety policy requires that access to specific credentials is independently auditable.

What is the MOST cost-effective way to manage the storage of credentials?

A. Use AWS Systems Manager to store the credentials as Secure Strings Parameters. Secure by using an AWS KMS key.

B. Use AWS Key Management Service to store a master key, which is used to encrypt the credentials. The encrypted credentials are stored in an Amazon RDS instance.

C. Use AWS Secrets Manager to store the credentials.

D. Store the credentials in a JSON file on Amazon S3 with server-side encryption.

Answer:

A. Use AWS Systems Manager to store the credentials as Secure Strings Parameters. Secure by using an AWS KMS key.

### Explanation:

1. Cost-Effectiveness:

- AWS Systems Manager Parameter Store is significantly cheaper than AWS Secrets Manager for storing 2,000 credentials.

- Parameter Store cost: Standard-tier parameters carry no additional storage charge (up to 10,000 parameters per account and Region). Even Advanced-tier parameters, at ~$0.05 per parameter/month, would total only $100/month for 2,000 parameters.

- Secrets Manager cost: ~$0.40 per secret/month = $800/month for 2,000 secrets.

- S3 (Option D) could be cheap for storage, but per-credential auditing would require a separate object for each credential (plus CloudTrail data events for S3), which the option does not describe. A single JSON file would lack granular auditability.

2. Independent Auditability:

- AWS CloudTrail records Parameter Store API calls (e.g., GetParameter, PutParameter), including the parameter name, enabling per-credential access tracking.

- Secrets Manager also provides auditability, but its higher cost makes Parameter Store the better choice for this use case.

3. Security:

- Secure Strings in Parameter Store are encrypted using AWS KMS, ensuring credentials are protected at rest.

- No need for complex setups (e.g., RDS in Option B) or managing thousands of files (Option D).
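The cost comparison is simple arithmetic, sketched below. The per-item prices are assumptions based on AWS list pricing at the time of writing (Standard-tier parameters have no storage charge; Advanced-tier parameters and secrets are billed per item per month). They exclude KMS key and API request charges and may change.

```python
# Assumed monthly list prices in USD per stored item
# (excluding KMS key and API request charges).
PRICE_PER_ITEM = {
    "Parameter Store (Standard)": 0.00,
    "Parameter Store (Advanced)": 0.05,
    "Secrets Manager": 0.40,
}


def monthly_cost(service: str, count: int) -> float:
    """Monthly storage cost, rounded to cents, for `count` credentials."""
    return round(PRICE_PER_ITEM[service] * count, 2)


if __name__ == "__main__":
    for name in PRICE_PER_ITEM:
        print(f"{name}: ${monthly_cost(name, 2000):.2f}/month")
```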

### Why Other Options Fail:

- B (KMS + RDS): Overly complex and expensive for credential storage. RDS is unnecessary for static credentials.

- C (Secrets Manager): More expensive than Parameter Store for large-scale credential storage.

- D (S3 with Server-Side Encryption): A single JSON file lacks per-credential auditability. Managing 2,000 files is cumbersome and not explicitly described in the question.

### Key Takeaway:

AWS Systems Manager Parameter Store with Secure Strings is the most cost-effective and auditable solution for storing 2,000 unique credentials while meeting security and compliance requirements.

An organization is using Amazon CloudWatch Logs with agents deployed on its Linux Amazon EC2 instances. The agent configuration files have been checked and the application log files to be pushed are configured correctly. A review has identified that logging from specific instances is missing.

Which steps should be taken to troubleshoot the issue? (Choose two.)

A. Use an EC2 run command to confirm that the awslogs service is running on all instances.

B. Verify that the permissions used by the agent allow creation of log groups/streams and to put log events.

C. Check whether any application log entries were rejected because of invalid time stamps by reviewing /var/cwlogs/rejects.log.

D. Check that the trust relationship grants the service cwlogs.amazonaws.com permission to write objects to the Amazon S3 staging bucket.

E. Verify that the time zone on the application servers is in UTC.

The correct answers are:

A. Use an EC2 run command to confirm that the “awslogs” service is running on all instances.

B. Verify that the permissions used by the agent allow creation of log groups/streams and to put log events.

### Explanation:

To troubleshoot missing logging from specific EC2 instances, the following steps should be taken:

1. A. Use an EC2 run command to confirm that the “awslogs” service is running on all instances:

- The CloudWatch Logs agent (the awslogs service) must be running on an instance to push its logs to CloudWatch. Run Command (now part of AWS Systems Manager) lets you check the status of the awslogs service on all instances remotely and find the ones where it has stopped.

2. B. Verify that the permissions used by the agent allow creation of log groups/streams and to put log events:

- The IAM role or credentials used by the CloudWatch Logs agent must have the necessary permissions to create log groups/streams and to put log events. If the permissions are missing or incorrect, the agent will not be able to send logs to CloudWatch.
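Both checks can be sketched in Python. The first object is an IAM policy with the actions the legacy awslogs agent uses; the helper builds keyword arguments for a boto3 SSM `send_command` call using the AWS-RunShellScript document. The service name is an assumption: `awslogs` matches the older Amazon Linux packaging, and other distributions (for example Amazon Linux 2, where the unit is `awslogsd`) may differ.

```python
from typing import Dict, Iterable

# Actions the CloudWatch Logs (awslogs) agent needs to create log
# groups/streams and upload events.
AGENT_POLICY = {
    "Version": "2012-10-17",
    "Statement": [
        {
            "Effect": "Allow",
            "Action": [
                "logs:CreateLogGroup",
                "logs:CreateLogStream",
                "logs:PutLogEvents",
                "logs:DescribeLogStreams",
            ],
            "Resource": "arn:aws:logs:*:*:*",
        }
    ],
}


def awslogs_status_command(instance_ids: Iterable[str]) -> Dict:
    """Keyword arguments for an SSM send_command call that reports
    whether the awslogs service is running on each instance."""
    return {
        "InstanceIds": list(instance_ids),
        "DocumentName": "AWS-RunShellScript",
        "Parameters": {"commands": ["sudo service awslogs status"]},
    }
```

Usage: `boto3.client("ssm").send_command(**awslogs_status_command(["i-0123456789abcdef0"]))`, where the instance ID is a placeholder. An instance that reports the service as stopped, or whose role lacks these actions, is a likely source of the missing logs.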

### Why not the other options?

- C. Check whether any application log entries were rejected because of invalid time stamps by reviewing /var/cwlogs/rejects.log:

- The rejects.log file records individual entries the agent rejected (for example, because of unparseable time stamps). It does not explain why specific instances send no logs at all, which is the problem here.

- D. Check that the trust relationship grants the service “cwlogs.amazonaws.com” permission to write objects to the Amazon S3 staging bucket:

- This is irrelevant. CloudWatch Logs does not use an S3 staging bucket for log ingestion. The trust relationship should allow the EC2 service (ec2.amazonaws.com) to assume the IAM role that grants the CloudWatch Logs permissions.

- E. Verify that the time zone on the application servers is in UTC:

- While incorrect time zones can cause issues with log timestamps, they do not prevent logs from being sent to CloudWatch. This step is not directly related to the issue of missing logs.

By confirming that the awslogs service is running and verifying the agent's permissions, you can effectively troubleshoot the issue of missing logging from specific EC2 instances.

A Security Engineer must design a solution that enables the Incident Response team to audit for changes to a user's IAM permissions in the case of a security incident.

How can this be accomplished?

A. Use AWS Config to review the IAM policy assigned to users before and after the incident.

B. Run the GenerateCredentialReport via the AWS CLI, and copy the output to Amazon S3 daily for auditing purposes.

C. Copy AWS CloudFormation templates to S3, and audit for changes from the template.

D. Use Amazon EC2 Systems Manager to deploy images, and review AWS CloudTrail logs for changes.

The correct answer is:

A. Use AWS Config to review the IAM policy assigned to users before and after the incident.

### Explanation:

To audit changes to a user's IAM permissions, the Security Engineer can use AWS Config as follows:

1. AWS Config:

- AWS Config provides a detailed history of configuration changes for AWS resources, including IAM users, groups, roles, and policies. It tracks changes to IAM policies and permissions over time.

2. Configuration Snapshots:

- AWS Config captures configuration snapshots of IAM resources, allowing you to review the state of IAM policies before and after a security incident.

3. Compliance and Auditing:

- AWS Config rules can be used to evaluate whether IAM configurations comply with security policies. The configuration timeline feature allows you to see exactly when changes were made and what the changes were.
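Pulling the before-and-after view for one user can be sketched with the AWS Config `get_resource_config_history` API. Assumptions: `config` behaves like a boto3 Config client, AWS Config is recording global IAM resources in the Region you query, and `user_id` is the IAM user's unique ID, which Config uses as the resource ID for AWS::IAM::User.

```python
from typing import Any, Dict, Iterator


def iam_user_history(config: Any, user_id: str, earlier: Any, later: Any) -> Iterator[Dict]:
    """Yield AWS Config configuration items for one IAM user, oldest first,
    following nextToken until every page has been read."""
    kwargs = {
        "resourceType": "AWS::IAM::User",
        "resourceId": user_id,
        "earlierTime": earlier,
        "laterTime": later,
        "chronologicalOrder": "Forward",
    }
    while True:
        page = config.get_resource_config_history(**kwargs)
        yield from page.get("configurationItems", [])
        token = page.get("nextToken")
        if not token:
            break
        kwargs["nextToken"] = token
```

Each item's `configuration` field is a JSON string describing the user's policies and group memberships, so comparing the items captured before and after the incident shows what changed.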

### Why not the other options?

- B. Run the GenerateCredentialReport via the AWS CLI, and copy the output to Amazon S3 daily for auditing purposes:

- The Credential Report provides a snapshot of IAM user credentials (e.g., password and access key usage) but does not track changes to IAM policies or permissions over time. It is not suitable for auditing changes to IAM permissions.

- C. Copy AWS CloudFormation templates to S3, and audit for changes from the template:

- CloudFormation templates are used for infrastructure as code (IaC) and do not provide a history of IAM policy changes. This option is irrelevant for auditing IAM permissions.

- D. Use Amazon EC2 Systems Manager to deploy images, and review AWS CloudTrail logs for changes:

- While CloudTrail logs API calls, including those related to IAM, it does not provide a detailed history of configuration changes like AWS Config. Additionally, EC2 Systems Manager is unrelated to auditing IAM permissions.

By using AWS Config, the Security Engineer can effectively audit changes to IAM permissions and provide the incident response team with the necessary information to investigate security incidents.

A company has complex connectivity rules governing ingress, egress, and communications between Amazon EC2 instances. The rules are so complex that they cannot be implemented within the limits of the maximum number of security groups and network access control lists (network ACLs).

What mechanism will allow the company to implement all required network rules without incurring additional cost?

A. Configure AWS WAF rules to implement the required rules.

B. Use the operating system built-in, host-based firewall to implement the required rules.

C. Use a NAT gateway to control ingress and egress according to the requirements.

D. Launch an EC2-based firewall product from the AWS Marketplace, and implement the required rules in that product.

The correct answer is:

B. Use the operating system built-in, host-based firewall to implement the required rules.

### Explanation:

When the complexity of network rules exceeds the limits of AWS security groups and network ACLs, the most cost-effective solution is to use the operating system's built-in, host-based firewall. Here's why:

1. Host-Based Firewall:

- Operating systems like Linux (e.g., iptables or firewalld) and Windows (e.g., Windows Firewall) provide built-in firewalls that can be configured to implement complex network rules. These firewalls operate at the instance level and are not constrained by the limits of AWS security groups or network ACLs.

2. No Additional Cost:

- Using the host-based firewall does not incur additional costs, as it leverages existing operating system capabilities.

3. Granular Control:

- Host-based firewalls allow for highly granular control over ingress, egress, and inter-instance communication, making them suitable for complex rule sets.
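To illustrate how a host-based firewall can hold rules beyond security group and network ACL limits, the sketch below keeps an allow-list as data and renders it into iptables commands with default-deny policies. It is illustrative only: the `Rule` fields are hypothetical, and a real rule set would also need entries for DNS, the instance metadata service, and any management agents. The DROP policies are emitted last, after the ESTABLISHED rules, so existing sessions keep working when they take effect.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Rule:
    chain: str     # "INPUT" (ingress) or "OUTPUT" (egress)
    protocol: str  # "tcp" or "udp"
    cidr: str      # peer address range
    port: int      # destination port


def render_iptables(rules: List[Rule]) -> List[str]:
    """Render an allow-list as iptables commands ending in default-deny."""
    cmds = [
        # Allow replies to connections that were already permitted.
        "iptables -A INPUT -m conntrack --ctstate ESTABLISHED,RELATED -j ACCEPT",
        "iptables -A OUTPUT -m conntrack --ctstate ESTABLISHED,RELATED -j ACCEPT",
        # Keep loopback traffic working.
        "iptables -A INPUT -i lo -j ACCEPT",
        "iptables -A OUTPUT -o lo -j ACCEPT",
    ]
    for r in rules:
        # Ingress rules match the source; egress rules match the destination.
        peer = "-s" if r.chain == "INPUT" else "-d"
        cmds.append(
            f"iptables -A {r.chain} -p {r.protocol} {peer} {r.cidr} --dport {r.port} -j ACCEPT"
        )
    # Default-deny everything not explicitly allowed above.
    cmds += ["iptables -P INPUT DROP", "iptables -P OUTPUT DROP"]
    return cmds
```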

### Why not the other options?

- A. Configure AWS WAF rules to implement the required rules:

- AWS WAF (Web Application Firewall) is designed to protect web applications from common web exploits (e.g., SQL injection, XSS). It is not suitable for controlling general network traffic between EC2 instances.

- C. Use a NAT gateway to control ingress and egress according to the requirements:

- A NAT gateway is used to allow instances in a private subnet to access the internet. It does not provide the granularity or flexibility needed to implement complex network rules.

- D. Launch an EC2-based firewall product from the AWS Marketplace, and implement the required rules in that product:

- While this option can provide advanced firewall capabilities, it incurs additional costs for the EC2 instance and the Marketplace product. It is not a cost-effective solution.

By using the operating system's built-in firewall, the company can implement all required network rules without exceeding AWS limits or incurring additional costs.

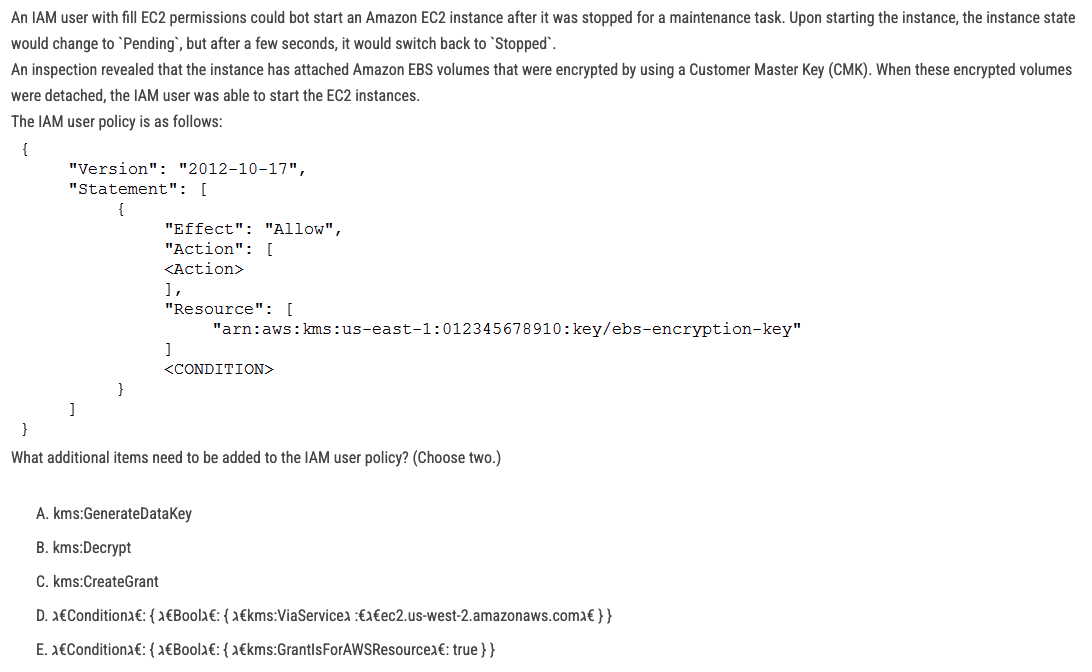

The correct answers are:

B. kms:Decrypt

C. kms:CreateGrant

### Explanation:

To start an EC2 instance with encrypted EBS volumes, the IAM user must have the necessary permissions to interact with the Customer Master Key (CMK) used to encrypt the volumes. The following permissions are required:

1. kms:Decrypt:

- This permission allows the IAM user to decrypt the encrypted EBS volumes attached to the EC2 instance. Without this permission, the instance cannot access the data on the encrypted volumes, causing it to fail to start.

2. kms:CreateGrant:

- This permission allows the IAM user to create a grant, which is necessary for the EC2 service to use the CMK to decrypt the EBS volumes. Grants are used to delegate permissions to AWS services like EC2 to use the CMK on behalf of the user.
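A minimal sketch of the IAM policy statement this answer adds, written as a Python dictionary. The key ARN is a hypothetical placeholder; it should be the ARN of the CMK that encrypts the volumes.

```python
import json

# Hypothetical ARN of the CMK that encrypts the instance's EBS volumes.
KEY_ARN = "arn:aws:kms:us-west-2:111122223333:key/1234abcd-12ab-34cd-56ef-1234567890ab"

# Lets the user decrypt with the CMK and create the grant that EC2
# uses to decrypt the volumes' data keys when the instance starts.
ebs_kms_policy = {
    "Version": "2012-10-17",
    "Statement": [
        {
            "Effect": "Allow",
            "Action": ["kms:Decrypt", "kms:CreateGrant"],
            "Resource": KEY_ARN,
        }
    ],
}

print(json.dumps(ebs_kms_policy, indent=2))
```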

### Why not the other options?

- A. kms:GenerateDataKey:

- This permission is used to generate data keys for encryption, not for decrypting existing encrypted volumes. It is not required for starting an EC2 instance with encrypted EBS volumes.

- D. "Condition": { "Bool": { "kms:ViaService": "ec2.us-west-2.amazonaws.com" } }:

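- While this condition can restrict use of the CMK to requests made through EC2 in a specific Region, it is not necessary for the basic functionality of starting an EC2 instance with encrypted EBS volumes. (kms:ViaService is also a string condition key, normally used with StringEquals rather than a Bool operator.)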

- E. "Condition": { "Bool": { "kms:GrantIsForAWSResource": true } }:

- This condition ensures that grants are only created for AWS resources, but it is not required for the IAM user to start the EC2 instance.

By adding kms:Decrypt and kms:CreateGrant to the IAM user policy, the user will have the necessary permissions to start the EC2 instance with encrypted EBS volumes.

A Security Administrator has a website hosted in Amazon S3. The Administrator has been given the following requirements:

✑ Users may access the website by using an Amazon CloudFront distribution.

✑ Users may not access the website directly by using an Amazon S3 URL.

Which configurations will support these requirements? (Choose two.)

A. Associate an origin access identity with the CloudFront distribution.

B. Implement a Principal: cloudfront.amazonaws.com condition in the S3 bucket policy.

C. Modify the S3 bucket permissions so that only the origin access identity can access the bucket contents.

D. Implement security groups so that the S3 bucket can be accessed only by using the intended CloudFront distribution.

E. Configure the S3 bucket policy so that it is accessible only through VPC endpoints, and place the CloudFront distribution into the specified VPC.

The correct answers are:

A. Associate an origin access identity with the CloudFront distribution.

C. Modify the S3 bucket permissions so that only the origin access identity can access the bucket contents.

### Explanation:

To ensure that users can access the website only through the CloudFront distribution and not directly via the S3 URL, the following configurations are required:

1. A. Associate an origin access identity with the CloudFront distribution:

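- An origin access identity (OAI) is a special CloudFront identity that CloudFront uses when it requests objects from the S3 bucket. Associating an OAI with the distribution gives CloudFront an identity that the bucket policy can grant access to. (AWS now recommends the newer origin access control (OAC) for new distributions, but OAI is the mechanism this question tests.)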

2. C. Modify the S3 bucket permissions so that only the origin access identity can access the bucket contents:

- The S3 bucket policy should be updated to grant access only to the OAI. This ensures that only CloudFront (using the OAI) can access the bucket, and users cannot access the content directly via the S3 URL.
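The bucket policy for option C can be sketched as a Python dictionary. The bucket name and OAI ID are hypothetical; the principal uses the `arn:aws:iam::cloudfront:user/CloudFront Origin Access Identity <ID>` form. Any public-read ACLs or other public grants on the bucket must also be removed (for example with S3 Block Public Access), or direct S3 URLs would still work.

```python
import json

BUCKET = "example-website-bucket"  # hypothetical bucket name
OAI_ID = "E2EXAMPLE1OAI"           # hypothetical OAI ID

# Only the distribution's OAI may read objects; no other principal is granted access.
bucket_policy = {
    "Version": "2012-10-17",
    "Statement": [
        {
            "Sid": "AllowCloudFrontOAIReadOnly",
            "Effect": "Allow",
            "Principal": {
                "AWS": f"arn:aws:iam::cloudfront:user/CloudFront Origin Access Identity {OAI_ID}"
            },
            "Action": "s3:GetObject",
            "Resource": f"arn:aws:s3:::{BUCKET}/*",
        }
    ],
}

print(json.dumps(bucket_policy, indent=2))
```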

### Why not the other options?

- B. Implement a "Principal": "cloudfront.amazonaws.com" condition in the S3 bucket policy:

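- With an OAI, the bucket policy grants access to the OAI itself (its ARN or canonical user ID), not to a cloudfront.amazonaws.com service principal. The newer origin access control does use the cloudfront.amazonaws.com principal, but only together with an AWS:SourceArn condition that names the distribution; the principal alone would not tie access to the intended distribution.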

- D. Implement security groups so that the S3 bucket can be accessed only by using the intended CloudFront distribution:

- Security groups apply to the network interfaces of resources in a VPC, such as EC2 instances. S3 buckets do not support security groups, so this option is invalid.

- E. Configure the S3 bucket policy so that it is accessible only through VPC endpoints, and place the CloudFront distribution into the specified VPC:

- CloudFront is a global service and cannot be placed into a VPC. Additionally, restricting S3 access to VPC endpoints would prevent CloudFront from accessing the bucket, as CloudFront operates outside the VPC.

By associating an OAI with the CloudFront distribution and restricting S3 bucket access to the OAI, the Security Administrator can ensure that users can access the website only through CloudFront and not directly via the S3 URL.

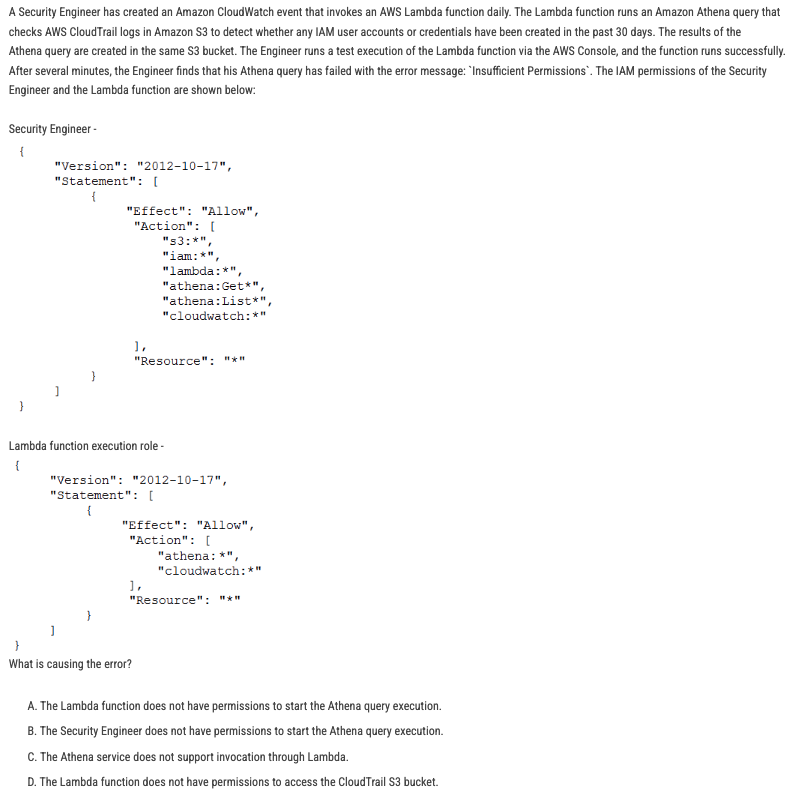

The correct answer is:

D. The Lambda function does not have permissions to access the CloudTrail S3 bucket.

### Explanation:

The error message "Insufficient Permissions" indicates that the Lambda function lacks the necessary permissions to perform its tasks. Here's why option D is correct:

1. Access to CloudTrail Logs in S3:

- The Lambda function runs an Athena query to check CloudTrail logs stored in an S3 bucket. To access these logs, the Lambda function must have permissions to read from the S3 bucket where the CloudTrail logs are stored.

2. Missing S3 Permissions in Lambda Execution Role:

- The Lambda execution role provided in the question does not include any S3 permissions (s3:GetObject, s3:ListBucket, etc.). Athena reads the CloudTrail log objects and writes query results to S3 with the caller's permissions, so without them the query fails with the "Insufficient Permissions" error.
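A sketch of the S3 statements the execution role could gain, assuming hypothetical bucket names for the CloudTrail logs and for the Athena query-results location. Bucket-level actions (ListBucket, GetBucketLocation) target the bucket ARN, while object-level actions target `bucket/*`. If the trail's logs are encrypted with a KMS key, kms:Decrypt on that key would also be required.

```python
CLOUDTRAIL_BUCKET = "example-cloudtrail-logs"    # hypothetical
RESULTS_BUCKET = "example-athena-query-results"  # hypothetical

s3_statements = [
    {   # Bucket-level access for both buckets.
        "Effect": "Allow",
        "Action": ["s3:GetBucketLocation", "s3:ListBucket"],
        "Resource": [
            f"arn:aws:s3:::{CLOUDTRAIL_BUCKET}",
            f"arn:aws:s3:::{RESULTS_BUCKET}",
        ],
    },
    {   # Read the CloudTrail log objects that Athena scans.
        "Effect": "Allow",
        "Action": "s3:GetObject",
        "Resource": f"arn:aws:s3:::{CLOUDTRAIL_BUCKET}/*",
    },
    {   # Write (and read back) query results at the Athena output location.
        "Effect": "Allow",
        "Action": ["s3:GetObject", "s3:PutObject"],
        "Resource": f"arn:aws:s3:::{RESULTS_BUCKET}/*",
    },
]
```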

### Why not the other options?

- A. The Lambda function does not have permissions to start the Athena query execution:

- The Lambda execution role includes athena:* permissions, which allow it to start and manage Athena queries. This is not the cause of the error.

- B. The Security Engineer does not have permissions to start the Athena query execution:

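- The query is started by the Lambda function under its execution role, not by the Security Engineer. The engineer's athena:Get* and athena:List* permissions would not cover athena:StartQueryExecution anyway, but they are not evaluated when Lambda runs the query, so they are not the cause of the error.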

- C. The Athena service does not support invocation through Lambda:

- Athena can be invoked through Lambda. This option is incorrect.

By adding the necessary S3 permissions (e.g., s3:GetObject and s3:ListBucket) to the Lambda execution role, the Lambda function will be able to access the CloudTrail logs in the S3 bucket, resolving the "Insufficient Permissions" error.

A company requires that IP packet data be inspected for invalid or malicious content.

Which of the following approaches achieve this requirement? (Choose two.)

A. Configure a proxy solution on Amazon EC2 and route all outbound VPC traffic through it. Perform inspection within proxy software on the EC2 instance.

B. Configure the host-based agent on each EC2 instance within the VPC. Perform inspection within the host-based agent.

C. Enable VPC Flow Logs for all subnets in the VPC. Perform inspection from the Flow Log data within Amazon CloudWatch Logs.

D. Configure Elastic Load Balancing (ELB) access logs. Perform inspection from the log data within the ELB access log files.

E. Configure the CloudWatch Logs agent on each EC2 instance within the VPC. Perform inspection from the log data within CloudWatch Logs.

The correct answers are:

A. Configure a proxy solution on Amazon EC2 and route all outbound VPC traffic through it. Perform inspection within proxy software on the EC2 instance.

B. Configure the host-based agent on each EC2 instance within the VPC. Perform inspection within the host-based agent.

### Explanation:

To inspect IP packet data for invalid or malicious content, the following approaches are effective:

1. A. Configure a proxy solution on Amazon EC2 and route all outbound VPC traffic through it. Perform inspection within proxy software on the EC2 instance:

- A proxy solution can inspect all outbound traffic from the VPC. By routing traffic through the proxy, you can analyze and filter packets for malicious content before they leave the VPC.

2. B. Configure the host-based agent on each EC2 instance within the VPC. Perform inspection within the host-based agent:

- A host-based agent can inspect traffic at the instance level. This allows for granular inspection of IP packets directly on each EC2 instance, ensuring that malicious content is detected and blocked before it enters or leaves the instance.

### Why not the other options?

- C. Enable VPC Flow Logs for all subnets in the VPC. Perform inspection from the Flow Log data within Amazon CloudWatch Logs:

- VPC Flow Logs provide metadata about IP traffic (e.g., source/destination IP, ports, and protocol) but do not capture the actual packet content. Therefore, they cannot be used to inspect for invalid or malicious content within the packets.

- D. Configure Elastic Load Balancing (ELB) access logs. Perform inspection from the log data within the ELB access log files:

- ELB access logs provide information about HTTP/HTTPS requests but do not capture IP packet data. They are not suitable for inspecting IP packets for malicious content.

- E. Configure the CloudWatch Logs agent on each EC2 instance within the VPC. Perform inspection from the log data within CloudWatch Logs:

- The CloudWatch Logs agent collects and sends logs to CloudWatch but does not inspect IP packet data. This option is not suitable for packet-level inspection.
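A short parsing sketch makes the limitation of option C concrete: a default-format (version 2) VPC Flow Logs record has 14 space-separated fields describing the connection, and none of them carries packet contents. The sample record is illustrative.

```python
# Field order of the default (version 2) VPC Flow Logs format.
DEFAULT_FIELDS = [
    "version", "account-id", "interface-id", "srcaddr", "dstaddr",
    "srcport", "dstport", "protocol", "packets", "bytes",
    "start", "end", "action", "log-status",
]


def parse_flow_log(line: str) -> dict:
    """Split one default-format flow log record into named fields."""
    values = line.split()
    if len(values) != len(DEFAULT_FIELDS):
        raise ValueError(f"expected {len(DEFAULT_FIELDS)} fields, got {len(values)}")
    return dict(zip(DEFAULT_FIELDS, values))


# Illustrative record: an accepted TCP (protocol 6) connection to port 22.
sample = ("2 123456789010 eni-1235b8ca123456789 172.31.16.139 172.31.16.21 "
          "20641 22 6 20 4249 1418530010 1418530070 ACCEPT OK")
record = parse_flow_log(sample)
```

Only addresses, ports, counters, and the accept/reject decision are available; there is no field from which malicious payload content could be inspected.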

By using a proxy solution or host-based agents, the company can effectively inspect IP packet data for invalid or malicious content, ensuring the security of its network traffic.

An organization has a system in AWS that allows a large number of remote workers to submit data files. File sizes vary from a few kilobytes to several megabytes.

A recent audit highlighted a concern that data files are not encrypted while in transit over untrusted networks.

Which solution would remediate the audit finding while minimizing the effort required?

A. Upload an SSL certificate to IAM, and configure Amazon CloudFront with the passphrase for the private key.

B. Call KMS.Encrypt() in the client, passing in the data file contents, and call KMS.Decrypt() server-side.

C. Use AWS Certificate Manager to provision a certificate on an Elastic Load Balancing in front of the web service's servers.

D. Create a new VPC with an Amazon VPC VPN endpoint, and update the web service's DNS record.

The correct answer is:

C. Use AWS Certificate Manager to provision a certificate on an Elastic Load Balancing in front of the web service's servers.

### Explanation:

To remediate the audit finding and ensure that data files are encrypted while in transit over untrusted networks, the following solution is the most effective and requires minimal effort:

1. AWS Certificate Manager (ACM):

- ACM allows you to provision, manage, and deploy SSL/TLS certificates for use with AWS services. These certificates can be used to encrypt data in transit.

2. Elastic Load Balancing (ELB):

- By provisioning an SSL/TLS certificate from ACM and attaching it to an ELB (Application Load Balancer or Network Load Balancer), you can ensure that all data transmitted between remote workers and the web service is encrypted using HTTPS.

3. Minimal Effort:

- This solution requires minimal effort because ACM simplifies the process of obtaining and managing certificates, and ELB automatically handles the encryption and decryption of traffic.
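With the certificate issued by ACM, enabling HTTPS comes down to one listener on the load balancer. A minimal sketch, assuming an Application Load Balancer and placeholder ARNs, that builds keyword arguments for a boto3 `elbv2` client's `create_listener` call:

```python
def https_listener_args(lb_arn: str, cert_arn: str, target_group_arn: str) -> dict:
    """Keyword arguments for elbv2 create_listener: TLS terminates at the
    load balancer with the ACM certificate, then traffic is forwarded."""
    return {
        "LoadBalancerArn": lb_arn,
        "Protocol": "HTTPS",
        "Port": 443,
        "Certificates": [{"CertificateArn": cert_arn}],
        # Predefined ELB security policy allowing TLS 1.3 and TLS 1.2.
        "SslPolicy": "ELBSecurityPolicy-TLS13-1-2-2021-06",
        "DefaultActions": [{"Type": "forward", "TargetGroupArn": target_group_arn}],
    }
```

Usage: `boto3.client("elbv2").create_listener(**https_listener_args(lb_arn, cert_arn, tg_arn))`. An additional HTTP listener on port 80 with a redirect action can send any plain-HTTP clients to HTTPS.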

### Why not the other options?

- A. Upload an SSL certificate to IAM, and configure Amazon CloudFront with the passphrase for the private key:

- CloudFront does not accept a passphrase for a private key: certificates uploaded to IAM must have an unencrypted private key. CloudFront is also typically used for content delivery rather than for receiving file uploads from remote workers, and managing certificates through IAM is more work than using ACM.

- B. Call KMS.Encrypt() in the client, passing in the data file contents, and call KMS.Decrypt() server-side:

- While this approach would encrypt the data, it is overly complex and requires significant changes to the client and server-side code. It is not a practical solution for encrypting data in transit.

- D. Create a new VPC with an Amazon VPC VPN endpoint, and update the web service's DNS record:

- A VPN would encrypt the traffic, but it is not practical for a large number of remote workers: each worker would need a VPN client and an active connection before every upload, which is far more effort than enabling HTTPS on the existing service.

By using AWS Certificate Manager to provision a certificate on an Elastic Load Balancer, the organization can ensure that data files are encrypted in transit with minimal effort, addressing the audit finding effectively.

Which option for the use of the AWS Key Management Service (KMS) supports key management best practices that focus on minimizing the potential scope of data exposed by a possible future key compromise?

A. Use KMS automatic key rotation to replace the master key, and use this new master key for future encryption operations without re-encrypting previously encrypted data.

B. Generate a new Customer Master Key (CMK), re-encrypt all existing data with the new CMK, and use it for all future encryption operations.

C. Change the CMK alias every 90 days, and update key-calling applications with the new key alias.

D. Change the CMK permissions to ensure that individuals who can provision keys are not the same individuals who can use the keys.

The correct answer is:

B. Generate a new Customer Master Key (CMK), re-encrypt all existing data with the new CMK, and use it for all future encryption operations.

### Explanation:

To minimize the potential scope of data exposed by a possible future key compromise, the following key management best practice should be followed:

1. Generate a New CMK and Re-encrypt Data:

- By generating a new Customer Master Key (CMK) and re-encrypting all existing data with it, the old CMK no longer protects any data, so a later compromise of the old key exposes nothing. This limits the impact of a compromise to data protected by the key actually in use.

2. Use the New CMK for Future Encryption Operations:

- After re-encrypting the data, the new CMK should be used for all future encryption operations. This ensures that only the new key is used moving forward, further minimizing the risk of exposure.
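For ciphertexts produced by AWS KMS itself (for example, encrypted data keys), the KMS ReEncrypt API moves them to a new CMK without exposing plaintext outside KMS. A minimal sketch, assuming `kms` behaves like a boto3 KMS client. Bulk data encrypted locally with data keys would instead have to be decrypted and re-encrypted with new data keys, which this sketch does not cover.

```python
from typing import Any, List


def reencrypt_all(kms: Any, blobs: List[bytes], new_key_id: str) -> List[bytes]:
    """Re-encrypt each KMS ciphertext under `new_key_id` via ReEncrypt.

    The plaintext never leaves KMS. For symmetric CMKs, KMS reads the
    source key from the ciphertext's metadata, so no SourceKeyId is passed.
    """
    return [
        kms.re_encrypt(CiphertextBlob=blob, DestinationKeyId=new_key_id)["CiphertextBlob"]
        for blob in blobs
    ]
```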

### Why not the other options?

- A. Use KMS automatic key rotation to replace the master key, and use this new master key for future encryption operations without re-encrypting previously encrypted data:

- While automatic key rotation is a good practice, it does not re-encrypt previously encrypted data. This means that data encrypted with the old key remains vulnerable if the old key is compromised.

- C. Change the CMK alias every 90 days, and update key-calling applications with the new key alias:

- Changing the CMK alias does not provide any security benefit, as it does not change the underlying key or re-encrypt data. It is merely a naming convention and does not minimize the scope of data exposed by a key compromise.

- D. Change the CMK permissions to ensure that individuals who can provision keys are not the same individuals who can use the keys:

- While this is a good practice for separation of duties, it does not address the scope of data exposed by a key compromise. It focuses on access control rather than minimizing the impact of a compromised key.

By generating a new CMK and re-encrypting all existing data, the organization can effectively minimize the potential scope of data exposed by a possible future key compromise, adhering to key management best practices.

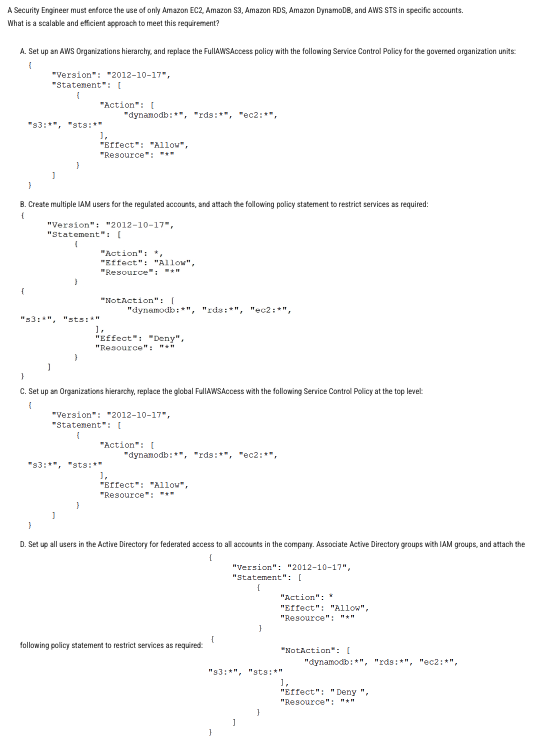

The correct answer is:

C. Set up an Organizations hierarchy, replace the global FullAWSAccess with the following Service Control Policy at the top level:

```json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Action": [
        "dynamodb:*",
        "rds:*",
        "ec2:*",
        "s3:*",
        "sts:*"
      ],
      "Effect": "Allow",
      "Resource": "*"
    }
  ]
}
```

### Explanation:

To enforce the use of only Amazon EC2, Amazon S3, Amazon RDS, Amazon DynamoDB, and AWS STS in specific accounts, the most scalable and efficient approach is to use AWS Organizations with Service Control Policies (SCPs). Here's why:

1. AWS Organizations:

- AWS Organizations allows you to centrally manage and govern multiple AWS accounts. By setting up an Organizations hierarchy, you can apply policies across all accounts in the organization.

2. Service Control Policies (SCPs):

- SCPs set the maximum permissions available in member accounts. They restrict services and actions but never grant permissions on their own, so IAM policies must still allow the actions. By replacing the default FullAWSAccess policy with a custom SCP at the top level, you can limit all governed accounts to the specified services (EC2, S3, RDS, DynamoDB, and STS).

3. Scalability and Efficiency:

- Applying an SCP at the top level (the root) of the Organizations hierarchy ensures that the policy is automatically inherited by all member accounts; SCPs do not affect the management account. This approach is scalable and efficient, as it eliminates the need to manually configure policies for each account or user.
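To illustrate how an allow-list SCP bounds what member accounts can do, here is a simplified evaluator in Python. It is a sketch, not the AWS policy engine: it handles only `Allow` statements with `Action` wildcards and ignores `Resource`, `Condition`, and `Deny`. The function names are hypothetical. It also models the point above that an action succeeds only if both the SCP and the principal's IAM policy allow it.

```python
import fnmatch
import json

# The SCP from option C, as it would be attached at the root.
SCP_JSON = """
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Action": ["dynamodb:*", "rds:*", "ec2:*", "s3:*", "sts:*"],
      "Effect": "Allow",
      "Resource": "*"
    }
  ]
}
"""


def scp_allows(policy: dict, action: str) -> bool:
    """Return True if any Allow statement's Action pattern matches action.

    IAM action names are case-insensitive, so both sides are lower-cased.
    """
    for statement in policy["Statement"]:
        if statement.get("Effect") != "Allow":
            continue
        patterns = statement["Action"]
        if isinstance(patterns, str):
            patterns = [patterns]
        for pattern in patterns:
            if fnmatch.fnmatchcase(action.lower(), pattern.lower()):
                return True
    return False  # implicit deny: the action is not on the allow list


def effective_allow(policy: dict, action: str, iam_allows: bool) -> bool:
    """An action succeeds only if both the SCP and the IAM policy allow it."""
    return scp_allows(policy, action) and iam_allows


scp = json.loads(SCP_JSON)
```

With this model, `scp_allows(scp, "s3:GetObject")` is true, `scp_allows(scp, "lambda:InvokeFunction")` is false, and `effective_allow(scp, "ec2:RunInstances", iam_allows=False)` is false because the SCP alone grants nothing.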

### Why not the other options?

- A. Set up an AWS Organizations hierarchy, and replace the FullAWSAccess policy with the following Service Control Policy for the governed organization units:

- While this option uses SCPs, it applies the policy to specific organizational units (OUs) rather than the entire organization. This approach is less efficient and scalable compared to applying the policy at the top level.

- B. Create multiple IAM users for the regulated accounts, and attach the following policy statement to restrict services as required:

- This approach is not scalable, as it requires manually creating and managing IAM users and policies for each account. It also does not leverage the centralized management capabilities of AWS Organizations.

- D. Set up all users in the Active Directory for federated access to all accounts in the company. Associate Active Directory groups with IAM groups, and attach the following policy statement to restrict services as required:

- While federated access and IAM groups are useful for managing user permissions, this approach does not provide the centralized control and scalability offered by AWS Organizations and SCPs. It also requires significant manual configuration.

By using AWS Organizations and applying a top-level SCP, the Security Engineer can efficiently and scalably enforce the use of only the specified AWS services across the governed accounts.

A company's database developer has just migrated an Amazon RDS database credential to be stored and managed by AWS Secrets Manager. The developer has also enabled rotation of the credential within the Secrets Manager console and set the rotation to change every 30 days.

After a short period of time, a number of existing applications have failed with authentication errors.

What is the MOST likely cause of the authentication errors?

A. Migrating the credential to RDS requires that all access come through requests to the Secrets Manager.

B. Enabling rotation in Secrets Manager causes the secret to rotate immediately, and the applications are using the earlier credential.

C. The Secrets Manager IAM policy does not allow access to the RDS database.

D. The Secrets Manager IAM policy does not allow access for the applications.

The correct answer is:

B. Enabling rotation in Secrets Manager causes the secret to rotate immediately, and the applications are using the earlier credential.

### Explanation:

When you enable rotation for a secret in AWS Secrets Manager, the secret is rotated immediately: rotation sets a new password on the database and stores it in the secret. Applications that still use the old credential, for example one hard-coded or cached in their configuration, then fail to authenticate with the database, resulting in authentication errors.

### Why not the other options?

- A. Migrating the credential to RDS requires that all access come through requests to the Secrets Manager:

- This is incorrect because migrating the credential to Secrets Manager does not inherently require all access to come through Secrets Manager. Applications can still use the credentials directly if they are configured to do so.

- C. The Secrets Manager IAM policy does not allow access to the RDS database:

- This is incorrect because the IAM policy for Secrets Manager governs access to the secret itself, not the RDS database. The issue described is related to credential rotation, not IAM permissions.

- D. The Secrets Manager IAM policy does not allow access for the applications:

- This is incorrect because the issue described is related to the applications using outdated credentials, not IAM permissions for accessing Secrets Manager.

To resolve the authentication errors, the applications need to be updated to retrieve the latest credentials from Secrets Manager whenever they attempt to authenticate with the database. This ensures that they always use the most current credentials.
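A minimal sketch of that pattern in Python, assuming the applications use a boto3 Secrets Manager client and the secret uses the JSON structure Secrets Manager stores for RDS credentials (`username`, `password`, `host`, `port`, and so on). `CredentialProvider`, `connect_with_retry`, `AuthError`, and the `connect` callback are hypothetical names; `connect` stands in for whatever database driver the application uses. The idea is to cache the secret and, on an authentication failure, fetch it again once before giving up, since the failure may simply mean a rotation has happened.

```python
import json
from typing import Any, Callable, Dict, Optional


class AuthError(Exception):
    """Hypothetical exception the database driver raises on bad credentials."""


class CredentialProvider:
    """Caches a Secrets Manager secret and refreshes it on demand.

    client is assumed to be boto3.client("secretsmanager").
    """

    def __init__(self, client: Any, secret_id: str) -> None:
        self._client = client
        self._secret_id = secret_id
        self._cached: Optional[Dict[str, Any]] = None

    def get(self, force_refresh: bool = False) -> Dict[str, Any]:
        if self._cached is None or force_refresh:
            response = self._client.get_secret_value(SecretId=self._secret_id)
            # RDS credentials are stored as a JSON document in SecretString.
            self._cached = json.loads(response["SecretString"])
        return self._cached


def connect_with_retry(provider: CredentialProvider,
                       connect: Callable[[Dict[str, Any]], Any]) -> Any:
    """Connect with cached credentials; on AuthError, re-fetch once and retry."""
    try:
        return connect(provider.get())
    except AuthError:
        # The password was probably rotated after it was cached.
        return connect(provider.get(force_refresh=True))
```

Retrying only once keeps a genuinely wrong credential from turning into a loop of Secrets Manager calls.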

A Security Engineer launches two Amazon EC2 instances in the same Amazon VPC but in separate Availability Zones. Each instance has a public IP address and is able to connect to external hosts on the internet. The two instances are able to communicate with each other by using their private IP addresses, but they are not able to communicate with each other when using their public IP addresses.

Which action should the Security Engineer take to allow communication over the public IP addresses?

A. Associate the instances to the same security groups.

B. Add 0.0.0.0/0 to the egress rules of the instance security groups.

C. Add the instance IDs to the ingress rules of the instance security groups.

D. Add the public IP addresses to the ingress rules of the instance security groups.

The correct answer is:

D. Add the public IP addresses to the ingress rules of the instance security groups.

### Explanation:

To allow communication between the two EC2 instances over their public IP addresses, the Security Engineer must ensure that the security group ingress rules permit traffic from the public IP addresses of the instances. Here's why:

1. Security Group Rules:

- Security groups act as virtual firewalls for EC2 instances, controlling inbound (ingress) and outbound (egress) traffic. By default, security groups do not allow inbound traffic unless explicitly permitted.

2. Public IP Communication:

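- When an instance sends traffic to another instance's public IP address, the traffic leaves the VPC through the internet gateway, which translates the source address to the sender's public IP. The receiving instance's security group therefore sees the sender's public IP, not its private IP, as the source, so it must explicitly permit inbound traffic from that public IP address.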

3. Ingress Rules:

- Adding each instance's public IP address (as a /32 CIDR block) to the ingress rules of the other instance's security group ensures that traffic from that address is allowed.

### Why not the other options?

- A. Associate the instances to the same security groups:

- While associating instances with the same security group can simplify rule management, it does not allow communication over public IP addresses. A rule that references a security group matches traffic only from the private IP addresses of the group's members, so the ingress rules must still explicitly permit the public IP addresses.

- B. Add 0.0.0.0/0 to the egress rules of the instance security groups:

- This option allows all outbound traffic from the instances, but it does not address the issue of inbound traffic over public IP addresses. The problem is with ingress rules, not egress rules.

- C. Add the instance IDs to the ingress rules of the instance security groups:

- Security group rules do not support instance IDs as a source or destination. Instead, CIDR blocks (IP address ranges), security group IDs, or prefix lists must be used.

By adding the public IP addresses to the ingress rules of the instance security groups, the Security Engineer can enable communication between the instances over their public IP addresses.
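The rule-matching behavior behind this answer can be sketched in Python with the standard `ipaddress` module. This is a simplified model, not the EC2 implementation: it assumes a rule's source is either a CIDR block or a security group reference, and it encodes the behavior described above that a security group reference matches only the private IP addresses of the group's members. `IngressRule`, `is_allowed`, and all addresses and group IDs are made up for illustration.

```python
import ipaddress
from dataclasses import dataclass
from typing import Dict, List, Optional, Set


@dataclass
class IngressRule:
    port: int
    cidr: Optional[str] = None          # e.g. "203.0.113.10/32"
    source_group: Optional[str] = None  # e.g. "sg-0example"


def is_allowed(rules: List[IngressRule], src_ip: str, port: int,
               group_members: Dict[str, Set[str]]) -> bool:
    """Return True if any rule admits traffic from src_ip on port.

    group_members maps a security group ID to the PRIVATE IPs of its members,
    so a group reference never matches a public source address.
    """
    addr = ipaddress.ip_address(src_ip)
    for rule in rules:
        if rule.port != port:
            continue
        if rule.cidr and addr in ipaddress.ip_network(rule.cidr):
            return True
        if rule.source_group and src_ip in group_members.get(rule.source_group, set()):
            return True
    return False  # security groups deny anything not explicitly allowed
```

In this model, a rule that references the shared group admits `10.0.1.10` (a member's private IP) but not `203.0.113.10` (the same instance's public IP as seen after the internet gateway); adding `IngressRule(port=443, cidr="203.0.113.10/32")` is what lets the public-IP traffic through.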

The Security Engineer is managing a web application that processes highly sensitive personal information. The application runs on Amazon EC2. The application has strict compliance requirements, which instruct that all incoming traffic to the application is protected from common web exploits and that all outgoing traffic from the EC2 instances is restricted to specific whitelisted URLs.

Which architecture should the Security Engineer use to meet these requirements?

A. Use AWS Shield to scan inbound traffic for web exploits. Use VPC Flow Logs and AWS Lambda to restrict egress traffic to specific whitelisted URLs.

B. Use AWS Shield to scan inbound traffic for web exploits. Use a third-party AWS Marketplace solution to restrict egress traffic to specific whitelisted URLs.

C. Use AWS WAF to scan inbound traffic for web exploits. Use VPC Flow Logs and AWS Lambda to restrict egress traffic to specific whitelisted URLs.

D. Use AWS WAF to scan inbound traffic for web exploits. Use a third-party AWS Marketplace solution to restrict egress traffic to specific whitelisted URLs.

The correct answer is:

D. Use AWS WAF to scan inbound traffic for web exploits. Use a third-party AWS Marketplace solution to restrict egress traffic to specific whitelisted URLs.

### Explanation:

To meet the compliance requirements for the web application, the Security Engineer should implement the following architecture:

1. AWS WAF (Web Application Firewall):

- AWS WAF is designed to protect web applications from common web exploits such as SQL injection, cross-site scripting (XSS), and other OWASP Top 10 vulnerabilities. By integrating AWS WAF with an Application Load Balancer (ALB) or Amazon CloudFront, the Security Engineer can scan and filter inbound traffic to the application, ensuring protection against web exploits.

2. Third-Party AWS Marketplace Solution for Egress Traffic:

- Restricting egress traffic to specific whitelisted URLs requires a solution that can enforce URL-based filtering. VPC Flow Logs record only IP-level metadata (addresses, ports, protocol, and whether traffic was accepted or rejected), and they are written after the traffic has been handled, so even with Lambda processing them they can neither see URLs nor block a request. A third-party AWS Marketplace solution, such as a next-generation firewall (NGFW) or web proxy, can provide the necessary functionality to restrict egress traffic to specific whitelisted URLs.
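The core decision such a proxy makes, checking the requested URL against an allowlist before forwarding it, can be sketched in a few lines of Python. This shows only that decision step under stated assumptions: the allowlist entries and the `is_egress_allowed` name are hypothetical, only HTTPS is permitted, and `*.domain` entries match subdomains but not the bare domain. A real proxy or NGFW would also handle TLS (for example by inspecting SNI), DNS, and logging.

```python
from urllib.parse import urlsplit

# Hypothetical allowlist: exact hosts, or "*.domain" for any subdomain.
ALLOWED_HOSTS = {
    "api.partner.example",
    "*.updates.example",
}


def is_egress_allowed(url: str, allowed=ALLOWED_HOSTS) -> bool:
    """Allow only HTTPS requests whose host is on the allowlist."""
    parts = urlsplit(url)
    if parts.scheme != "https" or parts.hostname is None:
        return False
    host = parts.hostname  # urlsplit returns the hostname in lower case
    for entry in allowed:
        if entry.startswith("*."):
            # Match on a dot-prefixed suffix so "evilupdates.example" fails.
            if host.endswith(entry[1:]):
                return True
        elif host == entry:
            return True
    return False  # default deny for anything not explicitly listed
```

Matching on the parsed hostname rather than on a raw string prefix is what stops lookalike hosts such as `api.partner.example.attacker.example` from slipping through.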

### Why not the other options?

- A. Use AWS Shield to scan inbound traffic for web exploits. Use VPC Flow Logs and AWS Lambda to restrict egress traffic to specific whitelisted URLs:

- AWS Shield is designed for DDoS protection, not for scanning inbound traffic for web exploits. Additionally, VPC Flow Logs and Lambda are not suitable for enforcing URL-based egress traffic restrictions.

- B. Use AWS Shield to scan inbound traffic for web exploits. Use a third-party AWS Marketplace solution to restrict egress traffic to specific whitelisted URLs:

- AWS Shield is not designed to scan inbound traffic for web exploits. It focuses on DDoS protection, making this option unsuitable for meeting the requirement to protect against common web exploits.

- C. Use AWS WAF to scan inbound traffic for web exploits. Use VPC Flow Logs and AWS Lambda to restrict egress traffic to specific whitelisted URLs:

- While AWS WAF is suitable for scanning inbound traffic, VPC Flow Logs and Lambda are not designed for URL-based egress traffic filtering. They lack the granularity and functionality required to enforce whitelisted URLs.

By using AWS WAF for inbound traffic protection and a third-party AWS Marketplace solution for egress traffic filtering, the Security Engineer can effectively meet the compliance requirements for the web application.

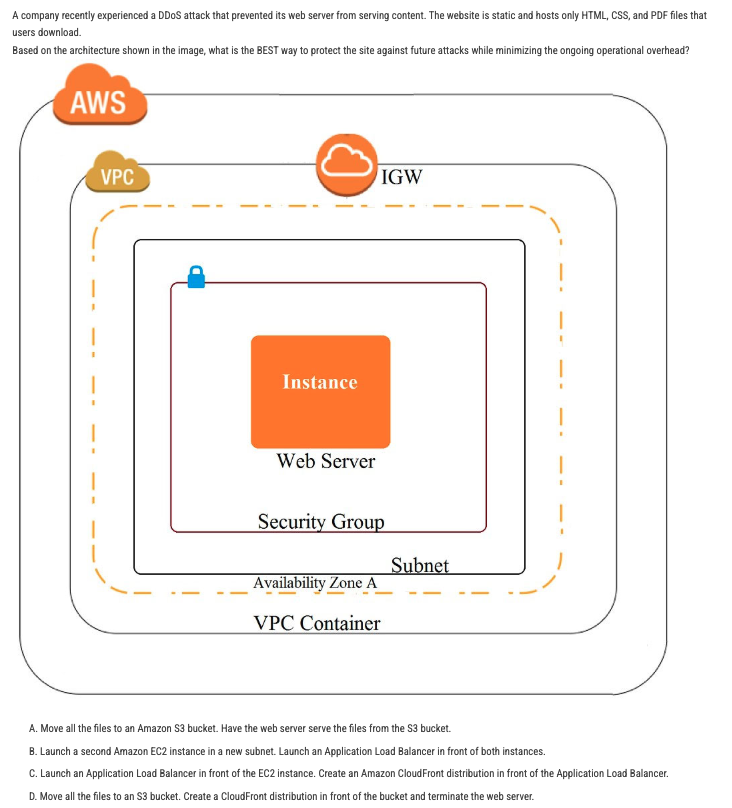

The correct answer is:

D. Move all the files to an S3 bucket. Create a CloudFront distribution in front of the bucket and terminate the web server.

### Explanation:

To protect the static website against future DDoS attacks while minimizing ongoing operational overhead, the following approach is the most effective:

1. Move Files to Amazon S3:

- Amazon S3 is designed for storing and serving static content, such as HTML, CSS, and PDF files. By moving the files to an S3 bucket, you eliminate the need for a web server, reducing the attack surface and operational complexity.

2. Create a CloudFront Distribution:

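- Amazon CloudFront is a content delivery network (CDN) that caches content at edge locations, which improves performance and absorbs traffic spikes away from the origin. CloudFront distributions are automatically protected by AWS Shield Standard against common network and transport layer DDoS attacks. By creating a CloudFront distribution in front of the S3 bucket, you can serve the static content securely and efficiently.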

3. Terminate the Web Server:

- Since the website is static and all files are served from S3 and CloudFront, the web server is no longer needed. Terminating the web server reduces operational overhead and eliminates the risk of DDoS attacks targeting the server.
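One common way to keep visitors from bypassing CloudFront and hitting the bucket directly is CloudFront origin access control (OAC), paired with a bucket policy that admits only the CloudFront service principal for one specific distribution. The Python sketch below builds such a policy document; the bucket name, account ID, and distribution ID are placeholders, and `cloudfront_oac_bucket_policy` is a hypothetical helper. With this setup the bucket itself can stay private with S3 Block Public Access enabled.

```python
import json


def cloudfront_oac_bucket_policy(bucket: str, account_id: str, distribution_id: str) -> str:
    """Build a bucket policy that lets only one CloudFront distribution read objects."""
    distribution_arn = f"arn:aws:cloudfront::{account_id}:distribution/{distribution_id}"
    policy = {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "AllowCloudFrontServicePrincipalReadOnly",
                "Effect": "Allow",
                "Principal": {"Service": "cloudfront.amazonaws.com"},
                "Action": "s3:GetObject",
                "Resource": f"arn:aws:s3:::{bucket}/*",
                # Pin the grant to this distribution so other CloudFront
                # distributions cannot use the bucket as their origin.
                "Condition": {"StringEquals": {"AWS:SourceArn": distribution_arn}},
            }
        ],
    }
    return json.dumps(policy, indent=2)
```

For example, `cloudfront_oac_bucket_policy("example-static-site", "111122223333", "EDFDVBD6EXAMPLE")` returns the JSON text to apply as the bucket policy.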

### Why not the other options?

- A. Move all the files to an Amazon S3 bucket. Have the web server serve the files from the S3 bucket:

- While moving files to S3 reduces storage complexity, the web server is still required to serve the files, leaving it vulnerable to DDoS attacks. This option does not fully address the DDoS protection requirement.