132 midterm

1/46

There's no tags or description

Looks like no tags are added yet.

Name | Mastery | Learn | Test | Matching | Spaced |

|---|

No study sessions yet.

47 Terms

How many solutions can a linear system have

0, 1, or infinite

inconsistent system

0 = k (a row of all zeros except the last in the augmented matrix)

how many bits represent a number in a computer

64 bits

what happens when we store a real number in a computer

Most real numbers cannot be represented exactly in a computer. Generally, we wind up storing the closest floating point number that the computer can represent.

How many digits of accuracy is there in a floating point

We only have about 16 digits of accuracy

The relative error that can be introduced any time a real number is stored in a computer.

approximately 10^-16.

leading entry

the first nonzero element in a row

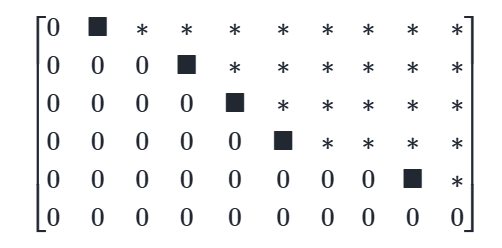

echelon form/row echelon form

All nonzero rows are above any rows of all zeros.

Each leading entry of a row is in a column to the right of the leading entry of the row above it.

All entries in a column below a leading entry are zeros.

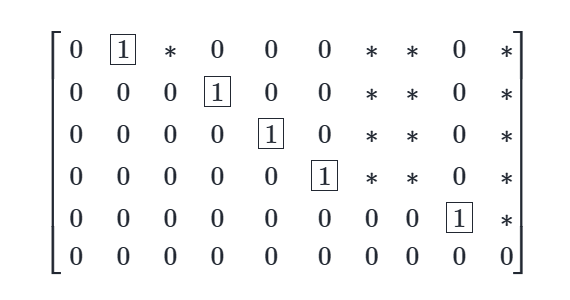

reduced echelon form/ row reduced echelon form

if it is in echelon form, and furthermore:

The leading entry in each nonzero row is 1.

Each leading 1 is the only nonzero entry in its column.

pivot position

the position of a leading 1 in the reduced echelon form of a matrix

The Cost of Gaussian Elimination

~(2/3)n³ flops

basic variable

Variables whose column has a pivot are called basic variables.

free variables

Variables without a pivot in their column are called free variables.

span

is the set of all possible linear combinations of a given set of vectors.

a matrix spans the whole R^n space when there is a pivot in every row

identity matrix

1s on the diagonal and 0s everywhere else

Linear dependence

The columns of A are linearly dependent if and only if Ax=0 has an infinite solution set (can include the trivial solution).

The solution set of Ax=0 has a free variable,

in other words, A does not have a pivot in every column.

If a set contains more vectors than there are entries in each vector, then the set is linearly dependent.

If a set S={v1,...,vp} in R^n contains the zero vector, then the set is linearly dependent.

Linear independence

The columns of the matrix A are linearly independent if and only if the equation Ax=0 has only the trivial solution x = 0,

pivot in each column

A transformation T is linear if:

T(u+v)=T(u)+T(v) for all u,v in the domain of T; and

T(cu)=cT(u) for all scalars c and all u in the domain of T.

T(0) = 0

rotation matrix (through angle θ)

A=[cosθ , −sinθ

sinθ cosθ].

counterclockwise rotation for a positive angle.

The determinant (2×2)

A=[a b

c d]

ad - bc

onto

A mapping T:R^n→R^m is said to be onto R^m if each b in R^m is the image of at least one x in R^n.

Informally, T is onto if every element of its codomain is in its range.

T is onto if there is at least one solution x of T(x)=b for all possible b

one-to-one

A mapping T:R^n→R^m is said to be one-to-one if each b in R^m is the image of at most one x in R^n.

If T is one-to-one, then for each b, the equation T(x)=b has either a unique solution, or none at all.

A vector b is in the range of a linear transformation if

the augmented matrix with vector b is consistent

Rules for Transposes

(AT)T=A

(A+B)T=AT+BT

For any scalar r, (rA)T=r(AT)

(AB)T=BTAT

For two n×n matrices, what is the computational cost of multiplication

2n³

matrix-vector multiplication what is the computational cost

2n²

inverse matrix of 2×2

If ad−bc≠0, then A is invertible and A−1=1/(ad−bc)[d −b

−c a].

If ad−bc=0, then A is not invertible.

inverse matrix larger than 2×2

Ax1=e1

Ax2=e2

⋮

Axn=en

solve the linear systems to get each corresponding column of A^-1

If any of the systems are inconsistent or has an infinite solution set, then A^−1 does not exist.

computational cost of matrix inversion

∼2n³

Using the Matrix Inverse to Solve a Linear System

x = A^-1b

rule for inverse matrices

(A−1)−1=A.

(AT)−1=(A−1)T.

(AB)−1=B-1A−1.

Invertible Matrix Theorem (only applies to square matrices)

the following statements are equivalent; that is, they are either all true or all false:

A is an invertible matrix.

AT is an invertible matrix.

The equation Ax=b has a unique solution for each b in Rn.

A is row equivalent to the identity matrix. (the reduced row echelon form of A is I.)

A has n pivot positions.

The equation Ax=0 has only the trivial solution.

The columns of A form a linearly independent set.

The columns of A span R^n

The linear transformation x↦Ax maps Rn onto Rn.

The linear transformation x↦Ax is one-to-one.

Invertible Linear Transformations

A linear transformation T:Rn→Rn is invertible if there exists a function S:Rn→Rn such that

S(T(x))=x for all x∈Rn,

and

T(S(x))=x for all x∈Rn.

probability vector

a vector of nonnegative entries that sums to 1.

stochastic matrix

a square matrix of nonnegative values whose columns each sum to 1.

Markov chain

a dynamical system whose state is a probability vector and which evolves according to a stochastic matrix.

steady state vectors

If P is a stochastic matrix, then a steady-state vector (or equilibrium vector) for P is a probability vector q such that:

Pq=q.

It can be shown that every stochastic matrix has at least one steady-state vector.

how to solve a Markov Chain for its steady state:

Solve the linear system (P−I)x=0.

The system will have an infinite number of solutions, with one free variable. Obtain a general solution.

Pick any specific solution (choose any value for the free variable), and normalize it so the entries add up to 1.

regular stochastic matrix

We say that a stochastic matrix P is regular if some matrix power Pk contains only strictly positive entries.

has a unique steady-state vector

numpy function that makes and n x m matrix of all zeros

np.zeros((n, m))

numpy function that makes and n x m matrix of all ones

np.ones((n, m))

numpy function that creates the n x n identity matrix

np.identity(n) or np.eye(n)

numpy function that creates a matirx with a, b, c,d on the diagonal

np.diag([a, b, c, d])

numpy function that creates an n x m matrix with random entries [0, 1)

np.random.rand(n, m)

numpy code for matrix multiplication of two matrices A and B

A @ B

numpy function to compare if two matrices A and B are equivalent/close

np.isclose(A, B) or np.allclose(A, B)

numpy fuction computes dot product of A and B (either vector or matrix)

np.dot(A, B)