Quasi-experimental designs and applied research


20 Terms

dual functions of applied research

Solve real-world problems

Increases basic knowledge, evaluates theory

applied research in history

Researchers were trained in basic research but felt pressure to produce “relevance”

ethical dilemmas in applied research

consent, privacy, potential coercion

trade-off between internal and external validity

Applied research tends to gain external validity, but internal validity may suffer

Problems unique to between-subjects designs

Can be difficult to create equivalent groups

Problems unique to within-subjects designs

Uncontrolled order effects, attrition

quasi-experimental design

Less than complete control and no random assignment, so no firm causal conclusions

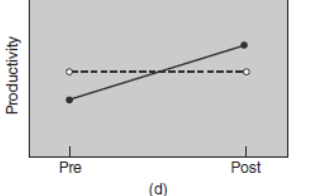

Non-equivalent control group designs

Typically (but not necessarily) include pretests and posttests

Random assignment to groups not possible for practical reasons

example of nonequivalent control groups design

Two factories, one in Pittsburgh and one in Cleveland

Implement flextime (work 8 hours, but at whatever times you choose) in Pittsburgh; Cleveland serves as the nonequivalent control group
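
One common way to read a pretest/posttest design like this is to compare the change in each group rather than the raw posttest scores. The sketch below is only an illustration: the productivity scores are made up, and `gain_difference` is a hypothetical helper name, not something from the course material.

```python
from statistics import mean


def gain_difference(treat_pre, treat_post, ctrl_pre, ctrl_post):
    """Return (treatment gain, control gain, difference between the gains)."""
    treat_gain = mean(treat_post) - mean(treat_pre)
    ctrl_gain = mean(ctrl_post) - mean(ctrl_pre)
    return treat_gain, ctrl_gain, treat_gain - ctrl_gain


# Hypothetical productivity scores, invented for illustration only.
pittsburgh_pre = [50, 52, 48, 50]    # flextime factory, before
pittsburgh_post = [56, 58, 54, 56]   # flextime factory, after
cleveland_pre = [45, 47, 43, 45]     # control factory, before
cleveland_post = [46, 48, 44, 46]    # control factory, after

treat_gain, ctrl_gain, diff = gain_difference(
    pittsburgh_pre, pittsburgh_post, cleveland_pre, cleveland_post
)
print(f"Pittsburgh gain: {treat_gain}, Cleveland gain: {ctrl_gain}, difference: {diff}")
```

Because the groups were not randomly assigned, a larger gain in Pittsburgh is suggestive but does not rule out other differences between the two factories.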

regression to the mean and matching

Matching on pretest scores may produce:

Experimental group that scores higher than population on pretest

Control group that scores lower than population on pretest

Both groups may regress to mean on posttest, masking any real change due to treatment
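
The masking effect described above can be seen in a small simulation. This is a rough sketch under stated assumptions, not a model of any real study: the population means (90 and 110), the standard deviations, the matching window (95 to 105), and the function name `simulate_matched_groups` are all invented for illustration.

```python
import random
from statistics import mean


def simulate_matched_groups(n=20000, treatment_effect=0.0, seed=0):
    """Match two unequal populations on pretest and return group means."""
    rng = random.Random(seed)

    def sample(pop_mean, effect):
        matched = []
        for _ in range(n):
            true_score = rng.gauss(pop_mean, 10)
            pre = true_score + rng.gauss(0, 10)            # noisy pretest
            post = true_score + effect + rng.gauss(0, 10)  # noisy posttest
            if 95 <= pre <= 105:                           # keep "matched" scorers only
                matched.append((pre, post))
        return matched

    # Assumed: the experimental population averages 90, the control 110.
    exp = sample(90, treatment_effect)
    ctrl = sample(110, 0.0)
    return {
        "exp_pre": mean([pre for pre, _ in exp]),
        "exp_post": mean([post for _, post in exp]),
        "ctrl_pre": mean([pre for pre, _ in ctrl]),
        "ctrl_post": mean([post for _, post in ctrl]),
    }


no_effect = simulate_matched_groups(treatment_effect=0.0)
real_effect = simulate_matched_groups(treatment_effect=5.0)
print(no_effect)
print(real_effect)
```

With no treatment at all, the matched experimental group drifts down toward its population mean and the matched control group drifts up toward its own. With a real 5-point benefit, the experimental group's observed gain comes out much smaller than 5, because regression pulls in the opposite direction.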

Nonequivalent control group designs without pretests

Example: the design ruled out the alternative explanation that people in California would always have more earthquake nightmares

interrupted time series design

Able to see natural fluctuations and why they happen

Useful for evaluating overall trends
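
One simple way to use the pre-intervention trend is to project it forward and ask how far the post-intervention observations fall from that projection. The sketch below assumes a straight-line trend and uses made-up numbers; `fit_line` and `mean_departure_from_trend` are hypothetical helper names.

```python
def fit_line(xs, ys):
    """Ordinary least-squares slope and intercept for paired lists."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def mean_departure_from_trend(series, intervention_index):
    """Average gap between post-intervention values and the projected pre-trend."""
    pre = series[:intervention_index]
    post = series[intervention_index:]
    slope, intercept = fit_line(list(range(len(pre))), pre)
    departures = [
        y - (intercept + slope * t)
        for t, y in enumerate(post, start=len(pre))
    ]
    return sum(departures) / len(departures)


# Hypothetical yearly counts: rising before the program, lower afterwards.
observations = [10, 12, 14, 16, 13, 15, 17]
print(mean_departure_from_trend(observations, intervention_index=4))
```

A negative value means the post-program observations sit below where the earlier trend was heading. Real series need more pre-intervention points than this toy example, since the design depends on seeing the normal fluctuations first.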

variations on basic time series design

Add a control group; add a switching replication; add a second DV that is not expected to be influenced by the program

program evaluation

Started in the 1960s to evaluate social programs. Asks four questions: Does the need exist? Does the program function as planned? What is the outcome? Is it worth the cost?

needs analysis

Census data

Surveys of available resources (would the program repeat existing services?)

Surveys of potential users

Key informants, focus groups, community forums

formative evaluation

Evaluating program while in progress

Implemented as planned?

Program audit

Pilot study

summative evaluation

Program effectiveness

More threatening than formative evaluation

Use of quasi-experimental designs

Failure to reject H0 can be a useful outcome

New programs have to prove themselves

cost analysis

Two equally effective programs, but may differ in costs

Most cost-effective wins
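
The comparison can be made concrete as cost per unit of outcome. This is a minimal sketch with invented figures; the program names, dollar amounts, and the helper `cost_per_outcome` are assumptions for illustration only.

```python
def cost_per_outcome(total_cost, outcome_units):
    """Cost of producing one unit of outcome (e.g., one participant helped)."""
    if outcome_units <= 0:
        raise ValueError("outcome_units must be positive")
    return total_cost / outcome_units


# Hypothetical programs: (total cost in dollars, participants who improved).
programs = {
    "Program A": (50000, 100),
    "Program B": (30000, 100),
}

# Equal effectiveness, so the lower cost per outcome decides.
best = min(programs, key=lambda name: cost_per_outcome(*programs[name]))
for name, (cost, outcomes) in programs.items():
    print(name, cost_per_outcome(cost, outcomes))
print("Most cost-effective:", best)
```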

qualitative analysis

Quantitative data are often supplemented with qualitative data at various steps of program evaluation

ethics and program evaluation

Consent issues → special populations

Confidentiality issues

Perceived injustice (participant crosstalk: the control group perceives itself to be at a disadvantage)

Stakeholder conflicts