CPS 470 Final Exam

1/99

Earn XP

Description and Tags

Spring 2025

Name | Mastery | Learn | Test | Matching | Spaced |

|---|

No study sessions yet.

100 Terms

Packet delay

d(proc) + d(queue) + d(trans) + d(prop)

d(proc)

nodal processing delay

d(queue)

queueing delay

d(trans)

transmission delay = L/R where L = packet length and R = link transmission rate

d(prop)

Propagation delay = d/s where d = length of physical link and s = propagation speed

Traffic intensity

(L*a)/R where a = average packet arrival rate, L = packet length, and R = link bandwidth. as traffic intensity increases, queuing delay also increases.

instantaneous throughput

rate at which bits are being sent from sender to receiver at a given point in time

average throughput

rate at which bits are being sent from sender to receiver over a longer period of time

bottleneck link

link on end-end path that constrains end-end throughput

per connection end-end throughput

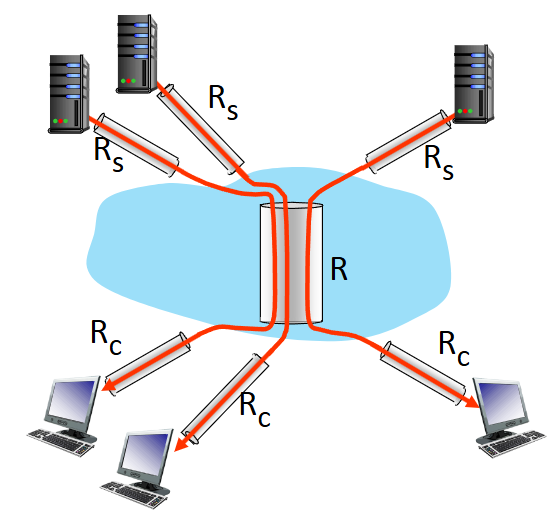

min(Rc, Rs, R/(number of links))

Web cache (proxy server)

satisfy client requests without involving origin server. Helps to improve user experience by decreasing the time it takes to retrieve a webpage.

Domain Name System (DNS)

distributed database implemented in hierarchy of many name servers. Hosts, DNS servers communicate to resolve names (address/name translation). Runs on top of UDP protocol on the application layer

DNS hierarchy

Root < top level domain < authoritative

Top level domain servers

.com, .org, etc DNS servers

authoritative DNS servers

individual websites DNS server. managed by top level domain

root name servers

managed by ICANN (internet corporation for assigned names and numbers)

local DNS name server

when host makes a DNS query, it is sent to the local DNS server first. If the local cache has a copy of the requested file, it will send it (the file may be out of date).

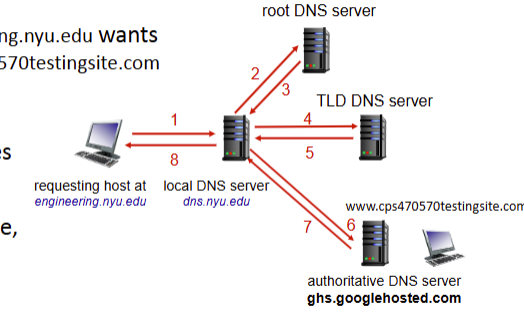

Iterated query

method of DNS name resolution that contacts each server individually from the root to resolve the name

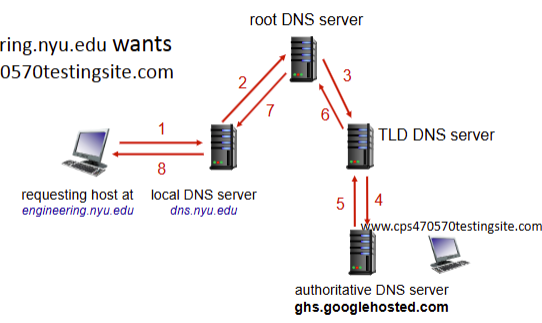

Recursive query

method of DNS resolution that puts the burden of name resolution of the first contacted name server. heavy load at upper levels of hierarchy

Recursive vs iterated query

iterative query more widely adopted because of the burden recursive query has on the upper levels of the DNS hierarchy

congestion

too many sources sending too much data too fast for the network to handle. manifests as long queueing delays and packet loss due to buffer overflow at routers.

flow control

one sender sending too fast for one receiver.

end-end congestion control

congestion inferred from observed loss and delays.

AIMD (additive increase; multiplicative decrease)

senders can increase sending rate until packet loss occurs, then decrease sending rate on loss event.

Additive increase

increase sending rate by 1 MSS every RTT until loss detected.

multiplicative decrease

cut sending rate in half at each loss event

TCP rate

cwnd/RTT bytes/sec

cwnd

current TCP window size. It is dynamically adjusted in response to observed network congestion. it helps limit sending speed based on congestion level.

TCP sender limits transmission

LastByteSent - LastByteAcked <= cwnd

TCP slow start

when connection begins, increase sending rate exponentially until first loss event. initially, cwnd = 1 MSS, cwnd doubled every RTT.

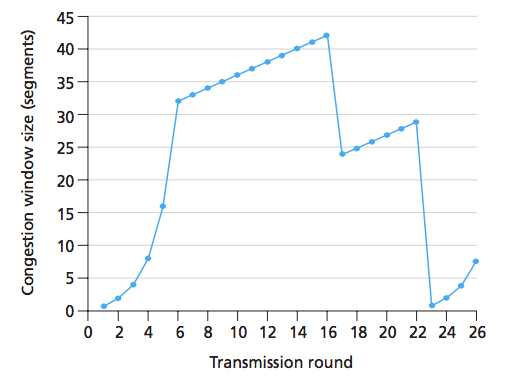

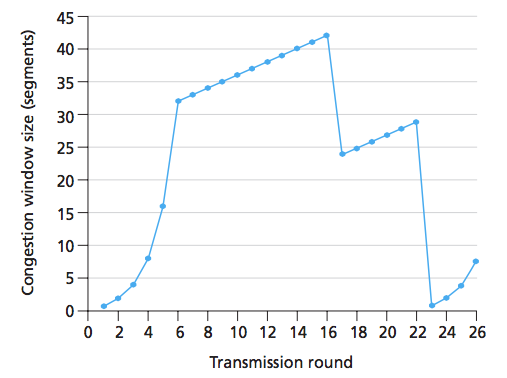

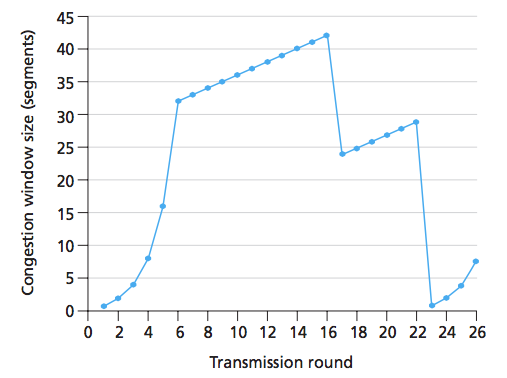

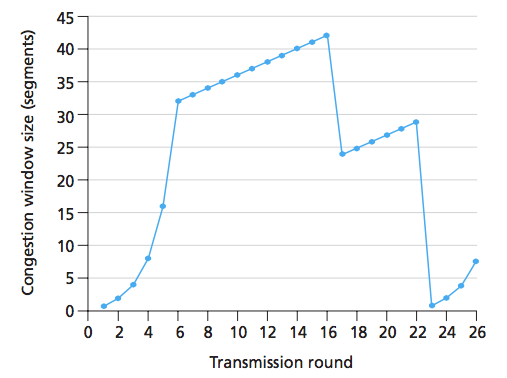

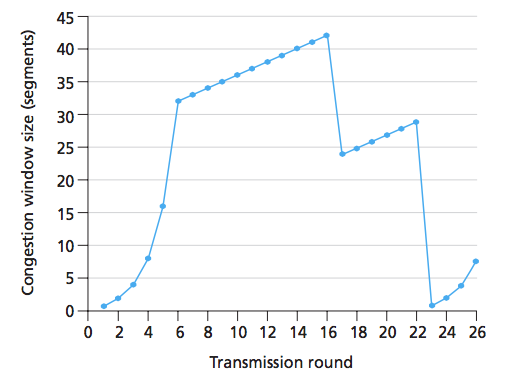

what is the phase from t=0 to t=6?

slow start

what is the phase from t=6 to t=16

congestion control

where do the loss events occur in the graph?

t=16 and t=22

where does the triple duplicate ack loss event occur?

t=16

where does the timeout loss occur?

t=22

what does cwnd get set to after a triple duplicate ack event?

cwnd/2 + 3

what does cwnd get set to after a timeout event

1 MSS

TCP bottleneck link

throughput controlled by bottleneck link. limit traffic going through the bottleneck link

Delay-based TCP congestion control

keep bottleneck link busy transmitting, but avoid high delays/buffering.

measured throughput

(#bytes sent in last RTT interval)/(RTT measured)

how does cwnd change in delay-based tcp congestion control?

if the measured throughput is very close to uncongested throughput, increase cwnd linearly. else if the measured throughput is far below the uncongested throughput, decrease cwnd linearly

TCP fairness

TCP is fair. If K TCP sessions share same bottleneck link of bandwidth R, each should have an average rate of R/K. More TCP connections = lower bandwidth. applications can try to speed up sending by opening up multiple parallel TCP connections.

UDP fairness

UDP is not fair. It does not want rates throttled by congestion control. There is no one policing the use of congestion control

IP address

32-bit identifier associated with each host or router interface. Often in dotted decimal format.

Subnet

device interfaces that can physically reach each other without passing through an intervening router.

IP address structure

subnet part: devices in same subnet have common high-order bits

host part: remaining lower order bits

2 reserved addresses for subnet ID

CIDR format

Classless InterDomain Routing. format of subnet address: a.b.c.d/x where x is the number of bits in the subnet portion of the address

What is the role of the IP address of the routers IP interface in forwarding datagrams through the router?

first-hop router to send outside of the subnet.

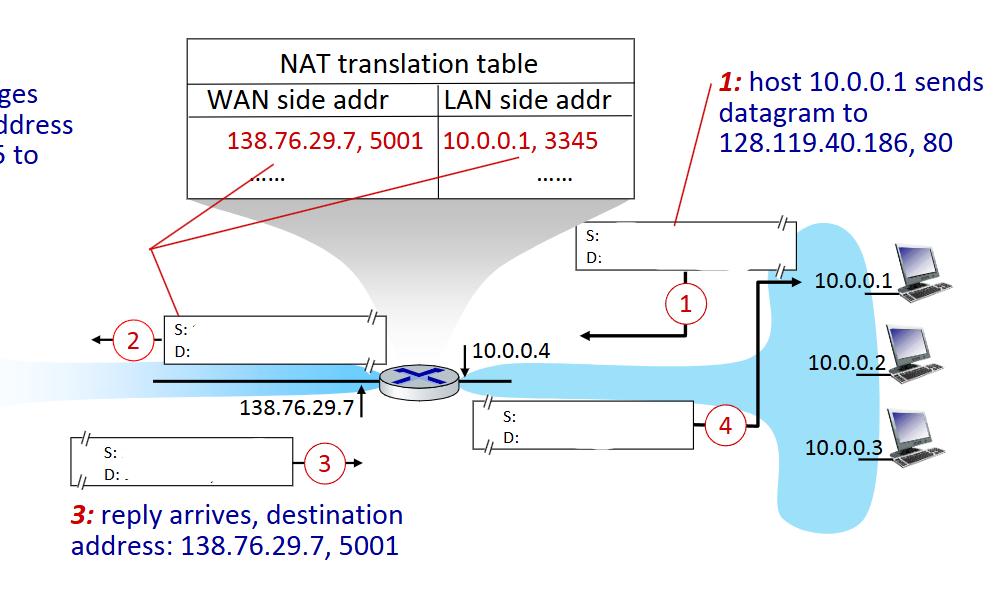

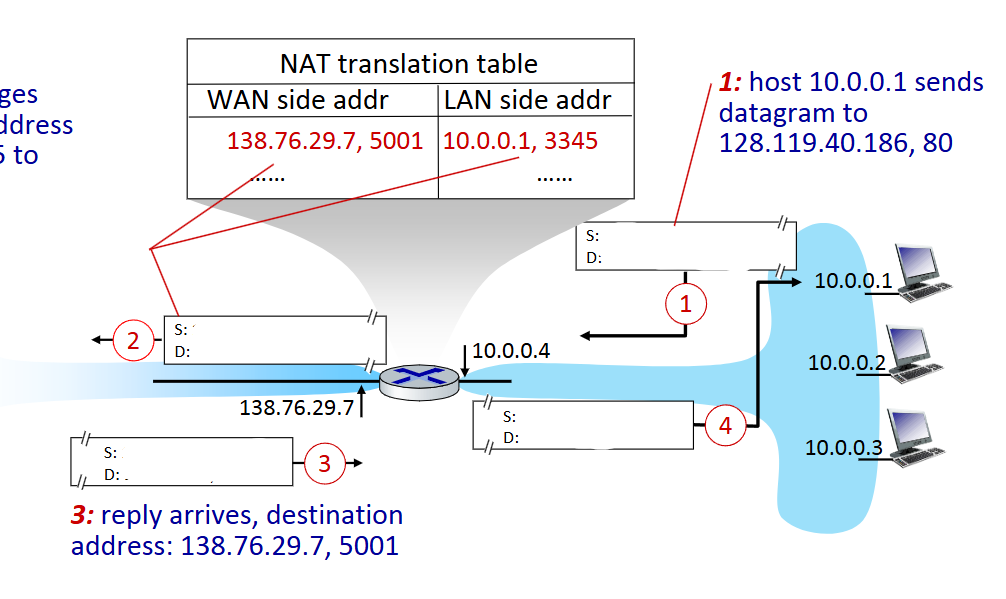

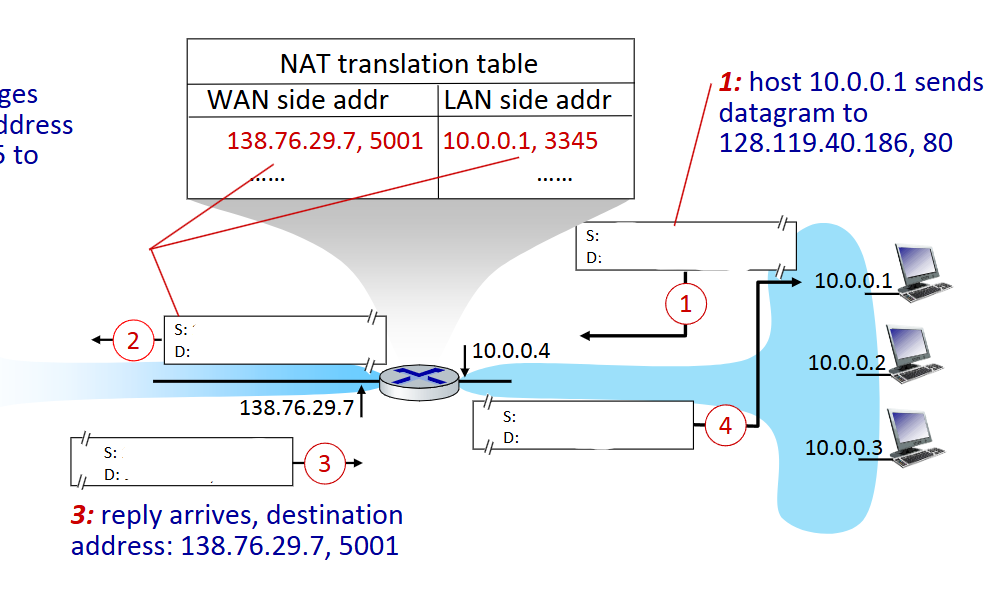

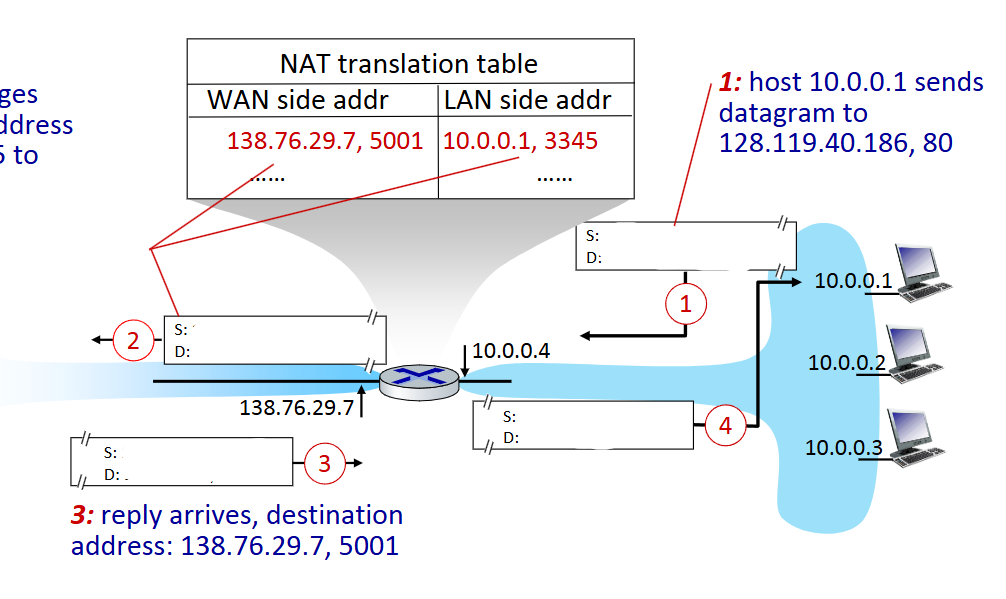

NAT (network address translation)

all devices in local network share just one IPv4 address (as far as the outside world is concerned). all datagrams leaving the local network have the same source NAT IP address but different port numbers.

Advantages of NAT

Just one IP address needed from provider ISP for ALL devices in the local network.

can change address of host in local network without notifying the outside world

can change ISP without changing addresses of hosts in local network.

security: devices inside local net not directly accessible or visible by outside world.

what is the source and destination IP and port at step 1

Source: 10.0.0.1, 3345

Destination: 128.119.40.186, 80

what is the source and destination IP and port at step 2

Source: 138.76.29.7, 5001

Destination: 128.119.40.186, 80

what is the source and destination IP and port at step 3

Source: 128.119.40.186, 80

Destination: 138.76.29.7, 5001

what is the source and destination IP and port at step 4

Source: 128.119.40.186, 80

Destination: 10.0.0.1, 3345

Domains

autonomous systems (AS)

Intra-AS (intra-domain)

routing within the same AS (network). all routers in the same AS must run the same intra-domain protocol.

gateway router

at “edge” of its own AS, as links to routers in other AS’es. performs both inter-domain and intra-domain routing.

Inter-AS (inter-domain)

routing among AS’es.

Forwarding table for AS’es

intra-AS routing determines entries for destinations within AS. inter- and intra-AS determine entries for external destinations. BGP also contributes to the forwarding table

Why need inter-domain routing

defines reachability and where to send packet.

OSPF(Open shortest path first) routing

Uses IP directly instead of TCP or UDP.

each router floods OSPF link-state advertisements to all other routers in entire AS. each router has full topology and uses dijkstra’s algorithm to compute the forwarding table.

BGP (Border gateway protocol)

inter-domain routing protocol. Allows subnets to advertise its existence and the destinations it can reach to the rest of the internet. uses a TCP connection.

eBGP

obtain subnet reachability information from neighboring ASes

iBGP

propagate reachability information to all AS-internal routers.

BGP path advertisement

when receiving an advertisement at a gateway router, advertise to all possible, both internal and external.

gateway router may learn about multiple paths to a destination. Decides which path to use based on inter-AS policy.

Multiple access protocol

distributed algorithm that determines how nodes share channels. needed to avoid/deal with collisions.

channel partitioning

divide channel into smaller “pieces” (time slots, frequency, code) and allocate a piece to a node for exclusive use.

random access

channel not divided, allow collisions and “recover” from them.

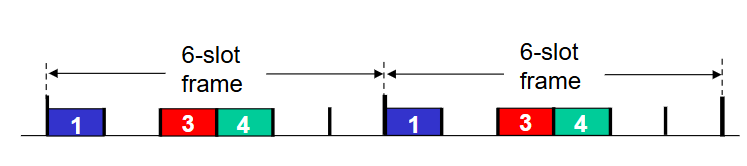

TDMA (time division multiple access)

access to channel in rounds. each station gets a fixed length slot (length = packet transmission time) in each round. unused slots go idle.

cons: wasted bandwidth when not sending

pros: dedicated time slots, can send at full bandwidth, no collisions.

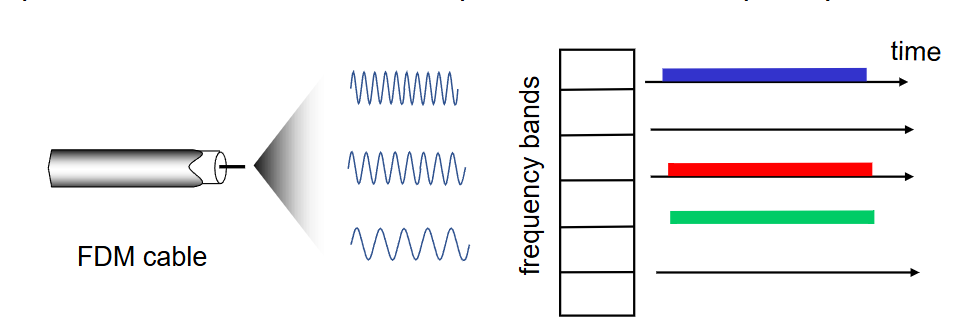

FDMA (frequency division multiple access)

channel spectrum divided into frequency bands, each station assigned a fixed frequency band. unused transmission time in frequency bands go idle.

no collisions, no time slots, speed depends on frequency band used.

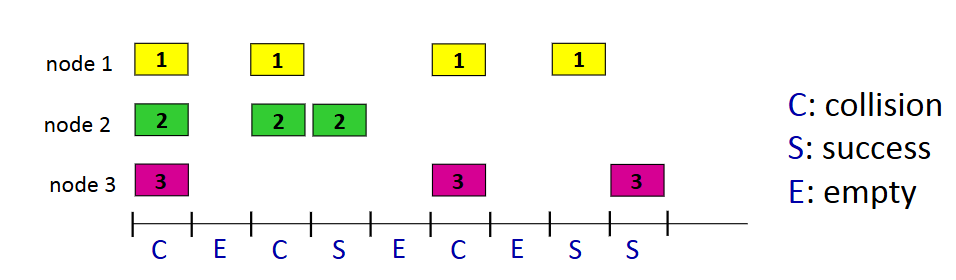

Slotted ALOHA

when a node obtains a frame, transmits in next slot. If no collision: node can send new frame in next slot. If collision: node retransmits frame in each subsequent slot with probability p until success.

why randomization in slotted ALOHA

chance to avoid collision

Slotted ALOHA pros

single active node can continuously transmit at full rate of channel.

Highly decentralized: only slots in nodes need to be in sync

simple.

Slotted ALOHA cons

collisions

idle slots wasting slots

nodes may be able to detect collision in less than time to transmit packet

need clock synchronization

CSMA (carrier sense multiple access)

listen before transmit: if channel sensed idle: transmit entire frame. if channel sensed busy: defer transmission. collisions can still occur because of propagation delay causing two nodes to not hear each others just-started transmission.

CSMA/CD

CSMA with collision detection. used by ethernet.

Collisions detected within a short time, colliding transmissions aborted, reducing channel wastage. reduces the amount of time wasted in collisions.

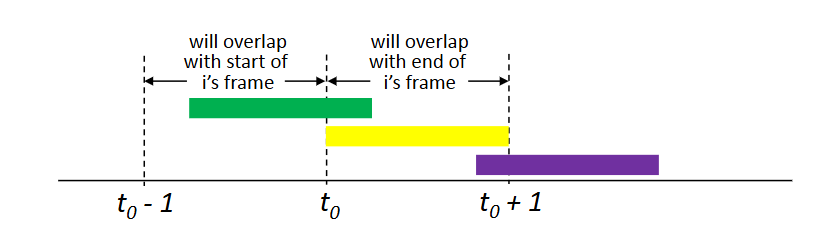

Pure ALOHA

like slotted ALOHA but with no synchronization, nodes can send at any time. Collision probability increased due to lack of synchronization. 18% efficiency

collision

entire packet transmission time wasted

Ethernet CSMA/CD algorithm

NIC receives datagram from network layer, creates frame

if NIC senses channel idle: start frame transmission, else: wait until channel idle, then transmit

If NIC transmits entire frame without collision, NIC is done with frame.

If NIC detects another transmission while sending: abort and send jam signal

After aborting, NIC enters binary (exponential) backoff:

after mth colloson, NIC chooses K at random from {0, 1, 2, (2^m) - 1}. NIC waits K*512 bit times, returns to step 2

more collisions = longer backoff interval

MAC address

unique to each device/host. burned into NIC ROM. 48 bits

ARP (address resolution protocol)

gets interface’s MAC address knowing its IP address. broadcasts query, containing destination IP address, source MAC, and source IP to all adjacent hosts. populates ARP table. Only destination replies to ARP query.

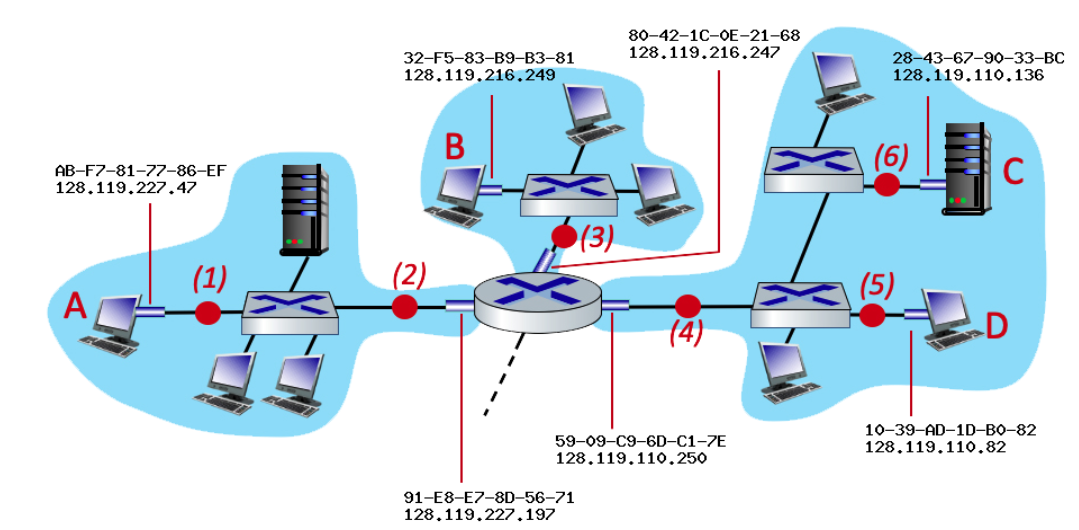

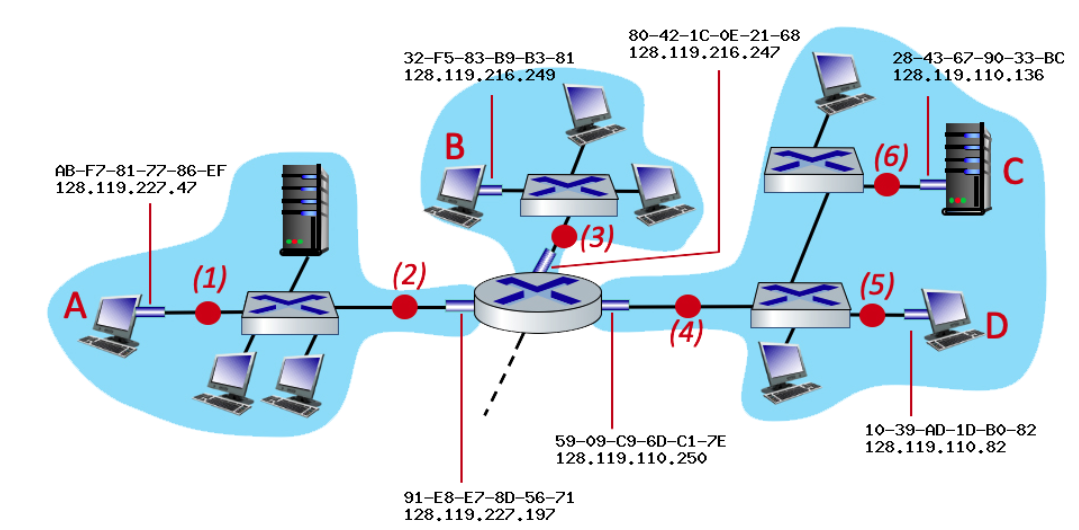

consider an IP datagram being sent from node D to node A. What is the source and destination MAC at point 5

Source: D’s MAC

Destination: Router’s MAC (right interface)

consider an IP datagram being sent from node D to node A. What is the source and destination MAC at point 2

Source: router’s MAC (left interface)

Destination: A’s MAC

Ethernet switches

transparent, link layer device that stores and forwards ethernet frames. uses CSMA/CD to access segment. plug and play and self learning, no need to be configured.

switch forwarding tables.

each entry in the switch table contains the MAC of each host, the interface to reach the host, and the time stamp. switch learns which hosts can be reached through which interfaces by flooding each interface in search of the correct destination.

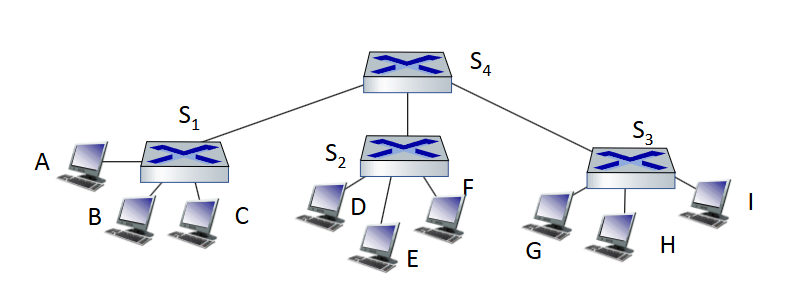

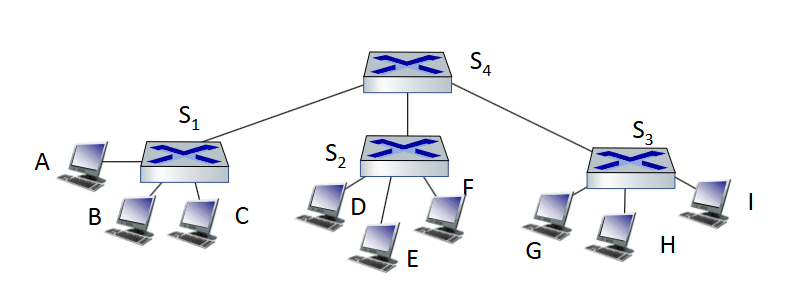

When sending from A to G, which interfaces learn A’s information?

S1, B, C, S4, S2, D, E, F, S3, G, H, I

When sending from A to G, which interfaces learn G’s information?

S3, S4, S1, A

Virtual Local Area Networks (VLANs)

switch’s ports are divided into groups by the network manager. allows multiple virtual local area networks to be defined over a single physical local area network infrastructure.

VLAN trunking

trunk ports connect VLAN switches using a single link that carries traffic for multiple VLANs. trunk ports behold to all VLANs.

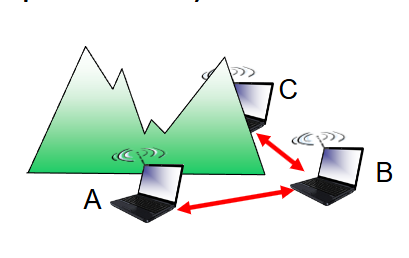

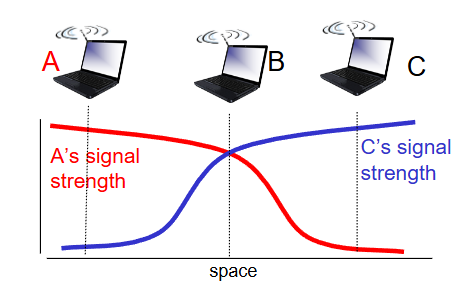

hidden terminal problem

two hosts both have a connection to another host, but cannot hear each other and are unaware of their interference at the shared host.

signal attenuation

one host hears two hosts traffic who cannot hear each other interfering at their shared host.

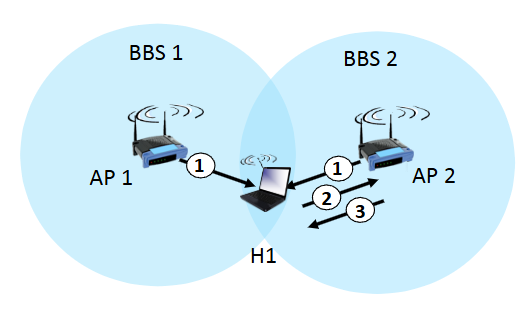

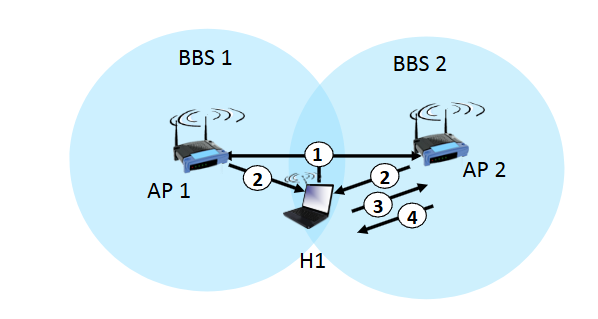

passive scanning

AP's send beacon frames unprompted

active scanning

host request beacon frames from APs

beacon frames

contains APs unique name (SSID) and MAC address

CSMA/CA (collision avoidance)

used by WiFi.

Sender:

1. if sense channel idle for DIFS then transmit entire frame

2. If sense channel bust then start a random backoff time. timer counts down while the channel is idle. transmit when timer expires. if no ACK, increase random backoff interval and repeat step.

Receiver:

If the frame was received OK: return ACK after short inter-frame spacing (SIFS)

DIFS (distributed inter-frame space)

short period of time before sending frame with CSMA/CA

Why do you need ACK in CSMA/CA

because of the hidden terminal problem

WiFi collision avoidance protocol

sender “reserves” channel use for data frames using small reservation packets.

sender first transmits small request-to-send (RTS) packet to BS (base station) using CSMA.

BS broadcasts clear-to-send (CTS) packet in response to RTS.

CTS heard by all nodes so sender transmits data frame uninterrupted.

end-end delay with web cache

(cache hit rate)*(delay when satisfied from cache)+(rate of requests to server)*(delay from origin server)

DHCP

application layer protocol used to assign IP addresses to hosts and give the host the address of the first-hop router and DNS server. encapsulated in UDP, encapsulated in IP, encapsulated in ethernet.