Perception -Vision + Audition


what is light

electromagnetic radiation

the visible spectrum is a sliver of the full electromagnetic spectrum

we can see approximately 380-700 nm

shorter wavelengths = higher frequency

longer wavelengths = lower frequency

gamma rays, x-rays etc. are just labels for different regions of the spectrum
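The wavelength-frequency relationship above can be checked with a short sketch. It assumes the standard relation f = c / λ with the vacuum speed of light; the function name is made up for illustration.

```python
C_LIGHT = 299_792_458.0  # speed of light in vacuum, m/s


def light_frequency_hz(wavelength_nm: float) -> float:
    """Frequency (Hz) of light with the given vacuum wavelength in nanometres."""
    return C_LIGHT / (wavelength_nm * 1e-9)


# The two ends of the visible range quoted in the notes.
for nm in (380, 700):
    print(f"{nm} nm -> {light_frequency_hz(nm) / 1e12:.0f} THz")
```

The short-wavelength (violet) end comes out at a higher frequency than the long-wavelength (red) end, as the notes state.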

how wavelengths act

light bounces off objects in the world and into the eye, which is what allows us to see them

what produces infrared radiation?

anything that emits heat. We emit heat ourselves, so if we could detect infrared we would be constantly bombarded by our own radiation; this is why we are not sensitive to it

Geometrical optics assumes that light rays do the following

• propagate in straight-line paths through a homogeneous medium (homogeneous means there are no differences in density)

• bend at the interface between two dissimilar media (like air and water, air and our eyes, etc.)

• may be absorbed, reflected, or transmitted

what happens when light is reflected (dichromatic reflectance model)?

when you look at matte surfaces, incoming light bounces off in all different directions. It does not just bounce off the surface: some of it goes inside the material, bounces around, and is then reflected back out

imagine a mirror ball: in each facet you would see a different reflection

this room is filled with intersecting rays of light

every time you put your eye in a different position you are seeing a different set of intersecting rays

every position has its own sample of projected rays

the idea of the ambient optic array: at every position in space there is everything that you could potentially see (360 degrees), with rays coming from all sides and intersecting at that point

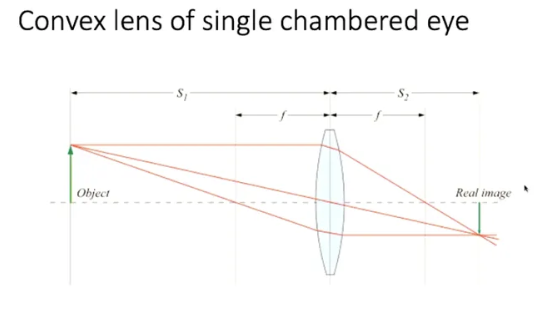

light into a convex lens: how many times does the light change density?

what makes it through the lens: all the different rays coming from 1 position in space end up at the same position after passing through the lens. If the rays did not all converge on 1 spot (the ‘real image’) the image would be blurry

in the image, the light changes density twice: from air into the lens, and from the lens back into air. That is 2 refraction events

what causes refraction?

if light goes from something less dense into something more dense (like air into water), the path of the light changes, because light moves slower in denser media

it is bent towards the normal (the line perpendicular to the surface) if it goes into higher density, and bent away from the normal if it goes from high to low

it takes the path that requires the least amount of time

think of it like this: you are going along, enter a higher-density medium, and get dragged down

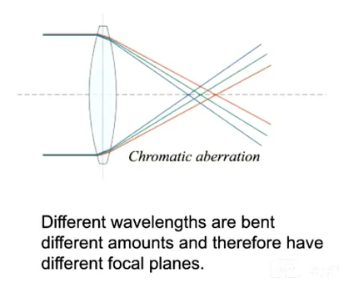
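The bending rule described above is usually written as Snell's law, n₁ sin θ₁ = n₂ sin θ₂. The notes do not name it, so treat the following as a sketch under assumptions: the refractive indices (1.00 for air, 1.33 for water) are typical textbook values, and the function name is hypothetical. Angles are measured from the normal.

```python
import math


def refraction_angle_deg(angle_in_deg, n_in, n_out):
    """Angle from the normal after refraction, or None if no ray is transmitted."""
    s = n_in * math.sin(math.radians(angle_in_deg)) / n_out
    if abs(s) > 1.0:
        return None  # total internal reflection: nothing is refracted
    return math.degrees(math.asin(s))


N_AIR, N_WATER = 1.00, 1.33  # assumed typical values

into_water = refraction_angle_deg(30.0, N_AIR, N_WATER)
back_to_air = refraction_angle_deg(into_water, N_WATER, N_AIR)
print(f"air -> water: 30.0 deg becomes {into_water:.1f} deg (towards the normal)")
print(f"water -> air: {into_water:.1f} deg becomes {back_to_air:.1f} deg (away from the normal)")
```

Going into the slower medium the angle shrinks (the ray is bent towards the normal); coming back out it grows again.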

chromatic aberration

You can't focus all the wavelengths of light using refraction, because it is impossible for them to bend by the same amount, so they cannot all be focused at the same image plane

the amount that light is bent depends on its wavelength

your eye uses refraction to focus light, so the wavelengths that reach your eye are not all going to focus at the same point; they are bent by different amounts. Longer (reddish) wavelengths get bent less than shorter ones. So how do you bring them all into focus at the same point?

you can't get them all to the same point. We actually have red fringes in our vision, but the brain has adapted to them because they are always there

refraction in the human eye

4 refraction events happen in eye

2 different types of fluid in the eye: aqueous humour at the front, vitreous humour at the back (more gelatinous)

when you look at things at different distances your lens changes shape

the first 2 refraction events occur at the cornea: at its front surface (light coming in) and at its back surface (light going out)

light then goes through the pupil and hits the lens, where 2 more refraction events occur

the biggest refraction event (the biggest change in density) is from air into the cornea. This is why LASIK surgery reshapes the cornea: changing its shape changes how light is focused

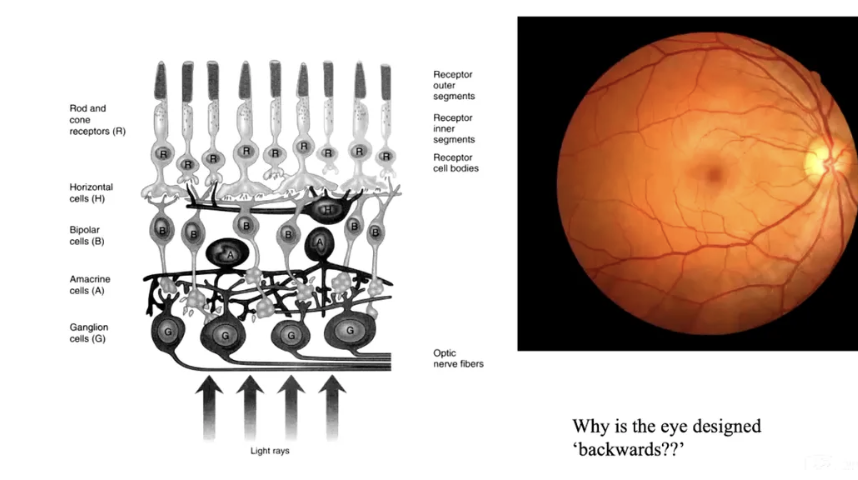

how the eye is designed backwards…

light rays pass through a network of neurons and blood vessels before reaching the receptors. We are looking through these without being aware of them; they are constantly casting shadows onto the receptor surface, which has some interesting perceptual consequences

processing starts at the photoreceptors at the back of the retina, and the signal initially travels away from the brain (weird, I know). So light passes through the neural network, the signal is then processed back through that same network, and finally loops around to head into the brain

ganglion cells are the last layer of cells: the final layer of neural processing in the retina before the signal goes to the brain

microsaccades

every time the eye moves, the vessels and other structures move with it

so that you do not see the mesh of veins and neurons, the eye makes lots of tiny microsaccades

the whole motivation is that your visual system has to avoid seeing the blood vessels and other structures it is always looking through. It does this through microsaccades (you are never really perfectly fixating on anything)

2 types of vision/ 2 sets of photoreceptors

Scotopic vision: low-light, rod dominated

Photopic vision: High light levels, cone dominated

what allows you to see colour with cones but not with rods?

there are 3 different types of cones but only 1 type of rod

if you only have 1 type of photoreceptor you can't tell different wavelengths apart

why can't you see faint stars when you look directly at them, even though you can see them in your periphery?

when you look at something you are pointing your fovea at it. The fovea is where you have the highest density of photoreceptors, but there are no rods in it

if you are ever out in the bush, look at a very faint star out of the corner of your eye; when you then look straight at it, it disappears. You can't see it when you look straight at it because you have no rods in your central vision to detect that very faint light

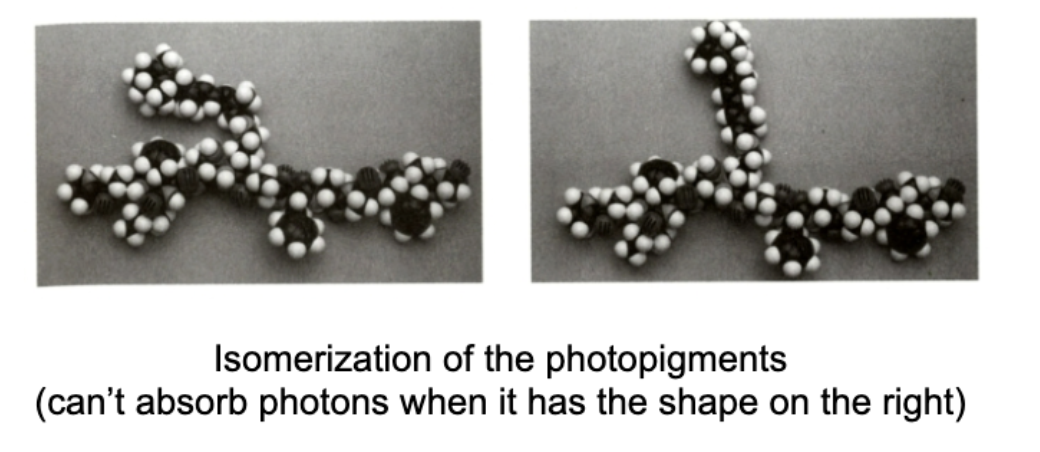

light adaptation: Isomerization of the photopigments

e.g. if you walk out of a dim theatre into bright light it is almost painful, and then it is fine. The pupil response is nearly instantaneous, so the pupils are not what makes it painful; it is the photopigments themselves. All your photoreceptors contain pigment that responds to light. In the dark room your eyes have adjusted to the lower light level. Every time light hits a photopigment molecule it changes shape (isomerizes), and that shape change causes an electrical signal. Once a molecule is in the isomerized shape you can throw photons at it and they have no effect, because it can't change shape again. There is a sea of photopigment, but its capacity to absorb depends on what state each molecule is in. A chemical replenishing process takes minutes to recover, which is why it takes time to adjust to a new light environment

dynamic sensitivity

when you go out into bright light and get used to it, you are finding the right balance between photopigments in the receptive state and in the unreceptive state

colour reproduction

if I know how your cones respond, all I need to do is stimulate the cones in exactly the same way they would be stimulated in the real world; the 3 cone types need to respond in the same ratio

can we tell the difference between wavelength and intensity?

no, not from a single photoreceptor

a photoreceptor just counts the photons that fall within its range of sensitivity. Comparing the weighted responses across the cone types is what tells us the colour

metamers

things that appear visually identical (generate the same perception of colour) but can be physically very different (different wavelengths and amplitudes of light), because they generate the same response in the cones

what does it mean in terms of cone responses if it's a metameric match?

they produce exactly the same relative activation: the weighted sums for the L, M, and S cones are exactly the same. The stimuli are physically different but look the same to us

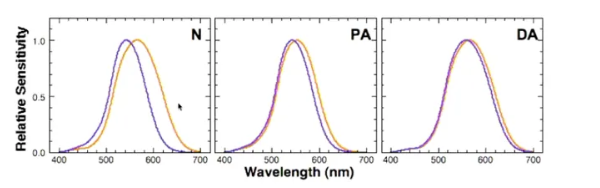
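A toy sketch of a metameric match follows. The cone sensitivities are invented numbers over four coarse wavelength bins, chosen only so the arithmetic is easy to follow; they are not real cone fundamentals, and all names are hypothetical.

```python
# Hypothetical cone sensitivities over four coarse wavelength bins
# (short -> long). The numbers are invented for illustration only.
CONES = {
    "L": [1, 4, 6, 3],
    "M": [2, 6, 5, 1],
    "S": [6, 7, 1, 0],
}


def cone_responses(spectrum):
    """Weighted sum of the light in each bin, one value per cone type."""
    return {name: sum(w * p for w, p in zip(weights, spectrum))
            for name, weights in CONES.items()}


flat = [2, 2, 2, 2]    # same power in every bin
spiky = [3, 1, 3, 1]   # physically different distribution of power

print(cone_responses(flat))
print(cone_responses(spiky))
```

The two spectra are physically different, yet each cone type returns the same weighted sum for both, so under these toy sensitivities they would be indistinguishable.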

Anomalous trichromats:

three cone types, but the L and M sensitivities overlap more than they should

some people have so much overlap that the two cone types almost act as the same type. When green and red are right next to each other they can't tell them apart; when each is presented alone they can usually distinguish them

simultaneous contrast

a perceptual phenomenon where the apparent color or brightness of a stimulus changes due to the influence of its adjacent or surrounding colors.

How it Works

Brightness:

A patch of gray will look lighter when surrounded by a dark color and darker when surrounded by a light color.

Color:

A patch of color will also change in hue, lightness, and chroma (or vividness) depending on the surrounding colors. For example, a neutral gray surrounded by a highly saturated red will appear to take on a greenish hue.

Why Audition Matters (The Purpose of Hearing)

Audition is not just “hearing sounds”—it is a reverse-engineering system that lets the brain infer what is out in the world and what caused it.

Perception is not about sensory input itself, but about inferring the objects and events that caused that input.

Audition therefore provides:

Environmental information (forest vs ocean; indoors vs outdoors).

Identity of sound sources (human vs animal; metal vs wood).

Location—including behind you (vision cannot do this).

Communication (speech relies heavily on mid-frequency sensitivity).

Music perception (complex pitch + timbre cues).

how is audition described

Omnidirectional – sound reaches you from all directions, including behind.

Constant – cannot be “closed” like your eyes.

Audition therefore solves environmental problems vision alone can’t.

Sound is defined as

A repetitive change in air pressure over time.

Sound is a “ripple” of pressure fluctuating through space, created by compressions and rarefactions.

how is sound created

Mechanically:

A sound source (e.g., a vibrating speaker or vocal folds) compresses air → increases pressure.

As the source recoils, it creates a rarefaction → decreases pressure.

These travel as a longitudinal wave, where particles oscillate parallel to the direction of propagation.

Wavelengths

Lowest audible frequency (~20 Hz) ≈ 17 m wavelength.

Highest audible (~20 kHz) ≈ 1.7 cm wavelength.

These long wavelengths explain why:

Sound bends around corners (diffraction).

Light does not, because its wavelengths are ~500 nm (much shorter).
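The wavelengths quoted above follow from λ = c / f. A minimal sketch, assuming a speed of sound of 343 m/s (air at roughly room temperature; the notes do not state the value used):

```python
SPEED_OF_SOUND = 343.0  # m/s, assumed value for air at about 20 °C


def sound_wavelength_m(freq_hz: float) -> float:
    """Wavelength in metres of a sound wave of the given frequency in air."""
    return SPEED_OF_SOUND / freq_hz


for f in (20, 20_000):
    print(f"{f} Hz -> {sound_wavelength_m(f):.4f} m")
```

This gives about 17 m at 20 Hz and about 1.7 cm at 20 kHz, matching the figures in the notes.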

sound wave has three physical features

| Physical Property | Perceptual Result |
|---|---|
| Frequency | Pitch |
| Amplitude | Loudness |
| Spectral complexity (fundamental + harmonics) | Timbre |

Frequency Range

Human hearing range: ~20–20,000 Hz (ideal).

Upper limits in other species: dogs ≈ 40 kHz, cats ≈ 70 kHz.

Pitch–Frequency Relationship

Pitch is perceived frequency, but not a linear mapping.

examples:

Below ~1000 Hz, Mel scale ≈ linear with Hz.

Above ~1000 Hz, physical frequency must change much more to produce equal pitch steps.

Demonstration: equal perceived steps require unequal physical steps.

Equal Hz changes do not equal equal pitch changes.
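The non-linear mapping can be illustrated with one commonly used approximation of the Mel scale, m = 2595 · log10(1 + f / 700). The notes do not give a formula, so this particular one is an assumption brought in for the sketch.

```python
import math


def hz_to_mel(freq_hz: float) -> float:
    """Approximate Mel value for a frequency in Hz (common textbook formula)."""
    return 2595.0 * math.log10(1.0 + freq_hz / 700.0)


# The same 500 Hz physical step, taken low and high in the range.
low_step = hz_to_mel(1000) - hz_to_mel(500)
high_step = hz_to_mel(4500) - hz_to_mel(4000)
print(f"500 -> 1000 Hz:  {low_step:.0f} mel")
print(f"4000 -> 4500 Hz: {high_step:.0f} mel")
```

Under this approximation the same 500 Hz change is a much smaller pitch step at 4 kHz than at 500 Hz.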

Loudness -Decibels (dB)

The decibel is a measure of sound pressure level, expressed relative to a reference:

Reference pressure = 20 μPa (threshold of hearing).

Rule of thumb:

+6 dB = doubling of sound pressure.

–6 dB = halving.
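The rule of thumb follows from the standard definition SPL = 20 · log10(p / p₀). A short sketch using the 20 μPa reference from the notes (the function name is made up for illustration):

```python
import math

P_REF = 20e-6  # reference pressure in pascals (20 μPa)


def spl_db(pressure_pa: float) -> float:
    """Sound pressure level in dB relative to 20 μPa."""
    return 20.0 * math.log10(pressure_pa / P_REF)


p = 0.02  # an arbitrary example pressure in pascals
print(f"p:      {spl_db(p):.2f} dB")
print(f"2 * p:  {spl_db(2 * p):.2f} dB")
print(f"p / 2:  {spl_db(p / 2):.2f} dB")
```

Doubling the pressure adds 20 · log10(2) ≈ 6.02 dB, and halving it subtracts the same amount.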

Why distance affects loudness

Sound spreads as a 3D spherical wavefront.

As distance doubles → energy is spread over 4× area.

So intensity drops by 6 dB per doubling of distance (inverse square law).

eg:

1 m: 88 dB

2 m: 82 dB

4 m: 76 dB
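The example values can be reproduced with the free-field relation L(d) = L(d₀) − 20 · log10(d / d₀). This is a sketch that assumes an ideal point source with no reflections; the function name and defaults are illustrative.

```python
import math


def level_at_distance(distance_m, ref_level_db=88.0, ref_distance_m=1.0):
    """Level in dB at a given distance, assuming spherical spreading only."""
    return ref_level_db - 20.0 * math.log10(distance_m / ref_distance_m)


for d in (1, 2, 4, 8):
    print(f"{d} m: {level_at_distance(d):.0f} dB")
```

Each doubling of distance removes about 6 dB, giving 88, 82, and 76 dB at 1, 2, and 4 m.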

how Loudness depends on frequency

Audibility curve:

Most sensitive at 500–4000 Hz (speech range).

Low frequencies require much higher amplitudes to be detected.

Equal-loudness contours further show:

At moderate loudness (e.g., 80 dB), our sensitivity is flatter across frequency.

At quiet levels, sensitivity varies strongly with frequency.

Eg:

A 40 dB tone is not equally loud across frequencies.

Human hearing is worst at very low and very high frequencies

Timbre: Perceiving Complexity

Timbre = the colour or quality of sound.

Determined by the harmonic structure: the fundamental + higher harmonics and their relative amplitudes.

fundamental frequency

fundamental frequency is the lowest frequency in a periodic waveform or a complex sound. It is the frequency that determines the perceived pitch of a sound and is often the most prominent component of the waveform. The fundamental frequency is also known as the first harmonic (𝑓1 or 𝑓0), and it is the baseline from which other higher frequencies, called harmonics or overtones, are based.

What determines pitch?

Pitch is determined by the frequency of a sound wave, which is the number of vibrations per second.

Pitch = fundamental frequency (f₀)

f₀ is the greatest common divisor of all harmonics.
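The greatest-common-divisor idea can be sketched directly. The example assumes harmonics at whole-number Hz values; the function name is hypothetical.

```python
from functools import reduce
from math import gcd


def implied_fundamental(harmonics_hz):
    """Greatest common divisor of a set of integer harmonic frequencies."""
    return reduce(gcd, harmonics_hz)


print(implied_fundamental([200, 400, 600, 800]))  # full harmonic series
print(implied_fundamental([400, 600, 800]))       # 200 Hz component removed
```

Both calls return 200, so the harmonics still point to a 200 Hz fundamental even when no 200 Hz component is present.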

What determines timbre?

Timbre = pattern of harmonics (number, amplitude, phase).

Examples:

Piano vs flute → same fundamental (same pitch), different harmonic patterns → different timbre.

broadband and natural sounds

Every natural sound (voice, waves, explosions) is broadband (contains many frequencies).

Only pure sine waves contain one frequency — these do not occur naturally.

e.g. a violin playing a single note is not a pure tone.

Vertical localisation cues

spectral-shape cues generated by the way your head, torso, and particularly your outer ears (pinnae) filter sound, which tells your brain whether a sound is coming from above, below, in front, or behind you. These monaural (single-ear) cues are a combination of peaks and dips in the sound's spectrum that change depending on the sound's elevation.

Outer ear

Collects/guides sound into ear canal.

Resonance amplification of mid-frequencies (speech).

Provides vertical localisation cues.

Middle ear: Impedance matching

Problem:

Sound moves from air → fluid (inner ear).

impedance matching: transfers sound energy from the low-impedance air of the outer ear to the high-impedance fluid of the inner ear, preventing most of the sound from being reflected away.

Without amplification, about 99% of the energy would be lost.

Two mechanisms together dramatically increase pressure to drive the fluid-filled cochlea:

Area ratio

Large eardrum area → small oval window area

≈ 15:1 amplification.

Lever action

Malleus–incus–stapes act as levers

≈ 2:1 amplification.
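As a rough worked example, the two ratios quoted above can be multiplied and expressed in decibels. The sketch takes the 15:1 and 2:1 figures from these notes at face value and assumes they simply multiply as pressure gains.

```python
import math

AREA_RATIO = 15.0   # eardrum area : oval window area (figure from the notes)
LEVER_RATIO = 2.0   # ossicular lever action (figure from the notes)

pressure_gain = AREA_RATIO * LEVER_RATIO
gain_db = 20.0 * math.log10(pressure_gain)

print(f"combined pressure gain: {pressure_gain:.0f}x")
print(f"in decibels: {gain_db:.1f} dB")
```

Under these assumptions the middle ear boosts pressure about 30-fold, or roughly 30 dB.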

Inner ear (Cochlea): Frequency Analysis: Place theory

Place theory: the location of the peak of the travelling wave on the basilar membrane encodes frequency

As frequency rises, the location of peak displacement moves toward the base.

Base = stiff, narrow → high frequencies.

Apex = broad, floppy → low frequencies.

This spatial mapping is tonotopic organisation. The brain reads off frequency based on where the BM vibrates.

For complex sounds:

Each harmonic produces activity at a different BM location, so the cochlea acts like a real-time frequency analyser.

Exam trap:

The basilar membrane encodes frequency, not pitch directly.

Inner vs Outer Hair Cells

Inner Hair Cells (IHCs)

Primary sensory transducers: ~95% of auditory nerve fibres carry their signals to the brain.

~3,500 per ear.

Their cilia bending is what ultimately triggers action potentials in the auditory nerve.

Functionally analogous to retinal ganglion cells sending signals to the brain.

Outer Hair Cells (OHCs)

~12,000 per ear, 3 rows, attached to the tectorial membrane.

Do NOT primarily send information to the brain (~5% of fibres).

Instead, they amplify BM motion by:

Actively changing length (“electromotility”) when stimulated.

Enhancing the shearing force that bends IHC cilia.

This amplification:

Lowers hearing thresholds (increases sensitivity).

Sharpens tuning (narrower frequency bandwidths).

Transduction

Mechanical Motion → Neural Activity

Shearing movement

As BM and tectorial membrane move out of alignment, cilia bend sideways.

Bending opens mechanotransduction ion channels → depolarization.

This triggers neurotransmitter release → auditory nerve action potentials.

Why OHCs matter to transduction

OHCs “push and pull” on the tectorial membrane, enhancing shearing.

Without OHCs, IHCs receive weaker deflection → poorer sensitivity and reduced frequency selectivity.

Phase Locking / Frequency Theory

For low frequencies, auditory nerve fibres spike at the peaks of the waveform.

Perfect phase locking up to ~1,000 Hz.

Imperfect but functional up to ~4,000 Hz (local populations “take turns”).

Above ~4,000 Hz → no phase locking at all.

→ Only place code remains.

This explains:

Why pitch discrimination is excellent at low/mid frequencies.

Why very high frequencies all sound similarly “high.”

Hybrid coding model

Frequency (phase) code handles: low frequencies.

Place code handles: high frequencies.

Both operate in the mid-range.

Missing Fundamental Illusion

Fundamental frequency (f₀) is removed from a harmonic complex.

Listeners still hear the same pitch, even though f₀ is absent.

Why? (New explanation)

The complex waveform still contains periodicity at f₀.

Phase-locked spiking reflects that periodicity.

Therefore:

Frequency theory predicts the illusion correctly.

Place theory fails, because no BM place is stimulated at f₀.

Characteristic Frequency (CF)

Each nerve fibre has one frequency where it is most sensitive — its CF.

Bandwidth

Nerve fibres respond only to a narrow 12.5% bandwidth around their CF.

Example:

CF = 1000 Hz

Band ≈ 938–1062 Hz (±6.25%)
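The band edges can be computed for any CF. A minimal sketch, assuming the 12.5% bandwidth is centred symmetrically on the CF (so ±6.25%); the function name is illustrative.

```python
def cf_band(cf_hz: float, relative_bandwidth: float = 0.125):
    """Lower and upper edges of a band centred on the characteristic frequency."""
    half = relative_bandwidth / 2.0
    return cf_hz * (1.0 - half), cf_hz * (1.0 + half)


for cf in (250, 1000, 4000):
    low, high = cf_band(cf)
    print(f"CF {cf} Hz: {low:.1f}-{high:.1f} Hz ({high - low:.1f} Hz wide)")
```

Because the bandwidth is a fixed percentage, the band is wider in Hz at high CFs than at low CFs.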

Increasing tuning sharpness in higher brain regions

As signals travel to the Inferior Colliculus (IC) and Medial Geniculate Nucleus (MGN), lateral inhibition sharpens tuning further.

Lateral inhibition: a process where a neuron that is strongly activated inhibits its neighbors, which enhances the contrast and sharpness of sensory perception.

Human pitch discrimination can reach 0.2% differences (remarkably precise).

Sensorineural Hearing Loss (SNHL) -2 types

(Damage inside the cochlea: hair cells)

OHC damage

Raised thresholds (poorer sensitivity).

Broadened bandwidths (poorer frequency selectivity → “blurred” hearing).

High frequencies degrade first.

Causes: loud music/noise, ototoxic antibiotics.

Often accompanied by tinnitus.

IHC damage

Raised thresholds,

Normal bandwidths (selectivity intact).

More commonly caused by infection/disease.

Also associated with tinnitus.

Conductive Hearing Loss

Middle-ear bones fail to transmit sound efficiently.

All frequencies attenuated equally (flat loss).

Cochlea and nerve can be normal.

other hearing loss

Industrial deafness → a “notch” at specific frequencies.

Age-related loss → high-frequency decline first due to BM base wear.

(linked to constant use: even low frequencies must pass through the base.)

Cochlear Implants -how they work and their limitations

How implants work

External microphone captures sound.

Signal filtered into ~20–28 frequency bands.

Each band stimulates a specific electrode contact along a wire inserted into the cochlea.

Electrical stimulation activates auditory nerve fibres at appropriate places.

Limitations

Wire cannot reach cochlear apex → weak low-frequency coding.

Broad frequency bands → poor pitch & timbre discrimination.

Melody perception is poor; rhythm preserved.

Speech is intelligible but sounds “robotic.”

Localisation is also poor because cues are coarse.

Acoustic cues

Unlike vision, where the retina contains a literal spatial map of the world, audition receives no spatial layout at the ear: The eardrum only receives pressure over time, with no information about location.

Therefore the brain must compute spatial location using indirect acoustic cues:

Binaural cues → azimuth (left–right)

Monaural spectral cues → elevation (up–down) + front/back disambiguation

Precedence effect → ignoring echoes

Spatial attention → selecting a target voice

Active noise cancellation (ANC) → physics of cancelling sound

Dimensions of Auditory Space

Auditory space is described in:

Azimuth = left–right

Elevation = up–down

Distance is also perceived but only crudely, using:

absolute sound level

spectral balance

reverberation patterns

Binaural Cue 1: Interaural Time Difference (ITD)

How ITDs arise

If a sound comes from one side, the wavefront reaches the near ear first and the far ear later.

The maximum delay is ~600 μs (0.6 ms) at ~90°.

The time difference is extremely small, but the brain detects it with microsecond precision.

Neural processing

ITDs are processed in the Medial Superior Olive (MSO): the earliest stage where signals from both ears are combined.

Ecological notes

Largest in large-headed animals → better spatial resolution.

Most useful in low frequencies (HF cycles too fast for precise timing).

Not useful underwater (sound travels too fast → tiny delays).
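The size of the ITD can be estimated with a simple path-difference model, ITD ≈ d · sin(θ) / c. This is a sketch under assumptions: an ear separation of 0.2 m, sound speeds of 343 m/s in air and 1480 m/s in water, and a straight-line path that ignores the sound wrapping around the head.

```python
import math


def itd_microseconds(azimuth_deg, ear_distance_m=0.2, speed_m_s=343.0):
    """Approximate interaural time difference for a distant source."""
    path_difference = ear_distance_m * math.sin(math.radians(azimuth_deg))
    return path_difference / speed_m_s * 1e6


for angle in (0, 30, 90):
    print(f"{angle:>2} deg in air:   {itd_microseconds(angle):.0f} us")
print(f"90 deg in water: {itd_microseconds(90, speed_m_s=1480.0):.0f} us")
```

With these assumed values the maximum delay in air is a little under 600 μs, and it shrinks to well under a quarter of that in water.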

Jeffress coincidence detector model vs Alternative mammalian model

Jeffress coincidence detector model (New Theory)

A classic model (best supported in birds, especially owls):

Axons from each ear act as delay lines.

A neuron fires only when inputs from both ears arrive simultaneously.

The location of that neuron along the array encodes azimuth.

This is a “simple, low-overhead” computation that happens extremely early, before timing latencies accumulate.
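The delay-line idea can be sketched as a toy computation: each candidate internal delay acts like one coincidence detector, and the detector with the most coincidences wins. The spike trains below are invented, time is in arbitrary sample steps, and this is an illustration of the principle rather than a model of real neurons.

```python
def coincidence_counts(left, right, max_lag):
    """Count coincidences for each internal delay (in samples).

    A positive lag means the right-ear signal arrived later than the left.
    """
    counts = {}
    for lag in range(-max_lag, max_lag + 1):
        total = 0
        for i, left_spike in enumerate(left):
            j = i + lag
            if 0 <= j < len(right):
                total += left_spike * right[j]
        counts[lag] = total
    return counts


def best_lag(left, right, max_lag):
    """The internal delay whose detector sees the most coincidences."""
    counts = coincidence_counts(left, right, max_lag)
    return max(counts, key=counts.get)


# Invented spike trains: the right ear gets the same pattern 2 samples later.
left = [0, 0, 1, 0, 0, 1, 0, 0, 0, 1, 0, 0, 0, 0]
right = [0, 0, 0, 0, 1, 0, 0, 1, 0, 0, 0, 1, 0, 0]

print(best_lag(left, right, max_lag=4))
```

The winning detector is the one whose internal delay cancels the external delay, so its position in the array stands for the source direction (here, a source on the left).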

Alternative mammalian model

Mammals appear to rely less on delay-lines.

Use two broadly tuned channels, one favoring left, one right.

Relative activity of the channels encodes location.

Binaural Cue 2: Interaural Level Difference (ILD)

How ILDs arise

The head blocks sound, producing an acoustic shadow.

The far ear receives lower amplitude.

ILDs can be up to 20 dB at high frequencies

Frequency dependence

ILDs are minimal at low frequencies (long wavelengths wrap around the head).

ILDs are large at high frequencies (short wavelengths absorbed by head/hair/skin).

Thus:

ITD = low frequencies

ILD = high frequencies

Neural processing

ILDs are processed in the Lateral Superior Olive (LSO).

Comparison of ITD vs ILD

ITD generally more precise.

ILD complements ITD at high frequencies.

Natural sounds contain many frequencies → both cues are combined.

Monaural Spectral Cues for Elevation

Elevation cannot be obtained from ITD or ILD: both are zero for any source on the midline, whether it is in front, behind, above, or below.

How elevation is encoded

The outer ear (pinna) filters sound:

some frequencies amplified

others attenuated

filtering pattern changes with elevation and direction

filtering is strongest at high frequencies (above ~5 kHz)

These patterns create a spectral fingerprint for each elevation.

Learned, not innate:

The brain must learn these spectral fingerprints through experience and vision.

Wearing moulds of someone else’s ears → immediate loss of elevation perception.

After ~6 weeks, people relearn localisation with the new ears.

Removing the moulds → original ability returns within a day.

ITD and ILD produce identical values for front and back.

Spectral cues break this ambiguity:

Different peaks/troughs for front vs back.

Limitation

Narrow-band sounds (e.g., pure tones) provide poor spectral information → elevation becomes hard to judge.

Precedence Effect

Indoor environments produce reflections:

Same sound arrives multiple times from different directions.

Problem:

Reflections could confuse localisation.

Solution: Precedence Effect

When two identical sounds arrive within:

~5 ms (clicks)

up to ~40 ms (complex sounds)

The auditory system only uses the first arrival to determine location.

Later arrivals are suppressed (not consciously perceived as separate).

e.g. two speakers play the same sound, with one placed further back. You hear the sound as coming from the nearer speaker only; the delayed one is suppressed.

Spatial Attention in Audition

Spatial attention becomes possible after the brain estimates location.

Functions:

Select a target sound (e.g., one voice).

Suppress neighbouring sources.

Improve signal-to-noise ratio (SNR).

Allows conversation in noisy environments (“cocktail party effect”).

Recordings sound worse because your auditory system suppresses background noise, but the recorder doesn’t.

Effect of hearing aids

Hearing aids often block natural spectral cues by filling the concha:

Users struggle to localise sounds

Spatial attention becomes harder

Speech separation becomes difficult

Active Noise Cancellation (ANC)

What ANC cancels

Background noise in real environments tends to be low-frequency weighted:

Pink noise: −3 dB per octave

Brown noise: −6 dB per octave

ANC therefore focuses on low frequencies.

How ANC works

Microphone captures external noise.

System inverts that waveform (anti-phase).

Anti-phase + incoming noise = destructive interference → cancellation.

Why ANC fails for speech & high frequencies

ANC requires sampling + analysis + generation of the anti-phase signal.

This creates a latency.

For low frequencies → long wavelengths → small latency irrelevant.

For high frequencies → short wavelengths → latency becomes too large → cancellation fails.


Thus:

ANC cancels low-frequency ambient noise

But not speech (500–4000 Hz)
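The latency argument can be made concrete for a pure tone. If the anti-phase signal is late by τ seconds, the sum of noise and anti-noise has amplitude 2 · |sin(π f τ)| relative to the original. The sketch below assumes a single sine tone and a made-up latency of 50 μs; real ANC systems are more elaborate.

```python
import math


def residual_db(freq_hz: float, latency_s: float) -> float:
    """Level of the leftover tone relative to the original noise, in dB.

    Negative values mean the noise was reduced; positive values mean the
    delayed anti-phase signal made things louder.
    """
    ratio = 2.0 * abs(math.sin(math.pi * freq_hz * latency_s))
    return 20.0 * math.log10(ratio) if ratio > 0 else float("-inf")


LATENCY = 50e-6  # hypothetical processing delay of 50 microseconds

for f in (100, 500, 1000, 4000):
    print(f"{f:>4} Hz: {residual_db(f, LATENCY):+.1f} dB")
```

With this assumed latency a 100 Hz tone is cut by roughly 30 dB, while at 4000 Hz the delayed anti-phase signal no longer cancels and slightly adds to the noise.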