FINALS- Distributed Computing


48 Terms

Virtual Processes

Built in software, on top of physical processors.

Processor

Provides a SET OF INSTRUCTIONS along with the capability of automatically executing a series of those instructions.

Thread

A MINIMAL SOFTWARE PROCESSOR in whose context a series of instructions can be executed. Saving a thread context implies stopping the current execution and saving all the data needed to continue the execution at a later stage.

Process

A SOFTWARE PROCESSOR in whose context ONE OR MORE THREADS MAY BE EXECUTED. Executing a thread means executing a series of instructions in the context of that thread.

Introduction to threads

A thread is the unit of execution within a process that performs a task.

A process can have single or multiple threads.

When a process starts, memory and resources are allocated to it, and these are shared by all of its threads.

In a SINGLE-THREADED process, the process and the thread are effectively the same.

A SINGLE-THREADED process can perform only one task at a time.

A MULTI-THREADED process can perform multiple tasks at the same time.

Introduction to threads - How can we make a processor truly run multiple tasks in parallel?

In terms of hardware, add more CPUs (e.g., servers with multiple CPU sockets).

All modern processors are multi-core processors, meaning a single physical processor contains more than one CPU core.

MULTI-CORE PROCESSORS can run more than one process or thread at the same time. For example, a quad-core processor has 4 CPU cores, so it can run 4 processes or threads at the same time in parallel (PARALLELISM).

Contexts

Allow pausing and resuming execution without losing data.

Processor Context

The minimal collection of values STORED IN THE REGISTERS OF A PROCESSOR USED FOR THE EXECUTION OF A SERIES OF INSTRUCTIONS (e.g., stack pointer, addressing registers, program counter).

Thread Context

The minimal collection of values STORED IN REGISTERS AND MEMORY, USED FOR THE EXECUTION OF A SERIES OF INSTRUCTIONS (e.g., processor context, thread state: running/waiting/suspended).

Process Context

The minimal collection of values STORED IN REGISTERS AND MEMORY, USED FOR THE EXECUTION OF A THREAD (e.g., thread context, but now also at least memory management information such as Memory Management Unit (MMU) register values).

Observations

Threads share the same address space. THREAD CONTEXT SWITCHING can be done entirely independent of the operating system.

Process switching is generally (somewhat) MORE EXPENSIVE as it involves getting the OS in the loop, i.e., trapping to the kernel.

Creating and destroying THREADS is much CHEAPER than doing so for processes.

THREADS

Lightweight units of execution that allow efficient multitasking within a process

PROCESSES

Heavier, independent units of execution that require more overhead for creation, switching and destruction.

WHY USE THREADS?

AVOID NEEDLESS BLOCKING - a single-threaded process will block when doing I/O; in a MULTI-THREADED PROCESS, the operating system can SWITCH THE CPU TO ANOTHER THREAD IN THE PROCESS.

EXPLOIT PARALLELISM - The THREADS IN A MULTI-THREADED PROCESS CAN BE SCHEDULED TO RUN IN PARALLEL on a multiprocessor or multicore processor.

AVOID PROCESS SWITCHING - structure large applications NOT AS A COLLECTION OF PROCESSES, but through MULTIPLE THREADS.

AVOID PROCESS SWITCHING

Avoid EXPENSIVE context switching.

Trade-offs:

Threads use the same address space: MORE PRONE TO ERRORS

No support from OS/HW to protect threads from using each other’s memory.

Thread context switching may be faster than process context switching.
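A minimal Python sketch of the first trade-off: four threads update one counter that lives in the process's shared address space. Nothing in the OS or hardware keeps them from interfering with each other, so the program itself has to serialize access with a `threading.Lock`. The names `counter` and `increment` are illustrative, not from the notes.

```python
import threading

# Shared state lives in the process's single address space, so every
# thread sees the same `counter` dict without any message passing.
counter = {"value": 0}
lock = threading.Lock()

def increment(times: int) -> None:
    for _ in range(times):
        # Without the lock, this read-modify-write could interleave
        # between threads and lose updates (a race condition).
        with lock:
            counter["value"] += 1

threads = [threading.Thread(target=increment, args=(10_000,)) for _ in range(4)]
for t in threads:
    t.start()
for t in threads:
    t.join()

print(counter["value"])  # 40000
```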

Threads And Operating Systems

Main Issue:

Should an OS kernel provide threads, or should they be implemented as user-level package?

User-space Solution

Threads are managed by a LIBRARY/USER-LEVEL PACKAGE that the kernel is unaware of:

All operations can be completely handled WITHIN A SINGLE PROCESS → Implementations can be extremely efficient.

All services provided by the kernel are done ON BEHALF OF THE PROCESS IN WHICH A THREAD RESIDES → If the kernel decides to block a thread, the entire process will be blocked.

Threads are used when there are lots of external events: THREADS BLOCK ON A PER-EVENT BASIS → If the kernel can’t distinguish threads, how can it support signaling events to them?
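To make the user-space solution concrete, here is a toy sketch, assuming Python generators as stand-ins for user-level threads: a round-robin scheduler that lives entirely inside one process switches between them without any system call. The names `worker` and `run` are hypothetical.

```python
from collections import deque
from typing import Deque, Generator, List

# A user-level "thread" is modeled as a generator: each `yield` is a point
# where it voluntarily hands control back to the user-space scheduler.
UserThread = Generator[None, None, None]

def worker(name: str, steps: int, log: List[str]) -> UserThread:
    for i in range(steps):
        log.append(f"{name}:{i}")
        yield  # user-level context switch: no system call, no kernel involvement

def run(threads: List[UserThread]) -> None:
    """Round-robin scheduler that lives entirely inside one process."""
    ready: Deque[UserThread] = deque(threads)
    while ready:
        t = ready.popleft()
        try:
            next(t)          # resume the thread until its next yield
            ready.append(t)  # still runnable: put it at the back of the queue
        except StopIteration:
            pass             # thread finished

log: List[str] = []
run([worker("A", 2, log), worker("B", 2, log)])
print(log)  # ['A:0', 'B:0', 'A:1', 'B:1']
```

The switches are cheap because they are ordinary function returns. The flip side is also visible: if a worker made a genuinely blocking system call instead of yielding, the kernel would block the whole process, and with it every other user-level thread in the queue.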

Kernel solution

Have THE KERNEL CONTAIN THE IMPLEMENTATION OF A THREAD PACKAGE. This means that all thread operations are carried out as system calls.

Operations that block a thread are no longer a problem: the KERNEL SCHEDULES ANOTHER AVAILABLE THREAD within the same process.

Handling external events is simple: the KERNEL (WHICH CATCHES ALL EVENTS) schedules the thread associated with the event.

The problem is (or used to be) the LOSS OF EFFICIENCY due to the fact that each thread operation requires a trap (a system call that causes the CPU to switch from user mode to kernel mode) to the kernel.

CONCLUSION - BUT: Try to MIX USER-LEVEL and KERNEL-LEVEL THREADS into a single concept; however, the performance gain has not turned out to outweigh the increased complexity.

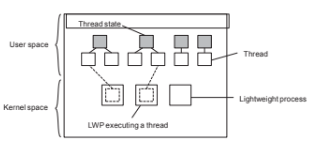

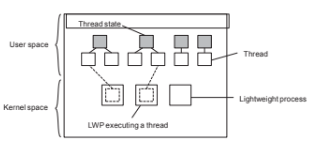

Lightweight Processes

Basic Idea

Introduce a TWO-LEVEL THREADING APPROACH: LIGHTWEIGHT PROCESSES (LWPs) that can execute user-level threads.

Principle of Operation

A user-level thread does a system call → THE LWP THAT IS EXECUTING THAT THREAD BLOCKS. The thread remains BOUND to the LWP.

The kernel CAN SCHEDULE ANOTHER LWP HAVING A RUNNABLE THREAD BOUND TO IT. Note: this thread can switch to ANY other runnable thread currently in user space.

A thread calls a blocking user-level operation → do a context switch to a runnable thread (which is then bound to the same LWP).

When there are no threads to schedule, an LWP may remain idle, and may even be removed (destroyed) by the kernel.

Using Threads At the Client Side

Multithreaded Web Client

Hiding network latencies:

The web browser scans an incoming HTML page and finds that MORE FILES NEED TO BE FETCHED.

EACH FILE IS FETCHED BY A SEPARATE THREAD, each doing a (blocking) HTTP request.

As files come in, the browser displays them.
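A minimal sketch of the browser steps above, assuming Python's standard `html.parser` to find embedded files and a thread pool to fetch them. `fetch` is a hypothetical stand-in for a real blocking HTTP GET, so the example runs without a network.

```python
from concurrent.futures import ThreadPoolExecutor, as_completed
from html.parser import HTMLParser
from typing import Dict, List

class ResourceFinder(HTMLParser):
    """Collects the URLs of embedded files (images, scripts) in an HTML page."""
    def __init__(self) -> None:
        super().__init__()
        self.urls: List[str] = []

    def handle_starttag(self, tag, attrs):
        if tag in ("img", "script"):
            for name, value in attrs:
                if name == "src" and value:
                    self.urls.append(value)

def fetch(url: str) -> bytes:
    # Hypothetical stand-in for a blocking HTTP GET (e.g., urllib.request.urlopen).
    return f"contents of {url}".encode()

page = '<html><img src="a.png"><script src="app.js"></script><img src="b.png"></html>'
finder = ResourceFinder()
finder.feed(page)

displayed: Dict[str, bytes] = {}
# One thread per embedded file, so slow downloads overlap instead of queuing.
with ThreadPoolExecutor(max_workers=len(finder.urls)) as pool:
    futures = {pool.submit(fetch, url): url for url in finder.urls}
    for fut in as_completed(futures):  # "display" each file as soon as it arrives
        displayed[futures[fut]] = fut.result()

print(sorted(displayed))  # ['a.png', 'app.js', 'b.png']
```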

Multiple request-response calls to other machines (RPC)

A client does several calls at the same time, each one by a different thread.

It then waits until all results have been returned.

Note: If calls are to different servers, we may have a linear speed-up.
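A rough illustration of the speed-up claim, assuming three hypothetical servers that each take 0.2 s to answer (simulated with `time.sleep`, which releases the interpreter lock while waiting). Issuing the calls from separate threads makes the total wait close to the slowest call instead of the sum of all calls.

```python
import time
from concurrent.futures import ThreadPoolExecutor

def call_server(server: str, delay: float) -> str:
    # Hypothetical remote call; time.sleep stands in for network + server latency.
    time.sleep(delay)
    return f"result from {server}"

calls = [("server-1", 0.2), ("server-2", 0.2), ("server-3", 0.2)]

start = time.perf_counter()
with ThreadPoolExecutor(max_workers=len(calls)) as pool:
    # Each call runs in its own thread; map() returns results in call order.
    results = list(pool.map(lambda c: call_server(*c), calls))
elapsed = time.perf_counter() - start  # the pool's exit waits for all results

print(results)
print(f"{elapsed:.1f}s")  # roughly 0.2s, versus about 0.6s for sequential calls
```

The near-linear speed-up depends on the calls going to different servers; if all three hit one busy server, the waits would not overlap so neatly.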

Using threads at the Server Side

Improve Performance

Starting a thread is cheaper than starting a new process.

Having a single-threaded server prohibits simple scale-up to a MULTIPROCESSOR SYSTEM.

As with clients: HIDE NETWORK LATENCY by reacting to the next request while the previous one is being replied to.

Better Structure

Most servers have high I/O demands. Using simple, WELL-UNDERSTOOD BLOCKING CALLS simplifies the overall structure.

Multithreaded programs tend to be smaller and easier to understand due to SIMPLIFIED FLOW OF CONTROL.

Why Multithreading is Popular:

ORGANIZATION

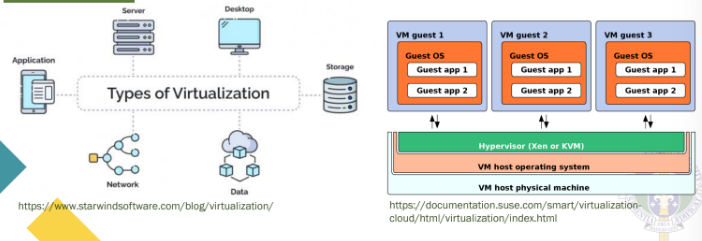

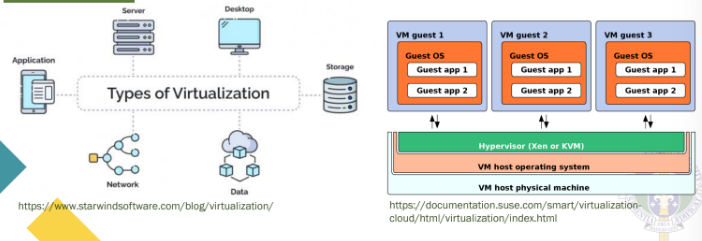

VIRTUALIZATION

Observation: Virtualization is important:

Hardware CHANGES FASTER than software

Ease of PORTABILITY and code migration

ISOLATION of failing or attacked components

Principles: Mimicking Interfaces

Simulating HARDWARE OR SOFTWARE interfaces in a virtual environment.

Mimicking Interfaces

Interfaces at three different levels

Instruction set architecture: the set of MACHINE INSTRUCTIONS, with two subsets:

PRIVILEGED instructions: allowed to be executed only by the operating system.

GENERAL instructions: can be executed by any program.

SYSTEM CALLS as offered by an operating system.

LIBRARY CALLS, known as an application programming interface (API)

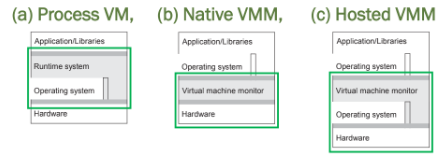

WAYS OF VIRTUALIZATION

Differences:

a) Process VM: platform-independent; a separate set of instructions executed by an interpreter/emulator RUNNING ATOP AN OS.

b) Native VMM: has DIRECT ACCESS TO HARDWARE; low-level instructions, along with bare-bones MINIMAL OS INSTRUCTIONS.

c) Hosted VMM: RUNS ON TOP OF AN EXISTING OS; may be slower than a native VMM due to an EXTRA OS LAYER.

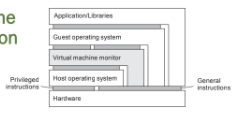

ZOOMING INTO VMs: PERFORMANCE

Refining the Organization

PRIVILEGED INSTRUCTIONS: if and only if executed in user mode, it causes a TRAP TO THE OS (control switches from user to kernel mode so the OS can perform a PRIVILEGED OPERATION on behalf of the user program)

NONPRIVILEGED INSTRUCTIONS: the rest

SPECIAL INSTRUCTIONS

Control-sensitive instruction: may affect the configuration of a machine, may trap (e.g. one affecting relocation register or interrupt table.)

Behavior-sensitive instruction: effect is partially determined by the system context (e.g., POPF sets an interrupt-enable flag, but only in system mode)

Condition for Virtualization

Necessary Condition

For any conventional computer, a virtual machine monitor may be constructed if the set of SENSITIVE INSTRUCTIONS for the computer is a SUBSET OF THE SET OF PRIVILEGED INSTRUCTIONS.

Problem: THE CONDITION IS NOT ALWAYS SATISFIED

There may be sensitive instructions that are executed in user mode without causing a trap to the operating system.
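The condition is just a subset test on two sets of instructions. A toy sketch, assuming a heavily simplified classification: POPF comes from the notes above, while the other instruction names (including the made-up label `MOV_TO_CR3`) are illustrative assumptions, not a complete instruction list.

```python
# Illustrative, simplified classification for an x86-like ISA.
# POPF is behavior-sensitive but does not trap in user mode
# (there it silently leaves the interrupt-enable flag unchanged).
sensitive = {"POPF", "LIDT", "MOV_TO_CR3"}
privileged = {"LIDT", "MOV_TO_CR3", "HLT"}  # instructions that trap in user mode

def classically_virtualizable(sensitive: set, privileged: set) -> bool:
    """Popek-Goldberg style check: every sensitive instruction must be privileged."""
    return sensitive <= privileged

print(classically_virtualizable(sensitive, privileged))  # False
print(sensitive - privileged)  # {'POPF'}: sensitive, yet it does not trap
```

The set difference is exactly the troublesome group: instructions the VMM never gets a chance to intercept, which is why the solutions below either emulate, wrap, or remove them.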

Solutions

EMULATE all instructions

WRAP NONPRIVILEGED SENSITIVE INSTRUCTIONS to divert control to the VMM

PARAVIRTUALIZATION: modify the guest OS, either by preventing nonprivileged sensitive instructions, or by making them nonsensitive (i.e., changing the context).

The guest OS makes hypercalls to the VMM for privileged operations.

Virtual Machines And Cloud Computing

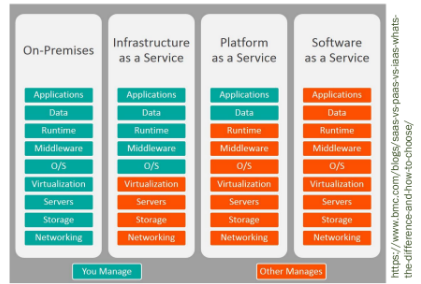

THREE TYPES OF CLOUD SERVICES

INFRASTRUCTURE-AS-A-SERVICE (IaaS): covering the basic INFRASTRUCTURE.

Instead of renting out physical machines, a cloud provider will rent out a VM (or VMM) that may be SHARING A PHYSICAL MACHINE WITH OTHER CUSTOMERS → ALMOST COMPLETE ISOLATION BETWEEN CUSTOMERS (although performance isolation may not be reached)

PLATFORM-AS-A-SERVICE (PaaS): covering SYSTEM-LEVEL services

SOFTWARE-AS-A-SERVICE (SaaS): containing actual APPLICATIONS

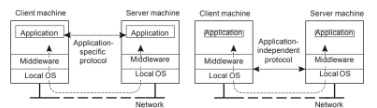

Client-Server Interaction

Distinguish application-level and middleware-level solutions

APPLICATION LEVEL

Each new application must implement its OWN PROTOCOL LOGIC, leading to higher development effort and less reuse

MIDDLEWARE LEVEL

Promotes code reuse, interoperability, and simplifies development by ABSTRACTING NETWORK details from the application

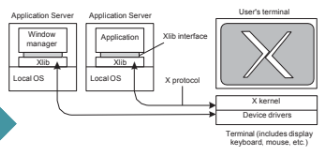

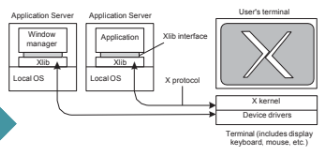

ex: The X Window System

Basic Organization

The client app (on a remote machine) uses Xlib to communicate.

It sends GUI commands over the X protocol to the X server.

The X server renders graphics and sends back user input (keyboard/mouse) events.

X client and server

The application acts as a client to the X-kernel, the latter running as a server on the client’s machine.

Improving X

Practical Observation

There is often NO CLEAR SEPARATION between application logic and user-interface commands (they are mixed)

Applications tend to operate in a tightly SYNCHRONOUS manner with the X kernel (this affects performance: the application waits for responses)

Alternative Approaches

Let applications control the display completely, up to the pixel level (e.g., VNC)

Provide only a few high-level display operations (dependent on local video drivers), allowing more efficient display operations.

Client-side software

Generally tailored for distribution transparency

Access transparency: Conceal resource access using client-side stubs for RPCs

Location/ migration transparency: conceal resource location and let client-side software keep track of the actual location

Replication transparency: multiple invocations handled by the client stub

Failure transparency: can often be placed only at the client (mask server and communication failures).

SERVER: GENERAL ORGANIZATION

Basic model

A process IMPLEMENTING A SPECIFIC SERVICE on behalf of a collection of clients. It waits for an incoming request from a client and subsequently ENSURES THAT THE REQUEST IS TAKEN CARE OF, after which it waits for the next incoming request.

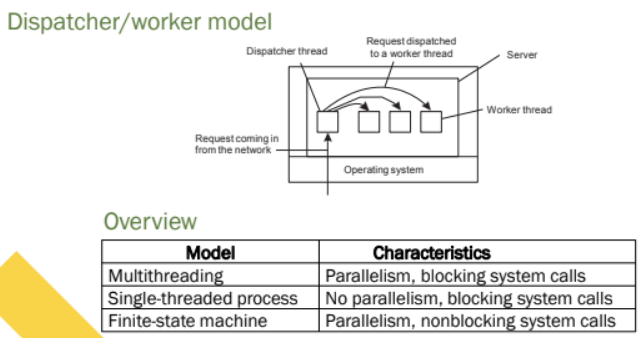

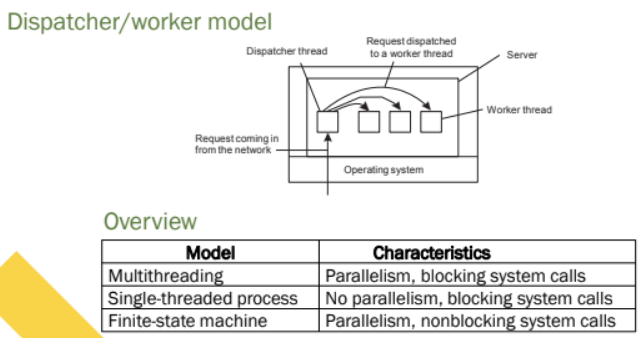

CONCURRENT SERVERS

ITERATIVE SERVER

The server handles the request before attending to the next request.

CONCURRENT SERVER

Uses a dispatcher, which picks up an incoming request that is then passed on to a SEPARATE THREAD/PROCESS. It can handle multiple requests in parallel (at the same time).

Observation

Concurrent servers are the norm: they can EASILY HANDLE MULTIPLE REQUESTS, notably in the presence of blocking operations (to disks or other servers).
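A minimal sketch of the concurrent-server organization, assuming an in-memory `queue.Queue` in place of a real listening socket and a hypothetical `handle` function as the service. The dispatcher only picks up requests; each one is handled by its own worker thread.

```python
import queue
import threading
from typing import Dict, List, Optional

def handle(request: str) -> str:
    # Hypothetical request handler; a real one might block on disk or another server.
    return request.upper()

def concurrent_server(requests: "queue.Queue[Optional[str]]") -> Dict[str, str]:
    """Dispatcher loop: hands each incoming request to its own worker thread."""
    replies: Dict[str, str] = {}
    lock = threading.Lock()
    workers: List[threading.Thread] = []

    def work(req: str) -> None:
        reply = handle(req)
        with lock:  # workers share `replies`, so serialize updates
            replies[req] = reply

    while True:
        req = requests.get()  # the dispatcher blocks until a request arrives
        if req is None:       # sentinel value: stop accepting requests
            break
        t = threading.Thread(target=work, args=(req,))
        t.start()             # dispatcher goes straight back to waiting
        workers.append(t)

    for t in workers:         # wait for in-flight requests before returning
        t.join()
    return replies

incoming = queue.Queue()      # stands in for the server's listening socket
for r in ["get a", "get b", "get c"]:
    incoming.put(r)
incoming.put(None)

result = concurrent_server(incoming)
print(result)
```

An iterative server would instead call `handle(req)` directly inside the loop, so the next `requests.get()` could not run until the current request had been fully served.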

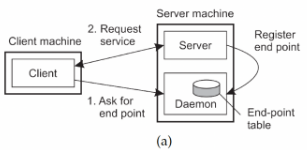

Contacting a server

Observation:

Most services are tied to a specific port

DYNAMICALLY ASSIGNING TO AN ENDPOINT

Daemon Registry

The server registers its endpoint with the daemon (which maintains an endpoint table).

The client asks the daemon on the server machine for an available endpoint.

The daemon provides the client with the endpoint, and the client uses it to request service from the appropriate server.
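A minimal sketch of the daemon's endpoint table, assuming a hypothetical `EndpointDaemon` class and service name; it is similar in spirit to the ONC RPC portmapper (rpcbind), but it is not that program's interface.

```python
from typing import Dict

class EndpointDaemon:
    """Hypothetical daemon keeping an endpoint table (service name -> port)."""
    def __init__(self) -> None:
        self._table: Dict[str, int] = {}

    def register(self, service: str, port: int) -> None:
        # A server that picked a free port announces it here.
        self._table[service] = port

    def lookup(self, service: str) -> int:
        # A client asks which endpoint currently serves `service`.
        if service not in self._table:
            raise LookupError(f"no server registered for {service!r}")
        return self._table[service]

daemon = EndpointDaemon()
daemon.register("time-service", 49152)  # port chosen dynamically by the server
port = daemon.lookup("time-service")    # the client then contacts this port
print(port)  # 49152
```

Only the daemon itself needs a well-known port; every other server can bind to whatever port is free and publish it here.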

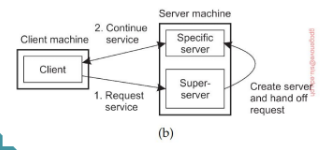

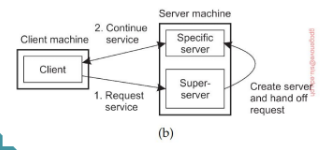

Super-Server Model

The client sends a request for service to a super-server.

The super-server dynamically creates or activates a specific server.

The specific server takes over and continues servicing the client directly.

DIAGRAM (A) USES A DAEMON to manage and register server endpoints, where the SERVER IS ALWAYS RUNNING AND THE CLIENT REQUESTS THE ENDPOINT DYNAMICALLY. This approach suits PERSISTENT SERVICES but can be resource-intensive.

In contrast, DIAGRAM (B) employs a SUPER-SERVER that activates or spawns a specific server only when a client request arrives, making it more resource-efficient and scalable, which is ideal for ON-DEMAND or multi-service environments.
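A minimal sketch of on-demand activation by a super-server, assuming hypothetical service names and factory functions. A classic Unix `inetd` would fork a separate process and hand it the client's socket; this sketch keeps the spawned "server" in-process as a simplification.

```python
from typing import Callable, Dict, List

Handler = Callable[[str], str]

class SuperServer:
    """Hypothetical inetd-style super-server: one listener, servers created on demand."""
    def __init__(self, factories: Dict[str, Callable[[], Handler]]) -> None:
        self._factories = factories            # how to create each specific server
        self._active: Dict[str, Handler] = {}  # servers spawned so far

    def request(self, service: str, payload: str) -> str:
        if service not in self._active:
            # Nothing runs for this service until the first request arrives.
            self._active[service] = self._factories[service]()
        # From here on, the specific server handles the client directly.
        return self._active[service](payload)

    def active_services(self) -> List[str]:
        return sorted(self._active)

def make_echo() -> Handler:
    return lambda msg: msg

def make_reverse() -> Handler:
    return lambda msg: msg[::-1]

super_server = SuperServer({"echo": make_echo, "reverse": make_reverse})
print(super_server.active_services())          # []
print(super_server.request("reverse", "abc"))  # cba
print(super_server.active_services())          # ['reverse']
```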

OUT-OF-BAND COMMUNICATION

ISSUE:

Is it possible to interrupt a server once it has accepted (or is in the process of accepting) a service request?

SOLUTION 1: USE A SEPARATE PORT FOR URGENT DATA

server has a separate thread/process for urgent messages

Urgent message comes in → the associated request is put on hold

NOTE: we require OS support for priority-based scheduling

SOLUTION 2: USE FACILITIES OF THE TRANSPORT LAYER

example: TCP ALLOWS FOR URGENT MESSAGES in the same connection

Urgent messages can be caught using OS signaling techniques.
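A rough sketch of Solution 1, assuming a separate "urgent" listener thread (hypothetical, standing in for a thread bound to a separate port) that aborts a long-running request. Python cannot preempt a thread by priority, so the handler polls a `threading.Event` between units of work; this cooperative check stands in for the priority-based scheduling the notes require. (For Solution 2, Python's `socket` module does expose TCP urgent data through the `socket.MSG_OOB` flag.)

```python
import threading
import time
from typing import List

cancel = threading.Event()  # set by the urgent-message handler
progress: List[int] = []

def serve_long_request(chunks: int) -> str:
    """Normal request handler that checks for an urgent interrupt between chunks."""
    for i in range(chunks):
        if cancel.is_set():
            return f"aborted after {i} chunks"
        progress.append(i)
        time.sleep(0.01)  # stands in for one unit of real work
    return "completed"

def urgent_listener(delay: float) -> None:
    # Hypothetical: a thread serving a separate "urgent" port receives
    # an abort message after `delay` seconds.
    time.sleep(delay)
    cancel.set()

listener = threading.Thread(target=urgent_listener, args=(0.05,))
listener.start()
outcome = serve_long_request(chunks=1000)
listener.join()
print(outcome)
```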

SERVER AND STATE

STATELESS SERVERS

NEVER KEEP ACCURATE INFORMATION about the status of a client after having handled a request:

DON’T record whether a file has been opened (simply close it again after access)

DON’T promise to invalidate a client’s cache

DON’T Keep track of your clients

CONSEQUENCE

Clients and servers are completely independent

State inconsistencies due to client or server crashes are reduced

Possible loss of performance because, for example, a server cannot anticipate client behavior (think of prefetching file blocks)

STATEFUL SERVERS

Keeps TRACK OF THE STATUS of its clients:

Record that a file has been opened, so that prefetching can be done.

knows which data a client has cached, and allows clients to keep local copies of shared data.

Observation

The performance of a stateful server can be extremely high, provided clients are allowed to keep local copies. As it turns out, reliability is often not a major problem. However, this may be harder to scale.

COMPARISON

STATELESS SERVERS:

DO NOT RETAIN ANY INFORMATION about client interactions between requests, treating each request as independent and self-contained

Highly scalable, easier to manage, and fault-tolerant; ideal for RESTful APIs and services like DNS. Drawback: client-side copies can become inconsistent, since the server does not track or invalidate clients' caches.

STATEFUL SERVERS:

MAINTAIN SESSION INFORMATION across requests, useful for more personalized and context-aware interactions, such as in online banking, shopping carts, or gaming.

harder to scale and less resilient to failures, often requiring more complex infrastructure to manage session state.
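A minimal sketch contrasting the two designs for a file server, assuming a hypothetical in-memory `FILES` store. The stateless read carries everything in each request (file name, offset, count), in the spirit of NFS version 3; the stateful server keeps a per-client table of open files and positions, which is exactly the state that would be lost in a crash.

```python
from typing import Dict, Tuple

FILES = {"notes.txt": b"distributed systems"}  # hypothetical server-side storage

def stateless_read(path: str, offset: int, count: int) -> bytes:
    """Every request is self-contained; the server remembers nothing between calls."""
    return FILES[path][offset:offset + count]

class StatefulServer:
    """Keeps a table of open files and the current position for each handle."""
    def __init__(self) -> None:
        self._open: Dict[int, Tuple[str, int]] = {}  # handle -> (path, position)
        self._next_handle = 1

    def open(self, path: str) -> int:
        handle = self._next_handle
        self._next_handle += 1
        self._open[handle] = (path, 0)  # client state now lives on the server
        return handle

    def read(self, handle: int, count: int) -> bytes:
        path, pos = self._open[handle]
        data = FILES[path][pos:pos + count]
        self._open[handle] = (path, pos + len(data))  # server advances the position
        return data

print(stateless_read("notes.txt", 0, 11))  # b'distributed'
srv = StatefulServer()
h = srv.open("notes.txt")
print(srv.read(h, 11), srv.read(h, 8))     # b'distributed' b' systems'
```

Because the stateful server knows which file is open and where the client is reading, it could prefetch the next blocks; the stateless version gives that up in exchange for surviving restarts without any recovery.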

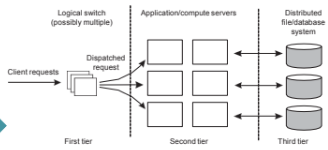

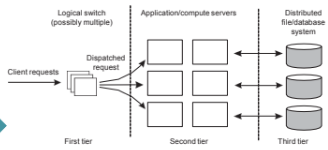

THREE DIFFERENT TIERS

COMMON ORGANIZATION

Per tier:

First tier: client requests first hit a logical switch or load balancer, which distributes the requests among multiple servers.

Second tier: each server processes business logic, performs computations, or prepares requests for the backend.

Third tier: handles data persistence, retrieval, and updates.

Crucial Element

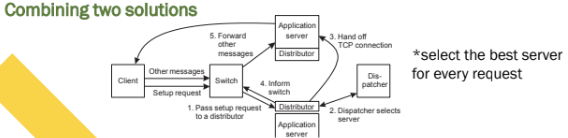

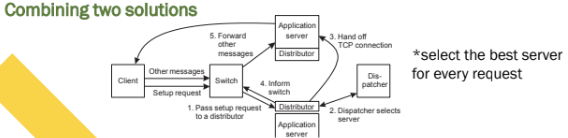

The FIRST TIER is generally responsible for passing requests to an appropriate server: REQUEST DISPATCHING

REQUEST HANDLING

Observation:

Having the first tier handle all communication from/to the cluster MAY LEAD TO A BOTTLENECK. Imagine only one load balancer.

Solution: TCP HANDOFF

With the TCP handoff:

The selected SERVER TAKES OVER the connection and continues communication with the client.

The server processes the request and SENDS A RESPONSE DIRECTLY to the client.

From the client’s perspective, it feels like a SINGLE CONTINUOUS CONNECTION.

SERVER CLUSTERS

The front end may easily get overloaded: Special measures may be needed

TRANSPORT-LAYER switching: the front end simply passes the TCP request to one of the servers, taking some PERFORMANCE METRIC into account.

CONTENT-AWARE DISTRIBUTION: front end reads the content of the request and then selects the BEST SERVER.
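A minimal sketch of the two front-end policies, assuming hypothetical server names, load figures, and routing rules. A transport-layer switch only sees the connection, so it can only use a metric (here, fewest active connections); a content-aware front end reads the request (here, the HTTP path) before choosing.

```python
from typing import Dict, List

servers: List[str] = ["s1", "s2", "s3"]  # hypothetical back-end servers
active_connections: Dict[str, int] = {"s1": 4, "s2": 1, "s3": 2}

def transport_layer_pick() -> str:
    """Sees only the TCP connection, so it picks by a performance metric."""
    return min(servers, key=lambda s: active_connections[s])

# Hypothetical rules mapping request content to the best-suited server.
CONTENT_RULES = {"/video/": "s3", "/images/": "s1"}

def content_aware_pick(http_path: str) -> str:
    """Reads the request content, then selects the best server for it."""
    for prefix, server in CONTENT_RULES.items():
        if http_path.startswith(prefix):
            return server
    return transport_layer_pick()  # fall back when no rule matches

print(transport_layer_pick())              # s2
print(content_aware_pick("/video/a.mp4"))  # s3
print(content_aware_pick("/index.html"))   # s2
```

Content-aware selection is more precise, but the front end must parse every request, which adds to the load on the very component that is already the bottleneck.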

WHEN SERVERS ARE SPREAD ACROSS THE INTERNET

Observation:

Spreading servers across the internet may introduce administrative problems. These can be largely circumvented by using data centers from a single cloud provider.

Request dispatching: IF THE LOCALITY IS IMPORTANT

Common approach: use DNS

Client looks up a specific service through DNS; the client's IP address is part of the request

DNS server keeps track of replica servers for the requested service, and RETURNS THE ADDRESS OF THE MOST LOCAL (NEAREST) SERVER.
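A minimal sketch of locality-aware resolution, assuming a hypothetical table from client network prefixes to the nearest replica (all addresses are from the documentation ranges). Python's standard `ipaddress` module does the prefix matching.

```python
import ipaddress
from typing import Dict

# Hypothetical mapping from requester network prefixes to the closest replica.
REPLICAS: Dict[str, str] = {
    "203.0.113.0/24": "198.51.100.10",
    "192.0.2.0/24": "198.51.100.20",
}
DEFAULT_REPLICA = "198.51.100.30"

def resolve(requester_ip: str) -> str:
    """Return the replica address 'closest' to the requesting IP address."""
    addr = ipaddress.ip_address(requester_ip)
    for prefix, replica in REPLICAS.items():
        if addr in ipaddress.ip_network(prefix):
            return replica
    return DEFAULT_REPLICA

print(resolve("203.0.113.7"))  # 198.51.100.10
print(resolve("10.1.2.3"))     # 198.51.100.30
```

The address the DNS server actually sees is that of whoever queries it, which is frequently a DNS resolver rather than the end client; the locality decision is only as good as that address.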

CLIENT TRANSPARENCY

To keep the client unaware of distribution, let the DNS resolver act on behalf of the client. The problem is that the resolver may actually be far from local to the actual client.

DISTRIBUTED SERVERS WITH STABLE IPv6 ADDRESS(es)

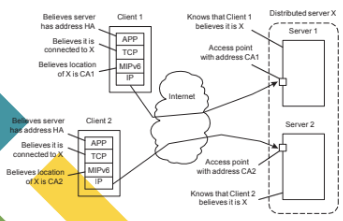

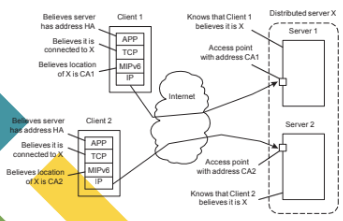

Transparency through Mobile IP

ROUTE OPTIMIZATION can be used to make different clients believe they are COMMUNICATING WITH A SINGLE SERVER, where, in fact, each client is communicating with a different member node of the distributed server

When a distributed server’s access point forwards a request from client C1 to server node S1 (with care-of address CA1), it INCLUDES ENOUGH INFORMATION FOR S1 TO BEGIN A ROUTE OPTIMIZATION PROCESS.

This process makes C1 believe that CA1 is the server's current location, allowing C1 to store the pair (HA, CA1) for future communication. During this procedure, THE ACCESS POINT, IN COOPERATION WITH THE HOME AGENT, TUNNELS MOST OF THE TRAFFIC between C1 and S1, ENSURING THE HOME AGENT DOES NOT BELIEVE THE CARE-OF ADDRESS HAS CHANGED.

As a result, the HOME AGENT continues to communicate with the original access point, maintaining SESSION CONTINUITY.

DISTRIBUTED SERVER: ADDRESSING DETAILS

Essence: a client having Mobile IPv6 can transparently set up a connection to any peer.

Client C sets up a connection to the IPv6 HOME ADDRESS (HA).

HA is maintained by a (network-level) home agent, which hands off the connection to a registered CARE-OF ADDRESS CA.

C can then apply ROUTE OPTIMIZATION by directly forwarding packets to address CA (i.e., without the handoff through the home agent).
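A minimal sketch of the client side of route optimization, assuming a hypothetical `MobileIPv6Client` with a simple binding cache and documentation-prefix addresses. The application keeps using HA as the peer address; only the next hop changes once a (HA, CA) binding is known.

```python
from typing import Dict

class MobileIPv6Client:
    """Hypothetical client that caches home-address -> care-of-address bindings."""
    def __init__(self) -> None:
        self.binding_cache: Dict[str, str] = {}

    def next_hop(self, home_address: str) -> str:
        # Without a binding, packets go to HA and the home agent hands them off;
        # with one, they go straight to the care-of address.
        return self.binding_cache.get(home_address, home_address)

    def receive_binding_update(self, home_address: str, care_of: str) -> None:
        # Route optimization: remember (HA, CA) for future packets.
        self.binding_cache[home_address] = care_of

c = MobileIPv6Client()
HA, CA = "2001:db8::1", "2001:db8:ff::42"
print(c.next_hop(HA))  # 2001:db8::1 (via the home agent)
c.receive_binding_update(HA, CA)
print(c.next_hop(HA))  # 2001:db8:ff::42 (directly to CA)
```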

Collaborative distributed systems

The origin server maintains a home address, but HANDS OFF CONNECTIONS TO THE ADDRESS OF A COLLABORATING PEER → ORIGIN SERVER AND PEER APPEAR AS ONE SERVER.

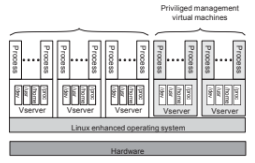

EXAMPLE: PLANET LAB

Essence:

Different organizations contribute machines, which they subsequently share for various experiments.

PlanetLab was a global research network that allowed researchers to test new protocols and services on a real-world, wide-area network. It consisted of hundreds of nodes (servers) hosted by universities and research institutions around the world.

PROBLEM:

We need to ensure that different distributed applications do not get into each other's way → Virtualization.

Basic Organization

Vserver

Independent and protected environment with its own libraries, server versions, and so on. Distributed applications are assigned a COLLECTION OF Vservers distributed across multiple machines.

PlanetLab VServers and Slices

Essence

Each Vserver operates in its own environment.

Linux enhancements include proper adjustment of process IDs.

Two processes in DIFFERENT VSERVERS MAY HAVE THE SAME USER ID, BUT THIS DOES NOT IMPLY THE SAME USER.

Separation leads to slices.

REASON TO MIGRATE CODE

Load Distribution

Ensuring that servers in a data center are sufficiently loaded (e.g., to prevent waste of energy)

Minimizing communication by ensuring that COMPUTATIONS ARE CLOSE TO WHERE THE DATA IS (think of mobile computing).

Flexibility: moving code to a client when needed

Code migration is the process of moving executable code from one machine to another in a distributed system to improve performance, efficiency, or flexibility.

Avoids pre-installing software and increases dynamic configuration.

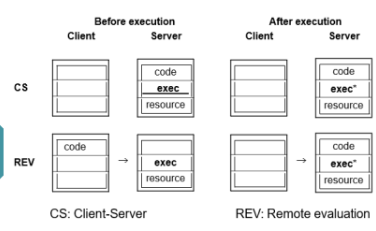

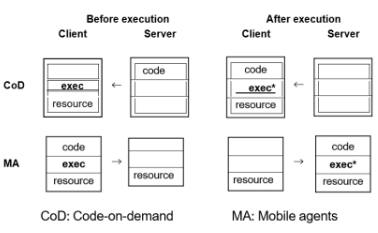

Models For code migration

client-server

The client sends a request to a server. The server processes the request locally and sends back the result.

CODE STAYS PUT, only data moves between client and server

Remote Evaluation

The client sends code to the server to be executed there. Useful when the server has more data or resources.

CODE MOVES FROM CLIENT TO SERVER.

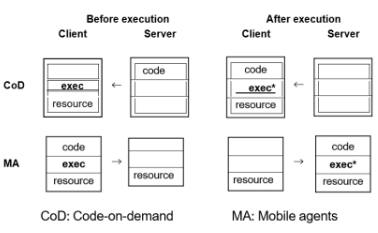

Code on Demand

The server SENDS CODE TO THE CLIENT, where it’s executed. Often used when clients need dynamic behavior or updates.

CODE MOVES FROM SERVER TO CLIENT.

Mobile Agents

A MOBILE AGENT (code + execution state + data) moves from host to host, executing part of its task at each.

CODE AND STATE MOVE BETWEEN SYSTEMS.

STRONG and WEAK MOBILITY

Object Components in Code Migration

Code segment: contains the actual code

Data segment: contains the state

Execution state: contains the context of the thread executing the object’s code

Weak mobility: move code and data segment (and restart execution from the beginning)

Relatively simple, especially if the code is portable

Distinguish code shipping (push) from code fetching (pull)

Strong mobility: move the component, including execution state

Migration: move the entire object from one machine to the other.

Cloning: start a clone, and set it in the same execution state.
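A toy sketch of weak mobility (code shipping), assuming the code segment travels as Python source text and the data segment as JSON; `pack` and `unpack_and_start` are hypothetical names. No stack or program counter is sent, so the target always starts the code from the top. Strong mobility would additionally require capturing the thread's execution state, which this sketch does not attempt.

```python
import json
from typing import Any, Dict

# Code segment: source text of the component, shipped as-is.
CODE_SEGMENT = '''
def run(state):
    # Execution always starts at the top: no execution state was shipped.
    state["total"] = sum(state["values"])
    return state
'''

def pack(code: str, data: Dict[str, Any]) -> bytes:
    """Source side: serialize code segment + data segment (no stack, no PC)."""
    return json.dumps({"code": code, "data": data}).encode()

def unpack_and_start(message: bytes) -> Dict[str, Any]:
    """Target side: load the code, then start execution from the beginning."""
    msg = json.loads(message)
    namespace: Dict[str, Any] = {}
    exec(msg["code"], namespace)  # unsafe for untrusted code; real systems sandbox it
    return namespace["run"](msg["data"])

message = pack(CODE_SEGMENT, {"values": [1, 2, 3]})
print(unpack_and_start(message))  # {'values': [1, 2, 3], 'total': 6}
```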

Migration in HETEROGENEOUS SYSTEM

Main Problem:

The target machine may not be SUITABLE TO EXECUTE THE MIGRATED CODE

The definition of process/thread/processor context is HIGHLY DEPENDENT ON LOCAL HARDWARE, OPERATING SYSTEM AND RUNTIME SYSTEM.

Only Solution: AN ABSTRACT MACHINE IMPLEMENTED ON DIFFERENT PLATFORMS

Interpreted languages, effectively having their own VM

Virtual machine monitors

Migrate the entire virtual machine (including OS and processes)

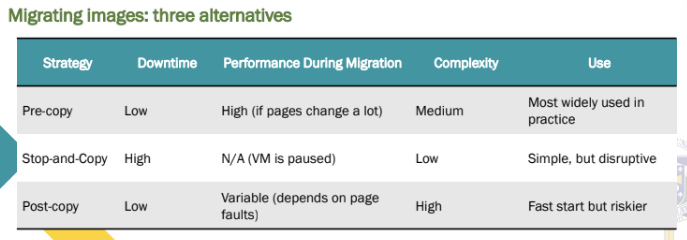

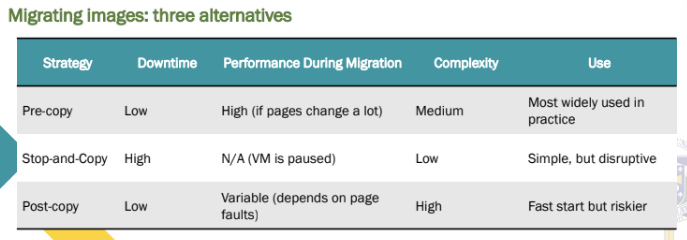

Migrating a virtual machine

Migrating images: three alternatives

Pushing memory pages (the unit of memory management) to the new machine and RESENDING THE ONES THAT ARE LATER MODIFIED during the migration process.

STOPPING the current virtual machine, MIGRATING its memory, and starting processes on the new virtual machine.

Letting the new virtual machine PULL IN NEW PAGES AS NEEDED: processes start on the new virtual machine immediately and copy memory pages on demand.
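A toy simulation of the first alternative (often called pre-copy), which typically ends with a short use of the second: pages are pushed while the VM keeps running, modified pages are resent in later rounds, and once few dirty pages remain the VM is briefly stopped to copy the rest. The dirty-page model is a hypothetical assumption chosen to make the arithmetic easy to follow.

```python
from typing import Callable, Dict, Set

def pre_copy_migrate(pages: Set[int], dirtied_during_round: Callable[[int], Set[int]],
                     stop_threshold: int, max_rounds: int = 10) -> Dict[str, int]:
    """Simulate iterative push-and-resend followed by a short stop-and-copy.

    dirtied_during_round(r): hypothetical model of which pages the still-running
    VM modifies while round r is being copied.
    """
    to_send = set(pages)
    sent_total = 0
    rounds = 0
    for r in range(max_rounds):
        rounds = r + 1
        sent_total += len(to_send)         # push pages while the VM keeps running
        to_send = dirtied_during_round(r)  # pages modified meanwhile must be resent
        if len(to_send) <= stop_threshold:
            break
    # Final stop-and-copy: the VM is paused while the remaining dirty pages move.
    downtime_pages = len(to_send)
    sent_total += downtime_pages
    return {"rounds": rounds, "pages_sent": sent_total, "downtime_pages": downtime_pages}

# Toy model: the rewritten working set halves every round (100, 50, 25, 12, ...).
result = pre_copy_migrate(set(range(1000)), lambda r: set(range(100 >> r)), stop_threshold=20)
print(result)  # {'rounds': 4, 'pages_sent': 1187, 'downtime_pages': 12}
```

The trade-off is visible in the numbers: more pages than the VM's 1000 are sent in total, but the service is only paused for the final 12.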

Performance of migrating virtual machine

Problem

A complete migration may actually take tens of seconds. We also need to realize that during the migration, a service will be completely unavailable for multiple seconds.

Measurements regarding response times during VM migrations