processes

Description and Tags

blue: user-space solution; red: kernel solution

37 Terms

virtual processors

built in software, on top of physical processors

processor

provides a set of instructions along with the capability of automatically executing a series of those instructions

thread

minimal software processor; lightweight unit of execution within a process performing a task

process

software processor in whose context one or more threads can be executed; a heavier, independent unit of execution that requires more overhead for creation, switching, and destruction.

memory and resources

when a process starts, _ are allocated, which are then shared by all of its threads.
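
A minimal Python sketch of this sharing, assuming the standard `threading` module. The names `shared_log` and `worker` are made up for illustration. Every thread appends to the same list because all threads of a process live in one address space.

```python
import threading

shared_log = []  # allocated once by the process, visible to every thread


def worker(name):
    # Each thread writes into the same list object; nothing is copied.
    shared_log.append(name)


threads = [threading.Thread(target=worker, args=(f"t{i}",)) for i in range(4)]
for t in threads:
    t.start()
for t in threads:
    t.join()

# All four entries end up in the one shared list.
print(sorted(shared_log))
```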

single-threaded process

one task at a time

multi-threaded process

many tasks at the same time

contexts

allow pausing and resuming without losing data

processor context

the minimal collection of values stored in the registers of a processor used for the execution of a series of instructions (e.g., stack pointer, addressing registers, program counter).

thread context

the minimal collection of values stored in registers and memory, used for the execution of a series of instructions (i.e., processor context, thread state – running/waiting/suspended).

process context

the minimal collection of values stored in registers and memory, used for the execution of a thread (i.e., thread context, but now also at least memory management information such as Memory Management Unit (MMU) register values).

independent

threads share the same address space; thread context switching can be done entirely _ of the operating system.

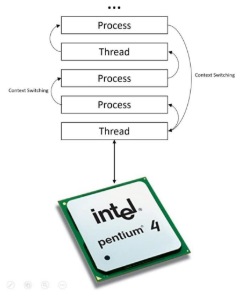

expensive

process switching is generally (somewhat) more _ as it involves getting the OS in the loop, i.e., trapping to the kernel.

cheaper

creating and destroying threads is much _ than doing so for processes.

avoid needless blocking

a single-threaded process will _ when doing I/O; in a multi-threaded process, the operating system can switch the CPU to another thread in that process.

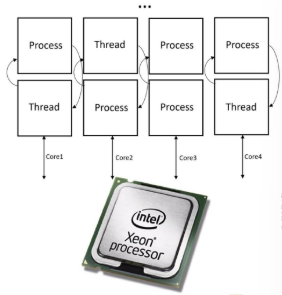
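
A rough Python sketch of the effect, assuming `time.sleep` as a stand-in for a blocking I/O call (the function name `blocking_io` and the 0.2 s delay are illustrative). While one thread waits, the others can proceed, so the waits overlap instead of adding up.

```python
import threading
import time


def blocking_io(delay):
    # time.sleep stands in for a blocking I/O call (assumption for this sketch).
    time.sleep(delay)


start = time.perf_counter()
threads = [threading.Thread(target=blocking_io, args=(0.2,)) for _ in range(3)]
for t in threads:
    t.start()
for t in threads:
    t.join()
elapsed = time.perf_counter() - start

# The three waits overlap, so the total should be near 0.2 s, not 0.6 s.
print(f"elapsed: {elapsed:.2f} s")
```

A single-threaded version that called `blocking_io(0.2)` three times in a row would take about three times as long.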

exploit parallelism

the threads in a multi-threaded process can be scheduled to run in _ on a multiprocessor or multicore processor.

avoid process switching

structure large applications not as a collection of processes, but through multiple threads.

process switching tradeoff

threads use the same address space, so they are more prone to errors; there is no support from the OS/HW to protect threads from using each other’s memory; thread context switching may be faster than process context switching.
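
Because nothing stops threads from touching the same memory, the program itself has to coordinate access. The sketch below is one common way to do that in Python, using a `threading.Lock`. The names `counter` and `add_many` are hypothetical.

```python
import threading

counter = 0
lock = threading.Lock()


def add_many(n):
    global counter
    for _ in range(n):
        # Without the lock, the read-modify-write below could interleave
        # between threads and lose updates.
        with lock:
            counter += 1


threads = [threading.Thread(target=add_many, args=(10_000,)) for _ in range(4)]
for t in threads:
    t.start()
for t in threads:
    t.join()

print(counter)
```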

user-space solution

threads are managed by a library/user-level package rather than by the kernel

kernel solution

to have the kernel contain the implementation of a thread package; all operations return as system calls.

single process

all operations can be completely handled within a _ ⇒ Implementations can be extremely efficient.

resides

all services provided by the kernel are done on behalf of the process in which a thread _ ⇒ if the kernel decides to block a thread, the entire process will be blocked.

per-event basis

threads are used when there are lots of external events: threads block on a _ ⇒ if the kernel can’t distinguish threads, how can it support signaling events to them?

schedules

operations that block a thread are no longer a problem: the kernel _ another available thread within the same process.

catches

handling external events is simple: the kernel (which _ all events) schedules the thread associated with the event.

loss of efficiency

the problem is (or used to be) the _ due to the fact that each thread operation requires a trap (system call that causes the CPU to switch from user mode to kernel mode) to the kernel.

lightweight processes

can execute user-level threads

fetched

in a multithreaded web client, the web browser scans an incoming HTML page and finds that more files need to be _.

separate

in a multithreaded web client, each file is fetched by a _ thread, each doing a (blocking) HTTP request; then as files come in, the browser displays them.

linear speed-up

with multiple request-response calls to other machines (RPC), a client makes several calls at the same time, each one from a different thread, and then waits until all results have been returned. If the calls go to different servers, we may have a _.
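
A small Python sketch of this pattern, assuming `concurrent.futures.ThreadPoolExecutor` from the standard library. `fake_rpc`, the server names, and the 0.1 s delay are placeholders for real remote calls.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def fake_rpc(server):
    # Stand-in for a blocking remote call; the 0.1 s delay is an assumption.
    time.sleep(0.1)
    return f"reply from {server}"


servers = ["a", "b", "c", "d"]

start = time.perf_counter()
with ThreadPoolExecutor(max_workers=len(servers)) as pool:
    # One thread per call; list() waits until every result has been returned.
    replies = list(pool.map(fake_rpc, servers))
elapsed = time.perf_counter() - start

print(replies)
print(f"elapsed: {elapsed:.2f} s")
```

With four independent servers the calls overlap, so the total time should stay near that of a single call rather than four in sequence.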

multiprocessor system

when using threads to improve performance on the server side: a single-threaded server prohibits simple scale-up to a _.

hide network latency

when using threads to improve performance on the client side: _ by reacting to the next request while the previous one is still being answered.

blocking calls

when using threads for better structure on the server side: most servers have high I/O demands, and using simple, well-understood _ simplifies the overall structure.

flow of control

when using threads for better structure on the server side: multithreaded programs tend to be smaller and easier to understand due to a simplified _.

multithreading (model)

parallelism, blocking system calls

single-threaded process (model)

no parallelism, blocking system calls

finite-state machine (model)

parallelism, nonblocking system calls