Week 7: Validity and Behavioral Observation


29 Terms

What is validity?

Assesses whether the scale is measuring what it is supposed to be measuring.

Example: Are the items on a self-esteem scale actually measuring self-esteem?

What is content validity?

The degree to which a test adequately covers the relevant content.

Assessed through expert ratings of the test content.

Example: In educational testing, does the test cover all 5 chapters or just 2?

Considered "logical" rather than statistical.

This means you can’t run a statistical test to establish content validity; it relies on expert ratings instead.

What are the threats to content validity?

Two principles of content validity:

Principle:

Test should not include content irrelevant to the construct.

Threat: "Construct Irrelevant Content"

Principle:

Test should include content representing the full range of the construct.

Threat: "Construct Under-representation"

Test fails to include content representing the full range of the construct.

What is the difference between content validity and face validity?

Face Validity:

Related to content validity, which instead relies on expert ratings.

The degree to which a measure appears to be related to a specific construct, as judged by non-experts (e.g., test takers).

Not considered a “real” measure of validity because it doesn’t provide evidence to support conclusions.

Considered “logical” rather than statistical.

This means that you can’t run a statistical test to establish it.

What is criterion-related validity, and what are its types?

Tells us how well a test corresponds to an established criterion.

Assessed using a correlation.

Administer your test and a gold-standard measure from the field, then correlate the two sets of scores.

Predictive Validity:

"Forecasting function" → longitudinal.

Correlation between a predictor and a criterion.

The predictor variable is measured before the criterion variable.

Example: college entrance test (taken in high school) predicting college GPA.

Concurrent Validity:

Simultaneously administered → cross-sectional.

Correlation between two variables measured at the same time point.

Example: college entrance test (taken in high school) correlated with current high school GPA.

What is predictive validity?

Predictive Validity:

Type of criterion-related validity

"Forecasting function" → longitudinal.

Correlation between a predictor and a criterion.

The predictor variable is measured before the criterion variable.

Example: college entrance test (taken in high school) predicting college GPA.

What is concurrent validity?

Concurrent Validity:

Type of criterion-related validity

Simultaneously administered → cross-sectional.

Correlation between two variables measured at the same time point.

Example: college entrance test (taken in high school) correlated with current high school GPA.

What is construct-related validity, and what are its types?

Construct-Related Validity: Assesses whether a test measures the theoretical construct it is intended to measure.

Convergent Evidence:

Similar to criterion-related validity.

Assesses correlation between two measures that are theorized to be related.

Can be positively or negatively correlated.

Example: A new anxiety scale correlating with an existing measure of anxiety.

Divergent/Discriminant Evidence:

Assesses correlation between two measures that are theorized to be unrelated.

Does not mean a negative correlation, just no meaningful relationship (a correlation near zero).

Example: A test of mathematical ability should not correlate with a measure of extroversion.

What is convergent evidence?

Convergent Evidence:

Type of construct-related validity

Similar to criterion-related validity.

Assesses correlation between two measures that are theorized to be related.

Can be positively or negatively correlated.

Example: A new anxiety scale correlating with an existing measure of anxiety.

What is divergent/discriminant evidence?

Divergent/Discriminant Evidence:

Type of construct-related validity

Assesses correlation between two measures that are theorized to be unrelated.

Does not mean a negative correlation, just no meaningful relationship (a correlation near zero).

Example: A test of mathematical ability should not correlate with a measure of extroversion.

What are multi-method approaches for construct validity?

Multi-method approaches integrate different measurement methods to strengthen construct validity.

Multiple Informants:

Different people report on the same individual.

Example: For young children, researchers may use parent and teacher reports.

Example: For adolescents or adults, peer evaluations can supplement self-reports.

Multiple Methods:

Different types of data collection.

Example: A researcher observing social interactions to validate a self-report on shyness.

Example: Measuring heart rate as a physiological indicator of anxiety.

What is a validity coefficient, and how is it interpreted?

A validity coefficient is a correlation coefficient that indicates how well a test predicts a criterion.

Acceptable coefficient: r = .30 or higher.

Percentage of variation explained:

Squared value of the correlation coefficient (r²).

Example: If r = .40, then 16% of the variation in the criterion can be explained by the test.
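The r² arithmetic and the .30 rule of thumb can be checked with a short Python sketch; the function names are hypothetical, and the cut-off is simply the one stated above.

```python
def variance_explained(r):
    """Proportion of variation in the criterion explained by the test (r squared)."""
    return r ** 2

def meets_rule_of_thumb(r, cutoff=0.30):
    """Rule of thumb from these notes: a validity coefficient of .30 or higher."""
    return r >= cutoff

r = 0.40
print(f"r = {r:.2f} -> {variance_explained(r):.0%} of criterion variation explained; "
      f"acceptable: {meets_rule_of_thumb(r)}")
```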

What are some considerations for evaluating validity coefficients?

Sample composition – Ensure the measure has been validated in the population/sample you are testing.

Example: Using an anxiety scale validated for 7-8-year-olds in a study on 3-5-year-olds may not be appropriate.

Sample size – Smaller samples give less stable estimates and may inflate correlation coefficients.

Restricted ranges – A limited range in predictor or outcome variables can lower the validity coefficient.

Generalizability – Consider whether the original validation study results apply to your sample.

Example: A measure validated with parent reports may not generalize if you use teacher reports.

Differential predictions – The test's predictive power may vary across different groups or conditions.

What is the difference between reliability and validity?

Reliability = Consistency (Does the test produce stable and consistent results?)

Validity = Accuracy (Does the test measure what it is supposed to measure?)

A test can be reliable but not valid (e.g., a broken scale consistently gives the wrong weight).

A test cannot be valid without being reliable (if a test is not consistent, it cannot be accurate).
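A toy Python illustration of the broken-scale example: the readings are hypothetical, and the person's true weight is assumed to be 70 kg. Small spread across readings reflects consistency; a large systematic offset from the true value reflects inaccuracy.

```python
from statistics import mean, pstdev

TRUE_WEIGHT_KG = 70.0  # assumed true weight of the person on the scale

# Hypothetical repeated readings from a broken scale that reads about 5 kg too heavy.
readings = [75.1, 74.9, 75.0, 75.2, 74.8]

spread = pstdev(readings)               # small spread -> consistent (reliable)
bias = mean(readings) - TRUE_WEIGHT_KG  # large, systematic error -> inaccurate (not valid)
print(f"spread = {spread:.2f} kg, bias = {bias:.2f} kg")
```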

What is behavioral observation?

Moves beyond questionnaire-based data.

Involves observing a participant or group of participants directly in real-world settings or controlled environments.

What are the contexts of behavioral coding?

Laboratory or Home:

Lab: Controlled environment, often with video recording.

Issue: Participants may act differently because they know they are being recorded.

Naturalistic:

Uncontrolled: Observing in settings such as classrooms, hospitals, and parks.

Live, in vivo coding: More challenging, because it requires multiple researchers and real-time coding with no playback.

Video recorded: Using tools such as GoPro cameras for unobtrusive observation.

Why use behavioral observation? What are its pros?

Ecological validity: Observing behavior in natural settings increases relevance to real-world situations.

Assess construct in young children: Some children may be too young to understand or respond to questionnaires.

Limit self-report bias: Reduces reliance on self-reports, which can be biased or inaccurate.

Multi-method approach: Can contribute to establishing construct validity when combined with other methods.

Why might you NOT use behavioral observation? What are its cons?

Expensive/resource-intensive: Requires equipment, multiple observers, and potentially a large setup.

Time-intensive: Observing and coding behavior takes significant time and effort.

What is frequency coding in behavioral observation?

Frequency Coding: Measures how often a certain behavior occurs during an observation period.

Example: How many times does a parent praise their child during a three-minute play session?

Coded Behavior: 16 instances of praise observed.

Rate: 16 instances ÷ 3 minutes = 5.33 instances per minute.
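A minimal Python sketch of the frequency calculation above, assuming the coder's output is simply a list of event labels for the session (the labels and list format are hypothetical).

```python
# Hypothetical coder output: one label per coded event in a 3-minute play session.
coded_events = ["praise"] * 16 + ["directive"] * 5
session_minutes = 3

praise_count = coded_events.count("praise")   # frequency = raw count of the behavior
praise_rate = praise_count / session_minutes  # rate per minute, comparable across session lengths
print(f"{praise_count} praises, {praise_rate:.2f} per minute")
```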

What is duration coding in behavioral observation?

Duration Coding: Measures how long a certain behavior occurs during an observation period.

Example:

How long did a participant smile during a one-minute social interaction?

Coded Behavior: 43 seconds of smiling.

Proportion: 43 seconds ÷ 60 seconds = 71.67%.

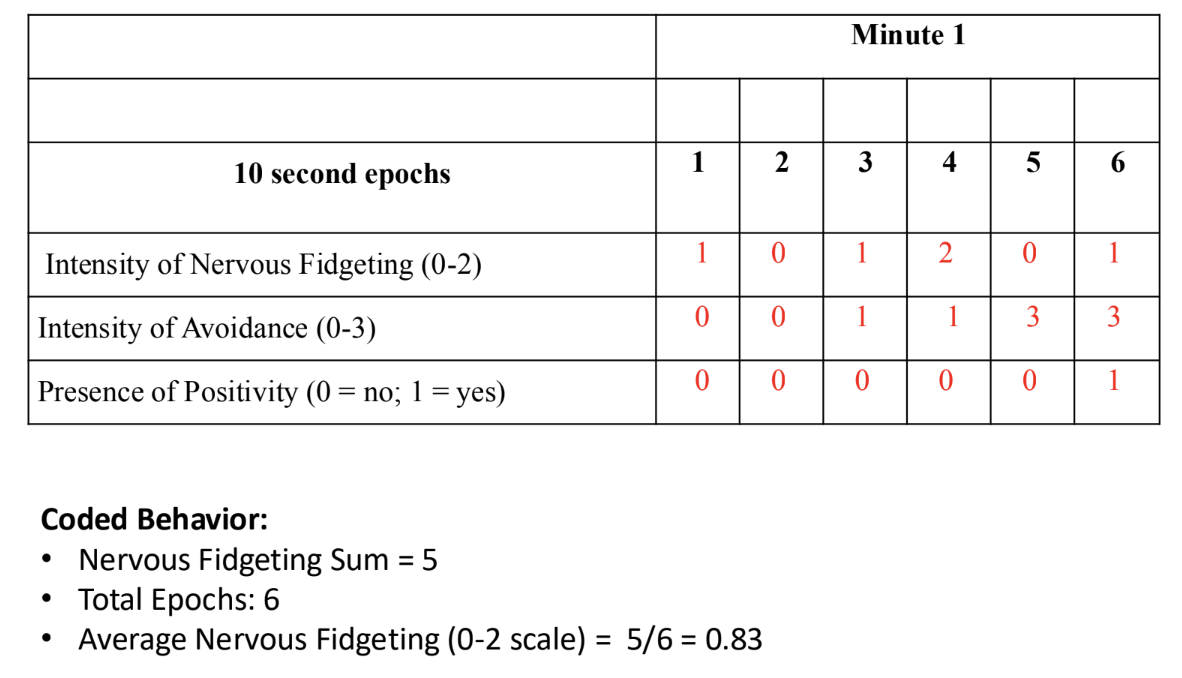
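The duration proportion can be sketched in Python by summing timed bouts. The bout start and end times below are hypothetical, chosen only so that they add up to the 43 seconds in the example.

```python
# Hypothetical coder output: (start, end) times in seconds for each smiling bout
# during a 60-second interaction.
smile_bouts = [(2, 15), (20, 38), (45, 57)]
observation_seconds = 60

total_smiling = sum(end - start for start, end in smile_bouts)  # total duration in seconds
proportion = total_smiling / observation_seconds               # share of the observation period
print(f"{total_smiling} s of smiling = {proportion:.2%} of the interaction")
```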

What is interval coding in behavioral observation?

Interval Coding: Measures whether a behavior occurs during predetermined intervals.

Example: A researcher divides a one-minute task into six 10-second epochs.

Coded Behavior:

Dichotomous: Is the behavior present or absent?

Continuous: Is the behavior occurring at low, medium, or high levels?

Integrates intensity: rates the strength or frequency of the behavior within each epoch.
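A sketch of dichotomous interval coding in Python. As an illustrative assumption, it derives the epoch codes from timestamped behavior bouts; in practice a coder may simply judge each 10-second epoch directly. The bout times and function name are hypothetical.

```python
EPOCH_SECONDS = 10
TASK_SECONDS = 60  # one-minute task -> six 10-second epochs

# Hypothetical coder output: (start, end) times in seconds of each behavior bout.
bouts = [(3, 8), (22, 27), (41, 44)]

def code_epochs(bouts, task_seconds=TASK_SECONDS, epoch_seconds=EPOCH_SECONDS):
    """Dichotomous interval coding: 1 if any bout overlaps the epoch, else 0."""
    codes = []
    for epoch_start in range(0, task_seconds, epoch_seconds):
        epoch_end = epoch_start + epoch_seconds
        present = any(start < epoch_end and end > epoch_start for start, end in bouts)
        codes.append(int(present))
    return codes

print(code_epochs(bouts))
```

Each epoch gets a single present/absent code regardless of how long the behavior lasted within it, which is what distinguishes interval coding from duration coding.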

What is global coding in behavioral observation?

Global Coding: Provides an overall impression of the behavior across the entire observation period, rather than focusing on specific intervals or epochs.

How do you develop a coding scheme for behavioral observation?

What do you want to measure?

Example: Number of times a student asks for help during a 50-minute tutorial.

How do you define the behavior?

Example: What counts as "help"? Does it include raising a hand, asking the TA, or asking peers? Clear guidelines are necessary.

For abstract behaviors (e.g., shyness):

Theoretically, what behaviors capture shyness?

Verbal hesitancy

Gaze aversion

Body orientation

What is inter-rater reliability?

Inter-Rater Reliability: Ensures that different raters (or coders) interpret behaviors in the same way.

Blinded raters need to overlap on about 15% of the cases to establish reliability.

Example:

You have 200 videos to code and two coders.

Both coders should independently code the same subset of 30 videos (15% of 200) to assess agreement.

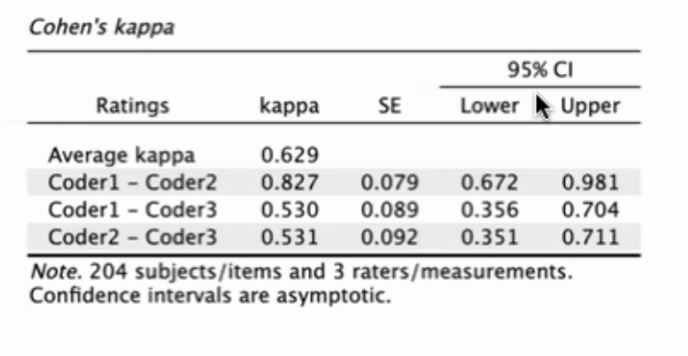
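A minimal Python sketch for picking the double-coded subset. Drawing it at random is an assumption made here so the overlap cases are not hand-picked; the video IDs, seed, and function name are hypothetical.

```python
import random

def choose_overlap(video_ids, proportion=0.15, seed=None):
    """Randomly pick the videos that both coders will code (the reliability subset)."""
    n_overlap = round(proportion * len(video_ids))
    rng = random.Random(seed)
    return sorted(rng.sample(video_ids, n_overlap))

videos = list(range(1, 201))  # 200 hypothetical video IDs
overlap = choose_overlap(videos, seed=7)
print(f"{len(overlap)} of {len(videos)} videos double-coded")
```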

How is inter-rater reliability established using the Kappa statistic?

Kappa Statistic: Assesses the level of agreement among raters.

Range:

1 = Perfect agreement

0 = Agreement no better than chance

Negative values (down to -1) = Less agreement than would be expected by chance

Kappa Value:

Higher than .70: Excellent agreement

.40 to .70: Fair to Good (Acceptable)

Less than .40: Poor agreement
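A from-scratch Python sketch of Cohen's kappa for two coders, with labels based on the cut-offs above. The codes are hypothetical present (1) / absent (0) ratings for 10 epochs; the function names are illustrative, not from a particular library.

```python
from collections import Counter

def cohens_kappa(coder_a, coder_b):
    """Cohen's kappa for two coders who each gave one category code per case."""
    if len(coder_a) != len(coder_b) or not coder_a:
        raise ValueError("both coders must code the same, non-empty set of cases")
    n = len(coder_a)
    # Observed agreement: proportion of cases where the two codes match.
    observed = sum(a == b for a, b in zip(coder_a, coder_b)) / n
    # Chance agreement: based on how often each coder used each category.
    counts_a, counts_b = Counter(coder_a), Counter(coder_b)
    expected = sum(counts_a[c] * counts_b[c] for c in counts_a) / n ** 2
    if expected == 1:
        raise ValueError("kappa is undefined when both coders used a single category")
    return (observed - expected) / (1 - expected)

def interpret_kappa(kappa):
    """Labels follow the cut-offs listed in these notes."""
    if kappa > 0.70:
        return "Excellent agreement"
    if kappa >= 0.40:
        return "Fair to good (acceptable)"
    return "Poor agreement"

# Hypothetical present (1) / absent (0) codes from two coders for the same 10 epochs.
coder_1 = [1, 1, 0, 1, 0, 0, 1, 1, 0, 1]
coder_2 = [1, 1, 0, 1, 0, 1, 1, 0, 0, 1]

kappa = cohens_kappa(coder_1, coder_2)
print(f"kappa = {kappa:.2f}: {interpret_kappa(kappa)}")
```

Here the coders agree on 80% of epochs, but chance agreement is already .52, so kappa is only about .58. This correction for chance is why kappa is preferred over raw percent agreement.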

What should you do if you have a low Kappa statistic?

Clarify your coding scheme to ensure consistency and reduce ambiguity.

Train coders more extensively to improve their understanding and consistency in applying the coding criteria.

What is internal consistency, and how is it used in behavioral coding?

Internal Consistency: Measures the consistency of items within a test or coding scheme.

Can be used for interval coding, where epochs are treated as "items."

Cronbach's alpha is used, just like in questionnaires, to assess how well the items (or epochs) correlate with each other.
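A Python sketch of Cronbach's alpha with epochs treated as items, using the standard formula alpha = k/(k - 1) × (1 - sum of item variances / variance of total scores). The 5 × 6 matrix of dichotomous epoch codes is hypothetical.

```python
from statistics import variance

def cronbach_alpha(scores):
    """Cronbach's alpha; rows are participants, columns are items (here, epochs)."""
    k = len(scores[0])  # number of items/epochs
    if k < 2:
        raise ValueError("alpha needs at least two items")
    item_vars = [variance([row[j] for row in scores]) for j in range(k)]
    total_var = variance([sum(row) for row in scores])
    return k / (k - 1) * (1 - sum(item_vars) / total_var)

# Hypothetical dichotomous interval codes: 5 participants x six 10-second epochs.
epoch_codes = [
    [1, 1, 1, 1, 1, 0],
    [0, 0, 1, 0, 0, 0],
    [1, 1, 0, 1, 1, 1],
    [0, 0, 0, 0, 1, 0],
    [1, 0, 1, 1, 0, 1],
]
print(f"alpha = {cronbach_alpha(epoch_codes):.2f}")
```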

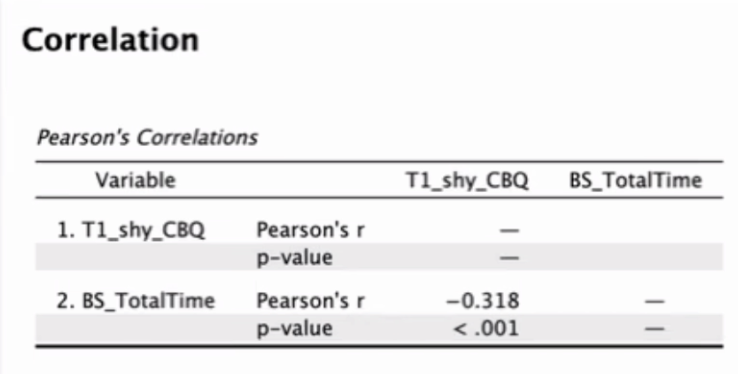

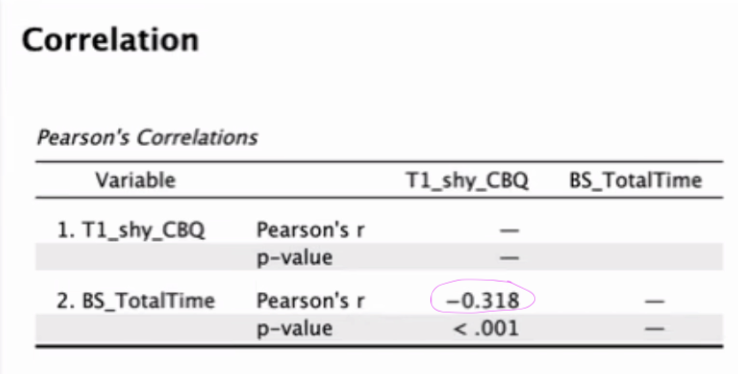

Can we establish convergent validity for these measures?

Yes, because the correlation coefficient is greater than .30.

Are these coders in agreement?

Yes, because kappa is above .40 for each pair of coders.