OS Midterm

116 Terms

data register

small fast data storage location on the CPU (aka buffer register)

address register

specifies the address in memory for the next read or write

PC (program counter)

holds address of next instruction to be fetched

instruction register

stores the fetched instruction

interrupt

allows other modules to interrupt the normal sequencing of the processor

hit ratio

fraction of all memory accesses found in the cache

Principle of locality

memory references by processor tend to cluster.

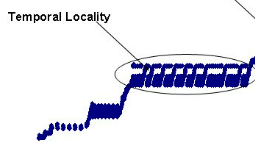

temporal locality

limited range of memory addresses requested repeatedly over a period of time

spatial locality

memory addresses that are requested sequentially

cache

small, fast-access storage close to the CPU that holds frequently accessed data or instructions. Modern processors typically organize it in 3 levels (L1, L2, L3)

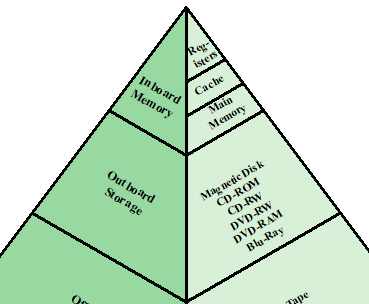

memory hierarchy

system of memory levels that trades off cost and capacity against speed. Bigger = slower = cheaper per bit

volatile memory

memory whose contents are lost when the computer is powered off (ex: RAM)

purpose of interrupts

helpful for handling asynchronous events, multitasking, and error handling

interrupt classes

program (illegal instruction)

timer

I/O

hardware failure

interrupt handler

determines nature of interrupt and performs necessary actions

program flow with and without interrupts

with interrupts, the processor can execute other instructions while waiting on something (like I/O); without interrupts, it sits idle until the operation completes

multiple interrupt handling

Approach 1: Disable interrupts while processing an interrupt

Approach 2: Use a priority scheme

calculation of EAT (Effective Access Time)

Ts = H*T1 + (1-H)*(T1 + T2)

where

Ts = average access time

H = hit ratio

T1 = access time of M1 (cache)

T2 = access time of M2 (main memory)
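A minimal sketch of the formula above in Python. The function name and the sample values (H = 0.95, T1 = 0.01 µs, T2 = 0.1 µs) are assumptions chosen only for illustration.

```python
def effective_access_time(hit_ratio: float, t1: float, t2: float) -> float:
    """Average access time: Ts = H*T1 + (1-H)*(T1 + T2)."""
    if not 0.0 <= hit_ratio <= 1.0:
        raise ValueError("hit_ratio must be between 0 and 1")
    # A hit costs T1; a miss costs T1 (failed cache lookup) plus T2 (main memory).
    return hit_ratio * t1 + (1.0 - hit_ratio) * (t1 + t2)


# Illustrative values only: H = 0.95, T1 = 0.01 us, T2 = 0.1 us
ts = effective_access_time(0.95, 0.01, 0.1)
print(f"Ts = {ts:.3f} us")
```

With a high hit ratio, Ts stays close to the cache access time even though main memory is ten times slower in this example.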

Instruction execution order

fetch instruction, then execute

Operating System

interface between applications and hardware that controls the execution of programs

basic elements of a computer

processor

I/O modules

Main memory

System Bus

system bus

provides communication between computer components

I/O modules

move data between computer and external environment

secondary memory

communication equipment

terminal

programmed I/O

I/O module performs the requested action and sets the appropriate bits in the I/O status register. It does not interrupt the processor, so the processor must periodically check the status of the I/O module

Interrupt-Driven I/O

I/O module interrupts processor when ready to exchange data

Direct Memory Access (DMA)

transfers a block of data directly to or from memory without going through the processor. Performed by a separate module on the system bus or incorporated into an I/O module

symmetric multiprocessors (SMP)

stand-alone computer system where

2+ processors

processors share memory, access to I/O

system controlled by one OS

high performance/scaling/availability

kernel

contains the most frequently used OS functions. The central component of the OS. Manages resources, processes, and memory

turnaround time

total time from submission of a process to its completion (waiting time plus execution time)

process switch

switching the processor from one process to another; requires saving and restoring the contents of the registers (aka context switch)

process

Instance of a program in execution; unit of activity that can be executed on a processor

3 components of a process

executable program

associated data needed by program

execution context

execution context

internal data OS can supervise/control

contents of registers

process state, priority, I/O wait status

5 OS management responsibilities

process isolation

automatic allocation + management

modular programming support

protection and access control

long-term storage

Application Binary Interface (ABI)

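standard for binary portability across programs; governs how a compiler builds an application. Defines the system call interface to the OS and the hardware resources available through the user Instruction Set Architecture (ISA)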

Instruction Set Architecture (ISA)

Set of machine instructions the CPU can execute. Serves as the interface between hardware and software

thread

a lightweight process that shares resources within a process. Dispatchable unit of work; includes a thread context

multithreaded process

process that is divided into multiple threads that can run concurrently

multiprogramming

the ability to keep several programs in memory at once and switch execution between them

degree of multiprogramming

number of concurrent processes allowed in main memory

goals of an OS

convenience

efficiency

evolution ability

manage computer resources

multitasking vs parallelism

multitasking runs multiple processes on one CPU by interleaving them, giving each process a share of CPU time. Parallel processing runs processes simultaneously on multiple cores.

activities associated with processes

creation, execution, scheduling, resource management

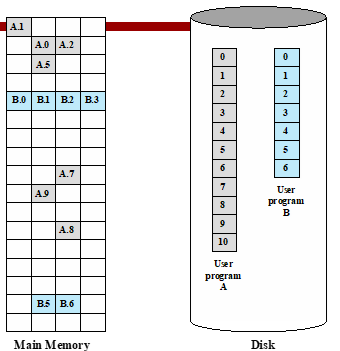

virtual memory

facility that lets a program address memory logically (with relative addresses), without regard to the amount of main memory physically available

paging

system in which a process's memory is divided into fixed-size blocks (pages) that are assigned to the process

microkernel architecture

assigns few essential functions to kernel

simple implementation

flexible

good for distributed environment

smaller than monolithic kernels

monolithic kernel

kernel where all components are in 1 address space. large and hard to design, but high performing

signal

mechanism to send message kernel→process (one process can also ask the kernel to signal another)

system call

mechanism to send message process→kernel

distributed operating system

provide illusion of

single main and secondary memory space

unified access facilities

object oriented OS

add modular extensions to small kernel

easy OS customizability

eases development of tools

5 process states

new

ready

running

blocked

exiting
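A small sketch of the five states above as a state machine. The transition labels (admit, dispatch, timeout, event wait, event occurs, release) are the usual ones for the five-state model and are not listed on the card; the `move` helper is hypothetical.

```python
# Common legal transitions in the five-state model (labels are assumptions).
TRANSITIONS = {
    ("new", "ready"): "admit",
    ("ready", "running"): "dispatch",
    ("running", "ready"): "timeout",
    ("running", "blocked"): "event wait",
    ("blocked", "ready"): "event occurs",
    ("running", "exiting"): "release",
}


def move(state: str, new_state: str) -> str:
    """Return new_state if the transition is legal, otherwise raise."""
    if (state, new_state) not in TRANSITIONS:
        raise ValueError(f"illegal transition: {state} -> {new_state}")
    return new_state


# Walk one process through a typical lifetime.
state = "new"
for nxt in ("ready", "running", "blocked", "ready", "running", "exiting"):
    label = TRANSITIONS[(state, nxt)]
    state = move(state, nxt)
    print(f"{label}: now {state}")
```

Note that a blocked process cannot go straight to running; it must return to ready and wait to be dispatched.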

blocked vs suspended

Blocked

waiting on event

can run once event happens

Suspended

able to run

instructed not to run

swapping

moving part or all of a process from main memory to disk

happens when OS runs out of physical memory

dispatcher

small program that switches processor between processes

ready queue

queue that stores processes ready to run (waiting for CPU time)
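A rough sketch of a dispatcher draining a ready queue. The time-slice (round-robin) policy, the function name, and the burst times are assumptions for illustration; the cards do not specify a scheduling policy.

```python
from collections import deque


def run_round_robin(bursts: dict, quantum: int) -> list:
    """Return the order in which processes receive the CPU."""
    ready = deque(bursts.items())  # ready queue of (name, remaining time)
    order = []
    while ready:
        name, remaining = ready.popleft()  # dispatcher picks the head
        order.append(name)
        remaining -= quantum               # process runs for one time slice
        if remaining > 0:
            ready.append((name, remaining))  # unfinished: back of the queue
    return order


order = run_round_robin({"A": 3, "B": 1, "C": 2}, quantum=1)
print(order)  # ['A', 'B', 'C', 'A', 'C', 'A']
```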

event queue

queue that manages and processes asynchronous events (ex: timers, I/O)

virtual machine approach (multicore)

dedicate 1 or more cores to a particular process and then leave those cores alone to devote their efforts to that process

preemption

suspending a running process to allow another process to run

process switch

7-step procedure to switch processes

save processor context

update PCB

move PCB to appropriate queue

select new process

update PCB

update memory data structures

restore processor context
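The seven steps above can be sketched as follows. This is a toy model, not how any real kernel is written: PCBs are plain dictionaries, the CPU is a dictionary of registers, and every field name (`context`, `page_table`, `reason`) is hypothetical.

```python
from collections import deque


def process_switch(cpu, running, ready_queue, blocked_queue, reason):
    """Switch the CPU away from `running`; return the PCB of the next process.

    Assumes the ready queue is non-empty when step 4 is reached.
    """
    # 1. save processor context (registers, including the PC)
    running["context"] = dict(cpu["registers"])
    # 2. update PCB of the outgoing process with its new state
    running["state"] = "blocked" if reason == "io" else "ready"
    # 3. move PCB to the appropriate queue
    target = blocked_queue if running["state"] == "blocked" else ready_queue
    target.append(running)
    # 4. select new process (here: simply the head of the ready queue)
    nxt = ready_queue.popleft()
    # 5. update PCB of the selected process
    nxt["state"] = "running"
    # 6. update memory management data structures
    cpu["page_table"] = nxt["page_table"]
    # 7. restore processor context of the selected process
    cpu["registers"] = dict(nxt["context"])
    return nxt


p1 = {"pid": 1, "state": "running", "context": {}, "page_table": "pt1"}
p2 = {"pid": 2, "state": "ready", "context": {"pc": 200}, "page_table": "pt2"}
cpu = {"registers": {"pc": 104}, "page_table": "pt1"}
ready, blocked = deque([p2]), deque()

current = process_switch(cpu, p1, ready, blocked, reason="io")
print(current["pid"], cpu["registers"], p1["state"])
```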

process image

process’s state at a given moment

user-level context

register context

system level context

process control block (PCB)

data needed by OS to control process

identifiers

user-visible registers

control and status register

scheduling

privileges

resources

memory management

role of PCB

contain info about process

read/modified by every module in OS

defines state of OS

hard to protect

User Running (process state)

Executing in user mode

Kernel Running (process state)

Executing in kernel mode

ready to run, in memory (process state)

ready to run as soon as the kernel schedules it

asleep in memory (process state)

unable to run until event occurs; process in main memory (blocked state)

ready to run, swapped (process state)

ready to run, but must be swapped into main memory

sleeping, swapped (process state)

process awaiting event and swapped out to secondary storage (blocked state)

preempted (process state)

able to run, but not running. The process is returning from kernel mode to user mode, but the kernel preempts it and does a process switch to schedule another process

created/new (process state)

process newly created; not ready to run. Parent has signaled desire for child but child is not allocated space nor in main memory yet

zombie (process state)

process no longer exists, but leaves a record for its parent process to collect

I/O bound processes

processes that spend a significant amount of time waiting for I/O responses

CPU bound processes

processes that spend almost all of their time computing on the CPU

User vs Kernel mode implementation

user mode requests services from OS through system calls and interrupts

User vs Kernel mode reasoning

protection

security

isolation

flexibility

When Kernel mode is used

applications act in user mode, until they need special access through system calls and interrupts

process creation steps

assign PID

allocate space

initialize PCB

set linkages

create/expand other data structures

Trap

error generated by current process

known as exception/fault

when process switches occur

timeout

I/O

system calls

interrupts

User level thread

thread management done by application

kernel not aware of threads

Kernel level thread

thread management done by kernel

benefits of threads

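threads are quicker to create, terminate, and switch between than processes; threads in the same process share memory, so they communicate more efficiently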

5 components of a thread

execution state

thread context

execution stack

storage

memory/resource access

thread execution states

ready

running

blocked

thread operations

spawn

block

unblock

finish

ULT pros and cons

pros:

doesn’t require kernel mode

works on any OS

cons:

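a blocking system call blocks all threads of the process

cannot take advantage of multiprocessing (the kernel sees one process and assigns it to one processor at a time)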

KLT pros and cons

pros:

can run multiple threads in parallel

can schedule new thread if thread is blocked

cons:

switching threads requires a mode switch to the kernel

OS specific

ULT vs KLT applications

ULT: web servers, games, user level applications

KLT: network services, device drivers, background applications

user vs kernel mode

User: most applications run here, restricted access, safer

Kernel: unrestricted access, dangerous

Amdahl’s law

the idea that speedup from adding processors has diminishing returns and does not scale linearly, because the serial part of a program limits it. Helps estimate how many processors are worth using
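A quick sketch of the usual statement of Amdahl's law, speedup = 1 / ((1 - f) + f/N), where f is the fraction of the program that can run in parallel and N is the number of processors. The formula is not on the card itself, and the value f = 0.9 is an assumption for illustration.

```python
def amdahl_speedup(parallel_fraction: float, processors: int) -> float:
    """Speedup = 1 / ((1 - f) + f / N)."""
    serial = 1.0 - parallel_fraction
    return 1.0 / (serial + parallel_fraction / processors)


# With f = 0.9, each doubling of N helps less; the limit is 1 / (1 - f) = 10.
for n in (1, 2, 4, 8, 16, 1024):
    print(n, round(amdahl_speedup(0.9, n), 2))
```

Past the point where the extra speedup per processor becomes negligible, adding processors is not worth the cost.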

Linux tasks

single-threaded process

thread

kernel tasks

Linux namespaces

separate views that process can have of the system

helps create the illusion that a process is the only process on the system

monitor

programming-language (PL) construct that provides the same functionality as semaphores but is easier to control

synchronization

enforce mutual exclusion

achieved by condition variables

special variables inside the monitor, operated on by wait and signal, that suspend or resume a process

message passing

needs synchronization and communication

has send and receive

both sender and receiver can be blocked
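A minimal sketch of blocking send and receive, using Python's `queue.Queue` as a one-slot mailbox shared by two threads. The `None` sentinel that ends the exchange and the names `sender`, `receiver`, and `mailbox` are assumptions for illustration.

```python
import queue
import threading

mailbox = queue.Queue(maxsize=1)  # holds at most one message
received = []


def sender():
    for msg in ("a", "b", "c"):
        mailbox.put(msg)   # send: blocks while the mailbox is full
    mailbox.put(None)      # sentinel: no more messages


def receiver():
    while True:
        msg = mailbox.get()  # receive: blocks while the mailbox is empty
        if msg is None:
            break
        received.append(msg)


threads = [threading.Thread(target=sender), threading.Thread(target=receiver)]
for t in threads:
    t.start()
for t in threads:
    t.join()

print(received)  # ['a', 'b', 'c']
```

The blocking calls give synchronization (the receiver cannot run ahead of the sender) and the queue itself carries the communication.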

addressing

schemes for specifying processes in send and receive

direct and indirect

readers/writers problem

data area shared among many processes

3 conditions

any number of readers may read at the same time

only 1 writer may write at a time

no reading while a writer is writing
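One way to enforce the three conditions above is a readers-preference lock. This is a sketch under that assumption (writers can starve if readers keep arriving); the class and method names are hypothetical.

```python
import threading


class ReadWriteLock:
    def __init__(self):
        self._readers = 0
        self._count_lock = threading.Lock()  # protects the reader count
        self._write_lock = threading.Lock()  # held by a writer or by the group of readers

    def acquire_read(self):
        with self._count_lock:
            self._readers += 1
            if self._readers == 1:          # first reader locks out writers
                self._write_lock.acquire()

    def release_read(self):
        with self._count_lock:
            self._readers -= 1
            if self._readers == 0:          # last reader lets writers in
                self._write_lock.release()

    def acquire_write(self):
        self._write_lock.acquire()          # excludes readers and other writers

    def release_write(self):
        self._write_lock.release()


lock = ReadWriteLock()
lock.acquire_read()
lock.acquire_read()  # a second reader enters without blocking
# Peek at the internal lock only to demonstrate that a writer would have to wait.
writer_got_in = lock._write_lock.acquire(blocking=False)
lock.release_read()
lock.release_read()
print(writer_got_in)  # False
```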

race condition

when multiple threads/processes read and write shared data items and the final result depends on the relative order of execution

mutual exclusion

requirement that while 1 process is in a critical section accessing a shared resource, no other process may be in a critical section that accesses the same resource
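A small sketch of mutual exclusion with `threading.Lock`: the increment of the shared counter is the critical section, and the lock ensures only one thread is inside it at a time. The thread count and iteration count are arbitrary choices for illustration.

```python
import threading

counter = 0
lock = threading.Lock()


def worker(n: int) -> None:
    global counter
    for _ in range(n):
        with lock:        # enter critical section
            counter += 1  # read-modify-write on shared data


threads = [threading.Thread(target=worker, args=(10_000,)) for _ in range(4)]
for t in threads:
    t.start()
for t in threads:
    t.join()

print(counter)  # 40000
```

Without the lock, the read-modify-write steps of different threads could interleave and the final count could depend on the order of execution, which is a race condition.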