Memory Management and Virtualisation

Memory Protection

A security mechanism that prevents processes from accessing unauthorized memory regions

Most hardware supports memory protection through an MMU (memory management unit)

This controller allows the OS to mark different parts of memory as accessible to different processes (and therefore not others)

This can be used to ensure programs don’t interfere with each other’s memory

Memory protection without hardware support can’t be enforced

It helps ensure that user-space programs can’t perform privileged operations reserved for the kernel

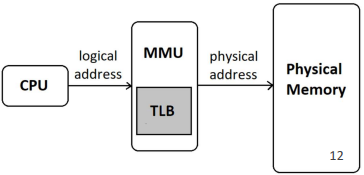

Memory Management Unit (MMU)

A hardware component in modern CPUs responsible for memory protection and address translation

Processes can only access their assigned memory

Virtual addresses are mapped to physical memory safely

It uses a mechanism such as paging or segmentation to map virtual addresses to physical addresses, allowing for efficient memory allocation

Unauthorized memory access is prevented

The OS can efficiently isolate and protect processes

Memory Protection using MMU

Through Virtual Memory & Address Translation:

Each process runs in its own virtual address space

Translates virtual addresses → physical addresses

This stops processes from addressing physical memory directly

Prevents one process from reading/writing another process’s data

Uses Page Tables to store mappings of virtual → physical addresses

Works with CPU privilege levels to separate user applications from the kernel
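A minimal sketch of how per-process page tables give isolation, written as a software model of what the MMU does in hardware. The names `page_tables`, `translate` and `ProtectionFault`, the 4 kB page size, and the read-only flag are all assumptions made for illustration.

```python
PAGE_SIZE = 4096  # assumed page size in bytes

class ProtectionFault(Exception):
    """Raised when a process touches memory it has no right to access."""

# Hypothetical per-process page tables:
# virtual page number -> (physical frame number, writable?)
page_tables = {
    "A": {0: (7, True), 1: (3, False)},
    "B": {0: (9, True)},
}

def translate(pid, vaddr, write=False):
    """Translate a virtual address for process `pid`, enforcing permissions."""
    vpn, offset = divmod(vaddr, PAGE_SIZE)
    entry = page_tables[pid].get(vpn)
    if entry is None:
        raise ProtectionFault(f"process {pid}: no mapping for page {vpn}")
    frame, writable = entry
    if write and not writable:
        raise ProtectionFault(f"process {pid}: page {vpn} is read-only")
    return frame * PAGE_SIZE + offset

# The same virtual address lands in different physical frames per process
addr_a = translate("A", 10)
addr_b = translate("B", 10)
```

Because each process can only reach frames listed in its own table, process B has no virtual address that resolves to a frame belonging to A.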

Virtual Memory

Implemented using both hardware (MMU) and software (OS)

It allows OS to use a combination of physical RAM and disk storage to provide a larger, virtual address space for programs

Enables processes to run even if the system does not have enough physical memory (RAM), by swapping data between RAM and a portion of the hard disk as needed

The OS divides memory into fixed-size blocks called "pages" (segmentation, by contrast, uses variable-size "segments")

It keeps the most frequently used pages in RAM, while less frequently used pages are stored on the hard disk

If a page is not in RAM, the OS swaps it in from the hard disk

OS manages this swapping process, and the user is unaware of it

Allow each process to think it is the only program using memory

Prevent processes from accessing regions of memory they are not allowed to access

Let processes use more memory than the machine physically has

Each process is given the entire virtual address space to itself

VM is important for improving system performance, multitasking, and using large programs

Efficiency of VM

Requires hardware support to be practical

Otherwise the OS has to examine every process instruction before running it

OS maintains a page table data-structure which maps regions of virtual address space for each process into physical address space

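The page number used to index the page table comes from the upper bits of each virtual address; the remaining lower bits are the offset within the page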
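A small sketch of how an address is split for a page table lookup, assuming 4 kB pages and a single-level table. `split_address` and the example `page_table` contents are hypothetical.

```python
PAGE_SIZE = 4096                          # assumed page size (4 kB)
OFFSET_BITS = PAGE_SIZE.bit_length() - 1  # 12 bits of offset for a 4 kB page

def split_address(vaddr):
    """Return (virtual page number, offset within the page)."""
    return vaddr >> OFFSET_BITS, vaddr & (PAGE_SIZE - 1)

# Hypothetical page table for one process: virtual page -> physical frame
page_table = {0x12: 0x5}

vpn, offset = split_address(0x12345)      # vpn = 0x12, offset = 0x345
paddr = (page_table[vpn] << OFFSET_BITS) | offset
print(hex(paddr))                         # prints 0x5345
```

Only the page number is translated; the offset is copied unchanged into the physical address.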

Page

Fixed-size block of data in VM

The traditional size is 4 kB, which is still the default on many systems; many modern CPUs also support larger pages (for example 2 MB or 4 MB) to suit larger memory sizes

Page Table

A data structure that maps virtual addresses to physical addresses, allowing the system to manage memory efficiently

TLB Translation Lookaside Buffer

A hardware cache of page table entries that is consulted automatically

A specialised cache within MMU that stores recent translations of virtual memory addresses to physical memory addresses, enabling faster memory access

Used to speed up the process of translating virtual addresses (used by programs) to physical addresses (used by the hardware)

When the CPU accesses a virtual address, the MMU automatically maps it to the corresponding physical address

This allows the program to be written with virtual memory addresses but access physical ones

Every time the OS switches between processes, it has to make sure the TLB only holds mappings that belong to the process about to run
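A software model of a TLB sitting in front of a page table, to show where hits and misses come from. Real TLBs are hardware; the `TLB` class, its capacity of 2, and the least-recently-used replacement policy are assumptions chosen to keep the sketch short.

```python
from collections import OrderedDict

PAGE_SIZE = 4096  # assumed page size in bytes

class TLB:
    """Tiny cache of recent virtual page -> physical frame translations."""

    def __init__(self, page_table, capacity=2):
        self.page_table = page_table
        self.capacity = capacity
        self.entries = OrderedDict()  # least recently used entry first
        self.hits = 0
        self.misses = 0

    def translate(self, vaddr):
        vpn, offset = divmod(vaddr, PAGE_SIZE)
        if vpn in self.entries:
            self.hits += 1                    # fast path: TLB hit
            self.entries.move_to_end(vpn)
        else:
            self.misses += 1                  # slow path: page table lookup
            self.entries[vpn] = self.page_table[vpn]
            if len(self.entries) > self.capacity:
                self.entries.popitem(last=False)  # evict the oldest entry
        return self.entries[vpn] * PAGE_SIZE + offset

tlb = TLB({0: 5, 1: 8, 2: 2})
for vaddr in [0, 4, 4096, 8, 8192, 0]:
    tlb.translate(vaddr)
print(tlb.hits, tlb.misses)  # prints 3 3
```

Repeated accesses to the same page hit in the TLB, while the first touch of each page has to go to the page table.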

TLB Hit

Occurs when the required virtual address translation is already present in the TLB

Fast access → The CPU retrieves the physical address directly from the TLB

No need to access the page table → Reduces memory access time

TLB Miss

Occurs when the required virtual address translation is not found in the TLB

Slow access → The CPU must search the page table in memory to find the physical address

Extra memory access → Increases execution time

If the page table has no valid mapping for the address either, this causes a CPU exception (a page fault), which automatically switches to run the OS’s page fault code

The OS then decides what to do. The fault could be due to either:

The process has tried to access a virtual address it does not have access to. Typically the OS will then kill the process. On Linux, the process is sent a segmentation fault signal (SIGSEGV)

Alternatively, it could be a virtual address which does exist but doesn’t have a current mapping…

Performance Impact of TLB Hits and Misses

More TLB hits → Faster memory access and better performance

Frequent TLB misses → Increased memory access time (due to page table lookup)

To reduce TLB misses, OS and hardware use techniques like:

Larger TLB size (more entries stored)

Superpages (mapping larger memory chunks to reduce TLB entries)

TLB Hit → The CPU finds the virtual-to-physical address mapping in the TLB → Fast memory access

TLB Miss → The CPU does not find the mapping in the TLB → Page table lookup is required → Slower access
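A quick arithmetic sketch of why superpages help: the amount of memory a full TLB can cover ("TLB reach") is the number of entries times the page size. The 64-entry TLB and the 4 kB and 2 MB page sizes are assumed figures, not taken from any particular CPU.

```python
KIB = 1024
MIB = 1024 * KIB

def tlb_reach(entries, page_size):
    """Bytes of memory covered when every TLB entry maps one page."""
    return entries * page_size

small_pages = tlb_reach(64, 4 * KIB)   # 256 KiB covered
superpages = tlb_reach(64, 2 * MIB)    # 128 MiB covered
print(small_pages // KIB, "KiB vs", superpages // MIB, "MiB")
```

With the same number of entries, larger pages cover far more memory, so a program with a large working set misses less often.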

Page Swapping

A memory management technique where the OS moves entire blocks of memory (pages) between RAM and the hard disk

If physical RAM is full, the OS moves (swaps) inactive pages of memory from RAM to the hard disk (swap space or page file)

This allows the system to free up RAM for active processes while still keeping inactive pages available for later retrieval
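A toy model of page swapping, with RAM that holds a fixed number of pages and a dictionary standing in for swap space. Treating the least recently used page as the "inactive" one is an assumption; real operating systems use their own replacement policies. The `Memory` class and the `data-...` placeholder contents are hypothetical.

```python
from collections import OrderedDict

class Memory:
    """RAM with a fixed number of frames, backed by swap space."""

    def __init__(self, num_frames):
        self.num_frames = num_frames
        self.ram = OrderedDict()   # page -> contents, least recently used first
        self.swap = {}             # pages currently swapped out to disk

    def access(self, page):
        if page in self.ram:
            self.ram.move_to_end(page)        # mark as recently used
            return self.ram[page]
        if len(self.ram) >= self.num_frames:  # RAM is full: swap a page out
            victim, contents = self.ram.popitem(last=False)
            self.swap[victim] = contents
        # Swap the page in (or create it on first use)
        self.ram[page] = self.swap.pop(page, f"data-{page}")
        return self.ram[page]

mem = Memory(num_frames=2)
for page in ["a", "b", "a", "c"]:
    mem.access(page)
print(list(mem.ram), list(mem.swap))  # prints ['a', 'c'] ['b']
```

Page `b` was the least recently used when `c` arrived, so it is the one moved to swap; accessing `b` again would bring it back and push another page out.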

Pros and Cons of Page Swapping

Pros

Allows more processes to run simultaneously

Prevents immediate crashes when RAM is full

Cons

Accessing swap space is much slower than accessing RAM

Excessive swapping can slow down the system significantly

TLB Miss and Swapped-Out Pages

If a process requests a page, but the page is swapped out to disk, the TLB will not have a valid translation

The MMU finds no valid mapping in the page table and raises a page fault; the OS then determines that the page has been swapped out to disk

The OS loads the page from disk to RAM, updates the page table, and adds the mapping to the TLB
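The steps above can be sketched as a simplified page fault handler. Everything here is a software stand-in: `page_table`, `swap_space`, `ram`, `tlb`, `free_frames` and the `SegmentationFault` exception are hypothetical names, and the sketch assumes a free frame is always available.

```python
class SegmentationFault(Exception):
    """Stand-in for the OS killing a process that used an invalid address."""

page_table = {
    0: {"present": True, "frame": 4},
    1: {"present": False, "frame": None},   # swapped out to disk
}
swap_space = {1: b"saved page contents"}
ram = {4: b"resident page"}
tlb = {}
free_frames = [6]

def handle_page_fault(vpn):
    entry = page_table.get(vpn)
    if entry is None:                        # address the process does not own
        raise SegmentationFault(f"invalid access to page {vpn}")
    frame = free_frames.pop()                # assumes a free frame exists
    ram[frame] = swap_space.pop(vpn)         # load the page from disk to RAM
    entry["present"], entry["frame"] = True, frame   # update the page table
    tlb[vpn] = frame                         # add the mapping to the TLB
    return frame

def access(vpn):
    if vpn in tlb:                           # TLB hit
        return tlb[vpn]
    entry = page_table.get(vpn)              # TLB miss: consult the page table
    if entry is None or not entry["present"]:
        return handle_page_fault(vpn)
    tlb[vpn] = entry["frame"]
    return entry["frame"]

frame = access(1)   # page 1 is swapped out, so this goes through the handler
```

After the call, page 1 is resident in frame 6 and a second `access(1)` is served straight from the TLB.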

TLB Flush on Context Switches & Swaps

When a process is swapped out, its memory pages are removed from RAM, and their TLB entries become invalid

The TLB must be cleared (flushed) so that outdated mappings are not used when another process runs
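A short sketch of why the flush matters, assuming a TLB with no per-process tags so that entries from one process would otherwise be visible to the next. The `CPU` class and its methods are hypothetical.

```python
class CPU:
    """Toy CPU with one untagged TLB shared by all processes."""

    def __init__(self, page_tables):
        self.page_tables = page_tables   # pid -> {virtual page: frame}
        self.current = None
        self.tlb = {}

    def context_switch(self, pid):
        self.current = pid
        self.tlb.clear()                 # flush: drop the old process's mappings

    def lookup(self, vpn):
        if vpn not in self.tlb:          # miss: refill from the page table
            self.tlb[vpn] = self.page_tables[self.current][vpn]
        return self.tlb[vpn]

cpu = CPU({"A": {0: 7}, "B": {0: 9}})
cpu.context_switch("A")
frame_a = cpu.lookup(0)    # 7
cpu.context_switch("B")
frame_b = cpu.lookup(0)    # 9; without the flush this would still return 7
```

Both processes use virtual page 0, so a stale entry would silently hand process B a frame that belongs to process A.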

Performance Impact (Swapping and TLB)

Frequent Swapping → More TLB Misses → More Page Table Lookups → Slower Performance

Efficient Memory Management → Reduces Swaps → Improves TLB Hit Rate

Optimisation Techniques:

Increase RAM → Reduces swapping

Use Larger TLB → Reduces TLB misses

Limitations of Virtual Memory

Slower Access Time - slower performance due to disk swapping

Memory access time is measured in nanoseconds (ns) for RAM, whereas disk access can take milliseconds (ms)

The lower the access time, the faster the data retrieval process

Increased Complexity

Requires a complex memory management system to map virtual addresses to physical addresses. This adds overhead to the OS and makes it harder to get right

Requires hardware support, such as MMU

Factors that Affect Memory Access Time

Cache memory: Stores copies of frequently accessed data from main memory, allowing the CPU to access that data faster

Page table access: Each lookup after a TLB miss needs extra memory accesses, which raises the average memory access time

TLB efficiency: A higher TLB hit rate lowers the average memory access time
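A rough worked example of how the TLB hit rate feeds into average memory access time. It assumes a single-level page table, so a miss costs one extra memory access, and the 1 ns TLB and 100 ns memory timings are illustrative numbers only. `effective_access_time` is a hypothetical helper.

```python
def effective_access_time(hit_rate, tlb_ns=1.0, mem_ns=100.0):
    """Average time per memory access, in nanoseconds."""
    hit_cost = tlb_ns + mem_ns        # TLB lookup + the data access
    miss_cost = tlb_ns + 2 * mem_ns   # TLB lookup + page table read + data access
    return hit_rate * hit_cost + (1 - hit_rate) * miss_cost

for rate in (0.99, 0.80):
    print(rate, round(effective_access_time(rate), 2))
```

Under these assumptions a 99% hit rate gives about 102 ns per access and an 80% hit rate about 121 ns, so even a modest drop in hit rate is visible in the average.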