Psych 120B Object recognition/motion/depth

73 Terms

viewpoint invariance

The ability to recognize an object regardless of the viewpoint one has observing the object

Ex. face experiment: face at different angles could be recognized

Ex. Greebles: recognition takes longer at large angles, so it is not fully viewpoint invariant

Some tasks show viewpoint invariance; some objects don't have it

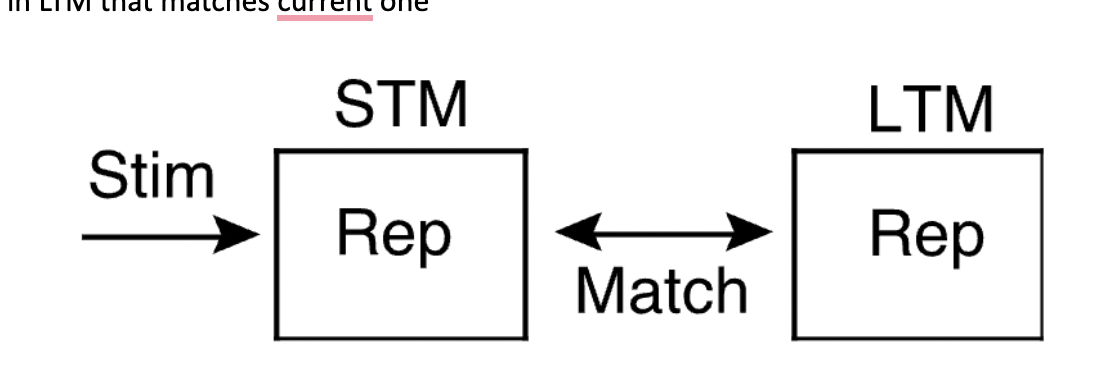

Steps of Object recognition

Steps: represent the physical stimulus in short-term memory (STM), then find the representation in LTM that matches the current one

What are the theories of object recognition

Template theory, feature detection theory, structural descriptions

Template Theory

Representations are mental images (LTM stores templates)

A good match to a template = the object is recognized

Matching process is correlational: highest correlating template wins
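
The correlational matching step can be sketched roughly as follows. This is only an illustration: the 3x3 binary "images", the template labels, and the function names `correlation` and `recognize` are all hypothetical, and Pearson correlation is assumed as the similarity measure.

```python
from math import sqrt

def correlation(a, b):
    """Pearson correlation between two equal-length lists of pixel values."""
    n = len(a)
    mean_a = sum(a) / n
    mean_b = sum(b) / n
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    norm_a = sqrt(sum((x - mean_a) ** 2 for x in a))
    norm_b = sqrt(sum((y - mean_b) ** 2 for y in b))
    return cov / (norm_a * norm_b)

def recognize(image, templates):
    """Return the label of the template that correlates best with the image."""
    return max(templates, key=lambda label: correlation(image, templates[label]))

# Hypothetical templates stored in LTM: 3x3 binary images, flattened row by row.
TEMPLATES = {
    "T": [1, 1, 1,
          0, 1, 0,
          0, 1, 0],
    "L": [1, 0, 0,
          1, 0, 0,
          1, 1, 1],
}

# A degraded "T" (bottom pixel missing) still correlates best with the T template.
noisy_t = [1, 1, 1,
           0, 1, 0,
           0, 0, 0]
print(recognize(noisy_t, TEMPLATES))
```

Every new view of an object would need its own entry in `TEMPLATES`, which is the storage problem listed under the disadvantages.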

Disadvantages of Template Theory

Disadvantages: too many views are possible, which would require too many templates in LTM

Adding a transformation step (rotate/change the input to match a template) still does not give enough invariance

Feature Detection Theory

define smaller features necessary to be in a category

What are the steps of the Pandemonium Model of feature detection theory

1. Image demon: receives sensory input

2. Feature demons: decode/determine specific features (ex. break down R into a vertical line, a curve, ...)

3. Cognitive demons: “shout” when receiving certain combinations of features (ex. get info from the feature demons to determine R)

4. Decision demon: listens for the loudest shout in the pandemonium to identify the input
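
The four demon stages can be sketched as a small pipeline. This is a toy version under stated assumptions: the feature names, the three letters, and the shout score (matched features minus missing features) are hypothetical choices, not part of the model as given in the notes.

```python
# Hypothetical feature sets each cognitive demon listens for.
COGNITIVE_DEMONS = {
    "R": {"vertical line", "closed curve", "diagonal line"},
    "P": {"vertical line", "closed curve"},
    "D": {"vertical line", "open curve"},
}

def image_demon(stimulus):
    """Step 1: receive the sensory input (here, a set of strokes) unchanged."""
    return set(stimulus)

def feature_demons(image):
    """Step 2: each feature demon reports whether its feature is present."""
    known = set().union(*COGNITIVE_DEMONS.values())
    return {feature for feature in known if feature in image}

def cognitive_demons(features):
    """Step 3: each letter demon shouts louder the better its features match."""
    return {
        letter: len(wanted & features) - len(wanted - features)
        for letter, wanted in COGNITIVE_DEMONS.items()
    }

def decision_demon(shouts):
    """Step 4: pick the loudest shout."""
    return max(shouts, key=shouts.get)

def identify(stimulus):
    return decision_demon(cognitive_demons(feature_demons(image_demon(stimulus))))

print(identify({"vertical line", "closed curve", "diagonal line"}))
print(identify({"vertical line", "closed curve"}))
```

Information only flows from input to decision here, so nothing in the sketch lets context change the answer.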

Disadvantages of Pandemonium Model

Problem: as a bottom-up (data-driven) model, it can’t explain how context influences perception

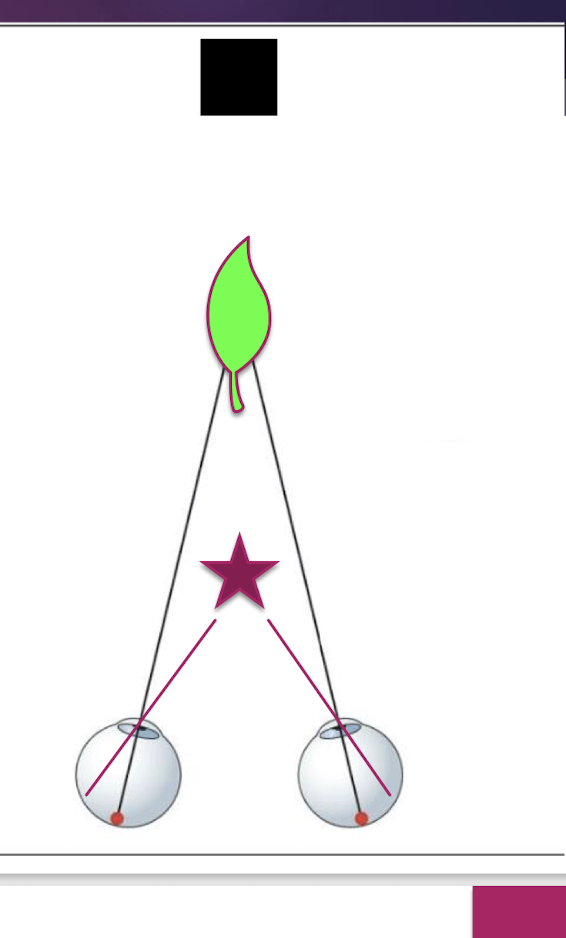

Structural Descriptions:

Representations by structural components (ex. A = long segment at 45° angles to short segments)

Geons

What are geons and their components/purpose

Geons: geometric elements of which all objects are composed (36 different shapes; easily discriminated; viewpoint invariant, so they look the same at different angles; robust to noise, so they are still recognizable when blurry)

Volumetric representation: geons allow partial encoding of the 3D structure of objects, so objects are recognized based on their components

Strength of Geons/Structural Descriptions

recognize partially occluded objects if geons can be determined, easier to recognize with corners

Weaknesses of Geons/Structural Descriptions

A structural description alone is not enough: metric info is also needed; structural descriptions are ambiguous (most often there are several candidates); there is no mechanism for contextual influences on object recognition

How is sensitivity to motion measured

retinal velocity (how fast an image's position is changing on retina)

Subject-relative motion:

empty field with no background reference = 10-20 min of arc/sec (slowest motion that can be detected) = less sensitive to motion

Object-relative motion (with background reference)

1-2 min of arc/sec = more sensitive with a background

Retinal displacement:

when position of object’s image on retina changes

Optical pursuit

track a moving object with eye (stays in one place in retina)

apparent motion

Images flash on/off in separate locations with a certain timing: nothing really moves, but it is perceived as motion

Gives sense of timing perceptual system needs to perceive motion

What spatial disparity and timing between the stimuli are needed to perceive apparent motion

Spatial disparity: distance between the stimuli. Timing between the stimuli (S): S < 60 ms looks simultaneous; S = 60-200 ms looks like motion; S > 200 ms looks like succession, not movement
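
The timing ranges can be written as a small lookup. This is a minimal sketch: the function name `apparent_motion_percept` is hypothetical, and treating exactly 60 ms and exactly 200 ms as "motion" is an assumption, since the card does not say which side the boundaries fall on.

```python
def apparent_motion_percept(interval_ms):
    """Map the time between two flashes (in ms) to the percept from the card."""
    if interval_ms < 60:
        return "simultaneous"
    if interval_ms <= 200:  # boundary handling is an assumption
        return "motion"
    return "succession"

for interval in (30, 100, 300):
    print(interval, "ms ->", apparent_motion_percept(interval))
```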

Indirect Perception Theory

motion is not a basic perceptual quality; it is derived from other things (position, timing)

Direct Perception Theory

motion is a basic perceptual quality that the system is wired to detect; you don't need to derive it from other things (position, timing). Considered correct based on Exner's and Wertheimer's experiments

Exner experiment

(timing): we can detect motion before we have timing info = direct theory

Wertheimer’s experiment

we have phi, or objectless motion, so we don’t need position = direct theory

Reichardt Detectors

Network of cells for motion detection: a model of how the brain detects motion by comparing signals from two neighboring sensors (A and B), where the signal from the first sensor is delayed by an added interneuron (D) before being compared to the signal from the other

The signals from A and B have to reach the comparison cell (M) at the same time for it to fire

Multiple Reichardt detectors allow motion tracking for longer distances

Direction specific: only fire for detection in one direction
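
A single detector can be simulated with discrete time steps. This is a rough sketch, assuming binary sensor signals and a fixed delay measured in time steps; the function name `reichardt_response` and the example signals are hypothetical.

```python
def reichardt_response(a, b, delay):
    """Output of cell M over time: fires (1) only when the delayed signal
    from sensor A and the current signal from sensor B arrive together."""
    out = []
    for t in range(len(b)):
        # Interneuron D holds A's signal back by `delay` time steps.
        delayed_a = a[t - delay] if t - delay >= 0 else 0
        out.append(1 if delayed_a and b[t] else 0)
    return out

# A spot moving A -> B: A is stimulated at t=0, B at t=2.
rightward = reichardt_response(a=[1, 0, 0, 0], b=[0, 0, 1, 0], delay=2)

# The same spot moving B -> A: B is stimulated at t=0, A at t=2.
leftward = reichardt_response(a=[0, 0, 1, 0], b=[1, 0, 0, 0], delay=2)

print(rightward)  # M fires once, when the delayed A signal meets B
print(leftward)   # M stays silent: the detector is direction specific
```

The delay also makes the detector speed tuned: with `delay=2`, a spot that reaches B after one step or three steps would not make M fire.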

How opponent processing explains motion aftereffects

Two Reichardt detectors, one for the rightward direction (excitatory) and one for the leftward direction (inhibitory): the perceived motion is the sum of activation from the opposite-direction detectors

-one detector becomes fatigued; when the motion stops, the balance becomes uneven, so motion in the opposite direction is seen
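
The opponent balance can be illustrated with made-up firing rates. This is only a sketch: the baseline rate, the stimulus drive, and the size of the fatigue effect are hypothetical numbers chosen to show the sign of the effect.

```python
BASELINE = 10  # hypothetical resting activity of each detector
DRIVE = 20     # hypothetical extra activity while a detector's direction is shown
FATIGUE = 4    # hypothetical drop below baseline after prolonged stimulation

def perceived_direction(right_activity, left_activity):
    """Opponent comparison: rightward counts as positive, leftward as negative."""
    net = right_activity - left_activity
    if net > 0:
        return "right"
    if net < 0:
        return "left"
    return "none"

# Before adaptation, stationary scene: both detectors at baseline.
before = perceived_direction(BASELINE, BASELINE)

# While watching rightward motion: the rightward detector is driven.
during = perceived_direction(BASELINE + DRIVE, BASELINE)

# After the motion stops: the fatigued rightward detector sits below baseline.
after = perceived_direction(BASELINE - FATIGUE, BASELINE)

print(before, during, after)
```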

Define the aperture problem and describe how the visual system solves this problem

When motion is viewed through a small hole (aperture), the actual direction of motion is unclear because not all of the edges are visible. A single detector sees only a small part of space, so it can't determine the direction on its own

Solution: combine multiple apertures using global motion detectors, which are cells that combine the input of multiple motion detectors

Motion specific regions in the brain

MST and V5

Describe how the brain distinguishes self- and object-motion

Eye movement versus object movement: Reichardt detectors can’t determine this on their own and need external info

The motor system sends out two copies of its signal:

One goes to the eye muscles, causing them to move

The other is the efference copy: it goes to a region in the visual system called the comparator, which knows about visual motion and compensates for image changes caused by eye movement, inhibiting other parts (determines if the eye moves or the world is moving)

What are the two copies of the signal that the motor system sends out to distinguish between eye movement and object movement

Motor signal: one copy goes to the eye muscles, causing them to move

The other is the efference copy (eye movement): it goes to a region in the visual system called the comparator, which knows about visual motion and compensates for image changes caused by eye movement, inhibiting other parts (determines if the eye moves or the world is moving)

What signals are sent ONLY if eye moves not object

Efference copy + image movement signal

What signals are sent if the Object moves and eye does not

Only image movement signal
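
The comparator's decision for these cases can be sketched as a small truth table. The function name `comparator` and the output strings are hypothetical. The two cases with an image movement signal follow the cards above; the case with an efference copy but no image movement (the eye tracking a moving object) is not covered by the cards and is included as an assumption.

```python
def comparator(efference_copy, image_movement):
    """Interpret retinal motion using the efference copy.

    efference_copy: True if the eyes were commanded to move.
    image_movement: True if the image moved across the retina.
    """
    if efference_copy and image_movement:
        # The image shift is explained by the eye movement, so it is cancelled.
        return "eye moved, world is stationary"
    if image_movement:
        # Nothing explains the image shift, so something out there moved.
        return "object moved"
    if efference_copy:
        # Assumption (not on the cards): eye moved but the image stayed put,
        # as when tracking a moving object.
        return "object moved (tracked by the eye)"
    return "no motion"

print(comparator(efference_copy=True, image_movement=True))
print(comparator(efference_copy=False, image_movement=True))
```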

Self-motion:

movement of body rather than eyes(optic flow, focus of expansion, radial expansion)

Optic flow:

body moves through space, creates distinct visual pattern as perspective changes

Focus of expansion:

point you are heading to

Radial expansion

objects you are moving closer to and past expand outward

Structure-from-motion

ability to extract object structure from moving displays (ex. moving dots turn into a cylinder)

Rigid motion

describes motion of an object in space during which there are no changes in the distances between any two points on the object - allows for rotation and translation of the object (a moving cube won’t stretch; it is rigid)

Non-rigid motion:

biological motion (elastic, and jointed)

Size constancy:

understanding that an object's actual size does not change even when the size of its retinal projection changes

Metric depth cue

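Distance: specifies depth in physical units

Non-metric depth cue

Depth order: only specifies whether something is closer, farther, or at the same depth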

Binocular depth cues:

relies on info from both eyeballs

Monocular depth cue

relies on info from one eye

Four types of depth cues

Kinematic, stereoscopic, oculomotor, pictorial

Kinematic info

depth cues emerging from moving objects

Types of Kinematic info

Motion perspective/parallax, optical expansion/contraction, accretion/deletion of texture

Motion perspective/parallax(type of cue, def, and metric, binocular or monocular)

Type: Kinematic

Def: when the observer moves, the displacement of an object’s image on the eye depends on distance - things closer to your eye move faster than farther objects (train example)

Metric: relative metric

-monocular

Optical expansion/contraction(type of cue,def,binocular or monocular)

type: kinematic

def: when an object approaches, its image expands.

-monocular

Tau: the ratio of the retinal size of an approaching object to the rate at which that size is changing (proportional to the time to collision)

Tau

-part of Optical expansion/contraction

the ratio of the retinal size of an approaching object to the rate at which that size is changing (proportional to the time to collision)
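
A worked example can show why tau tracks time to collision. This is a sketch under stated assumptions: constant approach speed, a small-angle approximation for retinal size, and a hypothetical object (0.5 m wide, 10 m away, approaching at 5 m/s). Under those assumptions tau comes out equal to distance divided by speed.

```python
def tau(retinal_size, expansion_rate):
    """Tau = retinal size / rate of change of retinal size (result in seconds)."""
    if expansion_rate <= 0:
        raise ValueError("the image must be expanding (object approaching)")
    return retinal_size / expansion_rate

# Hypothetical approaching object.
size_m, distance_m, speed_m_s = 0.5, 10.0, 5.0

# Small-angle approximation: retinal size (radians) is about size / distance.
theta = size_m / distance_m
# Rate at which that angle grows as the distance shrinks (radians per second).
theta_rate = size_m * speed_m_s / distance_m ** 2

print(tau(theta, theta_rate))    # computed from the retinal image only
print(distance_m / speed_m_s)    # true time to collision, for comparison
```

The observer never needs to know the object's size, distance, or speed separately: both inputs to `tau` are available from the retinal image.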

accretion/deletion of texture(type of cue, def)

type:kinematic

def:when a surface moves relative to another surface, the nearer surface progressively occludes background texture on the farther surface

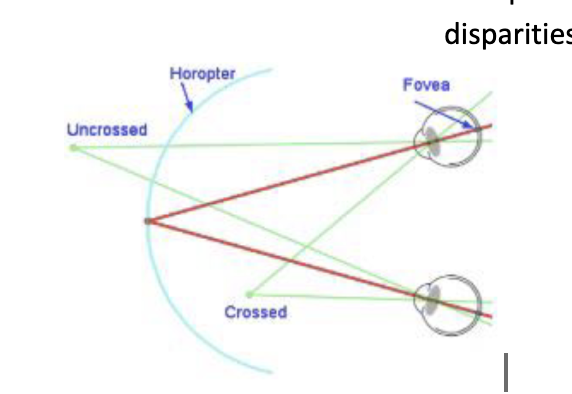

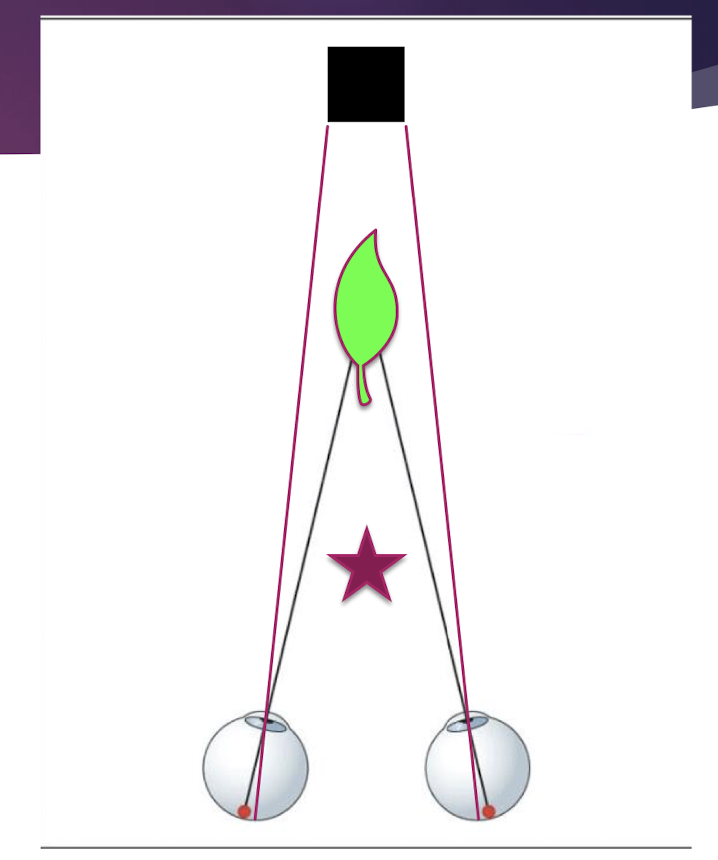

Stereoscopic information

depth cues emerging from discrepancies in the retinal images projected to both eyes

Types of Stereoscopic information

Binocular disparity

Binocular disparity(type, def, metric, binocular/monocular)

Type: stereoscopic

def: the differences between the two eyes' views of an object - relative metric

Horopter: sets of points in the world having identical binocular disparities

Horopter

sets of points in the world having identical binocular disparities

Crossed disparity

occurs for points in front of (within) the horopter; indicates that a point is nearer to the observer than the point being fixated

Oculomotor info

depth cues emerging from motion of the eyeballs in the skull

Types of Oculomotor info

Accommodation, Convergence

Accommodation(type of cue, def,metric type)

type: oculomotor

def: changing the shape of the lenses to help focus the eye - movement of lens controlled by ciliary muscles (contract = lens thick = focus on near; relax = lens stretch = focus on far)

-metric

Convergence(type, def, metric type)

Type: oculomotor

def: turning the eyes inward in order to focus on closer objects

-metric

Divergence: turning the eyes outward to focus on farther objects

Pictorial info

largely monocular cues that can be used by one eye and when the object is static

Types of monocular cues

Occlusion: one object blocks another - non-metric

Linear perspective: lines that are parallel and move away will converge (road)

Texture gradient: textures closer will be larger than textures far away

Familiar size: used for objects whose size is known - metric

Relative size: how the size of object compares to other objects in the scene - relative metric

Relative height: more distant object = closer to horizon (higher) - relative metric

Aerial perspective: because of scattered light, objects in the distance have a blue tint and are blurry/fuzzy

What are metric cues

cues that specify depth in physical units (horse is 20 feet away)

Relative metric:

depth can only be specified in terms of ratios between the depths of different points in the scene (horse is 2x farther)

Non-metric

can only specify depth relative to another object as being closer, farther, or same depth (horse is farther away)

Generic viewpoint

the view you get from most viewpoints - by unconscious inference, the visual system assumes the viewpoint is generic (what makes the most sense)

Accidental viewpoints:

viewpoints that provide a percept but are not generally representative of the structure of a scene (Wouldn’t exist from any other position)

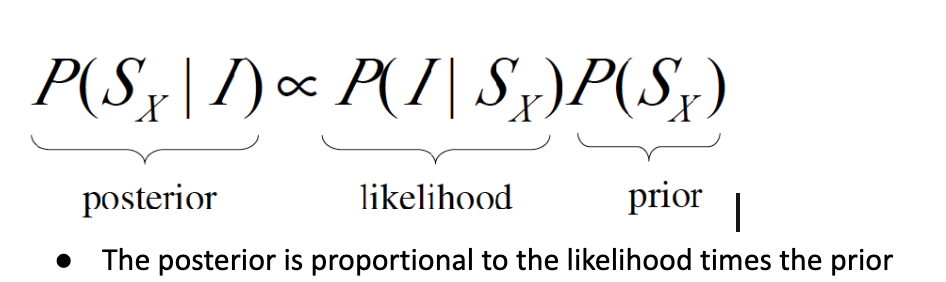

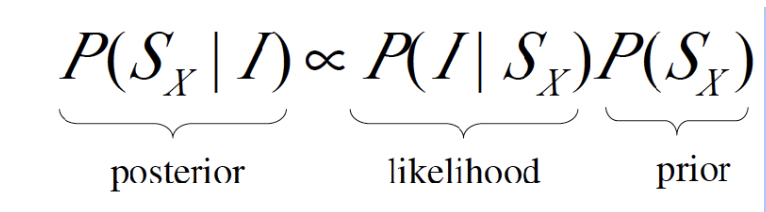

Bayes Law

showing how prior beliefs combine with data likelihood to form a more accurate "posterior" probability

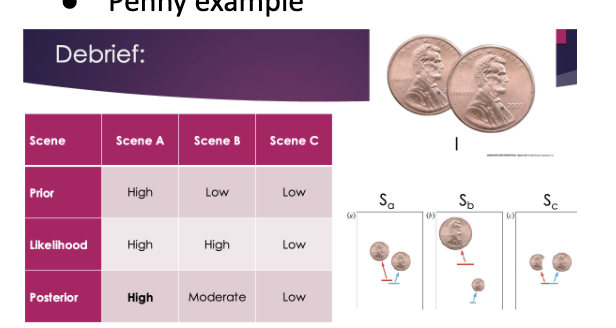

Parts of the Bayes Law probability

Likelihood: probability that you will see the image given a particular scene is present (is viewpoint generic or accidental)

Prior: probability that a particular scene occurs at all (giant penny)

Posterior: solution to equation, probability that a scene is present in the real world

Bayesian processing

nervous system chooses scene that has highest probability using sensory evidence and prior knowledge of the world
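
The way prior and likelihood combine can be sketched with the giant-penny idea. All numbers are hypothetical: both scenes are assumed to produce the same retinal image (equal likelihoods), and the prior for a giant penny is assumed to be very low. The function name `posteriors` is also an assumption.

```python
def posteriors(scenes):
    """Bayes' law: posterior is proportional to likelihood * prior, normalized
    so that the posteriors over all candidate scenes sum to 1."""
    unnormalized = {
        name: s["likelihood"] * s["prior"] for name, s in scenes.items()
    }
    total = sum(unnormalized.values())
    return {name: value / total for name, value in unnormalized.items()}

# Hypothetical candidate scenes for the same retinal image of a penny.
SCENES = {
    "normal penny, nearby": {"likelihood": 0.5, "prior": 0.99},
    "giant penny, far away": {"likelihood": 0.5, "prior": 0.01},
}

post = posteriors(SCENES)
best = max(post, key=post.get)  # the scene the nervous system would choose
print(post)
print(best)
```

Because the image is equally likely under both scenes, the prior alone decides the outcome here; an accidental viewpoint would instead show up as a low likelihood.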

Penny Example

Uncrossed disparity:

occurs for objects that are beyond the horopter

-image is more medial (towards the midline) on both retinas compared to an object in the same direction from the viewer

-square in the image

First order motion

clear luminance-defined edges on an object that help you detect motion

-stepping feet illusion, beta motion, phi motion

Second order motion

motion where you don’t have that luminance edge - you need changes in the surface texture of two surfaces to spot the motion (video example)