Design Web Crawler

4 Terms

1

Functional/Non-functional

Functional: crawls websites for a specific purpose (search indexing, web archival, web monitoring, etc.).

Non-functional: scalable, robust, extensible, polite.

2

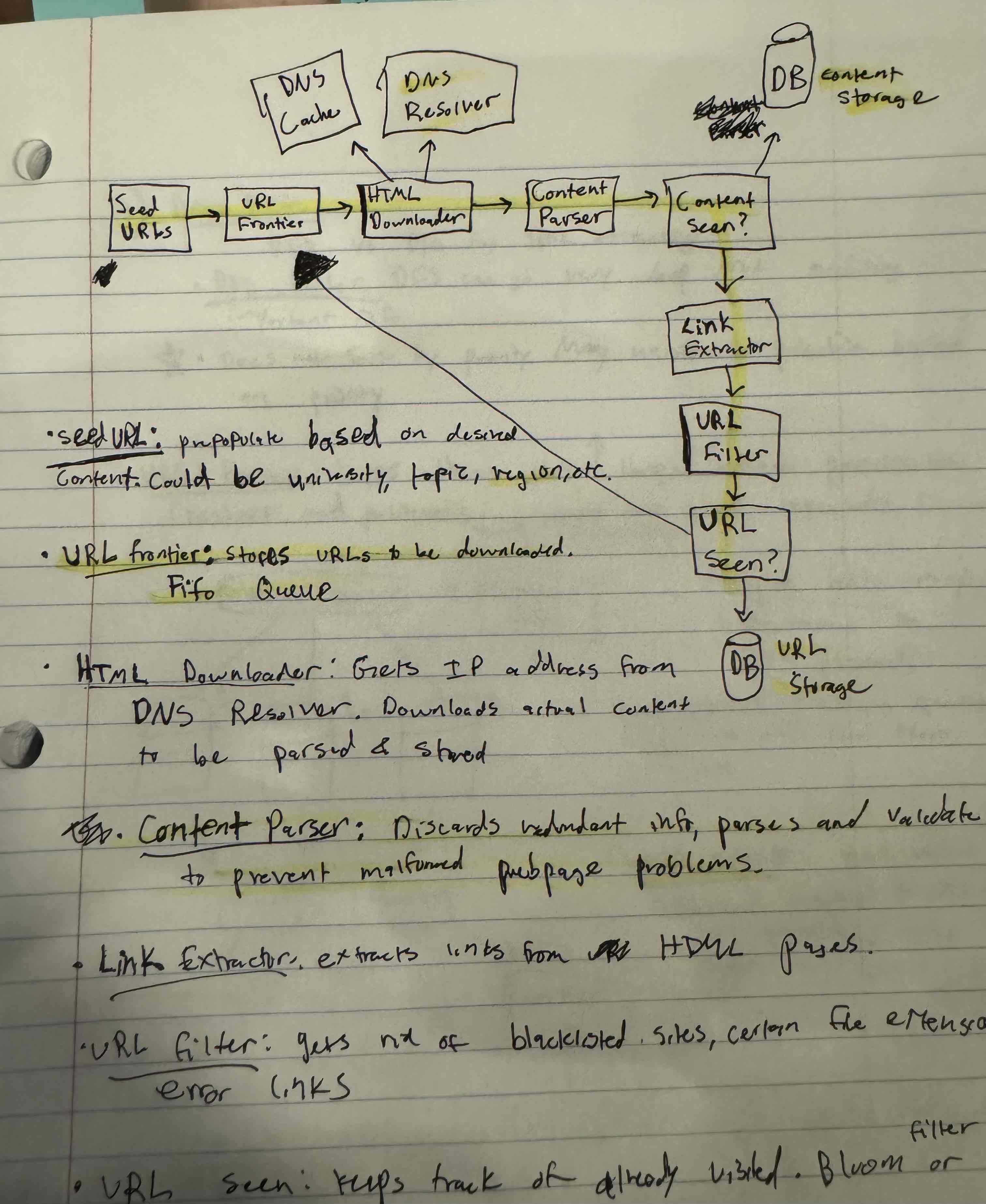

High level diagram

3

Deep Dive (non-diagram)

Enforcing Politeness: Use robots.txt (the file a site uses to tell web crawlers which URLs to exclude).
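A minimal sketch of a robots.txt check, using Python's standard `urllib.robotparser`. The robots.txt body, the `example-crawler` user agent, and the URLs are made up for illustration; a real crawler would fetch the file from each host and cache it. `Crawl-delay` is a non-standard directive that some sites publish, included here only as an assumption.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt body; a real crawler would download this per host.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Crawl-delay: 2
"""

USER_AGENT = "example-crawler"  # hypothetical crawler name


def build_parser(robots_body: str) -> RobotFileParser:
    """Parse a robots.txt body that has already been downloaded."""
    parser = RobotFileParser()
    parser.parse(robots_body.splitlines())
    return parser


parser = build_parser(ROBOTS_TXT)

# URLs under /private/ are excluded; everything else is allowed.
allowed = parser.can_fetch(USER_AGENT, "https://example.com/index.html")
blocked = parser.can_fetch(USER_AGENT, "https://example.com/private/data.html")

# Seconds to wait between requests to this host, if the site asks for it.
delay = parser.crawl_delay(USER_AGENT)
```

The crawler would call `can_fetch` before queueing each URL and wait `delay` seconds between requests to the same host.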

Extensible: Want to be able to add other components, such as a PNG extractor (after Content Seen?).
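One way to picture this kind of extensibility is a registry of pluggable modules that each receive the downloaded content. This is only a sketch: `Pipeline`, `register`, `png_extractor`, and `link_extractor` are hypothetical names, and where the modules sit relative to the Content Seen check is left open, as in the note above.

```python
import re
from typing import Callable, Dict, List

# A module takes (url, raw content) and returns whatever it extracted.
Module = Callable[[str, bytes], List[str]]

PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"  # first 8 bytes of every PNG file


class Pipeline:
    """Hypothetical registry: new modules plug in without changing the crawler."""

    def __init__(self) -> None:
        self._modules: Dict[str, Module] = {}

    def register(self, name: str, module: Module) -> None:
        self._modules[name] = module

    def process(self, url: str, content: bytes) -> Dict[str, List[str]]:
        # Run every registered module on the same downloaded content.
        return {name: mod(url, content) for name, mod in self._modules.items()}


def link_extractor(url: str, content: bytes) -> List[str]:
    # Naive href scan; a real crawler would use a proper HTML parser.
    return [m.decode("utf-8", "replace")
            for m in re.findall(rb'href="([^"]+)"', content)]


def png_extractor(url: str, content: bytes) -> List[str]:
    # Report the URL if the payload is a PNG image.
    return [url] if content.startswith(PNG_SIGNATURE) else []


pipeline = Pipeline()
pipeline.register("links", link_extractor)
pipeline.register("png", png_extractor)  # added later, no other code changes
```

Adding a new capability is one `register` call; the download and dedup stages stay untouched.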

Freshness: Could recrawl pages based on their update history. This is expensive, though.
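A rough sketch of scheduling recrawls from update history: estimate how often a page has changed in the past and revisit it at about that interval. The heuristic (mean gap between observed changes), the function name `next_crawl_time`, and the default and minimum intervals are all assumptions made for illustration, not a prescribed policy.

```python
from datetime import datetime, timedelta
from typing import List


def next_crawl_time(
    change_times: List[datetime],
    last_crawl: datetime,
    default_interval: timedelta = timedelta(days=7),  # assumed fallback
    min_interval: timedelta = timedelta(hours=1),     # assumed floor
) -> datetime:
    """Schedule the next visit from the page's observed change history."""
    if len(change_times) < 2:
        # Not enough history to estimate a change rate.
        return last_crawl + default_interval

    ordered = sorted(change_times)
    gaps = [later - earlier for earlier, later in zip(ordered, ordered[1:])]
    mean_gap = sum(gaps, timedelta()) / len(gaps)

    # Never recrawl more often than the floor, however busy the page looks.
    return last_crawl + max(mean_gap, min_interval)
```

Pages that change often get short intervals and rarely changing pages get long ones, which limits the cost of recrawling everything on a fixed schedule.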

Robust: Save crawl state so that if downloading fails, the crawler can pick up where it left off. Hashing for downloaders (checking whether content has already been seen).
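Both ideas can be sketched together: a small state object that hashes downloaded content to detect duplicates and can be checkpointed to disk. `CrawlState`, its fields, and the JSON file layout are hypothetical; SHA-256 is one reasonable hash choice among several.

```python
import hashlib
import json
import os
from collections import deque


class CrawlState:
    """Hypothetical crawl state: pending URLs plus hashes of content seen."""

    def __init__(self) -> None:
        self.frontier: deque = deque()
        self.seen_hashes: set = set()

    def is_new_content(self, content: bytes) -> bool:
        # Compare a fixed-size digest instead of storing whole pages.
        digest = hashlib.sha256(content).hexdigest()
        if digest in self.seen_hashes:
            return False
        self.seen_hashes.add(digest)
        return True

    def save(self, path: str) -> None:
        # Write to a temp file, then rename, so a crash mid-write
        # cannot leave a half-written checkpoint behind.
        tmp_path = path + ".tmp"
        with open(tmp_path, "w", encoding="utf-8") as f:
            json.dump(
                {"frontier": list(self.frontier),
                 "seen_hashes": sorted(self.seen_hashes)},
                f,
            )
        os.replace(tmp_path, path)

    @classmethod
    def load(cls, path: str) -> "CrawlState":
        state = cls()
        with open(path, encoding="utf-8") as f:
            data = json.load(f)
        state.frontier = deque(data["frontier"])
        state.seen_hashes = set(data["seen_hashes"])
        return state
```

After a failure, the crawler would call `CrawlState.load` on the last checkpoint and resume from the saved frontier rather than starting over.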

4

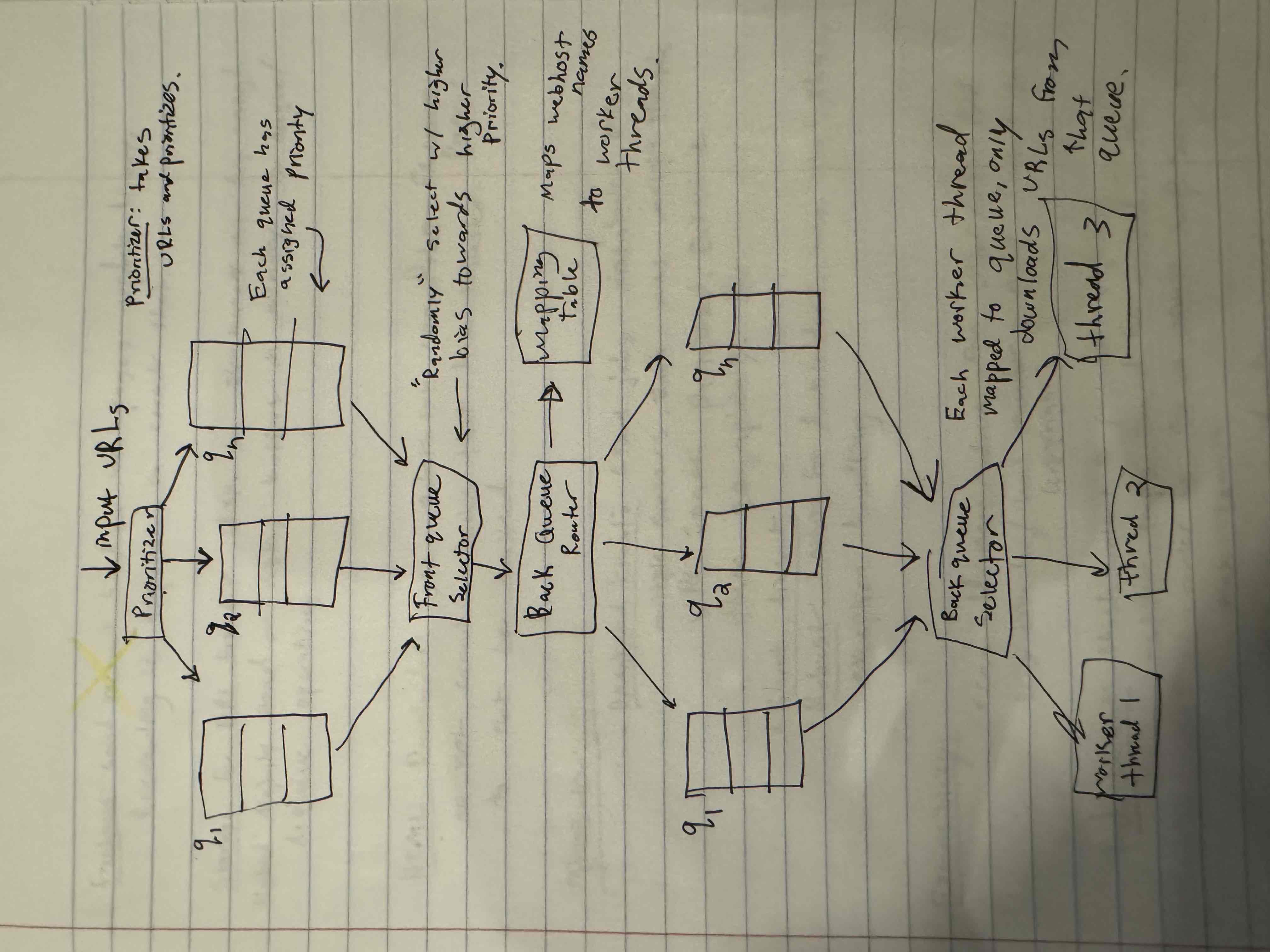

Deep Dive (High Level Diagram)