
77 Terms

The pivot of a row of a matrix is

the leftmost nonzero entry in that row

A matrix is in row echelon form if

all rows consisting only of 0’s are at the bottom

the pivot of each nonzero row is in a column to the right of the pivot in the row above it

A matrix is in reduced row echelon form if

the matrix is in row echelon form

the pivot in each row is equal to 1

each pivot is the only nonzero entry in its column
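The row echelon and reduced row echelon conditions above can be checked mechanically. The following is a minimal Python sketch, assuming a matrix is stored as a list of rows; the function names `pivot_col`, `is_ref`, and `is_rref` are hypothetical helpers, not from the source.

```python
def pivot_col(row):
    """Index of the leftmost nonzero entry (the pivot), or None for a zero row."""
    for j, entry in enumerate(row):
        if entry != 0:
            return j
    return None


def is_ref(M):
    """Row echelon form: zero rows at the bottom, each pivot strictly right of the pivot above."""
    last = -1
    seen_zero_row = False
    for row in M:
        p = pivot_col(row)
        if p is None:
            seen_zero_row = True
            continue
        # a nonzero row below a zero row, or a pivot not further right, breaks REF
        if seen_zero_row or p <= last:
            return False
        last = p
    return True


def is_rref(M):
    """Reduced row echelon form: REF, every pivot equals 1, each pivot alone in its column."""
    if not is_ref(M):
        return False
    for i, row in enumerate(M):
        p = pivot_col(row)
        if p is None:
            continue
        if row[p] != 1:
            return False
        if any(M[k][p] != 0 for k in range(len(M)) if k != i):
            return False
    return True
```

For example, `[[1, 2, 3], [0, 4, 5]]` is in row echelon form but not reduced, because its second pivot is 4 rather than 1.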

We say xi is a basic variable for a linear system with coefficient matrix A and augmented matrix C = (A | b) if

the ith column of rref(C) has a pivot

We say xi is a free variable for the system if

the ith column of rref(C) does not have a pivot

The system is inconsistent if and only if

the last column of rref(C) has a pivot

The system has exactly one solution if and only if

the last column of rref(C) does not have a pivot and every other column of rref(C) has a pivot

The system has infinitely many solutions if and only if

the last column of rref(C) does not have a pivot and rref(A) has a column without a pivot
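The three pivot criteria for consistency and the number of solutions can be turned into a small classifier. This is a minimal sketch, assuming the augmented matrix C = (A | b) is a list of rows of integers or fractions. It row-reduces with Python's `fractions.Fraction` for exact arithmetic using one standard Gauss-Jordan variant. The names `rref` and `classify` are illustrative, not from the source.

```python
from fractions import Fraction


def rref(M):
    """Return (R, pivots): the reduced row echelon form of M and its pivot column indices."""
    R = [[Fraction(x) for x in row] for row in M]  # exact arithmetic, no rounding
    rows, cols = len(R), len(R[0])
    pivots = []
    r = 0  # next row that should receive a pivot
    for c in range(cols):
        if r == rows:
            break
        # find a row at or below r with a nonzero entry in column c
        k = next((i for i in range(r, rows) if R[i][c] != 0), None)
        if k is None:
            continue  # no pivot in this column
        R[r], R[k] = R[k], R[r]          # swap it into place
        p = R[r][c]
        R[r] = [x / p for x in R[r]]     # scale so the pivot equals 1
        for i in range(rows):            # clear every other entry in column c
            if i != r and R[i][c] != 0:
                f = R[i][c]
                R[i] = [a - f * b for a, b in zip(R[i], R[r])]
        pivots.append(c)
        r += 1
    return R, pivots


def classify(C):
    """Classify the system whose augmented matrix is C = (A | b)."""
    _, pivots = rref(C)
    n = len(C[0]) - 1          # number of variables = number of columns of A
    if n in pivots:            # the last column of rref(C) has a pivot
        return "inconsistent"
    if len(pivots) == n:       # every column of A has a pivot
        return "unique"
    return "infinitely many"
```

For instance, the system x + y = 2, x − y = 0 has augmented matrix `[[1, 1, 2], [1, -1, 0]]` and is classified as `"unique"`.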

A linear combination of vectors v1, v2, …, vn in Rm is a vector of the form

c1v1 + c2v2 + … + cnvn, where c1, c2, …, cn ∈ R

The span of vectors v1, v2, …, vn in Rm is the set

span(v1, v2, …, vn) = {c1v1 + … + cnvn | c1, c2, …, cn ∈ R}

A set of vectors {v1, v2, …, vn} in Rm is linearly dependent if

at least one of the vectors is a linear combination of the others: that is, vi ∈ span(v1, …, vi−1, vi+1, …, vn) for some i. Otherwise, the set is linearly independent.

A vector space (over the real numbers) is any set of vectors V in Rn that satisfies all of the following properties:

V is non empty

V is closed under scalar multiplication

V is closed under vector addition

Let V be a vector subspace of Rn. A spanning set (also known as a generating set) for V is

any subset B of V so that V = span(B)

A subset B of a vector space V is called a basis if

B is a spanning set and

B is linearly independent

Let V be a nonzero vector subspace of Rn. Then the dimension of V, denoted dimV, is

the size of any basis for V

The standard basis for Rn is the set E := {e1, e2, …, en}, where ei is

the vector in Rn with 1 in the ith coordinate and 0 in all other coordinates

Let A be an mxn matrix with column vectors A = (v1 v2 · · · vn). Then, for a vector x in Rn the matrix-vector product of A and x is the vector in Rm defined by

Ax = x1v1 + x2v2+ … + xnvn
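To see that this definition is just a weighted sum of the columns of A, here is a minimal pure-Python sketch, assuming A is stored as a list of m rows; `mat_vec` is a hypothetical helper name.

```python
def mat_vec(A, x):
    """Compute Ax as x1*v1 + ... + xn*vn, where vj is the j-th column of A.
    A is a list of m rows with n entries each; x has n entries."""
    m, n = len(A), len(A[0])
    if len(x) != n:
        raise ValueError("x must have one entry per column of A")
    result = [0] * m
    for j in range(n):
        column = [A[i][j] for i in range(m)]  # the column vector vj
        for i in range(m):
            result[i] += x[j] * column[i]     # accumulate xj * vj
    return result
```

With A = `[[1, 2], [3, 4], [5, 6]]` and x = `[1, -1]`, this computes 1·(1, 3, 5) − 1·(2, 4, 6) = (−1, −1, −1).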

Let A be an mxn matrix. Then, the matrix transformation associated to A is the function

TA : Rn → Rm defined by TA(x) = Ax

A function F: Rn → Rm is called linear if it satisfies the following two properties for all vectors v,w ∈ Rn and scalars c ∈ R

F(v + w) = F(v) + F(w)

F(cw) = cF(w)

Let F: Rn → Rm be a linear transformation. Then, the defining matrix of F is the mxn matrix M satisfying

F(x) = Mx
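A handy consequence, standard but not stated on this card, is that the jth column of the defining matrix M is F(ej), because Mej picks out the jth column of M. A minimal sketch, assuming F is a Python function on lists that is already known to be linear; `defining_matrix` and the example map `F` are hypothetical.

```python
def defining_matrix(F, n):
    """Build the m x n matrix M with F(x) = Mx, using that column j of M is F(e_j).
    F is assumed to be linear and to map length-n lists to length-m lists."""
    columns = []
    for j in range(n):
        e_j = [1 if i == j else 0 for i in range(n)]  # standard basis vector e_j
        columns.append(F(e_j))
    m = len(columns[0])
    # transpose the list of columns into a list of rows
    return [[columns[j][i] for j in range(n)] for i in range(m)]


# Hypothetical example: F(x1, x2) = (x1 + 2*x2, 3*x1, -x2), a linear map R^2 -> R^3
def F(x):
    return [x[0] + 2 * x[1], 3 * x[0], -x[1]]


M = defining_matrix(F, 2)  # [[1, 2], [3, 0], [0, -1]]
```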

A function f: X →Y is called one-to-one (or injective) if the following property holds

for all y ∈ Y there is at most one input x ∈ X so that f(x) = y

A function f: X →Y is called onto (or surjective) if the following property holds

for all y ∈ Y there is at least one input x ∈ X so that f(x) = y

A function f : X → Y is called bijective if

f is both injective and surjective

Let V be a subspace of Rn and W a subspace of Rm. An isomorphism between V and W is

any linear bijective function F: V → W

The kernel of F is the subset ker(F) ⊆ Rn defined by

ker(F) := {x ∈ Rn: F(x) = 0} ⊆ Rn

The image of F is the subset im(F) ⊆ Rm defined by

im(F) := {F(x): x ∈ Rn} ⊆ Rm

The Rank of F is

the dimension of im(F)

The Nullity of F is

the dimension of ker(F)

The column space of A is the subspace of Rm given by

Col(A) := Span(v1, v2,…,vn)

The Null space of A is the subspace of Rn given by

Nul(A) := {x∈ Rn : Ax = 0}

The nullity of A is

the dimension of Nul(A)

The Rank of A is

the dimension of Col(A)
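In practice the rank and nullity of A are read off from its pivots: rank(A) is the number of pivot columns of rref(A), and, by the rank-nullity theorem, nullity(A) is the number of remaining (free) columns. These are standard results rather than part of the definitions above. A minimal sketch with exact `Fraction` arithmetic; `pivot_columns` and `rank_and_nullity` are hypothetical names.

```python
from fractions import Fraction


def pivot_columns(A):
    """Pivot columns of A, found by forward elimination with exact arithmetic.
    The pivot positions of a row echelon form agree with those of rref(A)."""
    R = [[Fraction(x) for x in row] for row in A]
    pivots, r = [], 0
    for c in range(len(R[0])):
        k = next((i for i in range(r, len(R)) if R[i][c] != 0), None)
        if k is None:
            continue  # free column
        R[r], R[k] = R[k], R[r]
        for i in range(r + 1, len(R)):  # eliminate entries below the pivot
            f = R[i][c] / R[r][c]
            R[i] = [a - f * b for a, b in zip(R[i], R[r])]
        pivots.append(c)
        r += 1
        if r == len(R):
            break
    return pivots


def rank_and_nullity(A):
    """rank(A) = dim Col(A) = number of pivot columns;
    nullity(A) = dim Nul(A) = number of non-pivot (free) columns."""
    n = len(A[0])
    rank = len(pivot_columns(A))
    return rank, n - rank
```

For example, `[[1, 2, 1], [0, 1, 1], [1, 3, 2]]` has third row equal to the sum of the first two, so its rank is 2 and its nullity is 1.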

A system of linear equations is called homogeneous if

the constant term in every equation is 0

Let A = (v1 v2 … vn) and B = (w1 w2 … wn) be m×n matrices. The sum of A and B is the m×n matrix given by

A + B := (v1+w1 v2+w2 … vn+wn)

The scalar product of A with a scalar c is the m×n matrix given by

cA := (cv1 cv2 … cvn)

Let A be an m×k matrix and B = (b1 · · · bn) be a k×n matrix. Then, the matrix product of A and B is the m×n matrix

AB := (Ab1 Ab2 … Abn)
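The column-by-column definition AB = (Ab1 … Abn) translates directly into code. A minimal pure-Python sketch, assuming matrices are stored as lists of rows; `mat_vec` and `mat_mul` are hypothetical helper names.

```python
def mat_vec(A, x):
    """Entry i of Ax = x1*v1 + ... + xn*vn, where vj is column j of A (A stored as rows)."""
    return [sum(A[i][j] * x[j] for j in range(len(x))) for i in range(len(A))]


def mat_mul(A, B):
    """AB := (Ab1 Ab2 ... Abn): multiply A by each column bj of B, then reassemble."""
    k, n = len(B), len(B[0])
    if len(A[0]) != k:
        raise ValueError("A must be m x k and B must be k x n")
    columns = [mat_vec(A, [B[r][j] for r in range(k)]) for j in range(n)]  # each Ab_j
    m = len(A)
    return [[columns[j][i] for j in range(n)] for i in range(m)]  # columns -> rows
```

For example, `mat_mul([[1, 2], [3, 4]], [[5, 6], [7, 8]])` gives `[[19, 22], [43, 50]]`.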

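The identity matrix In is

the n×n matrix In = (e1 e2 … en) whose columns are the standard basis vectors of Rn

that is, In = [(1 0 … 0), (0 1 … 0), …, (0 0 … 1)], with 1's on the diagonal and 0's elsewhere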

Let A be an nxn matrix. The inverse of A, if it exists, is

the n×n matrix B such that AB = In and BA = In; it is denoted A⁻¹

An n x n matrix is called elementary if

it can be obtained by performing exactly one elementary row operation on the identity matrix In

The unit square is the subset of R2 given by

S := {α1e1 + α2e2 : 0 ≤ α1, α2 ≤ 1}.

An ordered basis {b1,b2} for R2 is called positively oriented if

we can rotate b1 counterclockwise to reach b2 without crossing the line spanned by b2. Otherwise, the basis is called negatively oriented.

Let F: R2 → R2 be a linear transformation. Then, the determinant of F, denoted by det(F), is the oriented area of F(S). That is,

det(F) :=

area(F(S)) if {F(e1), F(e2)} is positively oriented

−area(F(S)) if {F(e1), F(e2)} is negatively oriented

0 if area(F(S)) = 0.

If A is a 2 × 2 matrix, the determinant of A, denoted by det(A), is defined by

det(A) := det(TA)
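For a 2 × 2 matrix A = [[a, b], [c, d]], the oriented area in the definition above equals the well-known formula det(A) = ad − bc (a standard result, not stated on these cards), and its sign records the orientation of {TA(e1), TA(e2)}. A minimal sketch; `det2` and `orientation` are hypothetical names.

```python
def det2(A):
    """Oriented area of TA(S) for A = [[a, b], [c, d]].
    The columns TA(e1) = (a, c) and TA(e2) = (b, d) span a parallelogram
    whose signed area is given by the standard formula ad - bc."""
    (a, b), (c, d) = A
    return a * d - b * c


def orientation(A):
    """Classify the ordered basis {TA(e1), TA(e2)} by the sign of det(A)."""
    D = det2(A)
    if D > 0:
        return "positively oriented"
    if D < 0:
        return "negatively oriented"
    return "degenerate (area 0)"
```

Swapping e1 and e2, as the matrix `[[0, 1], [1, 0]]` does, gives determinant −1 and a negatively oriented basis.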

The unit cube is the subset of R3 given by

C := {α1e1 + α2e2 + α3e3 : 0 ≤ α1, α2, α3 ≤ 1}.

For an n × n matrix A = (aij),

the ij-minor of A is defined to be the (n − 1) × (n − 1) matrix Aij obtained from A by deleting the ith row and jth column.

Let A be the n × n matrix with ij-entry equal to aij. Then we define the determinant of A by the following cofactor expansion formula:

det(A) := a11 det(A11) − a12 det(A12) + · · · + (−1)ⁿ⁺¹ a1n det(A1n)
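The cofactor expansion formula is naturally recursive: the determinant of an n × n matrix is expressed through determinants of (n − 1) × (n − 1) minors. A minimal sketch, assuming the usual base case det((a)) = a for a 1 × 1 matrix and 0-based indices in code; `minor` and `det` are hypothetical names. It runs in factorial time, so it illustrates the definition rather than being a practical algorithm.

```python
def minor(A, i, j):
    """The ij-minor: A with row i and column j deleted (0-based indices)."""
    return [row[:j] + row[j + 1:] for k, row in enumerate(A) if k != i]


def det(A):
    """Cofactor expansion along the first row:
    det(A) = a11 det(A11) - a12 det(A12) + ... + (-1)^(n+1) a1n det(A1n)."""
    n = len(A)
    if n == 1:
        return A[0][0]
    # with 0-based column index j, the sign (-1)^(1 + (j + 1)) equals (-1)^j
    return sum((-1) ** j * A[0][j] * det(minor(A, 0, j)) for j in range(n))
```

For example, `det([[2, 0, 1], [1, 3, 2], [1, 1, 2]])` expands to 2·(6 − 2) − 0 + 1·(1 − 3) = 6.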

Let A be an n×n matrix. A nonzero vector v is an eigenvector of A if

Av = λv for some scalar λ, called the eigenvalue of A corresponding to v

Let A be an n × n matrix with eigenvalue λ.

(1) The λ-eigenspace of A is the vector subspace of Rn defined by

Eλ := Nul(A − λIn).

(2) The geometric multiplicity of λ is

the dimension of the λ-eigenspace Eλ.

For an n × n matrix A, the characteristic polynomial of A is

χA(x) = det(A − xIn).
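For a 2 × 2 matrix the characteristic polynomial can be expanded by hand: det(A − xI2) = (a − x)(d − x) − bc = x² − (a + d)x + (ad − bc), and (a standard fact) its real roots are the real eigenvalues of A. A minimal sketch under that 2 × 2 assumption; `char_poly_2x2`, `real_eigenvalues_2x2`, and `is_eigenvector` are hypothetical names, and the eigenvalues are computed in floating point.

```python
import math


def char_poly_2x2(A):
    """Coefficients (1, p, q) of chi_A(x) = det(A - x I) = x^2 + p x + q for a 2x2 A.
    Expanding (a - x)(d - x) - bc gives p = -(a + d) and q = ad - bc."""
    (a, b), (c, d) = A
    return 1, -(a + d), a * d - b * c


def real_eigenvalues_2x2(A):
    """Real roots of chi_A, i.e. the real eigenvalues of A (empty list if none)."""
    _, p, q = char_poly_2x2(A)
    disc = p * p - 4 * q
    if disc < 0:
        return []
    r = math.sqrt(disc)
    return sorted({(-p - r) / 2, (-p + r) / 2})


def is_eigenvector(A, v, lam, tol=1e-12):
    """Check that v is nonzero and Av = lam * v (up to floating-point tolerance)."""
    Av = [sum(A[i][j] * v[j] for j in range(len(v))) for i in range(len(A))]
    return any(x != 0 for x in v) and all(abs(Av[i] - lam * v[i]) <= tol for i in range(len(v)))
```

For A = `[[2, 1], [1, 2]]`, χA(x) = x² − 4x + 3, so the eigenvalues are 1 and 3, with eigenvectors (1, −1) and (1, 1).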

Let B = {v1, v2,…, vn} be an ordered basis for a vector space V.

Recall that every vector x in V can be written in the form

x = x1v1 + · · · + xnvn

The B-coordinate vector of x is the vector in Rn given by

[x]B := (x1, x2, …, xn)⊤, written as a column vector

Let C and B be bases for a vector space V. Then, the change of basis matrix MC←B is the matrix satisfying

MC←B[x]B = [x]C for every x in V

Let C be a basis for a vector space V. Then, for any x, y ∈ V and scalar k ∈ R we have

[x + y]C = [x]C + [y]C and [kx]C = k[x]C.

Let {b1, …, bn} be a linearly independent subset of a vector space V. Then, for any basis C of V, the set {[b1]C, …, [bn]C}

is linearly independent

Let F: Rn → Rn be a linear transformation, and B be any basis for Rn. Then, the defining matrix of F with respect to the basis B is the matrix M so that…

[F(x)]B = M[x]B for every x in Rn

We use the notation M = MF,B.

Two n×n matrices B and C are called similar if they represent the same linear transformation, possibly with respect to different bases. That is,

there is a single linear transformation F: Rn → Rn and bases 𝓑 and 𝓒 for Rn so that MF,𝓑 = B and MF,𝓒 = C

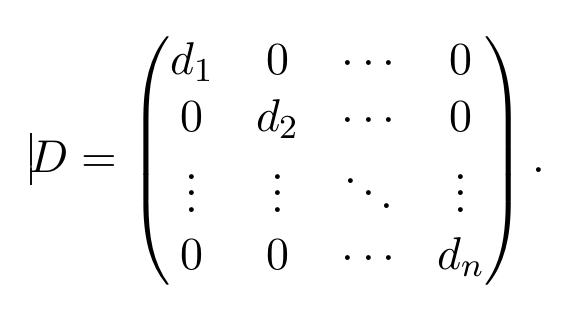

An n×n matrix D is called diagonal if

the only nonzero entries in the matrix appear on the diagonal. That is, dij = 0 whenever i ≠ j.

In this case we write D = diag(d1, d2,…dn)

An n×n matrix is called diagonalizable if

it is similar to a diagonal matrix

Suppose that A is an n×n diagonalizable matrix with eigenvalues λ1,...,λn and corresponding linearly independent eigenvectors v1,...,vn. We call the equality

A = CDC⁻¹

the EIGENDECOMPOSITION of the matrix A. By the Diagonalization Theorem, we know that

D = diag(λ1, …, λn) and C = (v1 ··· vn)
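A concrete eigendecomposition can be checked with exact arithmetic. The matrix below is an illustrative example chosen for this sketch (not from the source): A = [[4, 1], [2, 3]] has eigenvalues 5 and 2 with eigenvectors (1, 1) and (1, −2), so C has these as its columns and D = diag(5, 2). The code uses `fractions.Fraction` and the standard 2 × 2 inverse formula; `mat_mul` and `inverse_2x2` are hypothetical helpers.

```python
from fractions import Fraction


def mat_mul(X, Y):
    """Ordinary matrix product; matrices are lists of rows."""
    return [[sum(X[i][k] * Y[k][j] for k in range(len(Y))) for j in range(len(Y[0]))]
            for i in range(len(X))]


def inverse_2x2(C):
    """Inverse of an invertible 2x2 matrix via the standard adjugate formula."""
    (a, b), (c, d) = C
    det = Fraction(a * d - b * c)
    if det == 0:
        raise ValueError("C is not invertible")
    return [[d / det, -b / det], [-c / det, a / det]]


# Illustrative example (not from the source): eigenvalues 5 and 2,
# eigenvectors v1 = (1, 1) and v2 = (1, -2) as the columns of C.
A = [[4, 1], [2, 3]]
C = [[1, 1], [1, -2]]
D = [[5, 0], [0, 2]]
reconstructed = mat_mul(mat_mul(C, D), inverse_2x2(C))  # C D C^-1, should equal A
```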

The dot product of vectors u and v in Rn is the scalar

u · v = u1v1 + u2v2 + … + unvn

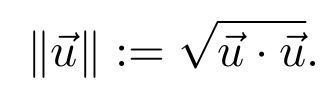

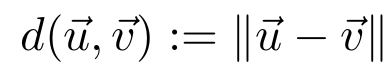

Let u and v be vectors in Rn.

The distance between vectors u and v is

dist(u, v) = ||u − v||

We say that u and v are orthogonal if

u · v = 0

A basis B = {v1, v2, …, vn} is orthogonal if

vi · vj = 0 for every i ≠ j

A basis B is called orthonormal if it is orthogonal and

||vi|| = 1 for every vi in B

We call an n×n matrix Q orthogonal if

its column vectors form an orthonormal basis for Rn

Equivalently, Q is orthogonal if Q⊤ = Q⁻¹

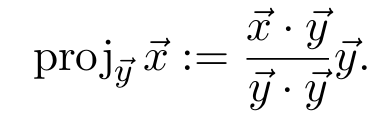

For vectors x, y in Rn with y ≠ 0, the orthogonal projection of x onto y is

projy(x) = ((x · y)/(y · y)) y
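The dot product, distance, orthogonality, and projection formulas each fit in a line or two of code. This is a minimal sketch, assuming the usual norm ||u|| = √(u · u) and the standard projection formula projy(x) = ((x · y)/(y · y)) y for nonzero y. All function names here are hypothetical.

```python
import math


def dot(u, v):
    """u · v = u1 v1 + ... + un vn"""
    return sum(a * b for a, b in zip(u, v))


def norm(u):
    """||u|| = sqrt(u · u)"""
    return math.sqrt(dot(u, u))


def distance(u, v):
    """dist(u, v) = ||u - v||"""
    return norm([a - b for a, b in zip(u, v)])


def orthogonal(u, v):
    """u and v are orthogonal when u · v = 0 (exact for integer entries)."""
    return dot(u, v) == 0


def project(x, y):
    """Orthogonal projection of x onto a nonzero y: ((x · y) / (y · y)) y."""
    yy = dot(y, y)
    if yy == 0:
        raise ValueError("cannot project onto the zero vector")
    scale = dot(x, y) / yy
    return [scale * b for b in y]
```

A quick sanity check: the residual x − projy(x) should be orthogonal to y. For x = (1, 2) and y = (3, 1), the projection is (1.5, 0.5) and the residual (−0.5, 1.5) has dot product 0 with y.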

An n×n matrix A is called orthogonally diagonalizable if

there exists an orthogonal matrix Q and a diagonal matrix D so that Q⊤AQ = D

Suppose that A is an n×n symmetric matrix with eigenvalues λ1, …, λn and an orthonormal basis of eigenvectors {v1, …, vn}. We call the equality

A = QDQ⊤

a SPECTRAL DECOMPOSITION of A, where

D = diag(λ1, …, λn) and Q = (v1 … vn)
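A spectral decomposition can be verified numerically. The symmetric matrix below is an illustrative example (not from the source): A = [[2, 1], [1, 2]] has eigenvalues 3 and 1 with orthonormal eigenvectors (1, 1)/√2 and (1, −1)/√2. The sketch checks that Q is orthogonal (Q⊤Q = I2) and that QDQ⊤ reproduces A up to floating-point tolerance; the helper names are hypothetical.

```python
import math


def transpose(M):
    """Swap rows and columns."""
    return [list(row) for row in zip(*M)]


def mat_mul(X, Y):
    """Ordinary matrix product; matrices are lists of rows."""
    return [[sum(X[i][k] * Y[k][j] for k in range(len(Y))) for j in range(len(Y[0]))]
            for i in range(len(X))]


def close(X, Y, tol=1e-12):
    """Entrywise comparison up to a floating-point tolerance."""
    return all(abs(a - b) <= tol for rx, ry in zip(X, Y) for a, b in zip(rx, ry))


# Illustrative symmetric matrix (not from the source) with eigenvalues 3 and 1.
A = [[2, 1], [1, 2]]
s = 1 / math.sqrt(2)
Q = [[s, s], [s, -s]]  # columns: the orthonormal eigenvectors (1, 1)/sqrt(2), (1, -1)/sqrt(2)
D = [[3, 0], [0, 1]]

Q_T = transpose(Q)
is_orthogonal = close(mat_mul(Q_T, Q), [[1, 0], [0, 1]])  # Q^T Q = I
spectral_ok = close(mat_mul(mat_mul(Q, D), Q_T), A)       # A = Q D Q^T
```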

Let A be an m×n matrix and {v1,...,vn} be an orthonormal basis for Rn of eigenvectors for A⊤A, as above. The singular values of A are

the scalars σi = ||Avi|| for i = 1,…,n
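Singular values can be computed straight from this definition once an orthonormal eigenbasis of A⊤A is known. Illustrative example (not from the source): for A = [[1, 1], [0, 0]], A⊤A = [[1, 1], [1, 1]] has orthonormal eigenvectors (1, 1)/√2 and (1, −1)/√2 with eigenvalues 2 and 0. As a check, σi² should equal the corresponding eigenvalue of A⊤A (a standard fact), giving σ1 = √2 and σ2 = 0. The helper names are hypothetical.

```python
import math


def mat_vec(A, x):
    """Matrix-vector product; A is a list of rows."""
    return [sum(A[i][j] * x[j] for j in range(len(x))) for i in range(len(A))]


def norm(u):
    """Euclidean length ||u||."""
    return math.sqrt(sum(t * t for t in u))


# Illustrative example (not from the source).
A = [[1, 1], [0, 0]]
AtA = [[sum(A[k][i] * A[k][j] for k in range(2)) for j in range(2)] for i in range(2)]  # A^T A

s = 1 / math.sqrt(2)
basis = [[s, s], [s, -s]]  # orthonormal eigenvectors of A^T A (eigenvalues 2 and 0)

singular_values = [norm(mat_vec(A, v)) for v in basis]  # sigma_i = ||A v_i||
```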