Research methods


what is an independent variable (IV)?

a variable that is manipulated by a researcher to investigate whether it consequently brings change in another variable

what is a dependent variable (DV)?

a variable that is measured and predicted to be dependent upon the IV

why is it important to operationalise variables?

ensures readers understand what was done

enables the research to be replicated to test for reliability and/or validity

how are variables operationalised?

abstract variables are made measurable

e.g. standardised scales, questionnaires

what is an extraneous variable (EV)?

a general term for any variable other than the IV that might affect the results (DV), and make it more difficult to detect a significant effect

when EVs are important enough to cause a change in the DV, they become confounding variables (CV)

what is a confounding variable (CV)?

an EV that causes a change in the DV

means the outcome of the study may be useless

what is an aim?

a statement of what the researcher intends to find out in a research study

what is a hypothesis?

a precise and testable statement about the assumed relationship between variables

operationalisation is a key part of making the statement testable

what are the two types of hypothesis?

directional

non-directional

what is a directional hypothesis?

states the expected direction of the results

one tailed

what is a non-directional hypothesis?

states there will be a difference between conditions but not what the difference will be

two tailed

what is the difference between a test of difference and correlations?

a correlational study has two co-variables (both are measured and neither is manipulated), and a test of difference has an IV and a DV

a correlational study cannot show causation, and a test of difference can

a correlational study needs to use variables which can both be put onto scales (ordinal variables), and a test of difference can use categorical (nominal) data

what is a correlational hypothesis?

predicts a relationship between two co-variables

directional hypothesis - e.g. there will be a significant positive/negative correlation between…

non-directional hypothesis - e.g. there will be a significant correlation between…

what are the types of experiment?

laboratory experiment

field experiment

natural experiment

quasi experiment

what is a laboratory experiment?

conducted under controlled conditions

in which the researcher deliberately changes something (IV) to see the effect of it on something else (DV)

what is the evaluation for laboratory experiments?

control - high degree of control over the environment and other extraneous variables means the researcher can accurately assess the effects of the IV, high internal validity

replicable - due to the researcher’s high levels of control, research procedures can be repeated so that the reliability of results can be checked

lacks ecological validity - due to the involvement of the researcher in manipulating and controlling variables, findings cannot be easily generalised to other settings, poor external validity

what is a field experiment?

carried out in a natural setting

in which the researcher manipulates something (IV) to see the effect on something else (DV)

what is the evaluation for field experiments?

validity - field experiments have some degree of control but also are conducted in a natural environment, so have reasonable internal and external validity

limited control - have less control than laboratory experiments, so extraneous variables are more likely to distort findings, internal validity is lowered

what is a natural experiment?

the study of a naturally occurring situation as it unfolds in the real world

the researcher does not exert any influence over the situation but rather simply observes individuals and circumstances, comparing the current condition to some other condition

what is the evaluation for natural experiments?

high ecological validity - due to a lack of involvement of the researcher, variables are naturally occurring, findings can be easily generalised to other settings, resulting in high external validity

lack of control - no control over the environment and other extraneous variables means that the researcher cannot always accurately assess the effects of the IV, so internal validity is lowered

not replicable - due to the researcher’s lack of control, research procedures cannot be repeated so the reliability of results cannot be checked

what is a quasi experiment?

research in which the investigator cannot randomly assign units or participants to conditions, cannot generally control or manipulate the independent variable, and cannot limit the influence of extraneous variables

what is the evaluation for quasi experiments?

high ecological validity - due to a lack of involvement of the researcher, variables are naturally occurring, findings can be easily generalised to other settings, resulting in high external validity

lack of control - no control over the environment and other extraneous variables means that the researcher cannot always accurately assess the effects of the IV, so internal validity is lowered

not replicable - due to the researcher’s lack of control, research procedures cannot be repeated so the reliability of results cannot be checked

what is a pilot study?

small, trial versions of proposed studies to test their effectiveness and make improvements

helpful in identifying potential issues early

any part of the study could be tested - e.g. the validity of a measure, whether the procedure is effective

important part of the experimental process

what is standardisation?

the process in which procedures used in research are kept the same, so that changes in data can be attributed to the IV

more likely that the results will be successfully replicated

what are demand characteristics?

cues in a study that lead participants to guess its aims - their presence suggests a risk that participants will change their behaviour, which could affect how they respond to the tasks they are set

participants may try to please the researcher by doing what they assume is expected of them, or may try to skew the results to sabotage the study

what is social desirability bias?

occurs when participants note aspects of a study associated with particular social norms or expectations, and in turn present themselves in what they deem to be a socially acceptable fashion

what are investigator effects?

when a researcher acts in a way to support their prediction - consciously or unconsciously

can be a problem when observing events that can be interpreted in more than one way

direct investigator effects - interacting with the participants

indirect investigator effects - as a consequence of the investigator designing the study

what is a correlation?

a test to see whether two variables are related

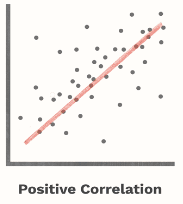

what does a graph representing a positive correlation of +1 look like?

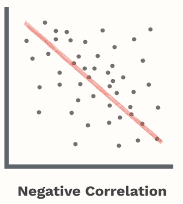

what does a graph representing a negative correlation of -1 look like?

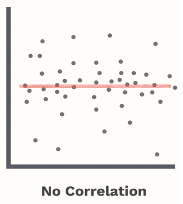

what does a graph representing no correlation look like?

what is a linear correlation?

systematic relationship between co-variables that is defined by a straight line

what is a curvilinear correlation?

when there is a predictable relationship between covariables, but there is an optimum point

what are naturalistic observations?

the observer takes advantage of a naturally occurring situation and watches without interfering

what is the evaluation for naturalistic observations?

high ecological validity

little control over what is happening

something unknown to the observer may be causing behaviour

what are controlled observations?

some variables in the environment are regulated by the researcher

what is the evaluation for controlled observations?

higher control

lacks ecological validity

what are covert observations?

individuals are unaware they are being observed

what is the evaluation for covert observations?

behaviour is more natural

ethical issues around what is acceptable to observe

what are overt observations?

those being observed are aware they are being observed

what is the evaluation for overt observations?

fewer ethical issues than covert observations

risk of social desirability bias

risk of observer bias

what are participant observations?

researcher is part of the group being observed

what is the evaluation for participant observations?

may give greater insight that could not be gained otherwise

more likely to be overt - issues of participant awareness affecting results

if covert, risks of ethical issues

what are non-participant observations?

researcher merely watches behaviour from a distance and does not interact with those being observed

what is the evaluation for non-participant observations?

likely to be more objective

may not gain as much insight

what are structured observations?

when a system is used to organise observations in order to ensure it is objective and rigorous

the two main ways to structure observations are through behavioural categories and sampling procedures

what are unstructured observations?

where the researcher records all relevant behaviour but has no system

the problem with this is that what is reported may be what is most obvious or eye-catching rather than what is most important

what are behavioural categories?

behaviour broken down and operationalised into categories

behavioural categories should be:

1) objective - observer should not make inferences about behaviour, and should just record explicit actions

2) cover all possible behaviours and avoid a ‘waste’ category - i.e. behaviours that don’t fit a category

3) mutually exclusive or specific - should not have to mark two categories at one time

4) simple - easy for others to use, so the observation can be replicated

what are the different ways of observing?

observations can be made continuously where the observers record everything that happens in detail - e.g. with a camera

sampling techniques

what is event sampling?

counting how many times a certain behaviour (event) occurs in a target individual or individuals

what is time sampling?

recording behaviours in a given time frame
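
The two sampling procedures can be contrasted with a short sketch. The observation log, its behaviour categories and the 30-second interval with a 15-second recording window are all invented for illustration, and `event_sample` and `time_sample` are hypothetical helper names rather than standard functions.

```python
from collections import Counter

# Hypothetical observation log: (seconds since start, behaviour category).
# The categories and timings are invented for illustration.
log = [
    (3, "hitting"), (12, "sharing"), (31, "hitting"),
    (45, "talking"), (62, "sharing"), (90, "hitting"),
]

def event_sample(log, target):
    """Event sampling: count every occurrence of one target behaviour."""
    return sum(1 for _, behaviour in log if behaviour == target)

def time_sample(log, interval, window):
    """Time sampling: tally only behaviours seen in the first `window`
    seconds of each `interval`-second block."""
    return Counter(b for t, b in log if t % interval < window)

hitting_count = event_sample(log, "hitting")
tally = time_sample(log, interval=30, window=15)
```

Event sampling here keeps every instance of the target behaviour, while time sampling ignores anything that falls outside the recording window, which is why "talking" at 45 seconds is not tallied.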

what are self-report techniques?

refers to techniques which ask people about their own thoughts and feelings

two main methods used are questionnaires and interviews

what is the evaluation for self-report techniques?

may allow for greater understanding - can find out what an individual is thinking or feeling rather than inferring

not reliant on the researcher’s interpretations of behaviour

relies on the participant’s ability to reflect internally

may lack generalisability

may be vulnerable to demand characteristics and social desirability bias - participants may not be completely honest

what are questionnaires?

set of written questions designed to collect information about particular topics

permit a researcher to discover what people think and feel directly

can be an objective and scientific way of conducting research, but design is important

are always predetermined - the questions are structured

what is the evaluation for questionnaires?

easy to analyse data - normally closed predetermined questions

cheap and easy to distribute

easy to replicate - high reliability

social desirability bias may occur

sample may be biased - e.g. only accessible to those who can read and write

low response rate

can lack detail - closed questions may not gain enough depth

people who respond may be different to those who don’t respond

what are unstructured interviews?

has less structure than a structured interview - new questions are developed during the course of the interview

the interviewer may begin with general aims and a few predetermined questions but subsequent questions develop on the basis of answers given

sometimes called a clinical interview

what is the evaluation for unstructured interviews?

flexible with questions - can follow up interesting or relevant points of discussion

greater depth and insight into the issue being discussed - qualitative data produced

time consuming - means a smaller sample size with less data produced

requires trained interviewers to manage lack of structure - could be costly

could be harder to analyse and replicate due to changing questions

what are structured interviews?

has predetermined questions - is essentially a questionnaire delivered in person or over the phone with no deviation from the original questions

conducted in real time - the interviewer asks questions and the interviewee replies

what is the evaluation for structured interviews?

easy to replicate - predetermined questions with high reliability

easy to analyse - quantitative data due to closed questions

does not require interviewer to have specialist skills

can be quick to conduct with a large sample size

only produces quantitative data - lacks detail

could have interviewer bias

not very flexible - unable to follow up points by changing questions

what are the three key principles that need to be addressed when writing questions?

clarity

bias

analysis

why is clarity significant when writing questions?

questions need to be written so that the respondent understands what is being asked

there should be no ambiguity - where something has more than one possible meaning

the use of double negatives reduces clarity - e.g. ‘are you against banning capital punishment?’

a further issue is double barrelled questions - asking two things in one question

why is bias significant when writing questions?

any bias in a question may lead the respondent to be more likely to give a particular answer - leading question

the greatest problem with questionnaires is likely to be social desirability bias - participants give answers that make them look better, rather than being totally truthful

any bias in a questionnaire will reduce its validity

why is analysis significant when writing questions?

questions need to be written so that answers are easy to analyse - can be affected by the type of question used:

1) open questions have no set response, so each response may be different - can give greater detail and insight but produces qualitative data which is more challenging to analyse so making clear patterns and conclusions is more difficult

2) closed questions have a set range of possible answers, e.g. a scale or yes/no questions - can be easier to analyse and produce quantitative data, but may not truly reflect the participant’s thoughts or behaviour

what should be considered when writing questionnaires?

filler questions - irrelevant questions help to distract from the main purpose of the study, reducing demand characteristics

sequence for the questions - it is best to start with easier questions and save those that could make participants anxious or defensive until they have relaxed

sampling technique - how participants are selected is important, e.g. questionnaires often use stratified sampling

pilot study - smaller trial of the research meaning the questionnaire can be refined based on any difficulties encountered before the main study

what should be considered when designing interviews?

recording the interview

effects of the interviewer

questioning skills in an unstructured interview

why is recording significant when designing interviews?

an interviewer may take notes throughout the interview to document answers but this is likely to interfere with their listening skills

may also make the respondent feel a sense of evaluation as the interviewer may not write everything down - the respondent may feel what they said was not valuable

interviews may be audio or video recorded instead

why is the effect of the interviewer significant when designing interviews?

a strength of interviews is that the presence of an interviewer who is interested in the respondent's answers may increase the amount of information provided, so the interviewer should be aware of behaviours that demonstrate their 'interest':

1) non-verbal communication - head nodding and leaning forward conveys interest, encouraging the participant to speak, as opposed to arms crossed and frowning

2) listening skills - understanding how and when to speak, ensuring they do not interrupt too often, using encouraging comments to show they are listening

why are questioning skills significant when designing unstructured interviews?

in an unstructured interview, there are special skills to be learned about what kind of follow up questions should be asked

it is important to be aware of questions already asked and to avoid repeating them

it is also useful to avoid probing too much and asking ‘why’ too often

it is better to ask more focused questions - both for the interviewee and later analysis

what are case studies?

the detailed study of a single individual, institution or event

evidence-based research - used to look at unusual behaviours and to look at particular behaviours in detail

scientific research method - aims to use objective and systematic methods

longitudinal - follow an individual or group over an extended period of time

findings are organised into themes to represent the individual’s thoughts, emotions, experiences and abilities

what are the sources and techniques used in case studies?

use information from sources - e.g. person concerned, family, friends

the people may be interviewed or observed whilst engaged in daily life

psychological data produced - e.g. IQ/personality tests, questionnaires

may use the experimental method to test what the target person or group can or can’t do

what is the evaluation for case studies?

provides rich, in-depth data

provides insight for further research - may help to direct or develop research

easy to understand the person/case study due to comprehensive research

time consuming and expensive

can be a loss of objectivity - can be biased due to personal investment in the research

usually cannot be generalised to anyone outside of the individual or group that was studied

what is a population?

the group of individuals the researcher is interested in

what is a sample?

a smaller group taken from the population that the researcher is interested in

what is an opportunity sample?

recruit those who are most convenient or most available

e.g. those walking by on the street, students in the same class

what is the evaluation for an opportunity sample?

easiest method, as the first suitable participants found are used - takes less time than other methods

biased - drawn from a small part of the population, type of people can vary based on different factors, e.g. location, time of day

what is a random sample?

every member of the target population has an equal chance of being selected

methods include the lottery method, a random number table or a random number generator
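
A random number generator version of this can be sketched as below. The participant IDs are invented, `random_sample` is a hypothetical helper name, and the `seed` argument is only there so the draw can be repeated when checking the example.

```python
import random

def random_sample(population, n, seed=None):
    """Draw n distinct members so that each member of the
    population list has an equal chance of selection."""
    rng = random.Random(seed)
    return rng.sample(population, n)

# Hypothetical list of all 20 members of the target population
population = [f"P{i:02d}" for i in range(1, 21)]

sample = random_sample(population, 5, seed=1)
```

The sketch needs the full population list up front, which mirrors the practical limitation of random sampling.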

what is the evaluation for a random sample?

unbiased - all members of the target population have an equal chance of selection

need to have a list of all members of the population and contact those selected, which may take some time

what is a stratified sample?

subgroups (strata) within a population are identified - e.g. gender, age groups

participants are obtained from each of the strata in proportion to their occurrence in the population

selection from the strata is done using a random technique
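
The three steps above can be sketched as follows. The age-group strata and their sizes are invented for illustration, and `stratified_sample` is a hypothetical helper name. It assumes simple proportional allocation with rounding, so for awkward proportions the total may differ slightly from the requested sample size.

```python
import random

def stratified_sample(strata, n, seed=None):
    """strata maps each stratum name to a list of its members.
    Returns a dict of randomly chosen members per stratum."""
    rng = random.Random(seed)
    total = sum(len(members) for members in strata.values())
    chosen = {}
    for name, members in strata.items():
        # Proportional allocation: stratum share of the population times n
        k = round(n * len(members) / total)
        # Random selection within the stratum
        chosen[name] = rng.sample(members, k)
    return chosen

# Hypothetical population of 100 split into three age-group strata
strata = {
    "16-25": [f"A{i}" for i in range(60)],
    "26-40": [f"B{i}" for i in range(30)],
    "41+": [f"C{i}" for i in range(10)],
}

sample = stratified_sample(strata, 10, seed=1)
```

With a 60/30/10 split and a sample of 10, the strata contribute 6, 3 and 1 participants respectively.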

what is the evaluation for a stratified sample?

likely to be more representative than other methods, as there is a proportional and randomly selected representation of subgroups

time consuming to identify subgroups, then randomly select and contact participants

what is a systematic sample?

use a predetermined system to select participants, e.g. selecting every third person from a list

the numerical interval is applied consistently

what is the evaluation for a systematic sample?

unbiased as participants are selected using an objective system

not truly unbiased or random, unless the researcher selects a number using a random method and starts with this person when selecting
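
A minimal sketch of systematic sampling with a randomly chosen starting point, as the evaluation suggests. The list of names is invented and `systematic_sample` is a hypothetical helper name.

```python
import random

def systematic_sample(population, interval, seed=None):
    """Select every `interval`-th member of the list, starting
    from a random position within the first interval."""
    rng = random.Random(seed)
    start = rng.randrange(interval)
    return population[start::interval]

# Hypothetical list of 30 people
names = [f"P{i:02d}" for i in range(1, 31)]

chosen = systematic_sample(names, 3, seed=1)
```

Once the start is fixed, the interval is applied consistently, so the researcher has no further influence over who is chosen.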

what is a volunteer sample?

advertise in a newspaper, online, etc

ask those interested in participating to contact you

what is the evaluation for a volunteer sample?

gives access to a variety of participants which may make sample more representative and less biased

volunteer bias - sample is still biased as participants might have more time or are more motivated, or need the money

what is sampling bias?

all sampling methods are biased or distorted in some way

e.g. opportunity sample only represents the people that were available to the researcher at that time

what is volunteer bias?

the fact that people who volunteer to take part in research are likely to be different to other members of the population and this distorts or biases the data they produce

what are the three types of experimental design?

repeated measures design

independent groups design

matched pairs design

what is the repeated measures design for experiments?

all participants receive all levels of the IV

they participate in all conditions

what is the evaluation for the repeated measures design for experiments?

the order of conditions may affect performance on the tasks - use counterbalancing to address

participants may have guessed the purpose of the experiment for the second condition which may affect behaviour

what is the independent groups design for experiments?

splits participants into two equal sized groups labelled A and B

the results from each group will be compared

what is the evaluation for the independent groups design for experiments?

researchers cannot control effects of participant variables - e.g. differences between groups may be the reason for results

independent groups need a greater number of participants

what is the matched pairs design for experiments?

match up the participants in pairs

then put one half of each pair in group A and the other in group B

this guarantees that the two groups will be similar, meaning any differences found will likely be due to the IV

the characteristics on which the participants are matched should be relevant to the study
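
One way to sketch the matching procedure is shown below, assuming participants are matched on a single numerical characteristic. The scores are invented, `matched_pairs` is a hypothetical helper name, and the random allocation within each pair is an assumption that the card itself does not state.

```python
import random

def matched_pairs(scores, seed=None):
    """scores maps each participant to a score on the matching variable.
    Neighbours in the ranked list form a pair, and one member of each
    pair is randomly allocated to each group."""
    rng = random.Random(seed)
    ranked = sorted(scores, key=scores.get)
    group_a, group_b = [], []
    # With an odd number of participants the last one is left unpaired
    for i in range(0, len(ranked) - 1, 2):
        pair = [ranked[i], ranked[i + 1]]
        rng.shuffle(pair)
        group_a.append(pair[0])
        group_b.append(pair[1])
    return group_a, group_b

# Hypothetical scores on the matching variable for six participants
scores = {"P1": 95, "P2": 110, "P3": 100, "P4": 120, "P5": 98, "P6": 115}

group_a, group_b = matched_pairs(scores, seed=1)
```

Ranking before pairing keeps the members of each pair as close as possible on the matching variable.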

what is the evaluation for the matched pairs design for experiments?

time consuming and difficult to match participants - would need an even larger group of participants to be able to match them

still cannot control all participant variables as they may not be known to the researcher and may still affect the experiment

what is counterbalancing?

1) AB or BA - participants are split into two groups, group 1 complete condition A then condition B, group 2 complete condition B then condition A

2) ABBA - all participants complete each condition twice, in the order A, B, B, A, so each condition is done in both the morning and the afternoon, and these are compared
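
The AB or BA version can be sketched as below. The participant IDs are invented, `counterbalance` is a hypothetical helper name, and random allocation to the two orders is an assumption rather than something the card specifies.

```python
import random

def counterbalance(participants, seed=None):
    """Give half of the participants the order A then B,
    and the other half the order B then A."""
    rng = random.Random(seed)
    shuffled = list(participants)
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    orders = {p: ("A", "B") for p in shuffled[:half]}
    orders.update({p: ("B", "A") for p in shuffled[half:]})
    return orders

# Hypothetical group of eight participants
participants = [f"P{i}" for i in range(1, 9)]

orders = counterbalance(participants, seed=1)
```

Counterbalancing does not remove order effects, but it spreads them evenly across the two conditions.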

what is reliability?

the extent to which a test produces a consistent set of findings every time it is done

for a study to be reliable, we would expect the test to produce the same results each time

what are the two methods of assessing reliability?

test-retest

inter-observer

what is the test-retest method of assessing reliability?

for questionnaires, psychological tests and interviews

involves administering the same test to the same person on different occasions - if it is reliable, results should be the same or very similar

must be sufficient time between test and retest (1-2 weeks) to ensure participants cannot recall their answers, but not so long their attitudes, abilities or opinions might have changed

two sets of scores are correlated - +0.8 indicates a strong, positive correlation, and good reliability
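
The correlation step can be sketched as follows. The card does not name a statistic, so this assumes Pearson's r (a rank-based coefficient such as Spearman's rho may suit ordinal data better). The scores are invented and `pearson_r` is a hypothetical helper written out by hand.

```python
from math import sqrt

def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length score lists."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two equal-length lists with at least 2 scores")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined when one set of scores does not vary")
    return cov / sqrt(var_x * var_y)

# Hypothetical scores for the same five participants on two occasions
test_scores = [12, 15, 9, 20, 17]
retest_scores = [13, 14, 10, 19, 18]

r = pearson_r(test_scores, retest_scores)
is_reliable = r >= 0.8  # threshold taken from the card above
```

The same calculation applies to inter-observer reliability, with the two lists holding each observer's tallies instead of test and retest scores.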

what is the inter-observer method of assessing reliability?

for observations

the extent to which there is an agreement between two or more observers involved in observations of behaviour - observers should carry out observations independently and compare results to ensure there is not subjectivity bias

observations are correlated - +0.8 indicates a strong, positive correlation, and good reliability

similar methods are used for assessing reliability of content analysis (inter-rater), and interviews (inter-interviewer)

how can the reliability of questionnaires and interviews be improved?

make sure questions asked are clear and not ambiguous - if they are they should be rewritten

open ended questions should be changed to closed, fixed choice questions

same interviewer should be used each time, or use interviewers who have sufficient training so they know not to ask leading or ambiguous questions

how can the reliability of observations be improved?

ensure behavioural categories are clearly defined and operationalised, and as objective as possible

categories should not overlap

ensure observers are sufficiently trained and practised in using behavioural categories

how can the reliability of experiments be improved?

procedures should be exactly the same each time they are repeated - standardised

control effects of potential confounding variables and control the research situation as closely as possible