Quality Assurance I

37 Terms

What is Quality Assurance?

QA ensures software meets specified requirements through structured processes. It involves systematic monitoring and improvements throughout the SDLC.

Why are bugs costly?

- 2016: $1.1 trillion losses

- 2022: $2.41 trillion

Bug costs include wasted development time, poor customer experience, and maintenance burdens.

How is software quality defined?

- Conformance to requirements (including timeliness, cost)

- Fitness for use

- Freedom from errors

- Customer satisfaction

Types of software quality attributes?

1. Technical (correctness, reliability, performance, maintainability)

2. User (usability, installability, documentation)

3. Discernability: observable at runtime (usability, performance) or not at runtime (modifiability, reusability)

What are technical quality attributes?

- Correctness: Measures how many defects exist (defect rate).

- Reliability: Measures how often the system fails during operation (failure rate).

- Capability: Percentage of system requirements that are fully implemented.

- Maintainability: Effort and impact required to modify or update the system.

- Performance: Efficiency of software in terms of CPU usage, memory usage, and response time.

User quality attributes?

- Usability: How many users report satisfaction with the interface or experience.

- Installability: How easy it is to install the system on different platforms.

- Documentation: Clarity and completeness of user guides, help resources.

- Availability: The degree to which the system is operational and accessible when required.

McCall’s Quality Model (1977)

A model that emphasizes factors such as correctness, reliability, efficiency, and maintainability. It is widely used for evaluating software quality.

3 QA Principles

1. Know what you're doing: Follow structured processes like SDLC, Agile, or DevOps to reduce ad hoc decisions.

2. Know what you should be doing: Focus on use cases and continuous user feedback to align with expectations.

3. Know how to measure: Employ formal methods, testing strategies, inspections, and metrics to quantify quality and detect issues.

QA Methods

1. Formal Methods: Use mathematical models (e.g., formal specifications) to verify correctness; essential for safety-critical systems but costly and time-consuming.

2. Testing: Execute software with test cases to identify faults and measure conformance to requirements.

3. Inspections: Manual examination of documents or code to find errors early (e.g., code reviews, walkthroughs).

4. Metrics: Quantitative measures like defect density, code coverage, MTBF, and customer-reported bugs.
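
Two of the metrics named in item 4 can be computed directly from project data. The sketch below assumes defect density is measured as defects per thousand lines of code (KLOC) and MTBF as total operating time divided by the number of failures; the function names and sample figures are hypothetical.

```python
def defect_density(defects: int, lines_of_code: int) -> float:
    """Defects per thousand lines of code (KLOC)."""
    if lines_of_code <= 0:
        raise ValueError("lines_of_code must be positive")
    return defects / (lines_of_code / 1000)


def mtbf(operating_hours: float, failures: int) -> float:
    """Mean time between failures: operating time divided by failure count."""
    if failures <= 0:
        raise ValueError("failures must be positive")
    return operating_hours / failures


# Hypothetical figures, for illustration only.
print(defect_density(defects=30, lines_of_code=15_000))  # 2.0
print(mtbf(operating_hours=1_000, failures=4))           # 250.0
```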

What is Software Testing?

Process of evaluating a system to verify it satisfies specified requirements.

Cannot prove absence of bugs, only their presence (Dijkstra).

Validation vs. Verification

- Validation: building the right product

- Verification: building the product right

Levels of Specification

1. Functional Specification: Describes what the software should do, i.e., its required behavior and constraints.

2. Design Specification: Outlines the system architecture and how components interact to meet functionality.

3. Detailed Design Specification: Describes internal logic and implementation of individual components/code modules.

Difference between error, fault, and failure

- Error: human mistake, for example one made while writing code

- Fault (bug): manifestation of error

- Failure: incorrect external behavior
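
A small illustration may help separate the three terms. It follows the common reading in which the error is the programmer's mistake, the fault is the defect that mistake leaves in the code, and the failure is the wrong behavior seen from outside. The `average` function is hypothetical.

```python
def average(values):
    # Fault: the divisor should be len(values). The programmer's error
    # (assuming every list has three items) left this defect in the code.
    return sum(values) / 3


# The fault is executed, but no failure is visible for a three-item list.
print(average([2, 4, 6]))  # 4.0

# With a two-item list the same fault produces a failure: wrong output.
print(average([2, 4]))     # 2.0, but the correct mean is 3.0
```

The first call shows that a fault can stay hidden until an input triggers it.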

Testing vs Debugging

• Debugging is the process of analyzing and locating bugs when the software does not behave as expected.

• Testing plays the much more comprehensive role of methodically searching for and exposing bugs.

• Debugging supports testing but cannot replace it.

Causes of Software Errors

• Faulty definition of requirements

• Client-developer communication failures (at early stage of the development process)

• Deliberate deviations from software requirements

• Logical design errors

• Coding errors

• Non-compliance with documentation and coding instructions

• Shortcomings of the testing process

• Procedural errors

• Documentation errors

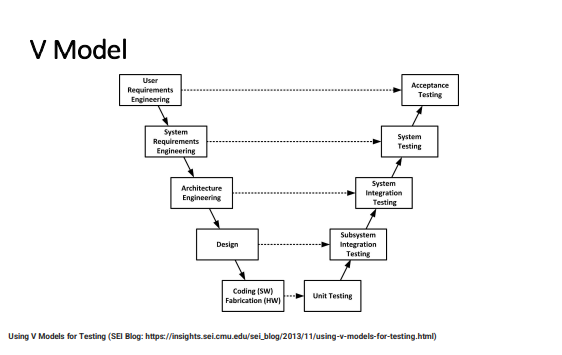

V-Model

Types of Testing

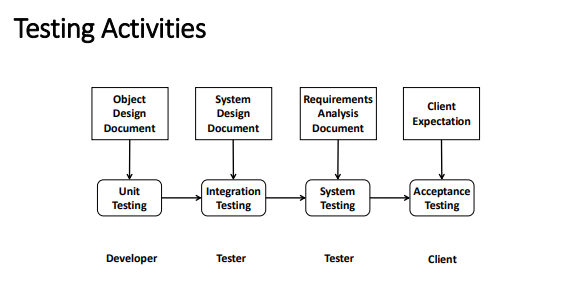

Unit Testing

• What: Individual component (class or subsystem)

• Who: Developers

• Goal: Confirm that the component or subsystem is correctly coded and carries out the intended functionality
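
As a sketch of what a developer-written unit test can look like, the example below uses Python's standard `unittest` module. The component `apply_discount` and its rules are hypothetical and stand in for any single class or function.

```python
import unittest


def apply_discount(price: float, percent: float) -> float:
    """Hypothetical component under test."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return price * (1 - percent / 100)


class ApplyDiscountTest(unittest.TestCase):
    def test_applies_percentage(self):
        self.assertAlmostEqual(apply_discount(200.0, 25), 150.0)

    def test_rejects_invalid_percentage(self):
        with self.assertRaises(ValueError):
            apply_discount(200.0, 120)


# Run the two tests in isolation from the rest of the system.
suite = unittest.defaultTestLoader.loadTestsFromTestCase(ApplyDiscountTest)
result = unittest.TextTestRunner().run(suite)
```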

Integration Testing

• What: Groups of subsystems and eventually the entire system

• Who: Test analysts/engineers

• Goal: Test the interfaces among the subsystems
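
To make "testing the interfaces" concrete, the sketch below wires two hypothetical subsystems together and checks that the second accepts exactly what the first produces. Both functions and the record layout are assumptions made for illustration.

```python
def parse_order(line: str) -> dict:
    """Subsystem A (hypothetical): turns 'item,quantity,unit_price' into a record."""
    item, quantity, unit_price = line.split(",")
    return {"item": item, "quantity": int(quantity), "unit_price": float(unit_price)}


def order_total(order: dict) -> float:
    """Subsystem B (hypothetical): consumes the record produced by subsystem A."""
    return order["quantity"] * order["unit_price"]


def check_parser_output_feeds_total():
    # Exercises the interface: B must accept the keys and types that A emits.
    assert order_total(parse_order("widget,3,2.50")) == 7.5


check_parser_output_feeds_total()
```

Each function could pass its own unit tests and this check could still fail, for example if A emitted the quantity as a string.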

System Testing

• What: The entire system

• Who: Test analysts/engineers

• Goal: Determine if the system meets the requirements (functional and nonfunctional)

Acceptance Testing

• What: Evaluates the system delivered by developers

• Who: Client

• Goal: Demonstrate that the system meets the requirements and is ready to use

Why do we test?

Build confidence, prove correctness, show requirement conformance, find faults, reduce cost, assess quality

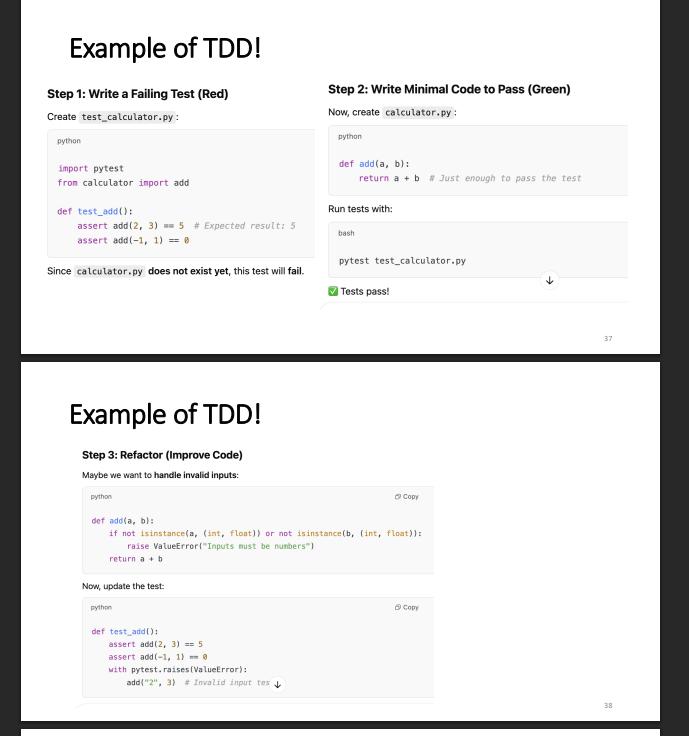

When should you write a test?

- Traditionally: after code

- In XP (TDD): before code (add test → fail → write code → test passes → refactor)
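
The test-first cycle can be sketched in a few lines. The example is illustrative only: `is_leap_year` and the check function are hypothetical, and the stub exists solely to show the initial failing run.

```python
def check_is_leap_year(is_leap_year):
    """The test, written before the implementation exists."""
    assert is_leap_year(2024)
    assert not is_leap_year(2023)
    assert not is_leap_year(1900)
    assert is_leap_year(2000)


# Add test -> fail: against an unimplemented stub the test cannot pass.
def stub(year):
    raise NotImplementedError


try:
    check_is_leap_year(stub)
    first_run = "pass"
except NotImplementedError:
    first_run = "fail"


# Write code: just enough to satisfy the test.
def is_leap_year(year: int) -> bool:
    return year % 4 == 0 and (year % 100 != 0 or year % 400 == 0)


# Test passes: refactoring can now proceed with the test as a safety net.
check_is_leap_year(is_leap_year)
second_run = "pass"

print(first_run, second_run)  # fail pass
```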

What to test?

Can't test everything (exhaustive testing is impractical). Prioritize:

- most severe, visible, likely faults

- end-user priorities

- complex/critical areas
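
A rough calculation shows why exhaustive testing is impractical even for a tiny interface. The figures are assumptions chosen for illustration: a function taking two 32-bit integers, and a machine running one billion tests per second.

```python
inputs = 2 ** 64                      # every pair of two 32-bit integers
tests_per_second = 1_000_000_000      # assumed, optimistic throughput
seconds_per_year = 365 * 24 * 60 * 60

years = inputs / (tests_per_second * seconds_per_year)
print(round(years))  # 585
```

Under these assumptions a complete run would take several centuries, which is why test cases have to be selected by priority.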

Test Evolution

Tests must evolve with software changes. Automation aids regression testing.

Black Box vs White Box vs Grey Box

- Black Box Testing: No access to the codebase. Tests rely solely on inputs and expected outputs based on requirements or specifications. Useful early in development or when source code is not available.

- White Box Testing (Glass Box): Full access to internal logic and structure of the code. Tests are designed based on control flow, code paths, loops, and internal structures.

- Grey Box Testing: Partial knowledge of internals. Combines black and white box techniques. Effective for detecting structural flaws or improper usage between modules.

- Goal: Ensure thorough testing from both internal and external perspectives.
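
As a sketch of white box test design, the cases below are chosen by reading the code and covering each path through it, not by reading a specification. The `shipping_fee` function and its fee values are hypothetical.

```python
def shipping_fee(weight_kg: float, express: bool) -> float:
    """Hypothetical function with two decisions, giving four paths."""
    fee = 5.0 if weight_kg <= 2 else 9.0
    if express:
        fee += 4.0
    return fee


# White box: one test case per path through the two decisions.
path_cases = [
    ((1.0, False), 5.0),   # light, standard
    ((1.0, True), 9.0),    # light, express
    ((3.0, False), 9.0),   # heavy, standard
    ((3.0, True), 13.0),   # heavy, express
]

for args, expected in path_cases:
    assert shipping_fee(*args) == expected
```

A black box tester would derive cases for the same function from its documented fee rules without seeing these branches.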

What is Black Box Testing?

A testing method where the tester does not have access to the internal code or structure. Focus is solely on inputs and expected outputs based on specifications.

When is Black Box Testing Used?

Early in development, even before code is written. Especially useful for validation against requirements.

Black Box Viewpoint

Treats software as a "black box" with only input/output visibility. No code knowledge needed. Focus is on what the system does, not how it does it.

Advantages of Black Box Methods

- Test cases can be created in parallel with software development

- Independent of implementation

- Aligns well with user perspective

- Can identify missing functionality

Information Sources for Black Box Tests

- Requirements

- Specifications (formal/informal)

- Design documents (optional)

Types of Black Box Testing

1. Input Coverage Tests: Analyze input domains

2. Output Coverage Tests: Focus on outputs only

3. Functionality Coverage Tests: Based on behavior/actions regardless of I/O

Common Black Box Techniques

- Equivalence Partitioning

- Boundary Value Analysis

- State Transition Testing

- Cause-Effect Graphing

- Syntax Testing

- Random Testing

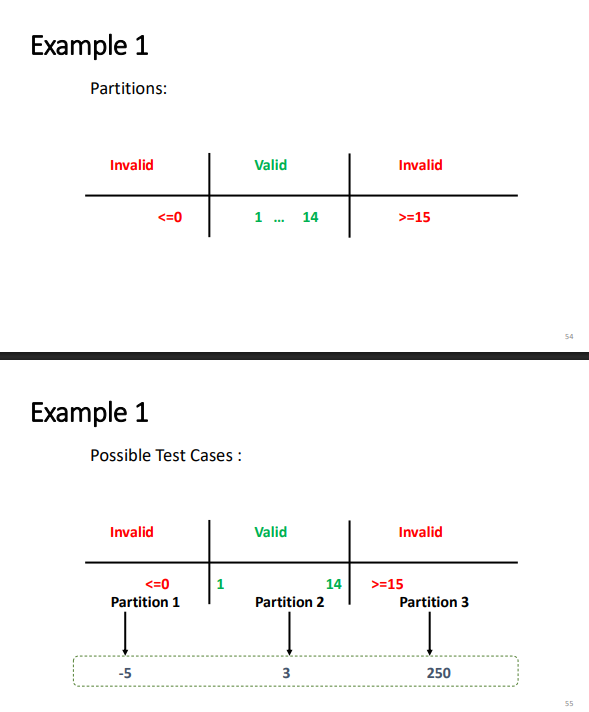

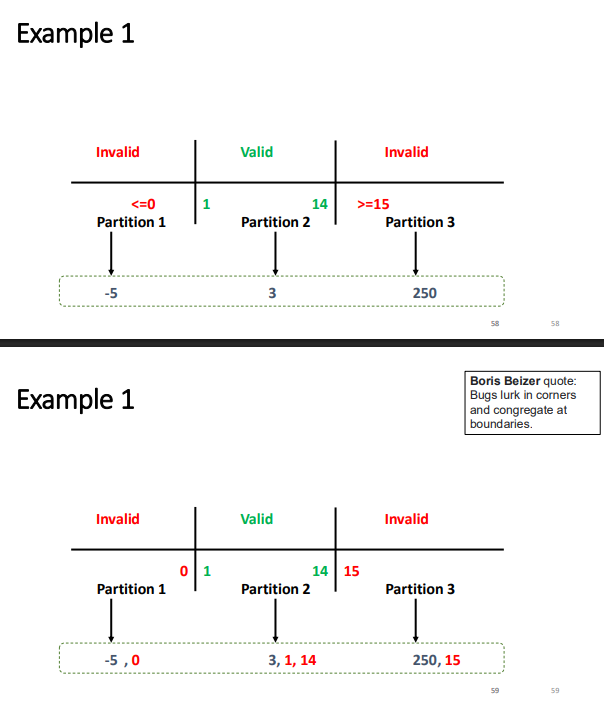

Equivalence Partitioning

Divide inputs into logical groups (partitions) that should be treated the same. Choose one representative from each group for testing.
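
A minimal sketch of the idea, assuming a hypothetical `classify_age` function whose specification defines three valid partitions (0 to 17, 18 to 64, 65 to 120) and two invalid ones (below 0, above 120). One representative is tested per partition.

```python
def classify_age(age: int) -> str:
    """Hypothetical function under test."""
    if age < 0 or age > 120:
        raise ValueError("age out of range")
    if age < 18:
        return "minor"
    if age < 65:
        return "adult"
    return "senior"


# One representative value per valid partition.
representatives = {10: "minor", 40: "adult", 80: "senior"}
for age, expected in representatives.items():
    assert classify_age(age) == expected

# One representative value per invalid partition.
for invalid in (-5, 200):
    try:
        classify_age(invalid)
    except ValueError:
        pass
    else:
        raise AssertionError(f"{invalid} should be rejected")
```

Five test cases cover five partitions; any other value from the same partition is expected to behave the same way.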

Why Equivalence Partitioning Works

- Efficient

- Minimizes test cases

- Confirms program can handle each input category

- Easy to identify completion (1 case per group)

Boundary Value Analysis (BVA)

Focuses on values at the edge of partitions (boundaries), where errors often occur.
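
The sketch below applies this to a hypothetical `classify_age` function with partitions 0 to 17, 18 to 64, and 65 to 120. Instead of mid-range values, the tests sit on each boundary and one step outside the valid range.

```python
def classify_age(age: int) -> str:
    """Hypothetical function under test."""
    if age < 0 or age > 120:
        raise ValueError("age out of range")
    if age < 18:
        return "minor"
    if age < 65:
        return "adult"
    return "senior"


# Values on each side of every internal boundary, plus the range limits.
boundary_cases = {
    0: "minor", 17: "minor",
    18: "adult", 64: "adult",
    65: "senior", 120: "senior",
}
for age, expected in boundary_cases.items():
    assert classify_age(age) == expected

# Values just outside the valid range must be rejected.
for invalid in (-1, 121):
    try:
        classify_age(invalid)
    except ValueError:
        pass
    else:
        raise AssertionError(f"{invalid} should be rejected")
```

An off-by-one mistake such as writing `age <= 18` would pass typical mid-range tests but fail the case for 18 here.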

Advantages of BVA

- Captures common edge-case bugs

- Complements equivalence partitioning

- Simple and systematic