Software Testing CA2

236 Terms

External Specifications

Define software requirements and expected behaviors.

Agile Development

Uses user stories for requirements instead of detailed specs.

Baseline Document

Describes expected system behavior for comparison.

Testing Oracle

Source to determine expected results for tests.

Expected Results

Defined outcomes before executing tests.

Exit Criteria

Triggers to confirm testing completion.

Coverage Achieved

Percentage of requirements exercised by tests; a target such as 80% is often set as an exit criterion.
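
A minimal sketch of how a requirements coverage figure could be computed. The requirement IDs and the function name `coverage_percent` are hypothetical, chosen only for illustration.

```python
def coverage_percent(tested: set, requirements: set) -> float:
    """Percentage of requirements exercised by at least one test."""
    if not requirements:
        raise ValueError("no requirements defined")
    covered = tested & requirements  # ignore IDs that are not real requirements
    return 100.0 * len(covered) / len(requirements)


# Hypothetical requirement IDs: five defined, four tested.
requirements = {"R1", "R2", "R3", "R4", "R5"}
tested = {"R1", "R2", "R3", "R4"}
print(coverage_percent(tested, requirements))  # 80.0
```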

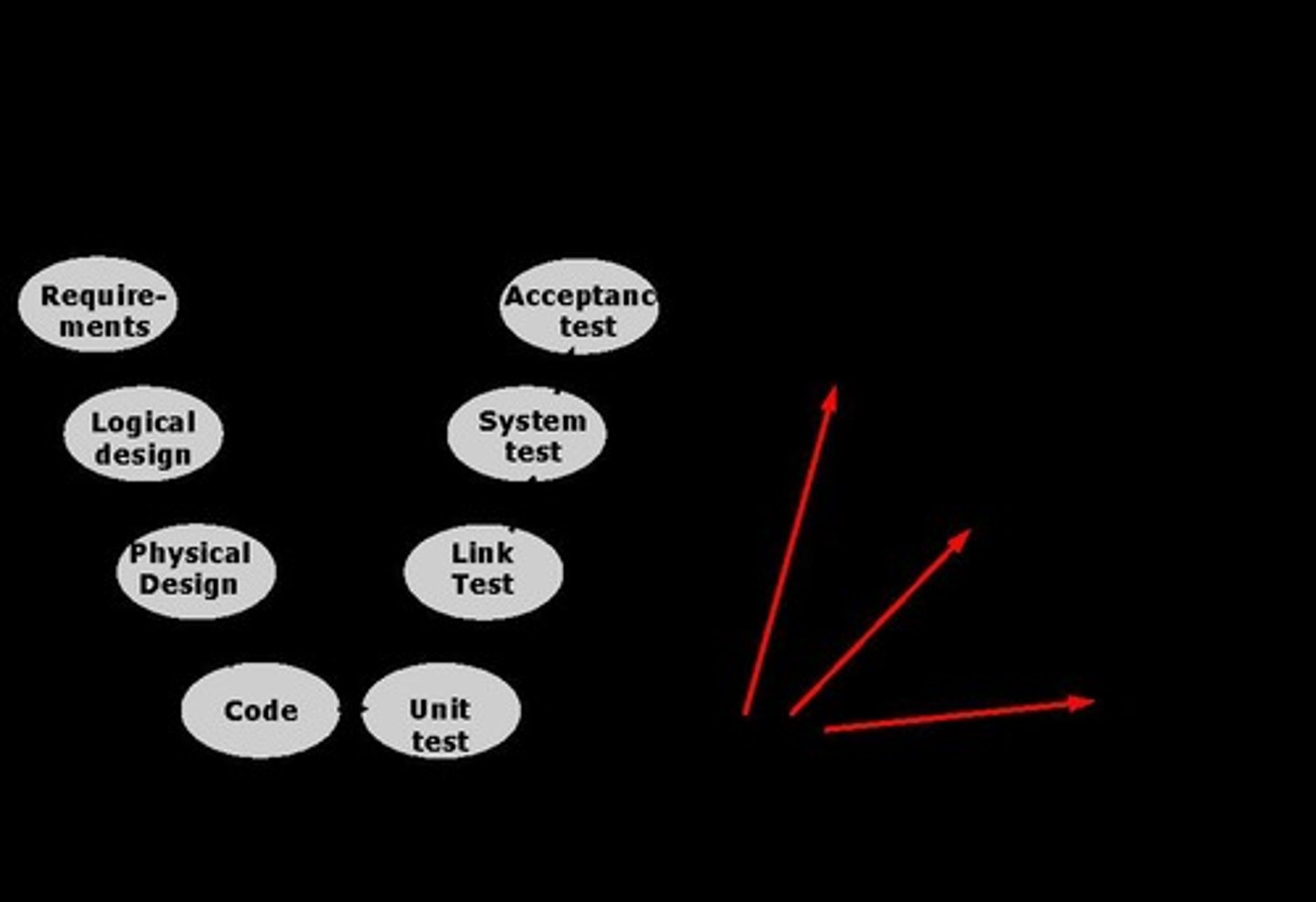

Unit Testing

Testing individual components for correctness.

Integration Testing

Testing combined components for interaction issues.

System Testing

Testing the complete system for compliance.

Test Planning

Process of defining testing strategy and scope.

Test Case Generation

Creating specific scenarios to validate requirements.

User Stories

Informal descriptions of software features from the user's perspective.

Refactoring

Improving code without changing its external behavior.
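
A small sketch of what "without changing its external behavior" means in practice. Both functions are hypothetical; the point is that the same checks pass before and after the rewrite.

```python
def total_before(prices):
    # Original version: manual index loop.
    total = 0
    for i in range(len(prices)):
        total = total + prices[i]
    return total


def total_after(prices):
    # Refactored version: same inputs, same outputs, simpler code.
    return sum(prices)


# The same samples must give identical results for both versions.
for sample in ([], [5], [1, 2, 3]):
    assert total_before(sample) == total_after(sample)
```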

Component Testing

Testing individual parts of a system in isolation.

Testers' Role

Identify tests needed and compare results with requirements.

Requirements Comparison

Assessing actual results against defined specifications.

Plausible Result

Erroneous result perceived as correct due to bias.

Critical Business Scenarios

Key functionalities that must be tested thoroughly.

Outstanding Incidents

Unresolved issues that may affect testing outcomes.

Test

Controlled exercise with defined inputs and outputs.

Expected Results

Derived from the baseline; compared with actual results.

Actual Result

Outcome obtained from executing a test.
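
As an illustration, the expected result is fixed before the test runs and then compared with the actual result. The function `apply_discount` and the 10% rule are assumptions standing in for a real baseline.

```python
def apply_discount(price_cents: int, percent: int) -> int:
    """Hypothetical unit under test: price after a whole-number percent discount."""
    return price_cents * (100 - percent) // 100


# Expected result, written down from the (assumed) specification before execution.
expected = 9000

# Actual result, obtained by executing the test.
actual = apply_discount(10000, 10)

verdict = "pass" if actual == expected else "fail"
print(verdict)  # pass
```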

Test Process

Main activities include planning, execution, and closure.

Test Planning

Determines strategy implementation and test scope.

Test Control

Monitors testing progress against the plan and takes corrective action when needed.

Test Analysis

Derives test conditions from baseline documents.

Test Design

Transforms test conditions into test cases and test data.

Test Implementation

Sets up the test environment and prepares test scripts for execution.

Test Execution

Runs tests and records actual results.

Incident Reports

Documents test failures for analysis and action.

Regression Testing

Re-testing to ensure previous functionality remains intact.

Exit Criteria

Conditions to assess completion of testing activities.
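
One way to picture exit criteria is as a single check that combines several conditions. The sketch below assumes three of them (coverage achieved, no outstanding critical incidents, all critical business scenarios passed); the function name, parameters, and the 80% default are illustrative only.

```python
def exit_criteria_met(coverage_pct: float,
                      open_critical_incidents: int,
                      scenarios_passed: int,
                      scenarios_total: int,
                      coverage_target: float = 80.0) -> bool:
    """Return True only when every assumed exit condition holds."""
    return (
        coverage_pct >= coverage_target
        and open_critical_incidents == 0
        and scenarios_passed == scenarios_total
    )


print(exit_criteria_met(85.0, 0, 12, 12))  # True
print(exit_criteria_met(85.0, 2, 12, 12))  # False
```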

Test Closure Activities

Final report and review after testing phase.

Test Inventory

List of features and test cases to be executed.

Test Case Prioritization

Ranking test cases based on importance or risk.
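
A sketch of risk-based ranking. It assumes risk is scored as likelihood times impact on 1 to 5 scales, which is a common scheme but not one stated in these notes; the test case names are hypothetical.

```python
# (test case id, likelihood of failure, impact of failure), both on an assumed 1-5 scale
cases = [
    ("TC-login", 3, 5),
    ("TC-report", 2, 2),
    ("TC-payment", 4, 5),
    ("TC-help-page", 1, 1),
]


def risk_score(case):
    _, likelihood, impact = case
    return likelihood * impact


# Highest risk first, so the most important tests run earliest.
ranked = sorted(cases, key=risk_score, reverse=True)
print([case_id for case_id, _, _ in ranked])
# ['TC-payment', 'TC-login', 'TC-report', 'TC-help-page']
```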

Testware

Artifacts produced and used during testing, such as test plans, cases, scripts, data, and results.

Test Coverage

Extent to which testing addresses requirements.

Baseline Documents

Original documents used as reference for testing.

Pre-flight Checks

Initial checks before executing test cases.

Test Scripts

Detailed instructions for executing test cases.

Test Data

Specific data used during test execution.

Corrective Actions

Steps taken to address identified issues.

Post Implementation Reviews

Evaluations to learn from testing outcomes.

Defect Presence

Testing can show that defects are present, but cannot prove their absence.

Exhaustive Testing

Testing all paths and inputs is impractical.

Effective Testing

Select tests that efficiently find faults.

Early Testing

Start testing early in the development cycle.

Defect Clustering

A small number of modules contain most pre-release defects.

Pesticide Paradox

Repeated tests stop finding new defects.

Context-Dependent Testing

Testing varies by software context and risk.

Absence-of-Errors Fallacy

Finding and fixing defects does not help if the system fails to meet user needs.

Testing Goal

Find defects before customers discover them.

Test Understanding

Know the purpose behind each test.

Test Corrections

Fixes may introduce unintended side effects.

Defect Convoys

Defects often occur in clusters.

Defect Prevention

Testing includes preventing defects, not just finding them.

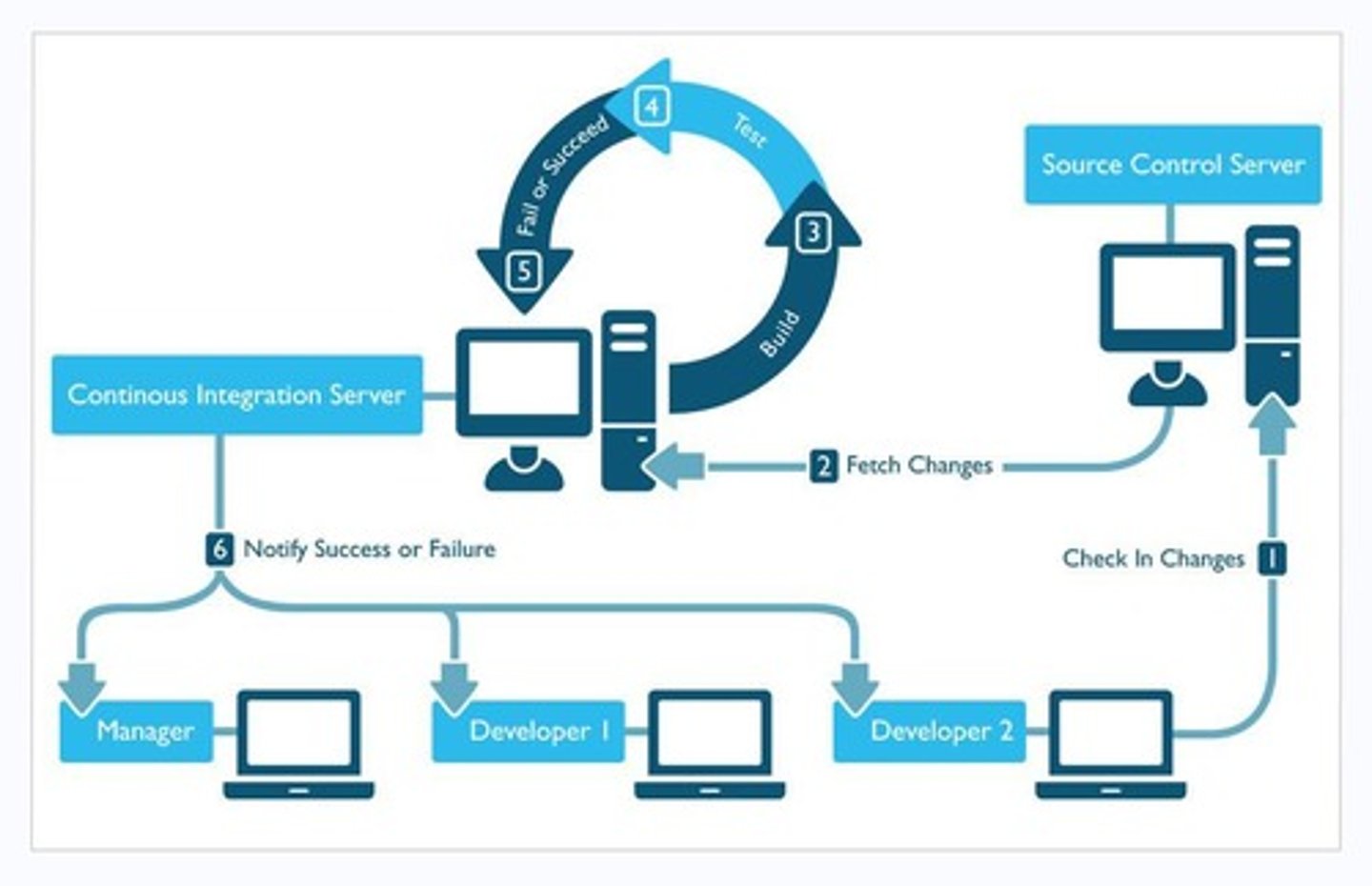

Automation in Testing

Well-planned automation enhances testing benefits.

Team Commitment

Successful testing requires dedicated, skilled teams.

Risk Focus

Prioritize testing based on risk assessment.

Testing Objectives

Focus testing on defined, clear objectives.

Operational Failures

Modules with defects often show operational issues.

Testing Efficiency

Balance effectiveness and efficiency in testing.

Faulty Requirements

Defects arise from specifications not meeting user needs.

Test Design Techniques

Methods to identify test conditions and cases.

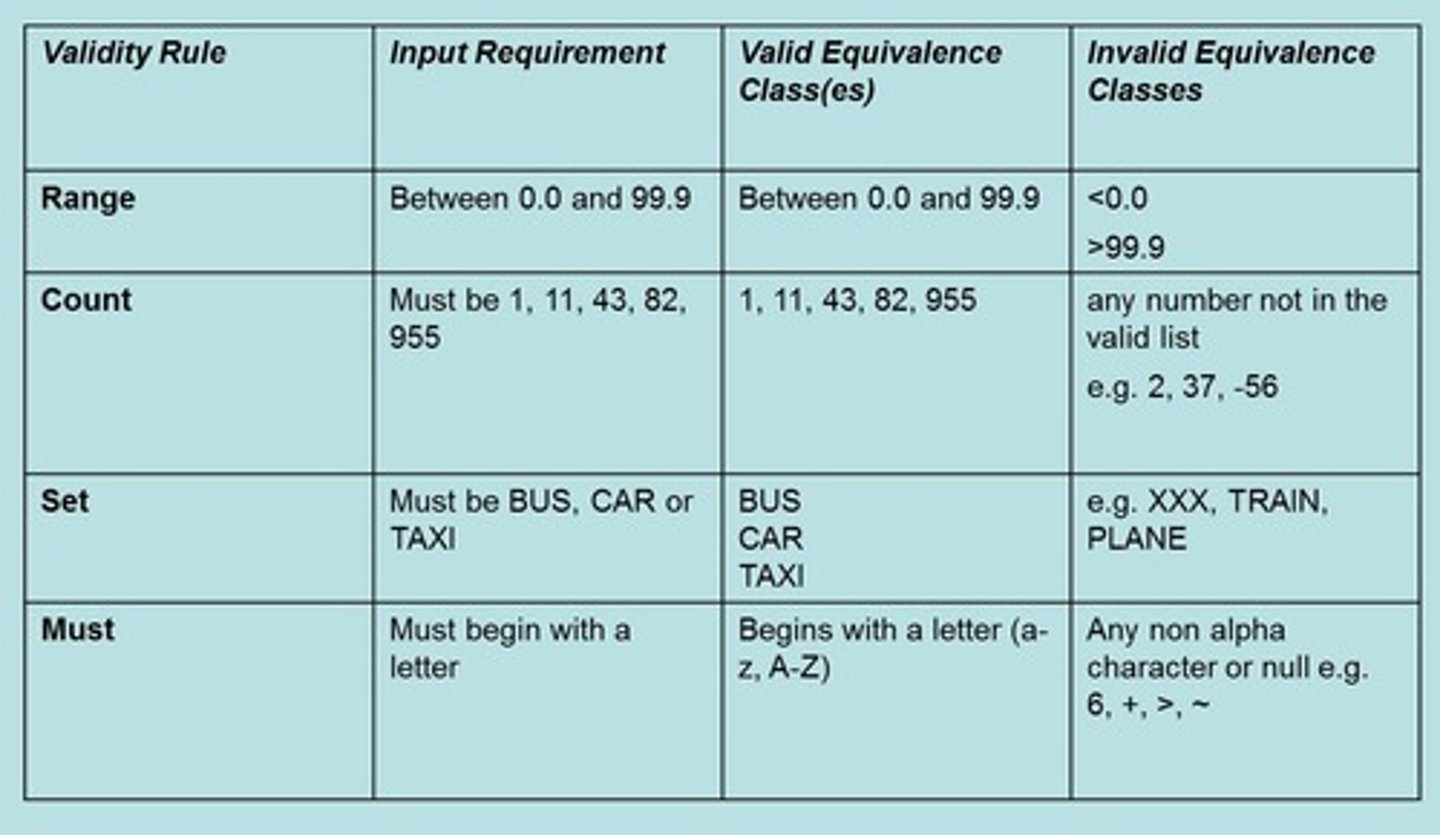

Equivalence Class Partitioning

Dividing inputs into groups treated equivalently.
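
A minimal sketch of partitioning, assuming a hypothetical rule that an age field accepts whole numbers from 18 to 65 inclusive. One representative value is tested per partition, on the assumption that every value in a partition is handled the same way.

```python
LOW, HIGH = 18, 65  # assumed valid range, for illustration only


def partition(age: int) -> str:
    """Name the equivalence partition an input falls into."""
    if age < LOW:
        return "invalid_below"
    if age > HIGH:
        return "invalid_above"
    return "valid"


# One representative per partition instead of every possible age.
representatives = {"invalid_below": 10, "valid": 40, "invalid_above": 70}
for name, value in representatives.items():
    assert partition(value) == name
```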

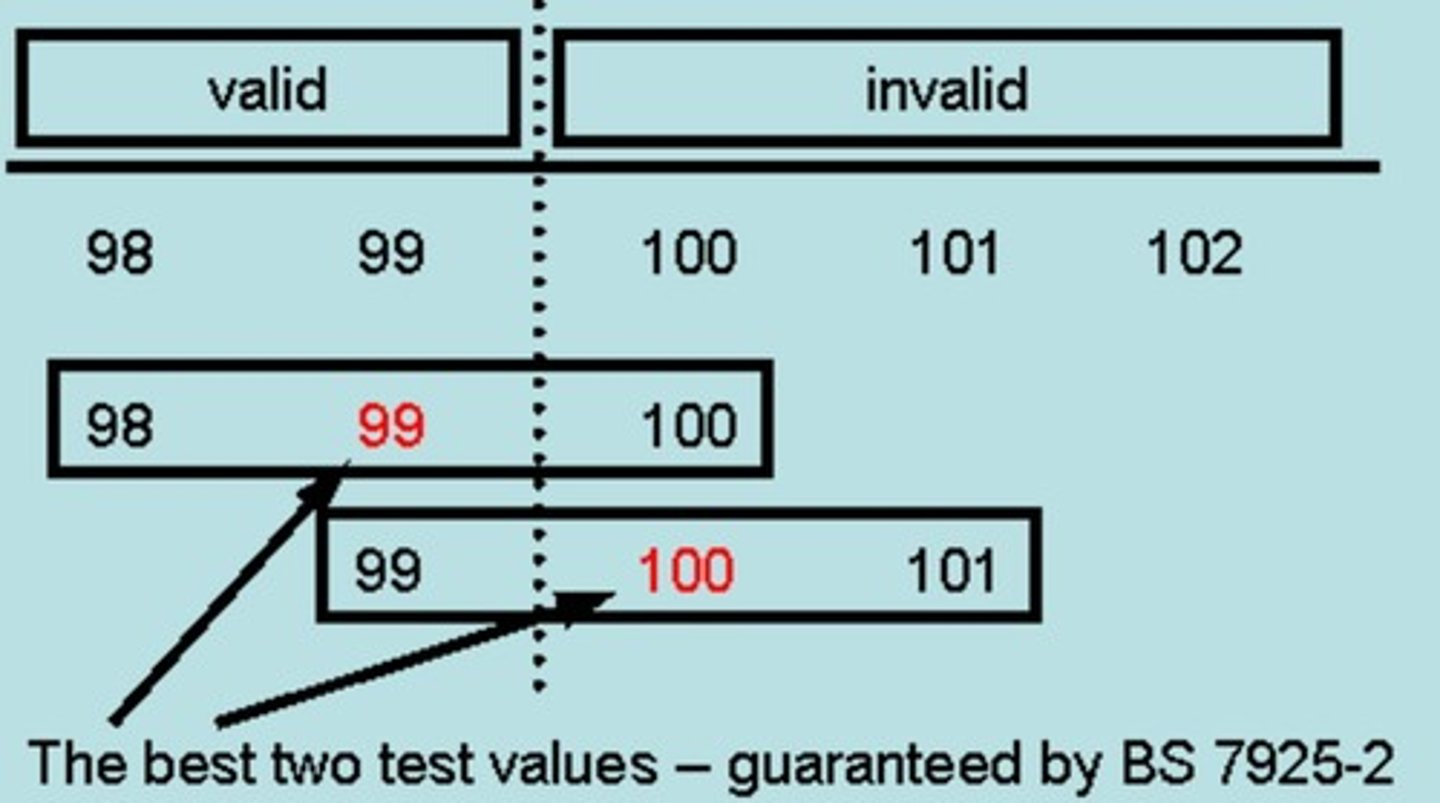

Boundary Value Analysis

Testing at the edges of equivalence partitions.
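
A short sketch of picking boundary values, assuming a hypothetical valid partition of 18 to 65 inclusive. The helper names are illustrative; the values chosen are those just below, on, and just above each edge.

```python
def boundary_values(low: int, high: int) -> list:
    """Values just below, on, and just above each boundary of [low, high]."""
    return [low - 1, low, low + 1, high - 1, high, high + 1]


def accepts(age: int, low: int = 18, high: int = 65) -> bool:
    """Hypothetical unit under test: is the age inside the valid partition?"""
    return low <= age <= high


for value in boundary_values(18, 65):
    print(value, accepts(value))
# 17 False
# 18 True
# 19 True
# 64 True
# 65 True
# 66 False
```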

Specification Based Techniques

Testing methods based on software specifications.

Exhaustive Testing

Testing all possible inputs and paths; impractical for anything but trivial software.

Valid Partitions

Input ranges expected to be accepted by the system.

Invalid Partitions

Input ranges the system is expected to reject.

Test Case Strategy

Method to derive test cases from equivalence classes.

Equivalence Class

Group of inputs treated the same by the system.

Test Coverage

Extent to which test cases cover requirements.

Unique Identifier

Label assigned to each equivalence class.

Error Message Handling

System response to invalid input partitions.

Precision in Testing

Importance of exact values in specific domains.

Boundary Cases

Values just above, below, and on the boundary.

BS 7925-2

British Standard for software component testing; describes test design techniques including boundary value analysis.

Equivalence Partitioning Example

Identifying valid and invalid input ranges.

Default Condition

Assumed value when no input is supplied.

Empty Condition

Value exists but contains no content.

Blank Condition

Value exists, but its content is only whitespace.

Null Condition

Value does not exist or is unallocated.

Zero Condition

Numeric value of zero in input.

None Condition

No selection made from a list.
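
The null, empty, blank, and zero conditions are easy to confuse, so here is a sketch that tells them apart for a text input. The function `classify` is hypothetical, and the default and none conditions are left out because they depend on the form or list being tested.

```python
from typing import Optional


def classify(value: Optional[str]) -> str:
    """Classify a raw text input into one of the special conditions."""
    if value is None:
        return "null"    # value does not exist
    if value == "":
        return "empty"   # value exists but has no content
    if value.strip() == "":
        return "blank"   # content is only whitespace
    try:
        if float(value) == 0:
            return "zero"  # numeric value of zero
    except ValueError:
        pass             # not a number at all
    return "other"


for sample in (None, "", "   ", "0", "abc"):
    print(repr(sample), classify(sample))
```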

Fault Detection

Identifying errors through strategic test cases.

Subjective Boundary Selection

Choosing boundaries can vary based on interpretation.

Test Case Annotation

Documenting equivalence class identifiers for test cases.
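
A sketch of annotated test cases, assuming three hypothetical equivalence classes labelled EC1 to EC3. Recording the identifiers on each case makes it possible to check that no class has been missed.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AnnotatedCase:
    case_id: str
    input_value: int
    covers: tuple      # equivalence class identifiers exercised by this case
    expected: str


# Hypothetical classes: EC1 = below range, EC2 = in range, EC3 = above range.
ALL_CLASSES = {"EC1", "EC2", "EC3"}

CASES = [
    AnnotatedCase("TC1", 10, ("EC1",), "rejected"),
    AnnotatedCase("TC2", 40, ("EC2",), "accepted"),
]


def uncovered(cases, classes):
    """Equivalence classes that no test case is annotated with."""
    covered = {ec for case in cases for ec in case.covers}
    return classes - covered


print(uncovered(CASES, ALL_CLASSES))  # {'EC3'}
```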

Brute-force Testing

Testing every combination of inputs; impractical for anything but trivial software.
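
A rough calculation shows why this is impractical. The sketch assumes a hypothetical form with three independent 32-bit integer fields and an execution rate of one million tests per second; both figures are illustrative.

```python
import math


def combination_count(domain_sizes):
    """Number of input combinations for independent fields."""
    return math.prod(domain_sizes)


# Three independent 32-bit integer fields (assumed for illustration).
total = combination_count([2**32] * 3)

tests_per_second = 1_000_000  # assumed execution rate
years = total / tests_per_second / (60 * 60 * 24 * 365)

print(total)            # 79228162514264337593543950336
print(years > 1e15)     # True
```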

Software Process

Methodology for producing software products.

Software life-cycle model

Framework for managing software development phases.

Software Development Life Cycle (SDLC)

Phases involved in software development.

Build-and-fix model

Ad-hoc approach without specifications or design.

Waterfall model

Sequential phases, each signed off before the next begins.

Iterative development models

Repeated cycles of requirements, design, and testing.

Incremental Model

Gradually adds functionality to a system.

V-Model

Life-cycle model that pairs each development phase with a corresponding test level (verification and validation).

Continuous Software Engineering (CSE)

Integration of continuous processes in software development.

Regression testing

Testing existing functionality after changes.