Lecture 16: the experimental research strategy (part 2)

42 Terms

why are confounding variables a threat to internal validity?

we don’t know if it’s the IV or the confounding variable that caused the change in the DV

this offers alternative explanations for your results (introduces ambiguity)

how can you avoid confounding variables?

by examining extraneous variables for possible influence on the DV and the IV (make sure that an extraneous variable doesn’t become a confounding variable)

what are the categories of extraneous variables? (3)

environmental: a different environment for each participant (lighting, time of day)

participant: individual differences (age, gender, personality)

time-related: if tested over time, some things can change (history, maturation, instrumentation)

how can you control extraneous variables so that they don’t become confounding variables? (5)

remove the variable

hold the variable constant across conditions

use a placebo control

match the variable across conditions (balancing or counterbalancing)

randomize the variable

*singular is used, but there could be multiple extraneous variables

true or false: we can eliminate all confounding variables

false: you can remove some confounds, but not all of them

true or false: holding a confounding variable constant works for all types of extraneous variables (environmental, participant, time-related)

false: it only works for environmental variables, but not for participant variables

how can you hold a variable constant? (2)

by standardizing the environment and procedures (works only for environment extraneous variables)

standardize the confound to a certain range (ex: age)

what are the problems when you hold a variable constant? (2)

can become unreasonable (ex: we want 18 years old with an IQ of 110. good luck)

trade-off between standardizing variables and external validity: cannot generalize beyond the sample

when do we use a placebo control group?

when the experimental method itself can become a confounding variable

ex: you want to test a new COVID vaccine, but some people fear needles (confound). to control for this, you give the actual vaccine to the treatment group and a saline injection to the placebo group. if there is a difference between the two groups, then you know that it's caused by the COVID vaccine and not by the fear of needles

when would a placebo control group not work?

if the placebo and treatment groups are too different from each other

how can you match variables across conditions?

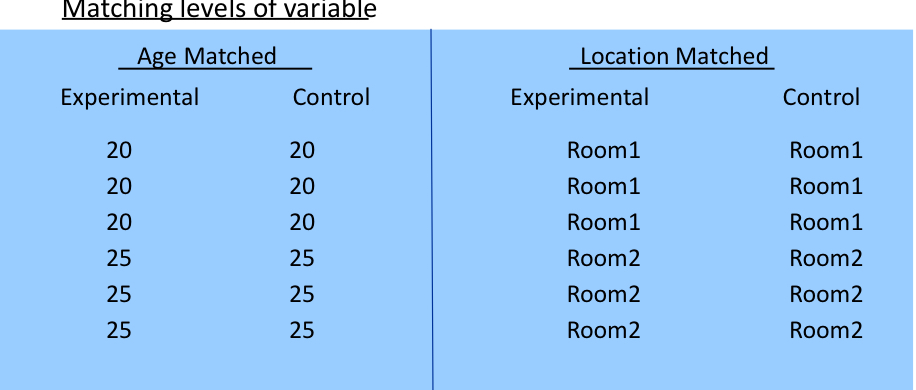

by balancing: match the levels across conditions
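
A minimal Python sketch of balancing, under stated assumptions: each participant is a hypothetical `(id, gender)` tuple, and the function name `balanced_assign` and its parameters are illustrative, not from the lecture. Within each level of the extraneous variable, participants are shuffled and dealt out to the conditions in turn, so each condition receives about the same number from every level.

```python
import random
from collections import defaultdict

def balanced_assign(participants, level_of, conditions, seed=None):
    """Assign participants so each level of an extraneous variable
    is spread evenly across the conditions."""
    rng = random.Random(seed)
    # Group participants by their level of the extraneous variable.
    by_level = defaultdict(list)
    for p in participants:
        by_level[level_of(p)].append(p)
    assignment = {}
    for members in by_level.values():
        rng.shuffle(members)  # random order within a level
        for i, p in enumerate(members):
            # Deal participants out to the conditions in turn.
            assignment[p] = conditions[i % len(conditions)]
    return assignment

# Hypothetical sample: 4 women and 4 men, two conditions.
people = [(i, "woman") for i in range(4)] + [(i, "man") for i in range(4, 8)]
groups = balanced_assign(people, lambda p: p[1], ["experimental", "control"], seed=3)
```

With an even number of participants per level, each condition ends up with the same number of women and men; with odd numbers, the counts can differ by one.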

when do we try to balance?

when we can't remove the confounding variable or hold it constant (because counterbalancing can still be complicated)

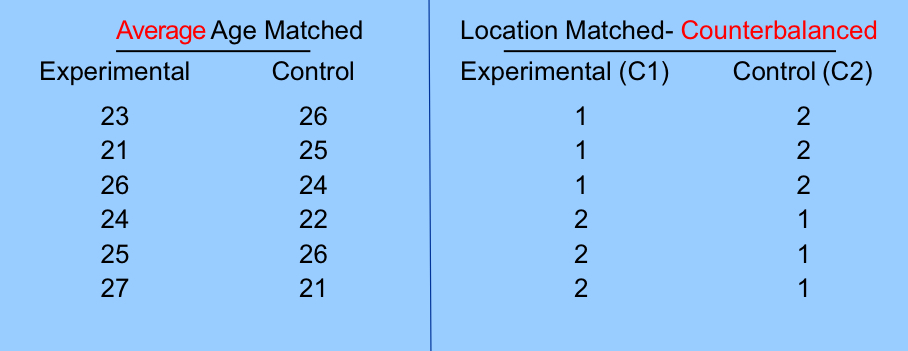

why should you try to counterbalance other factors when averages are used?

to reduce the effects due to different average values

in other words, matching the average between the experimental and control conditions can introduce other differences, which you then try to solve by counterbalancing

in this case, you can try to counterbalance so that each room has both more and less experienced experimenters

what’s the difference between balance and counterbalance?

balance: ensure equal representation of certain characteristics in your sample

counterbalance: control for order effects
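
As an illustration of controlling for order effects, here is a small Python sketch of full counterbalancing. The function name `counterbalanced_orders` is hypothetical, and the sketch assumes every participant completes all conditions. Each possible order of the conditions is used equally often by cycling through the permutations.

```python
from itertools import permutations

def counterbalanced_orders(conditions, n_participants):
    """Give each participant one ordering of the conditions,
    cycling through every possible order."""
    orders = list(permutations(conditions))
    return [orders[i % len(orders)] for i in range(n_participants)]

# Two conditions, four participants: orders alternate A-B, B-A.
schedule = counterbalanced_orders(["A", "B"], 4)
```

The number of orders grows factorially with the number of conditions (6 orders for 3 conditions, 24 for 4), which is one reason counterbalancing can become complicated.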

what are the problems of matching across conditions (balance and counterbalance)? (3)

a lot of time and effort

reduced participant sample

can reduce external validity

for what set of variables should you use balancing?

those that threaten internal validity

why would you randomly assign participants to conditions?

extraneous variables related to participants will balance out across conditions

prevents an extraneous variable from becoming a confound: it won't be concentrated in a single condition
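
A minimal sketch of random assignment in Python. The name `random_assign` and the `seed` parameter are illustrative assumptions, used here only to make the example reproducible. Participants are shuffled, then dealt out to the conditions in turn so that group sizes stay equal.

```python
import random

def random_assign(participants, conditions, seed=None):
    """Randomly assign participants to conditions with equal group sizes."""
    rng = random.Random(seed)
    shuffled = list(participants)
    rng.shuffle(shuffled)  # chance alone decides the order
    groups = {c: [] for c in conditions}
    for i, p in enumerate(shuffled):
        groups[conditions[i % len(conditions)]].append(p)
    return groups

# Ten hypothetical participant IDs, two conditions.
groups = random_assign(range(10), ["treatment", "control"], seed=1)
```

Because the order is decided by chance, no participant characteristic can systematically end up in one condition, although a single draw can still be unbalanced.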

what's a strength and a weakness of randomization? (separately)

strength: powerful as it allows you to control many environmental and participant variables at the same time

weakness: it relies on chance, meaning that you can still get a biased outcome

what's the difference between randomization and random assignment?

randomization: use of a random process to avoid a systematic relationship between two variables

random assignment: use of a random process to assign participants to treatment conditions

true or false: with a large group, randomization guarantees a balanced result

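true in practice: with a large enough group (usually over 20 people), a balanced result becomes very likely, although chance can still produce some imbalance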
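
The link between group size and chance imbalance can be illustrated with a small simulation. This is a sketch under stated assumptions: participant age is drawn from a normal distribution (mean 30, SD 10), and the function name `mean_imbalance` is hypothetical. It repeatedly splits a random sample into two groups and reports the average gap between the group means.

```python
import random
import statistics

def mean_imbalance(group_size, n_trials=2000, seed=0):
    """Average absolute difference in mean age between two
    randomly formed groups of the given size."""
    rng = random.Random(seed)
    gaps = []
    for _ in range(n_trials):
        # Assumed age distribution: normal, mean 30, SD 10.
        ages = [rng.gauss(30, 10) for _ in range(2 * group_size)]
        rng.shuffle(ages)
        first, second = ages[:group_size], ages[group_size:]
        gaps.append(abs(statistics.mean(first) - statistics.mean(second)))
    return statistics.mean(gaps)

small_groups = mean_imbalance(5)
large_groups = mean_imbalance(50)
```

The gap shrinks as the groups get larger but does not reach zero, which is why a single random split can still come out unbalanced.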

define “manipulation check”

measure to confirm that the IV has the desired effect on participants

what are the manipulation checks you can do? (2)

check the manipulation: take measures from the participant to see if the manipulation did what you wanted it to do

include an exit questionnaire that tests whether participants were aware of the manipulations and purposes of the experiment

when is it important to do manipulation checks? (4)

participant manipulations: hard to know if your IV worked

subtle manipulations: hard to know if the participant noticed

placebo controls: did the participant believe that the placebo was real

simulations: hard to know if the participant perceived the environment as real

what are the reasons why an experiment did not work? (7)

IV isn't sensitive enough (ex: preference for cookies VS chocolate… use artichoke VS chocolate instead)

DV isn’t sensitive enough (ex: instead of “yes/no”, use scale)

IV has a floor or ceiling effect

DV has a floor or ceiling effect

measurement error

insufficient power (not enough participants to detect the true effect of the IV)

hypothesis is wrong
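
To illustrate insufficient power, the sketch below estimates by simulation how often a real effect would be detected at different sample sizes. It rests on simplifying assumptions that are not from the lecture: scores are normally distributed with a known SD of 1, the test is a two-sided z-test at alpha = 0.05, and `estimated_power` is a hypothetical helper name.

```python
import random
from statistics import NormalDist, mean

def estimated_power(n_per_group, effect_size, n_trials=2000, alpha=0.05, seed=0):
    """Share of simulated experiments in which a true effect of the
    given size (in SD units) reaches significance."""
    rng = random.Random(seed)
    z_crit = NormalDist().inv_cdf(1 - alpha / 2)
    # Standard error of the difference in means when SD = 1 (assumed known).
    se = (2 / n_per_group) ** 0.5
    hits = 0
    for _ in range(n_trials):
        control = [rng.gauss(0, 1) for _ in range(n_per_group)]
        treatment = [rng.gauss(effect_size, 1) for _ in range(n_per_group)]
        z = (mean(treatment) - mean(control)) / se
        if abs(z) > z_crit:
            hits += 1
    return hits / n_trials

low_power = estimated_power(10, 0.5)    # small sample
high_power = estimated_power(100, 0.5)  # large sample
```

With the same true effect, the small sample misses it most of the time while the large sample usually detects it, so a null result from a small study does not show that the IV has no effect.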

what are the threats to internal validity? (8)

history: other/personal events affected the DV

maturation: normal developmental processes affected the DV

statistical regression: extreme scores on one measurement tend to be less extreme on a second measurement

selection: were the participants self-selected or assigned randomly

experimental attrition: did some participants drop out in unequal numbers across conditions

testing: did previous testing affect the behaviour at a later testing

instrumentation: did the measurement method change

design contamination: did participants find out something about the experimental condition

define “statistical regression”, is it a threat to external or internal validity?

extreme scores on one measurement tend to be less extreme on a second measurement

internal validity: the change in the DV is not caused by the IV
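
Statistical regression can be shown with a short simulation in which no treatment is applied at all. This is a sketch under assumptions: each observed score is a stable true score plus random measurement noise (both normal, SD 10), and the function name `regression_to_mean_demo` is hypothetical. The top 10% of scorers on the first test are selected and their averages on both tests are compared.

```python
import random
import statistics

def regression_to_mean_demo(n=5000, seed=0):
    """Return the mean score of the top 10% on test 1,
    and the mean of those same people on test 2."""
    rng = random.Random(seed)
    true_scores = [rng.gauss(100, 10) for _ in range(n)]
    # Each test adds independent measurement noise to the true score.
    test1 = [t + rng.gauss(0, 10) for t in true_scores]
    test2 = [t + rng.gauss(0, 10) for t in true_scores]
    cutoff = sorted(test1)[int(0.9 * n)]
    top = [i for i in range(n) if test1[i] >= cutoff]
    return (statistics.mean(test1[i] for i in top),
            statistics.mean(test2[i] for i in top))

first_mean, second_mean = regression_to_mean_demo()
```

The selected group scores lower on the second test even though nothing happened between the two measurements, so a drop (or rise) in an extreme group cannot be attributed to the IV without a comparison group.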

define “experimental attrition”

some participants dropping out in unequal numbers across conditions
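
A simple way to spot unequal attrition is to compare dropout rates per condition. The sketch below uses made-up enrolment and completion counts, and the helper name `dropout_rates` is hypothetical.

```python
def dropout_rates(enrolled, completed):
    """Proportion of participants who dropped out in each condition."""
    return {c: 1 - completed[c] / enrolled[c] for c in enrolled}

# Made-up counts for illustration only.
rates = dropout_rates(
    enrolled={"treatment": 50, "control": 50},
    completed={"treatment": 35, "control": 48},
)
```

A large gap between the rates suggests that the participants who remain in each condition may no longer be comparable.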

define “design contamination”

participants found out something about the experimental condition

what are the threats to external validity? (4)

unique program features: design aspects that might be unique to your experiment (ex: overly motivated participants)

effects of selection: can this study be replicated with different participants? were the recruitment and assignment successful?

effects of environment/settings: can these results be replicated in other labs/environments

effects of history: can these results be replicated in other eras/time periods

what are the experimental strategies used to strengthen external validity? (3)

lab simulations: bring the “real world” into the lab

mundane realism: how close the lab environment is to the real world

experimental realism: bring only the psychological aspects into the lab (mental, not visual)

define “mundane realism”

how close the lab environment is to the real world

define “experimental realism”

bring only the psychological aspects into the lab (mental, not visual)

how can you know if the original lab simulations worked? (3)

examine published simulations

it had a preference for hypothetical scenarios as the IV rather than real-world ones (ex: let's pretend you are in…)

it had a preference for qualitative self-reports as DV

how can you know if current-day lab simulations worked? (2)

realistic immersive stimuli (IV): put yourself in the role depending on the instructions

quantitative response measures (DV)

how can you know if high realism virtual reality (VR) worked? (2)

more positive affect when immersed in nature

similar to responses observed in actual nature (experimental realism)

define “lab simulation”

creation of conditions that simulate/duplicate the natural environment

define “field studies” and why we use them

research conducted in a place that the participant considers a natural environment

done when something is hard to measure in the lab

true or false: you can do field studies with animals

true

what is the strength (shared by both) and the weakness (of each individually) of field studies and simulations?

strength:

allow researchers to test behaviour in a more realistic environment than labs

weakness

field study: hard to control for extraneous variables

simulation: depends on whether the participant believes the simulation is real

what are the perils of experimental strategies? (4)

sometimes, we don't have theories, meaning that hypotheses are ad hoc (made after the findings), so illogical and meaningless

some measurement instruments are not reliable or valid

sometimes inappropriate research designs are used (wrong DV, no control, etc.)

conditions may be incomparable or inconsistent across studies

how can you avoid the perils of experimental designs? (4)

use something that someone else already used

conduct treatment manipulation checks

conduct pilot tests with small samples to be sure that it's the IV that caused the change in the DV

use tasks that are simpler and familiar for the participants

when are field studies or simulations used?

as alternatives to lab experiments