Computer Architecture

8 Terms

What is computer architecture?

A set of rules and methods that describe functionality, organisation and implementation of computer systems

Can be applied at many levels:

Processor architecture

Memory architecture

Instruction set architecture

System level: how we link processors to I/O

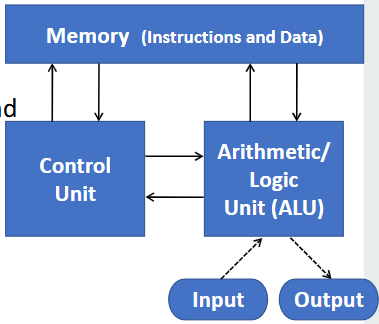

What is the Von Neumann architecture?

Proposed by John Von Neumann in 1945

Memory: stores data and instructions

Control unit: contains instruction register and program counter

Processing unit: contains ALU and processor registers

I/O mechanisms

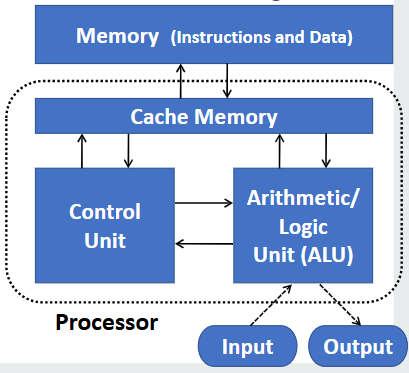
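The fetch-decode-execute cycle of a Von Neumann machine can be sketched in Python as below. This is a minimal sketch that assumes a hypothetical four-instruction set (LOAD, ADD, STORE, HALT) and a single accumulator register; it is not a real ISA. The point it shows is that one list acts as memory for both the program and its data, so every instruction fetch and every data access goes through the same memory. That shared path is the source of the "Von Neumann bottleneck".

```python
# Minimal Von Neumann sketch: ONE memory holds instructions AND data.
# The instruction set (LOAD, ADD, STORE, HALT) is hypothetical.

def run_von_neumann(memory):
    """Run the program stored in `memory` and return the final memory."""
    pc = 0   # program counter (control unit)
    acc = 0  # accumulator (processor register in the processing unit)
    while True:
        ir = memory[pc]       # fetch: instruction register <- memory[pc]
        pc += 1
        op, addr = ir         # decode
        if op == "LOAD":      # execute
            acc = memory[addr]
        elif op == "ADD":
            acc += memory[addr]
        elif op == "STORE":
            memory[addr] = acc
        elif op == "HALT":
            return memory
        else:
            raise ValueError(f"unknown opcode {op!r}")


# Addresses 0-3 hold instructions, addresses 4-6 hold data, all in one list.
program = [
    ("LOAD", 4),
    ("ADD", 5),
    ("STORE", 6),
    ("HALT", 0),
    2, 3, 0,
]
result = run_von_neumann(program)  # result[6] now holds 2 + 3
```

Because STORE can write to any address, a program here could also overwrite its own instructions, which a pure Harvard design prevents.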

What is the CPU composed of?

Consists of:

ALU: arithmetic logic unit

CU: control unit

The CPU also contains high-speed cache memory

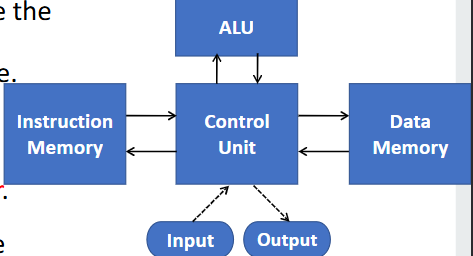
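To show what the ALU part of the CPU does, here is a minimal sketch of an 8-bit ALU in Python. The 8-bit width, the operation names and the zero/carry status flags are assumptions for illustration; real ALUs support more operations and flags.

```python
# Minimal ALU sketch: 8-bit unsigned arithmetic and logic operations.
# WIDTH, the op names and the two flags are illustrative assumptions.

WIDTH = 8
MASK = (1 << WIDTH) - 1  # 0xFF for 8 bits


def alu(op, a, b):
    """Return (result, zero_flag, carry_flag) for an 8-bit operation."""
    if op == "ADD":
        full = a + b
    elif op == "SUB":
        full = a - b
    elif op == "AND":
        full = a & b
    elif op == "OR":
        full = a | b
    else:
        raise ValueError(f"unsupported operation {op!r}")
    result = full & MASK              # keep only the low 8 bits
    carry = full > MASK or full < 0   # carry out (ADD) or borrow (SUB)
    zero = result == 0
    return result, zero, carry
```

For example, `alu("ADD", 200, 100)` overflows 8 bits, so it returns the wrapped result 44 with the carry flag set.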

What are advantages and disadvantages of the Harvard architecture?

Advantages:

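Overcomes the Von Neumann bottleneck, where instructions and data share one memory path

Instructions and data can be accessed in parallel, so it is faster

More resistant to some cyber attacks, since data cannot be written into instruction memory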

Disadvantages:

Higher cost, as it needs separate memories for instructions and data

IMPORTANT

Instruction and data memories are separate

Cannot change instruction memory at run-time

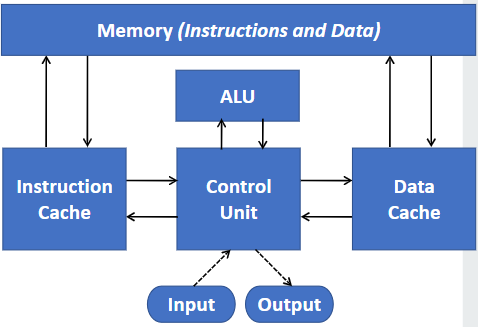
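The same toy machine can be rearranged as a Harvard design: a minimal sketch, again assuming the hypothetical LOAD/ADD/STORE/HALT instruction set. Here instructions and data live in two separate memories, so address 0 means different things depending on which memory is accessed. The instruction memory is made read-only (a tuple) to mirror "cannot change instruction memory at run-time".

```python
# Minimal Harvard sketch: SEPARATE instruction and data memories.
# The instruction set (LOAD, ADD, STORE, HALT) is hypothetical.

def run_harvard(instr_mem, data_mem):
    """Run `instr_mem` against `data_mem`; return the final data memory."""
    instr_mem = tuple(instr_mem)  # read-only: fixed at run-time
    data_mem = list(data_mem)     # writable copy of the data memory
    pc, acc = 0, 0
    while True:
        op, addr = instr_mem[pc]  # fetch from INSTRUCTION memory
        pc += 1
        if op == "LOAD":
            acc = data_mem[addr]  # read from DATA memory
        elif op == "ADD":
            acc += data_mem[addr]
        elif op == "STORE":
            data_mem[addr] = acc  # STORE can only reach data memory
        elif op == "HALT":
            return data_mem
        else:
            raise ValueError(f"unknown opcode {op!r}")


program = [("LOAD", 0), ("ADD", 1), ("STORE", 2), ("HALT", 0)]
data = [2, 3, 0]
final_data = run_harvard(program, data)  # data address 2 ends up holding 5
```

In hardware, the two memories have their own buses, which is what allows an instruction fetch and a data access to happen at the same time; this sequential sketch only models the separation, not the parallelism.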

What is the modified Harvard architecture?

Improvement to the original Harvard version

Separates instruction and data caches INTERNALLY, BUT a single unified main memory is visible to users/programs

Used in chips such as ARM9, MIPS and x86

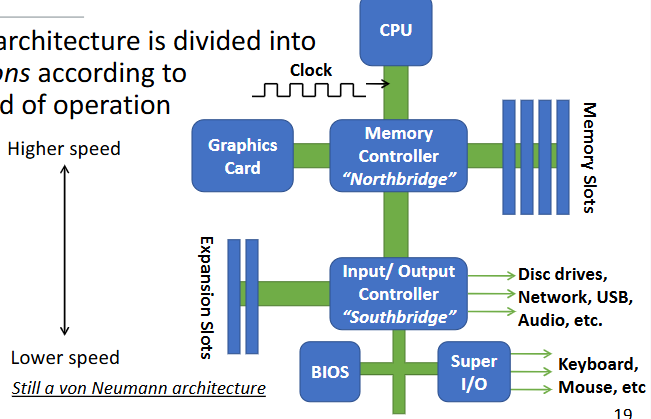
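A modified Harvard design can be sketched as split instruction and data caches in front of one unified main memory. This is a minimal sketch under simplifying assumptions: the class name `SplitCacheCPU` is hypothetical, the caches are unbounded dictionaries with no eviction, stores are write-through, and a store simply invalidates any stale I-cache entry. Real chips handle cache sizes, eviction and coherence in more complex ways.

```python
# Minimal modified-Harvard sketch: split I-cache / D-cache, ONE main memory.
# Cache policy (unbounded, write-through, invalidate on store) is assumed.

class SplitCacheCPU:
    def __init__(self, main_memory):
        self.memory = main_memory  # unified: programs and data share it
        self.icache = {}           # instruction cache
        self.dcache = {}           # data cache

    def fetch_instruction(self, addr):
        if addr not in self.icache:        # I-cache miss: fill from memory
            self.icache[addr] = self.memory[addr]
        return self.icache[addr]

    def load_data(self, addr):
        if addr not in self.dcache:        # D-cache miss: fill from memory
            self.dcache[addr] = self.memory[addr]
        return self.dcache[addr]

    def store_data(self, addr, value):
        self.dcache[addr] = value
        self.memory[addr] = value          # write-through to main memory
        self.icache.pop(addr, None)        # drop any stale instruction copy


memory = ["NOP", "HALT", 42]       # one memory: two instructions, one data word
cpu = SplitCacheCPU(memory)
first = cpu.fetch_instruction(0)   # served via the I-cache
value = cpu.load_data(2)           # served via the D-cache
cpu.store_data(0, "HALT")          # program writes over its own code as data
patched = cpu.fetch_instruction(0)
```

Because main memory is unified, a program can load new code as data (as an OS loader does), which a pure Harvard machine cannot do, while the split caches still give separate instruction and data paths near the CPU.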

What is the modern PC architecture?

Divided into regions according to speed of operation

Memory controller: northbridge

Manages communication between CPU and RAM

Is now integrated into the CPU, allowing faster access and lower latency

Input / Output controller: southbridge

Manages I/O devices (e.g. USB devices, keyboards, mice)

Also manages audio and disc drives

What are the popular metrics for measuring speed of computers?

Clock rate

e.g. 1.87 GHz = 1.87 billion ticks per second

IMPORTANT: Computer being faster ≠ CPU being faster

A higher clock rate only makes the CPU faster, not the rest of the system, so comparing clock rates alone is unfair

MIPS (millions of instructions per second)

Better indication of speed, BUT depends on which instructions are counted

Also unfair, as different programs can give different results

FLOPS (floating point operations per second)

Even better indication of speed “where it counts”

Also unfair

None of these measure other factors, e.g. I/O speed
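The arithmetic behind these metrics can be sketched in Python. All numbers are hypothetical, and the "cycles per instruction" (CPI) figures are assumptions introduced for illustration. Two programs run on the same 1.87 GHz CPU with the same instruction count, yet their MIPS ratings differ by a factor of 4, which is why clock rate alone says little and why MIPS depends on which instructions are counted.

```python
# Worked sketch of clock rate, MIPS and FLOPS. All values are hypothetical.

def run_time(instructions, cycles_per_instruction, clock_hz):
    """Execution time in seconds = total cycles / cycles per second."""
    return instructions * cycles_per_instruction / clock_hz


def mips(instructions, seconds):
    """Millions of instructions per second."""
    return instructions / (seconds * 1e6)


def flops(float_ops, seconds):
    """Floating point operations per second."""
    return float_ops / seconds


CLOCK_HZ = 1_870_000_000  # 1.87 GHz = 1.87 billion ticks per second

# Same CPU, same clock rate, same instruction count:
# program A uses simple 1-cycle instructions, B uses complex 4-cycle ones.
programs = {"A": (1_870_000_000, 1), "B": (1_870_000_000, 4)}
results = {}
for name, (instructions, cpi) in programs.items():
    seconds = run_time(instructions, cpi, CLOCK_HZ)
    results[name] = mips(instructions, seconds)

# Hypothetical numeric kernel: 2 billion floating point ops in 0.5 s.
kernel_flops = flops(2_000_000_000, 0.5)
```

Here A rates 1870 MIPS and B only 467.5 MIPS on identical hardware, and the kernel reaches 4 GFLOPS; none of these figures says anything about I/O speed.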

What are limiting factors on speed?

Density limitations

Number of transistors per square inch

“Moore’s Law”: first stated in 1965 (and revised in 1975), the number of transistors on a chip doubles roughly every 2 years

Only an empirical observation, not a physical law

Predicts transistor count, not performance
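Moore's Law as an idealised doubling every 2 years can be written as N(t) = N0 × 2^((t − t0) / 2). The sketch below assumes hypothetical starting values; it only predicts a transistor count and says nothing about how fast the chip runs.

```python
# Sketch of Moore's Law as idealised doubling. Inputs are hypothetical.

def moores_law(start_count, start_year, year, doubling_years=2):
    """Predicted transistor count in `year` under ideal doubling."""
    return start_count * 2 ** ((year - start_year) / doubling_years)


# 10 years = 5 doublings, so the count grows 2**5 = 32 times.
predicted = moores_law(1000, 2000, 2010)
```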

Power limitations

About 1/3 of power is used to propagate the clock signal around the processor

Therefore power and heat problems increase as clock rates increase

Cooling the processor becomes increasingly challenging
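A common approximation for the switching (dynamic) power of CMOS chips is P ≈ a × C × V² × f, where a is the activity factor, C the switched capacitance, V the supply voltage and f the clock frequency. This formula is added here as background and is not from the notes; the chip values below are hypothetical. Doubling f alone doubles power, and if the higher clock also needs a higher voltage (assumed +20% here), power grows even faster because of the V² term.

```python
# Sketch of approximate CMOS dynamic power: P = a * C * V**2 * f.
# All chip parameters below are hypothetical.

def dynamic_power(activity, capacitance, voltage, frequency):
    """Approximate switching power in watts."""
    return activity * capacitance * voltage ** 2 * frequency


base = dynamic_power(0.5, 1e-9, 1.0, 2e9)       # hypothetical chip at 2 GHz
faster = dynamic_power(0.5, 1e-9, 1.0, 4e9)     # clock doubled, same voltage
faster_hv = dynamic_power(0.5, 1e-9, 1.2, 4e9)  # clock doubled, +20% voltage

power_ratio = faster / base        # about 2
power_ratio_hv = faster_hv / base  # about 2 * 1.2**2 = 2.88
```

Since all that power ends up as heat, this is the link between higher clock rates and the cooling problem above.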

As performance demands increase:

Inability to increase clock speed led manufacturers to make multi-core processors

Performance can also be increased by linking computers using high-speed networks

This led to “blade servers”

Applications run across a cluster, but some applications cannot easily be decomposed this way
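Why decomposition matters can be quantified with Amdahl's law, a standard result that is not named in the notes and is swapped in here as an illustration: if only a fraction p of a program can be split across n cores or cluster nodes, the speedup is at most 1 / ((1 − p) + p / n). The fractions and worker counts below are hypothetical.

```python
# Sketch of Amdahl's law: speedup limit when only part of a program
# can be split across workers (cores or cluster nodes).

def amdahl_speedup(parallel_fraction, workers):
    """Upper bound on speedup with `workers` for a given parallel fraction."""
    serial = 1 - parallel_fraction
    return 1 / (serial + parallel_fraction / workers)


fully_parallel = amdahl_speedup(1.0, 8)         # ideal case: 8x on 8 workers
mostly_parallel = amdahl_speedup(0.9, 10)       # about 5.26x on 10 workers
half_serial = amdahl_speedup(0.5, 1_000_000)    # never reaches 2x
```

Even with a million nodes, a program that is half serial speeds up by less than 2x, which is why multi-core chips and clusters only help applications that decompose well.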