Data Collection Module 4

94 Terms

construct

An abstract idea (e.g., stress, self-esteem).

concept

A general idea or category that can include constructs.

term

The label or word used to refer to a concept or construct.

dimension

A broad category within a construct (e.g., cognitive dimension of anxiety).

facet

A smaller aspect or feature.

trait

Often used to describe stable personal characteristics (e.g., introversion as a trait of personality).

conceptualisation

Defining what a construct means theoretically. It involves clarifying its dimensions and traits.

operationalisation

Turning a concept into measurable terms (e.g., defining "stress" as a score on a 10-item questionnaire).

measurement

The actual process of collecting data to represent a construct, using instruments (e.g., surveys, tests).

types

Distinct categories within a variable or construct (e.g., types of motivation: intrinsic vs. extrinsic).

typology

A classification system based on the combination of different types or traits (e.g., personality typologies).

index

A composite score created by adding up different indicators (e.g., socioeconomic status index = income + education + occupation).
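
As a rough illustration of an additive index, the sketch below sums three indicator scores into one composite. The indicator values and the 1-5 coding are assumptions made up for this example, and `composite_index` is a hypothetical helper name.

```python
# Hypothetical indicator scores for one person, each assumed to be coded 1-5.
indicators = {"income": 4, "education": 3, "occupation": 5}


def composite_index(scores):
    """Add up the indicator scores into a single index value."""
    return sum(scores.values())


ses_index = composite_index(indicators)
print(ses_index)  # 12
```

A plain sum weights every indicator equally; whether that is appropriate depends on how the indicators are scaled.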

scale

A tool to measure constructs using items with intensity or frequency levels (e.g., Likert scale: strongly agree to strongly disagree).

data collection method

How you gather information (e.g., surveys, interviews, observations, physiological measures).

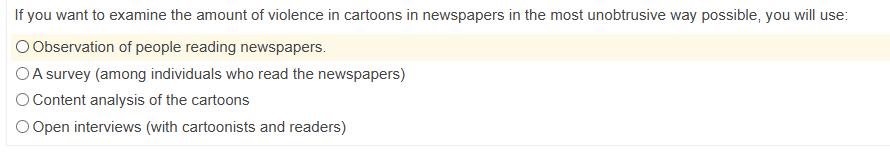

obtrusive

The participant knows they’re being studied (e.g., interviews, questionnaires).

unobtrusive

The participant might not know, minimising reactivity (e.g., observing behaviour through a one-way mirror or analysing existing records).

open interview

A flexible interview format using mostly open-ended questions, allowing participants to express themselves in their own words.

non-standardised questions

Questions that vary between interviews. Common in unstructured interviews—good for depth, but limits comparability.

interview protocol

A structured guide or plan for how to conduct the interview, including question order, topics, and procedures.

interview instructions

Guidance given to the interviewer to ensure consistency (e.g., how to introduce themselves, when to probe, how to respond neutrally).

probes

Follow-up questions used to encourage the interviewee to elaborate (e.g., “Can you tell me more about that?”).

informants

Provide insight about a group or context they know well (e.g., a teacher talking about students).

respondents

Speak for themselves and their own experiences.

acquiescence

When participants tend to agree with whatever the interviewer says or suggests—whether they mean it or not.

social desirability

The tendency to give answers that seem socially acceptable or favourable, rather than being completely honest.

personal reactivity

How the personality, tone, or presence of the interviewer influences the interviewee’s responses.

procedural reactivity

How the design of the interview process itself (e.g., setting, format, consent process) affects responses.

cognitive interview

A method to improve accuracy of memory-based responses by using techniques like context reinstatement or reverse-order recall.

memory bias

Inaccuracy in recalled information due to forgetting, distortion, or misremembering; a common issue in self-report data.

unobtrusive research

Research that does not involve direct interaction with participants (e.g., analyzing texts, media, or archives).

content analysis

A method for systematically analysing the content of communication (texts, images, audio) to identify patterns or meanings.

primary documents

Original sources of data like interviews, official records, diaries, or news articles used for analysis.

coding

Labeling sections of text or media with tags that represent themes, concepts, or categories.

coding scheme

A structured set of categories and rules used to ensure consistent coding across texts and coders.

inductive coding

Developing codes based on patterns that emerge from the data, without a predefined framework.

deductive coding

Using a predefined set of codes based on theory or prior research to analyse content.

intercoder reliability

The level of agreement among multiple coders analysing the same content—used to test the reliability of the coding process.

measurement instrument

A tool or device used to collect data or measure variables in research. Examples include surveys, questionnaires, tests, scales, or mechanical devices like thermometers or blood pressure monitors.

reliability

Refers to the consistency of a measurement instrument—its ability to produce the same results under the same conditions over time. High … means the measurement is stable and repeatable.

random error

Random errors decrease the reliability of your measurements. They can have any source; they happen accidentally and are unpredictable and inconsistent. Across many measurements, they tend to cancel each other out.

stability

A type of reliability that refers to how consistent results are over time. If the same test is repeated and yields similar results, it’s considered stable.

consistency

The degree to which different parts or versions of a measurement instrument yield similar results. It includes both internal consistency (e.g., similar answers to related questions on a survey) and test-retest consistency.

measurement reliability

The absence of random error.

measurement validity

No systematic error. You measure what you intend to measure (on average).

content validity

Evaluates how well an instrument (like a test) covers all relevant parts of the construct it aims to measure.

Does it cover all aspects of the concept?

criterion validity

An estimate of the extent to which a measure agrees with a gold standard.

Is it ‘correctly’ related to conceptually related indicators?

construct validity

The extent to which your test or measure accurately assesses what it's supposed to.

Is it ‘correctly’ related to other theoretically related variables?

contingency question

A question asked only to a specific subgroup of respondents, depending on their answers to previous questions.
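
A contingency question behaves like skip logic in a questionnaire. The sketch below is only an illustration: the question texts, the `smokes` key, and the `questions_for` helper are all hypothetical.

```python
def questions_for(answers):
    """Return the questions a respondent should see, given earlier answers."""
    questions = ["Do you smoke?"]
    # Contingency question: only shown when the filter question was answered "yes".
    if answers.get("smokes") == "yes":
        questions.append("How many cigarettes do you smoke per day?")
    return questions


print(len(questions_for({"smokes": "yes"})))  # 2
print(len(questions_for({"smokes": "no"})))   # 1
```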

psychological altruism

A motivational state with the goal of increasing another's welfare.

psychological egoism

The motivation to increase one's own welfare.

criteria for formulating survey questions

1. Relevance for the research question.

2. Relevant variation in the answers.

3. Item has to be simple, clear and understandable.

4. Not double-barreled: not two stimuli in one item.

5. Neutral wording in an item.

6. Mutually exclusive and balanced answering categories.

7. Avoid context effects.

naturalistic observation

Observing behaviour in its natural setting without intervention.

contrived observation

Observing behaviour in a controlled or artificial setting.

participatory observation

The researcher actively engages in the environment or activities being studied.

non-participatory observation

The researcher remains detached and does not interact with the subjects.

observation vs. rating

The distinction between recording behaviour as it happens (observation) and making evaluative judgments (rating).

observation schedule

A structured framework for recording observed behaviors or events.

event sampling

Recording all instances of a specific type of event.

time sampling

Recording behaviours at predetermined time intervals.

Cohen’s Kappa

A number that shows how much two people agree when they are rating or observing the same things, while also considering how much of that agreement might have happened just by chance.
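
Cohen's Kappa is usually computed as (observed agreement - chance agreement) / (1 - chance agreement). The sketch below follows that formula for two raters; the ratings are invented for illustration and `cohens_kappa` is a hypothetical helper, not a library function.

```python
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Chance-corrected agreement between two raters on the same items."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("both raters must rate the same non-empty set of items")
    n = len(rater_a)
    # Observed agreement: share of items on which the raters gave the same code.
    p_observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Chance agreement: for each category, the product of both raters' proportions.
    counts_a, counts_b = Counter(rater_a), Counter(rater_b)
    p_chance = sum(counts_a[c] * counts_b[c] for c in counts_a) / n**2
    if p_chance == 1:
        # Both raters used one and the same category for every item.
        return 1.0
    return (p_observed - p_chance) / (1 - p_chance)


# Invented ratings: the raters agree on 3 of 4 items.
rater_a = ["yes", "yes", "no", "no"]
rater_b = ["yes", "no", "no", "no"]
kappa = cohens_kappa(rater_a, rater_b)
print(kappa)  # 0.5
```

Here the raw agreement is 0.75, but half of the agreement would be expected by chance, so Kappa is only 0.5.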

systematic error

Systematic errors decrease the validity of your measurements. They consistently measure something other than what was intended.

measurement validity

Relates to the quality of measurements.

Does a measure reflect what we want it to?

Relates to the goal for which a measure is used.

A valid mathematics test is not a valid measure of general intelligence.

May be supported by research findings (but never finally proven).

validity of research

Relates to the strength of your conclusions.

internal validity

Is the conclusion about cause and effect justified?

Can we rule out alternative explanations/confounding variables?

external validity

Is it OK to generalize to other settings?

Also known as ecological validity.

lack of reliability

Relates to random error.

Scores are to a large extent determined by random fluctuations.

Changes and variation reflect both real and random changes and variation.

Means that a measure reflects random fluctuations in addition to genuine variation.

Because random fluctuations cancel each other out, the group average is not affected.

lack of validity

Is systematic bias (bias is another word for systematic error).

Scores are consistently over-/underestimated.
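
The contrast between random error (lack of reliability) and systematic error (lack of validity) can be illustrated with a small simulation. Everything here is an assumption chosen for the example: a true score of 50, random error with standard deviation 5, and a constant bias of +3.

```python
import random
from statistics import mean

random.seed(42)  # fixed seed so the sketch is repeatable
true_scores = [50.0] * 10_000

# Unreliable measure: each score gets random error with mean zero.
noisy = [t + random.gauss(0, 5) for t in true_scores]

# Invalid measure: each score is overestimated by the same amount.
biased = [t + 3 for t in true_scores]

print(round(mean(noisy), 1))  # close to 50: random errors largely cancel out
print(mean(biased))           # 53.0: the bias does not cancel out
```

Individual noisy scores can be far from 50, yet the group average stays near the true value, whereas the biased measure is off by 3 no matter how many scores are averaged.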

content validity

Does not require statistical analyses; validity is plausible because of arguments (are all key aspects covered?).

criterion-related validity

Involves very convincing evidence, but often not practical (example: similarity between self-reports and observed behaviour).

construct validity

Is frequently applied (main question: does the measured construct “behave” as expected?).

Find support for the validity of an attitude measure by means of the correlation between self-esteem among teenagers and participation in school activities.

If you find the hypothesized correlation, you find support for the validity of this measure in this study.

reliability

Is about getting similar outcomes from individuals when measuring something several times under the same circumstances.

systematic bias / selective non-response

Some characteristics determine whether a respondent ends up in the sample (e.g., voters who support Donald Trump are less likely to participate in voting polls).

simple random samples (SRS)

Most basic type.

Equal probability to be selected for every unit.

Selection from a list of the entire population.
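
A simple random sample can be sketched with Python's standard `random` module. The population list below is a made-up sampling frame of 1,000 units.

```python
import random

# Hypothetical sampling frame: a list of the entire population.
population = [f"person_{i}" for i in range(1, 1001)]

rng = random.Random(1)  # seeded generator so the draw is repeatable
# Draw 50 units without replacement; every unit has the same chance of selection.
srs = rng.sample(population, k=50)

print(len(srs))  # 50
```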

stratified samples

Make sure that certain groups are represented.

Define subgroups in the population (strata; e.g., all Dutch municipalities).

Draw a sample of respondents in each stratum.

Stratification increases the representativeness of the sample.

Stratification decreases sampling error (compared to simple random samples).

Useful in case of large differences between strata (e.g., regional differences in political preferences).
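
A minimal sketch of proportional stratified sampling, assuming a made-up frame with two regions as strata. The helper name `stratified_sample` and the 10% sampling fraction are illustrative choices, not part of the original notes.

```python
import random


def stratified_sample(frame, stratum_of, fraction, rng):
    """Draw the same fraction of units from every stratum."""
    strata = {}
    for unit in frame:
        strata.setdefault(stratum_of(unit), []).append(unit)
    sample = []
    for units in strata.values():
        # At least one unit per stratum, so every group is represented.
        k = max(1, round(len(units) * fraction))
        sample.extend(rng.sample(units, k))
    return sample


# Hypothetical frame: 200 units in the north, 800 in the south.
frame = [("north", i) for i in range(200)] + [("south", i) for i in range(800)]
sample = stratified_sample(frame, lambda unit: unit[0], 0.1, random.Random(0))

print(sum(1 for region, _ in sample if region == "north"))  # 20
print(sum(1 for region, _ in sample if region == "south"))  # 80
```

Because the split between strata is fixed in advance, the sample can never over- or under-represent a region by chance, which is where the lower sampling error comes from.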

cluster samples and multistage samples

Usually applied for practical reasons.

Define subgroups in the population (e.g., all Dutch municipalities). Clusters/primary sampling units.

Draw a sample of the clusters/primary sampling units.

Within each cluster you may draw a sample of e.g. individuals (two-stage sample/multistage sample).

Stratified samples: all groups are represented.

Cluster/multistage samples: only a few groups are selected.

Cluster/multistage sampling increases sampling error.
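
The two-stage procedure can be sketched as follows, assuming 20 made-up municipalities of 100 residents each. `two_stage_sample` is a hypothetical helper.

```python
import random


def two_stage_sample(clusters, n_clusters, n_per_cluster, rng):
    """Stage 1: sample clusters. Stage 2: sample individuals within each."""
    chosen = rng.sample(sorted(clusters), n_clusters)
    result = {}
    for name in chosen:
        members = clusters[name]
        result[name] = rng.sample(members, min(n_per_cluster, len(members)))
    return result


# Hypothetical primary sampling units: 20 municipalities with 100 residents each.
clusters = {
    f"municipality_{m}": [f"m{m}_resident_{i}" for i in range(100)]
    for m in range(20)
}
result = two_stage_sample(clusters, 4, 10, random.Random(0))

print(len(result))                           # 4 municipalities selected
print(sum(len(v) for v in result.values()))  # 40 individuals in total
```

Only 4 of the 20 municipalities contribute respondents, which is practical for fieldwork but leaves the other 16 unrepresented.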

stratified cluster/multistage samples

Combination of the previous types.

Primary sampling units are grouped in strata (e.g., provinces).

From each stratum a number of municipalities is selected.

Within each municipality a sample of individuals is drawn.

The decrease in sampling error from stratification hardly ever compensates for the increase from clustering.

systematic sample

Example:

Start with a sampling frame (e.g., a list of all inhabitants of Enschede).

Decide what the sampling fraction will be (e.g. 1 out of 400).

Draw a random number between 1 and 400 (e.g., 52).

Select persons no. 52, no. 452, no. 852, etc.

The result is (nearly always) comparable to a simple random sample.
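
The steps above can be sketched in a few lines. The frame of 2,000 numbered persons is an assumption for illustration, and `systematic_sample` is a hypothetical helper.

```python
import random


def systematic_sample(frame, interval, rng):
    """Pick a random start in 1..interval, then every interval-th unit."""
    start = rng.randint(1, interval)  # inclusive on both ends
    # Positions are 1-based (person no. 1 is frame[0]), hence the -1.
    return [frame[pos - 1] for pos in range(start, len(frame) + 1, interval)]


# Hypothetical sampling frame: persons numbered 1 to 2000.
frame = list(range(1, 2001))
picked = systematic_sample(frame, 400, random.Random(0))

print(len(picked))  # 5
```

With a random start of 52, this would select persons 52, 452, 852, 1252, and 1652.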

non-probability samples

Selection by availability/researcher’s judgment.

Only respondents that happen to be in the right place at the right time are selected.

Unclear what the population is.

Considerable risk of systematic errors.

why choose non-probability sampling?

Exploratory and/or qualitative research.

Lack of resources and/or time.

Need to reach specific respondents.

Respondents are hard to reach.

No sampling frame (list of population units) available.

convenience samples (aka availability samples)

Select respondents that are easy to reach.

snowball samples (aka respondent-assisted samples)

Ask respondents for additional participants.

purposive samples

Selection of respondents based on researcher’s judgment.

quota samples

Interviewers need to meet certain quotas (e.g., 20 females, 20 males, in every group 5 minority respondents).
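
A rough sketch of quota filling, assuming a made-up stream of passers-by and quotas on gender only (the minority quota is left out for brevity). `fill_quotas` is a hypothetical helper.

```python
def fill_quotas(passers_by, quotas):
    """Accept people in order of arrival until every quota is full."""
    remaining = dict(quotas)
    selected = []
    for person in passers_by:
        group = person["gender"]
        if remaining.get(group, 0) > 0:
            selected.append(person)
            remaining[group] -= 1
        if not any(remaining.values()):
            break  # all quotas met
    return selected


# Hypothetical stream: two thirds of the passers-by are female.
stream = [{"id": i, "gender": "female" if i % 3 else "male"} for i in range(100)]
chosen = fill_quotas(stream, {"female": 20, "male": 20})

print(len(chosen))  # 40
```

Selection depends on who happens to pass by first, not on chance alone, which is why quota samples remain non-probability samples.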

confidentiality

When a researcher guarantees participants of a study that others will not be able to connect data to the participants. However, the researcher will be able to connect the data to the participants when analysing the data.

why use a pilot test?

To see if items are interpreted in the way we want items to be interpreted.

factor analysis

A technique to identify/measure (the) underlying constructs to explain relationships between observed variables.

confirmatory factor analysis

Used to see whether test scores follow a pattern predicted by a theory.

exploratory factor analysis

Used to see whether a small number of latent factors (underlying constructs) can describe the correlation between a set of items.

Not meant to test hypotheses.

main goal of factor analysis

Data reduction (measure the underlying factors).

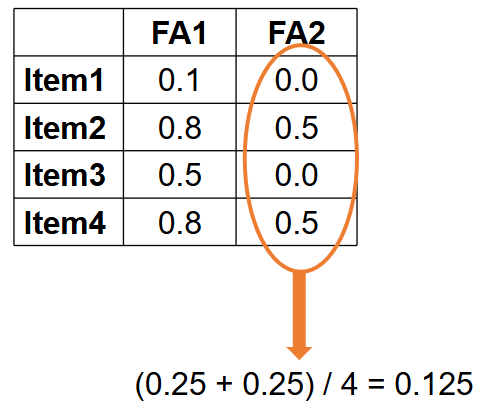

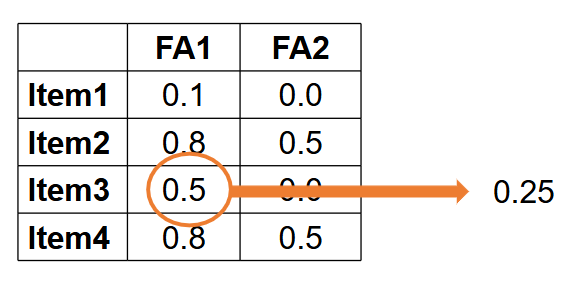

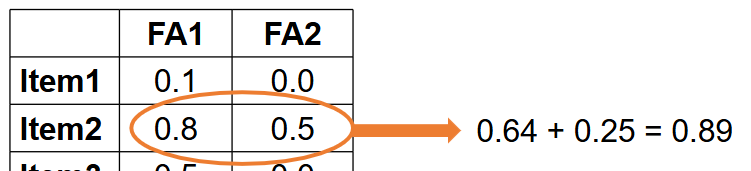

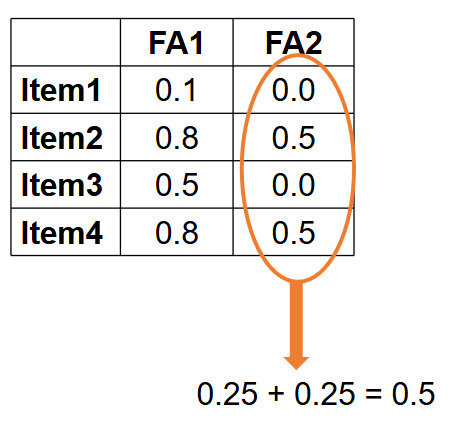

squared factor loading

Proportion of variance of an item that is “explained” by a factor.

communality

Proportion of variance of an item that is “explained” by all factors.

eigenvalue

Sum of squared factor loadings per factor.
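
The three definitions above can be tied together with a small worked example. The loading matrix is invented for illustration (3 items, 2 factors), and the sketch assumes uncorrelated factors, so squared loadings can simply be added.

```python
# Hypothetical factor loadings: rows are items, columns are factors.
loadings = [
    [0.8, 0.1],
    [0.7, 0.2],
    [0.1, 0.9],
]

# Squared loading: share of an item's variance explained by one factor.
squared = [[value**2 for value in row] for row in loadings]

# Communality: sum of squared loadings across factors, per item (row sums).
communalities = [sum(row) for row in squared]

# Eigenvalue: sum of squared loadings across items, per factor (column sums).
eigenvalues = [sum(column) for column in zip(*squared)]

print([round(c, 2) for c in communalities])  # [0.65, 0.53, 0.82]
print([round(e, 2) for e in eigenvalues])    # [1.14, 0.86]
```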

variance explained by a factor