Intro to AI

34 Terms

attribute

a quality that describes an observation, e.g., color

feature

an attribute-value combination, e.g., color is blue

observation/instance

a single data point or sample in the dataset

training set

a set of instances used to train an ML model

if supervised, it contains both the inputs X and the labels Y; if unsupervised, only X

test set

a set of instances held out until after model training and validation, then used to assess the model's predictive power
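A minimal sketch of carving a held-out test set from a supervised dataset, assuming the data fit in plain Python lists. The helper name `holdout_split` and the 80/20 ratio are illustrative assumptions, not from the notes; for unsupervised data you would simply drop Y.

```python
import random

def holdout_split(X, Y, test_fraction=0.2, seed=0):
    """Hypothetical helper: shuffle instance indices, then hold out a fraction as the test set."""
    indices = list(range(len(X)))
    random.Random(seed).shuffle(indices)          # fixed seed for reproducibility
    n_test = int(round(len(X) * test_fraction))
    test_idx, train_idx = indices[:n_test], indices[n_test:]
    # Keep each instance paired with its label when splitting.
    X_train = [X[i] for i in train_idx]
    Y_train = [Y[i] for i in train_idx]
    X_test = [X[i] for i in test_idx]
    Y_test = [Y[i] for i in test_idx]
    return X_train, Y_train, X_test, Y_test

# Each instance is a list of feature values; Y holds the labels.
X = [[float(i)] for i in range(10)]
Y = [2.0 * x[0] + 1.0 for x in X]
X_train, Y_train, X_test, Y_test = holdout_split(X, Y)
print(len(X_train), len(X_test))  # 8 2
```

The test instances are never used for fitting; they are only scored once training and validation are done.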

random variable

an unknown value that follows a certain probability distribution, e.g., X ~ N(0, 1) with mean 0 and variance 1. Random variables are either discrete (countable values; probabilities are summed over a pmf) or continuous (probabilities are integrated over a pdf)
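A small sketch contrasting the two kinds of random variable, using only the standard library. Note that `random.gauss` takes the standard deviation (not the variance) as its second argument; for N(0, 1) the two coincide. The fair-die example is an illustrative assumption.

```python
import random
import statistics
from fractions import Fraction

rng = random.Random(42)

# Continuous random variable X ~ N(0, 1): draw many samples.
samples = [rng.gauss(0.0, 1.0) for _ in range(100_000)]
print(statistics.fmean(samples))      # close to the mean, 0
print(statistics.pvariance(samples))  # close to the variance, 1

# Discrete random variable: a fair six-sided die with a pmf over countable outcomes.
pmf = {face: Fraction(1, 6) for face in range(1, 7)}
# The expectation of a discrete variable is a summation over its pmf.
expected_value = sum(face * p for face, p in pmf.items())
print(expected_value)  # 7/2
```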

sum squared error

$SSE(w) = \sum_{i=1}^{n} (y_i - w x_i)^2$, where the prediction is $\hat{y}_i = w x_i$
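A sketch of minimizing this SSE for the no-bias model $\hat{y} = wx$. Setting $\frac{d\,SSE}{dw} = -2\sum_i x_i (y_i - w x_i) = 0$ gives $w = \sum_i x_i y_i / \sum_i x_i^2$. The data below are made up for illustration.

```python
def sse(w, xs, ys):
    """Sum of squared errors for the no-bias model y_hat = w * x."""
    return sum((y - w * x) ** 2 for x, y in zip(xs, ys))

def fit_no_bias(xs, ys):
    """Closed-form least-squares slope: w = sum(x*y) / sum(x^2)."""
    return sum(x * y for x, y in zip(xs, ys)) / sum(x * x for x in xs)

xs = [1.0, 2.0, 3.0, 4.0]
ys = [2.1, 3.9, 6.2, 7.8]
w = fit_no_bias(xs, ys)     # 59.7 / 30 = 1.99
print(w, sse(w, xs, ys))
```

Nudging w in either direction increases the SSE, which is what "least squares" means here.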

Four things required for ML

data

model

optimization

goal (objective function, i.e., the loss function to optimize, e.g., SSE)

Regression with a bias term: how do you determine the function?

the same way as with SSE: use least squares, take the partial derivative of the SSE with respect to each parameter, w0 (y-intercept) and w1 (slope), set it to zero, and solve for that variable
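A sketch of the resulting closed-form solution for $\hat{y} = w_0 + w_1 x$. From $\partial SSE / \partial w_0 = 0$ we get $w_0 = \bar{y} - w_1 \bar{x}$; substituting into $\partial SSE / \partial w_1 = 0$ gives $w_1 = \sum_i (x_i - \bar{x})(y_i - \bar{y}) / \sum_i (x_i - \bar{x})^2$. The toy data are an assumption chosen so the answer is exact.

```python
def fit_with_bias(xs, ys):
    """Least-squares intercept w0 and slope w1 for y_hat = w0 + w1 * x."""
    n = len(xs)
    x_mean = sum(xs) / n
    y_mean = sum(ys) / n
    # dSSE/dw1 = 0 (after substituting w0) gives covariance over variance.
    w1 = (sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, ys))
          / sum((x - x_mean) ** 2 for x in xs))
    # dSSE/dw0 = -2 * sum(y - w0 - w1*x) = 0  =>  w0 = y_mean - w1 * x_mean
    w0 = y_mean - w1 * x_mean
    return w0, w1

xs = [0.0, 1.0, 2.0, 3.0]
ys = [1.0, 3.0, 5.0, 7.0]   # exactly y = 1 + 2x
w0, w1 = fit_with_bias(xs, ys)
print(w0, w1)  # 1.0 2.0
```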

Linearity Assumption

forces the predictor to be a linear combination of the features (assumes the true function can be approximated by a linear or constant shape)

homoscedasticity

the variance of the residual error is assumed to be constant over the entire feature space

independence

each instance is assumed to be independent of every other instance

this is independence between samples; it is related to, but different from, multicollinearity (which concerns features)

fixed features

input features are considered “fixed”: they are treated as given constants, not random variables (no pdf or pmf). This implies they are free of measurement error.

absence of multicollinearity

features of an instance must not be strongly correlated: if two features are perfectly correlated, there are infinitely many solutions and the parameters cannot be solved for uniquely (strong but imperfect correlation makes the solution unstable)

i.e., independence of the features within a feature vector

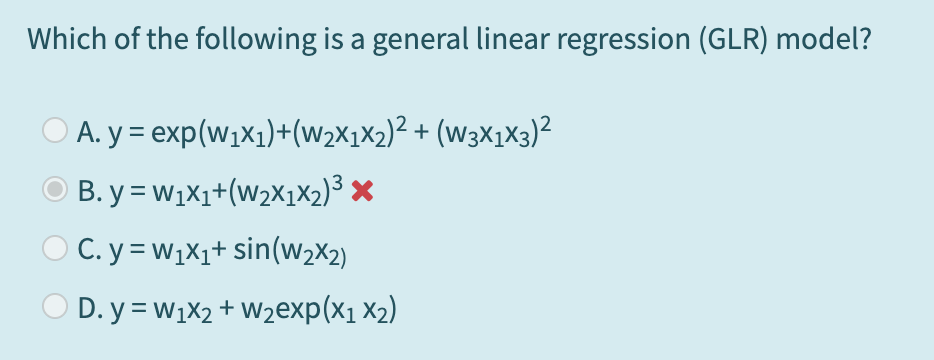

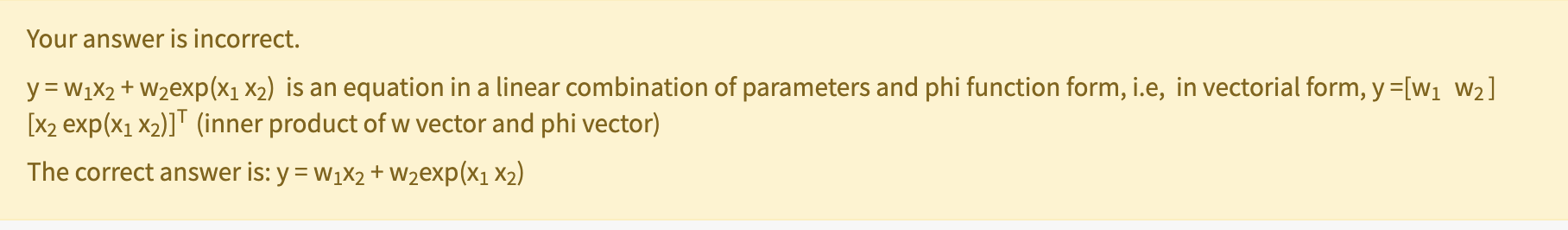

General Linear Regression

used when a linear function cannot estimate the target well and a polynomial is needed

Phi(x)

basis function - common ones are polynomial

polynomial basis function with p = 1 and $\phi_1(x) = x$

GLR reduces to univariate linear regression (d = 1)

polynomial basis function with p = 1 and $\phi(\mathbf{x}) = \mathbf{x}$ (x is a feature vector)

GLR reduces to multivariate linear regression (d > 1)

polynomial basis function with p = 2 and $\phi_i(x) = x^i$, up to $x^2$

GLR reduces to univariate polynomial regression of order 2 (d = 1)
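A sketch of building the GLR design matrix for a univariate polynomial basis. It assumes $\phi_0(x) = 1$ supplies the bias column and $\phi_i(x) = x^i$ for i = 1..p, so p = 1 recovers plain linear regression and p = 2 the order-2 case above. The function names are hypothetical.

```python
def polynomial_features(x, p):
    """Map a scalar x to [phi_0(x), ..., phi_p(x)] = [1, x, x^2, ..., x^p]."""
    return [x ** i for i in range(p + 1)]

def design_matrix(xs, p):
    """One row of basis-function values per instance."""
    return [polynomial_features(x, p) for x in xs]

Phi = design_matrix([1.0, 2.0, 3.0], p=2)
print(Phi)  # [[1.0, 1.0, 1.0], [1.0, 2.0, 4.0], [1.0, 3.0, 9.0]]
```

The model stays linear in the parameters w even though it is nonlinear in x, so the same least-squares machinery applies to Phi.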

Number of parameters of regression GLR

grows rapidly (combinatorially) with the polynomial order p and the feature dimension d: $\frac{(d+p)!}{d!\,p!}$
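A quick check of how fast this count grows. The formula $\frac{(d+p)!}{d!\,p!}$ is the binomial coefficient $\binom{d+p}{p}$, the number of monomials of total degree at most p in d variables (bias term included), so `math.comb` computes it directly.

```python
from math import comb, factorial

def num_glr_parameters(d, p):
    """(d + p)! / (d! p!) parameters for a full polynomial basis of order p in d features."""
    return comb(d + p, p)

# Same value as the factorial form in the notes.
assert num_glr_parameters(3, 2) == factorial(5) // (factorial(3) * factorial(2))

for d, p in [(1, 1), (1, 2), (3, 2), (10, 3), (100, 3)]:
    print(d, p, num_glr_parameters(d, p))
# 1 1 2
# 1 2 3
# 3 2 10
# 10 3 286
# 100 3 176851
```

With d = 1, p = 1 the count is 2 (w0 and w1), matching univariate linear regression.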

multivariate

the feature dimension d > 1, i.e., there is more than one feature in the feature vector x

univariate

only one feature in the feature vector x (d = 1)

Why is the correlation coefficient matrix important?

when designing your feature vector, it shows which features may contribute to multicollinearity and weaken your analysis
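A sketch of computing the Pearson correlation coefficient matrix over feature columns in pure Python. The bias column is left out, since a constant column has zero variance and its correlation is undefined. The toy features and the 0.9 flagging threshold are illustrative assumptions, not a rule from the notes.

```python
import math

def correlation_matrix(columns):
    """Pearson correlation between every pair of feature columns (no bias column)."""
    def center(col):
        m = sum(col) / len(col)
        return [v - m for v in col]
    centered = [center(c) for c in columns]
    norms = [math.sqrt(sum(v * v for v in c)) for c in centered]
    k = len(columns)
    return [[sum(a * b for a, b in zip(centered[i], centered[j])) / (norms[i] * norms[j])
             for j in range(k)]
            for i in range(k)]

x1 = [1.0, 2.0, 3.0, 4.0, 5.0]
x2 = [2.0, 4.1, 5.9, 8.2, 9.9]   # nearly 2 * x1
x3 = [3.0, 1.0, 4.0, 1.0, 5.0]
R = correlation_matrix([x1, x2, x3])
for row in R:
    print([round(r, 3) for r in row])

# Heuristically flag feature pairs that may cause multicollinearity.
flagged = [(i, j) for i in range(3) for j in range(i + 1, 3) if abs(R[i][j]) > 0.9]
print(flagged)  # [(0, 1)]
```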

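How do you determine whether you can use the left or right inverse?

rank!!! The left inverse exists if $\operatorname{rank}(\Phi^T \Phi) = k + 1$ (full column rank: k features plus the bias column)

the right inverse exists if $\operatorname{rank}(\Phi \Phi^T) = n$ (full row rank: n samples)

don’t use the correlation coefficient matrix for this; that’s for heuristic and further analysis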
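A sketch of the rank test, assuming Φ is an n × (k+1) design matrix stored as a list of rows. Rank is computed with Gaussian elimination and a small tolerance; the helper names and example matrices are hypothetical.

```python
def transpose(A):
    return [list(row) for row in zip(*A)]

def matmul(A, B):
    Bt = transpose(B)
    return [[sum(a * b for a, b in zip(row, col)) for col in Bt] for row in A]

def rank(A, tol=1e-10):
    """Rank via Gaussian elimination with partial pivoting."""
    M = [list(map(float, row)) for row in A]
    n_rows, n_cols = len(M), len(M[0])
    r = 0
    for c in range(n_cols):
        if r == n_rows:
            break
        pivot = max(range(r, n_rows), key=lambda i: abs(M[i][c]))
        if abs(M[pivot][c]) < tol:
            continue  # no usable pivot in this column
        M[r], M[pivot] = M[pivot], M[r]
        for i in range(r + 1, n_rows):
            factor = M[i][c] / M[r][c]
            for j in range(c, n_cols):
                M[i][j] -= factor * M[r][j]
        r += 1
    return r

def inverse_options(Phi):
    """Return (left inverse exists, right inverse exists) for an n x (k+1) matrix Phi."""
    n, k_plus_1 = len(Phi), len(Phi[0])
    PhiT = transpose(Phi)
    left = rank(matmul(PhiT, Phi)) == k_plus_1   # Phi^T Phi is (k+1) x (k+1)
    right = rank(matmul(Phi, PhiT)) == n         # Phi Phi^T is n x n
    return left, right

Phi_tall = [[1, 0, 1], [1, 1, 0], [1, 2, 1], [1, 3, 5]]   # n=4, independent columns
Phi_dup = [[1, 2, 2], [1, 3, 3], [1, 5, 5], [1, 7, 7]]    # duplicated feature column
Phi_wide = [[1, 0, 2], [1, 1, 3]]                         # n=2 < k+1=3
print(inverse_options(Phi_tall))  # (True, False)
print(inverse_options(Phi_dup))   # (False, False)
print(inverse_options(Phi_wide))  # (False, True)
```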

what does a singular matrix imply

there are infinitely many solutions: the GLR model can still be solved, but the parameters are not unique
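A small illustration of non-unique parameters, assuming a design matrix with a bias column and a feature duplicated exactly (perfect multicollinearity, so $\Phi^T \Phi$ is singular). Any split of the slope between the two identical columns gives the same predictions; the Moore-Penrose pseudo-inverse would pick the minimum-norm one, here (1, 1, 1).

```python
def predict(w, Phi):
    """Linear model predictions Phi @ w, one per row."""
    return [sum(wi * xi for wi, xi in zip(w, row)) for row in Phi]

# Columns: bias, feature x, and an exact copy of x.
Phi = [[1.0, 1.0, 1.0], [1.0, 2.0, 2.0], [1.0, 3.0, 3.0]]
y = [3.0, 5.0, 7.0]   # y = 1 + 2x

# Every w = (1, a, 2 - a) fits the data perfectly.
candidates = [[1.0, 2.0, 0.0], [1.0, 0.0, 2.0], [1.0, 1.0, 1.0], [1.0, 5.0, -3.0]]
for w in candidates:
    print(w, predict(w, Phi))   # each prediction list equals y
```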

causes of no left inverse

multicollinearity, or perfectly correlated features (problem between features)

causes of no right inverse

dependent samples, or perfect correlations among SAMPLES (problem between samples)

rank deficient

the rank is less than the full rank the matrix would have if its rows or columns were linearly independent; a rank-deficient square matrix is not invertible

pros and cons of the pseudo-inverse solution

+: fast, analytic (closed-form) solution

-: expensive if both k and n are large
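A sketch of the closed-form (left pseudo-inverse) solution $w = (\Phi^T \Phi)^{-1} \Phi^T y$. As an implementation assumption, it solves the normal equations by Gauss-Jordan elimination instead of forming the inverse explicitly. Building $\Phi^T \Phi$ costs about O(n k²) and solving it about O(k³), which is where the expense for large k and n comes from.

```python
def solve_normal_equations(Phi, y, tol=1e-12):
    """Least-squares w solving (Phi^T Phi) w = Phi^T y by Gauss-Jordan elimination."""
    k1 = len(Phi[0])
    # Phi^T Phi is k1 x k1; Phi^T y has length k1.
    A = [[sum(row[i] * row[j] for row in Phi) for j in range(k1)] for i in range(k1)]
    b = [sum(row[i] * yi for row, yi in zip(Phi, y)) for i in range(k1)]
    M = [A[i] + [b[i]] for i in range(k1)]   # augmented matrix [A | b]
    for c in range(k1):
        pivot = max(range(c, k1), key=lambda r: abs(M[r][c]))
        if abs(M[pivot][c]) < tol:
            raise ValueError("Phi^T Phi is singular: no left inverse (check column rank)")
        M[c], M[pivot] = M[pivot], M[c]
        for r in range(k1):
            if r != c:
                f = M[r][c] / M[c][c]
                for j in range(c, k1 + 1):
                    M[r][j] -= f * M[c][j]
    return [M[i][k1] / M[i][i] for i in range(k1)]

# Bias column plus two independent features; y = 1 + 2*x1 - x2 exactly.
Phi = [[1, 0, 1], [1, 1, 0], [1, 2, 1], [1, 3, 5]]
y = [0, 3, 4, 2]
print(solve_normal_equations(Phi, y))   # approximately [1.0, 2.0, -1.0]
```

A duplicated feature column makes $\Phi^T \Phi$ singular, and the function raises instead of returning a misleading answer.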

multicollinearity issue

row rank issue

independence between observations

col rank issue

multicollinearity between features

calc coeff matrix

don’t include the bias column (it is constant, so its correlation is undefined)

calc rank