(7) mirror neurons

what are mirror neurons?

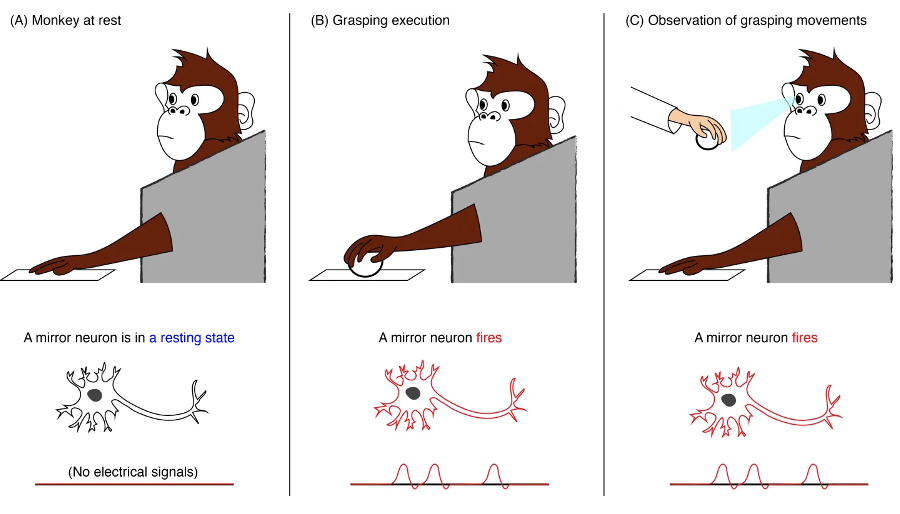

mirror neurons are a class of neurons that become active (i.e., firing) both when individuals perform a specific motor act and when they observe a similar act done by others. Thus, the neurons "mirror" the behaviour of the other, as though the observer were itself acting

such neurons have been directly observed in humans and non-human primates, and they can also respond to the sound of actions

there have been over 800 published papers proposing mirror neurons to be the neuronal substrate underlying a vast array of different functions, e.g., empathy (Gazzola et al., 2006), autistic behaviour (Dapretto et al., 2006), speech perception (D’Ausilio et al., 2009), language comprehension (Aziz-Zadeh et al., 2006), and imitation (Iacoboni, 2005).

some researchers have also argued that mirror neuron systems in the human brain help us understand the actions and intentions of other people

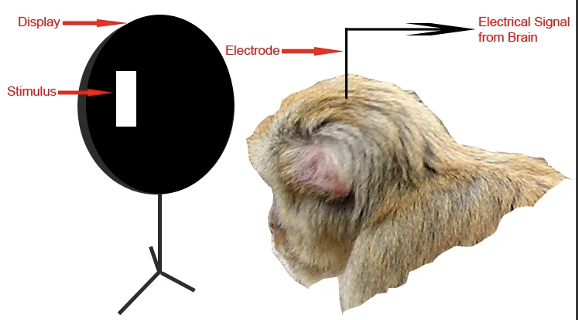

gold standard technique for identifying mirror neurons (identifying them, not establishing their function!)

single-unit recordings

the technique requires a surgical opening of the skull and then the implanting of recording microelectrodes

single-unit recordings provide a method of measuring the electrophysiological responses of a single neuron.

a microelectrode (glass micro-pipettes or metal microelectrodes made of platinum) is inserted into the brain, where it can record the rate of change in voltage with respect to time - highly invasive!!

microelectrodes are placed close to the cell membrane, which allows extracellular recording (Glenberg, 2011)

understanding action and observation

who is this technique mostly used on

because of the invasive nature of the procedure, it is used most often with animals

however, it has been used on patients with Parkinson’s disease or epilepsy

it offers high spatial and temporal resolution for assessing the relationship between brain structure, function, and behaviour

a simple paradigm is shown in the screenshot - the researchers listen to the recorded signal as sound (the crackling sound is the neuron firing)

by looking at brain activity at the neuron level, researchers can link brain activity to behaviour and create neuronal maps describing the flow of information through the brain (Boraud et al., 2002)

first evidence of mirror neurons

Giacomo Rizzolatti’s lab

the discovery of mirror neurons was due to both serendipity and skill. In the 1990s, Rizzolatti et al. found that some neurons in an area of macaque monkeys' premotor cortex called F5 fired when the monkeys did things like reach for or bite a peanut.

the researchers wanted to learn more about how these neurons responded to different objects and actions, so they used electrodes to record activity from individual F5 neurons while giving the monkeys different objects to handle

they quickly noticed that when the experimenter picked up an object (a peanut) to hand it to the monkey, some of the monkey's motor neurons would start to fire - these were the same neurons that would also fire when the monkey itself grasped the peanut

similarly, the same neuron would fire when the monkey put a peanut in its mouth and when the experimenter put a peanut in his own mouth

“we were lucky because there was no way to know such neurons existed, but we were in the right area to find them”

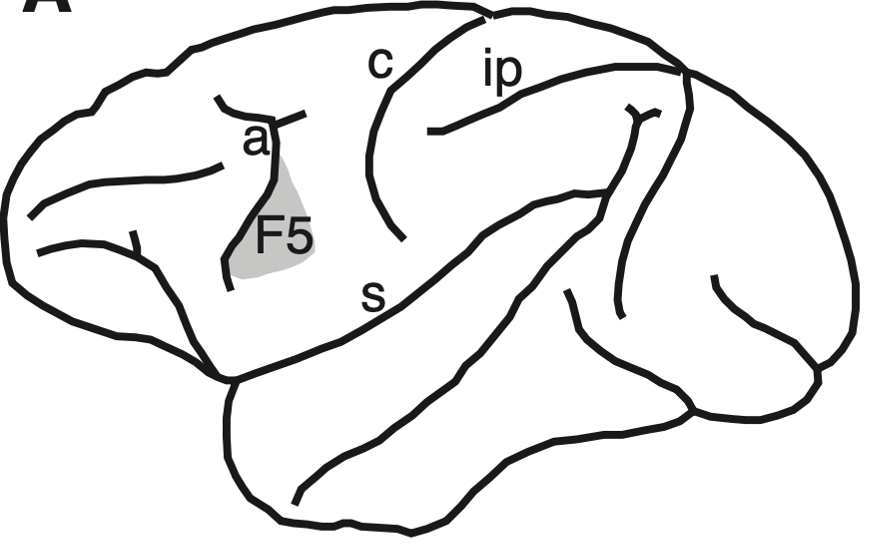

the F5

lateral view of macaque brain with the location of the premotor cortex area F5.

major sulci: a, arcuate; c, central; ip, intraparietal; s, sylvian sulcus.

the premotor cortex is a crucial part of the brain, which is believed to have direct control over the movements of voluntary muscles

since the discovery of mirror neurons, it is also believed to play a role in understanding the actions of others, and in using abstract rules to perform specific tasks

this is where most mirror neurons have been found in response to observation and action

study on auditory mirror neurons

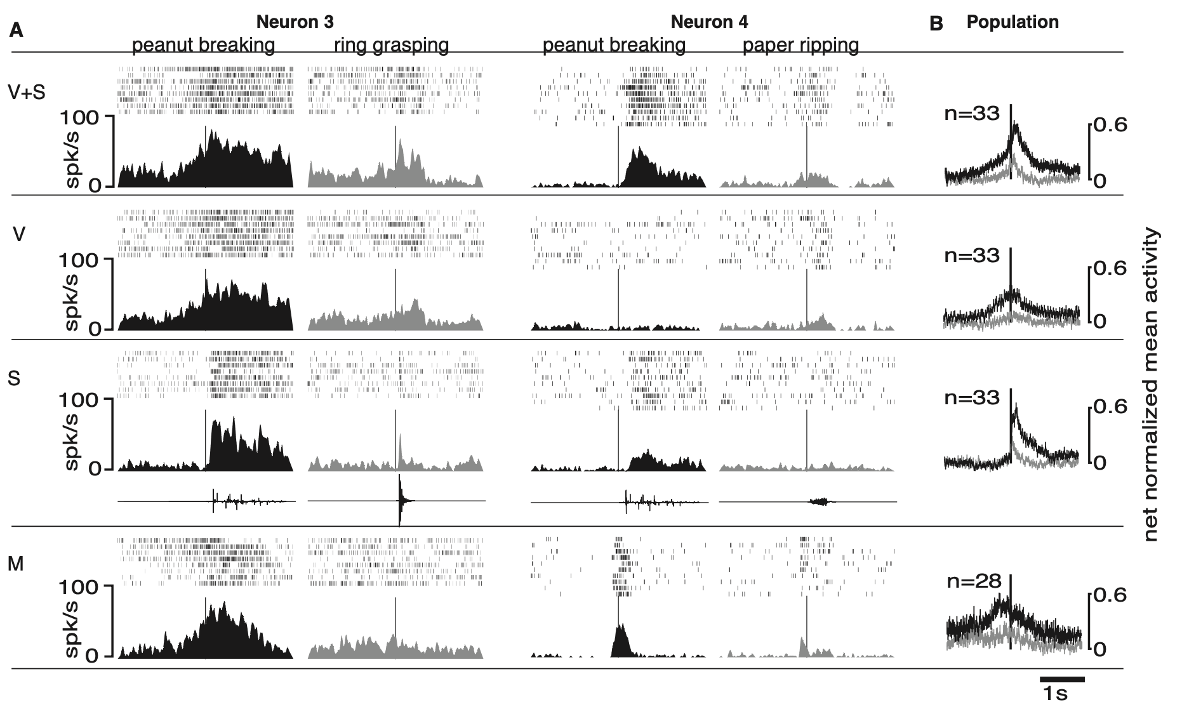

the experiments were carried out in three awake macaque monkeys. 497 neurons were recorded. In an initial group of neurons (n = 211), the authors studied auditory properties by using sounds produced by the experimenter’s actions and non-action-related sounds; in another group (n = 286), they used digitised action-related sounds.

experimental paradigm I:

aim: control for activation of a set of neurons when the stimuli were presented 1) visually vs 2) visually + sound vs 3) sound vs 4) control

in the initial group of neurons action-related stimuli were peanut breaking, paper ripping, plastic crumpling, dry food manipulation, paper shaking, dropping a stick on the floor and metal hitting metal

wanted to examine if there were differences in neural activation across actions

all stimuli were presented in and out of sight. Only neurons showing strong and consistent auditory responses were analysed further with non-action-related control sounds

non-action-related sounds included: white noise, pure tones, clicks, monkey and other animal calls. Non-action-related sounds were generated by a computer and played back through a loudspeaker

in all conditions the data acquisition was triggered by the experimenter pressing a foot pedal out of the monkey’s sight

second experimental paradigm

experimental paradigm II:

in the subsequent group of neurons, they addressed the issue of the neurons’ capacity to differentiate between two different actions based on vision and sound.

two actions were then randomly presented in:

vision-and-sound (‘V+S’) condition

sound-only (‘S’) condition

vision-only (‘V’) condition

motor / control (‘M’=monkeys performing the object-directed actions) condition

all monkeys were well acquainted with the normal acoustic consequences of the actions. To test the contributions of sound and vision to the neurons’ responses separately, in all sensory testing conditions (V+S, V and S) the physical properties of the objects targeted by the experimenter’s action were modified so that acting on them did not produce any sound

in the ‘S’ condition, pre-recorded, digitised sounds of the action were then played back while the experimenter stood behind the loudspeaker

in the ‘V+S’ and the ‘V’ conditions, the experimenter performed the silent version of the action next to the loudspeaker

in the ‘V+S’ condition, the sound of the action was played back through the loudspeaker at the time when it would have normally occurred.

in all conditions, the experimenter pressed a foot pedal out of the monkey’s sight to trigger the acquisition of 4 s of data centred upon the pedal-press event. In conditions ‘S’ and ‘V+S’, the foot pedal also triggered the playback of the sound. A fixed 50 ms delay occurred between the press of the pedal and the playback of the sound, a delay that was taken into account by the experimenter in determining when to press the foot pedal in the ‘V+S’ condition.

full testing (8-10 trials per condition) including at least one motor action was completed in 33 neurons
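the acquisition window described above can be sketched in a few lines of Python. This is only an illustration of the timing logic (4 s of data centred on the pedal press, sound playback 50 ms later), not the authors' acquisition software; the function name `extract_trial` and the spike times are hypothetical.

```python
def extract_trial(spike_times_s, pedal_time_s, window_s=4.0, sound_delay_s=0.050):
    """Keep spikes inside a window centred on the pedal press and
    re-express them relative to sound onset (pedal press + fixed delay)."""
    half = window_s / 2.0
    start, end = pedal_time_s - half, pedal_time_s + half
    sound_onset = pedal_time_s + sound_delay_s
    return [t - sound_onset for t in spike_times_s if start <= t <= end]


# Hypothetical spike times (seconds since the start of the recording).
spikes = [9.10, 9.95, 10.06, 10.12, 10.40, 12.50]
trial = extract_trial(spikes, pedal_time_s=10.0)

# The spike at 12.50 s falls outside the 8.0-12.0 s window and is dropped.
print([round(t, 2) for t in trial])  # [-0.95, -0.1, 0.01, 0.07, 0.35]
```

aligning every trial to sound onset in this way is what lets the responses from the 8-10 trials per condition be stacked and compared.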

findings

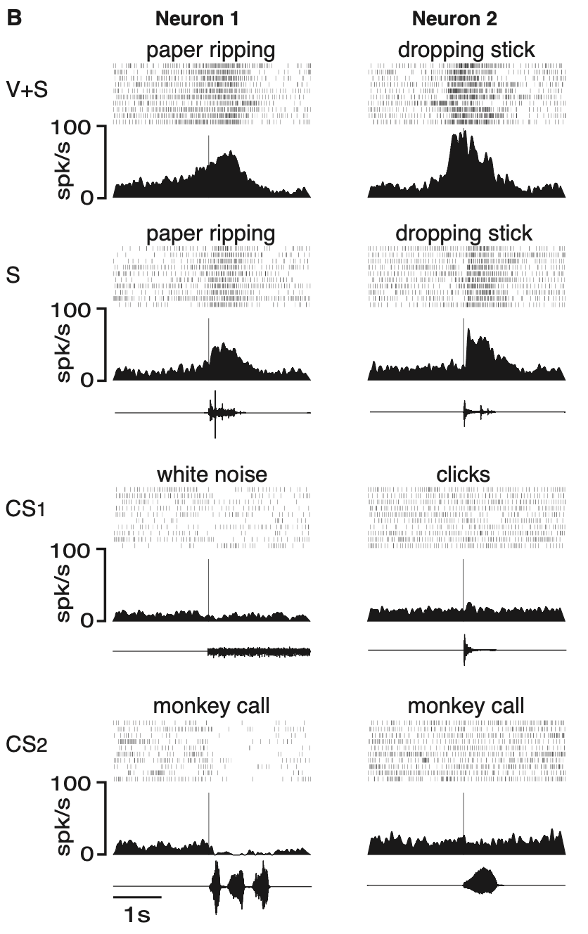

Kohler et al (2002)

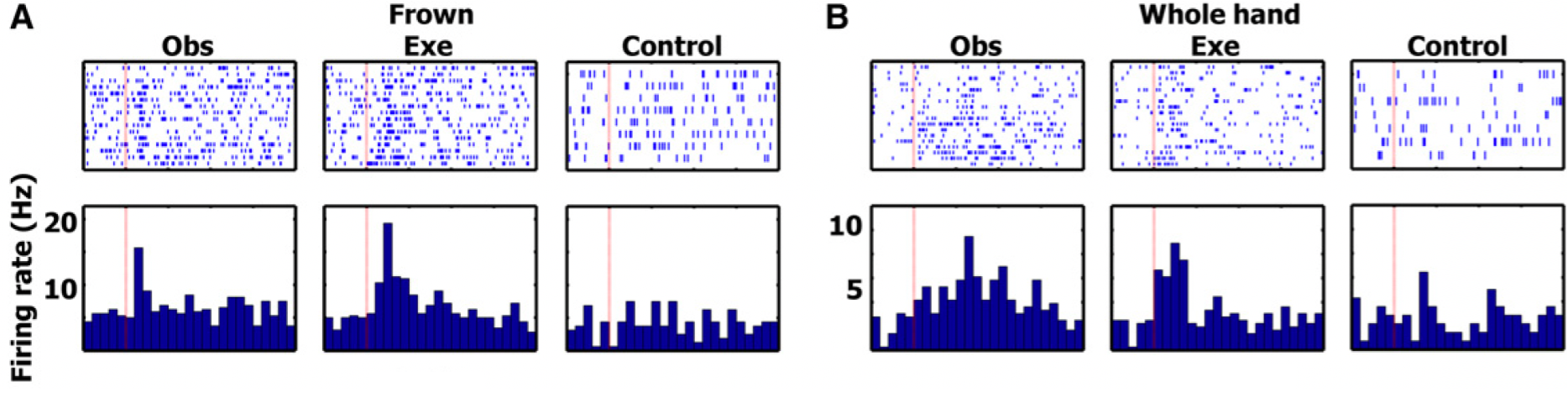

two examples of neurons responding to the sound of actions

rastergrams are shown together with spike density functions

text above each rastergram describes the sound or action used to test the neuron

vertical lines indicate the time when the sound occurred

traces under the spike density functions in S and in CS conditions are oscillograms of the sounds used to test the neurons
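a spike density function is a smoothed, trial-averaged estimate of firing rate. One common way to compute it is to replace each spike with a small Gaussian kernel and average across trials. The sketch below assumes that approach; the kernel width, the step size and the spike times are illustrative values, not those used by Kohler et al.

```python
import math


def spike_density(trials, t_start, t_stop, step=0.01, sigma=0.02):
    """Trial-averaged firing rate (spikes/s) using a Gaussian kernel.

    trials: list of trials, each a list of spike times in seconds,
            already aligned to the event of interest (time 0).
    sigma:  kernel standard deviation in seconds (assumed value).
    """
    n_bins = int(round((t_stop - t_start) / step)) + 1
    times = [t_start + i * step for i in range(n_bins)]
    norm = 1.0 / (sigma * math.sqrt(2.0 * math.pi))
    density = []
    for t in times:
        total = sum(norm * math.exp(-0.5 * ((t - s) / sigma) ** 2)
                    for trial in trials for s in trial)
        density.append(total / len(trials))
    return times, density


# Hypothetical aligned spike times for three trials (one row of a rastergram each).
trials = [[0.10, 0.12, 0.15], [0.11, 0.14], [0.09, 0.13, 0.16, 0.40]]
times, sdf = spike_density(trials, t_start=-0.5, t_stop=1.0)
peak_time = times[sdf.index(max(sdf))]
print(f"peak firing rate near t = {peak_time:.2f} s")
```

each row of the rastergram corresponds to one inner list of `trials`; the curve in `sdf` is the kind of trace drawn beneath it.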

neuron 1:

this neuron responded to the vision and sound of a tearing action (paper ripping; V+S)

the sound of the same action performed out of the monkey’s sight was equally effective (S)

sounds that were non–action-related (white noise, monkey calls) did not evoke any excitatory response (control sounds: CS1, CS2)

as often occurs in F5 neurons during strong arousal, a decrease in firing rate was observed

neuron 2:

this neuron responded to the vision and sound of a hand dropping a stick (V+S)

the response was also present when the monkey heard the sound of the stick hitting the floor (S)

non–action-related arousing sounds did not produce any consistent excitation (CS1, CS2)

showing these neurons fire in response to both watching the action and hearing the action!

more data from neurons 3, 4 and population

isolated sound and visual

neuron 3:

discharged when the monkey observed the experimenter breaking a peanut (V+S, and V) and when the monkey heard the peanut being broken without seeing the action (S)

the neuron also discharged when the monkey made the same action (M)

grasping a ring and the resulting sound of this action evoked smaller responses

neuron 4:

another example of a selective audio-visual mirror neuron

this neuron responded vigorously when the monkey saw and heard the experimenter breaking a peanut (V+S) and much less when it ripped a sheet of paper (M) - difference between actions within same neuron

the sound alone of breaking a peanut produced a significant but smaller response (S), showing the importance of the visual modality for this neuron

vision without sound triggered no response

this allows us to measure each individual neuron, showing the selectivity to specific actions

overall findings

what makes a neuron a mirror neuron is that it is active both when the animal engages in an activity, such as grabbing a peanut with the hand, and when the animal observes or hears the experimenter engage in the same or a closely related activity

area F5 contains a population of neurons—audio-visual mirror neurons—that discharge not just to the execution or observation of a specific action but also when this action can only be heard (Kohler et al., 2002)

a further difference is that audio-visual mirror neurons also discharge during execution of specific motor actions - they not only contain schemas on how an action should be executed (for example, grip selection) but also the action ideas (in terms of their goals e.g., grasp, hold, or break)

thus, audiovisual mirror neurons could be used to plan/execute actions (as in our motor conditions) and to recognise the actions of others (as in our sensory conditions), even if only heard

further research demonstrated that about one third of these mirror neurons are ‘‘strictly congruent’’—that is, they require a close match in action production and action perception, whereas about two thirds are broadly congruent and respond to actions with the same goal (e.g., grabbing a peanut) but different movement specifics (e.g., using a tool not the hand)

once mirror neurons had been identified in monkeys, the next step was to look for them in humans!!

first evidence of mirror neurons in humans

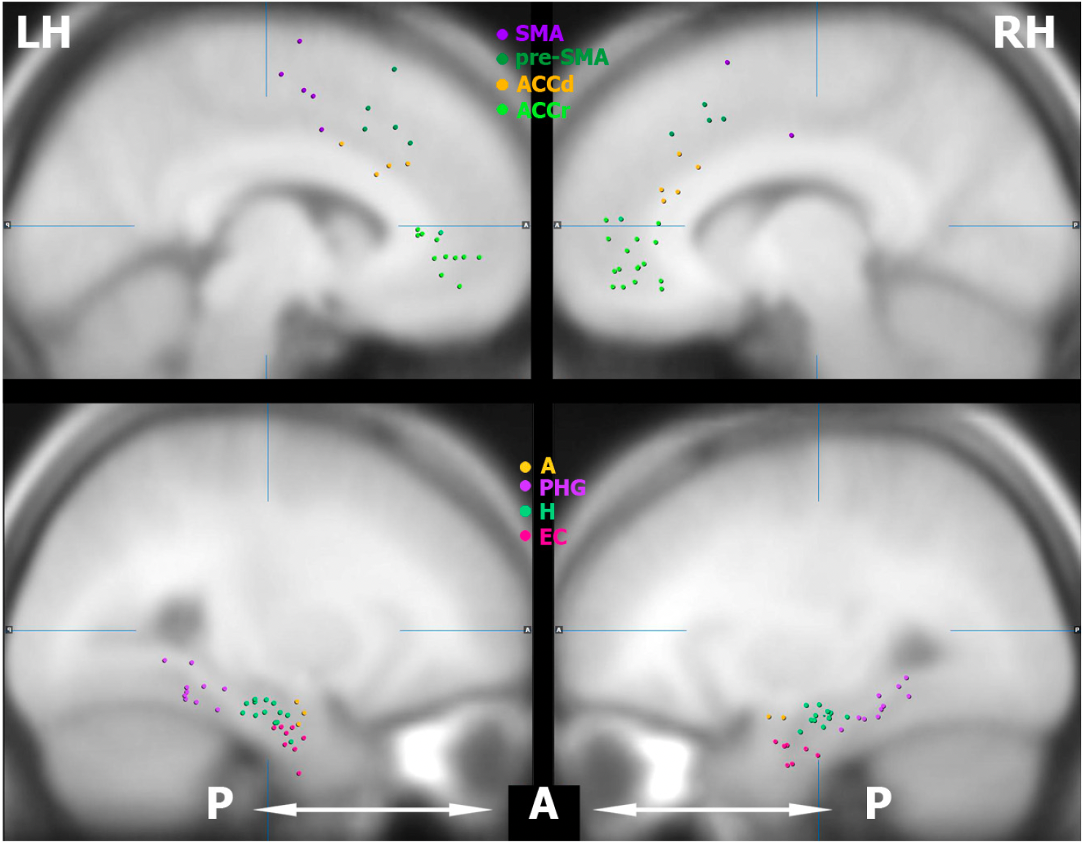

Mukamel et al. (2010) reported on experiments using single-unit recordings in humans

the electrodes were implanted as a prelude to surgery rather than primarily for research, and thus, the location of the electrodes was not selected to test various hypotheses

nonetheless, these data provide some of the most convincing evidence in humans for individual cells with mirror properties

Mukamel et al. (2010) experimental procedure

patients:

the authors recorded extracellular single-unit activity from 21 patients with pharmacologically intractable epilepsy

patients were implanted with intracranial depth electrodes to identify seizure foci for potential surgical treatment

electrode location was based solely on clinical criteria and the patients provided written informed consent to participate in the experiments. Mean patient age was 31; 12 were male and 15 were right-handed

each electrode terminated in a set of nine 40-μm platinum-iridium microwires and the signals from eight micro-electrodes were referenced to the ninth, lower impedance micro-electrode

experimental procedure

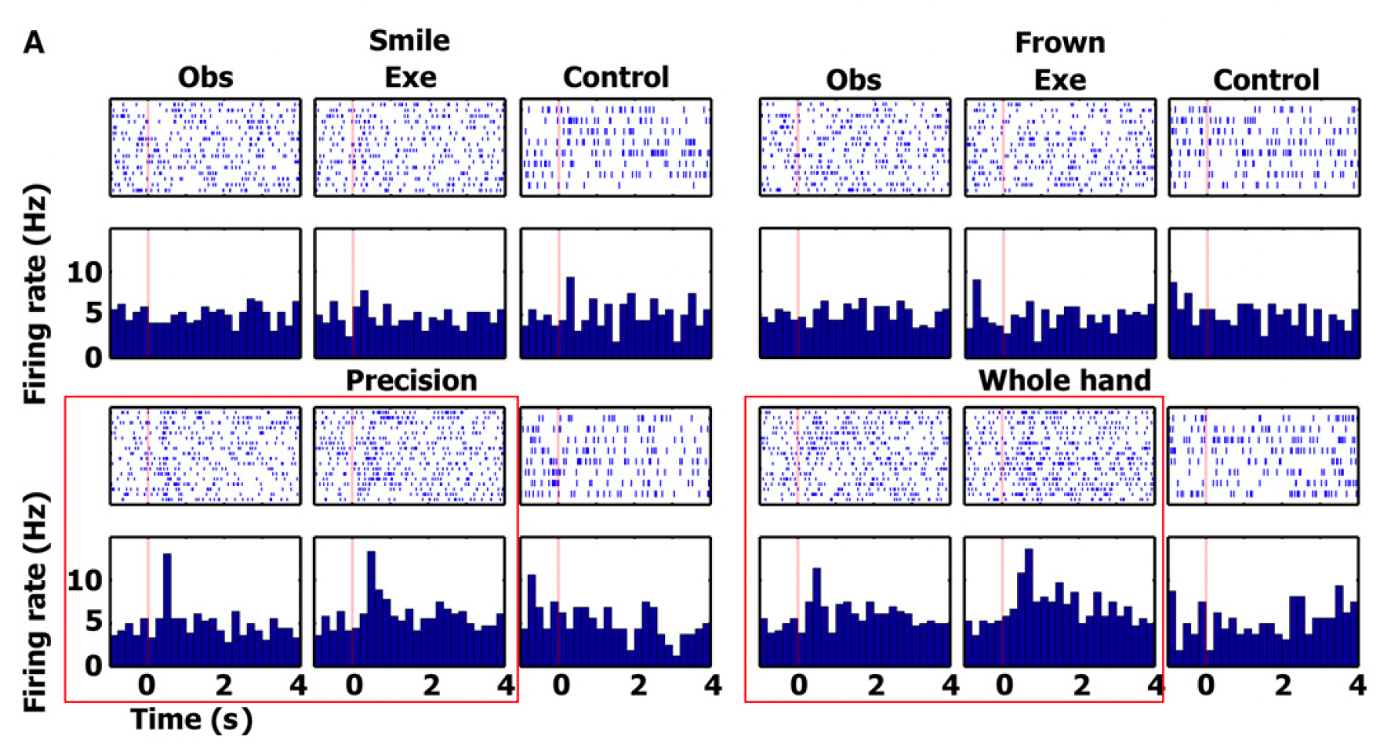

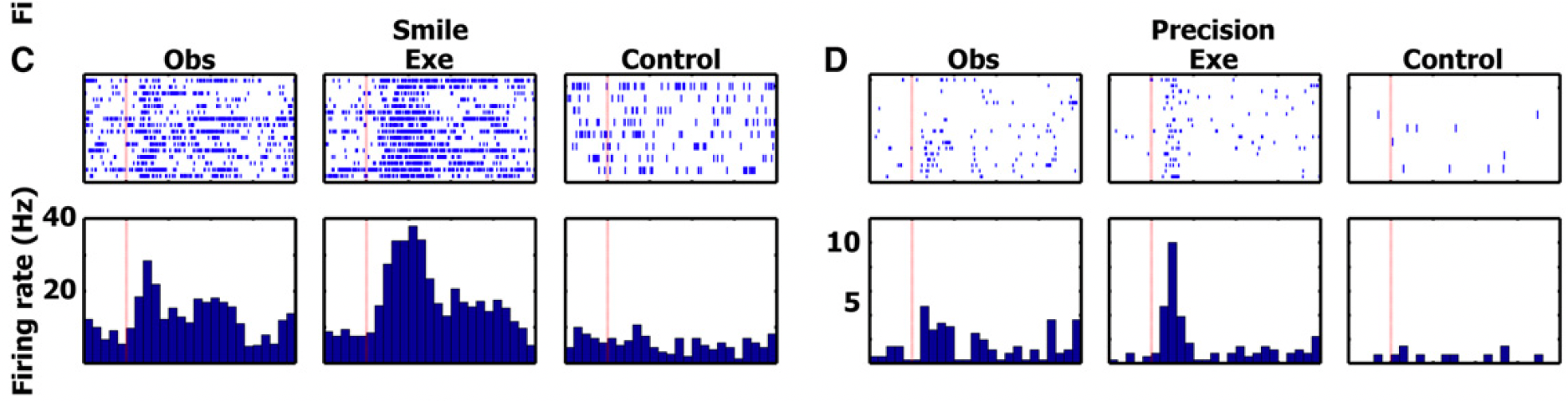

The experiment was composed of three parts - Grasp, Facial expressions and Control

grasping/hands experiment was made up of two conditions:

action-observation and action-execution

in the action-observation conditions, the subjects observed a 3 s video clip depicting a hand grasping a mug with either precision grip or whole-hand prehension

in the action-execution condition, the word ‘‘finger’’ appearing on the screen cued the subject to perform a precision grip on a mug placed next to the laptop. Similarly, the word ‘‘hand’’ cued the subject to perform a whole hand prehension. Observation and execution trials were randomly mixed.

facial expressions experiment was also composed of execution and observation trials

in the execution trials, the subjects smiled or frowned whenever the word ‘‘smile’’ or ‘‘frown,’’ respectively, appeared on the screen

in the observation conditions they simply observed an image of a smiling or frowning face. Observation and execution trials were randomly mixed.

in the control experiment, the subjects were presented with the same cue words used in the execution conditions of the facial expressions and grasping experiments (i.e., the words ‘‘finger,’’ ‘‘hand,’’ ‘‘smile,’’ or ‘‘frown’’). This time, the subjects had to covertly read the word and refrain from making facial gestures or hand movements

inhibiting also has an effect (but this is not relevant for this lecture)

Mukamel et al. (2010) cell recording procedure

overall, 1177 neurons were recorded

some of the areas examined:

SMA = Supplementary Motor Area

ACCd = dorsal aspect of anterior cingulate cortex

ACCr = rostral aspect of anterior cingulate

A = amygdala

PHG = parahippocampal gyrus

H = hippocampus

EC = entorhinal cortex

top row shows the electrodes in the medial frontal lobe and bottom row displays the electrodes in the medial temporal lobe

findings from cell in SMA

red boxes → responses significant

the authors focused the analyses on the action observation/execution matching cells responding during both observation and execution of particular actions. The figure displays one such cell in the SMA responding to the observation and execution of two grip types (precision and whole hand) - this cell did not respond to the control tasks or any of the facial gesture conditions.

significant changes in firing rate were tested with a two-tailed paired t test between the firing rate during baseline and a window of +200 to +1200 ms after stimulus onset
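the logic of this test can be sketched in Python: compute a firing rate per trial in a baseline window and in the +200 to +1200 ms response window, then run a paired t test on the per-trial differences. The baseline window (-1000 to 0 ms) and the spike times below are assumptions made for illustration; the notes do not state the baseline window the authors used, and this is not their analysis code.

```python
import math
import statistics


def firing_rate(spike_times_ms, start_ms, stop_ms):
    """Firing rate in spikes/s within [start_ms, stop_ms)."""
    count = sum(start_ms <= t < stop_ms for t in spike_times_ms)
    return count / ((stop_ms - start_ms) / 1000.0)


def paired_t(baseline_rates, response_rates):
    """Paired t statistic and degrees of freedom for per-trial rate pairs."""
    diffs = [r - b for b, r in zip(baseline_rates, response_rates)]
    n = len(diffs)
    t_stat = statistics.mean(diffs) / (statistics.stdev(diffs) / math.sqrt(n))
    return t_stat, n - 1


# Hypothetical spike times (ms relative to stimulus onset), one list per trial.
trials = [
    [-800, -300, 250, 400, 600, 900, 1100],
    [-500, 300, 500, 700, 1000],
    [-900, -200, 350, 450, 800, 1150],
    [-600, 210, 500, 950],
]
baseline = [firing_rate(tr, -1000, 0) for tr in trials]   # assumed baseline window
response = [firing_rate(tr, 200, 1200) for tr in trials]  # window from the notes
t_stat, df = paired_t(baseline, response)
print(f"t({df}) = {t_stat:.2f}")  # t(3) = 8.66
```

for a two-tailed test at the 0.05 level with 3 degrees of freedom the critical value is 3.182, so this made-up cell would count as responsive; in practice a library routine such as SciPy's `ttest_rel` would also return the p value.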

this neuron shows firing for observation and execution in the precision and whole hand condition, and no significant activation for the control condition or for any of the face stimuli

confirms activation of mirror neurons in humans

they then performed a chi-square test on the proportion of such cells in each region. The proportion of cells in the hippocampus, parahippocampal gyrus, entorhinal cortex and SMA was significantly higher than expected by chance
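a chi-square test of this kind compares the number of matching cells observed in a region with the number expected by chance. The sketch below uses a simple two-category goodness-of-fit form; the cell counts and the 5% chance level are hypothetical and are not taken from Mukamel et al., whose exact procedure is not given in these notes.

```python
def chi_square_vs_chance(n_cells, n_matching, chance_rate):
    """Chi-square statistic (df = 1) for matching vs non-matching cells
    against the counts expected under a given chance rate."""
    observed = [n_matching, n_cells - n_matching]
    expected = [n_cells * chance_rate, n_cells * (1.0 - chance_rate)]
    return sum((o - e) ** 2 / e for o, e in zip(observed, expected))


# Hypothetical region: 100 recorded cells, 14 of them matching, 5% chance level.
chi2 = chi_square_vs_chance(n_cells=100, n_matching=14, chance_rate=0.05)
print(f"chi-square = {chi2:.2f}")  # chi-square = 17.05
```

with one degree of freedom the 0.05 critical value is 3.841, so a proportion like this made-up one would be judged higher than chance.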

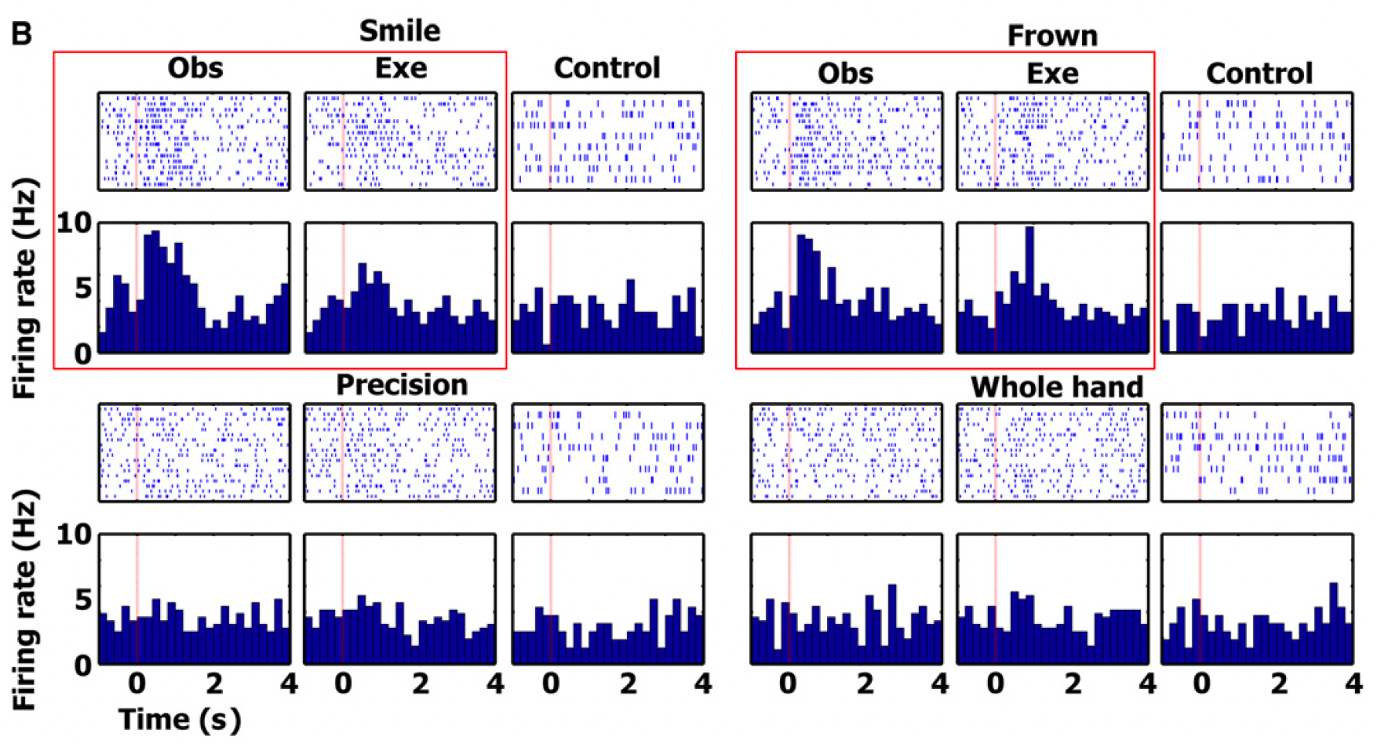

entorhinal cortex cell findings

figure displays another cell in entorhinal cortex responding to observation and execution of facial gestures (smile and frown)

showed significant activation for smile and frown observation and execution

but this cell did not respond to the control tasks or to observation and execution of the various grips - opposite of previous

shows how activation is specific

examples for frown and whole hand

A) single unit in left entorhinal cortex (EC) (area of the allocortex, located in medial temporal lobe) increasing its firing rate during both frown execution and frown observation

functions; memory, navigation, and the perception of time

B) single unit in right parahippocampal gyrus (surrounds the hippocampus and part of the limbic system) increasing its firing rate during whole hand grasp execution and observation

functions; memory encoding and retrieval

examples for smile and precision

C) single unit in left entorhinal cortex increasing its firing rate during smile execution and smile observation

D) single unit in right parahippocampal gyrus increasing its firing rate during precision grip execution and precision grip observation

Mukamel et al. (2010) discussion

overall, the authors recorded extracellular neural activity in 21 patients while they executed and observed facial emotional expressions and hand-grasping actions

in agreement with the known motor properties of SMA and pre-SMA, the results show a significantly higher proportion of cells responding during action-execution compared with action-observation in these regions.

the results also demonstrate mirroring spiking activity during action-execution and action-observation in human medial frontal cortex and human medial temporal cortex—two neural systems where mirroring responses at single-cell level have not been previously recorded.

taken together, these findings suggest the existence of multiple systems in the human brain endowed with neural mirroring mechanisms for flexible integration and differentiation of the perceptual and motor aspects of actions performed by self and others

one of the first studies of mirror neurons in humans!

study on understanding intentions of others

Iacoboni et al. (2005)

the ability to understand the intentions associated with the actions of others is a fundamental component of social behaviour, and its deficit is typically associated with autism (Frith, 2001)

the neural mechanisms underlying this ability are poorly understood

action implies a goal and an agent. Consequently, action recognition implies the recognition of a goal, and, from another perspective, the understanding of the agent’s intentions

e.g. John sees Mary grasping an apple. By seeing her hand moving toward the apple, he recognises what she is doing (‘‘that’s a grasp’’), but also that she wants to grasp the apple, that is, her immediate, stimulus-linked ‘‘intention,’’ or goal

more complex and interesting, however, is the problem of whether the mirror neuron system also plays a role in coding the global intention of the actor performing a given motor act. Mary is grasping an apple. Why is she grasping it? Does she want to eat it, or give it to her brother, or maybe throw it away?

the aim of the present study was to investigate the neural basis of “intention” used in this specific sense (the role played by the human mirror neuron system in this type of intention understanding) to indicate the ‘‘why’’ of an action - in addition to the action, are mirror neurons reflecting the intentions?

the competing hypotheses

an important clue for clarifying the intentions behind the actions of others is given by the context in which these actions are performed. The same action done in two different contexts acquires different meanings and may reflect two different intentions. Thus, what the authors aimed to investigate was whether the observation of the same grasping action, either embedded in contexts that cued the intention associated with the action or in the absence of a context cueing the observer, elicited the same or differential activity in mirror neuron areas for grasping in the human brain.

if the mirror neuron system simply codes the type of observed action and its immediate goal, then the activity in mirror neuron areas should not be influenced by the presence or the absence of context. If, in contrast, the mirror neuron system codes the global intention associated with the observed action, then the presence of a context that cues the observer should modulate activity in mirror neuron areas.

to test these competing hypotheses, the authors studied normal volunteers using fMRI which allows in vivo monitoring of brain activity

experimental procedure

participants: 23 right-handed participants (mean age of 26). 11 participants (six females) received Implicit instructions, while 12 participants (nine females) received Explicit instructions

the participants receiving Implicit instructions were simply instructed to watch the clips

the participants receiving Explicit instructions were told to pay attention to the various objects displayed in the Context clips, to pay attention to the type of grip in the Action clip, and to try to figure out the intention motivating the grasping action in the Intention clips

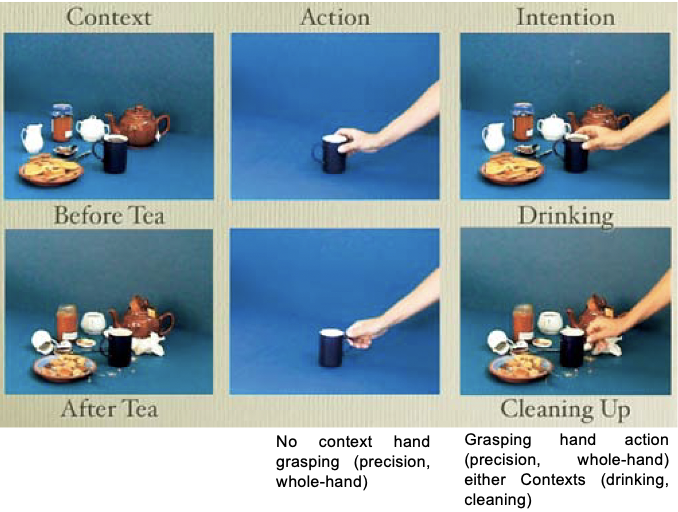

stimuli and instructions

there were 3 different types of 24-s video clips - Context, Action, and Intention

context - there were two types of context video clips

they both showed a scene with a series of three-dimensional objects (a teapot, a mug, cookies, a jar, etc)

the objects were displayed either as just before having tea (‘‘drinking’’ context) or as just after having had tea (‘‘cleaning’’ context)

action - a hand was shown grasping a cup in absence of a context on an objectless background. The grasping action was either a precision grip (the hand grasping the cup handle) or a whole-hand prehension (the hand grasping the cup body). The two grips were intermixed in the Action clip

intention video clips - there were 2 types

they presented the grasping action in the two Context conditions, the ‘‘drinking’’ and the ‘‘cleaning’’ contexts. Precision grip and whole hand prehension were intermixed in both ‘‘drinking’’ and ‘‘cleaning’’ Intention clips. A total of eight grasping actions were shown during each Action clip and each Intention clip.

the ‘‘drinking’’ context suggested that the hand was grasping the cup to drink. The ‘‘cleaning’’ context suggested that the hand was grasping the cup to clean up. Thus, the Intention condition contained information that allowed the understanding of intention, whereas the Action and Context conditions did not (i.e., the Action condition was ambiguous, and the Context condition did not contain any action)

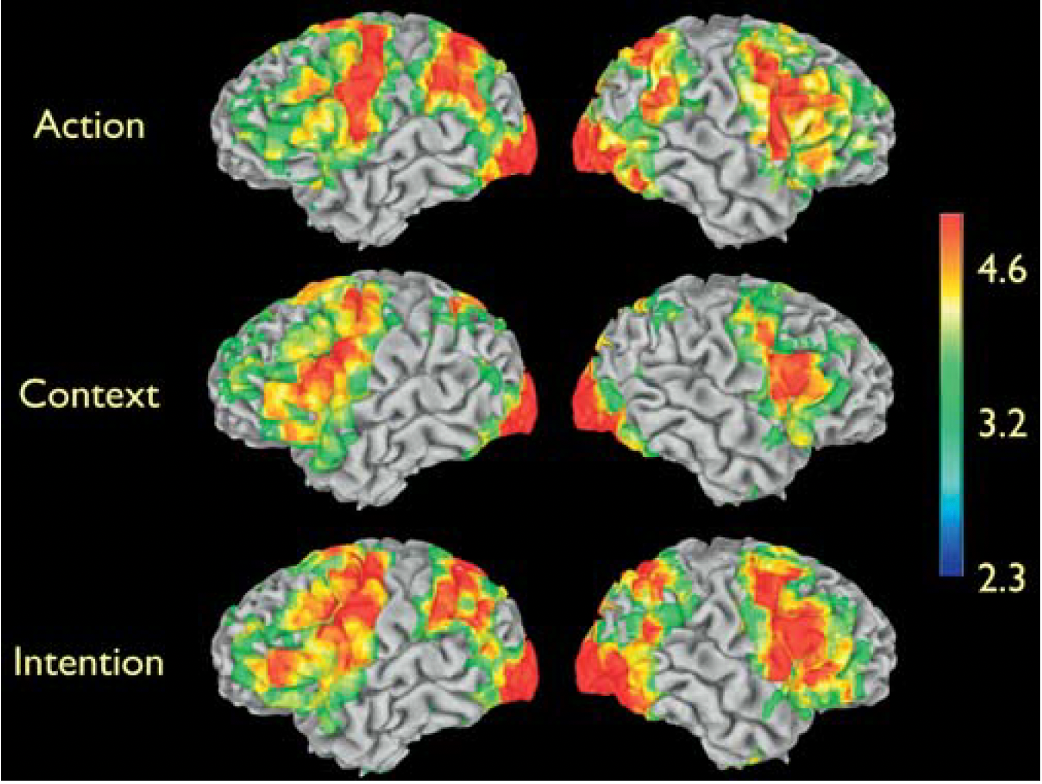

findings

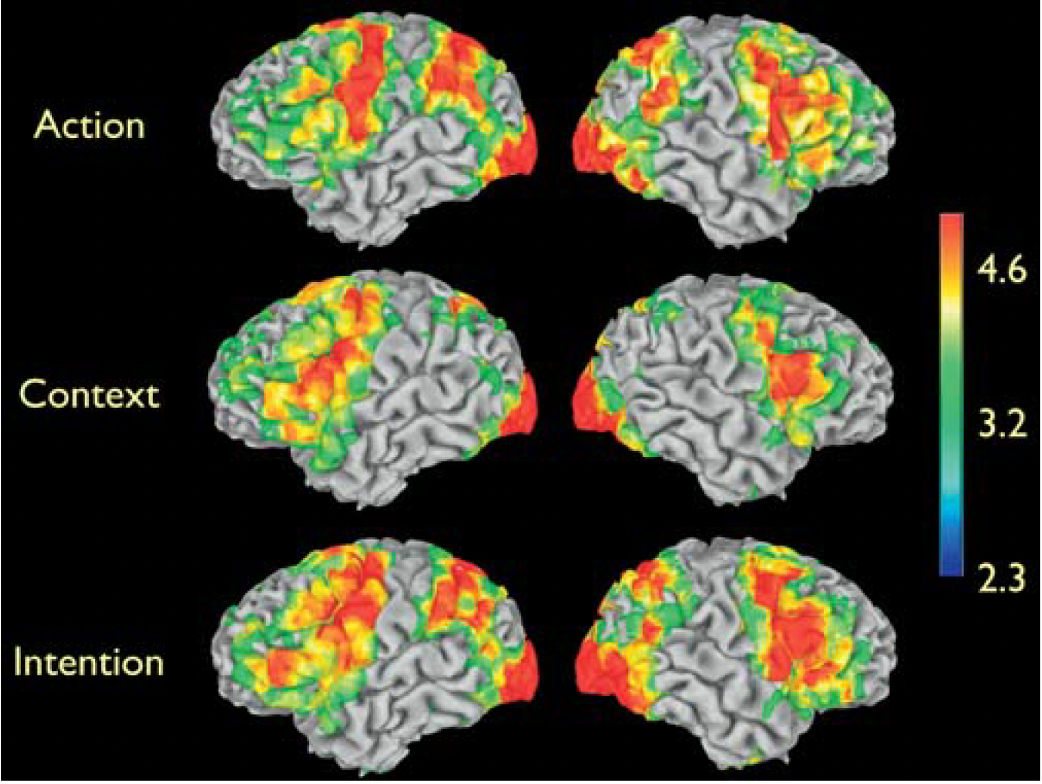

the figure displays the brain areas showing significant signal increase, indexing increased neural activity, for Action, Context, and Intention, compared to rest

large increases in neural activity were observed in occipital, posterior temporal, parietal, and frontal areas (especially robust in the premotor cortex) for observation of the Action and Intention conditions.

observation of the Intention and Action clips compared to rest

the observation of the Intention and of the Action clips compared to rest yielded significant signal increase in the parieto-frontal cortical circuit for grasping

this circuit is known to be active during the observation, imitation, and execution of finger movements (‘‘mirror neuron system’’)

the observation of the Context clip compared to rest yielded signal increases in largely similar cortical areas, with the notable exceptions of the superior temporal sulcus (STS) region and inferior parietal lobule

the STS region is known to respond to biological motion, and the absence of the grasping action in the Context condition explains the lack of increased signal in the STS

the lack of increased signal in the inferior parietal lobule is also explained by the absence of an action in the Context condition

context does not activate mirror neurons like action and intention do

critical question for the study

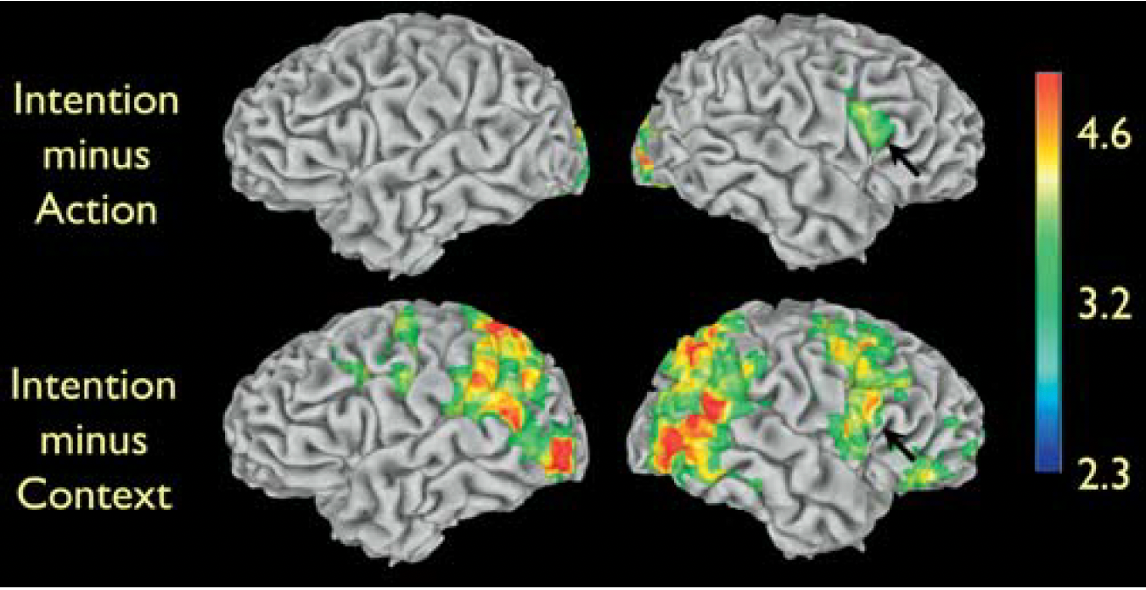

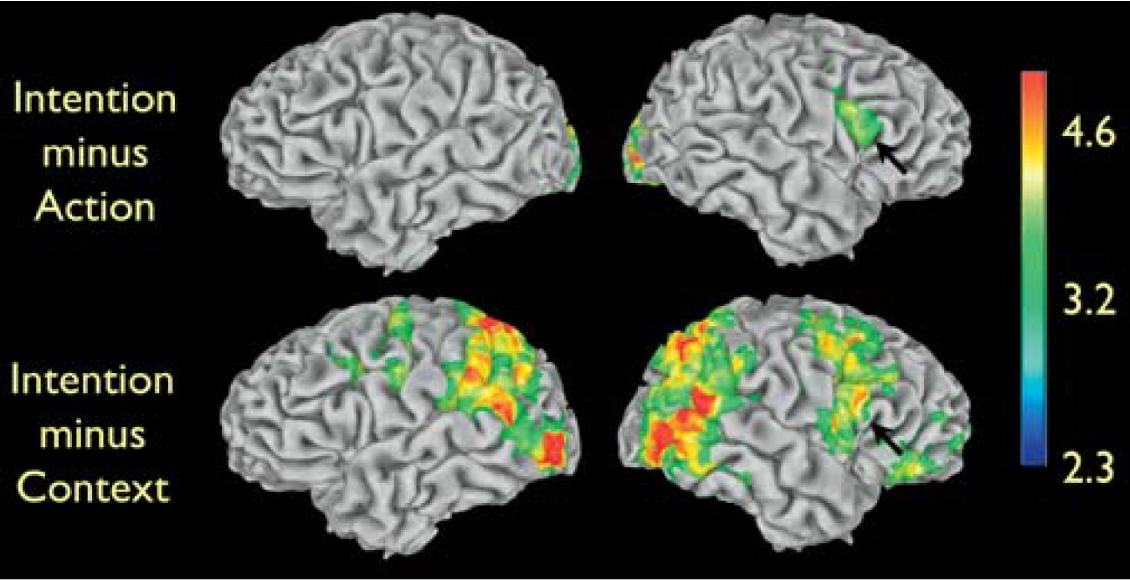

the critical question for this study was whether there are significant differences between the Intention condition and the Action and Context conditions in areas known to have mirror properties in the human brain - the Figure displays these differences - to see if there is a difference in mirror neuron activation

the Intention condition yielded significant signal increases compared to the Action condition in visual areas and in the right inferior frontal cortex, in the dorsal part of the pars opercularis of the inferior frontal gyrus (Figure, upper row)

the increased activity in visual areas is expected, given the presence of objects in the Intention condition, but not in the Action condition. The increased right inferior frontal activity is located in a frontal area known to have mirror neuron properties, thus suggesting that this cortical area does not simply provide an action recognition mechanism (‘‘that’s a grasp’’) but rather it is critical for understanding the intentions behind others’ actions

further testing the functional properties of the signal increase in inferior frontal cortex - intention minus context

to further test the functional properties of the signal increase in inferior frontal cortex, the authors looked at signal changes in the Intention condition minus the Context condition (Figure, lower row)

these signal increases were most likely due to grasping neurons located in the inferior parietal lobule and to neurons responding to biological motion in the posterior part of the STS region

most importantly, signal increase was also found in right frontal areas, including the same voxels—as confirmed by masking procedures—in inferior frontal cortex previously seen activated in the comparison of the Intention condition versus Action condition

thus, the differential activation in inferior frontal cortex observed in the Intention condition versus Action condition, cannot be simply due to the presence of objects in the Intention clips, given that the Context clips also contain objects
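the masking argument can be illustrated with a toy example: take the voxels that are significant in Intention minus Action, take those significant in Intention minus Context, and keep only the voxels present in both. The coordinates below are invented for illustration and are not the reported activation sites; real analyses work on whole statistical maps rather than hand-written sets.

```python
# Hypothetical sets of significant voxel coordinates (x, y, z) per contrast.
intention_minus_action = {(44, 12, 20), (46, 14, 22), (10, -90, 4)}
intention_minus_context = {(44, 12, 20), (46, 14, 22), (50, -40, 10)}

# Masking: keep only voxels significant in both contrasts.
shared = intention_minus_action & intention_minus_context

# Voxels unique to Intention minus Action (e.g. visual areas driven by objects)
# drop out, because the Context clips also contain objects.
only_vs_action = intention_minus_action - intention_minus_context

print(sorted(shared))          # [(44, 12, 20), (46, 14, 22)]
print(sorted(only_vs_action))  # [(10, -90, 4)]
```

a voxel that survives both contrasts cannot be explained by the objects alone (ruled out by Intention minus Context) or by the grasp alone (ruled out by Intention minus Action).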

overall, (if you need to rewatch then go to 1:20 - 27/02/25)

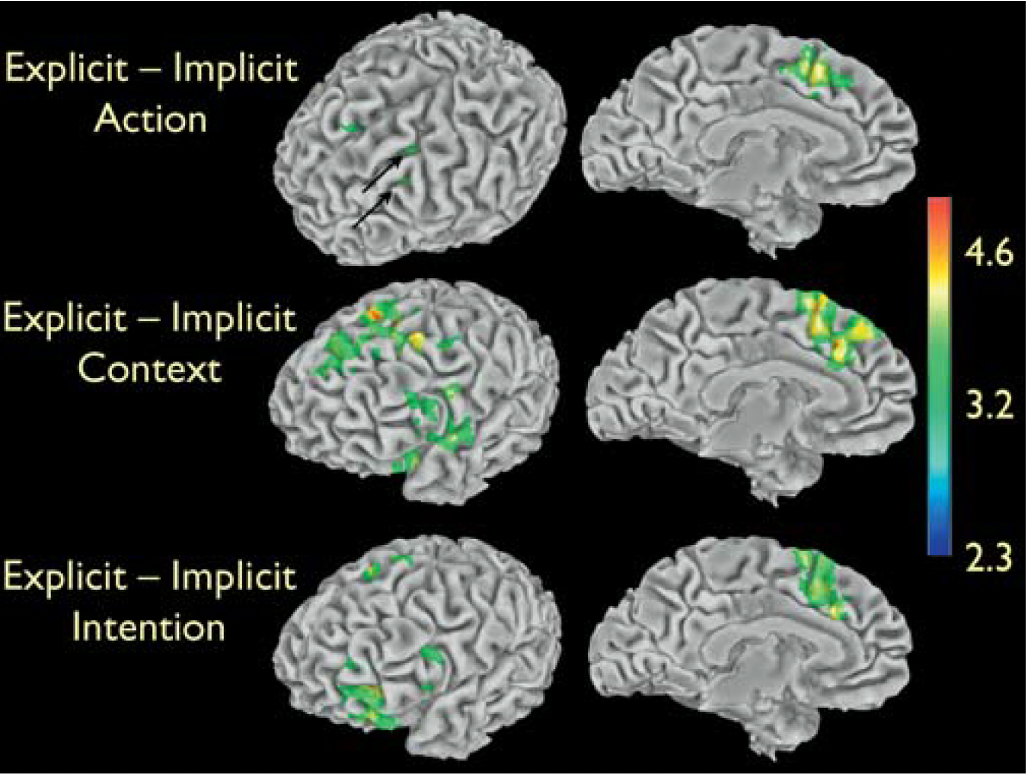

top-down modulation findings

the authors also tested whether a top-down modulation of cognitive strategy may affect the neural systems critical to intention understanding. The 23 volunteers recruited for the experiment received two different kinds of instructions. 11 participants were told to simply watch the movie clips (Implicit task). 12 participants were told to attend to the displayed objects while watching the Context clips and to attend to the type of grip while watching the Action clips.

these participants were also told to infer the intention of the grasping action according to the context in which the action occurred in the Intention clips (Explicit task). After the imaging experiment, participants were debriefed. All participants had clearly attended to the stimuli and could answer appropriately to questions regarding the movie clips. In particular, all participants associated the intention of drinking to the grasping action in the ‘‘during tea’’ Intention clip, and the intention of cleaning up to the grasping action in the ‘‘after tea’’ Intention clip, regardless of the type of instruction received

the two groups of participants that received the two types of instructions had similar patterns of increased signal versus rest for Action, Context, and Intention. The effect of task instructions is displayed in the Figure. In all conditions, participants that received the Explicit instructions had signal increases in the left frontal lobe, and, in particular, in the mesial frontal and cingulate areas. This signal increase is likely due to the greater effort required by the Explicit instructions, rather than to understanding the intentions behind the observed actions. In fact, participants receiving either type of instructions understood the intentions associated with the grasping action equally well

critically, the right inferior frontal cortex—the grasping mirror neuron area that showed increased signal for Intention compared to Action and Context—showed no differences between participants receiving Explicit instructions and those receiving Implicit instructions. This suggests that top-down influences are unlikely to modulate the activity of mirror neuron areas. This lack of top-down influences is a feature typical of automatic processing.

Iacoboni et al.’s (2005) discussion

the findings of the present study showing increased activity of the right inferior frontal cortex for the Intention condition strongly suggest that this mirror neuron area actively participates in understanding the intentions behind the observed actions

if this area were only involved in action understanding (the ‘‘what’’ of an action), a similar response should have been observed in the inferior frontal cortex while observing grasping actions, regardless of whether a context surrounding the observed grasping action was present or not.

the present data show that the intentions behind the actions of others can be recognised by the motor system using a mirror mechanism. Mirror neurons are thought to recognise the actions of others, by matching the observed action onto its motor counterpart coded by the same neurons. The present findings strongly suggest that coding the intention associated with the actions of others is based on the activation of a neuronal chain formed by mirror neurons coding the observed motor act and by ‘‘logically related’’ mirror neurons coding the motor acts that are most likely to follow the observed one, in a given context. To ascribe an intention is to infer a forthcoming new goal, and this is an operation that the motor system does automatically.