karl henke, Linear Algebra, Midterm definitions

61 Terms

Linear Equation in n Variables

(Ch. 1) An equation of the form “a1x1 + a2x2 + a3x3 + … + anxn = b” in the n variables “x1, x2, x3, …, xn,” where:

The coefficients a1, a2, a3, …, an are real numbers.

The constant term b is a real number.

The number a1 is the leading coefficient.

The variable x1 is the leading variable.

Solution Set

(Ch. 1) The set of all solutions of a linear equation.

System of m Linear Equations

(Ch. 1) A set of m equations (for n variables) where each equation is linear in the same n variables.

This is also referred to as a linear system.

Solution of a Linear System

(Ch. 1) A sequence of n real numbers s1, s2, s3, …, sn that satisfy all m equations when you substitute the values “x1 = s1, x2 = s2, x3 = s3, …, xn = sn.”

Consistent Linear System

(Ch. 1) A system of linear equations that contains at least one solution.

Inconsistent Linear System

(Ch. 1) A system of linear equations that has no solutions.

Theorem (Number of Solutions of a System of Linear Equations)

(Ch. 1) For a system of linear equations, precisely one of the statements below is true.

The system has exactly one solution (consistent system).

The system has infinitely many solutions (consistent system).

The system has no solution (inconsistent system).

Row-Echelon Form

(Ch. 1) A linear system that follows a stair-step pattern and has leading coefficients of 1.

Operations that Produce Equivalent Systems

(Ch. 1) There are three possible operations:

Interchanging two equations.

Multiplying an equation by a nonzero constant.

Adding a multiple of an equation to another equation.

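When these operations are applied to the rows of a matrix, they are called elementary row operations. Using them to reduce a system to row-echelon form is called Gaussian elimination.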
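The three operations, and their use to reach row-echelon form, can be sketched in Python as below. This is a minimal illustration, assuming a matrix is stored as a list of rows and using `fractions.Fraction` for exact arithmetic; the function names (`swap_rows`, `scale_row`, `add_multiple`, `row_echelon`) are hypothetical helpers, not from the course text, and the example system is chosen only for illustration.

```python
from fractions import Fraction


def swap_rows(M, i, j):
    """Operation 1: interchange rows i and j (in place)."""
    M[i], M[j] = M[j], M[i]


def scale_row(M, i, c):
    """Operation 2: multiply row i by a nonzero constant c (in place)."""
    if c == 0:
        raise ValueError("the constant must be nonzero")
    M[i] = [c * x for x in M[i]]


def add_multiple(M, src, dst, c):
    """Operation 3: add c times row src to row dst (in place)."""
    M[dst] = [d + c * s for d, s in zip(M[dst], M[src])]


def row_echelon(rows):
    """Reduce a copy of the matrix to row-echelon form using only the three operations."""
    M = [[Fraction(x) for x in row] for row in rows]
    m, n = len(M), len(M[0])
    pivot_row = 0
    for col in range(n):
        if pivot_row == m:
            break
        # Look for a nonzero entry in this column at or below pivot_row.
        pivot = next((r for r in range(pivot_row, m) if M[r][col] != 0), None)
        if pivot is None:
            continue
        swap_rows(M, pivot_row, pivot)
        scale_row(M, pivot_row, 1 / M[pivot_row][col])  # create a leading 1
        for r in range(pivot_row + 1, m):
            add_multiple(M, pivot_row, r, -M[r][col])  # zero out entries below it
        pivot_row += 1
    return M


# Augmented matrix of x - 2y + 3z = 9, -x + 3y = -4, 2x - 5y + 5z = 17
augmented = [[1, -2, 3, 9], [-1, 3, 0, -4], [2, -5, 5, 17]]
for row in row_echelon(augmented):
    print([str(x) for x in row])
```

The result has rows (1, -2, 3, 9), (0, 1, 3, 5), (0, 0, 1, 2); back-substitution then gives z = 2, y = -1, x = 1.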

Matrix

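(Ch. 1) If m and n are natural numbers, then an m x n (read “m by n”) matrix is a rectangular array

$$
\begin{bmatrix}
a_{11} & a_{12} & a_{13} & \cdots & a_{1n} \\
a_{21} & a_{22} & a_{23} & \cdots & a_{2n} \\
\vdots & \vdots & \vdots & \ddots & \vdots \\
a_{m1} & a_{m2} & a_{m3} & \cdots & a_{mn}
\end{bmatrix}
$$

in which each entry, aij, of the matrix is a number. An m x n matrix has m rows (horizontal lines) and n columns (vertical lines).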

Further, the index i is called the row subscript, and the index j is called the column subscript.

Augmented Matrix

(Ch. 1) The matrix derived from the coefficients and constant terms of a system of linear equations.

Coefficient Matrix

(Ch. 1) The matrix derived only from the coefficients of the system.

Row-Echelon Form Properties for a Matrix

(Ch. 1) A matrix in row-echelon form has the three properties below:

Any rows consisting entirely of zeros occur at the bottom of the matrix.

For each row that does not consist entirely of zeros, the first nonzero entry is 1 (called a leading 1).

For two successive (nonzero) rows, the leading 1 in the higher row is farther to the left than the leading 1 in the lower row.
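The three properties can be checked mechanically, one row at a time. A minimal Python sketch, assuming the matrix is a list of rows; `is_row_echelon` is a hypothetical helper name.

```python
def is_row_echelon(M):
    """Check the three row-echelon properties for a matrix given as a list of rows."""
    last_lead = -1          # column of the previous row's leading 1
    seen_zero_row = False
    for row in M:
        nonzero = [j for j, x in enumerate(row) if x != 0]
        if not nonzero:
            seen_zero_row = True   # property 1: zero rows must sit at the bottom
            continue
        if seen_zero_row:
            return False           # a nonzero row appears below a zero row
        lead = nonzero[0]
        if row[lead] != 1:
            return False           # property 2: first nonzero entry must be 1
        if lead <= last_lead:
            return False           # property 3: each leading 1 lies farther right
        last_lead = lead
    return True


print(is_row_echelon([[1, 2, -1, 4], [0, 1, 0, 3], [0, 0, 1, -2]]))   # True
print(is_row_echelon([[1, 2, -1, 2], [0, 0, 0, 0], [0, 1, 2, -4]]))   # False
```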

Homogeneous Systems

(Ch. 1) Systems of linear equations in which each of the constant terms is zero.

Such a system always has at least one solution: setting every variable equal to zero satisfies every equation (the trivial solution).

Theorem (The Number of Solutions of a Homogeneous System)

(Ch. 1) Every homogeneous system of linear equations is consistent. Moreover, if the system has fewer equations than variables, then it must have infinitely many solutions.

Equality of Matrices

(Ch. 2) Two matrices A = [aij] and B = [bij] are equal when they have the same size (m x n) and:

aij = bij for each 1 <= i <= m, and each 1 <= j <= n.

Matrix Addition

(Ch. 2) If A = [aij] and B = [bij] are matrices of size m x n, then their sum is defined to be the m x n matrix “A + B = [aij + bij].”

The sum of two matrices of different sizes is undefined.

Matrix Scalar Multiplication

(Ch. 2) If A = [aij] is an m x n matrix and c is a scalar, then the scalar multiple of A by c is the m x n matrix “cA = [c * aij].”

Matrix Multiplication

(Ch. 2) If A = [aij] is an m x n matrix and B = [bij] is an n x p matrix, then the product AB is an m x p matrix “A * B = [cij], where cij = ai1b1j + ai2b2j + ai3b3j + … + ainbnj.”
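The entry formula cij = ai1b1j + … + ainbnj translates directly into a nested sum. A minimal Python sketch, assuming matrices are lists of rows; `mat_mul` is a hypothetical helper name.

```python
def mat_mul(A, B):
    """Product AB of an m x n matrix A and an n x p matrix B (lists of rows)."""
    m, n, p = len(A), len(A[0]), len(B[0])
    if len(B) != n:
        raise ValueError(f"AB is undefined: A has {n} columns but B has {len(B)} rows")
    # c_ij = a_i1*b_1j + a_i2*b_2j + ... + a_in*b_nj
    return [[sum(A[i][k] * B[k][j] for k in range(n)) for j in range(p)]
            for i in range(m)]


A = [[-1, 3], [4, -2], [5, 0]]   # 3 x 2
B = [[-3, 2], [-4, 1]]           # 2 x 2
print(mat_mul(A, B))             # [[-9, 1], [-4, 6], [-15, 10]]
```

Calling `mat_mul(B, A)` raises `ValueError`, because B has 2 columns while A has 3 rows, so BA is undefined.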

Theorem (Properties of Addition and Scalar Multiplication)

(Ch. 2) If A, B, and C are m x n matrices, and c and d are scalars, then the properties below are true:

A + B = B + A (Commutative Property of Addition).

A + (B + C) = (A + B) + C (Associative Property of Addition).

(cd)A = c(dA) (Associative Property of Multiplication).

1A = A (Multiplicative Identity, where 1 is a Scalar).

c(A + B) = cA + cB (Distributive Property).

(c + d)A = cA + dA (Distributive Property).

Theorem (Properties of Zero Matrices)

(Ch. 2) If A is an m x n matrix, c is a scalar, and Omn is the m x n zero matrix, then the properties below are true:

A + Omn = A = Omn + A.

A + (-A) = Omn.

If cA = Omn, then c = 0 or A = Omn.

Theorem (Properties of Matrix Multiplication)

(Ch. 2) If A, B, and C are matrices (with sizes such that the matrix products are defined), and c is a scalar, then the properties below are true:

A * (B * C) = (A * B) * C (Associative Property).

A * (B + C) = A * B + A * C (Left Distributive Property).

(A + B) * C = A * C + B * C (Right Distributive Property).

c(A * B) = (cA) * B = A * (cB).

Theorem (Properties of the Identity Matrix)

(Ch. 2) If A is a matrix of size m x n, and In and Im are the identity matrices of orders n and m, then the properties below are true:

A * In = A.

Im * A = A.

Transpose

(Ch. 2) The transpose of a matrix A, denoted by $A^T$, is the matrix formed by writing the rows of A as columns.

Theorem (Properties of Transposes)

(Ch. 2) If A and B are matrices (with sizes such that the matrix operations are defined) and c is a scalar, then the properties below are true:

$(A^T)^T = A$ (Transpose of a Transpose).

$(A + B)^T = A^T + B^T$ (Transpose of a Sum).

$(cA)^T = c(A^T)$ (Transpose of a Scalar Multiple).

$(AB)^T = B^T A^T$ (Transpose of a Product).
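The transpose-of-a-product rule, including its reversed order, can be checked numerically. A minimal Python sketch, assuming matrices are lists of rows; `transpose` and `mat_mul` are hypothetical helpers and the matrices are arbitrary examples.

```python
def transpose(A):
    """Write the rows of A as columns."""
    return [list(column) for column in zip(*A)]


def mat_mul(A, B):
    """Product of matrices given as lists of rows."""
    return [[sum(A[i][k] * B[k][j] for k in range(len(B))) for j in range(len(B[0]))]
            for i in range(len(A))]


A = [[1, 2, 3], [4, 5, 6]]       # 2 x 3
B = [[1, 0], [0, 1], [2, -1]]    # 3 x 2
print(transpose(mat_mul(A, B)))              # [[7, 16], [-1, -1]]
print(mat_mul(transpose(B), transpose(A)))   # [[7, 16], [-1, -1]]
```

The order matters: here $A^T B^T$ is a 3 x 3 matrix, so it cannot equal the 2 x 2 matrix $(AB)^T$.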

Inverse of a Matrix

(Ch. 2) An n x n matrix A is invertible when there exists an n x n matrix B such that “A * B = B * A = In,” where In is the identity matrix of order n.

The matrix B is the inverse of A. A matrix that does not have an inverse is noninvertible.
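The definition suggests a direct check: multiply in both orders and compare with the identity. A minimal Python sketch, assuming matrices are lists of rows; `mat_mul`, `identity`, and `is_inverse` are hypothetical helpers and the example matrices are chosen for illustration.

```python
def mat_mul(A, B):
    """Product of two matrices given as lists of rows."""
    return [[sum(A[i][k] * B[k][j] for k in range(len(B))) for j in range(len(B[0]))]
            for i in range(len(A))]


def identity(n):
    """The identity matrix I_n."""
    return [[1 if i == j else 0 for j in range(n)] for i in range(n)]


def is_inverse(A, B):
    """True when A * B = B * A = I_n for n x n matrices A and B."""
    I = identity(len(A))
    return mat_mul(A, B) == I and mat_mul(B, A) == I


A = [[1, 4], [-1, -3]]
B = [[-3, -4], [1, 1]]
print(is_inverse(A, B))  # True
```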

Theorem (Uniqueness of an Inverse Matrix)

(Ch. 2) If A is an invertible matrix, then its inverse is unique. The inverse of A is denoted as $A^{-1}$.

Theorem (Properties of Inverse Matrices)

(Ch. 2) If A is an invertible matrix, k is a positive integer, and c is a scalar not equal to 0, then $A^{-1}$, $A^k$, $cA$, and $A^T$ are invertible and the statements below are true:

$(A^{-1})^{-1} = A$.

$(A^k)^{-1} = (A^{-1})^k$.

$(cA)^{-1} = \frac{1}{c}A^{-1}$.

$(A^T)^{-1} = (A^{-1})^T$.

Theorem (The Inverse of a Product)

(Ch. 2) If A and B are invertible matrices of order n, then A * B is invertible and:

$(AB)^{-1} = B^{-1}A^{-1}$.

Theorem (Cancellation Properties)

(Ch. 2) If C is an invertible matrix, then the properties below are true:

If A * C = B * C, then A = B (Right Cancellation Property).

If C * A = C * B, then A = B (Left Cancellation Property).

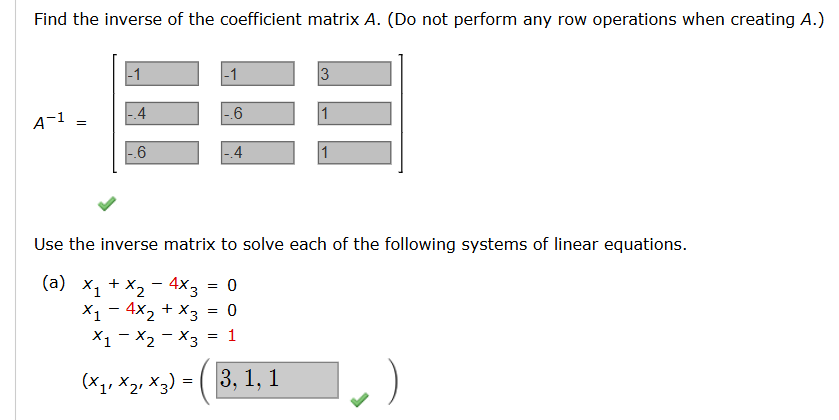

Theorem (Systems of Equations with Unique Solutions)

(Ch. 2) If A is an invertible matrix, then the system of linear equations Ax = b has the unique solution $x = A^{-1}b$.

Theorem (Representing Elementary Row Operations)

(Ch. 2) Let E be the elementary matrix obtained by performing an elementary row operation on Im. If that same elementary row operation is performed on an m x n matrix A, then the resulting matrix is the product E * A.
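The theorem can be checked on a small case: apply a row operation to Im to get E, then compare E * A with the result of applying the same operation to A directly. A minimal Python sketch, assuming matrices are lists of rows; all helper names are hypothetical.

```python
def identity(m):
    """The identity matrix I_m."""
    return [[1 if i == j else 0 for j in range(m)] for i in range(m)]


def mat_mul(A, B):
    """Product of two matrices given as lists of rows."""
    return [[sum(A[i][k] * B[k][j] for k in range(len(B))) for j in range(len(B[0]))]
            for i in range(len(A))]


def swap_rows(M, i, j):
    """Copy of M with rows i and j interchanged."""
    M = [row[:] for row in M]
    M[i], M[j] = M[j], M[i]
    return M


def add_multiple(M, src, dst, c):
    """Copy of M with c times row src added to row dst."""
    M = [row[:] for row in M]
    M[dst] = [d + c * s for d, s in zip(M[dst], M[src])]
    return M


A = [[1, 2, 3], [4, 5, 6]]                    # 2 x 3, so m = 2
E = add_multiple(identity(2), 0, 1, -4)       # R2 <- R2 - 4*R1 applied to I_2
print(E)                                      # [[1, 0], [-4, 1]]
print(mat_mul(E, A) == add_multiple(A, 0, 1, -4))  # True
```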

Row Equivalence

(Ch. 2) Let A and B be m x n matrices. Matrix B is row-equivalent to A when there exist finitely many elementary matrices $E_1, E_2, \ldots, E_k$ such that $B = E_k E_{k-1} \cdots E_2 E_1 A$.

Theorem (Elementary Matrices are Invertible)

(Ch. 2) If E is an elementary matrix, then $E^{-1}$ exists and is an elementary matrix.

Theorem (A Property of Invertible Matrices)

(Ch. 2) A square matrix A is invertible if and only if it can be written as the product of elementary matrices.

LU-Factorization

(Ch. 2) If the n x n matrix A can be written as the product of a lower triangular matrix L and an upper triangular matrix U, then A = L * U is an LU-factorization of A.
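One common way to find L and U is to eliminate below each pivot using only "add a multiple of one row to a lower row" and record each multiplier in L. The sketch below assumes that approach (no row interchanges, L with 1s on its diagonal), so it fails when a zero pivot has nonzero entries below it. Matrices are lists of rows, `lu` is a hypothetical helper, and the example matrix is chosen for illustration.

```python
from fractions import Fraction


def lu(A):
    """Return (L, U) with A = L * U, L unit lower triangular, U upper triangular.

    Uses only 'add a multiple of one row to a lower row' steps (no interchanges).
    """
    n = len(A)
    U = [[Fraction(x) for x in row] for row in A]
    L = [[Fraction(1 if i == j else 0) for j in range(n)] for i in range(n)]
    for col in range(n):
        below = range(col + 1, n)
        if U[col][col] == 0:
            if any(U[r][col] != 0 for r in below):
                raise ValueError("zero pivot: a row interchange would be needed")
            continue  # nothing to eliminate in this column
        for r in below:
            factor = U[r][col] / U[col][col]
            L[r][col] = factor               # record the multiplier in L
            U[r] = [a - factor * p for a, p in zip(U[r], U[col])]
    return L, U


A = [[1, -3, 0], [0, 1, 3], [2, -10, 2]]
L, U = lu(A)
print([[int(x) for x in row] for row in L])  # [[1, 0, 0], [0, 1, 0], [2, -4, 1]]
print([[int(x) for x in row] for row in U])  # [[1, -3, 0], [0, 1, 3], [0, 0, 14]]
```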

Determinant of a 2 × 2 Matrix

(Ch. 3) The determinant of a 2 × 2 matrix is “det(A) = |A| = a11a22 - a21a12.”

Cofactor of a Square Matrix

(Ch. 3) If A is a square matrix, then the minor $M_{ij}$ of the element $a_{ij}$ is the determinant of the matrix obtained by deleting the i-th row and j-th column of A. The cofactor of the entry $a_{ij}$ is given by $C_{ij} = (-1)^{i+j} M_{ij}$.

Determinant of a Square Matrix

(Ch. 3) If A is a square matrix of order n >= 2, then the determinant of A is the sum of the entries in the first row of A multiplied by their respective cofactors. That is “det(A) = |A| = a11C11 + a12C12 + … + a1nC1n.”
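The first-row definition is naturally recursive: each cofactor is a signed determinant of a smaller matrix, with the 2 x 2 formula as the base case. A minimal Python sketch, assuming matrices are lists of rows and 0-based indices; `minor` and `det` are hypothetical helper names. Its running time grows factorially, so it only suits hand-sized matrices.

```python
def minor(A, i, j):
    """Matrix left after deleting row i and column j of A (0-based indices)."""
    return [row[:j] + row[j + 1:] for k, row in enumerate(A) if k != i]


def det(A):
    """Determinant of a square matrix by cofactor expansion along the first row."""
    n = len(A)
    if n == 1:
        return A[0][0]
    if n == 2:
        # Base case: a11*a22 - a21*a12
        return A[0][0] * A[1][1] - A[1][0] * A[0][1]
    # a11*C11 + a12*C12 + ... + a1n*C1n, where C1j = (-1)^(1+j) * M1j.
    # With 0-based column j the sign (-1)^(1+(j+1)) simplifies to (-1)^j.
    return sum((-1) ** j * A[0][j] * det(minor(A, 0, j)) for j in range(n))


print(det([[1, 2], [3, 4]]))                    # -2
print(det([[0, 2, 1], [3, -1, 2], [4, 0, 1]]))  # 14
```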

Theorem (Expansion by Cofactors)

(Ch. 3) Let A be a square matrix of order n. Then the determinant of A is “det(A) = |A| = ai1Ci1 + ai2Ci2 + … + ainCin” (i-th row expansion) or “det(A) = |A| = a1jC1j + a2jC2j + … + anjCnj” (j-th column expansion).

Theorem (Determinant of a Triangular Matrix)

(Ch. 3) If A is a triangular matrix of order n, then its determinant is the product of the entries on the main diagonal. That is, “det(A) = a11a22 … ann.”

Theorem (Elementary Row Operations and Determinants)

(Ch. 3) Let A and B be square matrices.

When B is obtained from A by interchanging two rows of A, det(B) = -det(A).

When B is obtained from A by adding a multiple of a row of A to another row of A, det(B) = det(A).

When B is obtained from A by multiplying a row of A by a nonzero constant c, det(B) = c det(A).
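The three rules can be confirmed on a sample matrix by comparing determinants before and after each operation. A minimal Python sketch, assuming matrices are lists of rows; `det` is a hypothetical helper using first-row cofactor expansion, and the matrix is an arbitrary example.

```python
def det(A):
    """Determinant by first-row cofactor expansion (A is a list of rows)."""
    if len(A) == 1:
        return A[0][0]
    return sum((-1) ** j * A[0][j] * det([row[:j] + row[j + 1:] for row in A[1:]])
               for j in range(len(A)))


A = [[2, -3, 10], [1, 2, -2], [0, 1, -3]]
swapped = [A[1], A[0], A[2]]                                   # interchange rows 1 and 2
added = [A[0], [a + 2 * b for a, b in zip(A[1], A[0])], A[2]]  # R2 <- R2 + 2*R1
scaled = [[5 * x for x in A[0]], A[1], A[2]]                   # R1 <- 5*R1

print(det(A))                       # -7
assert det(swapped) == -det(A)      # interchange flips the sign
assert det(added) == det(A)         # adding a multiple leaves it unchanged
assert det(scaled) == 5 * det(A)    # scaling a row scales the determinant
```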

Theorem (Conditions that Yield a Zero Determinant)

(Ch. 3) If A is a square matrix and any one of the conditions below is true, then det(A) = 0:

An entire row (or an entire column) consists of zeros.

Two rows (or columns) are equal.

One row (or column) is a multiple of another row (or column).

Theorem (Determinant of a Matrix Product)

(Ch. 3) If A and B are square matrices of order n, then “det(A * B) = det(A)det(B).”

Theorem (Determinant of a Scalar Multiple of a Matrix)

(Ch. 3) If A is a square matrix of order n and c is a scalar, then the determinant of cA is $\det(cA) = c^n \det(A)$.
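The product rule and the scalar-multiple rule can be checked together on 3 x 3 examples. Note that every one of the n rows is scaled by c, which is where the factor $c^n$ comes from. A minimal Python sketch, assuming matrices are lists of rows; `det` and `mat_mul` are hypothetical helpers and the matrices are arbitrary examples.

```python
def det(A):
    """Determinant by first-row cofactor expansion (A is a list of rows)."""
    if len(A) == 1:
        return A[0][0]
    return sum((-1) ** j * A[0][j] * det([row[:j] + row[j + 1:] for row in A[1:]])
               for j in range(len(A)))


def mat_mul(A, B):
    """Product of two matrices given as lists of rows."""
    return [[sum(A[i][k] * B[k][j] for k in range(len(B))) for j in range(len(B[0]))]
            for i in range(len(A))]


A = [[1, -2, 2], [0, 3, 2], [1, 0, 1]]
B = [[2, 0, 1], [0, -1, -2], [3, 1, -2]]
c, n = 3, 3

assert det(mat_mul(A, B)) == det(A) * det(B)                  # det(AB) = det(A)det(B)
assert det([[c * x for x in row] for row in A]) == c ** n * det(A)  # det(cA) = c^n det(A)
print(det(A), det(B), det(mat_mul(A, B)))                     # -7 11 -77
```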

Theorem (Determinant of an Invertible Matrix)

(Ch. 3) A square matrix A is invertible (nonsingular) if and only if det(A) does not equal 0.

Theorem (Determinant of an Inverse Matrix)

(Ch. 3) If A is an invertible n x n matrix, then $\det(A^{-1}) = \frac{1}{\det(A)}$.

Equivalent Conditions for a Nonsingular Matrix

(Ch. 3) If A is an n x n matrix, then the statements below are equivalent:

A is invertible.

Ax = b has a unique solution for every n x 1 matrix b.

Ax = 0 has only the trivial solution.

A is row-equivalent to In.

A can be written as the product of elementary matrices.

det(A) is not equal to 0.

Theorem (Determinant of a Transpose)

(Ch. 3) If A is a square matrix, then $\det(A) = \det(A^T)$.

Theorem (The Inverse of a Matrix using its Adjoint)

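(Ch. 3) If A is an n x n invertible matrix, then $A^{-1} = \frac{1}{\det(A)}\operatorname{adj}(A)$, where adj(A), the adjoint of A, is the transpose of the matrix of cofactors of A.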
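The adjoint formula can be sketched by building the cofactor matrix, transposing it, and dividing by det(A). A minimal Python illustration, assuming matrices are lists of rows of integers and using `fractions.Fraction` so the inverse is exact; `minor`, `det`, `adjoint`, and `inverse` are hypothetical helper names and the matrix is an illustrative example.

```python
from fractions import Fraction


def minor(A, i, j):
    """Delete row i and column j of A (0-based)."""
    return [row[:j] + row[j + 1:] for k, row in enumerate(A) if k != i]


def det(A):
    """Determinant by first-row cofactor expansion."""
    if len(A) == 1:
        return A[0][0]
    return sum((-1) ** j * A[0][j] * det(minor(A, 0, j)) for j in range(len(A)))


def adjoint(A):
    """adj(A): the transpose of the matrix of cofactors C_ij = (-1)^(i+j) M_ij."""
    n = len(A)
    if n < 2:
        raise ValueError("this sketch expects order n >= 2")
    cofactors = [[(-1) ** (i + j) * det(minor(A, i, j)) for j in range(n)]
                 for i in range(n)]
    return [list(col) for col in zip(*cofactors)]


def inverse(A):
    """A^{-1} = (1 / det(A)) * adj(A), with exact rational entries."""
    d = det(A)
    if d == 0:
        raise ValueError("det(A) = 0, so A is not invertible")
    return [[Fraction(x, d) for x in row] for row in adjoint(A)]


A = [[-1, 3, 2], [0, -2, 1], [1, 0, -2]]
print(det(A))       # 3
print(adjoint(A))   # [[4, 6, 7], [1, 0, 1], [2, 3, 2]]
```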

Theorem (Cramer’s Rule)

(Ch. 3) If a system of linear equations in n variables has a coefficient matrix A with a nonzero determinant, then the solution of the system is $x_1 = \frac{\det(A_1)}{\det(A)}, x_2 = \frac{\det(A_2)}{\det(A)}, \ldots, x_n = \frac{\det(A_n)}{\det(A)}$, where $A_i$ is the matrix obtained by replacing the i-th column of A with the column of constants in the system of equations.
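Cramer's Rule is a short loop: for each variable, replace one column of A with the constants and divide the two determinants. A minimal Python sketch, assuming matrices are lists of rows of integers and using `fractions.Fraction` for exact quotients; `det` and `cramer` are hypothetical helpers and the system is an illustrative example.

```python
from fractions import Fraction


def det(A):
    """Determinant by first-row cofactor expansion."""
    if len(A) == 1:
        return A[0][0]
    return sum((-1) ** j * A[0][j] * det([row[:j] + row[j + 1:] for row in A[1:]])
               for j in range(len(A)))


def cramer(A, b):
    """Solve Ax = b by Cramer's Rule; requires det(A) != 0."""
    d = det(A)
    if d == 0:
        raise ValueError("det(A) = 0, so Cramer's Rule does not apply")
    solution = []
    for i in range(len(A)):
        # A_i: replace column i of A with the column of constants b.
        A_i = [row[:i] + [b[r]] + row[i + 1:] for r, row in enumerate(A)]
        solution.append(Fraction(det(A_i), d))
    return solution


# -x + 2y - 3z = 1,  2x + z = 0,  3x - 4y + 4z = 2
A = [[-1, 2, -3], [2, 0, 1], [3, -4, 4]]
b = [1, 0, 2]
print(cramer(A, b))  # [Fraction(4, 5), Fraction(-3, 2), Fraction(-8, 5)]
```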

Properties of Vector Addition and Scalar Multiplication in the Plane

(Ch. 4) Let u, v, and w be vectors in the plane, and let c and d be scalars.

u + v is a vector in the plane (Closure under addition).

u + v = v + u (Commutative property of addition).

(u + v) + w = u + (v + w) (Associative property of addition).

u + 0 = u (Additive identity property).

u + (-u) = 0 (Additive inverse property).

cu is a vector in the plane (Closure under scalar multiplication).

c(u + v) = cu + cv (Distributive property).

(c + d)u = cu + du (Distributive property).

c(du) = (cd)u (Associative property of multiplication).

1(u) = u (Multiplicative identity property).

n-space

(Ch. 4) The set of all ordered n-tuples of real numbers, denoted by $\mathbb{R}^n$.

Theorem (Properties of Additive Identity and Additive Inverse)

(Ch. 4) Let v be a vector in $\mathbb{R}^n$, and let c be a scalar. Then the properties below are true:

The additive identity is unique. That is, if v + u = v, then u = 0.

The additive inverse of v is unique. That is, if v + u = 0, then u = -v.

0v = 0.

c0 = 0.

If cv = 0, then c = 0 or v = 0.

-(-v) = v.

Vector Space

(Ch. 4) Let V be a set on which two operations (vector addition and scalar multiplication) are defined. If the axioms listed below are satisfied for all u, v, and w in V and all scalars c and d, then V is a vector space.

Addition:

u + v is in V (Closure under addition).

u + v = v + u (Commutative property).

u + (v + w) = (u + v) + w (Associative property).

V has a zero vector 0 such that for every u in V, u + 0 = u (Additive identity).

For every u in V, there is a vector in V, denoted -u, such that “u + (-u) = 0” (Additive inverse).

Multiplication:

cu is in V (Closure under scalar multiplication).

c(u + v) = cu + cv (Distributive property).

(c + d)u = cu + du (Distributive property).

c(du) = (cd)u (Associative property).

1(u) = u (Scalar identity).

Theorem (Properties of Scalar Multiples)

(Ch. 4) Let v be any element of a vector space V, and let c be any scalar. Then the properties below are true.

0v = 0.

c0 = 0.

If cv = 0, then c = 0 or v = 0.

(-1)v = -v.

Subspace

(Ch. 4) A nonempty subset W of a vector space V is called a subspace of V if W is a vector space under the operations of addition and scalar multiplication defined in V.

Test for a Subspace

(Ch. 4) If W is a nonempty subset of a vector space V, then W is a subspace of V if and only if the two closure conditions listed below hold:

If u and v are in W, then u + v is in W.

If u is in W and c is any scalar, then cu is in W.

Theorem (The Intersection of Two Subspaces is a Subspace)

(Ch. 4) If V and W are both subspaces of a vector space U, then the intersection of V and W is also a subspace of U.

Spanning Set of a Vector Space

(Ch. 4) Let S = {v1, v2, …, vk} be a subset of a vector space V. The set S is a spanning set of V when every vector in V can be written as a linear combination of vectors in S. In such cases it is said that S spans V.

Span of a Set

(Ch. 4) If S = {v1, v2, …, vk} is a set of vectors in a vector space V, then the span of S is the set of all linear combinations of the vectors in S, “span(S) = {c1v1 + c2v2 + … + ckvk | c1, c2, …, ck are real numbers}.”

The span of S is denoted by “span(S)” or “span{v1, v2, …, vk}.”

When span(S) = V, it is said that V is spanned by {v1, v2, …, vk}, or that S spans V.
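For vectors in Rn, membership in span(S) can be tested by row reduction: w is a linear combination of the vectors in S exactly when appending w as an extra row does not increase the rank. A minimal Python sketch of that rank comparison, assuming vectors are lists of numbers; `rank` and `in_span` are hypothetical helpers and the vectors are illustrative.

```python
from fractions import Fraction


def rank(rows):
    """Number of pivots found by forward elimination (exact arithmetic)."""
    M = [[Fraction(x) for x in row] for row in rows]
    r = 0
    for col in range(len(M[0]) if M else 0):
        pivot = next((k for k in range(r, len(M)) if M[k][col] != 0), None)
        if pivot is None:
            continue
        M[r], M[pivot] = M[pivot], M[r]
        for k in range(r + 1, len(M)):
            factor = M[k][col] / M[r][col]
            M[k] = [a - factor * p for a, p in zip(M[k], M[r])]
        r += 1
    return r


def in_span(S, w):
    """True when w is a linear combination of the vectors in S.

    Adding w to S leaves the rank unchanged exactly when w lies in span(S).
    """
    return rank(S) == rank(S + [w])


S = [[1, 2, 3], [0, 1, 2], [-1, 0, 1]]
print(in_span(S, [1, 3, 5]))  # True: (1, 3, 5) = v1 + v2
print(in_span(S, [0, 0, 1]))  # False
```

Since rank(S) is 2 here, S does not span R3.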