Utility


Test Utility

The usefulness or practical value of a test in improving efficiency, decision-making, or outcomes.

What does test utility measure?

It measures how helpful or valuable a test is in achieving practical goals, such as selecting employees, diagnosing patients, or improving training.

Scope of Testing

“Testing” can refer to a single test or to an entire testing program involving multiple tests or batteries.

Is the concept of utility limited to one test?

No. It applies to both individual tests and large-scale testing programs that use many tests.

Utility Beyond Testing

Utility can also refer to the usefulness or value of a training program or intervention, not just tests.

What is an example of utility in a context other than testing?

Evaluating whether adding a new component to a corporate training program increases its effectiveness.

What factors determine a test’s utility?

Reliability, validity, and other data that indicate how much the test improves decision-making or performance efficiency.

Relationship Between Utility, Reliability, and Validity

Reliability and validity contribute to a test’s utility, but they are not the only factors. A test must also provide meaningful, cost-effective, and practical benefits.

Can a test be valid and reliable but have low utility?

Yes. If it doesn’t add practical value or improve efficiency in real-world applications, its utility is low despite being psychometrically sound.

Factors Affecting Test Utility

The usefulness of a test is influenced by its psychometric soundness, costs, and benefits.

What are the three main factors that affect a test’s utility?

(1) Psychometric soundness,

(2) Costs,

(3) Benefits.

Psychometric Soundness

Refers to the reliability and validity of a test. A test is psychometrically sound if its reliability and validity coefficients are acceptably high for its intended purpose.

How is utility different from reliability and validity?

Reliability shows how consistently a test measures something.

Validity shows whether it measures what it claims to measure.

Utility shows the practical value of the test results in real-world decisions.

Can a test be valid but not useful?

Yes. A test may be valid but have limited utility if it doesn’t add practical value to decisions (e.g., when everyone is hired regardless of test results).

What happens to validity if reliability is low?

Reliability sets a ceiling on validity; a test cannot be valid if it is not reliable.

Does validity set a ceiling on utility?

Generally yes—valid tests are more likely to have high utility—but this is not always true because utility depends on multiple factors.

Example Card: Valid but Not Useful

A cocaine-detection sweat patch showed 92% agreement with urine tests, yet its utility was limited because participants often tampered with it.

Costs (in Test Utility)

Refers to the disadvantages, losses, or expenses—economic or noneconomic—associated with testing or not testing.

What are examples of economic costs in testing?

Purchasing tests and materials

Paying staff for test administration and scoring

Facility rental or maintenance

Insurance, legal, or licensing fees

What are noneconomic costs in testing?

Harm, injury, or loss of confidence resulting from poor testing or cost-cutting, such as unsafe airline operations or failure to detect child abuse.

Example Card: Cost–Benefit in X-ray Testing

A study found that adding two more X-ray views for suspected child abuse increased fracture detection. Though more expensive, the additional cost was justified by the benefit of protecting children.

What can happen if an organization removes or reduces its testing programs to cut costs?

It can result in long-term financial losses, lower safety, and loss of public trust—outweighing the short-term savings.

Benefits (in Test Utility)

The profits, gains, or advantages—economic or noneconomic—obtained from using a test.

Give examples of economic benefits of testing.

Higher productivity and company profits

Better quality control and less waste

More effective employee selection

Give examples of noneconomic benefits of testing.

Improved worker performance

Faster training

Fewer accidents and lower turnover

Better learning or work environment

How do professionals balance psychometric soundness, costs, and benefits?

They use formulas, tables, and professional judgment, guided by prudence, vision, and common sense.

What is the key principle of cost–benefit analysis in test utility?

A psychometrically sound test is worth paying for if its potential benefits outweigh its costs—or if the costs of not using it are high.

Utility Analysis

A family of cost–benefit techniques used to determine the usefulness or practical value of a test, training program, or intervention.

What is the main goal of a utility analysis?

To determine whether the benefits of using a test or program outweigh its costs.

Family of Techniques

Utility analysis is not one single method but a broad group of techniques that can vary from simple cost comparisons to complex mathematical models.

Why is utility analysis called a “family of techniques”?

Because it includes various approaches, each requiring different kinds of data and producing different outputs.

What are the two general purposes of conducting a utility analysis?

To evaluate tests or assessment tools.

To evaluate training programs or interventions.

What questions can a utility analysis answer about tests?

Which test is preferable for a specific purpose?

Is one tool of assessment (like a test) better than another (like observation)?

Should new tests be added to those already in use?

Is no testing at all better than testing?

What questions can a utility analysis answer about training programs or interventions?

Which program or intervention is better?

Does adding or removing parts improve effectiveness or efficiency?

Is having no program better than running one?

Cost–Benefit Analysis (in Testing)

The process of weighing the financial and practical benefits of a test or program against the costs involved in its implementation.

What is the endpoint of a utility analysis?

An educated decision about which of several possible courses of action is optimal, based on cost and benefit considerations.

What is a simple way to describe what utility analysis asks?

“Which test (or program) gives us more bang for the buck?”

Example of Utility Analysis (Cascio & Ramos, 1986)

A study showing that a particular assessment approach for selecting managers saved a telecommunications company over $13 million in four years, illustrating the financial impact of utility analysis.

What key skills are needed to understand utility analysis?

A good grasp of both written and graphical data, since results are often presented using quantitative models and visual comparisons.

What types of data may utility analyses require?

Statistical data (like validity coefficients), financial data (like cost of tests), and outcome data (like performance improvements).

Outcome of Utility Analysis

A data-based judgment identifying the most cost-effective and beneficial test, training program, or intervention among alternatives.

Expectancy Data

Information showing the likelihood that a test-taker will achieve a certain performance level (e.g., passing, acceptable, failing). Usually derived from a scatterplot converted to an expectancy table.

What is the main purpose of an expectancy table in utility analysis?

To estimate how test scores relate to performance outcomes, helping decision-makers predict success rates for employees.
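A minimal sketch of how an expectancy table could be built from paired test scores and later job outcomes. The function name, score bands, and data are hypothetical and chosen only for illustration.

```python
from collections import defaultdict

def expectancy_table(records, bands):
    """Return {band_label: proportion rated successful} for each score band.

    records: iterable of (test_score, succeeded) pairs; succeeded is a bool.
    bands:   list of (label, low, high) tuples with inclusive bounds.
    """
    counts = defaultdict(lambda: [0, 0])  # label -> [successes, total]
    for score, succeeded in records:
        for label, low, high in bands:
            if low <= score <= high:
                counts[label][0] += int(succeeded)
                counts[label][1] += 1
                break
    # Report only the bands that contain at least one person.
    return {label: counts[label][0] / counts[label][1]
            for label, _, _ in bands if counts[label][1] > 0}

# Hypothetical data: (test score, later rated successful on the job?)
records = [(35, False), (42, False), (48, True), (55, False),
           (58, True), (63, True), (71, True), (77, True)]
bands = [("30-49", 30, 49), ("50-69", 50, 69), ("70-89", 70, 89)]

table = expectancy_table(records, bands)
for label, proportion in table.items():
    print(f"{label}: {proportion:.0%} successful")
```

In this made-up sample the proportion of successful employees rises with each score band, which is the pattern a decision-maker would look for.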

Taylor-Russell Tables

Tables that estimate the percentage of employees hired with a particular test who will be successful on the job, based on three variables: the test's validity coefficient, the selection ratio, and the base rate.

What three variables are required to use the Taylor-Russell Tables?

Validity coefficient of the test

Selection ratio (number hired ÷ number of applicants)

Base rate (percentage of people hired under the current system who are successful)

What is a selection ratio?

The ratio of people hired to people applying. Example: 50 hired out of 100 applicants = 0.50.

What does base rate mean?

The percentage of people already successful under the current hiring system.
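The published tables are looked up, not computed by hand, but the idea behind them can be sketched with a simulation. The code below is not the Taylor-Russell tables themselves; it assumes test and criterion scores follow a bivariate normal distribution (consistent with the linearity assumption) and estimates the proportion of selected applicants who turn out successful. The function name and parameters are hypothetical.

```python
import random
from statistics import NormalDist

def taylor_russell_estimate(validity, selection_ratio, base_rate,
                            n=200_000, seed=0):
    """Simulate the proportion of selected applicants who are successful."""
    rng = random.Random(seed)
    std_normal = NormalDist()
    # Test cutoff that admits the top `selection_ratio` of applicants.
    test_cut = std_normal.inv_cdf(1 - selection_ratio)
    # Criterion cutoff that the top `base_rate` of people exceed.
    criterion_cut = std_normal.inv_cdf(1 - base_rate)
    noise_sd = (1 - validity ** 2) ** 0.5
    selected = successful = 0
    for _ in range(n):
        x = rng.gauss(0, 1)                                # test score (z)
        y = validity * x + noise_sd * rng.gauss(0, 1)      # criterion (z)
        if x >= test_cut:
            selected += 1
            if y >= criterion_cut:
                successful += 1
    return successful / selected

# Validity .50, selection ratio .50, base rate .50
estimate = taylor_russell_estimate(0.50, 0.50, 0.50)
print(f"Estimated success rate among those selected: {estimate:.2f}")
```

For this case the simulated proportion should land near 2/3, the exact bivariate-normal value, compared with a base rate of .50 without the test. With a validity of zero the estimate falls back to the base rate.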

What is one limitation of the Taylor-Russell tables?

They assume a linear relationship between test scores and job performance, and they require a clear cutoff between “successful” and “unsuccessful” employees.

Naylor-Shine Tables

An alternative to the Taylor-Russell tables that estimates the difference in average criterion scores (e.g., job performance) between the selected and unselected groups.

What kind of validity coefficient is required for both Taylor-Russell and Naylor-Shine tables?

A concurrent validity coefficient obtained from current employees.

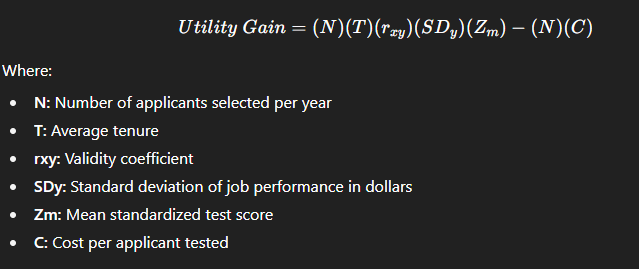

Brogden-Cronbach-Gleser (BCG) Formula

A formula that calculates the monetary utility gain of using a test by estimating the dollar value of increased job performance minus testing costs.

Write the Brogden-Cronbach-Gleser Formula.
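As the formula is commonly stated in textbook treatments (the subscripts on N are added here only to keep its two uses apart):

```latex
\text{utility gain} = (N_s)(T)(r_{xy})(SD_y)(\bar{Z}_m) - (N_a)(C)
```

Here N_s is the number of applicants selected, T is the average length of time in the position (tenure), r_xy is the validity coefficient, SD_y is the standard deviation of job performance in dollars, Z̄_m is the mean standardized test score of the selected applicants, N_a is the number of applicants tested, and C is the cost of testing each applicant.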

What does utility gain represent?

The estimated financial benefit (or productivity increase) from using a test, after subtracting its costs.

How is SDy (standard deviation of performance in dollars) often estimated?

As 40% of the mean annual salary for the job.
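A short worked sketch of the calculation: the dollar benefit is the product of the number selected, average tenure, the validity coefficient, SDy, and the mean standardized test score of those selected, and the testing cost (applicants tested times cost per applicant) is then subtracted. All figures and names below are hypothetical, and SDy uses the 40%-of-salary rule of thumb.

```python
def utility_gain(n_selected, tenure_years, validity, sd_y, mean_z_selected,
                 n_applicants, cost_per_applicant):
    """Brogden-Cronbach-Gleser utility gain in dollars."""
    benefit = n_selected * tenure_years * validity * sd_y * mean_z_selected
    cost = n_applicants * cost_per_applicant
    return benefit - cost

# Hypothetical inputs, for illustration only.
mean_annual_salary = 50_000
sd_y = 0.40 * mean_annual_salary   # rule-of-thumb estimate of SDy

gain = utility_gain(
    n_selected=10,
    tenure_years=2,
    validity=0.40,
    sd_y=sd_y,
    mean_z_selected=1.0,
    n_applicants=100,
    cost_per_applicant=50,
)
print(f"Estimated utility gain: ${gain:,.0f}")
```

With these made-up inputs the benefit term is $160,000 and the testing cost is $5,000, so the estimated gain is about $155,000.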

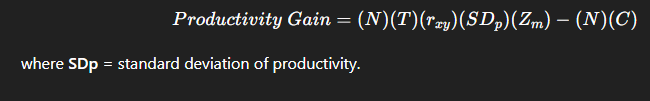

What is the productivity gain formula (a modification of the BCG formula)?
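As commonly stated, the modification drops the cost term and replaces the dollar-valued standard deviation with the standard deviation of productivity:

```latex
\text{productivity gain} = (N)(T)(r_{xy})(SD_p)(\bar{Z}_m)
```

Here N is the number of applicants selected, T is the average tenure, r_xy is the validity coefficient, SD_p is the standard deviation of productivity (in units of output, not dollars), and Z̄_m is the mean standardized test score of the selected applicants.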

Decision Theory

A statistical approach to test utility that helps set optimal cutoff scores and analyze the consequences of hiring decisions.

What are false positives and false negatives in decision theory?

False positive: Applicant passes the test but fails the job.

False negative: Applicant fails the test but could have succeeded.

According to decision theory, why is setting cutoff scores important?

To balance the risk between hiring unqualified candidates (false positives) and rejecting qualified ones (false negatives).
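The trade-off can be illustrated by counting the four possible outcomes at different cut scores. This is a sketch with hypothetical applicants; in practice the "would succeed" column is only known after the fact, from criterion data.

```python
def selection_outcomes(applicants, cut_score):
    """Count hits and misses when everyone scoring >= cut_score is hired."""
    counts = {"true_positive": 0, "false_positive": 0,
              "true_negative": 0, "false_negative": 0}
    for score, would_succeed in applicants:
        passed = score >= cut_score
        if passed and would_succeed:
            counts["true_positive"] += 1
        elif passed and not would_succeed:
            counts["false_positive"] += 1    # passed the test, fails the job
        elif not passed and would_succeed:
            counts["false_negative"] += 1    # failed the test, could have succeeded
        else:
            counts["true_negative"] += 1
    return counts

# Hypothetical applicants: (test score, would succeed on the job?)
applicants = [(40, False), (50, False), (55, True), (60, False),
              (65, True), (70, True), (80, True), (90, True)]

lenient = selection_outcomes(applicants, cut_score=50)
strict = selection_outcomes(applicants, cut_score=65)
print("cut = 50:", lenient)
print("cut = 65:", strict)
```

Raising the cut score from 50 to 65 removes both false positives in this sample but introduces one false negative, which is the kind of exchange a decision-maker has to weigh.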

Give an example of when false negatives are more acceptable than false positives.

In airline pilot testing, it’s safer to reject a qualified pilot (false negative) than to hire an unqualified one (false positive).

What did Schmidt et al. (1979) find about test utility?

They showed that valid tests significantly increase company efficiency and profit—sometimes saving millions of dollars per year.

Why are many employers reluctant to use decision-theory-based hiring?

Because the procedures are complex to apply and carry the risk of legal challenges.

Base Rate

The proportion of people who would succeed or fail under the current selection system. Extremely high or low base rates can make a test useless for selection purposes.

Pool of Job Applicants

Refers to the number and availability of qualified individuals applying for a job. Many utility analyses assume an unlimited supply of applicants, but in reality, this pool changes depending on the economic climate and job type.

Acceptance Rate of Offers

The percentage of selected applicants who actually accept the job. Utility estimates often overestimate test usefulness because top scorers may decline offers for better opportunities.

Adjustment of Utility Estimates

Because not all qualified applicants accept job offers, utility estimates may need to be adjusted downward by as much as 80% to reflect realistic hiring outcomes (Murphy, 1986).

Job Complexity

Jobs vary in difficulty and performance variability. More complex jobs show greater differences in performance levels, affecting how well utility models apply across job types.

Cut Score (or Cutoff Score)

A numerical reference point dividing test scores into classifications (e.g., pass/fail) used for decisions like hiring or grading.

Relative Cut Score (Norm-Referenced Cut Score)

A cut score based on norm-related performance, meaning it depends on how others perform.

Example: Only the top 10% of test-takers receive an “A.”

Fixed Cut Score (Absolute Cut Score)

A score set based on a minimum proficiency level, independent of how others perform.

Example: Passing a driving test requires meeting a fixed performance standard.
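The difference between the two kinds of cut score shows up when the group changes. In the sketch below (hypothetical scores and function name), the relative cut moves with the cohort while the fixed cut stays put.

```python
def relative_cut_score(scores, top_fraction):
    """Lowest score that still falls within the top `top_fraction` of the group."""
    ranked = sorted(scores, reverse=True)
    n_above = max(1, round(len(ranked) * top_fraction))
    return ranked[n_above - 1]

FIXED_CUT = 70  # hypothetical minimum-proficiency standard

cohort = [55, 62, 68, 71, 74, 77, 80, 83, 88, 95]
stronger_cohort = [s + 5 for s in cohort]  # same group, everyone 5 points higher

relative_cut = relative_cut_score(cohort, 0.20)
relative_cut_stronger = relative_cut_score(stronger_cohort, 0.20)

print("Fixed cut (both cohorts):", FIXED_CUT)
print("Relative cut, original cohort:", relative_cut)
print("Relative cut, stronger cohort:", relative_cut_stronger)
```

Here the top-20% cut rises from 88 to 93 when every score goes up by 5 points, whereas the fixed standard of 70 is unaffected.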

Multiple Cut Scores

Using two or more cut scores for one predictor to categorize test-takers into groups such as A, B, C, D, or F.

Multiple Hurdles

A multistage decision-making process where each stage has a cut score that must be met to move forward.

Example: College admissions in which applicants must clear, in turn, a minimum entrance-exam score, a minimum GPA, and an interview.
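A multiple-hurdle process can be sketched as a sequence of stage-by-stage checks that stops at the first failure. The stage names and cut scores here are hypothetical.

```python
def passes_hurdles(candidate, hurdles):
    """Return (passed_all, first_failed_stage); the stage is None if all pass."""
    for stage, cut in hurdles:
        if candidate[stage] < cut:
            return False, stage   # eliminated here; later stages never run
    return True, None

# Hypothetical stages, each with its own cut score, in order.
hurdles = [("entrance_exam", 60), ("gpa", 3.0), ("interview", 7)]
applicant = {"entrance_exam": 72, "gpa": 2.8, "interview": 9}

result = passes_hurdles(applicant, hurdles)
print(result)
```

This applicant is screened out at the GPA stage even though the interview score is high, because a strong later stage cannot make up for a failed earlier one.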

Compensatory Model of Selection

A model assuming high scores on one predictor can offset low scores on another. It allows a strong skill in one area to compensate for weakness in another.

Differential Weighting

In compensatory models, predictors are given different weights based on importance.

Example: Safe driving history may count more than customer service in driver selection.

Multiple Regression in Selection

A statistical method ideal for the compensatory model, combining different predictors with weighted importance to make overall selection decisions.
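A compensatory decision can be sketched as a weighted composite of predictor scores. In practice the weights would typically be estimated with multiple regression on criterion data; the weights, predictor names, and scores below are hypothetical.

```python
def composite_score(scores, weights):
    """Weighted sum of predictor scores (differential weighting)."""
    return sum(weights[name] * scores[name] for name in weights)

# Hypothetical weights: safe driving counts more than customer service.
weights = {"safe_driving": 0.7, "customer_service": 0.3}

driver_a = {"safe_driving": 90, "customer_service": 50}
driver_b = {"safe_driving": 60, "customer_service": 95}

score_a = composite_score(driver_a, weights)
score_b = composite_score(driver_b, weights)
print(f"Driver A: {score_a:.1f}  Driver B: {score_b:.1f}")
```

Driver A's strong safety record offsets a weak customer-service score, so A ends up with the higher composite (about 78 versus about 70.5) despite B's advantage on the less heavily weighted predictor.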

What is a cut score?

A reference point on a test used to divide test takers into categories (e.g., pass/fail, A/B grade) based on performance.

Why are cut scores important?

Because they are used to make high-stakes decisions such as admission, certification, employment, promotion, or legal competence.

Give examples of decisions influenced by cut scores.

Admission to schools, job selection, professional licensing, legal competence, citizenship, and determining intoxication levels.

What are the two main types of cut scores?

Fixed (absolute) cut scores and relative (norm-referenced) cut scores.

What is a fixed cut score?

A set standard of minimum competency that must be met regardless of how others perform (e.g., passing a driver’s test).

What is a relative cut score?

A score set based on comparison to the performance of a group (e.g., top 10% of students get an A).

Who debates the best way to set cut scores?

Educators, psychologists, researchers, and corporate statisticians.

Why is there debate about setting cut scores?

Because different methods can lead to different outcomes, and no single method is universally accepted as most fair or accurate.

What factors must be balanced when setting cut scores?

Technical accuracy, fairness, validity, and ethical considerations.

Who raised key questions about how to set cut scores fairly?

Reckase (2004), who inspired further research and discussion on optimal cut score methods.

Angoff Method

A method for setting fixed cut scores developed by William Angoff (1971), where expert judgments are used to estimate how minimally competent test takers would perform on each test item.

Who developed the Angoff Method?

William Angoff in 1971.

What is the main purpose of the Angoff Method?

To set a fixed cut score for determining whether test takers meet a minimum level of competence or possess a particular trait, ability, or attribute.

How is the Angoff Method applied in personnel selection?

Experts estimate how many items a minimally competent candidate should answer correctly; these estimates are averaged to set the cut score.

How is the Angoff Method applied to measure traits or abilities?

A panel of experts judges how a person with a specific trait or ability would likely respond to each item.

How is the final cut score determined in the Angoff Method?

By averaging the judgments of all expert raters.
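One common way to carry out the averaging, shown here as a sketch under stated assumptions: each expert rates, for every item, the probability that a minimally competent test taker answers correctly; each expert's ratings are summed into an expected score; and the cut score is the mean of those sums. The ratings and the spread check are hypothetical additions for illustration.

```python
from statistics import mean

def angoff_cut_score(ratings):
    """Return (cut_score, spread) from per-judge, per-item probability ratings.

    ratings: one list per judge; each value is the judged probability that a
             minimally competent test taker answers that item correctly.
    spread:  range of the judges' totals, a rough sign of disagreement.
    """
    judge_totals = [sum(judge) for judge in ratings]
    return mean(judge_totals), max(judge_totals) - min(judge_totals)

# Hypothetical ratings from three judges on a four-item test.
ratings = [
    [0.8, 0.6, 0.5, 0.9],
    [0.7, 0.5, 0.6, 0.8],
    [0.9, 0.6, 0.4, 0.9],
]

cut_score, spread = angoff_cut_score(ratings)
print(f"Cut score: {cut_score:.2f} of 4 items (judge spread: {spread:.2f})")
```

A large spread among the judges' totals would signal the kind of low inter-rater agreement that undermines the method.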

What does it mean if someone scores at or above the Angoff cut score?

They are considered competent or sufficiently high in the trait, attribute, or ability being measured.

What is the main advantage of the Angoff Method?

It is relatively simple, widely used, and effective when expert agreement is high.

What is the major weakness (“Achilles heel”) of the Angoff Method?

Low inter-rater reliability — when experts disagree significantly about how test takers should respond.

What is recommended when there is major disagreement among experts using the Angoff Method?

Use a more data-driven method (a “Plan B”) for setting cut scores instead of relying solely on subjective judgments.

Known Groups Method (Contrasting Groups Method)

A method for setting cut scores by comparing test performance between groups known to possess and not to possess a particular trait, ability, or attribute.

What is another name for the Known Groups Method?

The Method of Contrasting Groups.

What is the main idea behind the Known Groups Method?

To determine a cut score that best discriminates between two groups—one that possesses a trait, ability, or skill, and one that does not.

What type of data is collected in the Known Groups Method?

Data from groups known to have and not to have the trait, attribute, or ability being measured.

How is the cut score determined in the Known Groups Method?

By finding the point on the test that best separates the performance of the two contrasting groups—where their scores overlap the least.

Give an example of how the Known Groups Method works in practice.

A college gives a placement test to all freshmen and later compares scores of students who passed vs. failed college-level math. The cut score is set at the point where their performance distributions overlap the least.
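One way to operationalize "overlap the least" is to pick the cut score that misclassifies the fewest people across the two groups. That criterion is an assumption made for this sketch, not the only defensible rule, and the scores are hypothetical.

```python
def contrasting_groups_cut(passed_scores, failed_scores):
    """Return (cut, errors): the cut score with the fewest misclassifications.

    A student is misclassified if they passed the course but score below the
    cut, or failed the course but score at or above it.
    """
    candidates = sorted(set(passed_scores) | set(failed_scores))
    best_cut, best_errors = None, None
    for cut in candidates:
        errors = (sum(s < cut for s in passed_scores)
                  + sum(s >= cut for s in failed_scores))
        if best_errors is None or errors < best_errors:
            best_cut, best_errors = cut, errors
    return best_cut, best_errors

# Hypothetical placement-test scores, grouped by later course outcome.
passed_math = [60, 64, 66, 70, 74, 80]
failed_math = [40, 45, 52, 55, 58, 62, 63]

cut, errors = contrasting_groups_cut(passed_math, failed_math)
print(f"Cut score: {cut} (misclassified: {errors})")
```

In this sample a cut of 64 misclassifies only one student (the one who passed the course with a placement score of 60). The result depends heavily on who is in each group, so a different sample could yield a different cut.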