Clustering Flashcards

1/23

Earn XP

Description and Tags

Lecture 11

Name | Mastery | Learn | Test | Matching | Spaced |

|---|

No study sessions yet.

24 Terms

What is clustering?

A technique that groups similar data points together based on their characteristics, without using predefined labels. To create clusters where items within a cluster are similar to each other, but different from items in other clusters.

How does clustering differ from classification?

Clustering works without labeled data, finding natural groups, while classification assigns data to predefined categories using labeled training data.

What is K-means clustering?

A popular algorithm that divides data into K clusters by assigning each point to the cluster with the nearest center.

How does the K-means algorithm work?

1) Pick K initial centers, 2) Assign each point to the nearest center, 3) Recalculate centers, 4) Repeat until stable.

What is a centroid in K-means?

The center point of a cluster, calculated as the average of all points in that cluster.

What is inertia in K-means?

The sum of squared distances between each point and its centroid, measuring how compact the clusters are.

Why might K-means give different results on different runs?

Because the initial centroids are chosen randomly, which can lead to different final clustering results.

What is Mini-batch K-means?

A faster version of K-means that uses small random subsets of data in each iteration instead of the entire dataset.

Why is choosing the right number of clusters important?

Too few clusters might miss important patterns, while too many can make the results hard to interpret and less meaningful.

What is the Elbow Method?

A technique to find the optimal K by plotting inertia against different K values and looking for the "bend" in the curve.

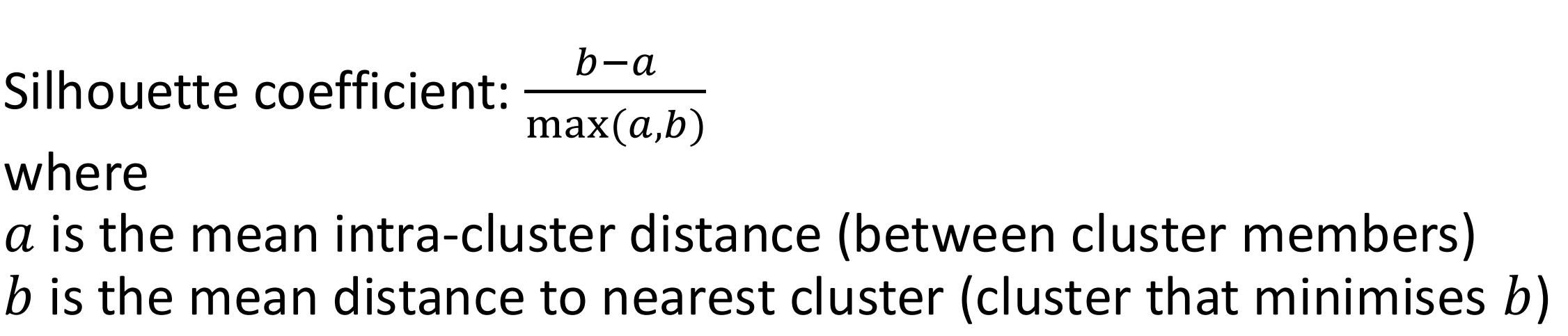

What is the Silhouette Coefficient?

The Silhouette Coefficient (or Silhouette Score) is a measure used to evaluate how well data points have been clustered. It combines two factors:

How similar a point is to its own cluster (cohesion)

How different it is from other clusters (separation)

How is the Silhouette Coefficient calculated?

What is DBSCAN?

A density-based clustering algorithm that groups dense regions of points and identifies outliers in sparse regions.

What are the two main parameters in DBSCAN?

Epsilon (ε): the radius around each point to check, and MinPoints: the minimum number of points needed in that radius to form a dense region.

What are core points in DBSCAN?

Points that have at least MinPoints within their ε-neighborhood, forming the "heart" of clusters.

How does DBSCAN handle outliers?

It labels points that aren't part of any dense region as noise or outliers, rather than forcing them into clusters.

What advantage does DBSCAN have over K-means?

It can find clusters of any shape (not just circular) and doesn't require specifying the number of clusters beforehand.

What do negative labels (-1) represent in DBSCAN results?

They indicate noise points or outliers that don't belong to any cluster.

What are Gaussian Mixture Models (GMM)?

Models that assume data comes from a mix of several Gaussian distributions, good for overlapping or elliptical clusters.

What is Hierarchical Clustering?

A method that builds a tree of clusters, allowing you to examine many possible clustering solutions at different levels of detail.

How does Agglomerative Clustering work?

Starts with each point as its own cluster and repeatedly merges the closest clusters until reaching the desired number.

How can you evaluate clusters if you have some labeled data?

Compare cluster assignments to known labels using metrics like Rand Index or Adjusted Mutual Information.

Why is feature scaling important for clustering?

Because clustering algorithms use distances between points, so features with larger scales could unfairly dominate the results.

What's a good way to visualize high-dimensional clusters?

Use dimensionality reduction techniques like PCA or t-SNE to plot the clusters in 2D or 3D space.