Lang & Comm - Lecture 8


Description and Tags

the neurobiology of language


61 Terms

MEG

magnetoencephalography

what is MEG?

brain imaging technique

measures the magnetic fields generated by the electrical activity of neurons in the brain

indicates the electrically (and therefore functionally) active areas of the brain

advantages and applications of MEG

advantages

non-invasive

head is placed in a helmet

no cutting, injections, radiation exposure

high resolution

good spatial (location of neuronal activity) and excellent temporal (millisecond precision) resolution

much higher temporal resolution than fMRI

applications

widely used in research

used in clinical diagnosis by showing which brain regions are active

brain-computer interfaces

studies on traumatic brain injuries, dementia, autism

weaknesses of MEG

High Cost

Shielding Requirements: Needs a specialised magnetically shielded room

Depth Sensitivity: The signal decays rapidly with distance, making it much harder to detect activity in deep brain structures (e.g., the thalamus) compared to the cortex.

Movement Sensitivity: Since the sensors are in a fixed helmet, the patient must remain perfectly still to avoid data distortion.

what is fMRI

type of magnetic resonance imaging

shows which areas of the brain are active while a person is performing a specific task

how fMRI works

uses a large magnet to create images of the body

measures a specific signal, the BOLD (blood-oxygen-level-dependent) signal, which is related to how much oxygen the blood contains

blood has magnetic properties that change based on oxygen levels

Hickok & Poeppel - ventral stream overview

primarily responsible for transforming acoustic speech signals into conceptual and semantic representations

process of speech recognition or auditory comprehension

sound to meaning

Hickok & Poeppel - ventral stream function

maps sensory or phonological representations onto lexical conceptual representations

Hickok & Poeppel - ventral stream anatomy

structures in superior and middle portions of the temporal lobe

Superior Temporal Sulcus (STS) - phonological processing

Posterior Middle Temporal Gyrus (pMTG) - lexical-semantic interface

linking phonological information to widely distributed conceptual networks

Hickok & Poeppel - ventral stream organisation and laterality

assumed to be largely bilaterally organised

explains why unilateral temporal lobe damage often fails to cause substantial speech recognition deficits

Hickok & Poeppel - ventral stream multi-time resolution

the stream is hypothesised to contain parallel pathways that integrate information over different timescales

a bilateral pathway with short integration windows, resolving segment-level information (phonemes)

a right-dominant pathway with longer integration windows, resolving syllable-level information (e.g., prosodic cues)

Hickok & Poeppel - dorsal stream overview

primarily involved in auditory-motor integration, translating acoustic speech signals into articulatory motor representations in the frontal lobe

sound to action

Hickok & Poeppel - dorsal stream function

crucial for speech development and normal speech production

provides neural mechanisms for phonological short-term memory

Hickok & Poeppel - dorsal stream anatomy

involves structures in posterior dorsal temporal lobe, parietal operculum, and posterior frontal lobe

Hickok & Poeppel - dorsal stream organisation and laterality

strongly left-hemisphere dominant

lesions in this area lead to prominent speech production deficits

Hickok & Poeppel - speech recognition

auditory comprehension

transforming sounds to access the mental lexicon

primary neural reliance

ventral stream circuitry

Hickok & Poeppel - speech perception

sub-lexical tasks (e.g., syllable discrimination)

primary neural reliance

dorsal stream circuitry

tasks involve phonological working memory and executive control

cognitive neuroimaging techniques

EEG

MRI/fMRI

MEG

OPM-MEG (optically pumped magnetometers MEG)

MEG overview

Records the magnetic fields generated by brain activity

Has good spatial resolution and excellent temporal resolution

This means it can capture rapidly changing brain activity

Magnetic fields outside the skull

MEG captures, outside the skull, the magnetic fields generated by synchronised neuronal activity

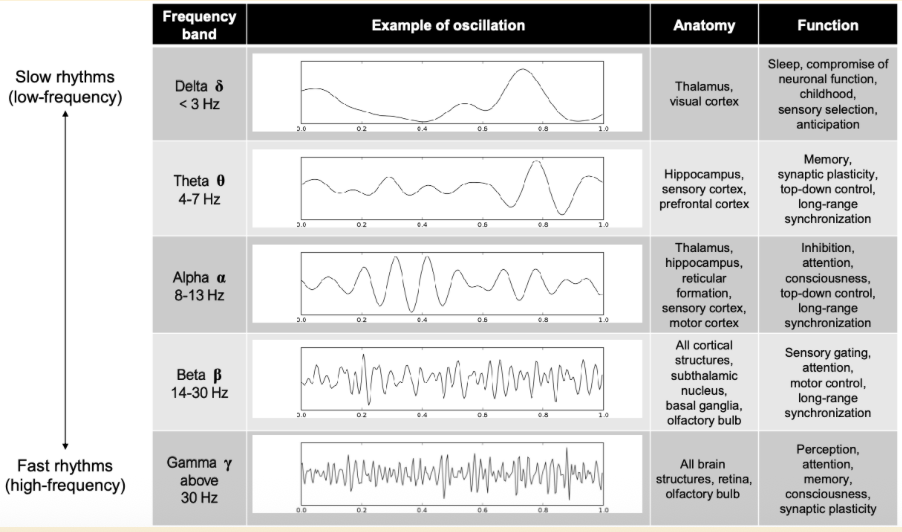

neural oscillations

brain rhythms

picture: each dot is one activation (spike) of a single neuron, and the x-axis indicates time

there are moments of silence, and then rapid activation

if the neuron is activated 10 times per second, then this is labelled ‘10 Hz rhythm’
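As a minimal sketch of the "10 Hz" idea above, the snippet below estimates a rhythm's frequency from the times at which a neuron fires, assuming the firing is perfectly regular. The function name and the spike times are hypothetical, for illustration only; real oscillation analyses work on continuous recorded signals rather than one neuron's spike times.

```python
def rhythm_frequency_hz(event_times_s):
    """Estimate rhythm frequency (Hz) from regularly repeating event times in seconds.

    Frequency = number of intervals / total time spanned,
    i.e. 1 / (mean interval between events).
    """
    if len(event_times_s) < 2:
        raise ValueError("need at least two events to measure an interval")
    span_s = event_times_s[-1] - event_times_s[0]
    return (len(event_times_s) - 1) / span_s


# Hypothetical neuron firing once every 100 ms for one second (11 spikes, 10 intervals)
spikes = [i * 0.1 for i in range(11)]
print(rhythm_frequency_hz(spikes))  # approximately 10.0, i.e. a "10 Hz rhythm"
```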

types of neural oscillations

alpha rhythm is very dominant in the brain

< 3 Hz rhythm

fewer than 3 rhythmic fluctuations per second (the slow, delta range)
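To make the band names concrete, here is a small lookup that assigns a frequency to a band. The boundaries are an assumption: they follow one common convention (delta below 4 Hz, theta 4-8 Hz, alpha 8-13 Hz, beta 13-30 Hz, gamma above 30 Hz), and exact cut-offs differ between labs, so the "< 3 Hz" figure in these notes and the 4 Hz cut-off used here are not in conflict.

```python
# One common (assumed) convention for oscillation bands, in Hz: [lower, upper)
BANDS = [
    ("delta", 0.5, 4.0),
    ("theta", 4.0, 8.0),
    ("alpha", 8.0, 13.0),
    ("beta", 13.0, 30.0),
    ("gamma", 30.0, float("inf")),
]


def band_of(freq_hz):
    """Return the name of the band containing freq_hz under the convention above."""
    for name, lower, upper in BANDS:
        if lower <= freq_hz < upper:
            return name
    raise ValueError(f"{freq_hz} Hz is below the lowest band")


for f in (2, 5, 10, 20, 40):
    print(f, "Hz ->", band_of(f))
```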

speech overview

actual sound of spoken language

oral form of communicating

talking: using the muscles of the tongue, lips, jaw and vocal tract in a very precise and coordinated way to produce recognisable sounds that make up language

how we say sounds and words

speech includes:

articulation

how we make speech sounds using the mouth, lips and tongue

voice

how we use our vocal folds and breath to make sounds

our voice can be loud or soft, high-pitched or low-pitched

fluency

this is the rhythm of our speech

we sometimes repeat sounds or pause while talking

people who do this a lot may stutter

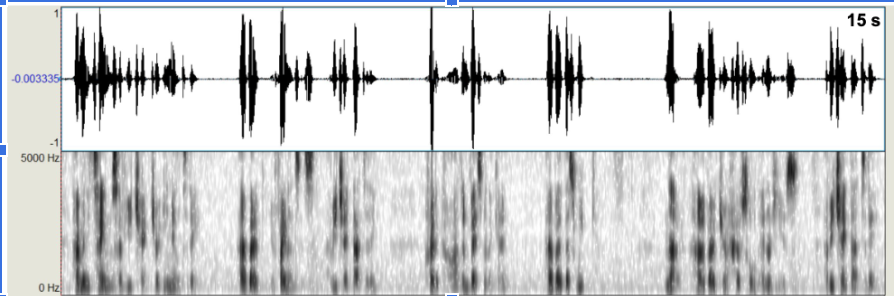

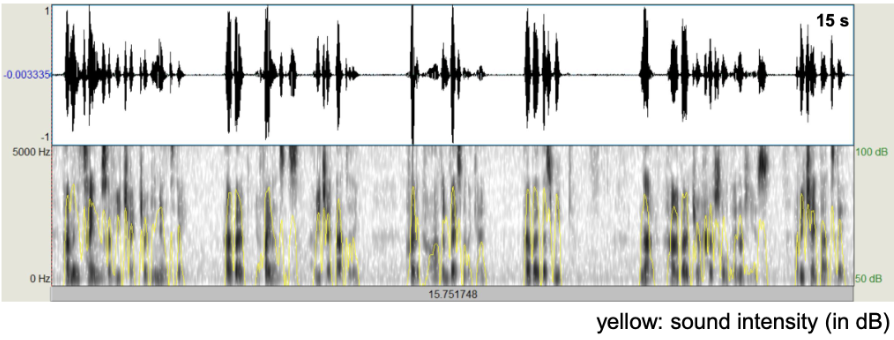

speech spectrogram

raw speech signal (top) and spectrogram (bottom)

speech spectrogram with sound intensity
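A spectrogram shows how much energy each frequency carries over time. The sketch below computes a magnitude spectrogram with a naive short-time discrete Fourier transform over Hann-windowed frames, using a synthetic signal (a 100 Hz tone followed by a 200 Hz tone) as a hypothetical stand-in for speech. It is illustrative only; in practice an FFT-based routine such as `scipy.signal.spectrogram` would be used.

```python
import cmath
import math


def hann(n):
    """Hann window of length n (tapers frame edges to reduce spectral leakage)."""
    return [0.5 - 0.5 * math.cos(2 * math.pi * i / (n - 1)) for i in range(n)]


def spectrogram(signal, frame_len, hop):
    """Magnitude spectrogram via a naive short-time DFT.

    Returns one list per frame; entry k is the magnitude at k * fs / frame_len Hz,
    for k = 0 .. frame_len // 2.
    """
    window = hann(frame_len)
    frames = []
    for start in range(0, len(signal) - frame_len + 1, hop):
        seg = [s * w for s, w in zip(signal[start:start + frame_len], window)]
        mags = []
        for k in range(frame_len // 2 + 1):
            coeff = sum(v * cmath.exp(-2j * math.pi * k * n / frame_len)
                        for n, v in enumerate(seg))
            mags.append(abs(coeff))
        frames.append(mags)
    return frames


fs = 1000  # samples per second (hypothetical)
# 0.5 s at 100 Hz, then 0.5 s at 200 Hz: the dominant frequency changes over time
signal = [math.sin(2 * math.pi * (100 if n < 500 else 200) * n / fs) for n in range(1000)]
spec = spectrogram(signal, frame_len=100, hop=50)

# Dominant frequency of each frame; bin k corresponds to k * fs / frame_len Hz
peaks_hz = [max(range(len(f)), key=f.__getitem__) * fs / 100 for f in spec]
print(peaks_hz[0], peaks_hz[-1])  # first frame 100 Hz, last frame 200 Hz
```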

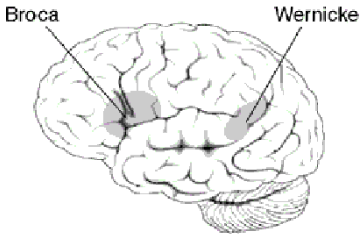

old “localist” view on aphasia

aphasia is a disorder that results from damage to portions of the brain that are responsible for language

double dissociation between Broca’s and Wernicke’s aphasia

broca’s aphasia

impaired speech production

non-fluent aphasia, in which the output of spontaneous speech is markedly diminished

there is a loss of normal grammatical structure

specifically, small linking words such as conjunctions (and, or, but) and prepositions are lost

wernicke’s aphasia

impaired language comprehension

despite this, speech may have a normal rate, rhythm, and grammar

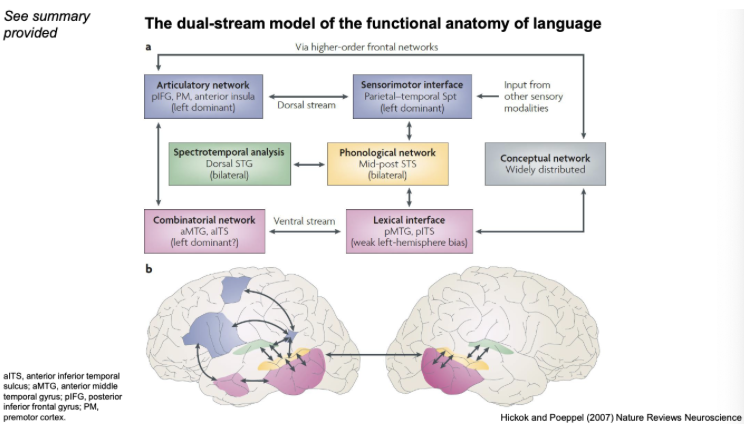

Hickok and Poeppel (2007) - dual-stream model of functional anatomy of language image

Hickok and Poeppel (2007) - dual-stream model of functional anatomy of language - stage 1

Firstly, the earliest stage of cortical speech processing involves some form of spectrotemporal analysis, which is carried out in auditory cortices bilaterally in the supratemporal plane (superior temporal gyrus (STG), depicted in green).

Hickok and Poeppel (2007) - dual-stream model of functional anatomy of language - stage 2

Secondly, phonological-level processing and representation involves the middle to posterior portions of the superior temporal sulcus (STS) bilaterally, although there may be a weak left-hemisphere bias at this level of processing (depicted in yellow).

Hickok and Poeppel (2007) - dual-stream model of functional anatomy of language - stage 3

Subsequently, the system diverges into two broad streams: a dorsal pathway (blue) and a ventral pathway (pink).

The dorsal pathway (blue) maps sensory or phonological representations onto articulatory motor representations and is strongly left-dominant.

The ventral pathway (pink) maps sensory or phonological representations onto lexical conceptual representations and is bilaterally organised with a weak left-hemisphere bias.

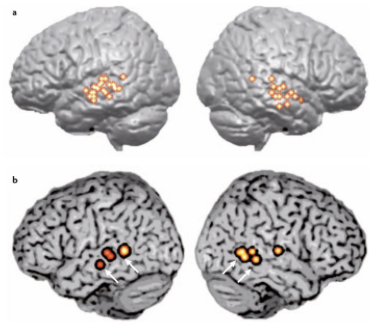

Hickok and Poeppel (2007) - lexical phonological networks in the superior temporal sulcus

A

brain responses to acoustic signals with phonemic info vs. non-speech signals

B

brain responses to words with many similar-sounding neighbours (high-density words) vs. words with few similar-sounding neighbours (low-density words)

high neighbourhood density words

a word's neighbours are the words that differ from it by one sound substitution, one sound deletion or one sound addition

phonetically similar to many other words and have 11 or more neighbours

e.g., “ship” has 18 neighbours

low neighbourhood density words (number of neighbours in brackets)

germ (7)

giant (0)

jaguar (0)
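The neighbourhood definition above (one sound substituted, deleted or added) amounts to a one-edit comparison, sketched below. Words are written as tuples of phoneme symbols in an ad-hoc ASCII notation, and the mini-lexicon is hypothetical; real neighbourhood counts come from large phonemically transcribed lexicons, so the count here says nothing about the real value for "ship". The 11-neighbour threshold is the one given in these notes.

```python
def is_neighbour(a, b):
    """True if phoneme sequences a and b differ by exactly one substitution, deletion or addition."""
    if a == b:
        return False
    if len(a) == len(b):
        # substitution: exactly one position differs
        return sum(x != y for x, y in zip(a, b)) == 1
    if abs(len(a) - len(b)) != 1:
        return False
    # deletion/addition: removing one phoneme from the longer word gives the shorter one
    longer, shorter = (a, b) if len(a) > len(b) else (b, a)
    return any(longer[:i] + longer[i + 1:] == shorter for i in range(len(longer)))


def neighbours(word, lexicon):
    return [w for w in lexicon if is_neighbour(word, w)]


def is_high_density(word, lexicon, threshold=11):
    """High neighbourhood density = at least `threshold` neighbours (11 per these notes)."""
    return len(neighbours(word, lexicon)) >= threshold


# Hypothetical mini-lexicon; each word is a tuple of phoneme symbols
ship = ("sh", "i", "p")
lexicon = [
    ("sh", "o", "p"),       # shop: substitution
    ("ch", "i", "p"),       # chip: substitution
    ("l", "i", "p"),        # lip: substitution
    ("s", "i", "p"),        # sip: substitution
    ("sh", "i", "p", "s"),  # ships: addition
    ("sh", "i", "f", "t"),  # shift: not a neighbour (two changes)
    ("d", "o", "g"),        # dog: not a neighbour
]
print(len(neighbours(ship, lexicon)), is_high_density(ship, lexicon))  # 5 False
```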

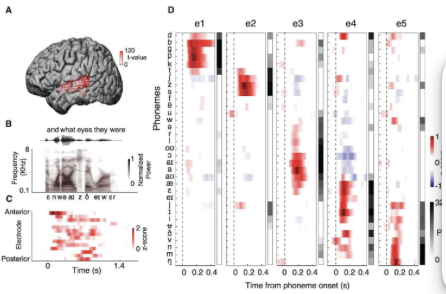

Mesgarani et al. (2014) - phonetic feature encoding in the human superior temporal gyrus - methodology and results

high-density direct cortical surface recordings in humans were used while the patients listened to natural, continuous speech

ECoG: electrocorticography in patients with epilepsy

it revealed the STG representation of the entire English phonetic inventory

at single electrodes, they found response selectivity to distinct phonetic features

Mesgarani et al. (2014) - phonetic feature encoding in the human superior temporal gyrus - explanation of the image

each electrode records from a different brain area

different phonemes evoke responses at different electrodes

the red parts show which sounds each electrode responds to

each area is tuned to different sounds

this shows how our brain processes each phoneme sound

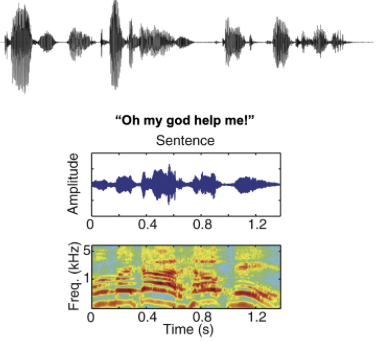

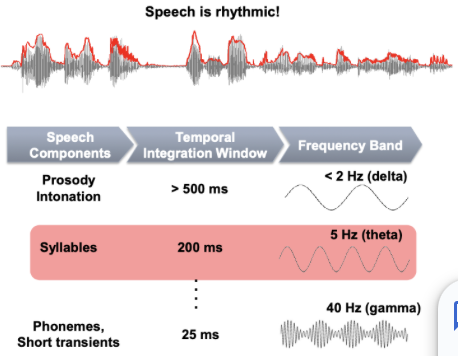

Amal et al. (2015) - speech is rhythmic

raw speech signal and speech spectrogram during the sentence

very rhythmic (energy fluctuations)

speech signal and speech envelope

the speech envelope comprises energy changes corresponding to phonemic and syllabic transitions

Aiken and Picton (2008)
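The speech envelope can be approximated by rectifying the waveform and smoothing it. The sketch below does this for a synthetic "speech-like" signal: a 200 Hz carrier whose loudness rises and falls 4 times per second, a hypothetical stand-in for syllable-rate energy changes. Rectify-and-smooth is only one simple choice; many studies instead take the magnitude of the analytic (Hilbert) signal, often after splitting speech into frequency bands.

```python
import math


def envelope(signal, win):
    """Crude amplitude envelope: full-wave rectify, then a trailing moving average of `win` samples."""
    rect = [abs(s) for s in signal]
    out = []
    acc = 0.0
    for i, v in enumerate(rect):
        acc += v
        if i >= win:
            acc -= rect[i - win]  # drop the sample that just left the window
        out.append(acc / min(i + 1, win))
    return out


def pearson(a, b):
    """Pearson correlation coefficient between two equal-length sequences."""
    ma, mb = sum(a) / len(a), sum(b) / len(b)
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    va = sum((x - ma) ** 2 for x in a)
    vb = sum((y - mb) ** 2 for y in b)
    return cov / math.sqrt(va * vb)


fs = 2000   # samples per second (hypothetical)
n = 2 * fs  # two seconds
# Slow 4 Hz loudness changes (a stand-in for syllable-rate energy fluctuations)
modulator = [0.5 * (1 + math.sin(2 * math.pi * 4 * i / fs)) for i in range(n)]
carrier = [math.sin(2 * math.pi * 200 * i / fs) for i in range(n)]  # fast 200 Hz "voice"
speech_like = [m * c for m, c in zip(modulator, carrier)]

env = envelope(speech_like, win=fs // 200)  # window = one carrier period (10 samples)
print(round(pearson(env, modulator), 3))    # close to 1: the envelope recovers the 4 Hz fluctuations
```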

Giraud & Poeppel (2012) - speech has hierarchically nested rhythmic structure

Speech has a hierarchically organised/nested rhythmic structure, and this matches the hierarchy in brain oscillations.

Prosody or intonation, which occurs on a slow timescale, matches delta rhythm in the brain.

Syllables match theta rhythm in the brain, and phonemes match gamma rhythm in the brain

In particular, syllable rates, which correspond to theta brain oscillations, are known to be important for parsing and segmenting speech streams and for intelligible speech comprehension.

speech syllables

there are ~3-5 syllables per second (~3-5 Hz) in typical speech

i.e., duration of each syllable: ~200-330 ms

syllable - a unit of pronunciation having one vowel sound, with or without surrounding consonants, forming the whole or a part of a word
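The link between syllable rate and syllable duration is the reciprocal relation between a rate and a period, worked through for the range given above:

```latex
T_{\text{syllable}} = \frac{1}{f_{\text{syllable}}}, \qquad
\frac{1}{5\ \text{Hz}} = 0.2\ \text{s} = 200\ \text{ms}, \qquad
\frac{1}{3\ \text{Hz}} \approx 0.333\ \text{s} \approx 333\ \text{ms}
```

So 3-5 syllables per second corresponds to roughly 200-330 ms per syllable.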

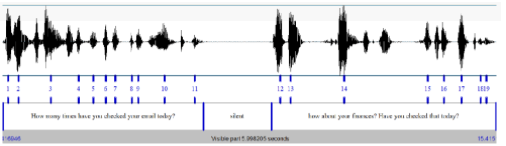

Saberi and Perrott (1999) - speech intelligibility critically depends on syllable rate

found that speech intelligibility is resistant to time reversal of short local segments of a spoken sentence, but breaks down as the segments approach the duration of a syllable

They subdivided a digitised sentence into segments of fixed duration

Every segment was then time-reversed.

The entire spoken sentence was therefore globally contiguous, but locally time-reversed, at every point (A & B in the figure).

Listeners report:

perfect intelligibility of the sentence for segment durations up to 50 ms

partial intelligibility for segment durations exceeding 100 ms, with 50% intelligibility occurring at about 130 ms

no intelligibility for segment durations exceeding 200 ms (Figure bottom).

This duration (~200 ms) roughly corresponds to the duration of one syllable

Thus, the results support the importance of the temporal scale of syllables in speech intelligibility.
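The manipulation described above can be sketched as follows: cut the digitised sentence into fixed-duration segments and reverse each segment in place, keeping the segments in their original order. The helper names, the 16 kHz sampling rate and the toy sample values are assumptions for illustration; this is not the authors' code.

```python
def segment_samples(segment_ms, fs):
    """Convert a segment duration in milliseconds to a whole number of samples at rate fs (Hz)."""
    return round(segment_ms * fs / 1000)


def locally_reverse(signal, seg_len):
    """Reverse each consecutive block of seg_len samples; block order is preserved.

    The sentence stays globally in order but is time-reversed inside every segment.
    """
    out = []
    for start in range(0, len(signal), seg_len):
        out.extend(reversed(signal[start:start + seg_len]))
    return out


print(locally_reverse([1, 2, 3, 4, 5, 6, 7], 3))  # [3, 2, 1, 6, 5, 4, 7]

fs = 16000  # hypothetical sampling rate of the digitised sentence
for ms in (50, 130, 200):
    print(ms, "ms ->", segment_samples(ms, fs), "samples per reversed segment")
```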

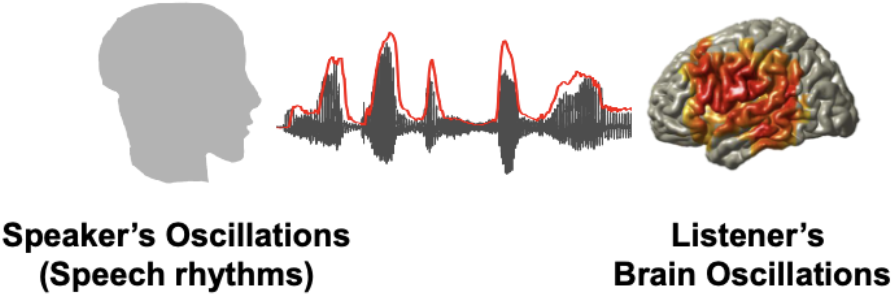

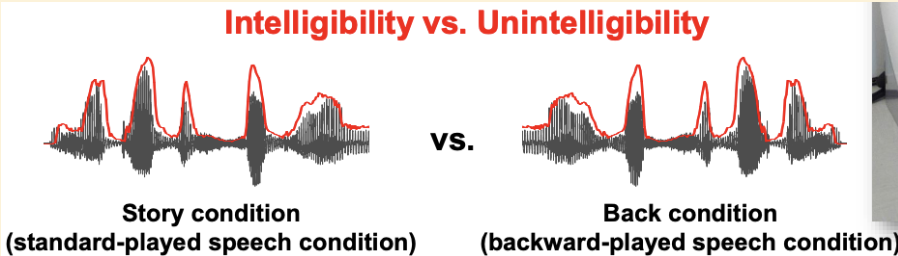

standard speech (intelligible) vs. backward speech (unintelligible)

in terms of rhythmic properties there are no differences
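Why do standard and backward speech share the same rhythmic properties? Reversing a real signal in time changes only the phases of its Fourier components, not their magnitudes, so the overall magnitude spectrum is identical; what changes is the temporal order. The check below uses an arbitrary toy signal and a naive discrete Fourier transform, as a sketch rather than an analysis of real speech.

```python
import cmath
import math


def dft_magnitudes(x):
    """Magnitude of each discrete Fourier transform coefficient of x (naive O(N^2) DFT)."""
    n_samples = len(x)
    return [
        abs(sum(v * cmath.exp(-2j * math.pi * k * n / n_samples) for n, v in enumerate(x)))
        for k in range(n_samples)
    ]


signal = [0.0, 1.0, 0.5, -0.3, 2.0, -1.2, 0.7, 0.1]  # arbitrary toy samples
forward = dft_magnitudes(signal)
backward = dft_magnitudes(signal[::-1])  # the same signal played backwards

# Equal up to floating-point rounding: reversal only changes phases
print(max(abs(a - b) for a, b in zip(forward, backward)))
```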

speech tracking

Synchronisation between speaker and listener

Using MEG or EEG, we can study the synchronisation (coupling) between the speaker’s speech rhythms and the listener’s brain oscillations

Park et al. (2018) PLoS Biol; Park et al (2016) eLife

Park et al (2015) Curr Biol; Gross et al (2013) PLoS Biol

studying the listener’s brain rhythms (oscillations) following the speaker’s speech rhythms

seeing if there is coupling between the two rhythms
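A minimal sketch of quantifying speaker-listener coupling: magnitude-squared coherence between a speech rhythm and a "brain" signal at one frequency, averaged over segments. Everything here is an assumption for illustration: the signals are synthetic (a 4 Hz rhythm plus Gaussian noise, sampled at 100 Hz), and coherence is a stand-in; the studies cited above used their own, more elaborate coupling measures on real MEG data. Coherence is near 1 when two signals keep a consistent phase relation at that frequency and near 0 when they do not.

```python
import cmath
import math
import random


def dft_at(x, freq, fs):
    """Single-frequency DFT coefficient of x at `freq` Hz (sampling rate fs)."""
    return sum(v * cmath.exp(-2j * math.pi * freq * n / fs) for n, v in enumerate(x))


def coherence_at(x, y, freq, fs, seg_len):
    """Magnitude-squared coherence of x and y at one frequency, over non-overlapping segments."""
    sxy, sxx, syy = 0j, 0.0, 0.0
    for start in range(0, min(len(x), len(y)) - seg_len + 1, seg_len):
        X = dft_at(x[start:start + seg_len], freq, fs)
        Y = dft_at(y[start:start + seg_len], freq, fs)
        sxy += X * Y.conjugate()  # cross-spectrum: keeps the phase relation
        sxx += abs(X) ** 2
        syy += abs(Y) ** 2
    return abs(sxy) ** 2 / (sxx * syy)


rng = random.Random(0)
fs, n = 100, 4000  # hypothetical: 100 Hz sampling, 40 s of data
speech = [math.sin(2 * math.pi * 4 * i / fs) + rng.gauss(0, 1) for i in range(n)]
# "Tracking" brain signal: same 4 Hz rhythm with a fixed phase lag, plus noise
tracking_brain = [0.8 * math.sin(2 * math.pi * 4 * i / fs + 0.5) + rng.gauss(0, 1) for i in range(n)]
# "Non-tracking" brain signal: noise unrelated to the speech rhythm
non_tracking_brain = [rng.gauss(0, 1) for _ in range(n)]

c_track = coherence_at(speech, tracking_brain, 4, fs, seg_len=200)
c_none = coherence_at(speech, non_tracking_brain, 4, fs, seg_len=200)
print(round(c_track, 2), round(c_none, 2))  # high vs. low coupling at 4 Hz
```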

park et al. - auditory speech entrainment modulated by intelligibility

MEG with 248 magnetometers (4-D Neuroimaging)

22 healthy, right-handed subjects (11 females; 19-44 years old)

Natural stimulus: 7-minute continuous real-life story (auditory speech)

participants heard two different conditions

normal speech and reversed speech

the rhythmic components are the same in both conditions

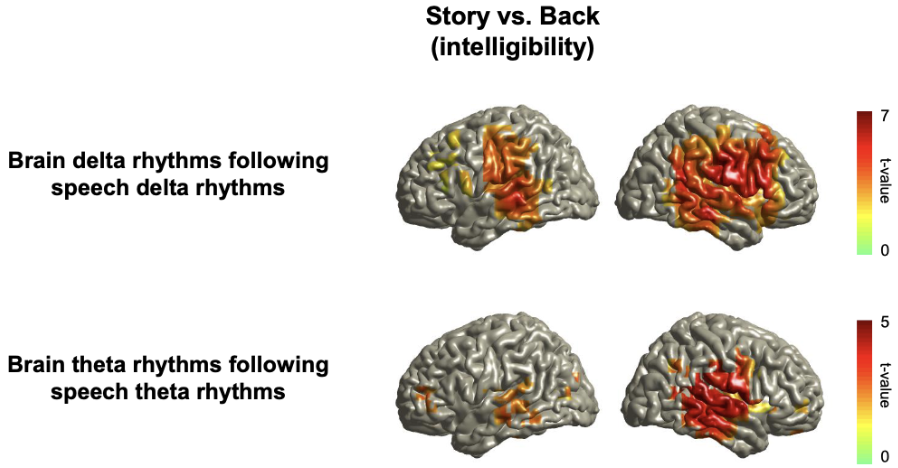

park et al. - low-frequency oscillations in the auditory cortex track intelligible speech

Low-frequency brain oscillations (delta and theta rhythms) in auditory cortex track only intelligible speech (Story condition), not backward-played speech (Back condition)

Story: Standard Continuous Speech

Back: Backward-played Story

LAC: Left Auditory Cortex

RAC: Right Auditory Cortex

y-axis: coupling between the speech rhythm and the brain rhythm

normal speech: brain rhythms in bilateral auditory cortex follow the speech

reversed speech: brain rhythms do not follow the speech

magnitude of coupling is almost 0

this is notable because both conditions have the same frequency components (rhythms), yet brain rhythms follow only the standard speech

gross et al. (2013) - low-frequency speech tracking

LF (delta and theta) speech tracking is more right-lateralised

and extends beyond the auditory cortex

gross et al. (2013) - high-frequency speech tracking

HF (gamma) speech tracking is more left-lateralised
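Statements like "more right-lateralised" are often quantified with a lateralisation index. The sketch below uses (R - L) / (R + L), so positive values mean right-lateralised; this sign convention is an assumption (many studies use (L - R) / (L + R) instead), and the tracking values are hypothetical, not data from Gross et al. (2013).

```python
def lateralisation_index(left, right):
    """(R - L) / (R + L): +1 fully right-lateralised, -1 fully left-lateralised, 0 symmetric."""
    total = left + right
    if total == 0:
        raise ValueError("left + right must be non-zero")
    return (right - left) / total


# Hypothetical speech-tracking strengths (arbitrary units) in left/right auditory areas
low_freq = lateralisation_index(left=0.2, right=0.6)   # > 0: right-lateralised
high_freq = lateralisation_index(left=0.6, right=0.2)  # < 0: left-lateralised
print(round(low_freq, 2), round(high_freq, 2))  # 0.5 -0.5
```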

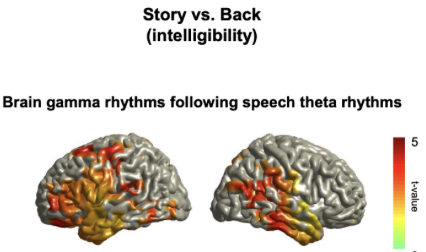

Poeppel (2003) - functional asymmetry of the auditory system in speech processing

The Asymmetric Sampling in Time theory (AST; Poeppel, 2003) proposes that auditory cortices preferentially sample at rates tuned to fundamental speech units.

The left auditory cortex would preferentially integrate auditory signals into ∼ 20–50 ms segments, which correspond roughly to phoneme length.

The right auditory cortex, by contrast, would preferentially integrate over ∼ 100–300 ms and thus optimise sensitivity to slower acoustic modulations, e.g., voice and musical instrument periodicity, speech prosody, and musical rhythms.
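Converting the AST integration windows to rates (rate = 1 / window duration) shows how they line up with the oscillation bands discussed earlier:

```latex
\frac{1}{20\ \text{ms}} = 50\ \text{Hz}, \quad \frac{1}{50\ \text{ms}} = 20\ \text{Hz}
\qquad \text{(left: } \sim 20\text{--}50\ \text{Hz)}

\frac{1}{100\ \text{ms}} = 10\ \text{Hz}, \quad \frac{1}{300\ \text{ms}} \approx 3.3\ \text{Hz}
\qquad \text{(right: } \sim 3\text{--}10\ \text{Hz)}
```

The left-hemisphere windows therefore correspond to faster, roughly gamma-range rates, and the right-hemisphere windows to slower, roughly theta-range rates.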

Basnakova et al. (2015) - face-saving indirectness

public face (being respected) is critical

humans are a highly social species

public face determines whether people trust you and select you for collaboration, and it shapes social hierarchies

Basnakova et al. (2015) - brain areas activated for the indirectness effect

relative to direct replies, face-saving indirect replies increased activation in the:

medial prefrontal cortex

bilateral temporoparietal junction

bilateral inferior frontal gyrus

bilateral middle temporal gyrus

this indicates that understanding face-saving indirect language requires additional cognitive perspective-taking and other discourse-relevant cognitive processing

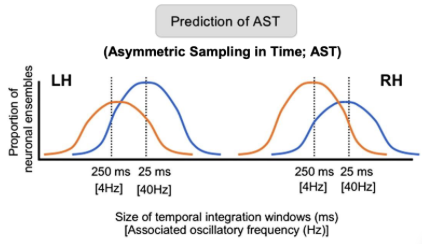

stolk et al. (2016) - communication as a joint action

requires mutual understanding: when different minds mutually infer they agree on an understanding of an object, person, place, event, or idea

requires contextual information in social settings

stolk et al. (2016) - experimentally controlled communication

Production and comprehension of novel communicative behaviours are supported by a right-lateralised fronto-temporal network (MEG study: Stolk et al. 2013)

These areas are known to be necessary for pragmatics and mental state inferences, embedding utterances in conversational context (see earlier: Basnakova et al. 2015)

joint action tested experimentally

vmPFC: ventromedial prefrontal cortex

pSTS: posterior superior temporal sulcus

TL: temporal lobe

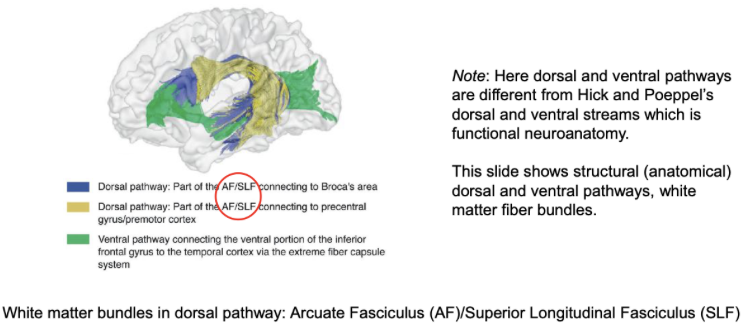

Friederici (2015) - language network - dorsal and ventral pathways

Skeide, Brauer & Friederici (2016) - dorsal pathway is crucial for the processing of complex sentences

the dorsal pathway is associated with more accurate responses and faster response times

but this pattern was not found in ventral pathway

dorsal pathway is important for complex sentences

developmentally: tracking white matter development shows that the dorsal pathway becomes more dominant

Dronkers et al. (2007) - white matter - overview

Dronkers et al. (2007) reported the findings from MRI scans of the preserved brains of Paul Broca’s two patients (Leborgne and Lelong).

There were two important observations.

The white matter tracts seen so clearly in the right hemisphere are absent in the left.

White matter plays a crucial role in complex cognitive functions like speech and language because these activities involve various brain regions with extensive connections facilitated by white matter bundles.

Dronkers et al. (2007) - white matter - summary

In summary, the results show differences between the area Broca originally identified and what is currently referred to as Broca's area.

This discovery has important implications for both lesion studies and functional neuroimaging studies of this widely recognised brain region.

Brain activity involved in complex, higher cognitive functions such as speech and language is widely distributed across brain areas linked by extensive white matter connections.

Dronkers et al. (2007) - white matter - first observation

Firstly, the lesions in Broca’s original patients did not encompass the area we now refer to as Broca’s area.

Both patients’ lesions extended significantly into the medial regions of the brain, in addition to the surface lesions observed by Broca.

For example, Leborgne’s primary lesion was more anterior, and Lelong’s lesion only involved the posterior portion of the pars opercularis.

Dronkers et al. (2007) - white matter - second observation

Secondly, both also had lesions in an important white matter pathway, the arcuate/superior longitudinal fasciculus, the long fibre tract that connects posterior and anterior brain areas.

needing only the left hemisphere for language is a myth

While some aspects of language processing, like syntactic comprehension and production, are left-lateralised, many core language processes involve both hemispheres.

In young adults, several core language processes actually rely on right-hemisphere regions.

For instance, sentence-level semantics engages a bilateral network, including the left and right posterior temporal gyrus, which is shared for comprehension and production.

Similarly, phonological processing involves both the left and right middle/superior temporal gyrus.

needing only the left hemisphere for language is a myth - ageing

As individuals age, language processing tends to become more bilateral, even for functions that are left-lateralised in young adults.

Activation often appears more bilateral in older adults, possibly as a compensation for structural changes

The observed functional changes are often strongest in the hemisphere that is non-dominant for the specific cognitive function, such as the right hemisphere in language processing.

Many language processes essential for communication in a social context rely on right-hemisphere contributions.

Affective language processing, including understanding nuances like face-saving indirectness, relies on a right-lateralised network.

Additionally, the act of communication as a joint action also engages a right-lateralised fronto-temporal network.