thinking and decision making


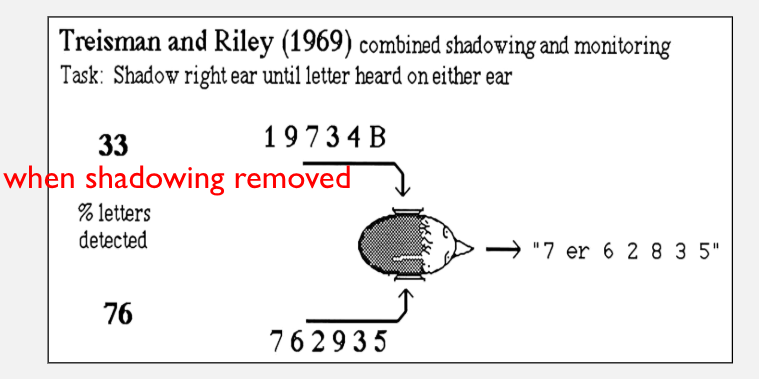

early selection an option but not a structural bottleneck

monitoring for a target word (animal name)

left ear: plate cloth day fog tent risk bear

right ear: hat king pig groan loaf cope lint

After practice, people can detect targets equally well whether they have to monitor one or both ears. This suggests early selection (filtering information at an early stage of processing) is flexible and not necessarily a bottleneck

unless selective understanding or repetition of one message is required, e.g.:

when selective repetition (shadowing) is required, attentional resources are limited, making detection of other stimuli harder

conclusion

attention can be flexible, allowing for parallel processing when tasks are simple

selective attention becomes a bottleneck when cognitive resources are heavily engaged (e.g. shadowing a message)

supports theories like Treisman's attenuation model (1964), which suggests unattended information is processed at a weaker level rather than completely blocked

thinking, decision making, reasoning and creativity/introspection

from a psychological perspective, ‘thinking’ includes

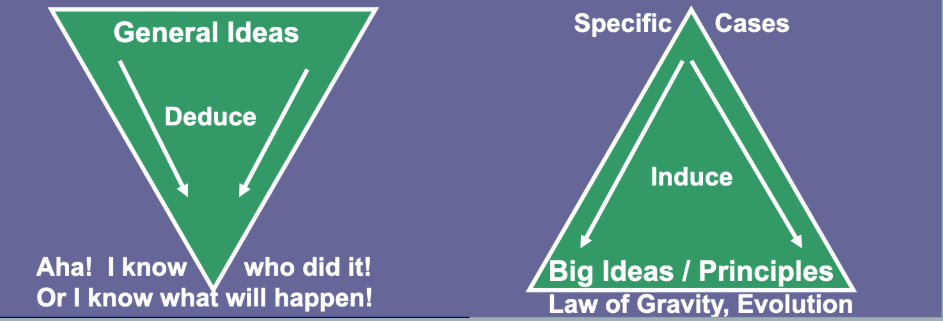

inductive reasoning (predicting the future from past data, making judgements)

statistical generalisations, probabilistic judgements, predictions

hypothesis-testing, rule induction

deductive reasoning

solving logical or mathematical problems that have right answers (a conclusion based on reasoning and evidence)

problem-solving

working out how to get from state A to state B (numerous solutions, varying degrees of constraint)

judgement and decision-making: choosing among options

creative thinking, daydreaming, imagining, ideation

research on thinking

has focused primarily on cases where:

there is a right answer, and/or

a way of evaluating the rationality of the answer, and/or

a way of assessing the efficiency with which one gets there

as well as asking

how do people think (what are the processes and representations)

there is a strong focus on human imperfection

why are people apparently irrational in their thinking?

what limits the efficiency of thinking? (relative to an ideal thinker)

general dual process theory of reasoning, problem solving and decision making

we use two kinds of process:

‘system 1’ - intuitive, automatic, largely unconscious, quick-and-dirty and approximate, but domain-specific: procedures, schemas, rules of thumb or heuristics

for example, recognising a friend in a crowd

‘system 2’ - a slow, sequential, effortful, but rational, logical and conscious reasoning system. It allocates attention to effortful mental activities that demand it, and is constrained by limited working-memory capacity and other basic limitations of our cognitive machinery

for example, solving a maths problem

how do the two systems work in relation to one another?

because using system 2 is effortful, it can get depleted: self-control and cognitive effort are both forms of mental work

if people are cognitively busy, system 1 has more influence on behaviour and people are more likely to give in to temptation (make selfish choices, make superficial judgements in social situations)

deductive and inductive reasoning

inductive reasoning and problem solving: illustrate how basic properties of the cognitive machinery (a) limit our ability to use optimal reasoning strategies and (b) introduce biases

difficulty attending to relevant information, when there is other salient (but irrelevant) information available

limited working memory capacity

properties of retrieval from long term memory

difficulties in shifting mental ‘set’ or perspective

deductive reasoning

logical reasoning with quantifiers (‘some’, ‘not’, ‘all’): the idea that we reason by imagining concrete examples - mental models - rather than by using abstract and general logical operations. Illustrates the impact of WM limits.

Wason’s 4 card test of deductive reasoning with ‘if-then’ propositions: explanation of effects on performance of the content of the problem, and of the nature of characteristic errors, in terms of domain-specific heuristics

crime mysteries

Sherlock Holmes is often associated with deductive reasoning, but in reality, he primarily uses a combination of inductive and deductive reasoning, along with abductive reasoning (forming the best possible explanation from incomplete evidence)

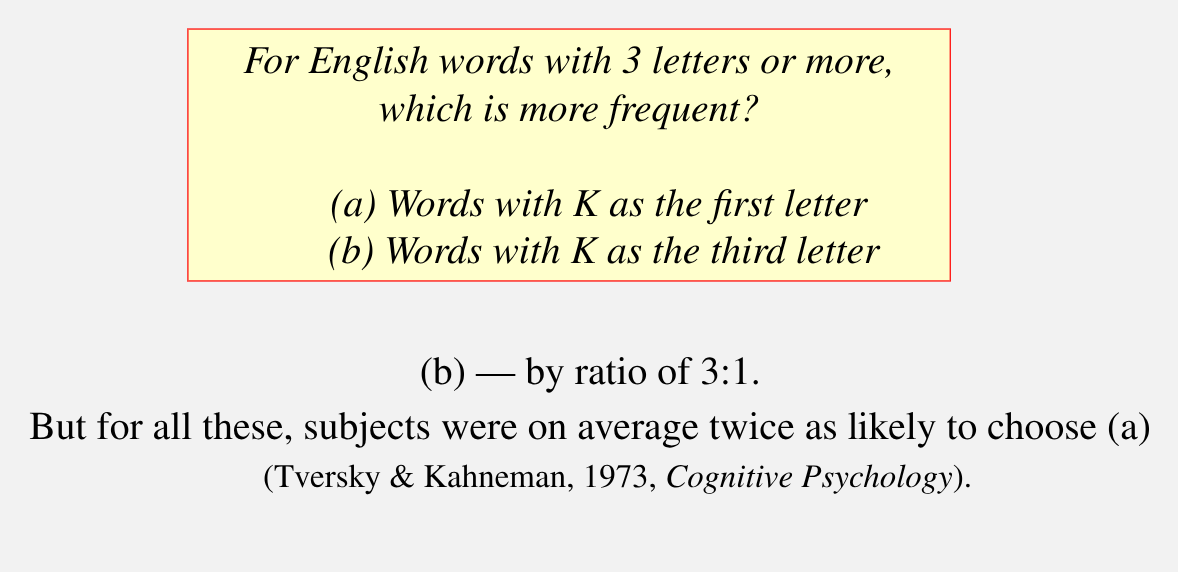

judgements of probability/frequency

some facts about frequency are told to us, or can be looked up:

for example, the lifetime morbid risk of schizophrenia is about 0.7%

but many judgements of probability/frequency we make are based on experience, for example:

will it rain today?

if I get a train to Paddington, how likely is it to arrive less than 10 minutes late?

(for a psychiatrist) how likely is this patient to attempt suicide?

an example

availability in memory

the availability heuristic: people tend to judge events or objects as more probable or frequent if they can easily recall examples from memory or their environment (Tversky and Kahneman, 1973)

this works because it is generally easier to retrieve from memory examples of events/objects that are more frequent

unfortunately retrievability is also determined by other factors:

recency

salience

similarity to the current case

we tend to overestimate the probability of events when we can easily recall examples - especially if they are recent, personally significant, or similar - the availability bias

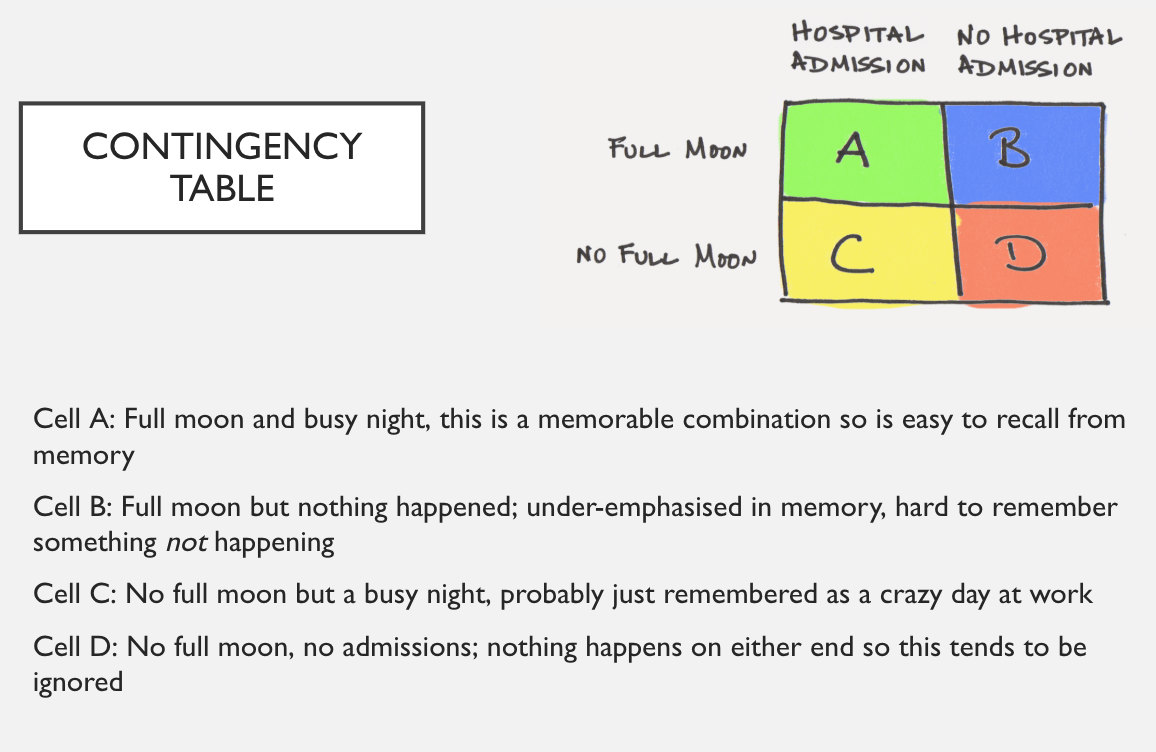

illusory correlation

people perceive a pattern or causal relationship between events, even when they are only coincidental or not related at all. This leads to false correlations or mistaken beliefs about cause and effect.

for example, superstitions. In the middle ages a full moon was associated with disease or the occurrence of werewolves; even in 2005, a study showed that 7 out of 10 nurses still thought a full moon leads to more chaos with patients

this can cause prejudice - for example, seeing someone of a particular racial background being arrested makes you assume all people of that background are more likely to be associated with crime

illusory correlation - another example

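imagine a doctor investigating a disease (D) that presents with a certain symptom (S), where S also occurs in other diseases

the doctor finds that 80% of patients with S have D and concludes that S is a good predictor of D

wrong! why? the doctor must compare the frequency of D when S is present with its frequency when S is absent

if 40 of 50 patients without S also have D, that is 80% as well, so S does not predict D at all, even if twice as many patients with S have D in absolute numbers. Attending only to the confirming cases (S and D together) creates the illusory correlation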
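The comparison the doctor should make can be sketched as a small calculation. The 40-out-of-50 figure comes from the notes; the count of 80 out of 100 patients with the symptom is an assumption chosen only to match the 80% figure, and the function name is illustrative.

```python
def p_disease(with_disease: int, total: int) -> float:
    """Proportion of patients in a group who have the disease."""
    return with_disease / total

# From the notes: 40 of 50 patients WITHOUT the symptom have the disease.
p_d_given_no_s = p_disease(40, 50)

# Assumed for illustration: 80 of 100 patients WITH the symptom have the disease.
p_d_given_s = p_disease(80, 100)

# Difference between the two conditional proportions.
# A value of 0 means the symptom carries no information about the disease.
delta_p = p_d_given_s - p_d_given_no_s

print(f"P(D | S)     = {p_d_given_s:.2f}")
print(f"P(D | not S) = {p_d_given_no_s:.2f}")
print(f"difference   = {delta_p:.2f}")
```

Under these numbers both proportions come out the same, so the symptom has no predictive value even though more patients with the symptom have the disease in absolute terms.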

neglect of base rate and representativeness bias

we often overlook the overall frequency of an event (base rates) when making judgements. Instead, we focus on specific case details rather than statistical reality.

If something resembles a typical example of category X, we assume it has all the characteristics of X. This can lead to biases, causing us to ignore additional relevant information.

We rely on stereotypes and prototypes rather than base-rate data, leading to misjudgements in probability and classification

representativeness bias and sequential events

which is more likely in a series of coin tosses?

1: HHHHHHHHH

2: HTHTHTTTH

neither! they are equally likely

what's the probability of a head on the next toss in each case?

the same! the coin (or roulette wheel) has no memory. expecting the coin to somehow redress any non-representativeness of the previous sequence is the gambler's fallacy

we have difficulty ignoring the representativeness (or unusualness) of the sequence and focusing on what we know about the probabilities of individual events
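A short calculation makes the point concrete. This is a minimal sketch assuming a fair coin and independent tosses; the function name is illustrative.

```python
from fractions import Fraction

def sequence_probability(seq: str, p_heads: Fraction = Fraction(1, 2)) -> Fraction:
    """Probability of one exact sequence of independent tosses."""
    prob = Fraction(1)
    for toss in seq:
        prob *= p_heads if toss == "H" else 1 - p_heads
    return prob

streak = "HHHHHHHHH"   # looks "unrepresentative" of a random coin
mixed = "HTHTHTTTH"    # looks "typically random"

print(sequence_probability(streak))  # 1/512
print(sequence_probability(mixed))   # 1/512

# Chance of a head on the next toss, given each sequence so far:
# P(sequence then H) / P(sequence), which is 1/2 in both cases.
for seq in (streak, mixed):
    print(sequence_probability(seq + "H") / sequence_probability(seq))
```

Any specific sequence of nine fair tosses has probability (1/2)^9, so how "random" the sequence looks plays no part in the calculation.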

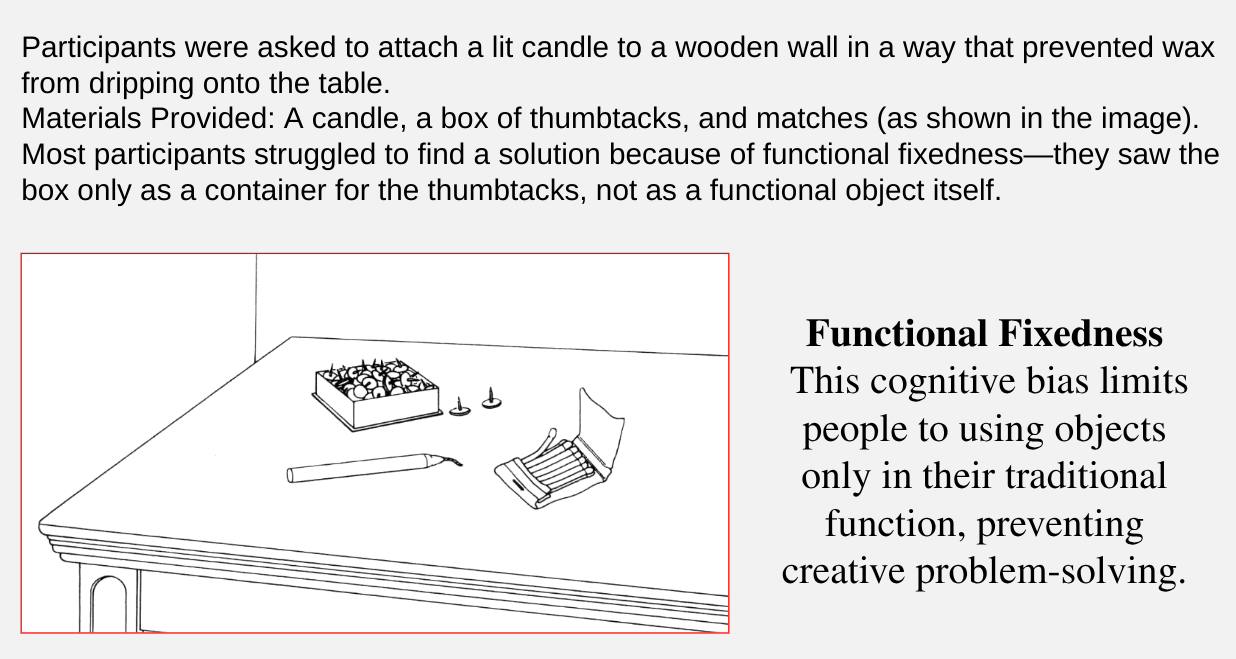

‘functional fixedness’ in problem-solving

problem-solving

problem solving research studies situations where there is a start state and a goal state, and you have to get to the goal as quickly as possible, using a set of available operators, and subject to certain constraints

missionaries and cannibals

Luchins' water-jug problems

Tower of Hanoi

1. Missionaries and Cannibals Problem

A classic logic puzzle involving moving missionaries and cannibals across a river using a boat.

Rules:

The boat can only carry two people at a time.

If at any point cannibals outnumber missionaries on either side, the missionaries get eaten.

Challenge:

Requires planning ahead, avoiding dead-end states.

Demonstrates means-end analysis and problem representation.
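The problem representation can be made explicit as a set of states and legal moves. The sketch below is an exhaustive breadth-first search, not a model of how people use means-end analysis; it assumes the classic version with three missionaries and three cannibals (the notes do not give the numbers), and the state encoding is an illustrative choice.

```python
from collections import deque

TOTAL = 3  # assumed: three missionaries and three cannibals
BOAT_LOADS = [(1, 0), (2, 0), (0, 1), (0, 2), (1, 1)]  # (missionaries, cannibals) in the boat

def is_safe(m_left: int, c_left: int) -> bool:
    """Legal state: counts in range and missionaries never outnumbered on a bank."""
    if not (0 <= m_left <= TOTAL and 0 <= c_left <= TOTAL):
        return False
    m_right, c_right = TOTAL - m_left, TOTAL - c_left
    left_ok = m_left == 0 or m_left >= c_left
    right_ok = m_right == 0 or m_right >= c_right
    return left_ok and right_ok

def solve():
    """Breadth-first search from the start state to the goal state."""
    start, goal = (TOTAL, TOTAL, True), (0, 0, False)  # (m_left, c_left, boat_on_left)
    parents = {start: None}
    queue = deque([start])
    while queue:
        state = queue.popleft()
        if state == goal:
            path = []
            while state is not None:
                path.append(state)
                state = parents[state]
            return path[::-1]
        m, c, boat_left = state
        sign = -1 if boat_left else 1  # the boat takes people away from its current bank
        for dm, dc in BOAT_LOADS:
            nxt = (m + sign * dm, c + sign * dc, not boat_left)
            if nxt not in parents and is_safe(nxt[0], nxt[1]):
                parents[nxt] = state
                queue.append(nxt)
    return None

path = solve()
print("crossings needed:", len(path) - 1)
for state in path:
    print(state)
```

Because breadth-first search visits states in order of distance from the start, the first path that reaches the goal is a shortest one. Some of its steps move people back to the starting bank, which is the kind of move that feels like going backwards and makes the puzzle hard for people.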

2. Luchins’ Water-Jug Problems (1942)

A mental set experiment that shows how past experiences affect problem-solving.

Participants are given three jugs of different sizes and must measure a specific amount of water.

Findings:

When people learn a formulaic way to solve early problems, they continue using it even when a simpler solution exists.

Demonstrates fixation and rigidity in problem-solving.
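The mental set effect can be illustrated with two problems in the style of the original task. The jug sizes below are commonly quoted examples and are an assumption here (the notes do not list them); the function names are illustrative.

```python
def practised_formula(a: int, b: int, c: int) -> int:
    """Amount left in jug B after pouring off A once and C twice (B - A - 2C)."""
    return b - a - 2 * c

def direct_routes(a: int, c: int, target: int) -> list[str]:
    """Two-jug shortcuts that reach the target without using jug B."""
    candidates = {"A - C": a - c, "A + C": a + c}
    return [name for name, amount in candidates.items() if amount == target]

# (A, B, C, target): illustrative values, not taken from the notes.
training_problem = (21, 127, 3, 100)
critical_problem = (23, 49, 3, 20)

for a, b, c, target in (training_problem, critical_problem):
    formula_works = practised_formula(a, b, c) == target
    print((a, b, c, target), "| formula works:", formula_works,
          "| shortcuts:", direct_routes(a, c, target))
```

In the second problem the practised three-jug routine still gives the right amount, which is why people keep using it, but a single pour (A - C) would do.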

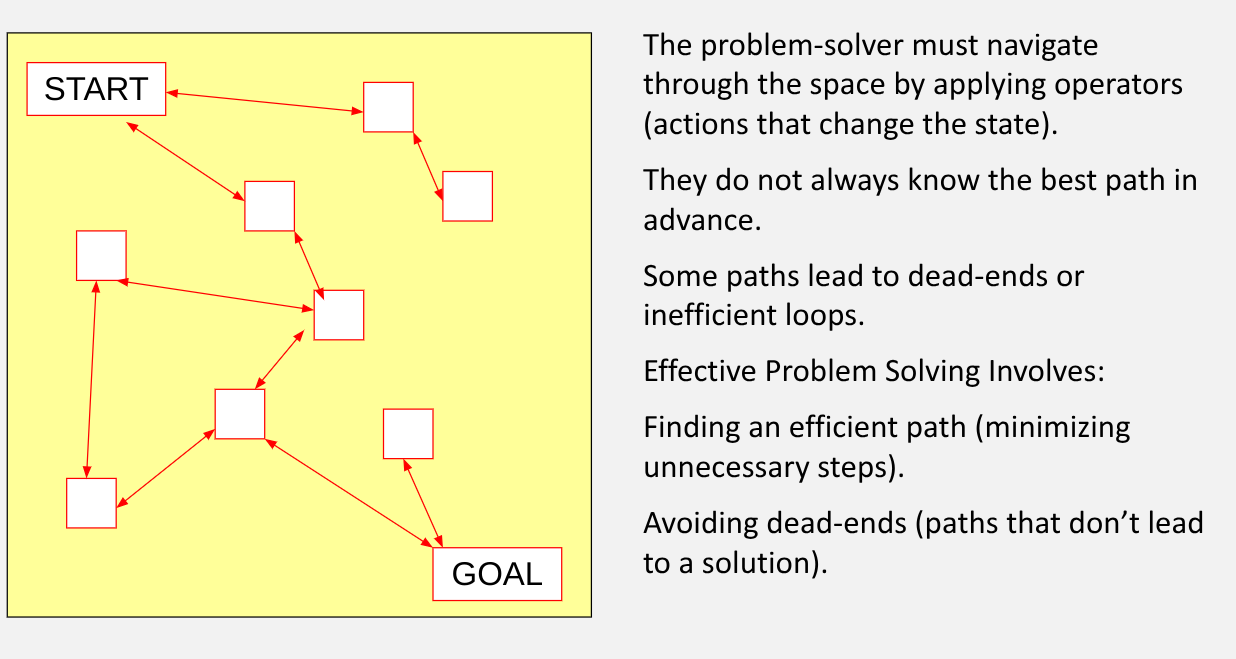

the ‘problem space’ - Newell and Simon, 1972

working memory capacity limits and heuristics in problem solving

in theory, given unlimited time and cognitive resources, we could systematically evaluate every possible move and choose the shortest path to a solution

however, our working memory is limited, so we must rely on strategies to simplify problem solving:

recognising patterns and recalling previously successful moves from long term memory

searching incrementally, moving step by step from the initial state towards the goal using heuristics like:

means-end analysis: identifying the overall goal, then creating sub-goals to achieve it

avoiding redundant moves wherever possible

means-end analysis in action: if a required step is unavailable, we create a sub-goal to make it possible

this continues until we find an action that leads to progress (eg getting locked out of a flat - what steps would you take to regain entry?)

this process relies on maintaining a ‘goal-stack’ in working memory, keeping track of unfinished sub goals
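The goal-stack idea can be sketched with the locked-out-of-a-flat example. The goals, actions and preconditions below are hypothetical, invented only to illustrate the mechanism; the peak stack size stands in for the working-memory load.

```python
# Hypothetical operators: goal -> (action that achieves it, precondition or None).
OPERATORS = {
    "inside flat": ("open the door", "door unlocked"),
    "door unlocked": ("unlock with spare key", "have spare key"),
    "have spare key": ("collect key from neighbour", "neighbour contacted"),
    "neighbour contacted": ("phone the neighbour", None),
}

def means_end_plan(goal: str, known_facts=()):
    """Return (ordered actions, peak number of goals held on the stack)."""
    achieved = set(known_facts)
    stack = [goal]   # unfinished goals; the top of the stack is the current sub-goal
    plan = []
    peak = 0
    while stack:
        peak = max(peak, len(stack))
        current = stack[-1]
        if current in achieved:
            stack.pop()
            continue
        action, precondition = OPERATORS[current]
        if precondition is not None and precondition not in achieved:
            stack.append(precondition)   # required step unavailable: create a sub-goal
        else:
            plan.append(action)          # step available: act, then resume the parent goal
            achieved.add(current)
            stack.pop()
    return plan, peak

plan, peak = means_end_plan("inside flat")
print(plan)
print("most goals held at once:", peak)
```

The deeper the chain of unmet preconditions, the more unfinished goals must be held at once, which is where limited working memory starts to matter.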

design ‘limitations’ intrinsic to the cognitive machinery

‘design limitations’ in cognitive capacities (properties of memory retrieval, limited working memory, difficulty in attending to relevant info, difficulty in shifting cognitive ‘set’ and the general effortfulness of sequential reasoning) can:

lead to reliance on heuristics (approximate rules of thumb) and

result in intrinsic biases when we apply these heuristics

mental models and syllogistic reasoning

mental model: suppose we have

artist A who is a beekeeper

Beekeeper B who is a violinist

But artist A and beekeeper B could be different people - there is no necessary connection between artists and violinists

some artists are beekeepers

some beekeepers are violinists

tempting (but invalid) conclusion: some artists are violinists

Mental Model Theory (Johnson-Laird et al): instead of using formal logic, people construct mental models to visualise relationships between premises. Working memory limitations prevent them from maintaining multiple possibilities.
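The artists/beekeepers/violinists syllogism can be checked by enumerating small "worlds", loosely analogous to building alternative mental models. This is a rough sketch and not Johnson-Laird's actual model; the representation of a person as a set of properties is an assumption made for illustration.

```python
from itertools import product

PROPS = ("artist", "beekeeper", "violinist")

def some(world, p: str, q: str) -> bool:
    """'Some p are q': at least one individual has both properties."""
    return any(p in person and q in person for person in world)

def find_counterexample(n_people: int):
    """Search for a world where both premises hold but the conclusion fails."""
    kinds = [frozenset(p for p, keep in zip(PROPS, bits) if keep)
             for bits in product([False, True], repeat=len(PROPS))]
    for world in product(kinds, repeat=n_people):
        premises = (some(world, "artist", "beekeeper")
                    and some(world, "beekeeper", "violinist"))
        conclusion = some(world, "artist", "violinist")
        if premises and not conclusion:
            return world
    return None

print(find_counterexample(1))  # None: with a single individual the conclusion looks forced
print(find_counterexample(2))  # a two-person world that refutes the conclusion
```

With only one individual in the model the conclusion always holds, which mirrors the error of stopping at the first model. Holding a second individual in mind (artist A and beekeeper B as different people) is what exposes the invalid inference.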

trouble with if-then (‘conditional propositions’)

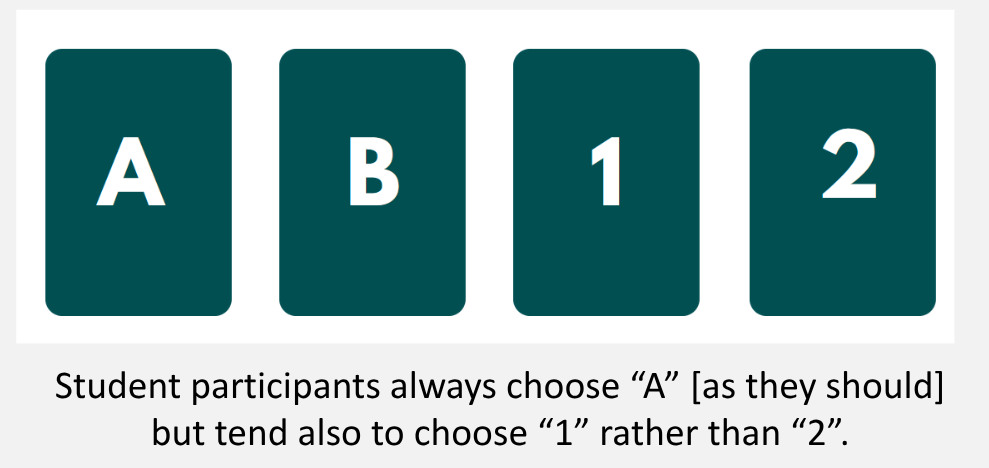

Wason's 4 card problem

if a card has a vowel on one side, then it has an odd number on the other side

why are the ‘A’ and ‘2’ cards the right answer?

If P (vowel) then Q (odd)

which cards need to be checked?

the rule is violated if P occurs, but NOT-Q follows → (vowel and even number)

this means we should check:

‘A’ (P- a vowel) → to see if it follows the rule and has an odd number

‘2’ (NOT-Q - an even number) → to ensure it is not a vowel (which would break the rule)

which cards are irrelevant?

‘B’ - consonant → the rule says nothing about consonants, so this is irrelevant

‘1’ - odd number → the rule states - if vowel, then odd, but it does not say if odd, then vowel - so we don’t need to check this
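The same logic can be checked by brute force: a card needs turning only if some possible hidden side would break the rule. This sketch assumes each card has a letter on one side and a number on the other, and uses just the four faces from the problem as the possible hidden values.

```python
LETTERS = ["A", "B"]   # possible hidden letters (assumed: one vowel, one consonant)
NUMBERS = ["1", "2"]   # possible hidden numbers (assumed: one odd, one even)

def violates(letter: str, number: str) -> bool:
    """The rule 'if vowel then odd' is broken only by a vowel paired with an even number."""
    return letter in "AEIOU" and int(number) % 2 == 0

def must_turn(visible: str) -> bool:
    """A card is worth turning if any possible hidden side could reveal a violation."""
    if visible.isalpha():
        return any(violates(visible, number) for number in NUMBERS)
    return any(violates(letter, visible) for letter in LETTERS)

cards = ["A", "B", "1", "2"]
print([card for card in cards if must_turn(card)])  # ['A', '2']
```

The ‘1’ card is never selected because no letter on its back could produce a vowel-with-even-number pair, even though it is the card people most often pick alongside ‘A’.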

so people are just illogical?

changing the content and context of these problems without changing the formal structure can lead to dramatic improvements in performance (Johnson-Laird, 1972)

e.g. Griggs and Cox (1982): imagine you are a police officer observing drinkers in a bar: which two of the following people do you need to check (their age or their drink) to detect any transgression of the rule?

if a person is drinking beer, they must be 18 years of age

drinking beer

drinking coke

22 years old

16 years old

75% correct with this version, compared with typically 10-20% in the abstract version

Cheng and Holyoak (1985) used a formally identical problem involving a form with the words ‘TRANSIT’ or ‘ENTERING’ on one side, and a list of diseases on the other. Participants had to check for observance of the rule:

‘if the form has ENTERING on one side, then the other side includes cholera among the list of diseases’

half the subjects were given a rationale for the rule (‘mere transit passengers don’t need cholera inoculation, visitors to this country do’)

the other half were told to check for tropical diseases

without a rationale, performance was poor (~60%)

with a rationale, participants performed well (~90%)

overconfidence bias

bias where people's confidence in their judgements is greater than the objective accuracy of those judgements.

Overconfidence can be expressed in three ways:

overconfidence in one's actual performance (e.g. ‘I am good at multitasking’)

overconfidence that one’s performance is better than that of others (e.g. ‘I am a better driver than most people’)

overestimating the accuracy of one’s beliefs (e.g. ‘I know I'm right about xyz’)

summary: why is thinking error prone?

‘design limitations’ in cognitive capacities (properties of memory retrieval, limited working memory, difficulty in attending to relevant info, difficulty in shifting cognitive ‘set’)

leads to reliance on heuristics (approximate rules of thumb)

results in intrinsic biases when we apply these heuristics

our habit of reasoning with concrete mental models coupled with failure to generate, or inability to represent, all possible mental models

‘capture’ of reasoning by relatively automatic domain-specific heuristics that are

adaptive in the right context but

may be inappropriate for the case at hand