lcc FINAL STUDY GUIDE


261 Terms

What are Chomsky’s 3 theories about language acquisition?

I-language (internal language)

Universal Grammar

Innatism

What is I-language?

mental representation of a person's knowledge of their language

What is universal grammar?

all humans are born with an innate set of grammatical principles shared across all languages

acts like a blueprint for language learning, explaining why children can learn complex languages quickly and uniformly, despite limited input

What is innatism?

certain ideas, knowledge, or capacities are inborn rather than acquired through experience

language ability is hardwired into the human brain

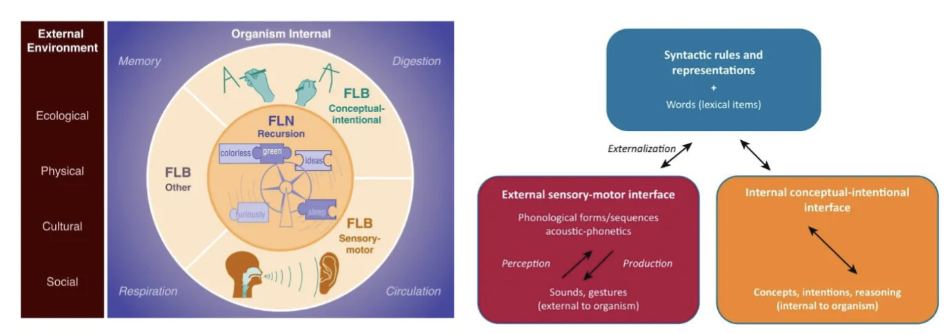

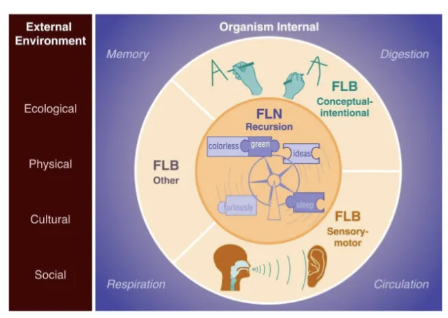

What are the two domains language is divided into?

Faculty of Language

FLB (broad-sense) → sensory-motor and conceptual systems

FLN (narrow-sense) → recursion (computation)

What is faculty of language?

the biological capacity humans have to acquire, understand, and use language

It's a term often associated with Noam Chomsky and his theories on how language is rooted in human cognition

Language is not just speech or communication — it is a cognitive system composed of:

A computational core (syntax/recursion)

Interfaces with thought and sensory-motor systems

Only humans seem to possess the full package (FLN), though parts (FLB) are present in other animals.

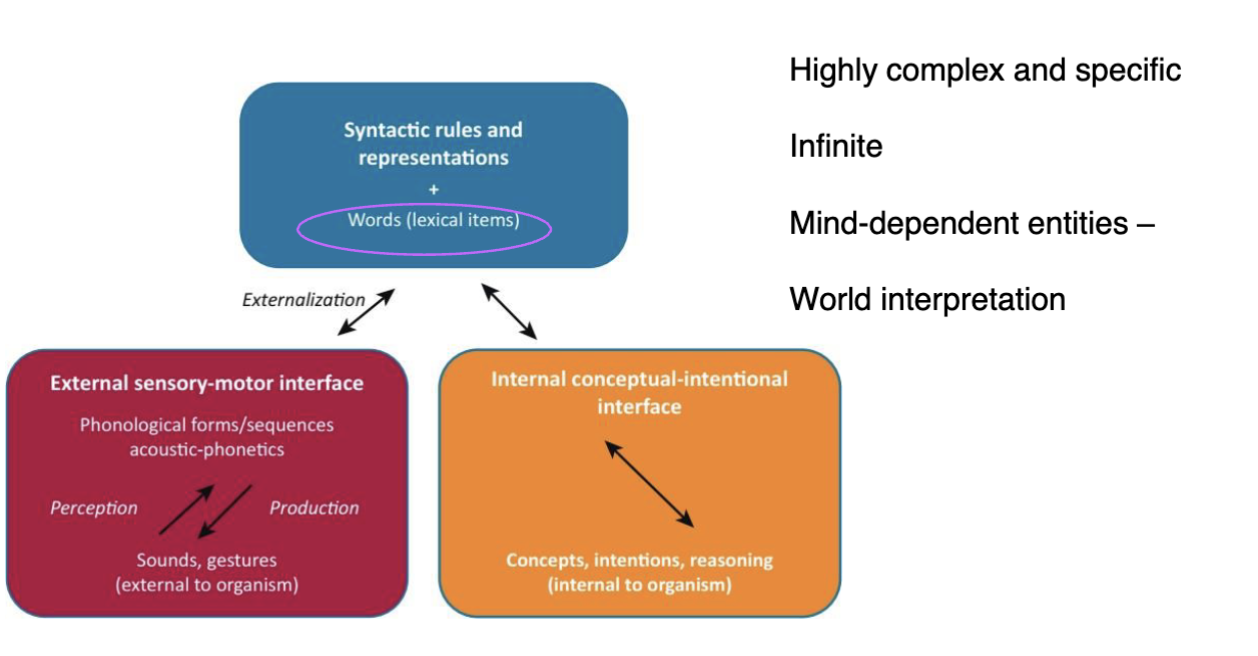

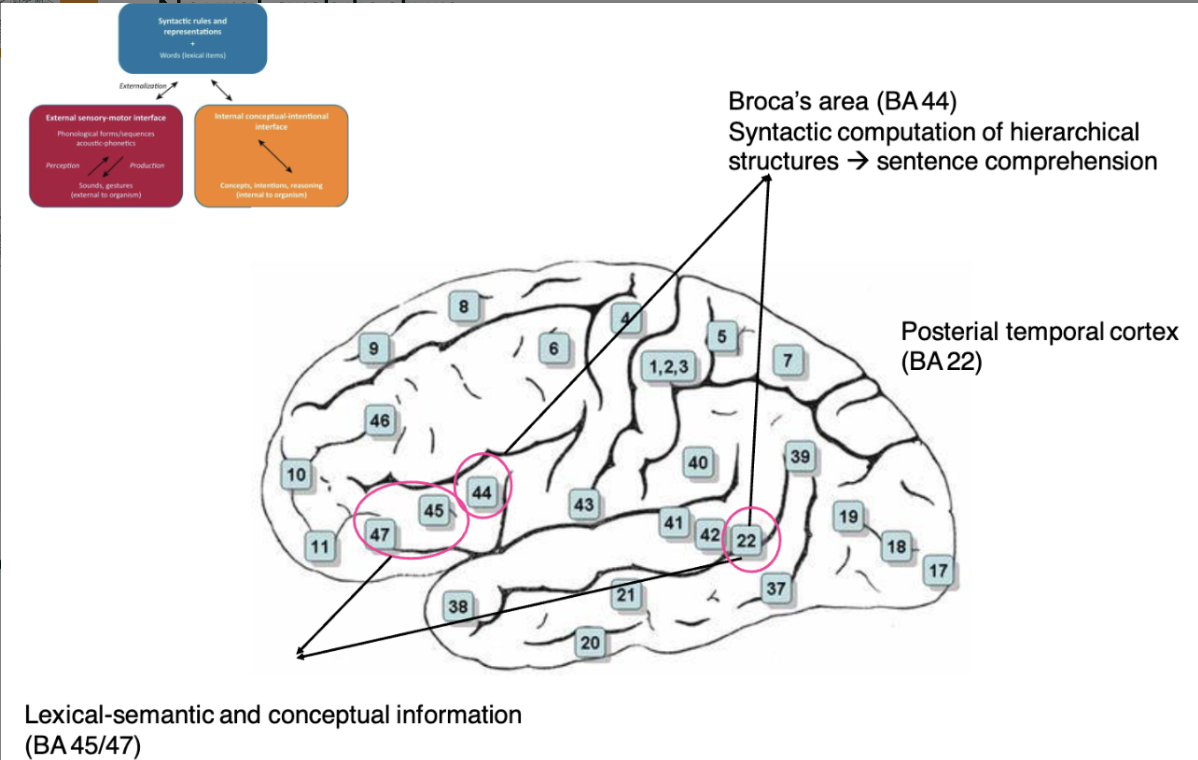

RIGHT DIAGRAM:

Syntactic Rules and Lexical Representations (Blue Box)

Internal grammar system that creates structured sentences

Combines with vocabulary (lexical items)

Internal Conceptual-Intentional Interface (Orange Box)

Deals with meaning, reasoning, and intention

Where thoughts are formulated before being put into language

External Sensory-Motor Interface (Red Box)

How language is perceived and produced (e.g., speech, sign, hearing)

Converts linguistic structures into physical signals (and vice versa)

→ Arrows show bidirectional flow: We understand language by mapping sounds/gestures back to structured meanings, and we produce language by mapping thoughts into words and sound

What is evidence for and against language being innate to humans? (3)

Pro:

poverty of stimulus (speakers know what is wrong without exposure to it)

people have a sense of what is and isn’t correct with little input and corrections

Con:

it is not falsifiable (explanations from linguists are post-hoc, after they happen)

Falsifiability means a theory can be proven wrong by evidence. A theory that's not falsifiable is not scientific in the strict Popperian sense.

Post-hoc explanations come after the fact, meaning:

They don’t predict what we should observe.

They explain things post-hoc (after they happen), making them impossible to truly test or refute

linguistic changes vs genetics

fast-changing languages incompatible with a slow-changing genetic hardwire

Languages evolve quickly — new words, grammar shifts, entire languages appear and disappear over centuries.

Genes evolve slowly — over thousands to millions of years.

This mismatch raises a challenge:

How can a genetically fixed language faculty (like FLN or Universal Grammar) account for the huge diversity and rapid evolution of languages?

Wouldn’t our genetic hardwiring lag behind?

If language were mostly hardwired, it should remain fairly stable across cultures and time.

What are the 3 hypotheses for the origins of the faculty of language Broad-sense? (FLB)

FLB is homologous to animal communication

FLB is an adaptation, and only present in humans

FLB is homologous to animals, FLN (narrow-sense) is uniquely human

What is Faculty of Language in the Broad Sense (FLB)?

Includes general cognitive abilities that aren’t specific to language but are involved in it, like memory, pattern recognition, and the ability to learn from experience.

Some animals might share parts of FLB (like communication or pattern recognition skills), but not in the same way humans do.

Includes sensory-motor systems (speech, hearing, gesture) and conceptual-intentional systems (thought, meaning, planning)

Shared with other species – but only humans combine them with recursion to create full language

What is Faculty of Language in the Narrow Sense (FLN)?

Refers to specific features unique to human language, like recursion (the ability to embed ideas within ideas) and the complex grammatical structures we can create

Core computational mechanism that builds infinite structures from finite means

What are 3 claims about language?

Language as a thought tool: The primary role of language is to express thoughts, not to communicate. Communication is secondary.

No comparison with animals: Human language is considered qualitatively different from animal communication; it's not just a more complex version.

Species studies caution: Studies in other species may reveal mechanisms (e.g., working memory, pattern recognition) also used in language—but these mechanisms are not exclusive to language.

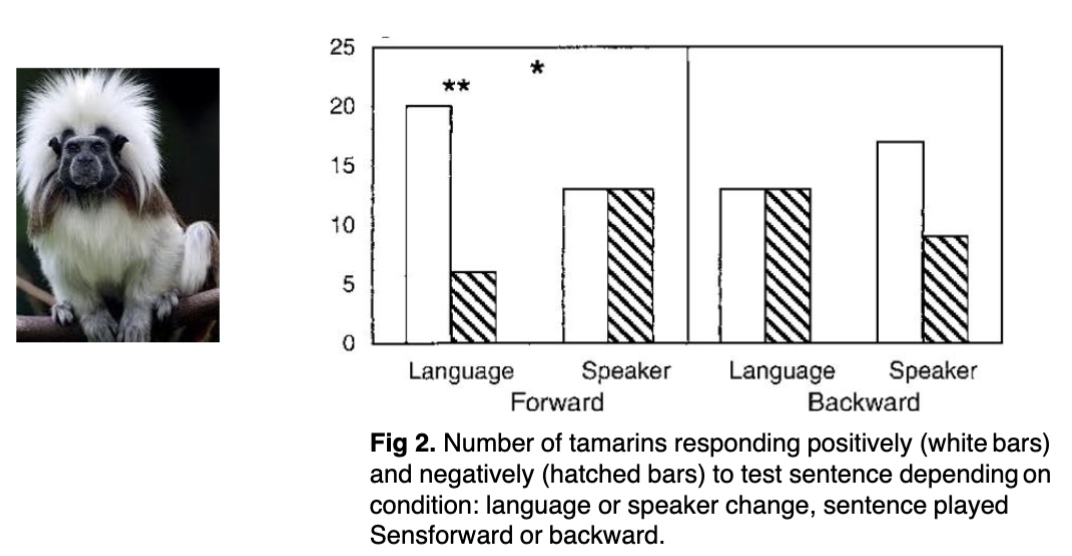

What does the speech discrimination experiment with monkeys (tamarins) show regarding Faculty of language: Sensory-motor interface?

Goal: To test whether tamarins can discriminate between languages and speakers.

Conditions:

Forward speech (normal)

Backward speech (unnatural)

Tamarins can discriminate between languages when speech is played forward (but not when it is played backward), even though they don't have full language abilities.

This implies that some sensory-motor processing mechanisms (like detecting rhythm or sound patterns) are shared across species, even if full language is not.

It supports the idea that some mechanisms used in language are not exclusive to it

What are two animal examples of Faculty of Language: Conceptual - intentional interface?

vervet monkeys use vocalizations to communicate specific meanings—though in a limited, non-linguistic way

honeybees use the waggle dance, which shows how information can be encoded and communicated without language

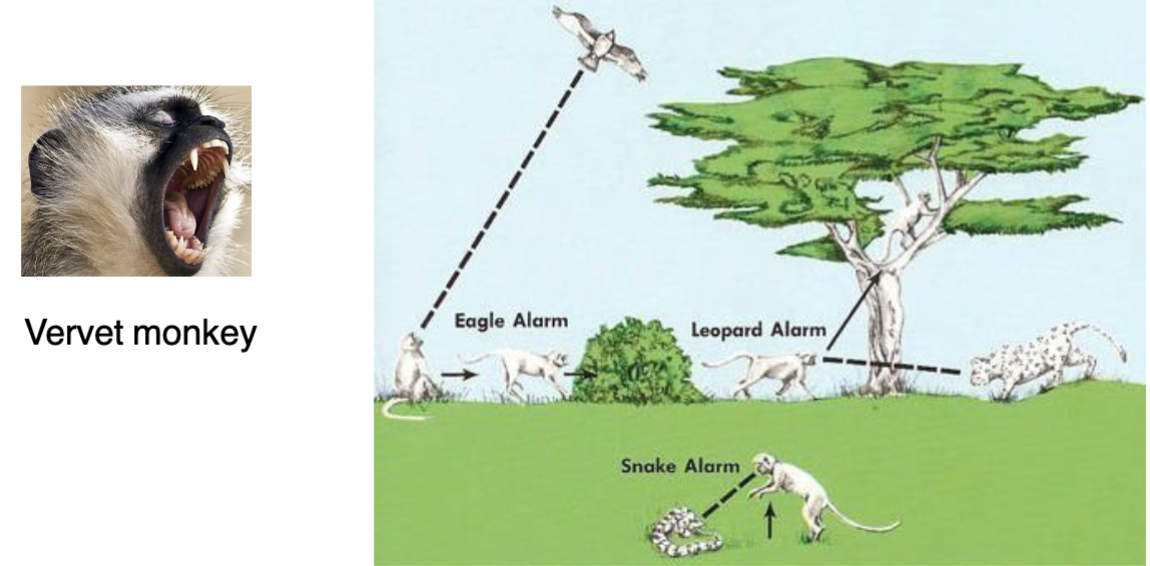

Describe how vervet monkeys are an example for Faculty of Language: Conceptual - Intentional interface

monkeys have different calls for predators → snake alarm is different from eagle alarm

issue: vocalization is limited because they lack fine muscle control over their calls, and communication is indexical (an instinctive survival response)

can only happen in the presence of what is happening

These vocalizations are instinctive and referential (they point to specific dangers), but they:

Lack syntax (no structure or combination rules)

Are not generative (you can’t build new meanings by combining them)

Do not reflect intentional communication like in humans (ex. expressing thoughts or questions)

Vervet calls show that animals may map sounds to meanings, but not in a linguistic way.

This supports the idea that full language depends on a more complex conceptual-intentional interface unique to humans

This slide demonstrates that some precursors to language (like sound-meaning associations) exist in other species. However, only humans use a flexible, abstract, and compositional system tied to internal thought and intentionality—what we call language.

What is the Conceptual - Intentional interface? → faculty of language

The cognitive side of language: where thoughts, intentions, and reasoning occur.

It connects mental concepts with the linguistic system.

In humans, this interface enables us to turn abstract thoughts into structured language.

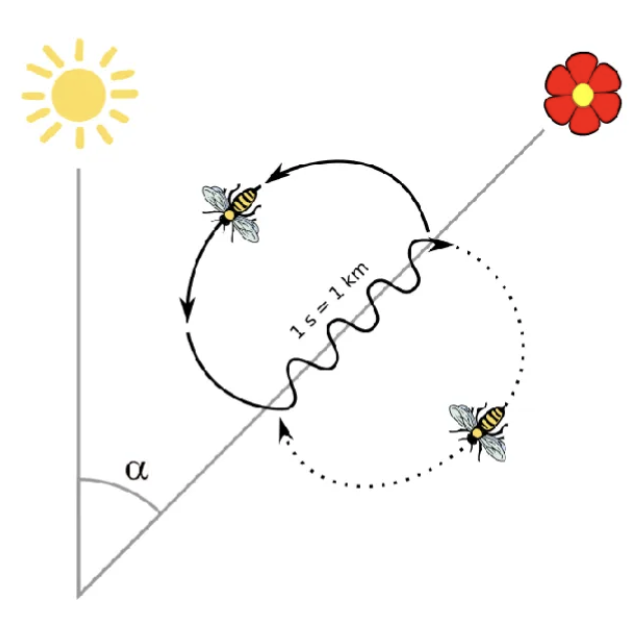

Describe how honeybees are an example for Faculty of Language: Conceptual - Intentional interface

When a forager bee finds a flower source, it returns to the hive and performs a waggle dance.

The dance communicates:

Direction of the flower (relative to the sun)

Distance (via duration of the waggle)

This is a symbolic communication system—it maps internal representations (location of food) to external signals (dance patterns).

animals can encode and convey conceptual information.

Limitations of the waggle dance:

Is rigid and limited in scope (only about food)

Doesn’t involve syntax or recursive structure

Isn’t generative (bees can’t create new messages beyond what’s hard-coded)
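The direction and distance mapping in this card can be sketched as a small encoder and decoder. This is an illustrative toy, not a model from the course: the names `Dance`, `encode`, `decode` and the calibration constant `METRES_PER_SECOND` are hypothetical, chosen only to make the mapping concrete.

```python
from dataclasses import dataclass

# Hypothetical calibration: metres of flight signalled per second of waggling.
# Real calibrations vary; this value is an assumption for illustration only.
METRES_PER_SECOND = 1000.0


@dataclass(frozen=True)
class Dance:
    angle_deg: float   # angle of the waggle run, standing in for "direction relative to the sun"
    duration_s: float  # length of the waggle run in seconds


def encode(food_bearing_deg: float, sun_azimuth_deg: float, distance_m: float) -> Dance:
    """Map a food location onto a dance: direction relative to the sun, distance via duration."""
    angle = (food_bearing_deg - sun_azimuth_deg) % 360
    return Dance(angle_deg=angle, duration_s=distance_m / METRES_PER_SECOND)


def decode(dance: Dance, sun_azimuth_deg: float) -> tuple[float, float]:
    """Recover (compass bearing, distance in metres) from a dance."""
    bearing = (dance.angle_deg + sun_azimuth_deg) % 360
    return bearing, dance.duration_s * METRES_PER_SECOND
```

Every message in this sketch has the same two fixed slots (angle and duration), which mirrors the limitations listed above: the code can report a location, but it has no way to combine signals into new kinds of messages.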

What makes language uniquely human? (3)

1. Discrete Infinite Elements (words)

Human language consists of units (ex. words) that are:

Discrete: clearly separable

Infinite in potential: can be combined endlessly

This enables humans to express an unlimited number of ideas using a finite vocabulary.

2. Syntactic Organization (Computation)

These elements are not just listed — they’re structured by rules (syntax).

The mind applies computational operations (like recursion, hierarchy) to build complex expressions.

This syntactic system is what separates language from other forms of animal communication.

3. Symbolism (Lexicon)

Language uses arbitrary symbols (words) to refer to concepts.

These are stored in the lexicon — our mental dictionary.

Symbolic reference is more flexible and abstract than fixed calls or signals seen in animals.
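The first point (a finite vocabulary yielding an unbounded number of expressions) can be made concrete with a little counting. The sketch below is only an illustration under simple assumptions: the three-word `VOCABULARY` is hypothetical, and the functions count every word sequence, grammatical or not.

```python
from itertools import product

VOCABULARY = ["bees", "dance", "monkeys"]  # hypothetical toy vocabulary


def strings_of_length(n: int) -> list[tuple[str, ...]]:
    """All word sequences of exactly n words drawn from VOCABULARY."""
    return list(product(VOCABULARY, repeat=n))


def count_up_to(max_len: int) -> int:
    """Number of distinct sequences of length 1..max_len."""
    return sum(len(VOCABULARY) ** n for n in range(1, max_len + 1))
```

With 3 words there are 9 two-word sequences and 39 sequences of up to three words, and the count keeps growing with length. Syntax (point 2) is what picks out the structured, interpretable ones from this space.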

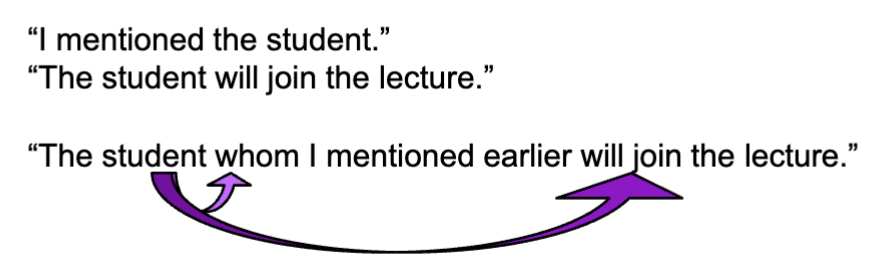

Describe recursion in the Faculty of Language (FLN) → unique human component

Recursion is the ability to embed structures within structures (ex. sentences within sentences).

It’s the computational mechanism at the heart of human syntax.

This is what makes the Faculty of Language in the Narrow Sense (FLN) distinct from broader communication systems seen in animals

Recursion is:

A defining feature of human language

What allows us to generate infinite new expressions from finite elements

Considered absent in non-human species, even those with symbolic or meaningful signals
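As a rough illustration of "structures within structures", the sketch below builds a noun phrase that contains another noun phrase of the same kind, to any depth. The function names and the example words are hypothetical; it is a toy generator, not a model of human syntax.

```python
def embed(depth: int) -> str:
    """Build a noun phrase with `depth` relative clauses nested inside it."""
    if depth == 0:
        return "the starling"
    # Recursive step: the phrase contains a smaller phrase of the same type.
    return f"the cat that chased [{embed(depth - 1)}]"


def sentence(depth: int) -> str:
    """Wrap the (possibly nested) noun phrase in a simple sentence."""
    return f"{embed(depth)} was tired."
```

For example, `sentence(0)` gives "the starling was tired." and `sentence(2)` gives "the cat that chased [the cat that chased [the starling]] was tired." One finite rule produces sentences of any length, which is the sense of "infinite new expressions from finite elements".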

Describe recursion in the honeybee waggle dance and vocalization in vervet monkeys

The bee waggle dance and vervet monkey calls lack recursion.

They convey fixed messages (e.g., food direction or predator type) but can’t combine or nest them in flexible ways.

How does the Pirahã language, spoken in the Amazon, bear on recursion?

Pirahã reportedly lacks recursion in its external language (E-language, i.e. the actual spoken language).

Culture shapes grammar — the Pirahã worldview (focus on the present, rejection of abstraction) may restrict language structure.

Raises the question: might recursion exist in the internal language (I-language, the mental system), even if it's not expressed?

Recursion (the ability to embed phrases within phrases) is seen as a core component of the Faculty of Language in the Narrow sense (FLN) and is often claimed to be unique to humans

emphasizes a key tension in linguistics:

Is recursion truly universal in human language?

Or can culture override an innate computational capacity?

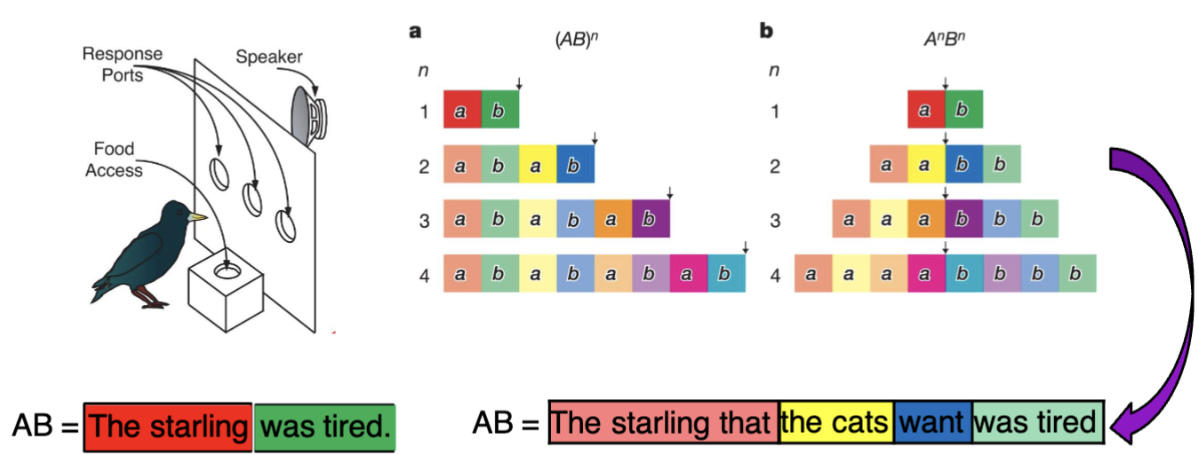

Describe how European Starlings were used to try and show recursion

Starlings were trained to discriminate between patterns of artificial sounds with different grammatical structures.

Two types of sound patterns were tested:

(AB)ⁿ (e.g., ab, abab, ababab) → simple repetition

AⁿBⁿ (e.g., aabb, aaabbb) → more complex nested patterns, suggesting recursion

Setup (left image)

Birds heard sound patterns from a speaker and had to choose the correct response port to get food.

Their choices revealed whether they could distinguish between pattern types.

Results (middle graphs)

The birds learned (AB)ⁿ patterns more easily (simpler repetition).

There was some limited success with AⁿBⁿ patterns, suggesting possible sensitivity to structured sequences.

Human Comparison (bottom examples)

AB structure = “The starling was tired.” (simple sentence)

AⁿBⁿ structure = “The starling [that the cats want] was tired.” (nested/recursive relative clause)

This human sentence involves recursive embedding, which is much more complex than AB repetition. It requires memory, hierarchy, and grammar rules—all tightly linked to syntactic recursion.

While starlings show some pattern learning, the complexity and flexibility of human recursive syntax (like in embedded clauses) still appears qualitatively different.

This supports the view that recursion, as used in human language, remains a uniquely human capacity.

*Caveat: the birds could have succeeded by counting or by detecting acoustic similarity, i.e. they could have simply learned the patterns without using recursion

![Starling pattern-discrimination experiment figure](https://knowt-user-attachments.s3.amazonaws.com/1f622346-b200-4773-a1d1-100165ad4e3c.png)
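The difference between the two pattern types can be sketched with two small recognizers. This is an illustration only, assuming lowercase strings of `a` and `b`; the function names are hypothetical and nothing here reproduces the actual experiment.

```python
import re


def is_ab_n(s: str) -> bool:
    """(AB)^n with n >= 1: a finite-state pattern, so a regular expression suffices."""
    return re.fullmatch(r"(ab)+", s) is not None


def is_a_n_b_n(s: str) -> bool:
    """A^n B^n with n >= 1: the number of a's must equal the number of b's."""
    n = len(s) // 2
    return n >= 1 and s == "a" * n + "b" * n
```

Note that `is_a_n_b_n` succeeds by counting rather than by building any nested structure. That is the same loophole as the caveat in this card: passing an AⁿBⁿ test does not by itself show recursion.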

What are 3 uniquely human components of language?

recursion

merge

lexicon

Describe merge in the Faculty of Language (FLN) → unique human component

Merge is a fundamental operation in syntax that:

Combines two elements (like words or phrases) into a new syntactic unit

Is the building block of syntactic structure

Example:

[ate] + [apple] → [ate apple]

This forms hierarchical structure, not just a linear string

Aspects:

Creates syntactic objects (like sentences or phrases)

Allows for syntactic dependencies (ex. subject-verb agreement, word order)

Supports hierarchical sentence structure

Integration with Language System

Merge operates within the syntactic module (blue box)

It interfaces with:

Conceptual-intentional system (meaning/thoughts)

Sensory-motor system (spoken/written/sign language)

![Merge diagram](https://knowt-user-attachments.s3.amazonaws.com/0b24d5ba-0cad-45a3-ad9f-bc6f693c7aca.png)
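A minimal sketch of Merge as a binary operation is given below. It rests on a simplifying assumption: the merged unit is stored as an ordered Python tuple, which is a convenience for printing and not a claim about how linguistic theory represents it. The helper names `depth` and `linearize` are hypothetical.

```python
def merge(x, y):
    """Combine two syntactic objects (words or earlier merges) into one new unit."""
    return (x, y)


def depth(obj) -> int:
    """Levels of hierarchy: 0 for a bare word, 1 + deepest part otherwise."""
    if isinstance(obj, str):
        return 0
    return 1 + max(depth(part) for part in obj)


def linearize(obj) -> str:
    """Flatten the hierarchy into a word string, as for speech or writing."""
    if isinstance(obj, str):
        return obj
    return " ".join(linearize(part) for part in obj)
```

For example, `merge("she", merge("ate", merge("the", "apple")))` gives `("she", ("ate", ("the", "apple")))`: the flat string is "she ate the apple", but the object itself has three levels of hierarchy. Because the output of `merge` can be fed back in as input, the same operation also yields recursion.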

Describe the 4 aspects of lexicon in the Faculty of Language (FLN) → unique human component

The lexicon is the internal mental store of words (lexical items).

Each lexical item includes:

Phonological form (how it sounds)

Syntactic features (how it combines with other words)

Semantic content (meaning)

It’s not just a list—it’s a rich, structured system crucial for producing and understanding language.

Aspects:

Highly complex and specific

Words carry detailed grammatical and conceptual information, unlike animal calls which are typically fixed and limited.

Infinite

Humans can create an unlimited number of new words or meanings using existing elements (e.g., compound words, neologisms, metaphors).

Mind-dependent entities

Lexical meaning is not just referential—it often reflects mental representations, emotions, or abstract concepts.

Enables interpretation of the world

Words are tools for categorizing and reasoning about experience, not just for communication.

System Interaction (diagram)

The lexicon is housed in the syntactic system (blue box).

It interacts with:

The conceptual-intentional system (orange): to encode thoughts into words

The sensory-motor system (red): to express those words in speech/sign

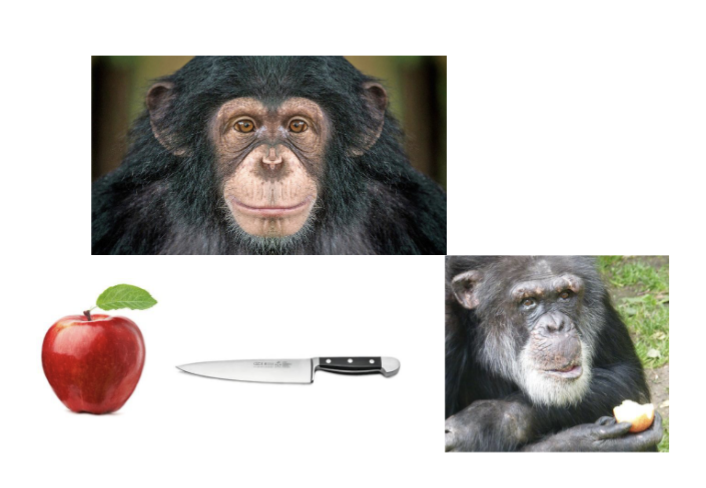

Describe chimps and Lexicon → unique human component of Language (4)

While chimps can:

Recognize objects (ex. apple, knife)

Use tools

Learn some symbolic associations (via signs or lexigrams)

They lack a lexicon in the human linguistic sense:

1. Highly complex and specific

Human words encode rich syntactic and semantic features.

Chimps recognize objects but don’t assign roles or relationships linguistically.

2. Infinite

Humans can endlessly create and combine words (ex. “knife apple cutter,” “pre-cuttable apple”).

Chimps show no evidence of productive, generative word creation.

3. Mind-dependent entities

Human words can refer to things that don’t exist (ex. “unicorn,” “justice”).

Chimps communicate only about immediate, concrete entities.

4. World interpretation

Language lets humans categorize, explain, and narrate the world.

Chimps can act on the world but do not show evidence of representing it symbolically in structured ways.

🐒 Visual Message of the Slide

The chimp with an apple and knife suggests tool use and object recognition.

But it cannot label, describe, or reflect on the experience using language.

Neural architecture

What are the two FLB interfaces and the FLN core, the 3 major components of language?

1. Sensory-Motor Interface (FLB)

How language is externalized (speech, sign)

Includes:

Speech discrimination (Ramus et al. 2000 – tamarins)

Vocalization (Owren & Bernacki, 1988 – vervet monkeys)

2. Conceptual-Intentional Interface (FLB)

Interface between language and thought

Includes:

Vocalization again (ex. vervet monkey calls as meaningful signals)

3. Computation Core (FLN)

Syntax and recursion

Merge as the core operation (Berwick et al., 2013)

Enables hierarchical structure

What are the 3 components of evolution?

Variation: Individuals differ in traits

Heredity: Traits are passed on genetically

Differential reproduction: Individuals with traits that help survival and reproduction (here, better communication) leave more offspring, so those traits spread

Implication for language:

Language-related traits (like vocal learning or symbol processing) may have evolved gradually due to adaptive advantages (e.g., better cooperation, teaching, mate selection)

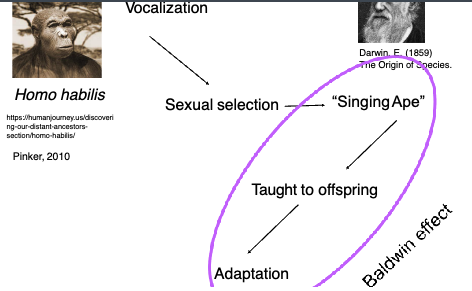

Describe Vocalization and the Singing Ape Hypothesis → evolution

Early vocalizations were:

Taught to offspring (culturally transmitted)

Used for social bonding or display

Over time, this behavior:

Became subject to sexual selection (more complex/appealing vocalizers reproduced more)

Led to the emergence of a “singing ape”: proto-humans with richer vocal abilities

🧬 Result:

Vocal learning became an adaptation, biologically supported and passed on genetically

Feedback loop: cultural teaching → selective advantage → biological adaptation → more teaching

🔁 Key Mechanism: Baldwin Effect

Learned behavior (like vocal imitation) leads to biological adaptation over time due to selection pressures.

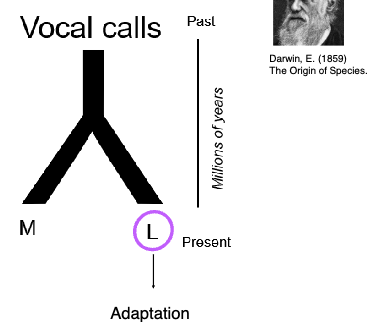

Describe: vocal calls to language

Vocal Calls → Language (L)

M = Common ancestor shared by humans and other primates

From primitive vocal calls, two lineages evolved:

One remained with non-linguistic vocalizations

One (L) led to language

Millions of years of evolution separate simple vocal calls from modern language

✅ Language (L) is now a biological adaptation:

It likely began as learned, flexible vocal behavior

Selected for because of social, sexual, or survival advantages

How was Wilhelm Wundt influential to the evolution of language?

1879 – Wilhelm Wundt: psychological research

chat:

Founded the first psychological lab in Leipzig

Marked the beginning of experimental psychology

🧪 Psychology provides:

Methods to study cognition, learning, and communication

Insight into mental processes behind language: memory, attention, intention, social interaction

💬 Applied to language evolution:

Helps explain how the mind supports language (e.g., theory of mind, symbol use, imitation)

Complements biological and linguistic approaches

What impact did the Société de Linguistique de Paris (1866) have? → Language Evolution: Linguistics

🏛 Société de Linguistique de Paris (1866):

Banned all discussion of language origins.

Language origin was seen as unscientific due to lack of data.

Gatekeeper of what someone could publish in linguistics

Describe the modern view on evolutionary linguistics

🧠 Modern View (Chomsky & Hauser)

“It is not easy to imagine a course of selection...”

— Chomsky (1981)

🔹 Language’s complexity makes its evolution hard to reconstruct via natural selection alone.

“FLN may have evolved for non-language reasons...”

— Hauser et al. (2002)

🔹 FLN (e.g. recursion) might have evolved originally for other cognitive domains like:

Numerical reasoning

Navigation

Social cognition

🔍 Key Insight

Language-specific abilities (like Merge) may be by-products or exaptations, not direct adaptations for communication.

Describe comparative methods used in linguistics for animals → language evolution

Goal: Understand what aspects of language are uniquely human by comparing humans with other species.

🐵 Approach:

Study non-human animals for:

Communication systems (vervet monkey alarm calls)

Vocal learning (songbirds, parrots)

Cognitive capacities (chimpanzees using symbols or gestures)

🧠 What We Learn:

Shared traits (FLB): perception, memory, vocal imitation, social learning

Unique traits (FLN): recursive syntax, Merge, hierarchical structure

chat:

Comparative linguistics studies the evolution and relationships between languages by:

Comparing vocabulary

Analyzing grammar and sound patterns

Reconstructing language families

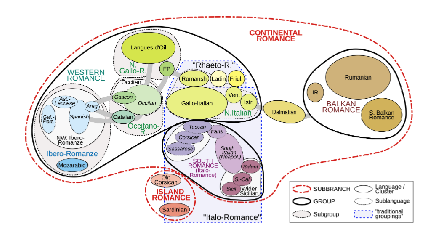

🗣 Example: Romance Languages

The diagram maps Romance subfamilies:

Ibero-Romance (e.g., Spanish, Portuguese)

Western Romance, Eastern Romance, Island Romance, etc.

Shows language descent and divergence from Latin

🧠 Why It Matters for Language Evolution:

Reveals how languages change over time

Helps trace linguistic ancestry

Offers clues about universal patterns and cognitive constraints

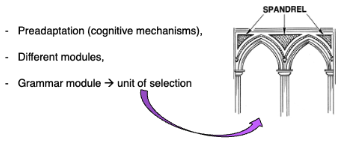

Describe the linguistic revival of 1990 (3)

🧬 Language as a gradual adaptation shaped by natural selection

→ Not a sudden mutation, but evolved like other complex traits.

🧠 Key Ideas:

Preadaptation:

Language built on earlier cognitive mechanisms (ex. memory, imitation)

Modularity:

The mind has specialized modules (ex. vision, language, social reasoning)

Grammar module:

Treated as a potential unit of selection—it evolved because it helped survival/reproduction.

🏛 SPANDREL metaphor:

Not all features are directly selected for—some (like certain aspects of grammar) may be by-products (spandrels) of other adaptations.

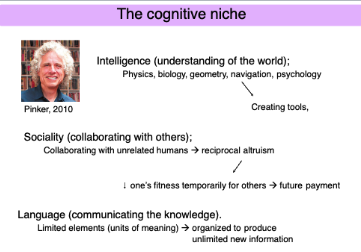

Describe the cognitive niche and the three cognitive mechanisms hominids evolved to use (3)

A niche is the environment an organism constructs

The cognitive niche is a niche built using brainpower instead of claws or speed

🧍♂ Hominids evolved to:

Use cognitive mechanisms to dominate their environment

🧠 Intelligence → understanding of the world

🗣 Language → communicating that knowledge

🤝 Sociality → collaborating with others

reciprocal altruism: collaborating with unrelated humans to pass on knowledge

chat:

🧠 Intelligence (understanding of the world)

Domains: physics, biology, geometry, navigation, psychology

Leads to tool creation and strategic manipulation of the environment

🤝 Sociality (collaborating with others)

Humans cooperate even with unrelated humans

Based on reciprocal altruism:

Short-term decrease in one’s fitness for others → future reward

Builds trust, coordination, social bonds

🗣 Language (communicating knowledge)

Combines limited elements (words) to generate unlimited messages

Essential for:

Teaching

Planning

Coordinating social and technical tasks

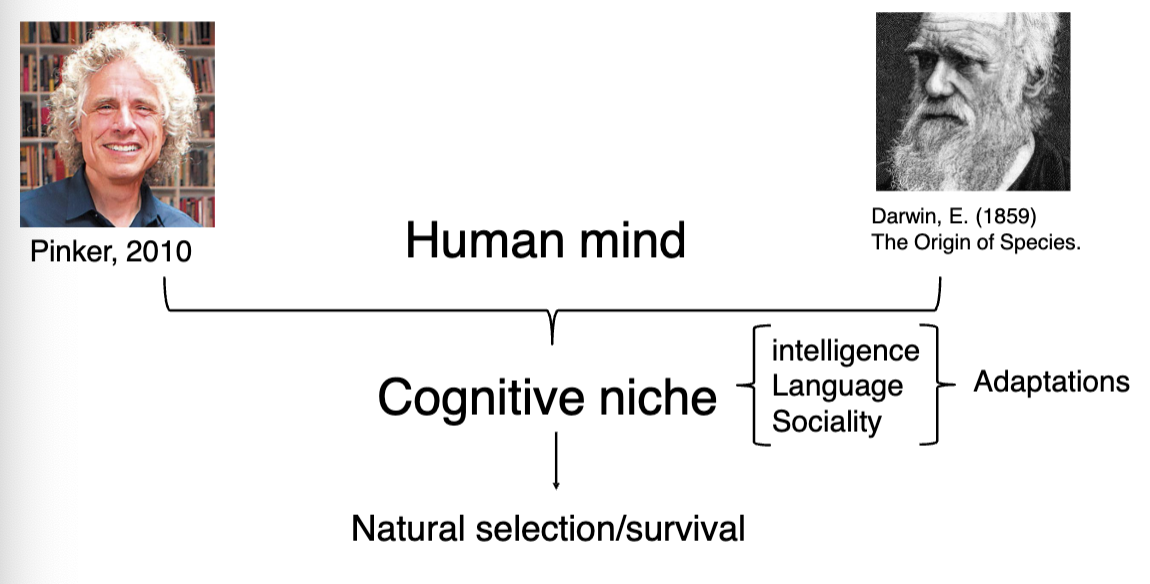

The cognitive niche

🧬 Human Mind = Cognitive Niche

The cognitive niche is a human-specific strategy for survival and adaptation.

It's constructed by the brain using:

🧠 Intelligence (understanding the world)

🗣 Language (communicating knowledge)

🤝 Sociality (collaboration and cooperation)

These are adaptations:

Evolved through natural selection

Gave humans a flexible, knowledge-based survival strategy

Contrast with animals relying on physical traits (claws, speed)

📌 Summary

The human mind itself is an adaptation shaped by and shaping the cognitive niche.

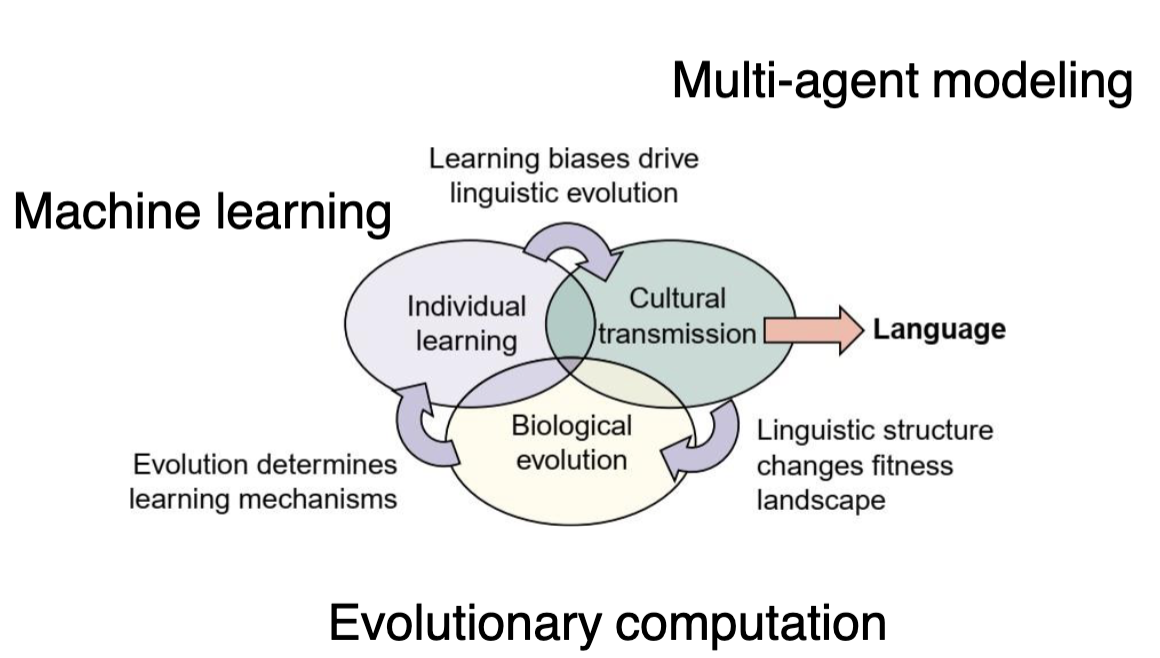

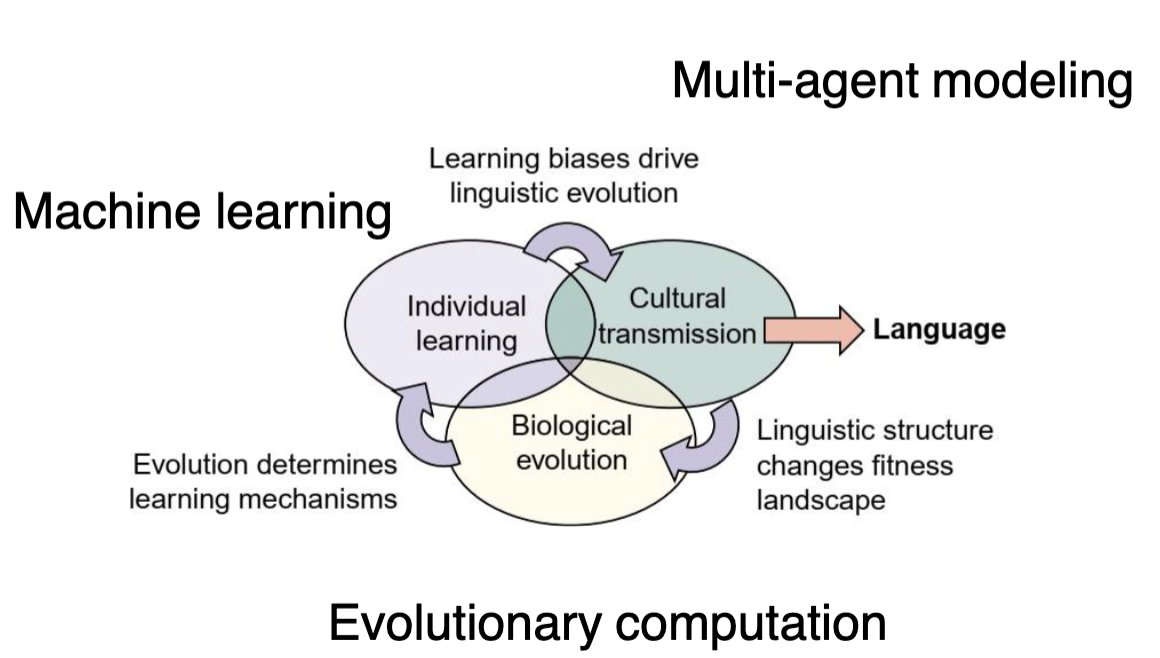

What are the 3 computational models?

Machine learning

Multi-agent modeling

Evolutionary computation

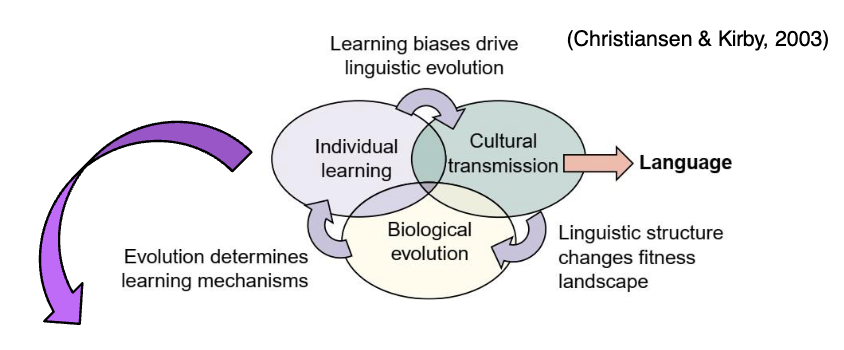

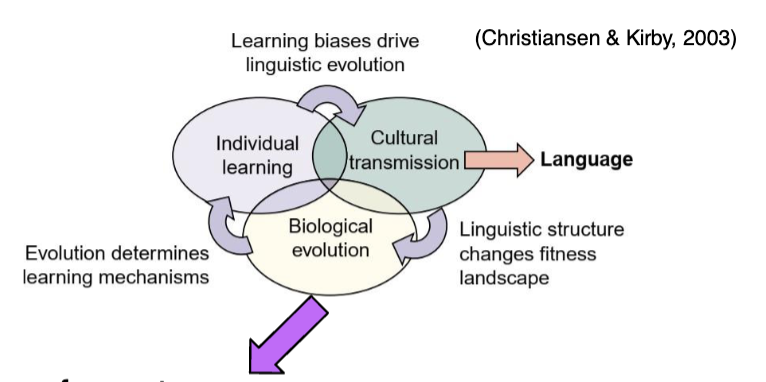

Describe computational modeling

🔁 Three interacting systems:

Individual learning

→ Learners adapt to language based on biases and exposure

→ (Linked to machine learning models)

Cultural transmission

→ Language is passed between generations through imitation, teaching, and communication

→ Drives changes in structure over time

Biological evolution

→ Determines learning capacities, vocal abilities, and cognitive traits

→ Language structure can affect fitness (e.g., cooperation, success)

🧩 Interactions:

Learning biases influence which structures are easier to acquire

Cultural evolution favors learnable, expressive systems

Biological evolution shapes learning mechanisms

Language itself feeds back into fitness and selection

🛠 Tools:

Machine learning = simulates individual learning

Multi-agent modeling = simulates populations and cultural dynamics

Evolutionary computation = simulates long-term genetic change

Describe the computational model machine learning (2)

🧠 Individual Learning

Learners acquire language by observing others

🧪 Key Learning Models:

Instance-based learning (Batali, 1999)

Learners compare new information to stored information

Enables pattern recognition and generalization

Network models (Christiansen & Dale, 2003)

Use neural networks to simulate how language is learned and processed

Capture emergent structure from repeated exposure
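The instance-based idea (compare new input with stored input) can be sketched as a nearest-neighbour lookup. This is a generic illustration, not Batali's actual model: the class name, the made-up signals, and the use of `difflib.SequenceMatcher` as the similarity measure are all assumptions.

```python
from difflib import SequenceMatcher


class InstanceLearner:
    """Stores observed (signal, meaning) pairs and interprets new signals by similarity."""

    def __init__(self) -> None:
        self.memory: list[tuple[str, str]] = []

    def observe(self, signal: str, meaning: str) -> None:
        """Store one exemplar heard from another speaker."""
        self.memory.append((signal, meaning))

    def interpret(self, signal: str) -> str:
        """Return the meaning of the stored signal most similar to the new one."""
        if not self.memory:
            raise ValueError("nothing has been observed yet")
        _, best_meaning = max(
            self.memory,
            key=lambda pair: SequenceMatcher(None, signal, pair[0]).ratio(),
        )
        return best_meaning
```

After observing `("wugala", "dog")` and `("timepo", "bird")`, the learner interprets the unseen signal `"wugalo"` as "dog", because it is closest to a stored instance. That is a very small case of generalizing from stored information.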

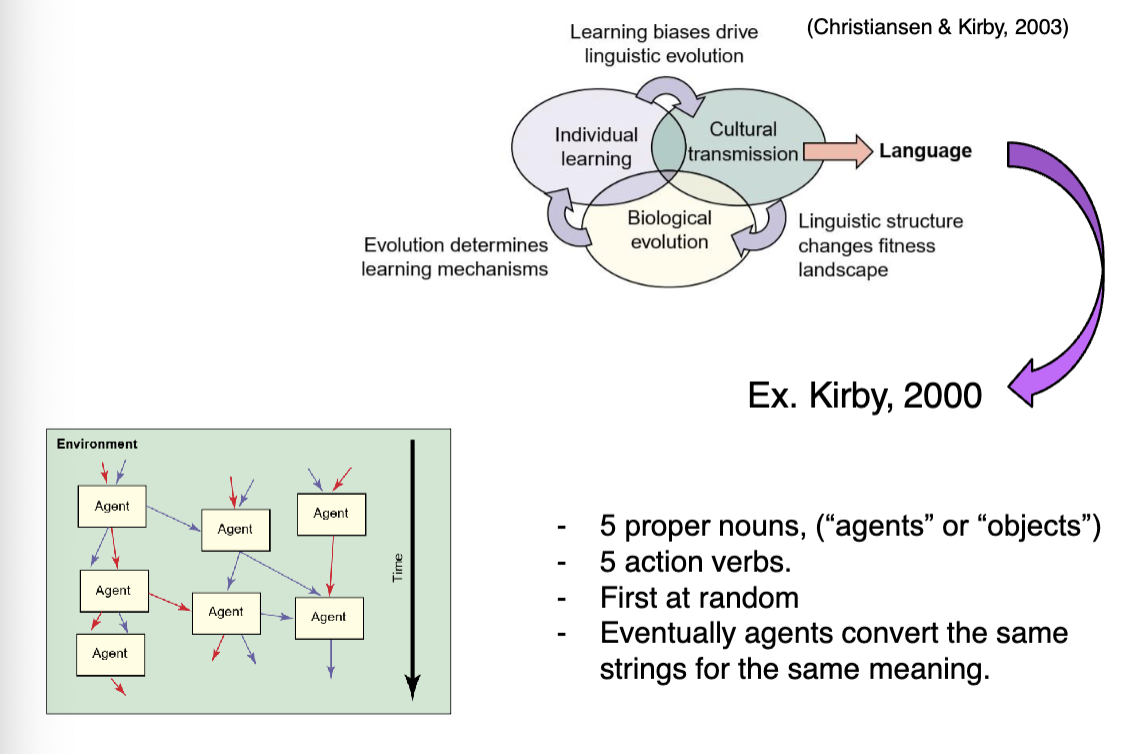

Describe the computational model multi-agent modeling & the experiment for it

Ex: Kirby, 2000

🧩 What it is:

A simulation where multiple agents interact and learn from each other over time.

Each agent has a set of meanings and signals (words/strings) that can be shared.

🧠 Key Setup:

5 proper nouns (ex. agents or objects)

5 action verbs

Agents start with random associations

Through interaction and feedback, they converge on shared meanings (ex. "word A" = "agent X")

🔄 How it models language evolution:

Simulates cultural transmission

Captures how shared structure (like grammar or vocabulary) emerges without centralized control

Shows how language stabilizes over generations
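The convergence idea can be sketched with a very small imitation game. This is a simplified illustration and not Kirby's model: the three meanings, the random four-letter words, and the rule that the hearer simply copies the speaker are all assumptions made to keep the example short.

```python
import random


def agreement(agents, meanings) -> float:
    """Fraction of meanings for which all agents use the same word."""
    shared = sum(1 for m in meanings if len({a[m] for a in agents}) == 1)
    return shared / len(meanings)


def simulate(n_agents: int = 5, meanings=("run", "eat", "see"),
             rounds: int = 5000, seed: int = 0) -> float:
    """Run the imitation game and return the final agreement (0.0 to 1.0)."""
    rng = random.Random(seed)
    # Each agent starts with its own random word for every meaning.
    agents = [
        {m: "".join(rng.choice("aeioukstm") for _ in range(4)) for m in meanings}
        for _ in range(n_agents)
    ]
    for _ in range(rounds):
        speaker, hearer = rng.sample(range(n_agents), 2)
        meaning = rng.choice(meanings)
        # Interaction step: the hearer adopts the speaker's word for this meaning.
        agents[hearer][meaning] = agents[speaker][meaning]
    return agreement(agents, meanings)
```

No agent is in charge and no word is imposed from outside, yet repeated pairwise interactions drive the population toward one shared word per meaning. This is the "without centralized control" point in miniature.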

Describe the computational model evolutionary computation

⚙ What It Models:

Biological evolution of agents

Agents have genetically-determined traits (e.g., learning bias, communication ability)

Traits are passed on through genetic transmission and selected via natural selection

🔁 Evolutionary Process:

Some traits give agents a fitness advantage (e.g., better communication = more success)

These traits are selected for over generations

Goal: simulate how language-related biological traits evolve
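A minimal sketch of this process is a toy genetic algorithm. Everything specific here is an assumption for illustration: the 10-bit genome, the fitness function (number of trait bits set), and the parameter values do not come from any published model.

```python
import random

GENOME_LENGTH = 10  # hypothetical: each bit stands for one language-related trait


def fitness(genome: list[int]) -> int:
    """Toy fitness: the more trait bits set, the better the agent communicates."""
    return sum(genome)


def evolve(pop_size: int = 20, generations: int = 30,
           mutation_rate: float = 0.05, seed: int = 0) -> list[int]:
    """Return the best fitness found in each generation."""
    rng = random.Random(seed)
    population = [[rng.randint(0, 1) for _ in range(GENOME_LENGTH)]
                  for _ in range(pop_size)]
    history = []
    for _ in range(generations):
        population.sort(key=fitness, reverse=True)
        history.append(fitness(population[0]))
        parents = population[: pop_size // 2]   # selection: only the fitter half reproduces
        children = [population[0][:]]           # elitism: the best agent is copied unchanged
        while len(children) < pop_size:
            mother, father = rng.sample(parents, 2)
            cut = rng.randrange(1, GENOME_LENGTH)
            child = mother[:cut] + father[cut:]  # genetic transmission from both parents
            # Mutation: each bit flips with a small probability.
            child = [bit ^ 1 if rng.random() < mutation_rate else bit for bit in child]
            children.append(child)
        population = children
    return history
```

Because the best agent is always carried over unchanged, the best fitness can never drop from one generation to the next. The sketch also shows why the criticism in the next card applies: fitness here is a single made-up number, far simpler than real biology or social dynamics.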

What’s a criticism of the computational model evolutionary computation?

Bickerton, 2007:

Many models suffer from the “streetlight effect” –

They only search for answers where it’s easiest to look, not necessarily where the truth is.

→ Lack of realism: Often oversimplified or detached from actual human biology/social dynamics

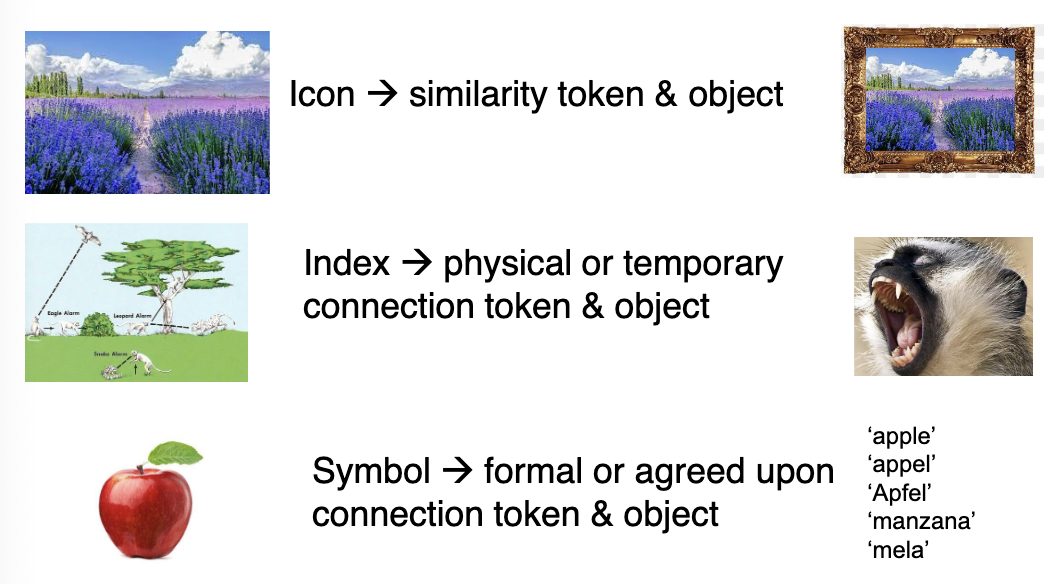

What are the 3 types of symbols? → consensus

🖼 Icon

Similarity between the token (representation of object) and the object it represents

Example: Picture of a field = actual field

🔗 Index

Token is physically or temporally linked to what it refers to

Direct or causal connection

Example: Monkey alarm call → indicates nearby predator, smoke → fire

🅰 Symbol

Arbitrary but socially agreed connection → no natural link, relies on shared understanding

formal or agreed upon connection between token & object

Example:

🍎 = “apple” in English

“Apfel” (German), “manzana” (Spanish), “mela” (Italian)

What are the 3 key developments of symbols? → consensus

Relation of symbols with each other

Language isn’t just words → it's how words relate to each other

Increase in symbolic complexity

Growing structure = syntax

Allows infinite combinations of finite words

Auditory memory expansion

Needed to track sequences of sounds

Important for understanding longer phrases or nested sentences

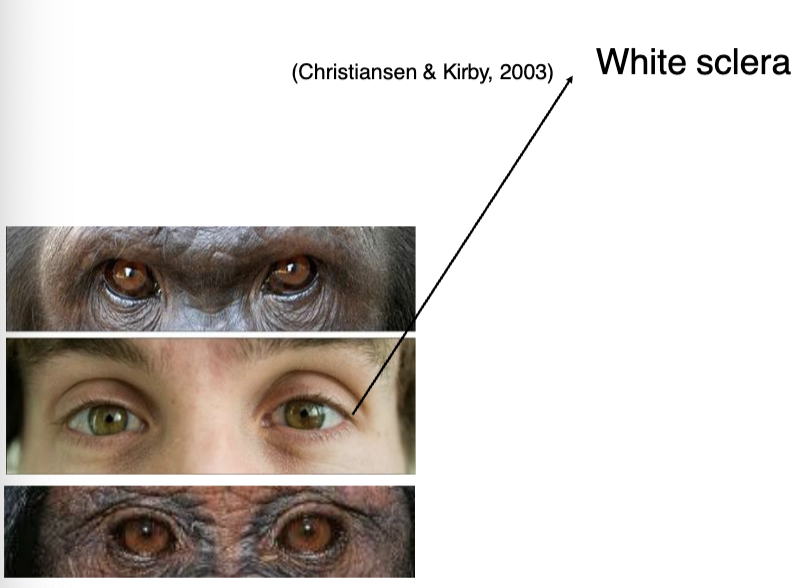

How is white sclera an example of a preadaptation unique to humans compared to other primates, and why does it matter for language?

White sclera (visible eye-whites)

Enhances eye-gaze visibility and joint attention

🧠 Why it matters for language:

Supports nonverbal communication

→ Easier to follow others' gaze and intentions

Enables shared attention

→ Crucial for teaching, pointing, and symbol grounding

Great apes prefer head movement over eye gaze

Babies (12-18 mo) prefer eye gaze over head movement

🪜 Preadaptation defined:

A biological trait that evolved for another function but facilitated language evolution.

What are 3 preadaptations in humans?

white sclera → joint attention, tracking eye-gaze

ToM → represent others as agents and intentional beings

mirror neurons → empathy

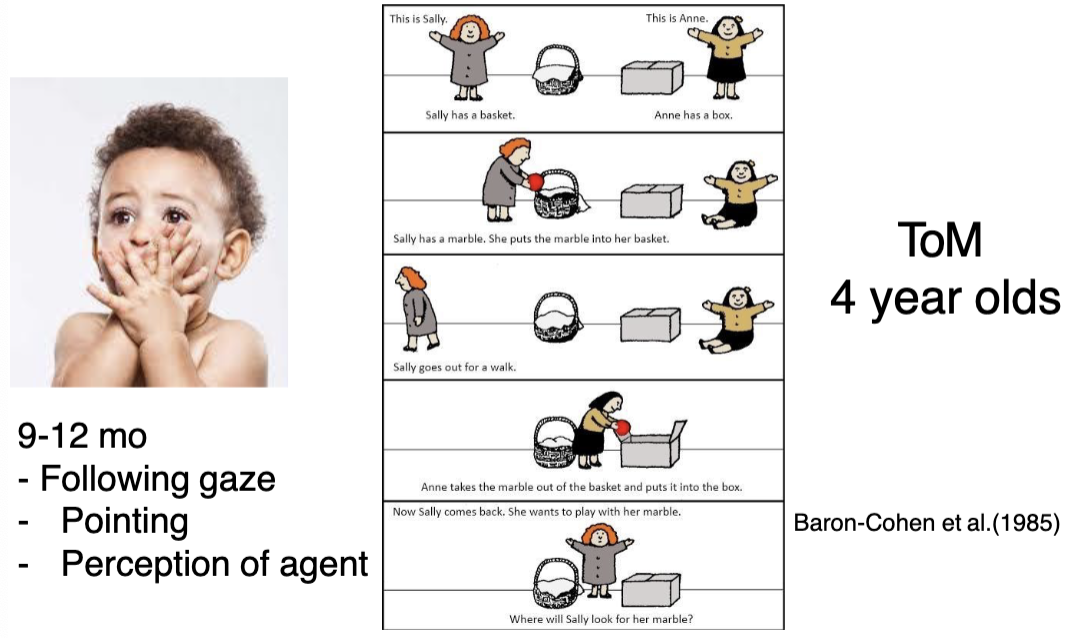

How is representing others as agents a preadaptation for humans and why does it matter for language?

🧍♂👁 Theory of Mind (ToM)

The ability to represent others as intentional beings with thoughts and goals

Essential for communication, cooperation, and language

🧒 Developmental Milestones:

9–12 months:

Follows others’ gaze

Understands pointing

Perception of agents

4 years old:

Passes false belief tests (e.g., Sally-Anne task)

Can represent what someone else knows/thinks even if it’s wrong

🧩 Why it matters for language:

Enables shared attention, joint action, and symbol use

A cognitive prerequisite for understanding intentions behind words

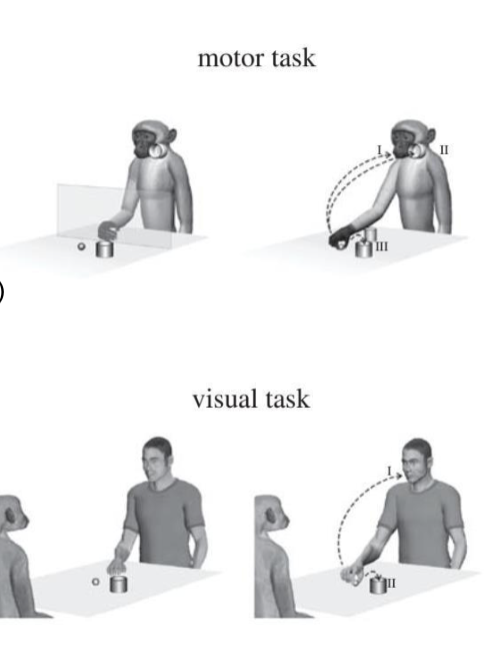

How are mirror neurons a preadaptation for humans, and why do they matter for language?

🔁 What are Mirror Neurons?

Neurons that fire both when an individual performs an action and when they observe the same action in another.

🧍♂→🧍♂ Key Functions:

Action imitation

Learning by observation

Understanding others’ intentions

🧠➡💬 Why they matter for language:

Enable social learning of communicative gestures

Support the recognition of communicative intent

Possible foundation for gesture-based or spoken language evolution

📌 Core Idea:

Mirror neurons help link action, perception, and intention — a crucial foundation for communicative behavior.

Brain responses are based on neural population activity, not individual neurons — so interpretations must consider broader network effects

Describe the gesture hypothesis

🖐 Gesture Hypothesis

Animal calls show limited vocal control.

Tool use led to gestures evolving into vocal forms

Humans have advanced imitation skills

Vocal sounds were recruited to accompany gestures → supports idea that gesture and vocalization co-evolved

Describe the speech hypothesis

🗣 Speech Hypothesis

Gestures needed line of sight and daylight (visually limited)

Phonetic gestures evolved from chewing/sucking/swallowing

Vocal calls became holistic words

Describe verbal dyspraxia in the KE family

🧑👩👦👦 1991: KE Family

Language impairment (grammar comprehension + production)

Caused by mutations in FOXP2 gene

Apart from humans, in which animals is the FOXP2 gene present? (3)

🐀 FOXP2 Across Species

Present in rats, monkeys, and apes

Apes differ from humans by 2 amino acid changes

What does FOXP2 do? → language and genes

Encodes a transcription factor

Turns other genes on/off

Crucial in developing neural circuits for language

Describe right handedness in humans and chimps → language dominance (3)

🧠 Brain-Language Relationship

95% of right-handed people:

→ Left cerebral dominance for language

75% of left-handed people:

→ Also left hemisphere dominant for language

🧍 Handedness Prevalence

90% of humans are right-handed

50% of chimps show right-hand preference

🗣 Language & Gesture

Right-handedness → better word generation

Birds, frogs, mammals:

Vocalization processed in left hemisphere
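The percentages above can be combined into one overall figure. The sketch below is only a worked arithmetic check, assuming that everyone who is not right-handed counts as left-handed (ambidexterity is ignored) and that the card's rounded percentages are taken at face value. The function name `share_left_dominant` is made up for this illustration.

```python
def share_left_dominant(p_right_handed, p_left_dom_given_right, p_left_dom_given_left):
    """Weighted share of people with left-hemisphere language dominance."""
    # Assumption: anyone who is not right-handed is treated as left-handed.
    p_left_handed = 1.0 - p_right_handed
    return (p_right_handed * p_left_dom_given_right
            + p_left_handed * p_left_dom_given_left)

# Values from the card: 90% right-handed, 95% and 75% left-dominant.
overall = share_left_dominant(0.90, 0.95, 0.75)
print(f"{overall:.1%}")  # 0.90 * 0.95 + 0.10 * 0.75 = 0.93
```

Under these assumptions, roughly 93% of people would be left-hemisphere dominant for language, regardless of handedness.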

Which side of the body does the left hemisphere control?

The right side of the body

SUMMARY

🧬 Biology: Language as Adaptation

Darwinian view: Language evolved via natural selection

📚 Linguistics Perspective

Language = gradual adaptation

Fits into the cognitive niche:

Natural selection

Intelligence

Language and Sociality

🤖 Computational Models

Machine learning

Multi-agent modeling

Evolutionary computation

🧠 Consensus

Language uses symbols and preadaptations (ToM, mirror neurons, joint attention)

⚖ Controversies

Speech vs. Gesture: Which came first?

Language and Genetics: FOXP2 gene

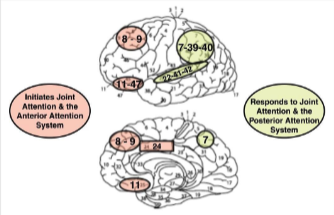

What are the two types of joint attention?

RJA (Responding Joint Attention): Following others' gaze or gestures

sharing a common point of interest

helps learning and language acquisition

in infants and chimps

IJA (Initiating Joint Attention): Actively directing another's attention

shows intent to share interest or pleasure

develops later

needs a deeper understanding of others’ attention and intentions

limited use in chimps

Describe the social cognitive model

Social cognition:

Develops around 9–12 months

Foundation for developing joint attention (RJA & IJA)

RJA and IJA are correlated in development

🔹 Key Study (Brooks & Meltzoff, 2005):

At 10–11 months, infants show social awareness of the meaning of looking

Look longer when adult’s eyes are open vs. closed ➝ evidence of social interpretation

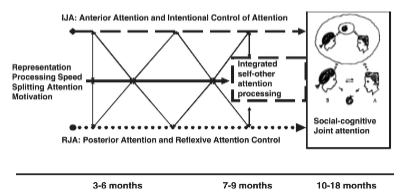

What are the 2 attention-system models?

Posterior attention system → supports RJA (Responding Joint Attention)

Anterior attention system → supports IJA (Initiating Joint Attention)

🔹 Development Timeline:

3–6 months: Reflexive attention (RJA), basic attention control

7–9 months: Beginning of intentional control (IJA), faster processing

10–18 months: Integrated self–other attention → emergence of full joint attention abilities

Describe the social cognitive model

🔹 Key Points:

Social cognition develops around 9–12 months and is necessary for the development of joint attention

RJA and IJA develop in parallel → correlated growth

Receptive joint attention at 6 months already supports language learning

Yet, social cognition is not fully developed at this age

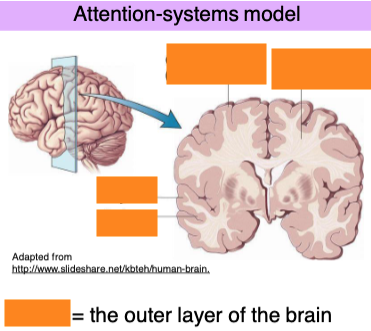

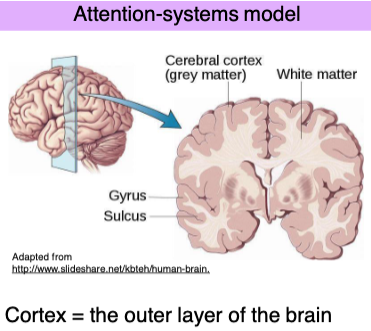

Grey matter (cerebral cortex): involved in processing information

White matter: responsible for communication between brain regions

Brain folds:

Gyrus = raised ridge

Sulcus = groove or fold

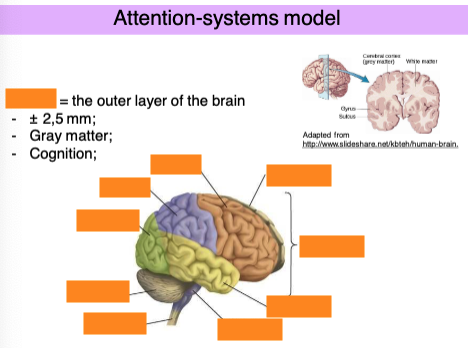

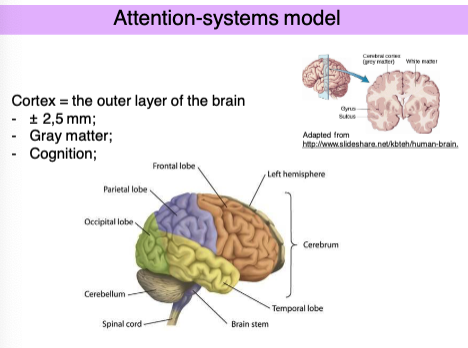

Cortex:

Made of gray matter

Crucial for cognition (thinking, attention, memory)

🔹 Major Brain Regions:

Frontal lobe: decision-making, planning, voluntary movement

Parietal lobe: sensory integration, spatial orientation

Temporal lobe: auditory processing, language

Occipital lobe: visual processing

Cerebellum: coordination and balance

Brain stem & spinal cord: basic bodily functions, communication between brain and body

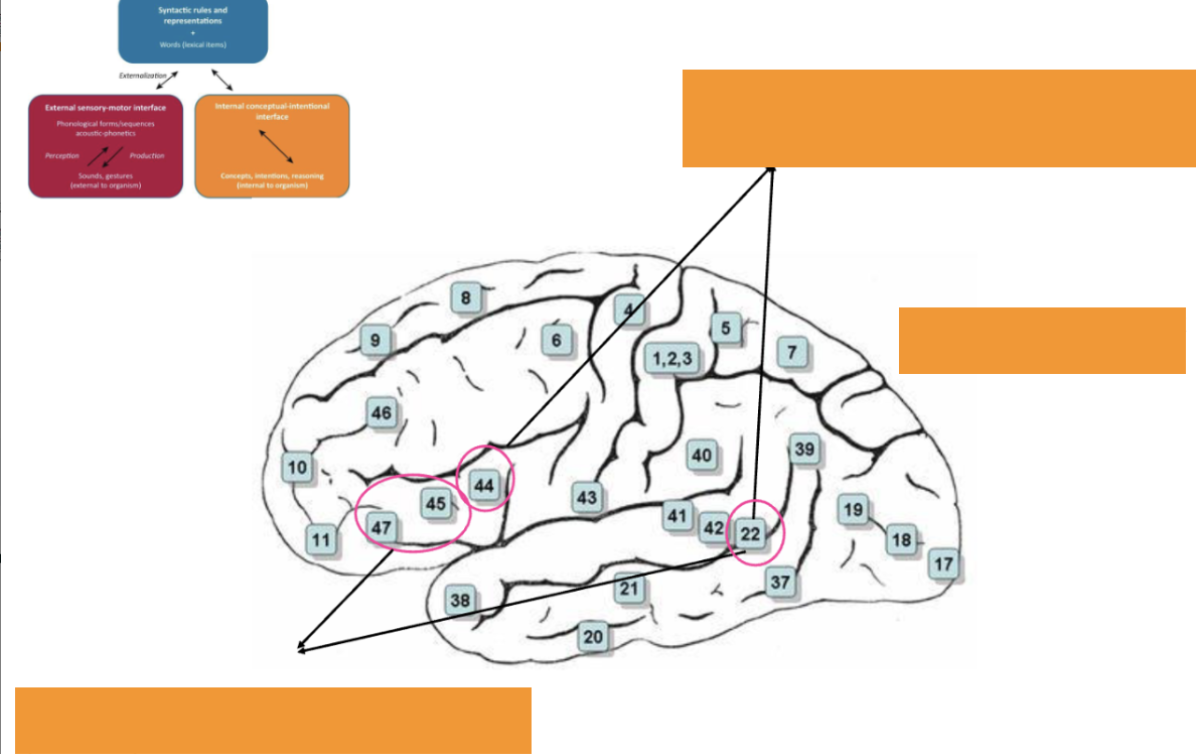

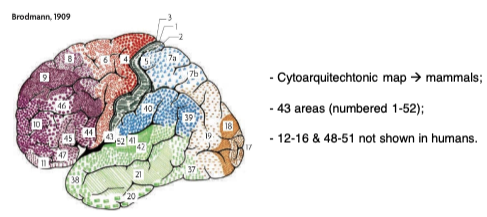

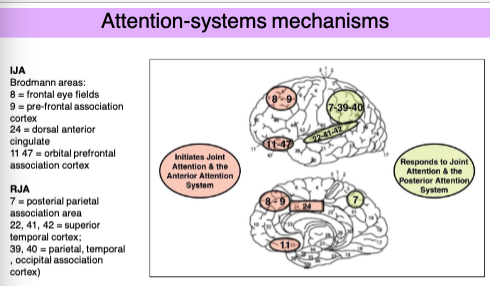

Attention-Systems Model: Brodmann Areas (1909)

Cytoarchitectonic (cell structure) map of the cortex is applicable to mammals

Divides cortex into 43 areas (labeled 1–52)

Areas 12–16 and 48–51 are not found in humans

Used to localize brain functions (e.g., attention, vision, language)
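The count of 43 follows directly from the two facts above: the labels run from 1 to 52, and two label ranges are not found in humans. A small sketch of that arithmetic (the variable names are illustrative only):

```python
# Brodmann labels run from 1 to 52.
labels = set(range(1, 53))

# Labels not found in humans according to the card: 12-16 and 48-51.
absent_in_humans = set(range(12, 17)) | set(range(48, 52))

human_areas = labels - absent_in_humans
print(len(labels), len(absent_in_humans), len(human_areas))  # 52 9 43
```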

Attention-systems mechanisms

What are 5 aspects of the Attention-Systems Model?

RJA ≠ IJA, but they are interactive mechanisms of attention

Experience influences attention system interaction

IJA demands more connection between frontal and posterior attention systems than RJA

Autistic individuals have poor connection between their frontal and posterior attention systems

Blind children have different frontal neural topography for social cognition

How is language processing a selective mechanism? (2)

Language Acquisition

Joint attention between adult, infant, and object facilitates language learning

Language Processing

Selective mechanism:

Drives goal-directed processing

Helps filter out distractors

Describe Attention in Language Processing: Automatic vs. Controlled

Automatic: Cognitive Psychologists

No interference

No attention needed

Controlled: Early Psycholinguists

Language is modular

Computation is automatic

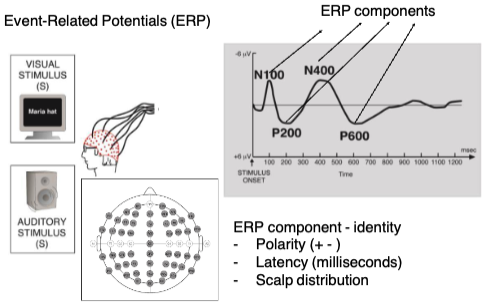

Describe the components and stimulus types of Event-Related Brain Potentials (ERP)

ERP Components (ex. N100, P200, N400, P600):

Identity features:

Polarity: Positive (+) or Negative (–)

Latency: Time in milliseconds after stimulus

Scalp distribution: Where the activity is recorded

Stimulus types:

Visual (ex. word on screen)

Auditory (ex. spoken word)
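The component names listed above encode two of the identity features: the letter gives the polarity and the number gives the approximate latency in milliseconds. A minimal sketch of that naming convention follows; the helper `describe_erp_label` is hypothetical, and the latency in a name is only nominal, since actual peaks vary between studies.

```python
import re

def describe_erp_label(label: str) -> dict:
    """Split a label such as 'N400' into polarity and nominal latency."""
    match = re.fullmatch(r"([NP])(\d+)", label.strip().upper())
    if match is None:
        # Names such as MMN or ELAN do not follow the letter+number pattern.
        raise ValueError(f"not a polarity+latency label: {label!r}")
    polarity = "negative" if match.group(1) == "N" else "positive"
    return {"polarity": polarity, "latency_ms": int(match.group(2))}

for name in ["N100", "P200", "N400", "P600"]:
    print(name, describe_erp_label(name))
```

Scalp distribution is not part of the name, so it has to be learned separately for each component.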

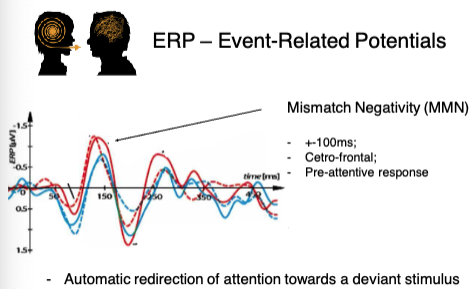

Describe key characteristics of Event-Related Brain Potentials (ERP)

ERP – Mismatch Negativity (MMN)

Mismatch Negativity (MMN) = ERP response to a deviant stimulus among standard ones

Key characteristics:

Occurs at ~100ms

Detected over centro-frontal regions

Pre-attentive: does not require conscious attention

Function:

Reflects automatic redirection of attention to unexpected or deviant events

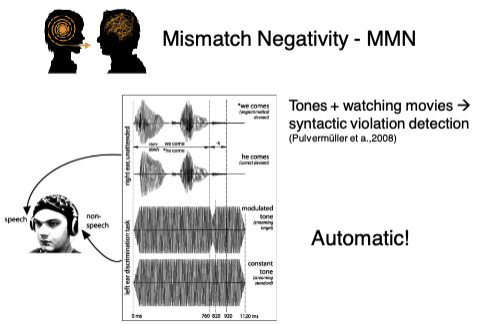

Describe mismatch negativity (MMN) in Event related brain potentials (ERP)

AUTOMATIC!

Syntactic Violation Detection → Study: Pulvermüller et al. (2008)

Finding:

Mismatch Negativity (MMN) occurs automatically in response to syntactic violations

Even when participants were watching movies + hearing tones (i.e., not focused on the language task)

Conclusion:

Syntactic processing can be automatic, detected without attention

🧪 Study: Pulvermüller et al. (2008)

🧠 What did they study?

Whether the brain automatically detects syntactic violations — even when you're not paying attention to the language.

Participants were distracted (watching a silent movie + listening to tones).

Meanwhile, grammatical and ungrammatical sentences were played in the background.

🎯 Key Finding:

The brain showed a Mismatch Negativity (MMN) in response to syntactic violations.

MMN is an ERP component that usually reflects pre-attentive change detection — the brain’s way of noticing when something violates a rule or expectation.

MMN showed up even when participants were not focused on the language at all.

🔍 Conclusion:

✅ Syntactic processing can happen automatically.

✅ The brain can detect grammatical errors without conscious attention.

🚫 This differs from semantic processing (like the N400), which does require a certain level of input clarity or attention (as the degraded-speech findings for the N400 show).

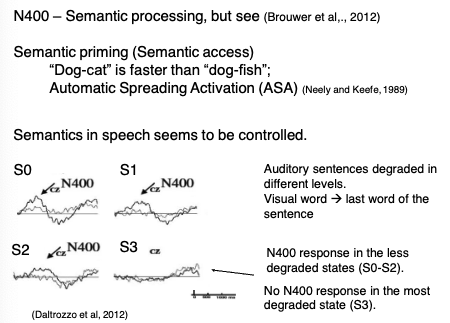

Describe event related brain potentials (ERP) N400 & Semantic Processing

N400 = ERP component related to semantic priming (semantic access)

Example: "Dog–cat" is processed faster than "dog–fish"

🔁 Automatic Spreading Activation (ASA)

Semantics in speech seems to be controlled:

Auditory sentences degraded in different levels (increasing degradation from S0 to S3)

N400 response in the less degraded states (S0–S2)

No N400 in the most degraded speech (S3)

⇒ Semantic processing not fully automatic, depends on input quality

🧠 What is the N400?

The N400 is an event-related potential (ERP) — a kind of brainwave measured by EEG.

It shows up about 400 milliseconds after you hear or read a word.

It reflects semantic processing — how your brain reacts to the meaning of words.

💡 Classic N400 effect: Semantic Priming

When two words are semantically related (like “dog–cat”), the brain processes the second word faster and with less effort.

This causes a smaller N400.

If the words are unrelated (like “dog–fish”), the N400 is larger, showing that your brain had to work harder to integrate the unexpected or unrelated meaning.

Why?

→ This supports the idea of Automatic Spreading Activation (ASA):

When you hear “dog,” it activates related concepts in your mental lexicon, like “cat,” “bark,” or “bone.”

If the next word is one of those, it’s already activated, so it’s easier to process.

🎧 What happens in spoken language, especially degraded speech?

Researchers tested how semantic processing (and the N400) behaves when speech quality drops — like when audio is muffled, noisy, or distorted.

They created 4 levels of degradation, from S0 (clear) to S3 (very degraded).

🔍 Key Finding:

In S0 to S2 (where speech is still fairly understandable), the N400 effect is present. This means:

Even if the speech isn’t perfect, the brain still accesses meaning and shows semantic priming (smaller N400 for related words).

In S3 (heavily degraded speech):

The N400 disappears → no evidence of semantic access.

The brain can’t process the meaning if the signal is too poor.

🧠 Interpretation:

Semantic processing is not fully automatic — it depends on the quality of the input.

This challenges older models that assumed semantic access happens automatically, no matter what.

Instead, if auditory input is too unclear, your brain may not even get far enough to do semantic processing.

This shows a kind of adaptive efficiency: the brain doesn’t waste resources trying to extract meaning when the signal is unintelligible.

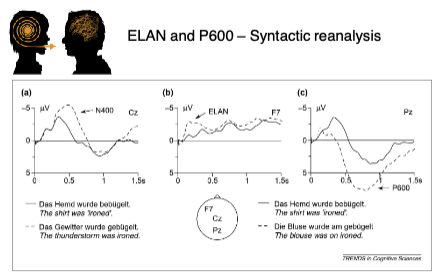

Describe ELAN and P600 – Syntactic Reanalysis → event related brain potentials (ERP)

ELAN = automatic

P600 = controlled

ELAN (Early Left Anterior Negativity):

Appears ~200ms

Reflects early automatic syntactic structure building

Shown when sentence violates expected word category

P600:

Appears ~600ms

Reflects controlled syntactic reanalysis or repair

Linked to conscious syntactic processing (e.g., garden-path sentences)

🧠 ELAN vs. P600 — Two Stages of Syntactic Processing

These are ERP components observed during sentence processing, especially in response to syntactic violations.

🔹 ELAN (Early Left Anterior Negativity)

When: ~200 milliseconds after the critical word

Where: Left frontal regions of the brain

What it reflects:

🔄 Automatic, fast parsing of syntax

🧱 Structure building — e.g., identifying whether the incoming word fits the expected grammatical structure

⚠ Triggered by:

Violations of syntactic category:

e.g., “The pizza was in the eaten.” ← Verb in place of a noun → ELAN response

The brain expected a noun, got a verb → automatic detection

➕ Summary:

Unconscious

Early

Structure-sensitive

Automatic parsing

🔹 P600

When: ~600 milliseconds after the word

Where: Centro-parietal (posterior) scalp regions

What it reflects:

🧠 Controlled, conscious processing

🔁 Reanalysis or repair of syntactic structures

⚠ Triggered by:

Complex or ungrammatical sentences that require reinterpretation

e.g., Garden-path sentences:

“The horse raced past the barn fell.” ← Unexpected verb “fell” forces reanalysis

Also triggered by agreement violations:

“The key to the cabinets are rusty.”

➕ Summary:

Conscious

Later

Involves reanalysis

Effortful syntactic repair

🧩 Final Insight:

These components show that syntax is processed in stages:

First, the brain automatically checks word category (ELAN).

If something goes wrong or gets confusing, the brain may consciously repair or reinterpret the sentence (P600).

Describe the criticism of ELAN being an artifact or real effect

Criticism by Steinhauer & Drury (2012):

ELAN might be an artifact of:

Implicit learning or experimental strategy

Experimental design choices

🧪 Challenges the idea that ELAN reflects a true early syntactic processing mechanism

❗ Criticism: Is ELAN a real marker of automatic syntax processing?

Steinhauer & Drury (2012) challenged the interpretation of ELAN as a clear sign of early syntactic structure building by pointing to several concerns.

🔍 1. Experimental Design Artifacts

They argue that some studies claiming to find ELAN may have unintentionally:

Used highly artificial or repetitive stimuli

Created expectation effects due to predictable structures

Repeated sentence types so often that participants developed strategies

🔁 Result: The observed ELAN might reflect task-related effects or learned regularities, not spontaneous syntactic parsing.

🔍 2. Implicit Learning or Strategy

Participants may implicitly learn patterns during the experiment (even if they’re not aware of it).

This could lead to early ERP effects that mimic ELAN — but are actually driven by attention, working memory, or prediction, not real-time structure building.

🧠 In other words:

"Maybe participants aren't automatically parsing syntax — maybe they're just good at noticing patterns we accidentally trained them on."

🧪 3. Reproducibility and Variability

ELAN findings are not always robust or replicable across labs or languages.

The timing, scalp distribution, and presence of ELAN vary a lot between studies.

This inconsistency weakens the claim that ELAN is a universal and reliable marker of early syntactic processing.

🔄 Summary of Steinhauer & Drury’s Argument

| Point of Criticism | Explanation |
|---|---|
| Design-driven artifact | ELAN may arise from how the experiment is structured, not from natural syntax processing |
| Implicit strategy use | Participants may unconsciously "game" the system, leading to misleading ERP signals |
| Poor replicability | ELAN isn’t consistently found, raising questions about its theoretical significance |
| Not conclusively syntax-specific | The ELAN might reflect other processes (like early attention or expectation mismatch) |

🧠 Implication:

This critique does not disprove that early syntactic processing exists — but it warns against over-interpreting ELAN as definitive evidence for it.

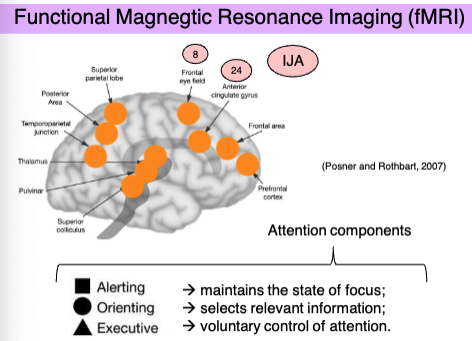

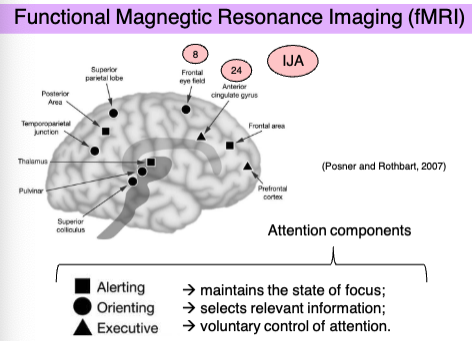

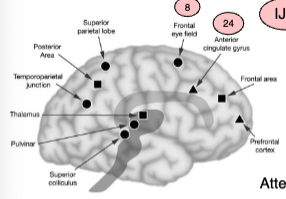

What are the three attention networks?

Alerting → Maintains the state of focus

Orienting → Selects relevant info

Executive → Voluntary control of attention

1. Alerting

Function: Maintains readiness and a high state of sensitivity to incoming stimuli.

Like your brain's “stay awake and be ready” signal.

Involves arousal and vigilance.

Neurotransmitter: Norepinephrine

Brain areas involved: Right frontal and parietal cortex; thalamus

✅ Example: You're waiting for the traffic light to turn green — your alerting system keeps you ready to respond.

2. Orienting

Function: Directs attention to specific stimuli or locations — selects what's relevant.

Like a spotlight that shifts your attention based on external cues.

Can be overt (eye movement) or covert (attention shift without moving eyes).

Neurotransmitter: Acetylcholine

Brain areas involved: Superior parietal lobe, frontal eye fields, superior colliculus

✅ Example: You hear your name across a noisy room and immediately focus on that direction — that's orienting.

3. Executive (Control)

Function: Manages voluntary, goal-directed attention — especially during conflict or decision-making.

Involves inhibition, task-switching, and error monitoring.

Neurotransmitter: Dopamine

Brain areas involved: Anterior cingulate cortex (ACC), lateral prefrontal cortex

✅ Example: You’re doing a math test, ignoring distracting noises — your executive system helps you stay on task.

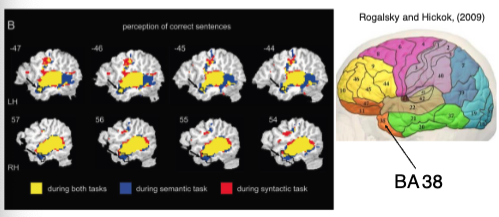

Describe selective attention & the anterior temporal lobe, what are the 2 violations?

Selective attention targets the Anterior Temporal Lobe

Focuses on language processing and meaning

Violations:

Syntactic violation: "A tailor in the city were altering the gown."

Triggers syntactic reanalysis → often associated with ERP markers like P600.

Semantic violation: "That coffee at the diner was pouring the waiter."

Triggers semantic integration failure → often linked to N400 response.

Brain Activation:

BA 38 (Brodmann Area 38)

Activated during semantic and syntactic processing

Color-coded:

Yellow = Both tasks

Blue = Semantic

Red = Syntactic

🧠 What does BA 38 do in these cases?

It’s activated in both types of violations.

Suggests it plays a role in integrating meaning and structure — not just isolated semantic or syntactic processing.

Selective attention may amplify ATL activity when the brain tries to resolve conflicts or detect anomalies in meaning or grammar.

Describe Attention Impairment & Language in Parkinson’s disease (3)

Neurodegenerative disease affects attention and memory networks

Difficulty in keeping focus on words and retrieving them

Unable to identify phonetic and semantic errors in sentences

Describe attention impairment & language in specific language impairment and dyslexia

Specific Language Impairment (SLI)

Developmental language disorder

Impacts phonological, semantic, syntactic processing

SLI → sustained attention deficit

SLI children are worse at sustaining attention than non-SLI children

IQ controlled for

Dyslexia

Visual attention deficit predicts poor reading

🧩 Language and attention share neural networks

Describe the fixations of eye tracking regarding attention and reading (3)

Saccades (eye motions) → spatial attention shifts

Fixations depend on:

Word frequency

Grammatical category

Phonological complexity

Describe the two models that show attention is influenced by linguistic information

🧩 Serial Processing

Word-by-word

Attention shift occurs after processing is completed

E-Z Reader model

🧠 Parallel Processing

Processes several words at once

Simultaneous integration of information

SWIFT model

SUMMARY

1. Language Acquisition

RJA = Responding Joint Attention

IJA = Initiating Joint Attention

Social-Cognitive Model:

Social cognition → RJA & IJA

Attention-Systems Model:

RJA + IJA → supports social cognition

Involves frontal and posterior attention systems

2. Language Processing

Automatic vs. Controlled Processing

Selective Attention

ERP Components:

MMN: automatic

N400: controlled, semantic processing

P600: controlled, syntactic reanalysis

fMRI findings:

Brain areas for attention: alerting, orienting, executive

Selective attention → Temporal lobe (BA 38)

Impairments:

Parkinson’s → attention/language deficit

SLI → sustained attention deficit; Dyslexia → visual attention deficit

Reading:

Serial vs. Parallel models (E-Z Reader vs. SWIFT)

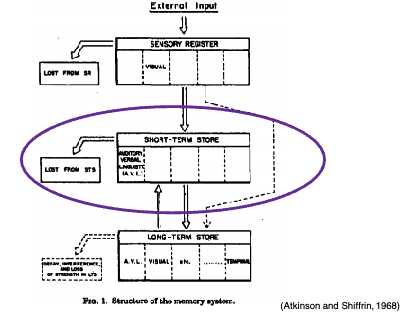

Describe the 3 components of the multi-store model of memory (Atkinson & Shiffrin, 1968)

🧠 1. Sensory Register (SR)

Briefly stores raw sensory input (mainly visual)

Other modalities (auditory, tactile) are not well understood here

Rapid decay unless attended to

🔄 2. Short-Term Store (STS) / Short-Term Memory

Receives info from SR and long-term store (LTM)

Acts as a workspace for:

Reasoning

Comprehension

Information is fragile – quickly decays or is lost unless rehearsed

Key for language understanding and production

📚 3. Long-Term Store (LTM)

More stable, but modifiable

Stores semantic, procedural, and episodic memories

Info is retrieved to STS when needed

Less info decay over time

Explain the support for the Short-Term Memory – Long-Term Memory distinction (2)

Patients with impaired long-term learning (LTM deficits) had unaffected STM, suggesting that STM and LTM are separate systems.

Conduction aphasia patients

STM impaired, LTM intact

Impoverished speech repetition, but patients could still paraphrase, implying LTM supports semantic content even if STM fails.

Explain the paradox of the Short-Term Memory – Long-Term Memory distinction

STM ≠ Working Memory (WM) (Atkinson & Shiffrin, 1968)

But later research shows:

WM = the interaction of different complex cognitive tasks (phonological loop, central executive).

Impaired STM affects complex cognition (cognitive impairment), suggesting it is more deeply involved than initially thought.

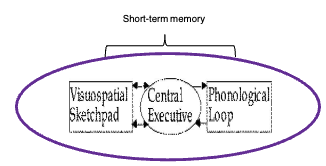

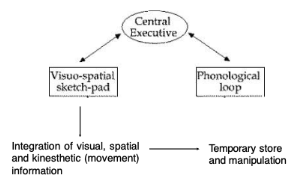

What are the 3 components of working memory? → Baddeley and Hitch 1974

Central Executive

The control system that supervises attention, planning, and coordination.

It directs information to the two “slave systems” below.

Phonological Loop

Deals with verbal and auditory information (e.g., language, speech, sounds).

Key for language acquisition and inner speech ("talking to yourself").

Visuospatial Sketchpad

Handles visual and spatial information (e.g., mentally rotating an object, navigation).

Describe how the experiment with healthy adults supported the working memory model by Baddeley and Hitch 1974

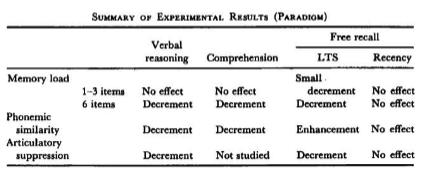

This image summarizes experimental support for Baddeley and Hitch's Working Memory Model, particularly from research with healthy adults. The table shows how different factors affect tasks like verbal reasoning, comprehension, long-term storage (LTS), and recency effects.

Key Findings from the Table: Memory Load

1–3 items: No effect on reasoning or comprehension.

6 items: Causes performance decrements in both.

Phonemic Similarity

Words that sound similar impair:

Verbal reasoning and comprehension (→ Decrement).

But enhance long-term storage.

Articulatory Suppression

Inhibiting internal speech (e.g., saying “the, the, the…” while doing a task):

Leads to decrements in reasoning and LTS performance.

Not studied for comprehension.

Interpretation:

These findings support the idea that working memory has limited capacity and relies heavily on verbal rehearsal.

The phonological loop is sensitive to sound-based interference.

Articulatory suppression disrupts the phonological loop, which is critical for verbal tasks.

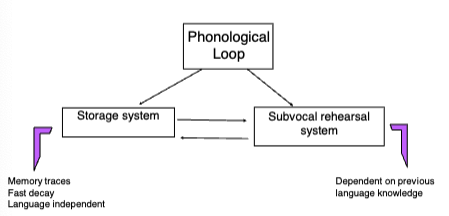

Describe the 2 components of the phonological loop in the working memory by Baddeley and Hitch 1974

It's responsible for temporarily storing and manipulating verbal and auditory information.

1. Storage System

Holds memory traces (e.g., sounds, words).

Information decays quickly (a few seconds).

Language independent: stores sounds even if they aren't fully understood.

2. Subvocal Rehearsal System

Refreshes the decaying memory traces through silent repetition (like repeating a phone number to yourself).

Language dependent: relies on previously learned phonological and linguistic knowledge to rehearse.

Summary:

The phonological loop allows us to retain verbal info briefly.

It's crucial for language acquisition, reading, and learning new words, especially in early development.

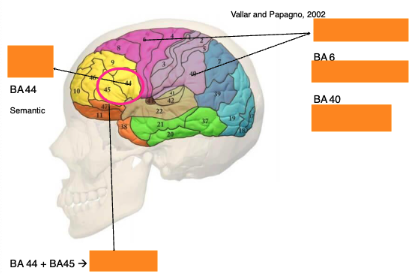

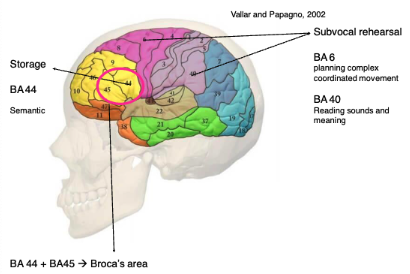

Describe the 3 aspects of phonological loop → working memory model

🔊 Retention depends on acoustic or phonological features

🔁 Similarity effect

It's harder to recall similar-sounding words (e.g., man, mad, mat...) than dissimilar ones.

Confusion arises because of overlapping phonological traces.

🧠 Similarity in meaning helps learning for long-term memory, not immediate recall

Words with related meanings are easier to learn over time, but this doesn't help with immediate recall.

📏 Word-length effect

Short words are recalled more easily than long words:

Shorter words require less rehearsal time and are less prone to decay.

Longer words increase risk of forgetting or rehearsal errors.
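The word-length effect can be made concrete with a rough calculation. The sketch below assumes a fixed rehearsal window of about 2 seconds, a commonly cited estimate from the word-length literature that is not stated in this guide, and the articulation times are hypothetical values chosen only for illustration. The function `estimated_span` is likewise made up for this example.

```python
def estimated_span(seconds_per_word: float, rehearsal_window_s: float = 2.0) -> int:
    """Number of words that can be rehearsed before their traces decay.

    Assumption: a trace survives only if it is refreshed within the
    rehearsal window (about 2 seconds here).
    """
    if seconds_per_word <= 0:
        raise ValueError("seconds_per_word must be positive")
    return int(rehearsal_window_s // seconds_per_word)

# Hypothetical articulation times: short words 0.25 s, long words 0.5 s.
print("short words:", estimated_span(0.25))  # 8
print("long words:", estimated_span(0.5))    # 4
```

The point is the direction of the effect: words that take longer to say leave room for fewer items in each rehearsal cycle, so fewer of them are recalled.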

🟨 Storage Component

BA 44 (part of Broca's area):

Involved in semantic processing and temporary storage of verbal material.

Linked to phonological short-term memory.

🔁 Subvocal Rehearsal Component

BA 6:

Associated with motor planning.

Helps in coordinating silent articulation or subvocal rehearsal.

BA 40:

Involved in phonological processing, particularly reading and interpreting sound-based input.

Describe the 3 core functions of the phonological loop → WMM

Sentence Comprehension

Example: Patient PV showed difficulty with long sentences.

Suggests the phonological loop is crucial for holding and manipulating verbal information over short durations.

Facilitates Language Acquisition & Learning

Measured via non-word repetition tasks:

Impaired in SLI (Specific Language Impairment) children.

Predicts vocabulary growth in typically developing children.

Correlates with second language (L2) learning success.

Subvocalization (Silent verbal rehearsal)

Supports:

Action control

Cognitive switching

Strategic control

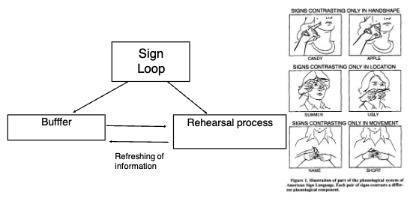

Describe Deafness and the Sign Loop

Study Overview:

Participants: 24 users of American Sign Language (ASL).

Task: Recall visually presented signs.

Conditions: Suppressed (no rehearsal) vs. not-suppressed (rehearsal allowed).

Stimuli: similar vs dissimilar signs

Key Findings:

Worse recall in the suppressed condition → shows importance of rehearsal.

A similarity effect was found:

Similar signs were harder to remember.

Mirrors the phonological similarity effect seen in hearing individuals.

Describe the 2 components of the sign loop

Buffer:

Temporarily stores manual signs (just like phonological loop stores sound-based info).

Holds visual-spatial linguistic information.

Rehearsal Process:

Actively refreshes the buffer, keeping sign information available.

Involves covert or overt manual rehearsal (similar to subvocal rehearsal in speech).

Refreshing of Information:

The loop allows maintenance of signs over short periods, enabling tasks like sign-based sentence repetition, learning new signs, or recalling signed sequences.

Describe the visuospatial sketchpad of the WMM

What is the Visuospatial Sketchpad?

A temporary store used for:

Visual information (shapes, colors)

Spatial information (locations, directions)

Kinesthetic (movement-based) input

Controlled and coordinated by the Central Executive.

🔁 Functions

Storage and manipulation of visual/spatial data

Enables mental imagery, navigation, and tracking movement

Supports problem-solving in tasks like mental rotation or spatial planning

🧪 Key Evidence (Baddeley et al., 1973)

Spatial tracking (following a moving dot) disrupts visual memory but not verbal memory → shows a distinct system from the phonological loop.

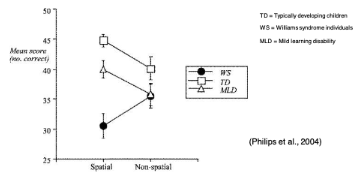

How does Williams Syndrome (WS) show a distinctive pattern in visuospatial sketchpad functioning?

Difficulty in comprehending spatial syntax (ex. understanding "the cat is under the table")

🧬 Williams Syndrome: Cognitive Profile

Unusual pattern of learning difficulties

Preserved verbal skills

Impaired visual processing

📊 Graph Explanation

Compares spatial vs non-spatial sentence comprehension

Three groups:

TD = Typically Developing children

WS = Williams Syndrome individuals

MLD = Mild Learning Disability

Key Result:

WS group performs much worse than TD and MLD on spatial syntax tasks, but is closer in non-spatial syntax.

Indicates a specific visuospatial processing deficit, not general cognitive impairment.

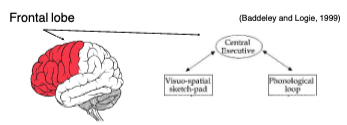

Describe 5 functions of the central executive and its cognitive significance → WMM

Attentional control of working memory: Directs attention and prioritizes tasks.

Coordinates the phonological loop and visuospatial sketchpad.

Combines short-term storage and active processing.

Influences language comprehension capacity by managing competing information.

The main factor in individual differences in working memory span.

Most important for general cognition, since it integrates and regulates mental resources → multitasking

Located in the Frontal Lobe → Crucial for high-level executive functions

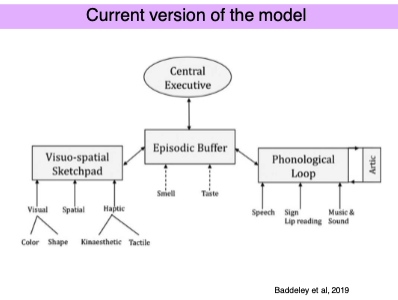

Describe the episodic buffer in WMM

🧩 Episodic Buffer Overview

Bridges the visuo-spatial sketchpad, phonological loop, and long-term memory (LTM).

Controlled by the Central Executive.

🧠 Key Functions

Integrates visual and verbal information into a multidimensional representation in LTM

Provides extra storage capacity beyond the phonological loop and sketchpad.

Retention of prose passages: Requires binding of sequential information (words, meaning, sentence structure).

Amnesic patients: Show impaired performance due to limited use of the episodic buffer.

What are 5 key characteristics of the episodic buffer → WMM

Limited capacity: Can hold only a finite amount of information at once.

Controlled by the Central Executive: Does not operate independently.

Stores information: Temporarily integrates and holds information from multiple sources.

Multimodal integration: Combines visual, spatial, verbal, and long-term memory inputs into coherent episodes.

Foundation of conscious awareness: Enables us to be aware of bound, meaningful experiences (remembering a movie scene with dialogue, actions, and emotions).

🧠 Core Components:

Central Executive

Oversees attention, coordination, and control of the system.

Manages integration and switching between modalities.

Visuo-spatial Sketchpad

Handles visual, spatial, and haptic (touch-related) information.

Includes subtypes like color, shape, kinesthetic (movement), and tactile data.

Phonological Loop

Processes auditory and verbal information: speech, sound, sign language, lip reading, and music.

Episodic Buffer

Integrates information from all modalities (visual, spatial, auditory, etc.)

Incorporates smell and taste (new additions)

Links working memory with long-term memory to form unified experiences.

Articulation (ArtiC)

A new label here possibly indicating articulatory control, responsible for subvocal rehearsal.