3. Efficient Inference with Transformers

5 Terms

How is a 32-bit float structured?

1 bit for sign (+,-)

8-bit biased exponent => [10⁻³⁸, 10³⁸].

23-bit stored fraction (24 bits of precision with the implicit leading 1) => 7 digit precision.

=> 32 bits per weight takes too much memory for modern LLMs

![32-bit float structure](https://knowt-user-attachments.s3.amazonaws.com/d9883bb7-29f3-416a-99e2-1bbe31b1230f.png)
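The field layout on this card can be checked directly. The following is a minimal sketch using only the standard `struct` module; the helper name `float32_fields` is made up for illustration.

```python
import struct

def float32_fields(x: float) -> tuple[int, int, int]:
    """Split x (rounded to float32) into sign, biased exponent, and fraction bits."""
    # Reinterpret the 4 bytes of the float32 encoding as an unsigned 32-bit integer.
    (bits,) = struct.unpack(">I", struct.pack(">f", x))
    sign = bits >> 31                 # 1 bit
    exponent = (bits >> 23) & 0xFF    # 8 bits, stored with a bias of 127
    fraction = bits & 0x7FFFFF        # 23 stored bits (the leading 1 is implicit)
    return sign, exponent, fraction

# -6.5 = -1.625 * 2**2, so the biased exponent is 2 + 127 = 129
sign, exponent, fraction = float32_fields(-6.5)
print(sign, exponent, bin(fraction))
```

The three widths add up to 1 + 8 + 23 = 32 bits.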

How is a 16-bit float structured?

1 bit for sign (+,-)

5-bit biased exponent => roughly [10⁻⁴, 10⁴] (largest finite value: 65504).

10-bit fraction => 3 digit precision.

=> Range is too small for LLMs

![16-bit float structure](https://knowt-user-attachments.s3.amazonaws.com/bc08519a-adab-45ca-be24-93036baca7de.png)
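To see why the float16 range and precision are limiting, here is a small sketch that round-trips values through the half-precision format. It relies on the `"e"` format code of Python's `struct` module; the helper name `to_float16` is an assumption for illustration.

```python
import struct

def to_float16(x: float) -> float:
    """Round x to the nearest half-precision value and return it as a Python float."""
    return struct.unpack("<e", struct.pack("<e", x))[0]

largest = to_float16(65504.0)   # largest finite float16 value, survives unchanged
coarse = to_float16(0.1)        # only 10 fraction bits survive, so 0.1 is approximated
tiny = to_float16(1e-8)         # far below the smallest subnormal, becomes 0.0

try:
    to_float16(1e5)
    overflowed = False
except OverflowError:
    # struct refuses to pack values that exceed the float16 range
    overflowed = True

print(largest, coarse, tiny, overflowed)
```

Values such as large activations or very small gradients fall outside this window, which is the range problem the card refers to.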

How is a bfloat16 structured?

Idea: Fewer bits for precision, more for range

1 bit for sign (+,-)

8-bit biased exponent => [10⁻³⁸, 10³⁸].

7-bit fraction => 2 digit precision.

![bfloat16 structure](https://knowt-user-attachments.s3.amazonaws.com/39a88922-76c4-45e5-a320-b42df8ca96e8.png)
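Because bfloat16 shares the sign and exponent layout of float32, it can be emulated by keeping only the top 16 bits of a float32 encoding. The sketch below does exactly that; note that it truncates instead of rounding to nearest, which is a simplification, and `to_bfloat16` is a hypothetical helper name.

```python
import struct

def to_bfloat16(x: float) -> float:
    """Emulate bfloat16 by keeping only the top 16 bits of the float32 encoding."""
    (bits,) = struct.unpack(">I", struct.pack(">f", x))
    bits &= 0xFFFF0000  # keep sign, 8 exponent bits, 7 fraction bits (truncation)
    return struct.unpack(">f", struct.pack(">I", bits))[0]

big = to_bfloat16(1e38)     # still representable: same exponent range as float32
coarse = to_bfloat16(0.1)   # only 7 fraction bits remain
print(big, coarse)
```

The large value keeps its magnitude, while 0.1 is only accurate to about two to three decimal digits.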

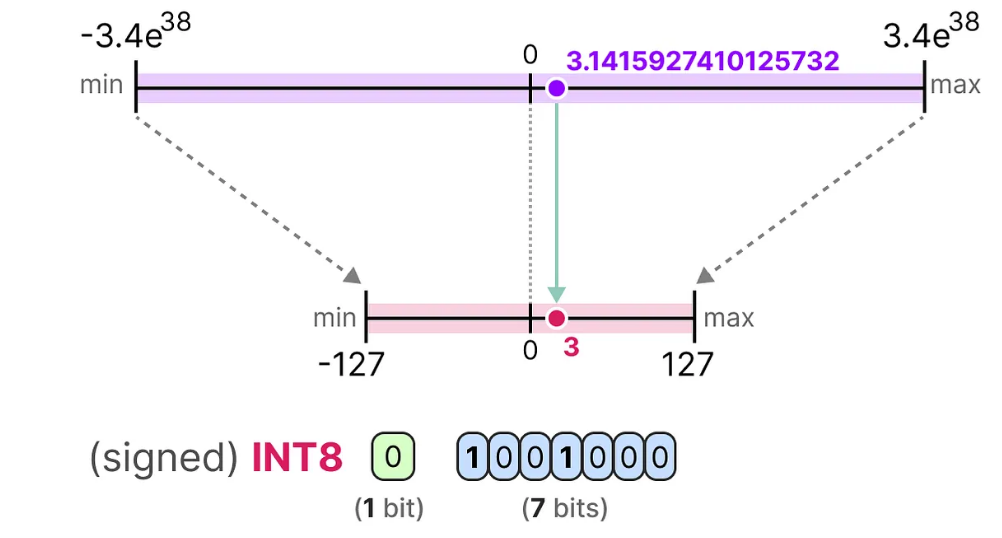

What is the idea behind Quantizing weights?

Map float values to int8 values (256 distinct values; 255 if restricted to the symmetric range [-127, 127]).

Uses half as much space as bfloat16.

int8 operations can be computed much faster (hardware acceleration).

→ Introduces small rounding errors, but no big difference in model quality.

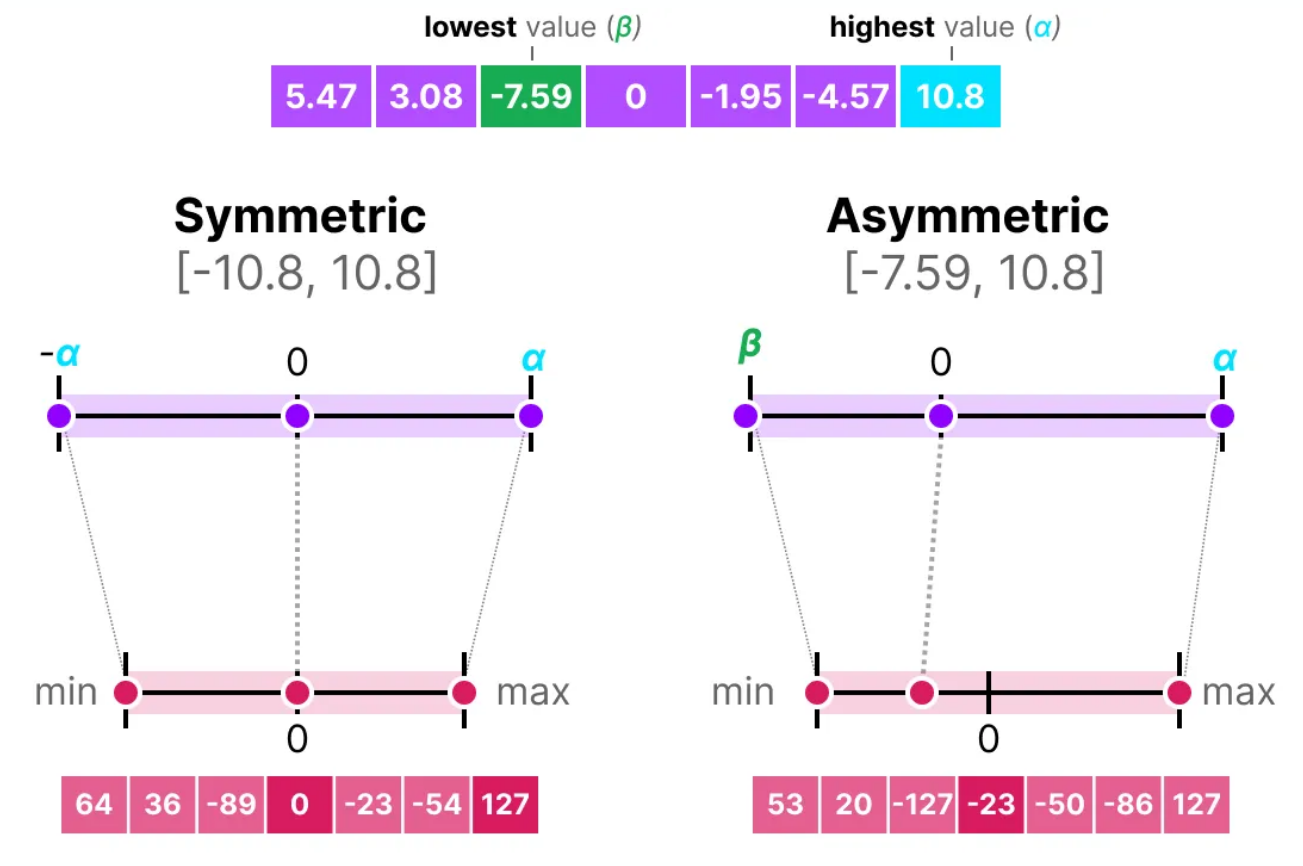
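The mapping from floats to int8 can be sketched as follows. This assumes absmax scaling (the largest absolute weight maps to 127), which is one common choice and not necessarily the scheme the course uses; the function names and the sample weights are made up for illustration.

```python
def quantize_absmax(weights: list[float]) -> tuple[list[int], float]:
    """Map floats to integers in [-127, 127] using a single scale factor."""
    # The "or 1.0" only guards against division by zero for all-zero input.
    scale = (max(abs(w) for w in weights) / 127) or 1.0
    return [round(w / scale) for w in weights], scale

def dequantize(q: list[int], scale: float) -> list[float]:
    """Recover approximate float values from the int8 codes."""
    return [v * scale for v in q]

weights = [0.42, -1.27, 0.05, 0.9]   # hypothetical weight values
q, scale = quantize_absmax(weights)
restored = dequantize(q, scale)

# The rounding error per weight is at most half a quantization step.
max_error = max(abs(w - r) for w, r in zip(weights, restored))
print(q, scale, max_error)
```

Each weight is stored in one byte instead of the two bytes bfloat16 needs, at the cost of the rounding error measured in `max_error`.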

Symmetric Quantization vs. Asymmetric Quantization

Symmetric Quantization:

Zero points of the float range and the quantized range match (float 0.0 maps to integer 0).

Range is symmetric around zero: min = -max.

Asymmetric Quantization:

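Zero points do not match: float 0.0 maps to a non-zero integer (the zero point).

More precision than symmetric when the values are not centered on zero, because the full integer range is used.

=> Both have problems with outliers, which stretch the range and leave less precision for the remaining values. Can be mitigated by clipping weights to a pre-determined range.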
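The difference between the two schemes, and the effect of clipping, can be illustrated with a small sketch. Everything here is an assumption for illustration: the function names, the sample values, the clip limit of 1.0, and the specific zero-point convention (`q = round(v / scale) + zero_point`). Both functions return the dequantized values so the errors can be compared directly.

```python
def quantize_symmetric(values, clip=None):
    """Symmetric int8 round trip: float 0.0 maps to integer 0, range is [-limit, limit]."""
    limit = clip if clip is not None else max(abs(v) for v in values)
    scale = limit / 127
    # Values beyond the limit saturate at -127 or 127 (this is the clipping).
    q = [max(-127, min(127, round(v / scale))) for v in values]
    return [x * scale for x in q]

def quantize_asymmetric(values):
    """Asymmetric int8 round trip: [min, max] is stretched over the full [-128, 127]."""
    lo, hi = min(values), max(values)
    scale = (hi - lo) / 255
    zero_point = round(-lo / scale) - 128   # integer code that represents float 0.0
    q = [max(-128, min(127, round(v / scale) + zero_point)) for v in values]
    return [(x - zero_point) * scale for x in q]

def max_error(original, restored):
    """Largest absolute error; zip stops at the shorter list."""
    return max(abs(o - r) for o, r in zip(original, restored))

# All-positive values: symmetric quantization wastes the negative half of the range.
values = [0.0, 0.1, 0.25, 0.5, 0.75, 1.0]
sym_err = max_error(values, quantize_symmetric(values))
asym_err = max_error(values, quantize_asymmetric(values))

# One outlier stretches the range; clipping to 1.0 restores precision for the rest.
with_outlier = values + [20.0]
unclipped_err = max_error(values, quantize_symmetric(with_outlier))
clipped_err = max_error(values, quantize_symmetric(with_outlier, clip=1.0))

print(sym_err, asym_err, unclipped_err, clipped_err)
```

The clipped variant represents the outlier itself badly (it saturates at the limit), which is the trade-off accepted in exchange for lower error on the remaining values.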