L6: Acquisition of linguistic sounds


Define a baby/newborn, infant, toddler

Baby/newborn: 0-2 months

Infant: 3-12 months

Toddler: 12-36 months

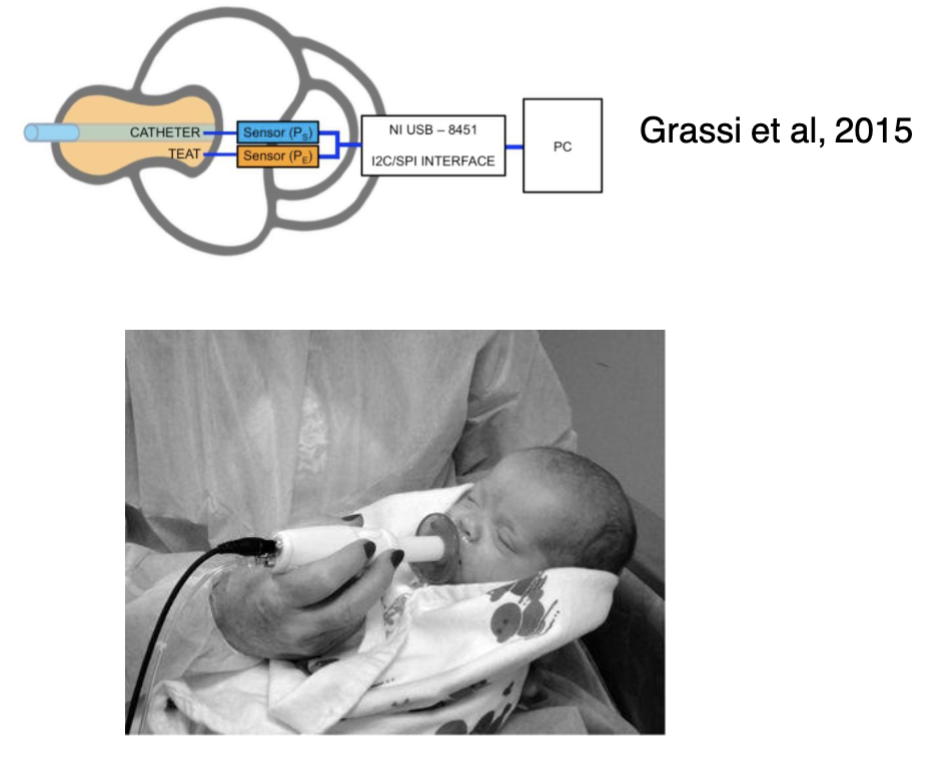

What is high amplitude sucking (HAS)?

👶 Target Age: Newborns to 3 months old

🧪 What it Measures:

Auditory discrimination and interest in sounds

Changes in sucking rate (amplitude or frequency) in response to auditory stimuli

⚙ How it Works:

Infant sucks on a specially designed pacifier connected to a pressure transducer.

Sucking controls sound playback.

When infants hear a novel or interesting sound, sucking rate increases.

When they habituate (get used to the sound), sucking rate decreases.

📊 Applications:

Detects infants’ ability to discriminate phonemes, rhythms, and even speech vs. non-speech sounds.
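The habituation logic above can be sketched in a few lines of Python. This is only an illustration, not the scoring procedure of any particular study: the function name, the sucks-per-minute counts, and the 20% recovery threshold are all hypothetical.

```python
def dishabituated(pre_switch, post_switch, threshold=0.20):
    """Return True if the mean sucking rate after the stimulus change exceeds
    the mean rate just before it by more than `threshold` (a fraction).
    The threshold value is an assumption chosen for illustration."""
    baseline = sum(pre_switch) / len(pre_switch)
    recovery = sum(post_switch) / len(post_switch)
    return recovery > baseline * (1 + threshold)


# Hypothetical sucks-per-minute counts (invented, not real data).
habituated = [30, 28, 25]     # rate declines as the sound becomes familiar
after_new_sound = [38, 40]    # rate rebounds: the change was detected
after_same_sound = [24, 25]   # rate stays low: nothing new was heard

print(dishabituated(habituated, after_new_sound))
print(dishabituated(habituated, after_same_sound))
```

A rebound after the switch is read as discrimination; a flat rate is read as no detected change.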

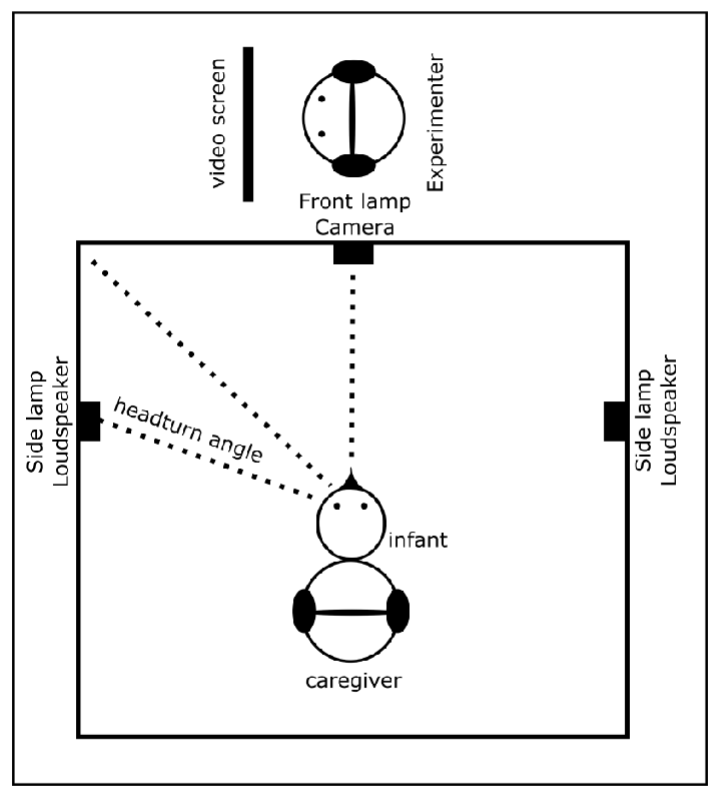

Describe the headturn paradigm

👶 Age Range: 4 to 12 months

Infants must be able to hold their head steady

🎯 Purpose: To test whether infants can detect changes in sound, especially phonetic contrasts that may or may not be present in their native language.

🧪 How It Works:

Infant sits on caregiver's lap facing forward.

A sound plays repeatedly from one side (e.g., ba ba ba...).

Suddenly, the sound changes (e.g., to da da da).

If the infant detects the change, they will typically turn their head toward the new sound source.

Head turns are recorded using hidden cameras and rewarded (e.g., with a light or animated toy).

🔍 What It Measures:

Discrimination of phonemes

Sensitivity to sound contrasts

Perceptual narrowing: Infants lose ability to distinguish unfamiliar phonemes (usually by ~10–12 months)

Describe the 3 foundational cognitive mechanisms of language acquisition

1. General Acoustic Perception

Present in infants from birth

Ability to perceive a broad range of sound features (e.g., pitch, duration, rhythm)

Shared with many animal species

Basis for distinguishing phonemes (like "b" vs. "p")

2. Computational Abilities

Seen in non-human animals (e.g., monkeys, rats)

Includes:

Statistical learning (tracking patterns or frequencies)

Sequence processing

Important for detecting word boundaries and grammar rules

3. Social Interaction

Particularly emphasized in humans and social mammals

Necessary for:

Joint attention

Turn-taking

Intentional communication

Supports motivation and contextual understanding

Together, these mechanisms illustrate how biological predispositions and social environments interact in the emergence of language

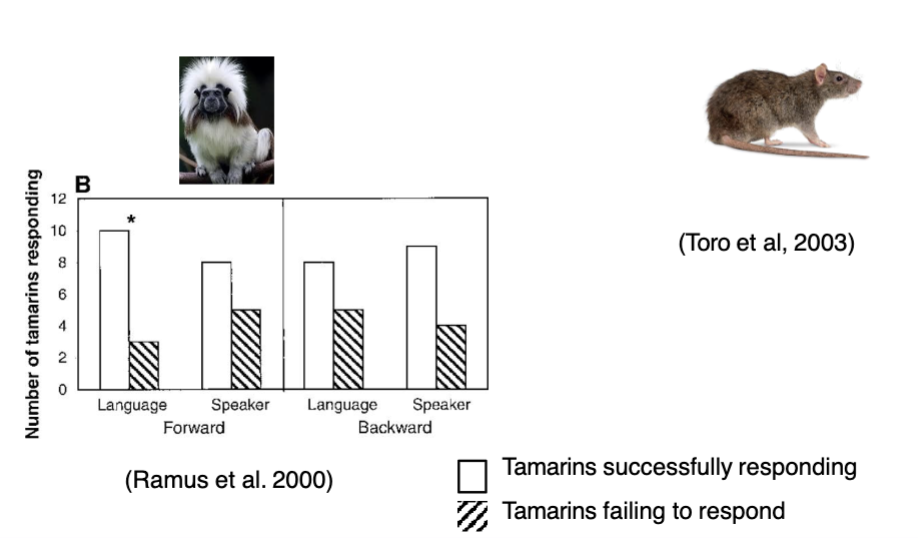

Describe the animal studies with tamarins and rats on Acoustic Perception in Animals

Humans can’t hear everything → range 20 Hz – 20,000 Hz

Humans are tuned to hear acoustic features critical to natural language

🔹 Animal Studies

Tamarins:

Responded more strongly to forward speech than backward speech

Show sensitivity to prosody and rhythm in human language

Graph shows more tamarins successfully responded (white bars) in the forward language condition

Rats:

Showed the ability to discriminate syllable patterns

Suggests basic acoustic pattern detection exists even in non-primate mammals

🔹 Conclusion

Some animals can perceive linguistically relevant acoustic properties, even without language.

Suggests evolutionary precursors to language may lie in general auditory processing abilities.

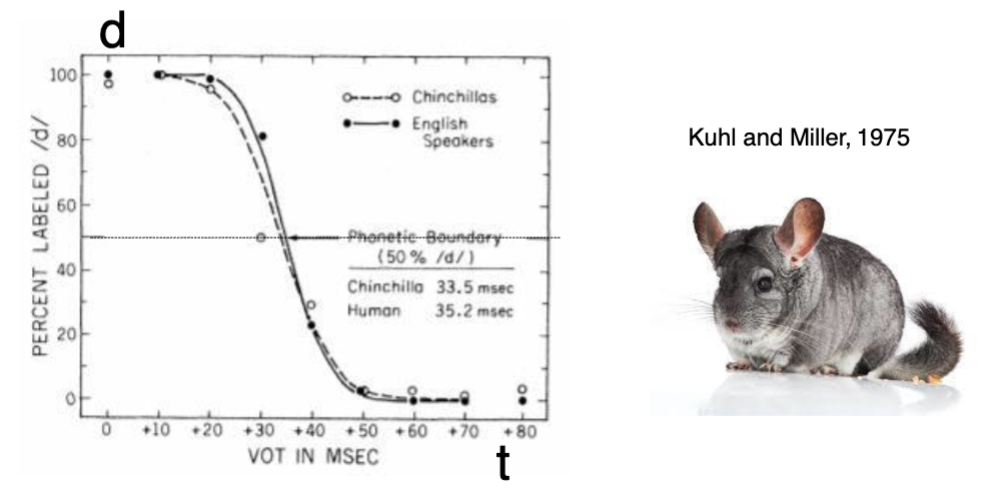

Describe the results of the study done on Chinchillas and Humans for categorical perception → acoustic perception in animals

Study: Kuhl & Miller (1975)

🧪 Experiment Overview

Subjects: Chinchillas and English-speaking humans

Stimuli: Voice onset time (VOT) continuum between /d/ and /t/ sounds

Task: Determine the phoneme boundary (where /d/ becomes /t/)

📊 Graph Interpretation

X-axis: VOT in milliseconds (msec)

Y-axis: % labeled as /d/

Phoneme boundary: At 50% /d/ labeling

Chinchillas: ~33.5 msec

Humans: ~35.2 msec

🧩 Key Finding

Chinchillas showed categorical perception of VOT contrasts similar to that of humans.

Suggests that categorical perception is not uniquely human, but may reflect general auditory processing abilities present in mammals.

This evidence supports the idea that basic building blocks of speech perception may have evolved from shared auditory processing mechanisms in animals.
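The phoneme boundary is read off the labeling curve as the VOT where /d/ responses cross 50%. A minimal sketch of that calculation, using linear interpolation between neighbouring points, is shown here. The function name and the data points are hypothetical and are not the values from the study.

```python
def boundary_50(vot_ms, pct_d):
    """Linearly interpolate the VOT (ms) at which /d/ labeling falls to 50%.
    Assumes pct_d is listed in order of increasing VOT."""
    points = list(zip(vot_ms, pct_d))
    for (x0, y0), (x1, y1) in zip(points, points[1:]):
        if y0 >= 50 >= y1 and y0 != y1:
            return x0 + (y0 - 50) * (x1 - x0) / (y0 - y1)
    return None  # the curve never crosses 50%


# Invented labeling curve: mostly /d/ at short VOT, mostly /t/ at long VOT.
vot = [0, 10, 20, 30, 40, 50, 60, 70, 80]
pct = [100, 100, 98, 80, 20, 5, 0, 0, 0]

print(boundary_50(vot, pct))  # 35.0 for these made-up points
```

Running the same calculation on each species' curve gives the two boundary values that are compared in the graph.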

What are 3 things infants must learn for language → acoustic perception in humans

👶 What Infants Must Learn

Distinguish phonemes that carry meaning (e.g., /k/ vs. /b/).

Recognize allophones: Learn that /kɑː/ and /kɑʊ/ can be variations of the same phoneme.

Adapt to variations in: Sex, age, dialect, speech rate, phonetic context

Phonemes: Smallest sound units that distinguish meaning in a language (e.g., /k/ vs. /b/ in /kɑʊ/ vs. /bɑʊ/).

Phones / Phonetic Units: Variations of phonemes influenced by accent or context

Example:

UK pronunciation: /kɑː/

US pronunciation: /kɑʊ/

These are allophones—different sounds that don’t change meaning in context.

🧬 Innate Capacity

Humans are born with the ability to discriminate phonetic sounds.

This skill is refined with experience and exposure to a native language.

Describe the impact of environment input on early auditory and language development (infants)

Even with limited auditory exposure, infants develop:

General auditory mechanisms (infants)

Specialized mechanisms (adults)

👶 Prenatal Sensitivity (30 weeks of pregnancy)

Fetuses at 30 weeks can hear airborne sounds.

These sounds lead to:

Heart rate acceleration

Body movements

📌 Implication:

The fetus is already responding to sound patterns before birth.

Language learning starts in utero, laying a foundation for postnatal language acquisition.

Describe the experiment on infants for rhythmic perception

2-day-old infants exposed to Spanish and English.

Measurement: Duration of sucking bursts per second over an 18-minute session, divided into three 6-minute periods.

Findings:

Native language stimuli led to sustained or increased sucking activity, indicating more interest or familiarity.

Foreign language stimuli led to a decline in activity, especially toward the final 6-minute period.

Conclusion: Infants are born with a sensitivity to the rhythmic patterns of their native language, supporting the idea of early auditory learning or prenatal exposure → can differentiate between native and foreign languages based on rhythm

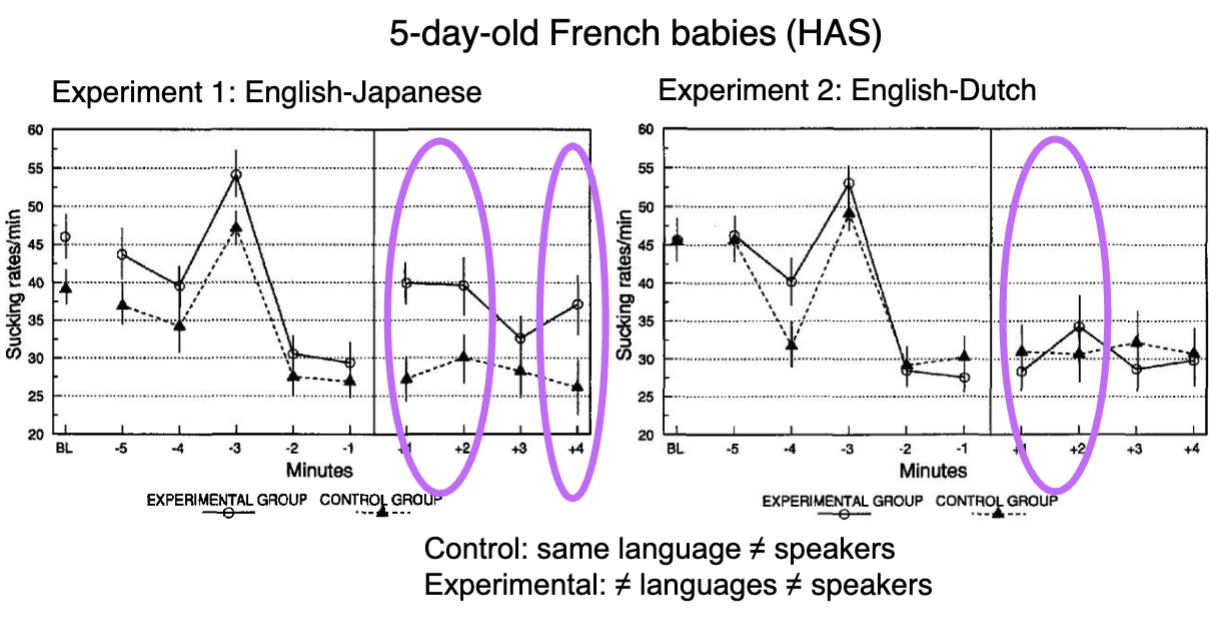

Describe experiment 1 (English-Japanese) and experiment 2 (English-Dutch) done with 5-day-old French babies → rhythmic perception

Experiment 1 (English-Japanese):

Languages belong to different rhythmic classes (stress-timed vs. mora-timed).

Experimental group (different language and speaker): a significant increase in sucking rate when language changed.

Control group (same language, different speaker): no significant change.

→ Indicates infants can detect rhythmic differences across languages.

Experiment 2 (English-Dutch):

Both languages are stress-timed (same rhythmic class).

No significant change in sucking rates after the language switch.

→ Infants cannot discriminate between languages of the same rhythmic class at 5 days old.

Key Point:

Discrimination between rhythmic classes is present at birth, but discrimination within a rhythmic class only emerges after ~4 months.

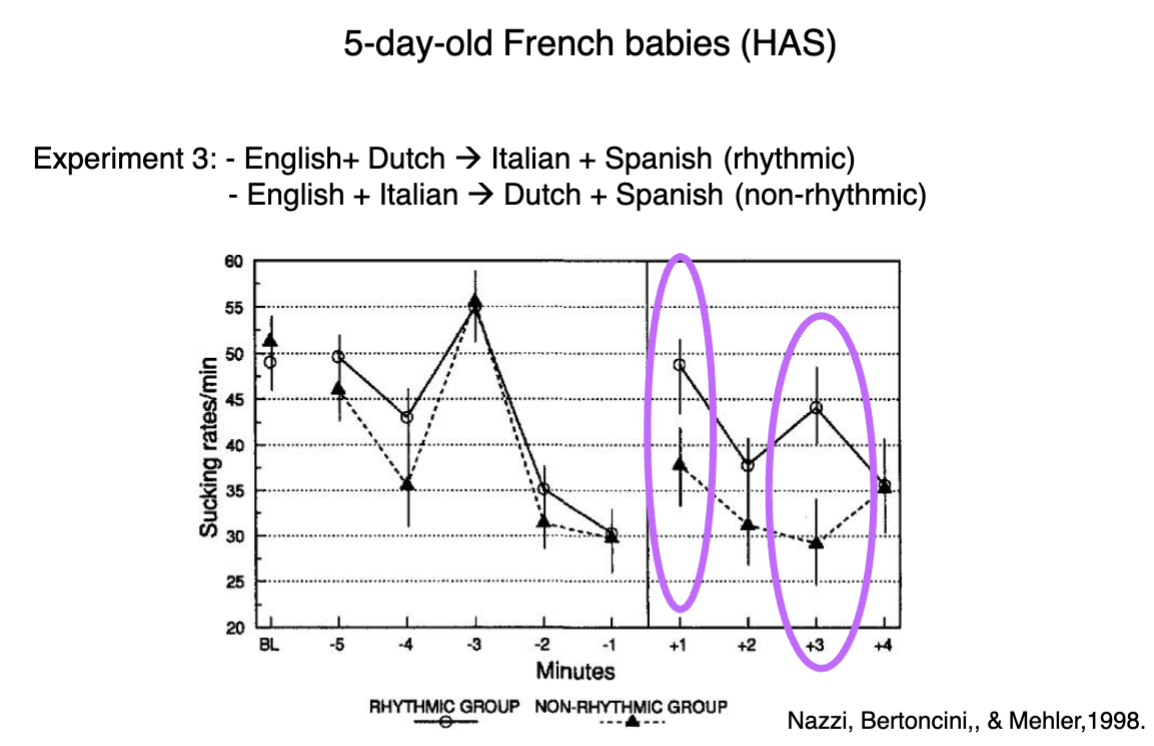

Describe experiment 3 on rhythmic (English+Dutch → Italian+Spanish) and non-rhythmic (English+Italian → Dutch+Spanish) groups done with 5-day-old French babies → rhythmic perception

Key Experimental Design:

Rhythmic group:

Language switch from English + Dutch → Italian + Spanish.

The switch crosses rhythmic classes: English and Dutch are stress-timed, while Italian and Spanish are syllable-timed.

Result: Significant increase in sucking rate, indicating discrimination based on rhythmic class.

Non-rhythmic group:

Language switch from English + Italian → Dutch + Spanish.

Each pair mixes a stress-timed language with a syllable-timed one, so the switch carries no rhythmic-class contrast.

Result: No significant increase in sucking, indicating no discrimination when rhythmic contrast is absent.

Conclusion:

Newborns are sensitive to rhythmic properties of language even before they acquire linguistic experience. This sensitivity enables them to differentiate between rhythmic classes of languages — a foundational mechanism in early language acquisition.
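The pattern across the three experiments can be summarised as a simple rule: newborns react to a switch only when the languages before and after it share no rhythmic class. The sketch below encodes that rule. The class labels follow the conventional classification, and the function name and the "no shared class" rule are simplifying assumptions made for illustration.

```python
# Conventional rhythmic-class labels for the languages in the experiments.
RHYTHM_CLASS = {
    "English": "stress-timed",
    "Dutch": "stress-timed",
    "Italian": "syllable-timed",
    "Spanish": "syllable-timed",
    "Japanese": "mora-timed",
}


def predicts_discrimination(habituation, test):
    """Predict a sucking-rate increase only when the habituation and test
    language sets have no rhythmic class in common (simplified rule)."""
    before = {RHYTHM_CLASS[lang] for lang in habituation}
    after = {RHYTHM_CLASS[lang] for lang in test}
    return before.isdisjoint(after)


print(predicts_discrimination(["English"], ["Japanese"]))                   # Experiment 1
print(predicts_discrimination(["English"], ["Dutch"]))                      # Experiment 2
print(predicts_discrimination(["English", "Dutch"], ["Italian", "Spanish"]))  # rhythmic group
print(predicts_discrimination(["English", "Italian"], ["Dutch", "Spanish"]))  # non-rhythmic group
```

The rule predicts discrimination for Experiment 1 and the rhythmic group, and none for Experiment 2 and the non-rhythmic group, matching the results above.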

What are the 6 core mechanisms involved in statistical learning in humans?

📈 Core Mechanisms Involved:

Regularity: Recognizing recurring patterns in sounds.

Probability: Estimating which sounds are likely to follow others.

Frequency distribution: Mapping how often sounds occur.

Categorical learning: Sorting input into meaningful categories (e.g. phonemes).

Transitional probability: Predicting upcoming sounds based on prior context.

Stress pattern: Using rhythm/stress to identify word boundaries.
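Transitional probability can be made concrete with a small calculation: the probability of syllable B given syllable A is the count of the pair A-B divided by the count of A. The sketch below is a hypothetical illustration; the two "words" (bida, kupo) and the syllable stream are invented.

```python
from collections import Counter


def transitional_probabilities(syllables):
    """Estimate P(next | current) = count(current, next) / count(current)."""
    pair_counts = Counter(zip(syllables, syllables[1:]))
    first_counts = Counter(syllables[:-1])  # the last syllable has no successor
    return {pair: n / first_counts[pair[0]] for pair, n in pair_counts.items()}


# Invented stream built from two made-up words, "bida" and "kupo".
stream = "bi da ku po bi da bi da ku po ku po bi da".split()
tp = transitional_probabilities(stream)

print(tp[("bi", "da")])  # within a word: 1.0
print(tp[("da", "ku")])  # across a word boundary: lower than 1.0
```

Within-word transitions are highly predictable while transitions across word boundaries are not, which is the cue that lets a learner guess where words begin and end.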

🧠 Key Takeaways on Statistical Learning:

Infants learn language by tracking statistical regularities in the speech stream.

Infants are sensitive to the frequency distribution of sounds in language.

Hebbian learning principle: “Cells that fire together, wire together” → Neural connections are strengthened by repeated co-activation, supporting learning
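The Hebbian principle can be written as a one-line update rule: the connection weight grows in proportion to the product of the two units' activity. This is the simplest textbook form, shown only as a sketch; the function name, the learning rate, and the activity values are assumptions.

```python
def hebbian_update(weight, pre, post, learning_rate=0.1):
    """Basic Hebbian rule: weight change = learning_rate * pre * post.
    The weight only grows when both units are active together."""
    return weight + learning_rate * pre * post


w = 0.0
# (pre, post) activity on four trials: co-active on the first and last only.
for pre, post in [(1, 1), (1, 0), (0, 1), (1, 1)]:
    w = hebbian_update(w, pre, post)

print(round(w, 2))  # 0.2: only the two co-active trials strengthened the link
```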

Describe categorical learning on 6-month-old infants

🧠 Categorical Learning via Statistical Input

Study setup:

👶 24 infants (6 months old)

Tested on distinguishing the speech sounds [da] and [ta]

Familiarization used a continuum of speech stimuli between [da] and [ta]

🔍 Two Conditions:

Bimodal distribution (green line)

High exposure at endpoints of the continuum

Leads to better categorization of [da] vs [ta]

Unimodal distribution (blue line)

High exposure at center of the continuum

Blurs category boundaries

📊 Outcome (Right graph):

Infants in the bimodal condition showed greater discrimination:

Longer looking time in "alternating" trials (novelty preference)

Infants in the unimodal condition treated sounds as more similar (less categorization)

🧩 Conclusion: Statistical learning → Categorical perception

Infants use frequency distributions to learn phoneme categories.
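The two familiarization conditions differ only in how exposure is spread over the continuum, which can be sketched as two frequency lists. The 8-step continuum, the counts, and the helper function below are hypothetical and chosen only to show the shape of each distribution.

```python
def count_peaks(freqs):
    """Count the modes (local maxima) in a list of exposure frequencies.
    Flat stretches are collapsed first so a plateau counts as one point."""
    collapsed = [f for i, f in enumerate(freqs) if i == 0 or f != freqs[i - 1]]
    padded = [float("-inf")] + collapsed + [float("-inf")]
    return sum(
        padded[i - 1] < padded[i] > padded[i + 1]
        for i in range(1, len(padded) - 1)
    )


# Invented exposure counts for tokens 1-8 on a [da]-[ta] continuum.
# Both conditions present the same total number of tokens (16).
bimodal = [1, 4, 2, 1, 1, 2, 4, 1]    # most exposure near the two ends
unimodal = [1, 1, 2, 4, 4, 2, 1, 1]   # most exposure in the middle

print(count_peaks(bimodal), count_peaks(unimodal))  # 2 1
```

Two peaks suggest two categories to the learner, while a single central peak suggests one.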


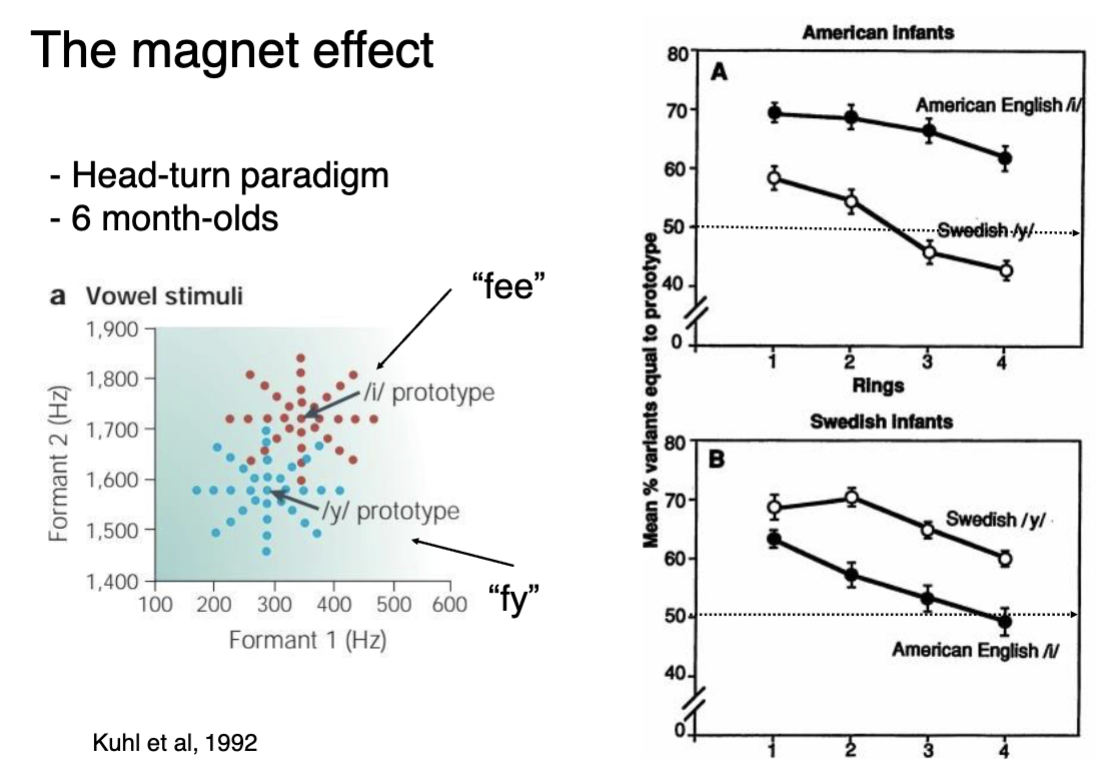

Describe the magnet effect in 6-month-olds → categorical learning

🧪 Experiment Setup

Infants: 6-month-olds

Method: Head-turn paradigm

Stimuli: Vowel sounds in the acoustic space

Focus: American English /i/ ("fee") and Swedish /y/ ("fy")

🗣 Main Concept

Infants are better at discriminating foreign vowel sounds than native prototypes because:

Prototypes act like magnets, pulling nearby sounds toward them perceptually.

This makes variations of the native sound harder to distinguish.

📊 Left Graph (Vowel Space):

Shows the acoustic distribution of stimuli.

Clusters around American /i/ (e.g. "fee") and Swedish /y/ (e.g. "fy").

📈 Right Graphs: Results

A) American Infants:

Better at discriminating Swedish /y/ (open circles)

Worse at discriminating native /i/ — magnet effect compresses the perception space.

B) Swedish Infants:

Opposite pattern: Better at discriminating American /i/ than their native /y/

🧠 Conclusion: Exposure to a language shapes auditory perception early — native vowel prototypes "magnetically" attract similar sounds, reducing discrimination.

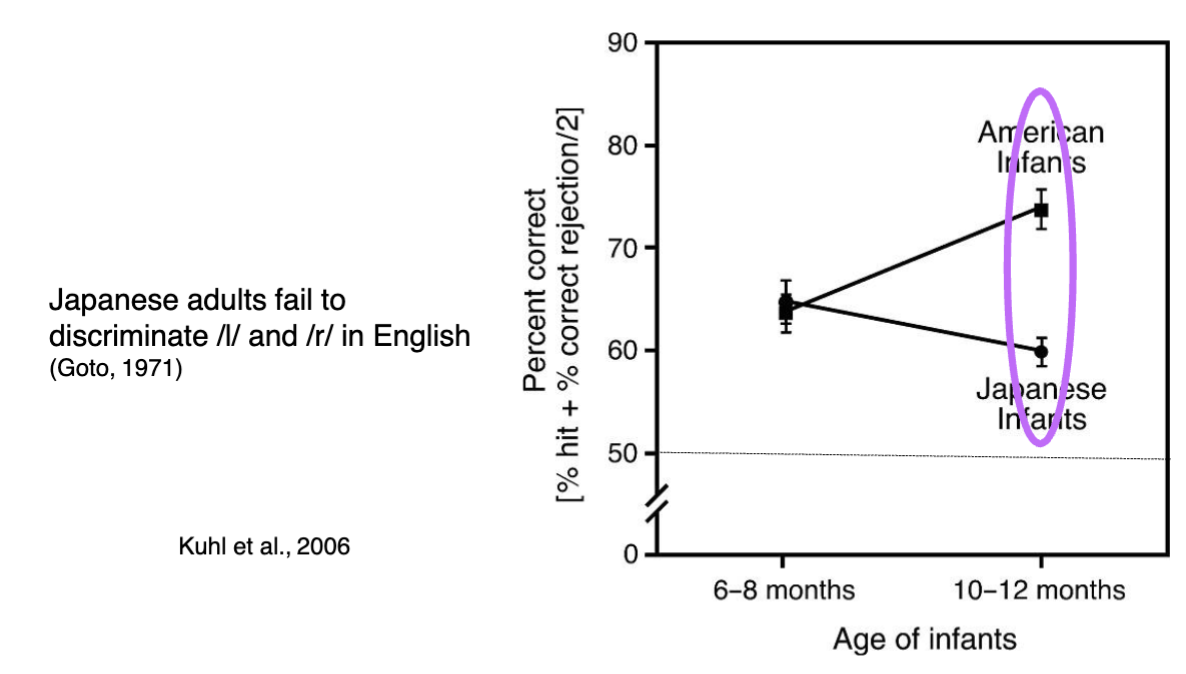

Describe language specialization for consonants, using the classic /l/ vs. /r/ discrimination study

🧪 Task:

Infants tested on ability to distinguish between the English consonants /l/ and /r/.

Measured via percent correct discrimination using head-turn paradigm.

📉 Key Findings:

6–8 months:

Both American and Japanese infants show similar ability to discriminate /l/ and /r/.

Suggests universal phonetic sensitivity early in development.

10–12 months:

American infants improve their discrimination — exposure reinforces native language contrasts.

Japanese infants decline — due to lack of /l/-/r/ distinction in Japanese, perception narrows.

📌 Takeaway:

Language experience during infancy leads to phonetic tuning: infants lose sensitivity to non-native contrasts by the end of their first year.

This phenomenon is often referred to as “perceptual narrowing” or “native language neural commitment”.

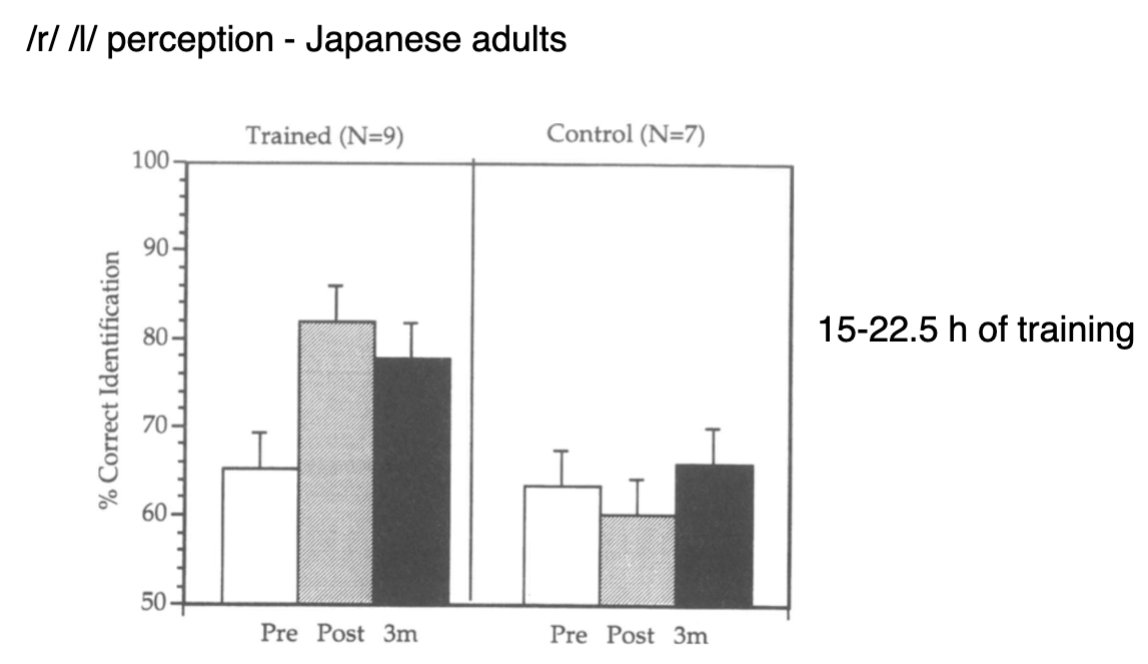

Describe how Japanese adults can significantly improve their /r/–/l/ discrimination with targeted training

🧠 Study Summary: /r/–/l/ Perception in Japanese Adults

Training duration: 15–22.5 hours

Groups:

Trained group (N=9)

Control group (N=7)

📊 Results:

Trained Group:

Pre-training: ~65% correct

Post-training: ↑ to ~82% correct

3 months later: Performance maintained (~78%)

Control Group:

No substantial change across time points.

✅ Key Insight:

Perceptual learning is possible in adulthood, even for difficult non-native contrasts (like /r/ vs. /l/), but requires focused training.

This supports the idea that native language neural commitment is not irreversible, though it becomes less flexible with age.

What are the 2 learning mechanisms of Native Language Neural Commitment (NLNC)

NLNC refers to the process by which the brain becomes tuned to the native language through early experience. This tuning:

Helps encode and process familiar input efficiently.

Makes learning a second language (L2) more difficult if it deviates from L1.

📌 Mechanisms:

Statistical learning: Infants track the frequency of sounds, syllables, and word patterns.

Prosodic learning: Sensitivity to rhythm, intonation, and stress patterns.

🌍 Developmental Path:

Early in life: Brain is universally open to all language contrasts.

With age: Brain commits to native patterns, becoming language-specific.

🚧 Consequences for L2 Learning:

L1–L2 similarity: More similarity = easier learning.

Age of acquisition: Earlier = better.

L2 exposure/use: More input = better outcomes.

What are the 4 key factors influencing second language (L2) learning outcomes?

Linguistic Levels Matter

Outcomes differ across levels (ex. phonology vs. syntax).

Ex. A learner might master L2 grammar but retain an accent

Proficiency Affects Performance

At high proficiency, L2 comprehension ≈ L1 comprehension.

Syntactic complexity is equally manageable in L1 and L2 for advanced learners

Explicit Training Can Improve Pronunciation

Especially useful for reducing foreign accent

Motivation Drives Success

Motivated learners show better L2 acquisition and maintenance

Together, these findings emphasize that neural commitment is not destiny—with the right conditions (ex. training, proficiency, and motivation), adult learners can achieve native-like outcomes in certain domains.

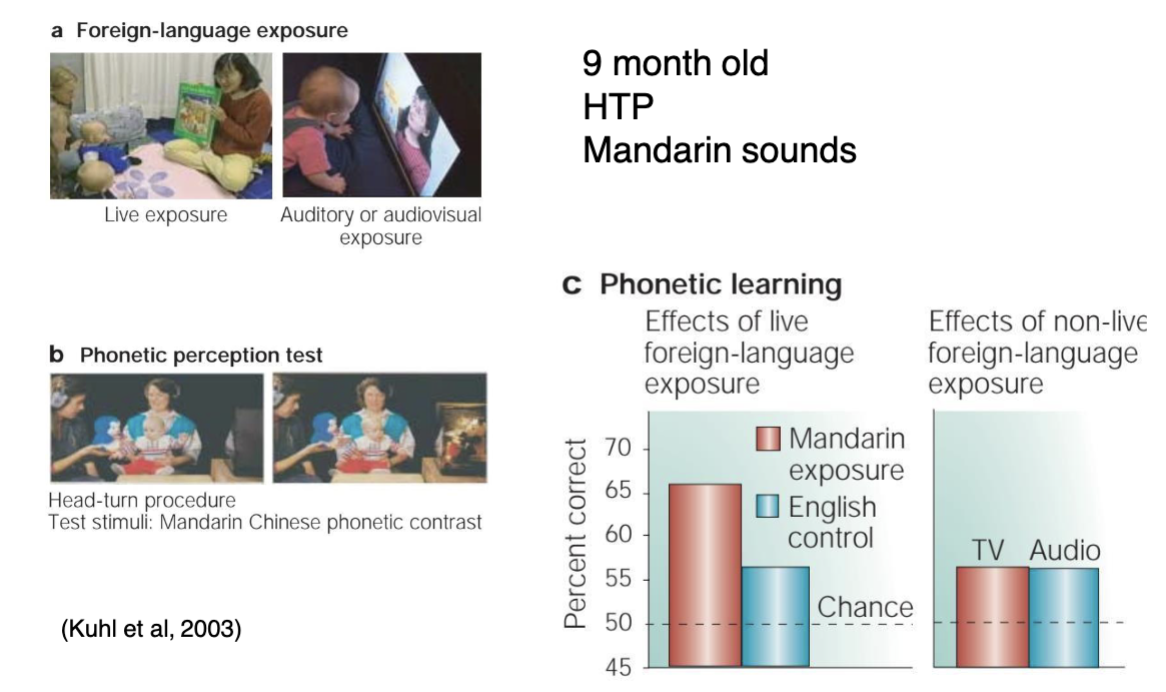

Describe the role of social interaction in early language learning

👶 Participants:

9-month-old infants

Learning Mandarin Chinese phonetic sounds

🧪 Experimental Setup:

a) Exposure Phase

Two conditions:

Live exposure: Native Mandarin speaker interacts in person with infant.

Audiovisual/TV exposure: Infants watch or hear the same material without a live speaker.

b) Testing Phase

Head-turn paradigm (HTP) used to measure phonetic discrimination (e.g., distinguishing Mandarin sounds).

📊 Results (c):

Live Mandarin exposure group showed significantly higher phonetic learning (above chance level).

TV and Audio exposure groups performed at chance level—no learning occurred.

🔍 Interpretation:

Social interaction is essential for language learning in infancy.

Passive exposure (even with the same content) is not sufficient.

Supports the idea that language learning is socially gated—humans need real social engagement to tune their phonetic system.

SUMMARY

🍼 Age Categories:

Baby: 0–2 months

Infant: 3–12 months

Toddler: 12–36 months

🔬 Experimental Paradigms:

HAS (High Amplitude Sucking) – Used with babies (0–3 months)

Headturn Paradigm – Used from 4–12 months

🧠 Core Components of Language Acquisition:

Acoustic Perception (0–6 months)

Perception of phonetic units

Sensitivity to environmental input

Rhythmic perception

Statistical Learning (6–11 months)

Categorical learning (e.g., phoneme boundaries)

Transitional probabilities and stress patterns

Specialization (~11 months)

Consonant perception

Native Language Neural Commitment (NLNC)

💡 Additional Notes:

Social interaction is a key catalyst throughout development.

Many perceptual and statistical mechanisms are not exclusive to humans.

The learning process moves from universal sensitivity to language-specific specialization.