Non-local Means Denoising and Inpainting (6.3-6.4)

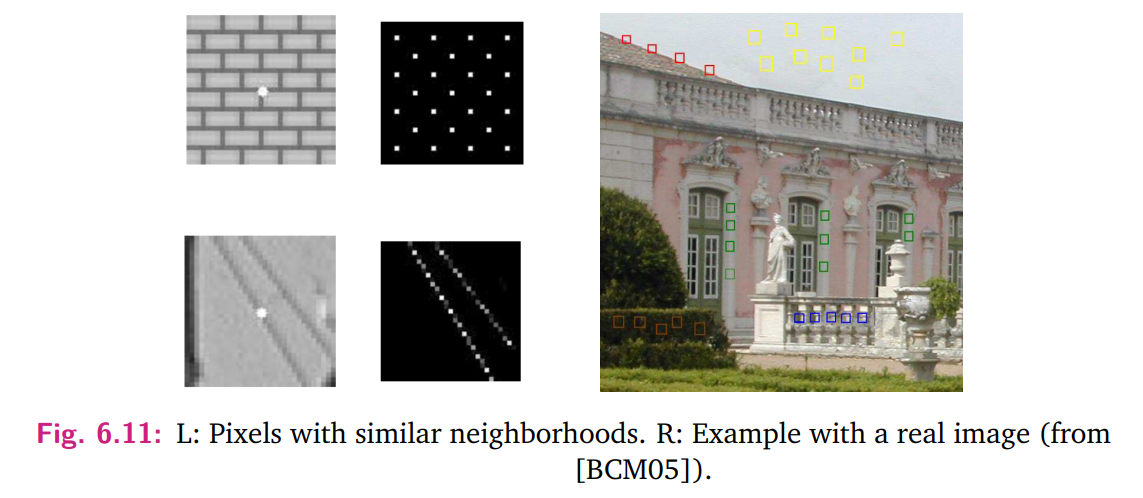

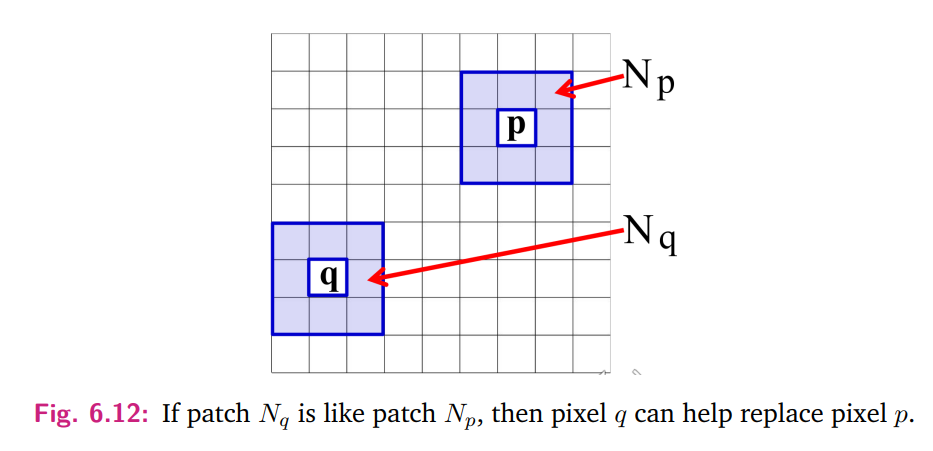

What is non-local means denoising, and how does it work?

Non-local means denoising replaces a pixel p with a weighted average of pixels taken from similar neighborhoods across the entire image. Instead of relying only on spatially nearby pixels, it identifies a set S of candidate pixels q whose neighborhoods N_q are similar to the neighborhood N_p of p. It then combines their central values using weights derived from a Gaussian-weighted neighborhood distance.

What is the formula for non-local means denoising?

The filtered value at pixel p is:

I_n(p) = \frac{1}{W} \sum_{q \in S} e^{-\frac{d(N_p, N_q)}{h^2}} I(q),

where:

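- d(N_p, N_q) is the Gaussian-weighted sum of squared differences between the neighborhoods N_p and N_q.

- h is a filtering parameter that controls how quickly the weights decay as d grows.

- W = \sum_{q \in S} e^{-\frac{d(N_p, N_q)}{h^2}} is the normalization factor, so that the weights sum to one.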

The algorithm first finds patches N_q that look similar to N_p. The formula then takes a weighted average of the central values I(q) of these patches to produce the new value of pixel p. Each contribution is weighted by how similar its patch is to the original patch N_p, as measured by d.

If the pixels of N_q differ strongly from the corresponding pixels of N_p, then d(N_p, N_q) is large, the weight e^{-d(N_p, N_q)/h^2} becomes small, and q contributes little to the result.
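The formula can be sketched directly for a single pixel. This is a minimal, unoptimized sketch. It assumes a grayscale image stored as a list of lists, a pixel p far enough from the border that its whole patch fits, and a square search window around p as the candidate set S. The function names and the default values of `patch_radius`, `search_radius`, `h` and `sigma` are hypothetical choices for illustration.

```python
import math

def gaussian_kernel(radius, sigma):
    """(2r+1) x (2r+1) Gaussian weights normalized to sum to 1."""
    k = [[math.exp(-(dx * dx + dy * dy) / (2 * sigma * sigma))
          for dx in range(-radius, radius + 1)]
         for dy in range(-radius, radius + 1)]
    total = sum(map(sum, k))
    return [[v / total for v in row] for row in k]

def patch_distance(img, p, q, radius, kernel):
    """d(N_p, N_q): Gaussian-weighted sum of squared differences."""
    (py, px), (qy, qx) = p, q
    d = 0.0
    for dy in range(-radius, radius + 1):
        for dx in range(-radius, radius + 1):
            diff = img[py + dy][px + dx] - img[qy + dy][qx + dx]
            d += kernel[dy + radius][dx + radius] * diff * diff
    return d

def nlm_pixel(img, p, patch_radius=1, search_radius=3, h=10.0, sigma=1.0):
    """I_n(p) for one pixel p = (row, col).

    Assumptions (hypothetical defaults): p lies at least patch_radius from the
    border, and S is the square search window of the given radius around p.
    """
    rows, cols = len(img), len(img[0])
    kernel = gaussian_kernel(patch_radius, sigma)
    py, px = p
    weighted_sum = 0.0  # sum over S of w(q) * I(q)
    W = 0.0             # normalization factor
    for qy in range(py - search_radius, py + search_radius + 1):
        for qx in range(px - search_radius, px + search_radius + 1):
            # Skip candidates whose patch would fall outside the image.
            if not (patch_radius <= qy < rows - patch_radius
                    and patch_radius <= qx < cols - patch_radius):
                continue
            w = math.exp(-patch_distance(img, p, (qy, qx), patch_radius, kernel) / h ** 2)
            weighted_sum += w * img[qy][qx]
            W += w
    return weighted_sum / W

noisy = [[100.0] * 7 for _ in range(7)]
noisy[3][3] = 130.0               # a single noisy pixel in a flat region
print(nlm_pixel(noisy, (3, 3)))   # pulled toward 100, strictly between 100 and 130
```

The outlier is pulled back toward its surroundings because every candidate other than p carries the background value of 100. The value 130 only enters through p itself.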

What are the design considerations in non-local means denoising?

1. Patch Size: Typical patch sizes are 5 \times 5 or 7 \times 7.

2. Search Area: The set of candidates S can be drawn from the entire image or restricted to a smaller search window around p (e.g., 35 \times 35) for efficiency.

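3. Gaussian Weighting: The Gaussian weighting inside d emphasizes pixels near the patch center, and the exponential weight ensures that candidates whose neighborhoods are close in appearance and intensity contribute more strongly.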
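The efficiency argument for a restricted search area can be made concrete with a rough count of the squared differences a naive implementation evaluates. With a 7 × 7 patch and a 35 × 35 search window, each pixel compares 1,225 candidates over 49 positions, which is 60,025 differences. This back-of-the-envelope sketch ignores image borders. The 512 × 512 image size and the function name are hypothetical.

```python
def nlm_cost(width, height, patch_size, search_size=None):
    """Rough count of squared differences evaluated by a naive NLM filter.

    Every pixel compares its patch with every candidate in the search area;
    search_size=None means the whole image is searched. Borders are ignored.
    """
    pixels = width * height
    candidates = pixels if search_size is None else search_size ** 2
    return pixels * candidates * patch_size ** 2

print(f"{nlm_cost(512, 512, 7, 35):,}")  # 15,735,193,600 with a 35 x 35 search window
print(f"{nlm_cost(512, 512, 7):,}")      # 3,367,254,360,064 when searching the whole image
```

Searching the whole image makes the cost grow with the square of the number of pixels, which is why a local search window is the usual compromise.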

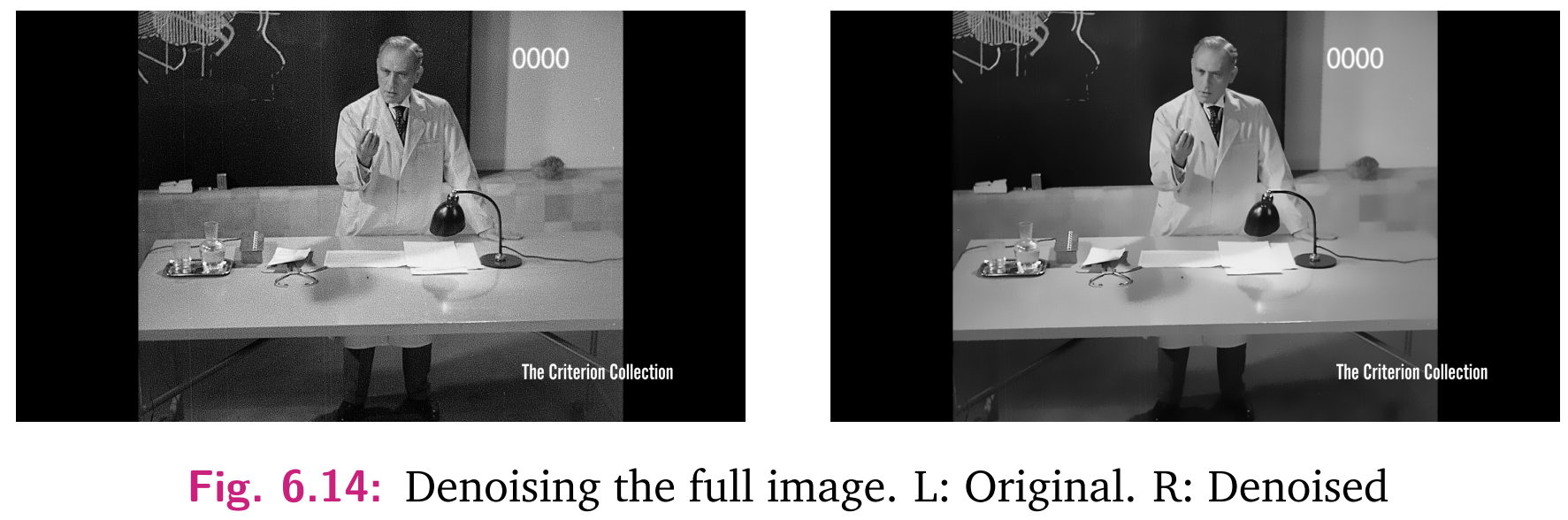

How is non-local means denoising used in film restoration?

Non-local means denoising is useful for removing dust and scratches from old films. For example, to fix a dust pixel:

1. Identify candidates S by comparing patches using d(N_p, N_q).

2. Combine the central values of similar patches to replace the dust pixel.

This method provides a crisp result, as shown in Fig. 6.14, but is computationally intensive compared to simpler techniques like median filtering.
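The two steps above can be sketched for a single dust pixel whose position is already known. This sketch assumes a grayscale list-of-lists image and a known set of dust positions. Unlike the formula above, it uses the plain mean of squared differences (no Gaussian weighting) and skips dust pixels when comparing neighborhoods; both are simplifying assumptions. A 3 × 3 median filter is included for comparison. The image and function names are hypothetical.

```python
import math

def repair_dust(img, dust, p, radius=1, search_radius=3, h=10.0):
    """Non-local means estimate for a known dust pixel p = (row, col).

    Simplifying assumptions of this sketch: dust is a set of known corrupted
    positions; corrupted pixels are skipped when comparing neighborhoods and are
    never used as candidates; the patch distance is the plain mean of squared
    differences (no Gaussian weighting); p lies at least `radius` from the border.
    """
    rows, cols = len(img), len(img[0])
    py, px = p
    weighted_sum = W = 0.0
    for qy in range(max(radius, py - search_radius), min(rows - radius, py + search_radius + 1)):
        for qx in range(max(radius, px - search_radius), min(cols - radius, px + search_radius + 1)):
            if (qy, qx) in dust:
                continue
            sq, n = 0.0, 0
            for dy in range(-radius, radius + 1):
                for dx in range(-radius, radius + 1):
                    a, b = (py + dy, px + dx), (qy + dy, qx + dx)
                    if a in dust or b in dust:
                        continue  # ignore corrupted values in either patch
                    diff = img[a[0]][a[1]] - img[b[0]][b[1]]
                    sq += diff * diff
                    n += 1
            if n:
                w = math.exp(-(sq / n) / h ** 2)
                weighted_sum += w * img[qy][qx]
                W += w
    return weighted_sum / W

def median3x3(img, p):
    """Median of the 3 x 3 window around p, for comparison."""
    py, px = p
    return sorted(img[py + dy][px + dx] for dy in (-1, 0, 1) for dx in (-1, 0, 1))[4]

# Vertical stripes (50 on even columns, 150 on odd) with a dust speck at (4, 4).
img = [[50 if c % 2 == 0 else 150 for c in range(9)] for r in range(9)]
img[4][4] = 255
dust = {(4, 4)}
print(median3x3(img, (4, 4)))                    # 150: the stripe is broken
print(round(repair_dust(img, dust, (4, 4)), 3))  # 50.0: the stripe is restored
```

On this striped test image, the median is 150 because six of the nine window values come from the neighboring bright columns. The non-local estimate is 50, the value of the column the dust pixel belongs to.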

What is inpainting in image processing?

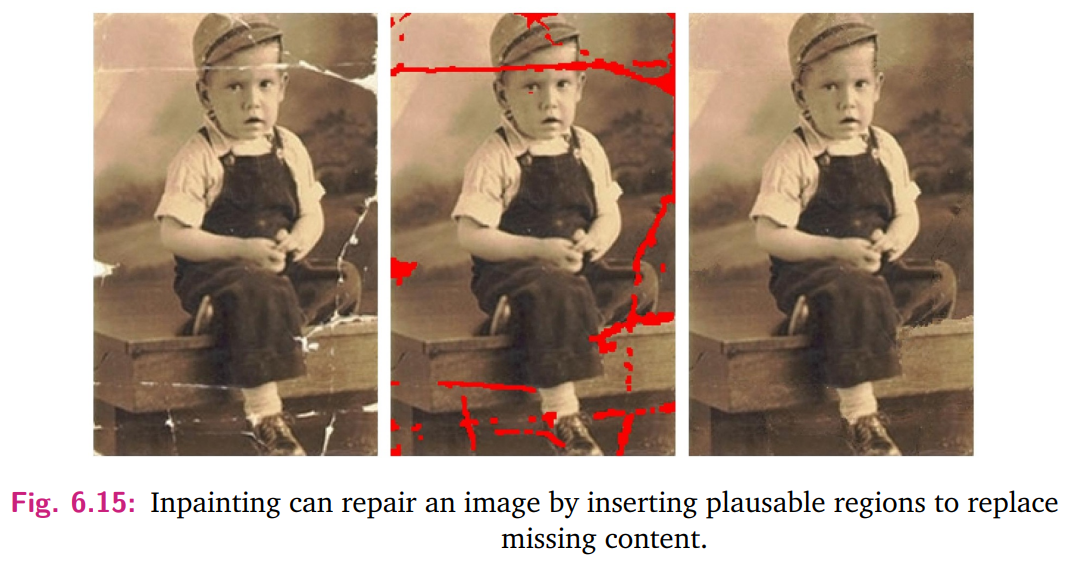

Inpainting is the process of filling in missing or damaged regions of an image using automated texture generation. It selects patches with similar neighborhoods from across the image and synthesizes pixels to plausibly replace missing content, as shown in Fig. 6.15.

How is inpainting applied iteratively?

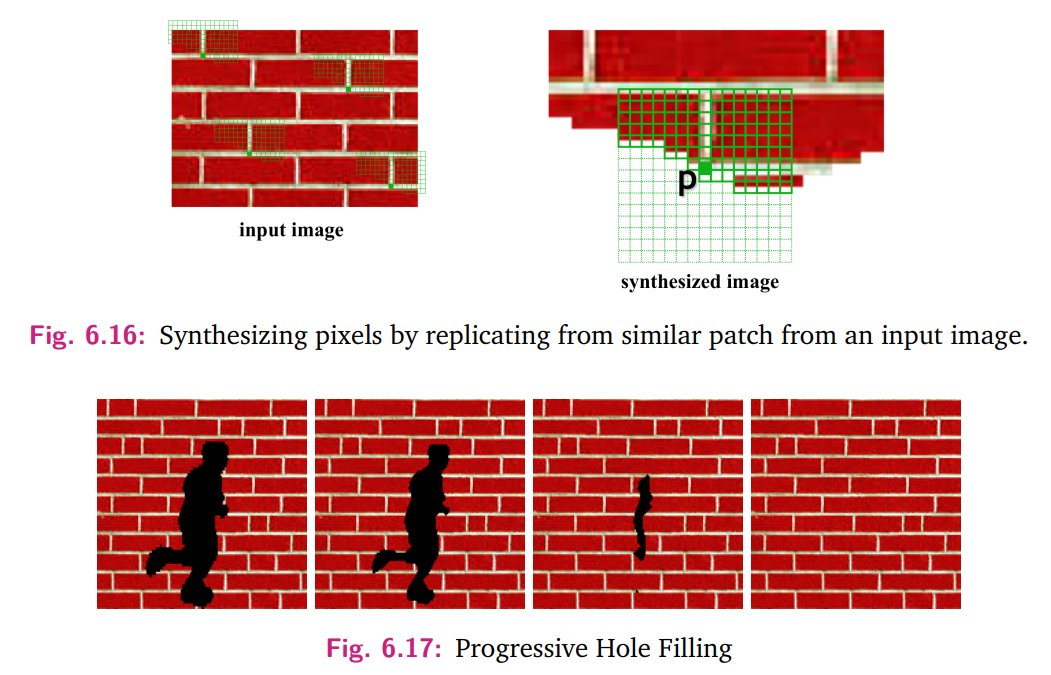

Inpainting can iteratively fill in damaged or blank regions by selecting matching patches and synthesizing pixels. For example, Fig. 6.17 demonstrates progressive hole filling to remove a foreground object. While effective, it may struggle to recreate highly regular textures like brick patterns.
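The iterative filling described above can be sketched pixel by pixel. At each step, the sketch picks the unknown pixel with the most known neighbors and compares those known neighbors with every fully known patch in the image. It then copies the center of the best match. This is a simplified, greedy sketch that assumes a grayscale list-of-lists image and a set of hole positions. It always takes the single best match rather than sampling among close matches, and the names are hypothetical.

```python
def inpaint(img, hole, radius=1):
    """Fill the pixels listed in `hole` by copying from best-matching known patches.

    img  -- grayscale image as a list of lists (not modified)
    hole -- set of (row, col) positions whose values are missing
    """
    img = [row[:] for row in img]
    rows, cols = len(img), len(img[0])
    unknown = set(hole)
    offsets = [(dy, dx) for dy in range(-radius, radius + 1)
               for dx in range(-radius, radius + 1)]

    def known(y, x):
        return 0 <= y < rows and 0 <= x < cols and (y, x) not in unknown

    # Candidate source patches: fully inside the image and containing no hole pixels.
    sources = [(y, x) for y in range(radius, rows - radius)
               for x in range(radius, cols - radius)
               if all((y + dy, x + dx) not in hole for dy, dx in offsets)]
    if not sources:
        raise ValueError("no fully known patch to copy from")

    while unknown:
        # Fill from the boundary inwards: pick the pixel with the most known neighbors.
        py, px = max(sorted(unknown),
                     key=lambda p: sum(known(p[0] + dy, p[1] + dx) for dy, dx in offsets))
        best, best_d = None, float("inf")
        for sy, sx in sources:
            # Sum of squared differences over the neighbors of p that are already known.
            d = sum((img[py + dy][px + dx] - img[sy + dy][sx + dx]) ** 2
                    for dy, dx in offsets if known(py + dy, px + dx))
            if d < best_d:
                best, best_d = (sy, sx), d
        img[py][px] = img[best[0]][best[1]]
        unknown.discard((py, px))
    return img

truth = [[50 if c % 2 == 0 else 150 for c in range(8)] for r in range(8)]
hole = {(3, 3), (3, 4), (4, 3), (4, 4)}
damaged = [row[:] for row in truth]
for y, x in hole:
    damaged[y][x] = 0                      # missing values
print(inpaint(damaged, hole) == truth)     # True for this simple stripe pattern
```

Each filled pixel becomes context for the next one, which is what lets the hole close progressively from its boundary.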

What are practical applications of inpainting?

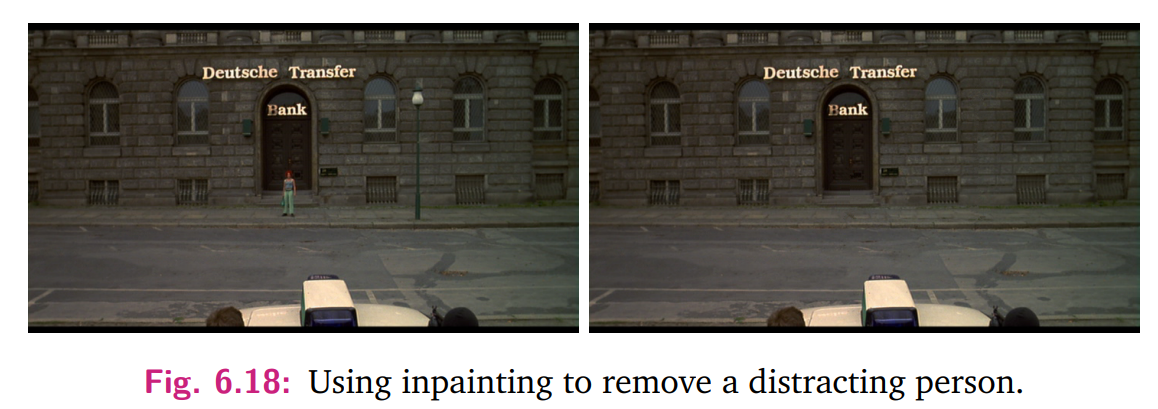

1. Object Removal: The user highlights unwanted objects in an image, and the tool replaces them with synthesized pixels (Fig. 6.18).

2. Film Restoration: Damaged or missing regions in old films can be reconstructed.

3. Creative Edits: Used in modern photo editing to enhance or alter images.

What is novel image synthesis in image processing?

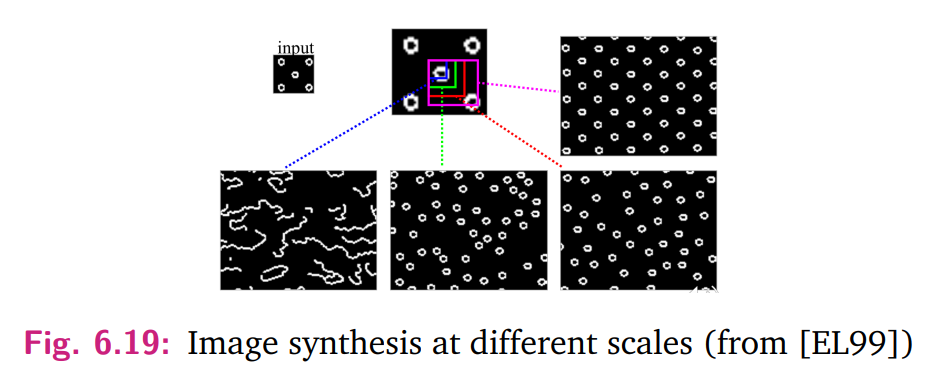

Novel image synthesis extends inpainting to create entirely new images whose statistical properties are similar to those of the original. Starting from an input seed, new pixels are iteratively synthesized from matching patches to produce a new image, as shown in Fig. 6.19. Patch size affects the regularity of the synthesized pattern.
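The effect of patch size on regularity can be illustrated with a one-dimensional analogue of patch-based synthesis. This is a deliberately simplified sketch, not the method behind Fig. 6.19. It grows a sequence from a seed by matching the last k synthesized values against every window of a sample, then copies the value that follows the best match. Ties go to the earliest match, whereas practical methods usually sample randomly among close matches. The function name and data are hypothetical.

```python
def synthesize(sample, seed, length, k):
    """Grow `seed` to `length` values using windows of k values from `sample`.

    Simplified 1-D sketch: the last k synthesized values are compared with every
    window of `sample` (sum of squared differences), and the value following the
    best window is appended. Ties go to the earliest window.
    """
    if len(seed) < k or len(sample) <= k:
        raise ValueError("seed needs at least k values and sample more than k")
    out = list(seed)
    while len(out) < length:
        context = out[-k:]
        best_i = min(range(len(sample) - k),
                     key=lambda i: sum((a - b) ** 2 for a, b in zip(sample[i:i + k], context)))
        out.append(sample[best_i + k])
    return out

sample = [0, 0, 1] * 3                          # a periodic "texture" with period 3
print(synthesize(sample, [0, 0, 1], 9, k=1))    # [0, 0, 1, 0, 0, 0, 0, 0, 0]
print(synthesize(sample, [0, 0, 1], 9, k=2))    # [0, 0, 1, 0, 0, 1, 0, 0, 1]
```

With a one-value window every 0 looks alike, so the period is lost. A two-value window captures enough context to reproduce it.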

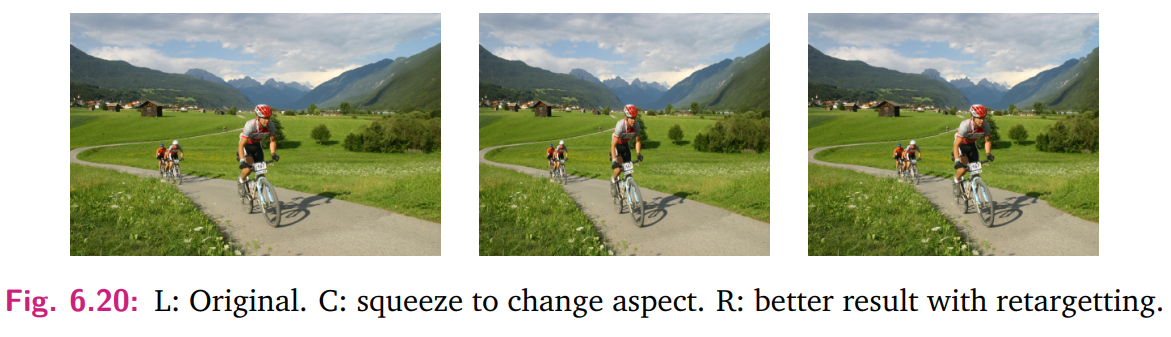

What is image retargeting, and how is it used?

Image retargeting changes an image's aspect ratio without distorting the proportions of its important content or losing its semantic meaning. For example, Fig. 6.20 demonstrates horizontal retargeting, which preserves the subject's aspect ratio while compressing less important areas at the sides, giving a more pleasing result.
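One well-known way to retarget horizontally is seam carving, which repeatedly removes the connected top-to-bottom path of pixels with the lowest total gradient energy. It is swapped in here for illustration and may not be the method used for Fig. 6.20. The sketch assumes a grayscale list-of-lists image and a simple absolute-difference energy, and all names are hypothetical.

```python
def energy(img):
    """Assumed energy: absolute differences to the four neighbors (borders clamped)."""
    rows, cols = len(img), len(img[0])

    def px(y, x):  # clamp coordinates to the image border
        return img[min(max(y, 0), rows - 1)][min(max(x, 0), cols - 1)]

    return [[abs(px(y, x + 1) - px(y, x)) + abs(px(y, x) - px(y, x - 1))
             + abs(px(y + 1, x) - px(y, x)) + abs(px(y, x) - px(y - 1, x))
             for x in range(cols)] for y in range(rows)]

def min_vertical_seam(e):
    """Dynamic programming: cheapest 8-connected top-to-bottom path, one column per row."""
    rows, cols = len(e), len(e[0])
    cost = [row[:] for row in e]
    for y in range(1, rows):
        for x in range(cols):
            cost[y][x] += min(cost[y - 1][max(x - 1, 0):min(x + 2, cols)])
    seam = [min(range(cols), key=lambda x: cost[rows - 1][x])]
    for y in range(rows - 2, -1, -1):
        x = seam[-1]
        seam.append(min(range(max(x - 1, 0), min(x + 2, cols)), key=lambda c: cost[y][c]))
    return seam[::-1]

def retarget_width(img, new_cols):
    """Remove lowest-energy vertical seams until the image is new_cols wide."""
    while len(img[0]) > new_cols:
        seam = min_vertical_seam(energy(img))
        img = [row[:x] + row[x + 1:] for row, x in zip(img, seam)]
    return img

img = [[10, 10, 200, 10, 10, 10] for _ in range(4)]  # bright vertical "subject" at column 2
print(retarget_width(img, 3))  # [[10, 200, 10], [10, 200, 10], [10, 200, 10], [10, 200, 10]]
```

The seams pass through the flat background, so the subject keeps its full width while the sides are compressed. A uniform horizontal squeeze would instead resample every column, including the subject.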