Lec 6: Clinical vs Statistical Significance, Directional Tests, Reliability/Validity


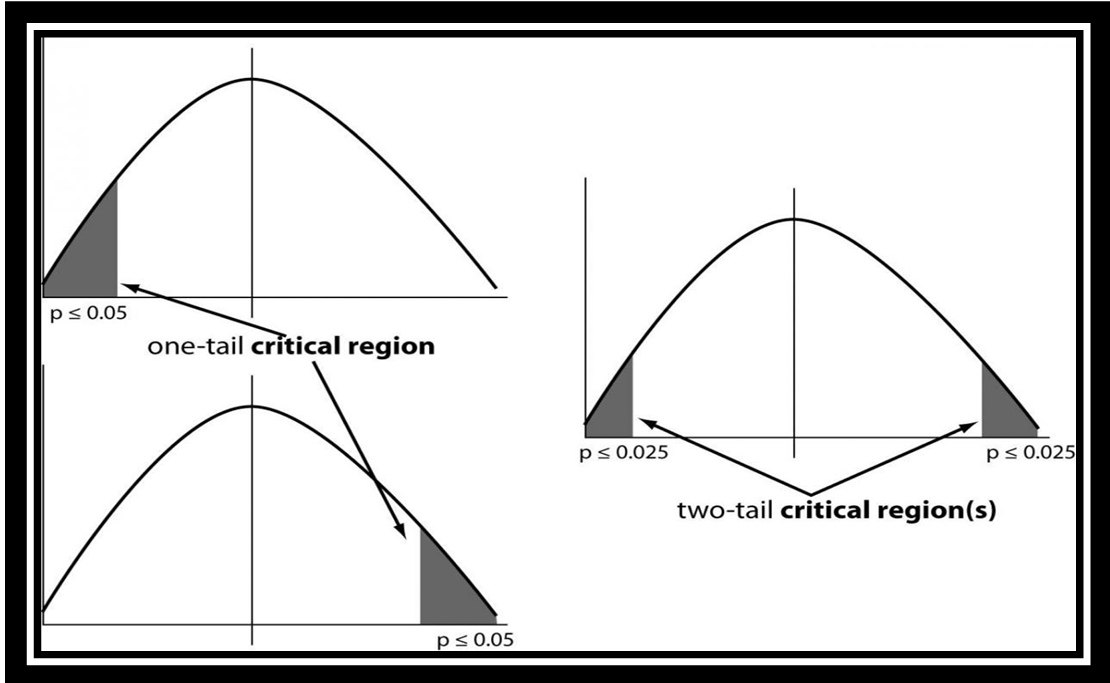

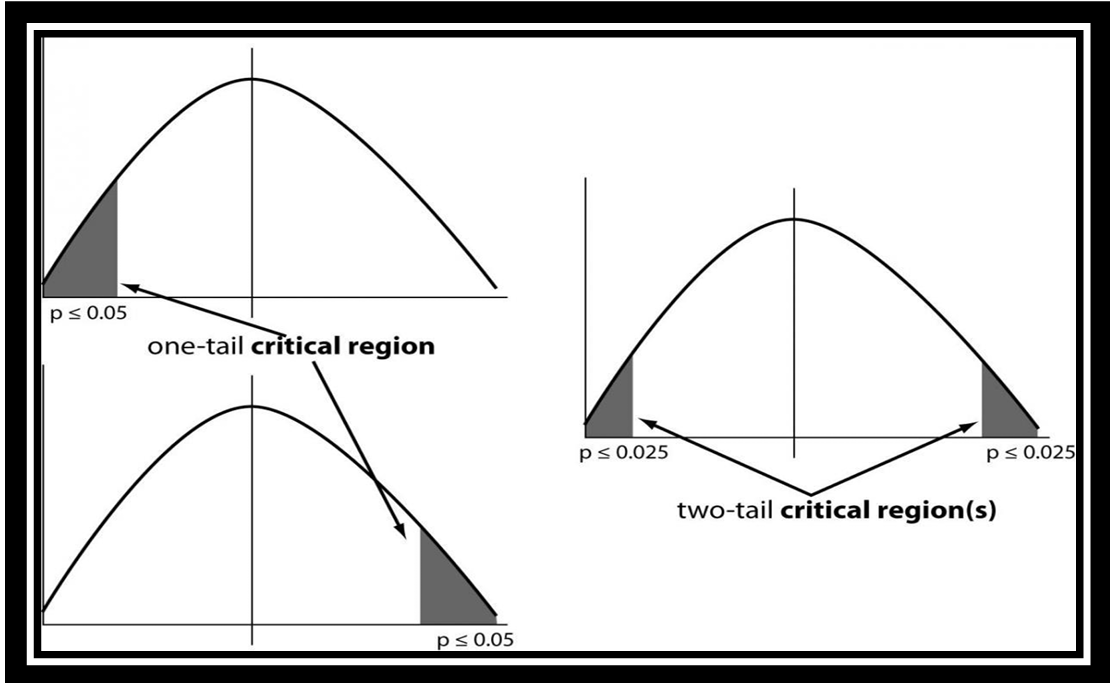

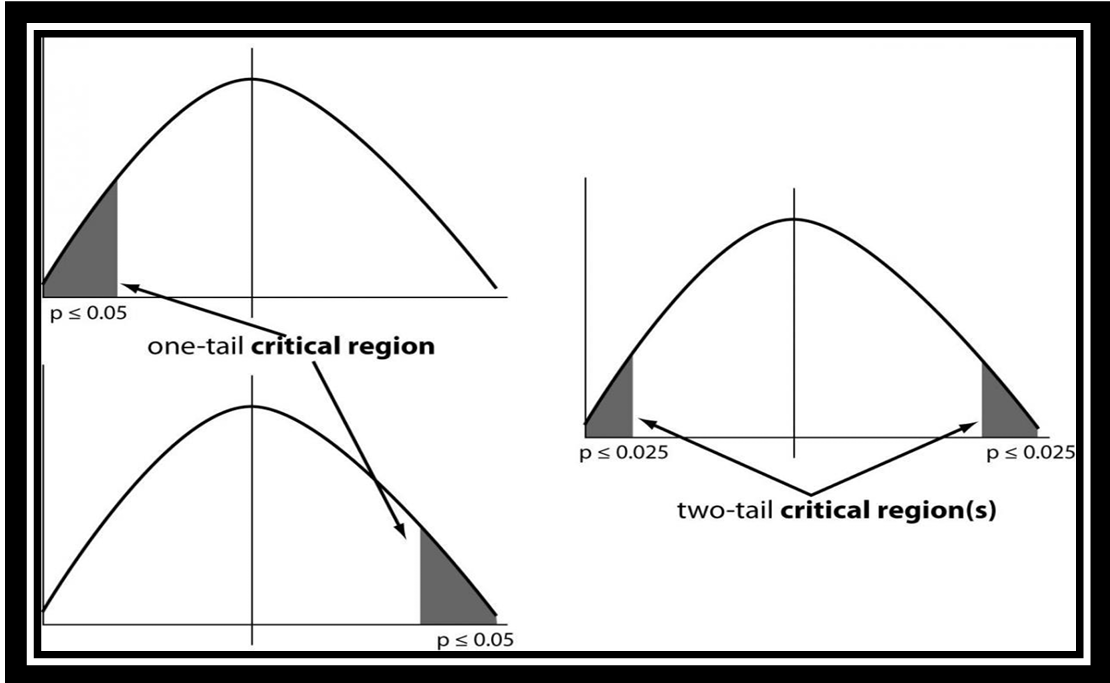

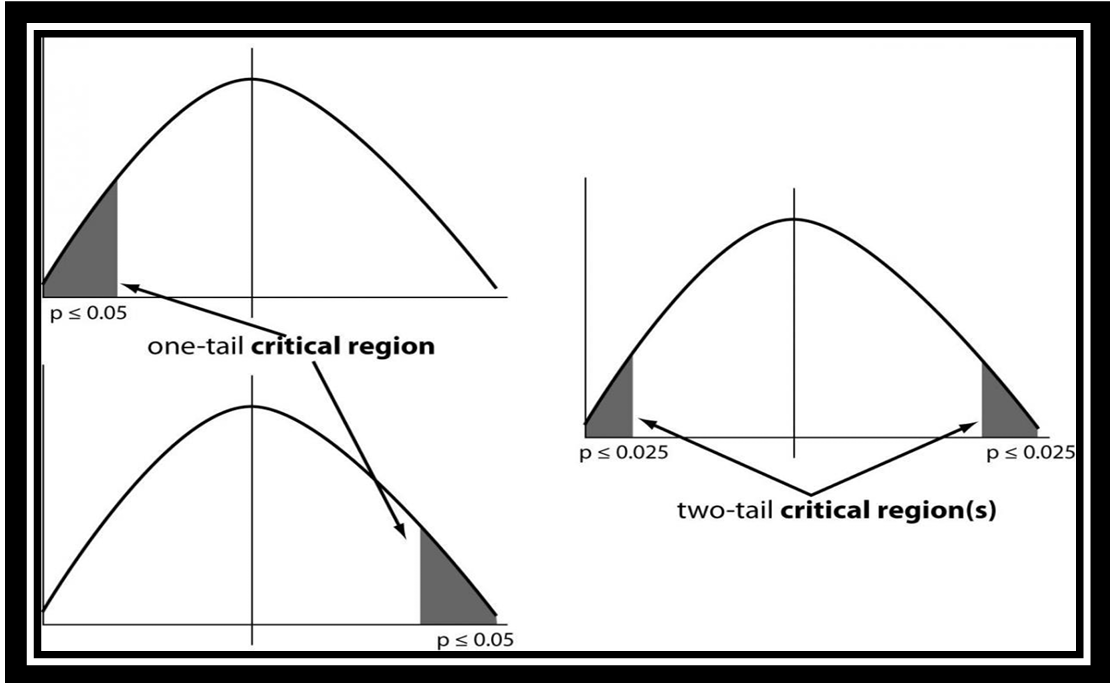

one tailed test meaning

the null hypothesis is rejected only when the test value falls in the critical region in one tail of the distribution

can be left- or right-tailed, depending on the direction of the inequality in the alternative hypothesis

two tailed test meaning

null hypothesis should be rejected when the test value is in either of the two critical regions
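A minimal Python sketch of the one- vs two-tailed decision, assuming a standard normal (z) test statistic and α = 0.05 (both illustrative choices). The same observed z = 1.8 is rejected by a right-tailed test but not by a two-tailed one, because the two-tailed test splits α across both critical regions.

```python
from statistics import NormalDist

STD_NORMAL = NormalDist()  # mean 0, SD 1

def critical_values(alpha: float, tails: str) -> tuple:
    """Return the z cut-off(s) that bound the rejection region(s)."""
    if tails == "two":
        z = STD_NORMAL.inv_cdf(1 - alpha / 2)  # alpha split across both tails
        return (-z, z)
    if tails == "right":
        return (STD_NORMAL.inv_cdf(1 - alpha),)
    if tails == "left":
        return (STD_NORMAL.inv_cdf(alpha),)
    raise ValueError("tails must be 'left', 'right' or 'two'")

def reject_null(z: float, alpha: float, tails: str) -> bool:
    """True if z lies in the critical region for the chosen test direction."""
    cuts = critical_values(alpha, tails)
    if tails == "two":
        return z <= cuts[0] or z >= cuts[1]
    if tails == "right":
        return z >= cuts[0]
    return z <= cuts[0]

z_obs = 1.8
print(reject_null(z_obs, 0.05, "right"))  # True: 1.8 exceeds about 1.645
print(reject_null(z_obs, 0.05, "two"))    # False: 1.8 is below about 1.96
```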

statistical significance vs clinical significance

a statistically significant result (e.g. p < 0.05) may be too small to be clinically useful; clinical significance means the effect is large enough to matter in practice
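A hedged illustration using a hypothetical blood-pressure trial: the 0.5 mmHg effect, SD of 10, 10,000 patients per group and the 5 mmHg minimal clinically important difference (MCID) are all made-up numbers, and the z-test assumes a known common SD. With a sample this large the tiny effect reaches p < 0.05 while falling far short of the MCID.

```python
from math import sqrt
from statistics import NormalDist

def two_sample_z_test(mean_diff, sd, n_per_group):
    """Two-tailed p-value for a difference in means, assuming a known common SD."""
    se = sd * sqrt(2 / n_per_group)  # standard error of the difference in means
    z = mean_diff / se
    p = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p

# Hypothetical trial: drug lowers systolic BP by 0.5 mmHg vs placebo
diff, sd, n = 0.5, 10.0, 10_000
MCID = 5.0  # hypothetical minimal clinically important difference (mmHg)

z, p = two_sample_z_test(diff, sd, n)
print(f"z = {z:.2f}, p = {p:.4f}")
print("statistically significant:", p < 0.05)
print("clinically significant:", abs(diff) >= MCID)
```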

reliability def

extent to which a test consistently measures whatever it purports to measure

aka when your measurement tool is consistent and repeatable

what does reliability depend on

variables are free from random errors

consistency of repeating the measure

what does high reliability indicate

the measurement system produces similar results under the same conditions

stability def (characteristics)

consistent & enduring

does not change over time

high correlation coefficient when administered repeatedly (different administrations should give similar results)

evaluate stability at the beginning & throughout the study

homogeneity def/characteristics

extent to which items on a multi-item instrument are consistent with one another

aka internal consistency reliability (different items/questions give consistent results)

useful when the instrument measures a single concept

assessed with Cronbach’s alpha (ranges from 0 = no reliability to 1 = complete reliability)
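Cronbach's alpha can be computed from item variances with the standard formula α = k/(k-1) · (1 - Σσ²ᵢ / σ²ₜ), where k is the number of items, σ²ᵢ the variance of item i and σ²ₜ the variance of respondents' total scores. A minimal sketch, assuming sample variances and a small, hypothetical 4-item Likert data set:

```python
from statistics import variance

def cronbach_alpha(responses):
    """responses: one row per respondent, one column per item."""
    k = len(responses[0])                      # number of items
    items = list(zip(*responses))              # transpose to per-item columns
    item_vars = sum(variance(col) for col in items)
    totals = [sum(row) for row in responses]   # each respondent's total score
    return (k / (k - 1)) * (1 - item_vars / variance(totals))

# Hypothetical 4-item scale answered by 5 respondents (1-5 Likert)
scores = [
    [4, 5, 4, 5],
    [2, 2, 3, 2],
    [3, 3, 3, 4],
    [5, 4, 5, 5],
    [1, 2, 1, 2],
]
print(round(cronbach_alpha(scores), 2))
```

Items that move together make the total-score variance large relative to the summed item variances, which pushes α toward 1.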

Equivalence def

how well multiple forms or users of the instrument produce the same results

sources of variation: different forms of the tool, or user error/understanding

when multiple individuals are collecting data, ensure inter-rater reliability

what are the diff types of reliability

equivalency reliability

stability reliability

internal consistency

inter-rater reliability

intra-rater reliability

Equivalency Reliability def

extent to which two items measure the same concept at an identical level

Stability Reliability def

consistency of a variable measured repeatedly over time

Internal Consistency def

extent to which the items of a test assess the same characteristic

Inter-rater Reliability def

extent to which two or more individuals (raters) agree

Intra-rater Reliability def

same assessment completed by same rater on two or more occasions

what do % agreement and the kappa statistic measure

inter-rater reliability
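A sketch of the two inter-rater statistics, assuming two raters and Cohen's kappa (the card does not say which kappa; Fleiss' kappa is used for more than two raters). Kappa corrects % agreement for the agreement expected by chance: κ = (pₒ - pₑ) / (1 - pₑ). The yes/no ratings below are hypothetical.

```python
from collections import Counter

def percent_agreement(a, b):
    """Proportion of cases where both raters gave the same rating."""
    return sum(x == y for x, y in zip(a, b)) / len(a)

def cohens_kappa(a, b):
    n = len(a)
    p_o = percent_agreement(a, b)  # observed agreement
    count_a, count_b = Counter(a), Counter(b)
    categories = set(a) | set(b)
    # chance agreement from each rater's marginal proportions
    p_e = sum((count_a[c] / n) * (count_b[c] / n) for c in categories)
    return (p_o - p_e) / (1 - p_e)  # undefined if p_e == 1

rater_a = ["yes", "yes", "no", "yes", "no", "no", "yes", "yes", "no", "yes"]
rater_b = ["yes", "no", "no", "yes", "no", "yes", "yes", "yes", "no", "yes"]
print(percent_agreement(rater_a, rater_b))
print(round(cohens_kappa(rater_a, rater_b), 2))
```

Here the raters agree on 8 of 10 cases, but kappa is noticeably lower because both raters say "yes" often, so much of that agreement would be expected by chance.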

list statistical measures of reliability

% agreement or kappa statistic

Cronbach’s alpha = inter-item correlation (internal consistency), ranges from 0 to 1, >0.8 good

factor analysis=relationships between items and item reduction

test-retest = correlation between test 1 and test 2
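A minimal test-retest sketch, assuming Pearson's r as the correlation (the card only says "correlation"; an intraclass correlation is a common alternative) and hypothetical scores from the same five people tested twice with the same tool.

```python
from math import sqrt

def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length score lists."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)

time1 = [10, 12, 15, 18, 20]  # hypothetical scores, first administration
time2 = [11, 13, 14, 19, 21]  # same five people, second administration
r = pearson_r(time1, time2)
print(f"test-retest r = {r:.2f}")
```

A value close to 1 indicates the stable, repeatable measurement described under stability reliability.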

what does validity/accuracy depend on

the form of test

the purpose of test

the population for whom it is intended

what is validity checking for

the appropriateness of the data rather than whether measurements are repeatable

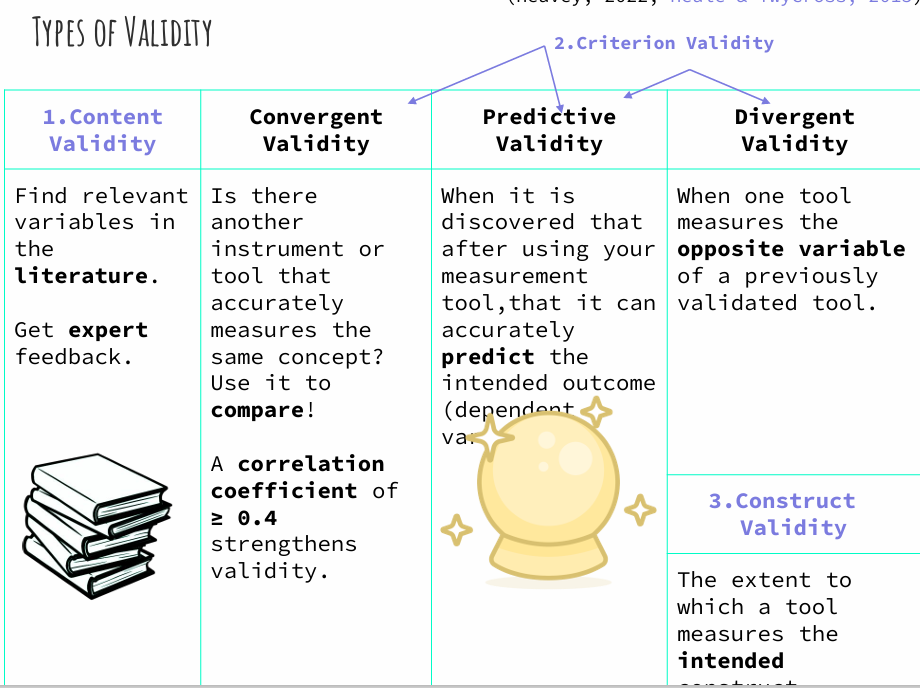

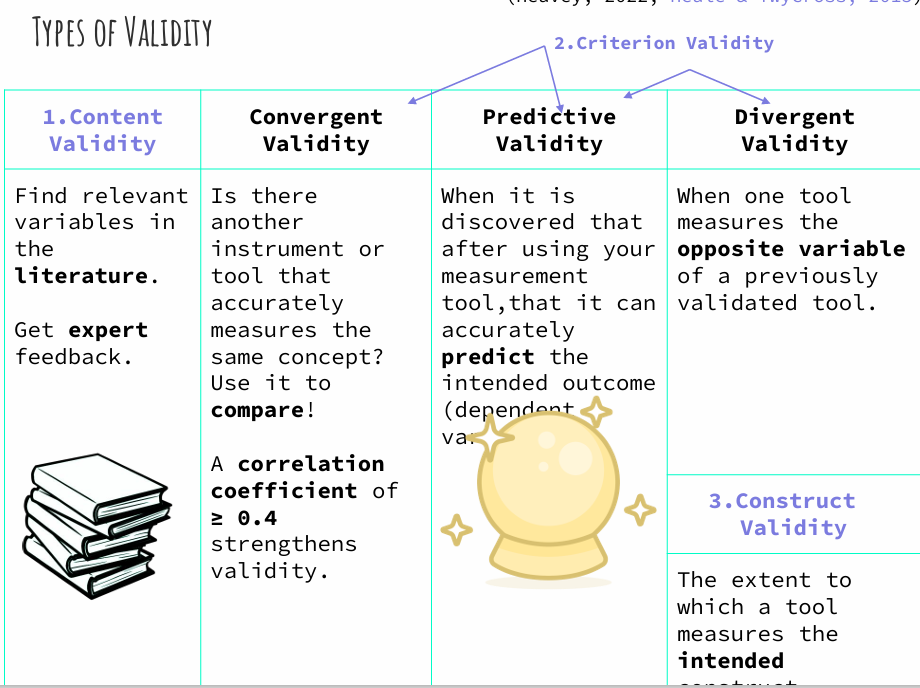

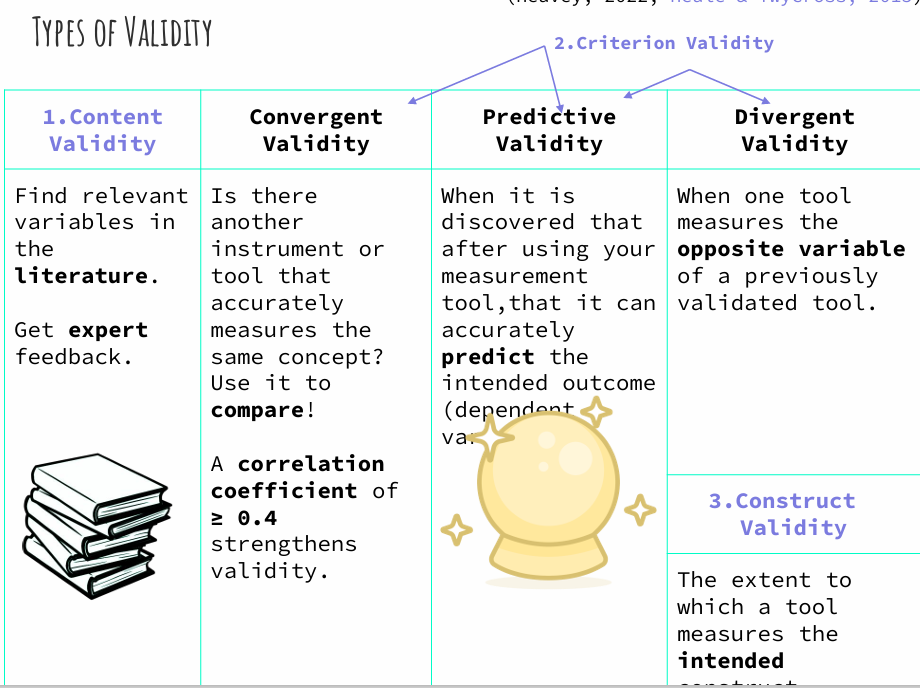

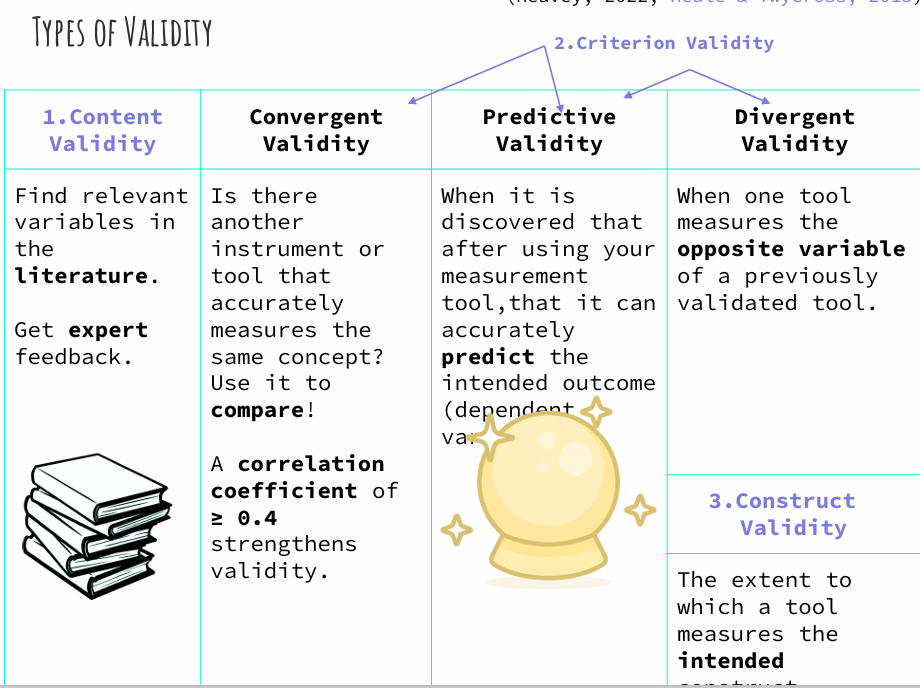

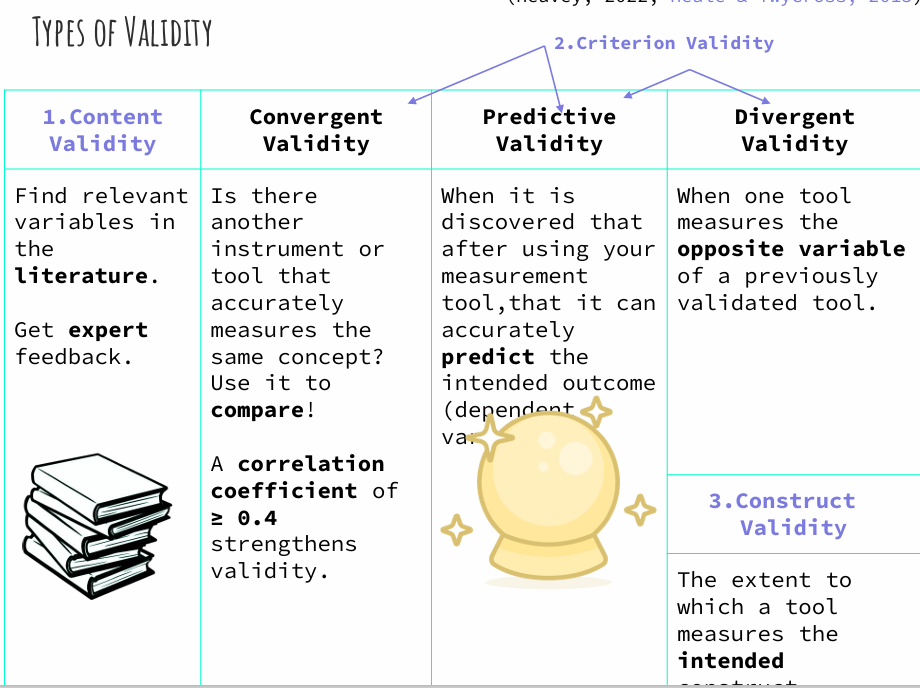

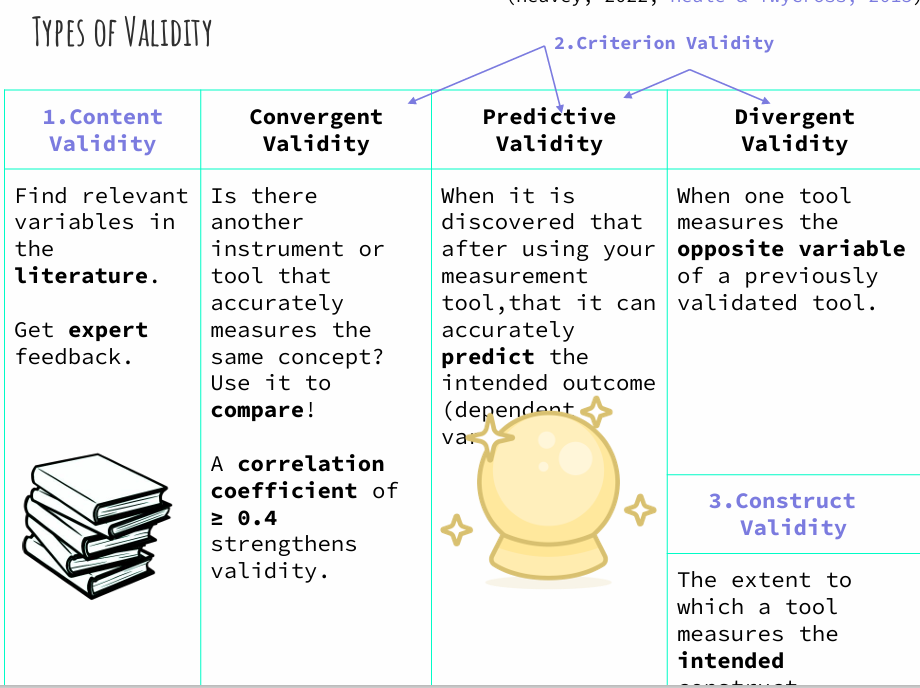

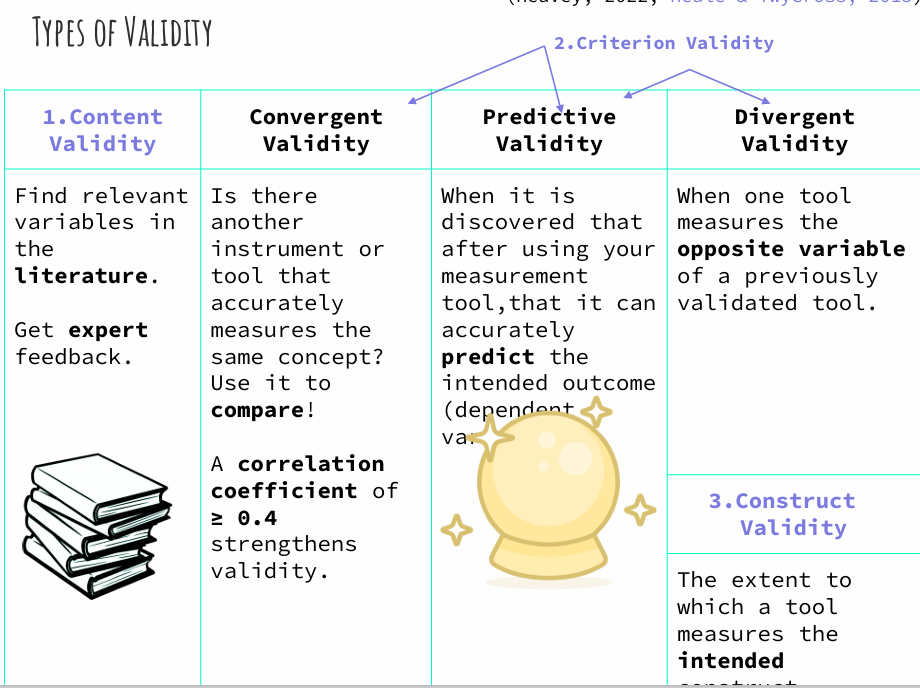

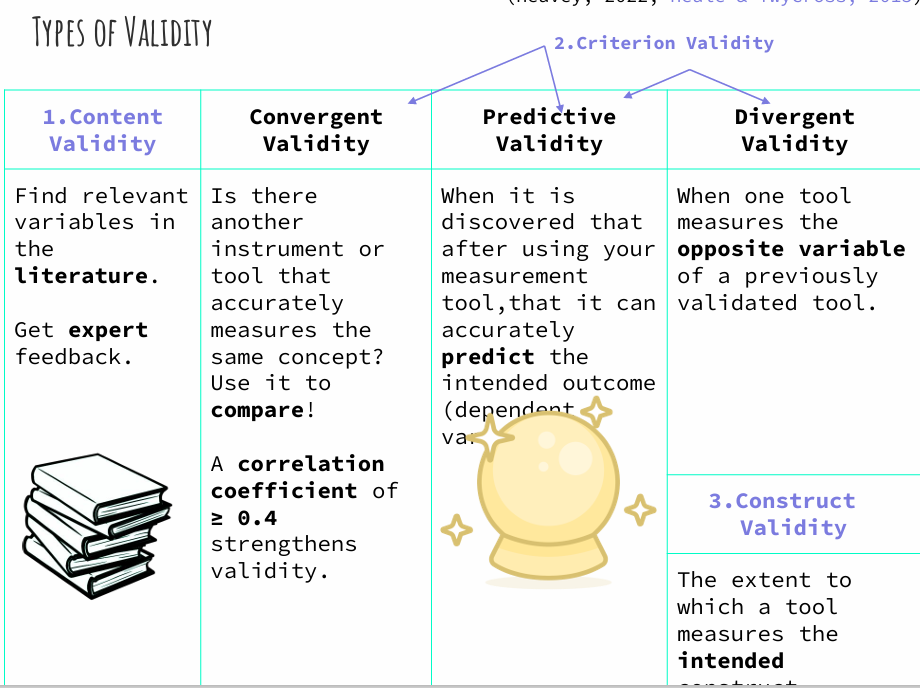

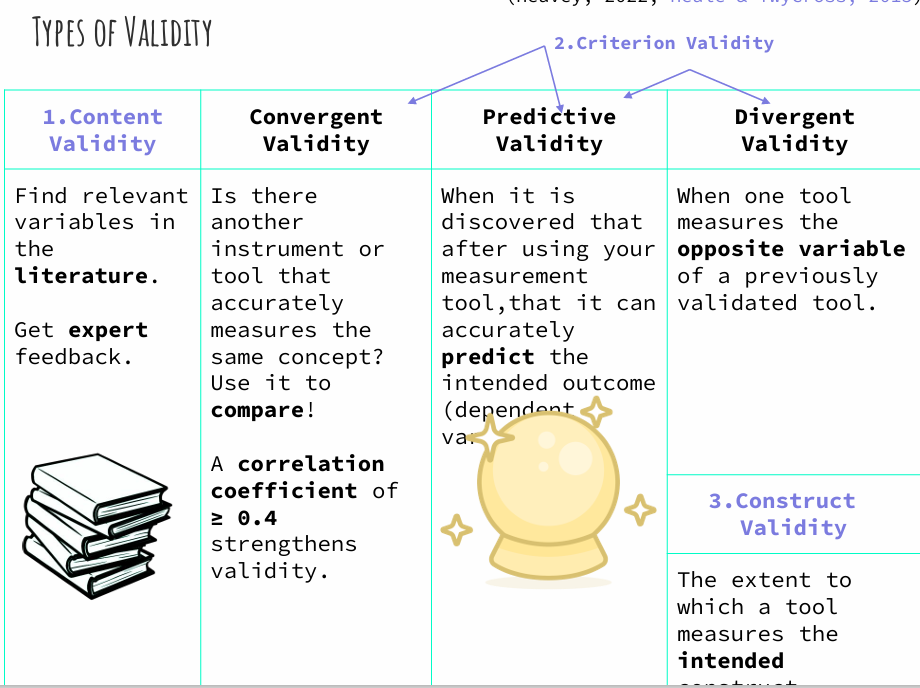

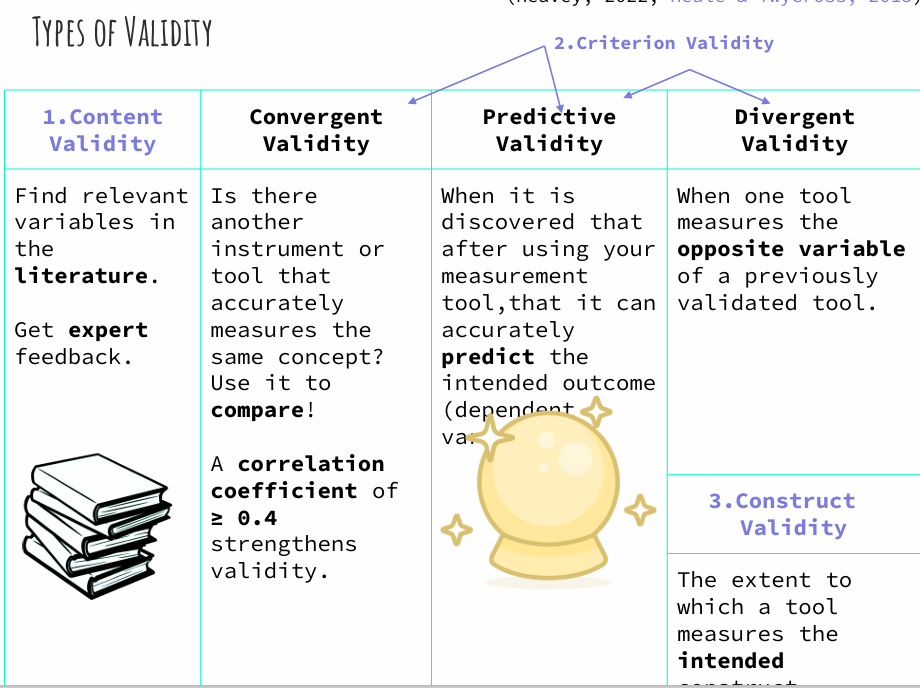

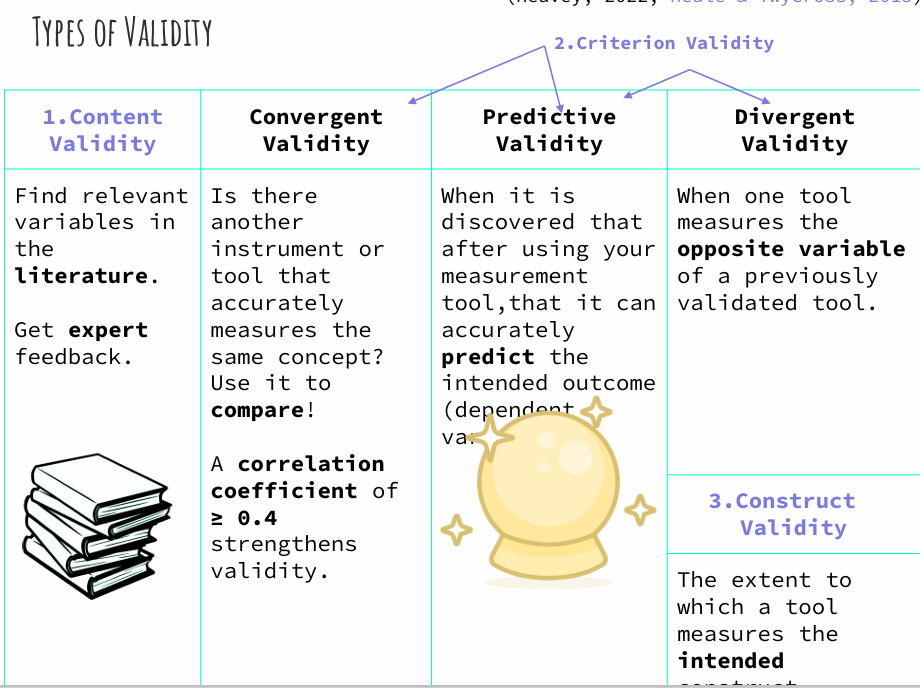

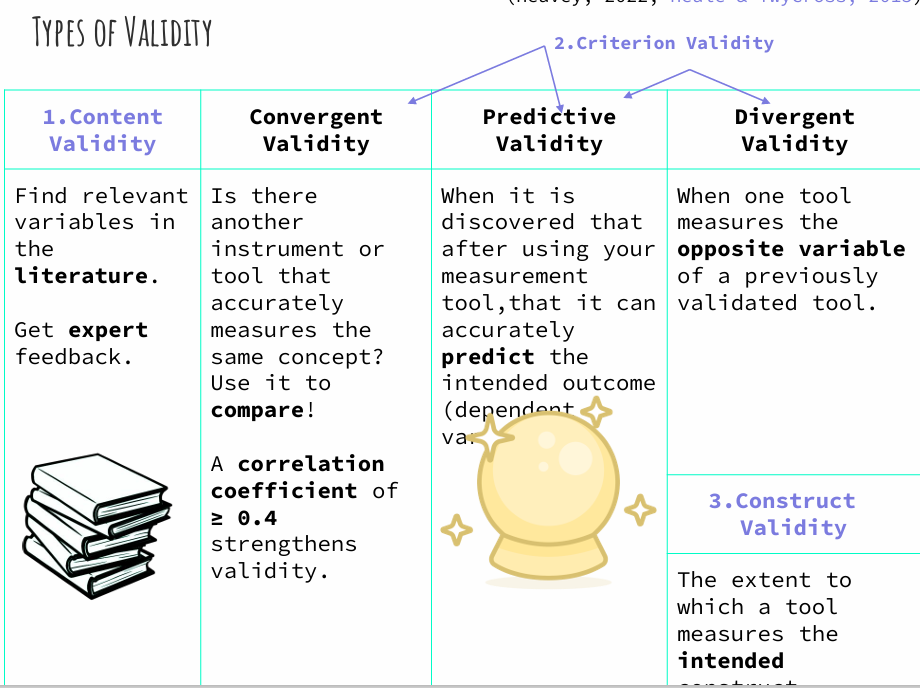

list the different types of validity

face validity/content validity

criterion validity

convergent validity

discriminant validity

face validity/content validity

depends on the judgement of the observer (interviews/focus groups)

criterion validity

examine correlations with variables that you expect to be linked (compare against an established standard/criterion)

convergent validity

examine correlations with existing tools, measures or instruments

comparing your results with those of a previously validated survey that measures the same thing

Discriminant validity def

doesn’t measure what it shouldn’t (shows low correlation with measures of unrelated concepts)

validity def

extent to which a concept or variable is ACCURATELY measured

a correlation coefficient of ≥ 0.4 strengthens validity
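A sketch applying the ≥ 0.4 rule of thumb above to convergent validity, with hypothetical scores: the new tool should correlate at least 0.4 with a previously validated tool that measures the same concept. Treating r below 0.4 with an unrelated measure as support for discriminant validity is an illustrative assumption, not a rule from the lecture. `pearson_r` is defined here so the block stands alone.

```python
from math import sqrt

def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length score lists."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)

THRESHOLD = 0.4  # rule of thumb from the card

new_tool = [3, 5, 6, 8, 9]     # hypothetical scores on the new instrument
validated = [4, 5, 7, 8, 10]   # same people, previously validated tool (same concept)
unrelated = [5, 2, 7, 4, 6]    # same people, measure of an unrelated concept

r_conv = pearson_r(new_tool, validated)
r_disc = pearson_r(new_tool, unrelated)
print(f"convergent r = {r_conv:.2f}, meets threshold: {r_conv >= THRESHOLD}")
print(f"discriminant r = {r_disc:.2f}, below threshold: {abs(r_disc) < THRESHOLD}")
```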

predictive validity

when, after using your measurement tool, you find that it accurately predicts the intended outcome (dependent variable)

divergent validity

when one tool measures the opposite variable of a previously validated tool

a measurement must be reliable before it can be…

For a test to be valid it has to be reliable!

valid

a test can be reliable without being valid/accurate

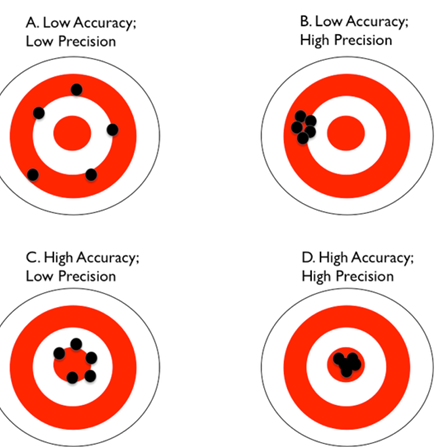

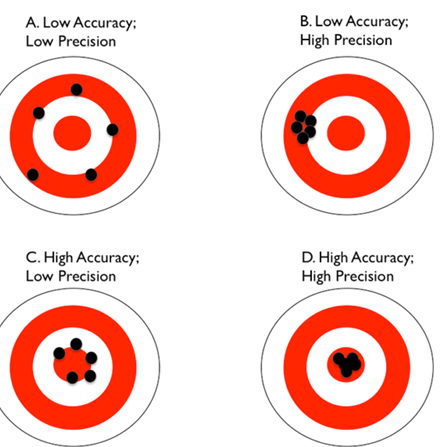

SELECTION OF MEASUREMENT TOOLS pic

IDEALLY = WANT HIGH ACCURACY AND HIGH PRECISION

Validity (Accuracy) = the degree to which you are measuring the true value. How close are you to the true value?

Reliability (Precision) = how repeatable are the measurements. How close are the repeated measures to each other?

In order to determine if measurements are reliable and valid we need to examine

error

types of error

random=variations day to day, moment to moment

systematic=errors associated with incorrect measurement tool, design of study, bias
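A sketch of how the two error types map onto accuracy and precision, assuming a known true value of 100 and hypothetical repeated readings from two scales: bias (mean minus true value) reflects systematic error, and the SD of repeated readings reflects random error. Scale A is precise but inaccurate; scale B is accurate on average but less precise.

```python
from statistics import mean, stdev

TRUE_VALUE = 100.0  # hypothetical known reference value (e.g. a calibration weight)

def summarize(readings):
    bias = mean(readings) - TRUE_VALUE  # systematic error -> accuracy (validity)
    spread = stdev(readings)            # random error -> precision (reliability)
    return bias, spread

scale_a = [104.9, 105.1, 105.0, 104.8, 105.2]  # tight cluster, offset from truth
scale_b = [97.0, 103.0, 99.0, 101.0, 100.0]    # centred on truth, more scattered

bias_a, sd_a = summarize(scale_a)
bias_b, sd_b = summarize(scale_b)
print(f"scale A: bias = {bias_a:+.2f}, SD = {sd_a:.2f}")
print(f"scale B: bias = {bias_b:+.2f}, SD = {sd_b:.2f}")
```

Recalibrating scale A would remove its bias, but no amount of averaging fixes a systematic error, whereas random error shrinks when repeated readings are averaged.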

acceptability

extent to which the tool is acceptable to the target group

language and format

indicators: response time, response rate, missing data
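Two of these indicators can be computed directly from returned questionnaires. A sketch, assuming missing answers are stored as None and that the counts are hypothetical; response time would need per-respondent timestamps and is not modelled here.

```python
def acceptability_indicators(n_sent, responses):
    """responses: returned questionnaires, each a list of item answers (None = missing)."""
    response_rate = len(responses) / n_sent
    total_items = sum(len(r) for r in responses)
    missing = sum(ans is None for r in responses for ans in r)
    return response_rate, missing / total_items

# Hypothetical: 5 questionnaires sent, 4 returned, 4 items each
returned = [
    [3, 4, 5, 2],
    [4, None, 4, 3],
    [5, 5, None, None],
    [2, 3, 3, 4],
]
rate, missing_frac = acceptability_indicators(n_sent=5, responses=returned)
print(f"response rate = {rate:.0%}, missing items = {missing_frac:.1%}")
```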

Feasibility

how easy the tool is to use

practicality

time

cost

resources

construct validity

the tool detects a difference known to exist in a population, confirming that it measures the intended concept

what 3 main factors make up reliability

stability

homogeneity

equivalence