Merged


332 Terms

what is the software crisis

A term from early computing that still applies today: the difficulty of producing useful, efficient, well-designed programs in the required time, caused by the rapid increase in computer power and in the complexity of the problems that could be tackled

what caused the software crisis

As software complexity increased, many software problems arose because existing methods were inadequate.

Also caused by the fact that computing power has outpaced programmers' ability to use those capabilities effectively.

processes and methodologies were created to try to improve it, but large projects will always be vulnerable

what are the crisis signs of the software crisis

over budget

over time

inefficient software

low quality software

not meeting requirements

hard to maintain

never delivered

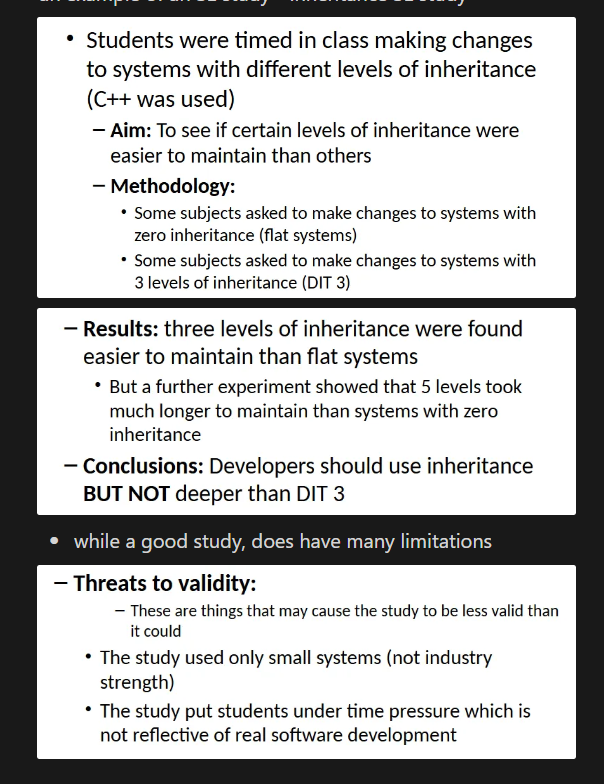

what is a software engineering study

consists of an aim - what the study tries to answer or do

methodology - how to conduct design and implementation

results

conclusions - making conclusions needs to be based on evidence and critical thinking based on the threats to validity

threats to validity - anything which could affect the credibility of the product developed

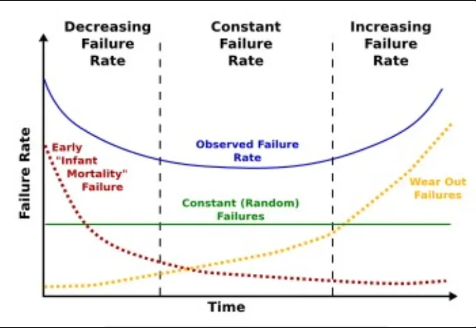

which of these bathtub curves is the best

people can have different effects on the bathtub curve

the red curve is best

what data is needed to construct a bathtub curve

requires accurate failure data (not always easy to gather):

need to measure time according to a subjective timeline decided by the team e.g.

release

hourly, daily, monthly, yearly

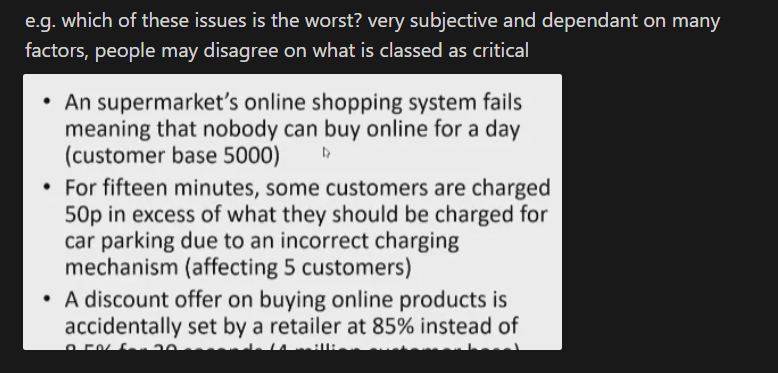

why is it hard to gather accurate failure data

hard to know which failures to include

minor, major, critical failures?

the severity of each is subjective

if combining all 3 failures in 1 bathtub, makes it difficult to compare bathtubs on a common basis as minor failures become equivalent to showstoppers

if trying to track the types individually, individual bathtubs need to be created

how does brooks law impact the bathtub curve

bathtub can be drawn for a developing system as well and not just a released system, where the end coincides with more people being added and higher failure rate

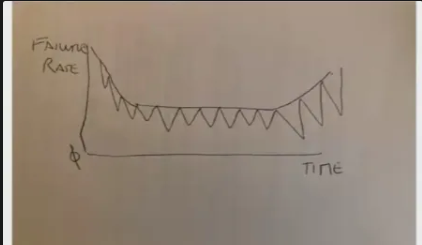

how do refactoring practices impact the bathtub curve

when you refactor, there is a temporary dip in the failure rate, but once the effect of the refactoring wears off the rate goes up again, giving a spiky, teeth-like bathtub curve - shows that the benefit of refactoring is temporary, and that later in the system's life more effort is needed for the same results as earlier on

bathtubs not always a smooth line, but can have bumps and zigzags to represent the tech debt and refactoring changes

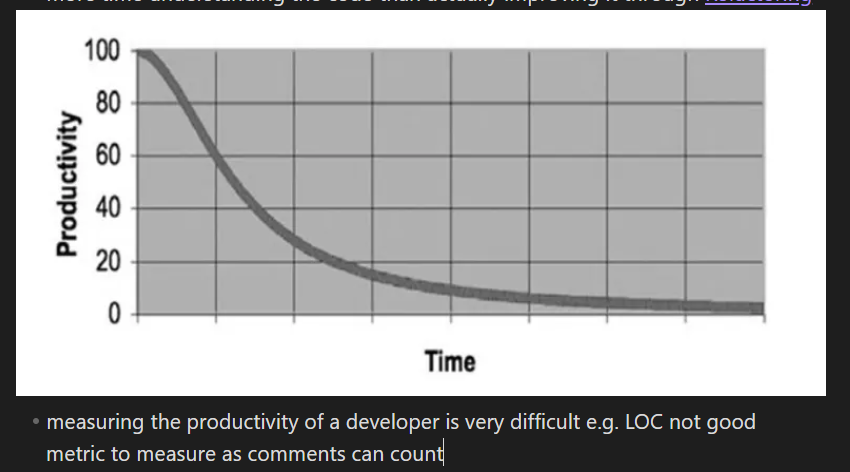

what is a productivity drain

any wasted activity or situation that negatively affects a programmer's ability to code on time and on budget

why are productivity drains bad and what does it show about human impact

bad because productivity drains impact how well programmers can do tasks like refactoring

shows how human factors have a significant impact on software engineering, a lot of the principles depend on whether humans can follow them effectively.

most times not, leading to the software crisis

what are the 6 productivity drains

misaligned work

excess work in progress

rework

demand vs. capacity imbalance

repetitive and manual effort

aged and cancelled effort

what is the drain - misaligned work

basically ‘productive procrastination’

based on unclear communication and poor grasp on priorities

developers tackle tasks that don’t directly contribute to the important tasks

can tech debt, TODO be classed as misaligned work? there’s a balance between what we should do now or what we should leave

what is the drain - excess work in progress

no penalty for overloading developers

this creates a silent stressor as incomplete tasks pile up and project deadlines slip (tech debt)

what is the drain - rework

having to redo work - possibly refactoring, again, debatable on whether refactoring is rework or is useful

a roadblock to productivity (blocker) caused by

a tangled web of unclear requirements

poor communication between teams

inadequate testing practices

unaddressed tech debt

what is the drain - demand vs. capacity imbalance

mismatch between the demand for work and the available capacity to handle it (basically, either too much work or no work at all, never a balance)

occurs when one stage in a connected workflow operates too quickly or slowly for the next stage

what is the drain - repetitive manual effort

repetitive tasks like manual testing, data entry, and low value work

this work steals valuable time from better more innovative tasks and could just be automated

what is the drain - aged and cancelled effort

miscommunication/inflexibility can cause work to be abandoned or obsolete (binned work) before completion

caused by lack of adaption to new information or feedback

what is software cost estimation

estimating the time and cost and effort it will take to develop a system

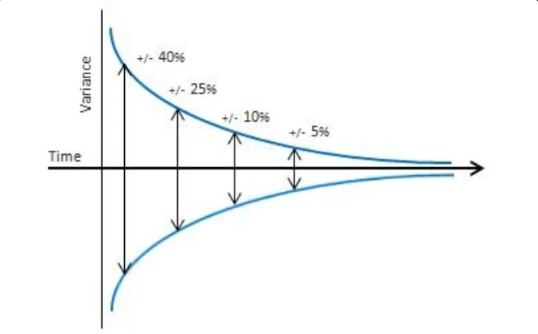

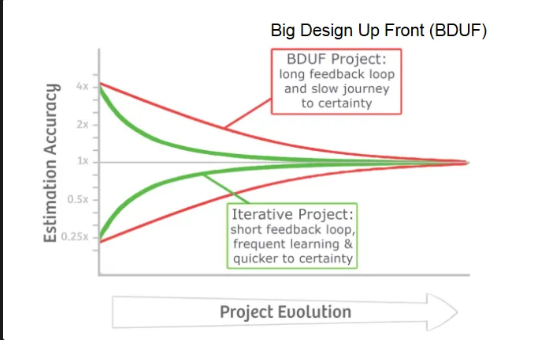

what is the cone of uncertainty in estimation

concept that shows that over time, the amount of error you make in estimating the amount of time it takes to complete something goes down

estimation accuracy increases as you become more knowledgeable in what the system is going to do

what is a BDUF project

big design up front

associated with waterfall methodology where design must be completed and perfected before implementation

what is an iterative project

the project is developed in iterations or stages that can be repeated as needed, associated with agile

how does the cone of uncertainty differ between BDUF and iterative projects

BDUF - greater estimation error

iterative - better estimation accuracy

what are the different software estimation techniques

algorithmic cost modelling

expert judgement

estimation by analogy

Parkinson’s law

specification-based

what is algorithmic cost modelling estimation

a formulaic approach based on historical cost information, generally based on software size

cost/effort is estimated as a formula including product, project, and process attributes (A, B, M)

values estimated by project manager

most models are similar but have different A, B, M values

doesn’t always involve experts, just people who collect the data

what is the formula for algorithmic cost modelling

Effort = A * (B/M)

A is anticipated system complexity decided by the PM - this again is subjective to define

B the number of hours available

M a value reflecting the number of product, process and people attributes

what is expert judgement estimation

one or more experts in both software development and the application domain use their experience to predict the software costs

process iterates until consensus is reached

similar to algorithmic cost modelling, but intuition only and no formula

what are the pros of expert judgement

cheap estimation method

can be accurate if experts have direct experience of similar systems

what are the cons of expert judgement

very inaccurate if there are no true experts

what is estimation by analogy

cost of a project computed by comparing the project to a similar project in the same application domain

NASA does this a lot since many systems are similar, so past systems act as a good guide for future system estimation

what are the pros of estimation by analogy

accurate if previous project data available

what are the cons of estimation by analogy

impossible if no comparable project has been done, so you need to start from the beginning

needs systematically maintained cost data

what is Parkinson’s law estimation

states that the work expands to fill the time available, so whatever costs are set, they’ll always be used up

how do we combat Parkinson’s law

state up front that the project will cost whatever resources are available and that's all it's getting (applying a fixed cost)

what are the pros of Parkinson’s law

no risk of overspending

what are the cons of Parkinson’s law

system is usually unfinished because the cost is determined by the available resources instead of the objective statement

what is specification-based estimation

project cost is agreed on basis of an outline proposal and the development is constrained by that cost

a detailed specification may be negotiated

may be the only appropriate technique when other detailed information is lacking - with no prior knowledge, the specification itself is the guide to how long it will take e.g. making a spec in uni, you are your own expert

what are some issues with Cyclomatic Complexity

entirely different programs can have the same CC value

can't be calculated where case and switch statements are used for conditions

people argue it is no better than LOC, might as well use LOC

hard to interpret e.g. CC 10 vs. CC 20, is it 2x as complex?

some classes have a very high CC but can be simple and easy to understand e.g. doing 1 thing that has many conditions

developed for procedural languages like C, should it be used for OO?

CC is often replaced by avg(CC), the average CC of the methods in a class

this does not represent the spread of CC across the class e.g. {10,1,1} vs. {4,4,4}
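
A minimal sketch of the point above, using the two example sets from the card. The class and method names (`CcSpread`, `average`, `max`) are made up for illustration; the per-method CC values are assumed to have been measured already.

```java
import java.util.Arrays;

class CcSpread {
    // Mean CC of the methods in a class.
    static double average(int[] methodCc) {
        return Arrays.stream(methodCc).average().orElse(0.0);
    }

    // Highest CC of any single method in the class.
    static int max(int[] methodCc) {
        return Arrays.stream(methodCc).max().orElse(0);
    }

    public static void main(String[] args) {
        int[] skewed = {10, 1, 1}; // one complex method, two trivial ones
        int[] even = {4, 4, 4};    // complexity spread evenly

        // Both classes report the same average, so avg(CC) alone hides the hot spot.
        System.out.println(average(skewed) + " (max " + max(skewed) + ")");
        System.out.println(average(even) + " (max " + max(even) + ")");
    }
}
```

Reporting the maximum alongside the average is one simple way to show the spread; it is not the only option.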

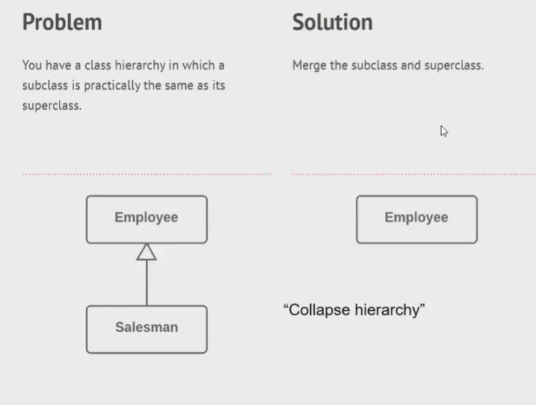

what are some issues with DIT

nobody has come up with a good value for DIT e.g. earlier inheritance study is not the only correct one

some systems need lots of inheritance and some don’t e.g. GUI lots DIT vs. maths based systems not a lot DIT

most developers in industry don’t see the value of DIT, so what’s the point in collecting it? LOC and CC are more favoured - most systems have a median DIT = 1

most systems collapse to DIT 1 as a result of merging sub and super classes

DIT meant to reflect how humans structure info but in reality doesn’t really show this, we don’t know what level of inheritance is good

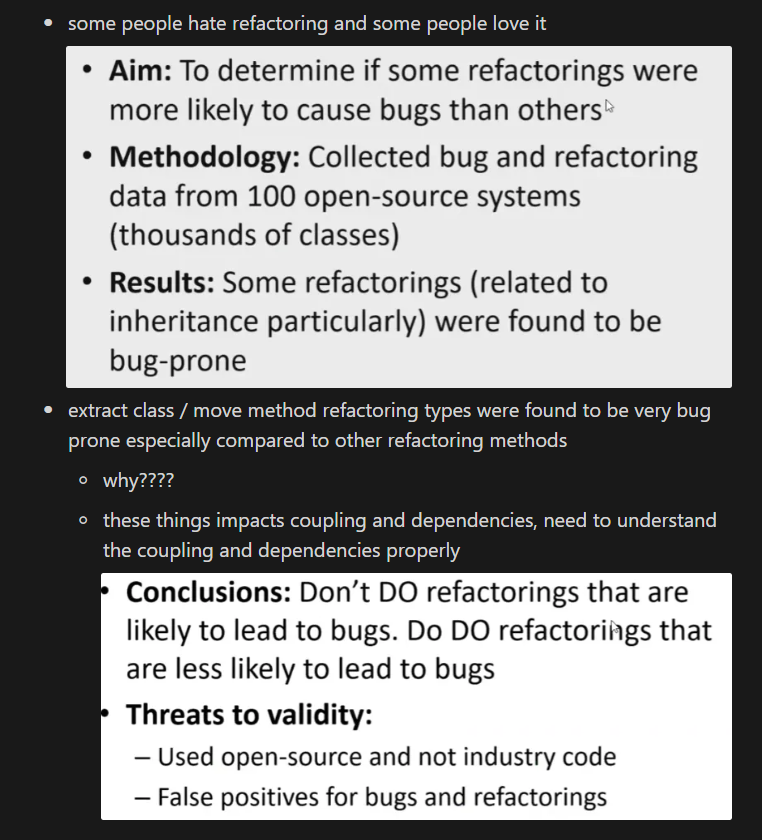

what is Di Penta 2020 refactoring study about

so many things were developed in the 60s-80s, because nobody has come up with a better alternative since

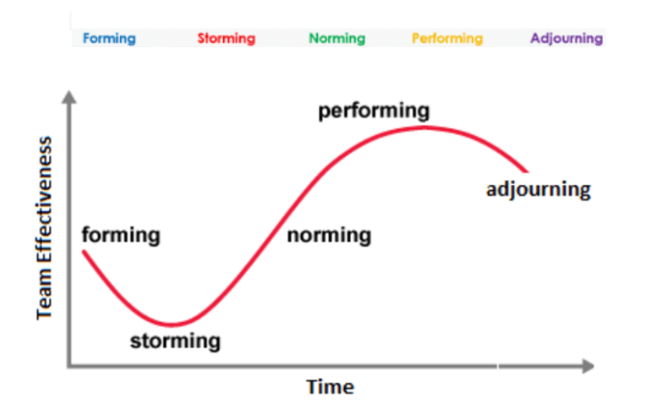

what is Tuckman’s Theory of Teams

suggests that every team goes through four key stages as they work on a task before it can become effective

these phases are all necessary and inevitable for a team to grow up to face challenges, tackle problems, find solutions, plan work, and deliver results

as the team develops maturity and ability, relationships establish, and leadership style changes to more collaborative or shared leadership

what are the phases in Tuckman’s theory of teams

forming

storming

norming

performing

adjourning

what is the forming phase

team spends time getting acquainted

open discussion on the purpose, methods, and problems of the task

what is the storming phase

open debate - conflict may exist on the goals, leadership, methods of task

most challenging stage - lack of trust, personality clashes, productivity nosedive from misaligned work

what is the norming phase

a degree of consensus enables the team to move on

differences are worked through, team learns to cooperate and take responsibility - focus on team goals and productivity

harmony between members

what is the performing phase

team is effective

open compliance emerges to group norms and practice allowing the team to be flexible, functional, and constructive

team requires minimum assistance from leadership and can be effective in productivity and performance

what is the adjourning phase

the team agrees it has completed the phase

followed optionally by mourning stage (not in the original stages but added after) where the team is nostalgic that it’s over if the team was closely bonded

what is the role of the project manager in Tuckman’s theory of teams

need to support the team throughout the different stages in different ways

forming - be open and encourage open communication, give high guidance as roles are unestablished

storming - be patient, focus the energy, support the wounded, authoritative leadership to push things back on track

norming - a chance to coordinate the consensus, can be relaxed but ensure it doesn’t go back to storming

performing - maintain the team momentum (not easy), little guidance needed

just a student study on inheritance

what is clean code

code that is

elegant and pleasing to read

focused, where each function and class exposes a single-minded attitude that remains undistracted by surrounding details ([[Single Responsibility Principle]], SRP)

taken care of by curators, developers made it simple and orderly

contains no duplication, the number of entities (classes and methods) are minimised

limit to this, before each class starts becoming a Large Class, needs to still be understandable

follows [[Rule of Three]]

follows [[Boy Scout Rule]]

why do we need clean code

better use of time in the long-term

short term pain for long term gain by reducing [[Tech Debt]]

saves money as the developer’s time is costly to pay for

easier ramp-up of new staff

easier debugging

improves maintenance in the long term

[[Code Smells]], [[Degrading]], [[Bathtub Curve]], [[Lehman's Laws]] are all impacted by how clean the code is

[[Fault Proneness]] reduction

vulnerability reduction → customer prosecution possible for not updating code to resolve this

what is staff ramp up

refers to getting new team members up to speed on the system

what are the consequences of unclean code

productivity decreases as more time is spent understanding the code rather than improving it with refactoring

what are the general principles for clean code

KISS

YAGNI

don’t let tech debt build up

what is KISS

Keep it simple stupid (KISS)

Simpler is always better (reduce complexity as much as possible)

done using Refactoring

what is YAGNI

You Aren't Gonna Need It (YAGNI)

a developer should not add functionality unless deemed necessary

what factors impact clean code

naming conventions

method conventions

comments

formatting styles

open closed principle

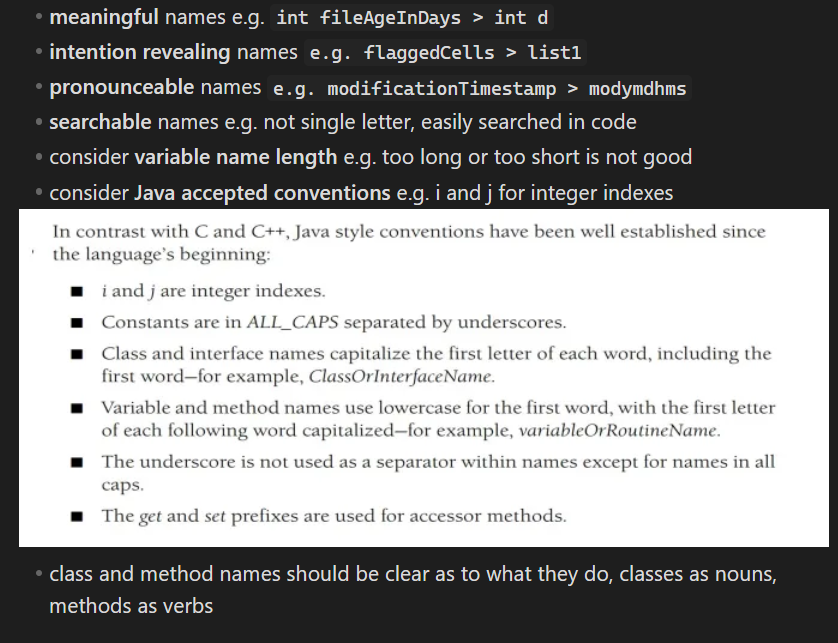

what naming conventions should be used for clean code

what method conventions should be used for clean code

minimise the number of parameters

method callers and callees should be close together in the source code

If one method calls another:

Keep those methods vertically close in the source file

Ideally, keep the caller right above the callee

We tend to read code from top-to-bottom, easier to read the code

use the same concepts across the codebase

e.g. fetchValue() vs. getValue(), don’t mix these keywords or it will cause confusion. just choose one.

use opposites properly

e.g. add/remove, start/stop, begin/end, show/hide

how do comments impact clean code

helpful or damaging, if they must be included, minimize them

poor or inaccurate comments are worse than no comments - need [[Refactoring]]

comments can lie from being outdated

the code should speak for itself, comments only added when code can’t be self documenting (Daniel Read)

![image](https://knowt-user-attachments.s3.amazonaws.com/35100a84-8bd9-4d99-ba84-a598635f95fc.png)

what are the formatting style rules to maintain clean code

Keep code lines short

Use appropriate indentation

Declare variables close to where they are used (scope - what other areas are affected and need to be considered, minimise scope)

Use vertical blank space sensibly

To associate related things and disassociate unrelated things

Keep horizontal blank space consistent (indentation)

what is the open closed principle

software entities (classes, modules, functions, and so on) should be written so that they are open for extension, but closed for modification

what is the relevance of the open closed principle

developers should be able to add new functionalities to an existing software system without changing the existing code

promotes a more cohesive class overall
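
One possible sketch of the principle, assuming a hypothetical `Shape` example that is not from the notes: `Square` is added as an extension, while `AreaCalculator` and `Rectangle` stay unmodified.

```java
import java.util.List;

interface Shape {
    double area();
}

class Rectangle implements Shape {
    private final double width;
    private final double height;

    Rectangle(double width, double height) {
        this.width = width;
        this.height = height;
    }

    public double area() {
        return width * height;
    }
}

// Added later as an extension: no existing class had to be edited.
class Square implements Shape {
    private final double side;

    Square(double side) {
        this.side = side;
    }

    public double area() {
        return side * side;
    }
}

class AreaCalculator {
    // Closed for modification: works for any current or future Shape.
    static double totalArea(List<Shape> shapes) {
        double total = 0;
        for (Shape shape : shapes) {
            total += shape.area();
        }
        return total;
    }
}
```

The alternative, a chain of `instanceof` checks inside `totalArea`, would need editing every time a shape is added.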

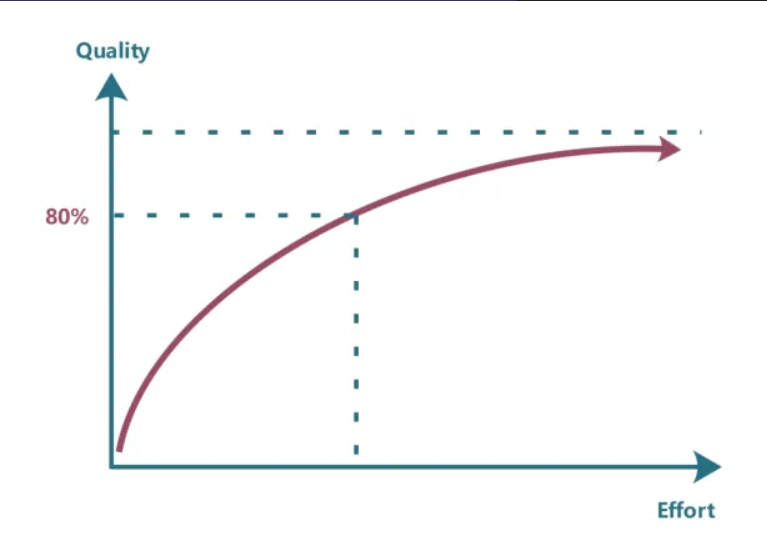

how does pareto law relate to code cleanliness

getting code quality to 100% with clean code principles takes disproportionately longer: the curve flattens out, and the last 20% of quality takes 80% of the time

almost impossible to get code 100% clean, so go for the quick gains

what is dirty code

code that is

rigid

It is difficult to change

A single change causes a cascade of other required changes

Ripple effect

fragile

The software breaks in many places after a single change

immobile

You cannot reuse parts of the code because of high effort and risks in doing so

how does clean and dirty code relate to legacy systems

legacy systems suffer from dirty code

purpose of clean code is to ensure Legacy Systems are maintained as well and for as long as possible

what is a legacy system

software systems developed specifically for an organisation that have a long lifetime; many were developed years ago using old technologies but are still business critical, meaning they are essential for the normal functioning of the business

why is changing legacy systems expensive

different parts implemented by different teams so no consistent programming style

uses old language

system documentation out of date

system structure corrupted after years of [[Maintenance]], [[Bathtub Curve]]

what is the dilemma of legacy systems

expensive and risky to replace the system, but expensive to maintain it too

businesses need to weigh up the costs and risks and decide whether to extend the system lifetime using techniques like re-engineering, or replace it

uses audit grid

how are legacy systems structured

more like socio-technical systems than just software systems, comprised of:

System hardware - may be mainframe hardware

Support software - operating systems and utilities

Application software - several different programs

Application data - data used by these programs that is often critical business information

Business processes - the processes that support a business objective and which rely on the legacy software and hardware

Business policies and rules - constraints on business operations

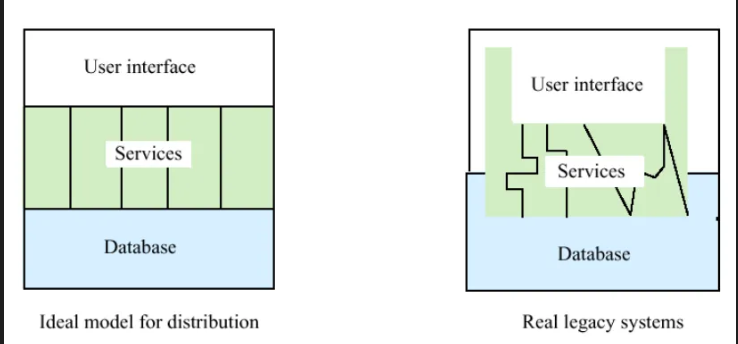

what is the ideal model for legacy system distributions

what are 2 relevant laws of code cleanliness

Hofstadter’s law

Eagleson’s law

what is Hofstadter’s Law

“it always takes longer than you expect, even when you take into account Hofstadter's Law.”

Regards the difficulty of accurately estimating the time it will take to complete tasks of high complexity

relates to Brooks' Law, longer than expected when adding people

what is Eagleson’s law

“Any code of your own that you haven’t looked at for six or more months might as well have been written by someone else.”

Law highlights the need for clear, effective [[Comments]] and clear coding standards. Not even the original programmer could decipher unclean code later down the line

what is refactoring

the process of changing a software system in such a way that it does not alter the external behaviour of the code, yet improves its internal structure

when should refactoring be done

Can be done at any time but most useful during the end of the [[Bathtub Curve]] where a system is reaching the end of its lifecycle where it starts to show signs of [[Decay]].

what are Fenton & Neil’s results on where the most faults are in a system

within a system, a small number of modules contain most of the faults

links to Pareto Rule

if we know this, we can direct effort to refactoring the 20%

does refactoring change system behaviour

no, the user should not notice any difference

refactoring a system should make the system follow the main Software Engineering Laws

what are the 2 important software engineering laws

Law of Demeter (LoD)

Single Responsibility Principle (SRP)

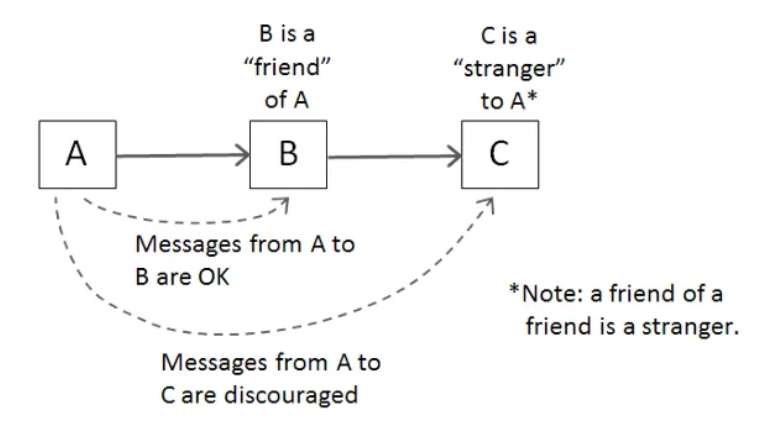

what is the Law of Demeter (LoD)

also called the principle of least knowledge

each class should have only limited knowledge about other classes: a class should only know about classes closely related to itself

Class talks to immediate friends only, stranger danger
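
A small sketch of "immediate friends only", assuming hypothetical `Shop`, `Customer` and `Wallet` classes that are not from the notes. `Shop` only talks to `Customer`; the `Wallet` stays a stranger.

```java
class Wallet {
    private int balance;

    Wallet(int balance) {
        this.balance = balance;
    }

    int getBalance() {
        return balance;
    }

    void deduct(int amount) {
        balance -= amount;
    }
}

class Customer {
    private final Wallet wallet;

    Customer(Wallet wallet) {
        this.wallet = wallet;
    }

    // The customer handles its own wallet; callers never see it.
    boolean pay(int amount) {
        if (wallet.getBalance() < amount) {
            return false;
        }
        wallet.deduct(amount);
        return true;
    }
}

class Shop {
    // Talks only to its immediate friend (Customer).
    // A violation would be reaching through: customer.getWallet().deduct(price)
    static boolean checkout(Customer customer, int price) {
        return customer.pay(price);
    }
}
```

Because `Shop` never names `Wallet`, the wallet can change without `Shop` being touched, which is the coupling reduction the law is after.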

what type of complexity does LoD relate to

Relates to [[Coupling]]

LoD acts as a design guideline for developing software that minimises coupling (and therefore should reduce complexity)

what is the Single Responsibility Principle (SRP)

Each class should only have a single responsibility

what type of complexity does SRP relate to

Relates to [[Cohesion]]

a class developed with high cohesion has only one responsibility and follows the principle

a class with low cohesion has many responsibilities and violates the principle

what type of maintenance is refactoring

preventative (prevents [[Tech Debt]], making things better now to stop the debt building up)

perfective (improve existing codebase)

what type of project lifecycles use refactoring

especially important in XP and TDD

constant regression testing important

what is the aim of successful refactoring

modify the existing codebase to

remove duplicate code - need to change in every area it’s duplicated

improve [[Cohesion]] and reduce [[Coupling]]

improve understandability and maintainability

when should you refactor

Fowler - refactor consistently and mercilessly

when you recognise a warning sign like [[Code Smells]]

when you have to fix a [[Bug]] since after the bug fix the affected code around it can be refactored

when you do a code review as part of a pair or [[Mob Programming]]

what tools can you use for refactoring

Eclipse, NetBeans, refactoring tools built into the IDE

what are the methods of refactoring (Fowler suggests 72)

Extract Method

Extract Class

Extract Subclass

Move Method

Move Field

Encapsulate Field

Replace Magic Number With Symbolic Constant

Remove Dead Code

Consolidate Duplicate Conditional Fragments

Substitute Algorithm

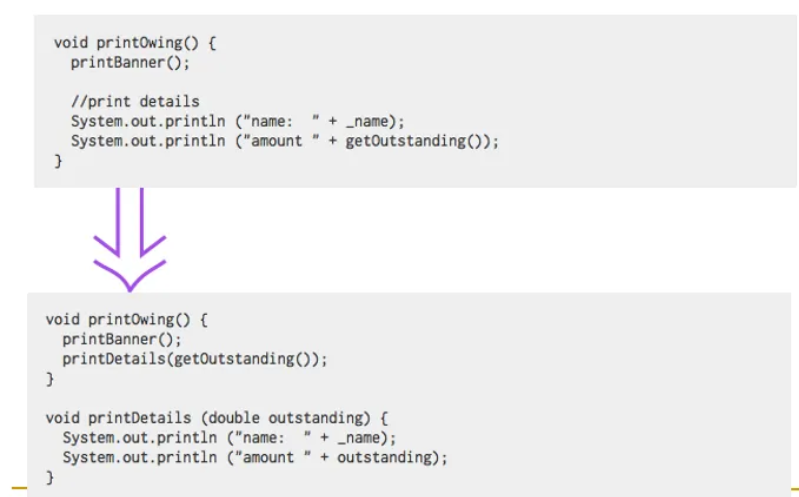

what is extract method refactoring

Applies when you have a code fragment inside some code block where the lines of code should always be grouped together

Turn the fragment into a method whose name explains the purpose of the block of code
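
A rough illustration, assuming a hypothetical `Invoice` class that is not from the notes. The lines that build the detail text used to sit inline in `summary()`; they now live in a method named after what they do.

```java
class Invoice {
    private final String customer;
    private final double amount;

    Invoice(String customer, double amount) {
        this.customer = customer;
        this.amount = amount;
    }

    // Before the refactoring, the two detail lines were built inline here.
    String summary() {
        return "INVOICE\n" + details();
    }

    // Extracted fragment: the method name explains the purpose of the block.
    private String details() {
        return "name: " + customer + "\namount: " + amount;
    }
}
```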

what is extract class refactoring

You have one class doing work that should be done by two different classes

Create a new class and move the relevant fields and methods from the old class to the new class

has the cost of increasing [[Coupling]] since there is a new dependency between the Person and Telephone Number classes, so the developer must determine whether the benefits of the refactoring outweigh the disadvantage of extra coupling
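
A sketch of how the Person and Telephone Number split mentioned above might look. The field and method names are assumptions for illustration, not taken from the notes.

```java
// New class: holds the phone-related fields and behaviour moved out of Person.
class TelephoneNumber {
    private final String areaCode;
    private final String number;

    TelephoneNumber(String areaCode, String number) {
        this.areaCode = areaCode;
        this.number = number;
    }

    String formatted() {
        return "(" + areaCode + ") " + number;
    }
}

class Person {
    private final String name;
    // The new dependency: this field is the extra coupling the card warns about.
    private final TelephoneNumber officeTelephone;

    Person(String name, TelephoneNumber officeTelephone) {
        this.name = name;
        this.officeTelephone = officeTelephone;
    }

    String getName() {
        return name;
    }

    // Person delegates instead of formatting the number itself.
    String getTelephoneNumber() {
        return officeTelephone.formatted();
    }
}
```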

![image](https://knowt-user-attachments.s3.amazonaws.com/57f9a129-2af2-4592-b4b1-411fcbc1f3fb.png)

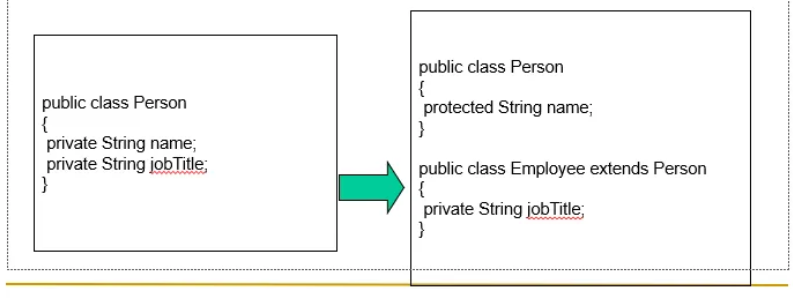

what is extract subclass refactoring

When a class has features (attributes and methods) that would only be useful in specialized instances, we can create a specialization of the class and give it those features

what is move method refactoring

A method is used more by another class, or uses more code in another class, than its own class.

Create a new method with a similar body in the class it uses most, which reduces [[Coupling]]

![image](https://knowt-user-attachments.s3.amazonaws.com/fe64ffc0-2fa9-4292-b17f-c8d3083c349d.png)

what is move field refactoring

A field is used by another class more than it is in its own class.

Move it to the class that uses it most, which reduces [[Coupling]]

![image](https://knowt-user-attachments.s3.amazonaws.com/7f9be644-8227-41c9-be4b-98c1b1ac5061.png)

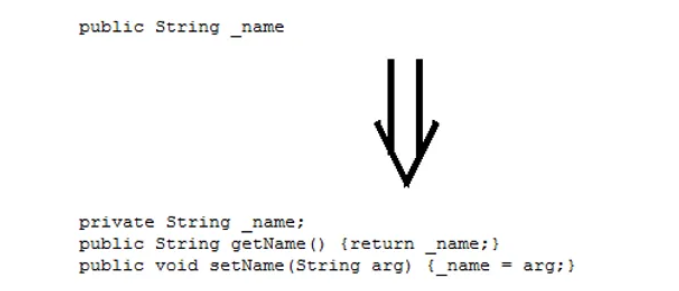

what is encapsulate field refactoring

Restrict direct access to data from outside classes and provide public getters and setters instead, ensuring data integrity
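
A minimal sketch, assuming a hypothetical `Account` class and a made-up integrity rule (no negative balance). Neither is from the notes.

```java
class Account {
    // Was: public int balance; any class could write any value.
    private int balance;

    public int getBalance() {
        return balance;
    }

    // The setter is the single place where the integrity rule is enforced.
    public void setBalance(int balance) {
        if (balance < 0) {
            throw new IllegalArgumentException("balance must not be negative");
        }
        this.balance = balance;
    }
}
```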

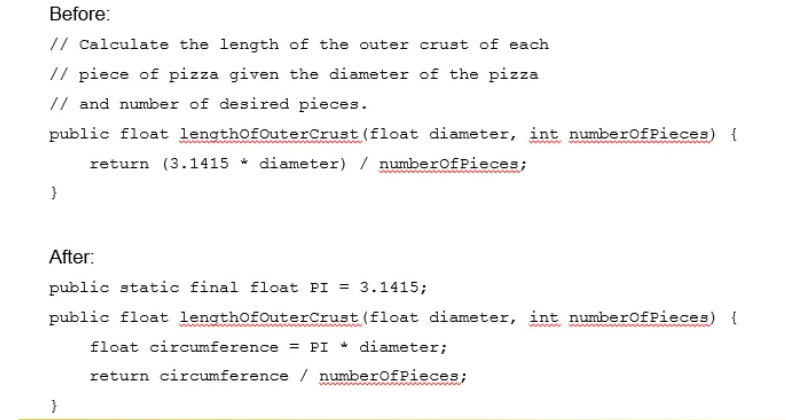

what is Replace Magic Number With Symbolic Constant refactoring

Instead of using literal values directly in code, put them into named constants where they can be easily reused and modified when needed
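
For instance, a bare 9.81 buried in a formula could become a named constant. The `Energy` class and its names are illustrative assumptions.

```java
class Energy {
    // Was a bare 9.81 inside the formula below.
    static final double GRAVITATIONAL_CONSTANT = 9.81;

    static double potentialEnergy(double mass, double height) {
        return mass * GRAVITATIONAL_CONSTANT * height;
    }
}
```

If the value ever needs changing, it changes in one place, and the name says what the number means.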

what is remove dead code refactoring

code is never used, removing it tidies up code base, improves readability and understandability

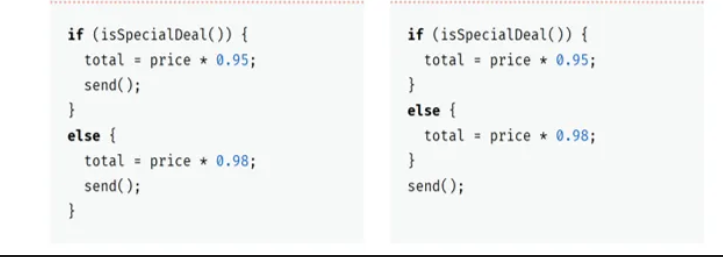

what is consolidate duplicate conditional fragments refactoring

Identical code can be found in all branches of a conditional

move the duplicate code outside the conditional
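
A sketch under assumed names (`Checkout`, `process`, `send`) and made-up discount rates. Before the refactoring both branches ended with the same `send(total)` call; afterwards it appears once, after the conditional.

```java
import java.util.ArrayList;
import java.util.List;

class Checkout {
    // Records what was sent so the behaviour can be observed.
    final List<Double> sent = new ArrayList<>();

    void send(double total) {
        sent.add(total);
    }

    void process(double price, boolean specialDeal) {
        double total;
        if (specialDeal) {
            total = price * 0.95;
        } else {
            total = price * 0.98;
        }
        // Consolidated: previously duplicated at the end of each branch.
        send(total);
    }
}
```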

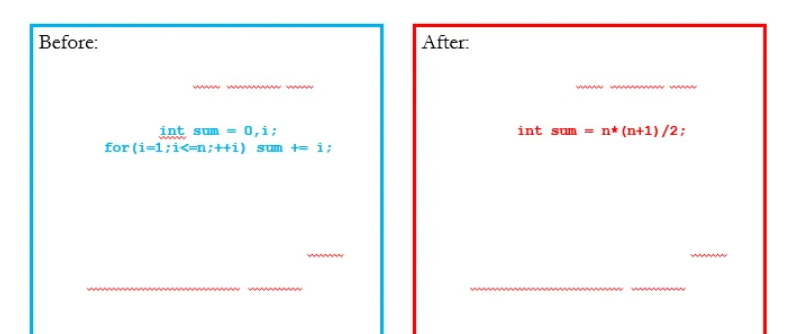

what is substitute algorithm refactoring

Takes one algorithm and replaces it with a shorter and more efficient algorithm
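
A small illustration with assumed names (`PersonFinder`, `foundPerson`) and a made-up candidate list. The earlier version is imagined as a chain of if-statements comparing each name against each candidate; a single membership check gives the same result.

```java
import java.util.List;

class PersonFinder {
    private static final List<String> CANDIDATES = List.of("Don", "John", "Kent");

    // Returns the first name that is a known candidate, or "" if none is.
    static String foundPerson(String[] people) {
        for (String person : people) {
            // Replaces one if-statement per candidate name.
            if (CANDIDATES.contains(person)) {
                return person;
            }
        }
        return "";
    }
}
```

As with any refactoring, callers should see the same results before and after the swap.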

what are the advantages of refactoring

Reduces duplicate and inefficient code

Improve cohesion, reduce coupling (unnecessary coupling)

Improves the understandability of code, maintainability, etc. by reducing complexity

Can help reduce technical debt

Can help reduce bugs since the code is more maintainable

Can be done at any stage of a system’s life