Agent-Based Assessments of Criminological Theory

Overview

Criminological theories describe postulated mechanisms of cognition and action for both potential victims and offenders, as well as the role of the surrounding environment in situating victimization.

Yet, due to the inherent difficulties of observation and testing, we are frequently unable to observe directly how such interactions occur and must instead observe only their outcomes.

This gap between what individual-level crime theories hypothesize and what can be observed has prompted a number of criminologists to investigate computational models as a way to better characterize and understand the complex interactions of the crime event.

The agent-based model is a method for investigating the generative sufficiency of individual-level criminological theory; the approach has its own strengths, weaknesses, and prerequisites.

Computational Agent-Based Modelling in the Social Sciences

Social sciences: These examine the functioning and interconnections of society, and complexity is intrinsic to the vast majority of the phenomena they study.

- Herbert Simon argued that the so-called "soft" social sciences should be renamed the "hard" sciences because of the many obstacles that research in these fields faces.

- Epstein and Axtell (1996) examine the methodological challenges that have hindered the effectiveness of standard equation-based models for theory testing.

A summary of these observations follows:

- Most social phenomena are comprised of several mechanisms that all function in tandem - geographical, cultural, economic, demographic, and so on - and are rarely defined by discrete, easily decomposable subprocesses.

- Due to a number of ethical and practical obstacles, controlled experiments designed to test hypotheses in the social sciences are frequently challenging to conduct.

- Conventional social science models frequently presume that all entities are perfectly rational actors with access to perfect information.

- Most social science models conceal unit heterogeneity through the use of "representative agent" strategies in order to reduce processing needs.

- Such heterogeneity is fundamental in social systems, yet the social sciences have had no natural methodology for systematically studying highly heterogeneous populations.

- Models in the social sciences frequently presume social systems can be described as static equilibria, thereby ignoring the significance of temporal dynamics.

Agent-based models (ABMs): These are computational modeling tools that hold great potential for those wishing to construct explanatory models of complicated social systems.

- a well-established computer simulation technique with numerous real and promising applications in a variety of fields.

- replicates the interactions between various autonomous entities in order to analyze how decentralized individual actions influence the behavior of the entire system.

- enables social science researchers to develop virtual societies and populate them with modeled populations of heterogeneous autonomous actors, letting them investigate the relationship between daily human decisions and observable events.

- fundamentally composed of two elements: a population of agents and a simulated environment wherein they exist.

- considered a "natural" paradigm for the study of human systems, according to proponents of the approach, because of its capacity to capture the linkages between micro-action and macro-result.

Simulation Agents

- In an ABM, each member of the virtual population is represented by an autonomous entity that makes decisions on its own, also known as an agent.

- Agents can exhibit individual attributes, preferences, and behaviors, such as age, place of residence, and preferred social group.

- A range of agent behaviors governs how agents perceive, reason, and act in specific contexts.

- These behaviors are characterized by a set of condition-action rules, and algorithms and heuristics are built to reflect the individual-level mechanisms proposed by theory.

- ABM can be utilized by social scientists to examine the validity of social science theories.
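
The condition-action rules described above can be sketched in a few lines of Python. This is a minimal illustration, not an implementation from the source: the `Agent` class, the percept keys (`target_present`, `guardian_present`), and the action names are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Tuple

# A rule pairs a condition (does it apply?) with an action (what to do).
Condition = Callable[["Agent", Dict[str, bool]], bool]
Action = Callable[["Agent", Dict[str, bool]], str]


@dataclass
class Agent:
    age: int                      # individual attribute
    home: Tuple[int, int]         # place of residence on a hypothetical grid
    rules: List[Tuple[Condition, Action]] = field(default_factory=list)

    def step(self, percept: Dict[str, bool]) -> str:
        """Return the action of the first rule whose condition holds."""
        for condition, action in self.rules:
            if condition(self, percept):
                return action(self, percept)
        return "idle"  # no rule matched


# Hypothetical rules for a would-be offender (illustrative only).
offender_rules = [
    (lambda a, p: p["target_present"] and not p["guardian_present"],
     lambda a, p: "offend"),
    (lambda a, p: p["guardian_present"],
     lambda a, p: "move_on"),
]

agent = Agent(age=19, home=(0, 0), rules=offender_rules)
```

Calling `agent.step({"target_present": True, "guardian_present": False})` returns `"offend"`, while any percept with a guardian present returns `"move_on"`. Keeping rules as data makes it easy to swap in a different theoretical mechanism without touching the agent class.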

Simulation Environment

- ABM agents are placed into an environment, which can take on various forms depending on the objective of the model being constructed.

- Model environments can represent abstract physical or social space, or they might be created to closely resemble actual environments.

- The appropriate amount of realism for certain simulation environments and ABM in general is the subject of significant debate.

- The objective of simulation study is to ^^determine whether the choice mechanism of agents is sufficient to generate the pattern of interest^^.

- The application of simulation is intended to establish the ^^sufficiency of the mechanism^^ regardless of its operating environment.

- One method is to create simulations in each of the aforementioned realistic situations, while another is to abstract the environment and have the simulation operate in an abstract setting.

Agent-Based Interactions

- ABM can be used to describe a variety of interactions, including agent-agent interactions, agent-environment interactions, and temporal dynamics.

- Agent-agent interactions are those in which agents get information or resources from one another, build social ties with other agents, or compete over a simulated entity.

- Interactions between the environment and an agent are circumstances in which the environment influences an agent, for example by restricting movement or determining where particular activities can and cannot occur.

- ABMs imitate the passage of time through discrete increments, typically referred to as cycles, allowing longitudinal analysis of time-dependent phenomena including the development, interaction, and separation of system elements through time.

- These temporal dynamics are particularly crucial for the modeling of phenomena such as tipping points, in which the accumulation of individual actions over time can result in abrupt and substantial deviations in system behavior.
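
Discrete cycles and tipping points can be illustrated with a simple threshold model in the style of Granovetter's collective-behavior thresholds. This model is swapped in for illustration and does not come from the source; the function name and parameters are assumptions. Each agent becomes active once the number of already active agents reaches its personal threshold.

```python
def run_threshold_model(thresholds, max_cycles=100):
    """Return the number of active agents recorded after each cycle."""
    # Agents with threshold 0 act without needing anyone else.
    active = [t == 0 for t in thresholds]
    history = [sum(active)]
    for _ in range(max_cycles):
        n_active = sum(active)
        # Synchronous update: every agent reacts to the previous cycle's count.
        active = [a or t <= n_active for a, t in zip(active, thresholds)]
        history.append(sum(active))
        if history[-1] == history[-2]:  # nothing changed: the system settled
            break
    return history


cascade = run_threshold_model(list(range(10)))
stalled = run_threshold_model([0, 2] + list(range(2, 10)))
```

With thresholds 0 through 9, one more agent activates in each cycle until all ten are active. Raising a single threshold from 1 to 2 stops the cascade after the first agent, which shows how a small individual-level change can produce an abrupt difference in system behavior.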

Characteristics of ABM

Autonomy: ABMs lack comprehensive top-down control methods.

- Rather, each agent in the simulation independently perceives, reasons, and acts.

- Although agents may communicate information directly or indirectly through the environment, there is no centralized controller that regulates behavior.

Heterogeneity: ABMs simulate many agents that differ from one another, both within and between groups.

- Agents may use probabilistic or deterministic reasoning.

- Agents have various internal properties but use the same decision calculus.

- Capturing unit heterogeneity is crucial for studying real-world phenomena when unit homogeneity is rare.

Explicit Space: ABMs depict entities embedded in an abstract or realistic space, which makes it possible to define what counts as a local interaction.

Local Interactions: Equation-based models presume that system entities have complete awareness of their environment and the other system elements.

- This is an unrealistic expectation.

- ABMs focus mostly on interactions between entities that are physically or socially close to one another inside the simulated environment.

Bounded Rationality: Agents' rational decision-making can be confined to localized, limited information.

- Hence, rationality is bounded by the knowledge available when the decision is made.

- Agent behaviors can use bounded computation to avoid endlessly searching all possible actions to find the best solution.

- These rationality representations are more realistic.
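
Local interaction and bounded rationality can be sketched together: an agent that evaluates only the cells it can perceive, rather than the whole environment. The grid, the payoff values, and the `best_visible_cell` helper below are hypothetical and serve only to illustrate the idea.

```python
def best_visible_cell(grid, position, radius):
    """Return the highest-payoff cell within `radius` cells of `position`.

    Visibility is a square neighbourhood (Chebyshev distance), clipped at
    the grid edges. The agent never inspects cells outside it.
    """
    rows, cols = len(grid), len(grid[0])
    r0, c0 = position
    visible = [
        (r, c)
        for r in range(max(0, r0 - radius), min(rows, r0 + radius + 1))
        for c in range(max(0, c0 - radius), min(cols, c0 + radius + 1))
    ]
    return max(visible, key=lambda rc: grid[rc[0]][rc[1]])


# Hypothetical payoff landscape; the best cell overall is far from the agent.
payoffs = [
    [1, 2, 0, 0, 9],
    [0, 3, 0, 0, 0],
    [0, 0, 0, 0, 0],
]

local_choice = best_visible_cell(payoffs, position=(0, 0), radius=1)
global_choice = best_visible_cell(payoffs, position=(0, 0), radius=4)
```

With a perception radius of 1 the agent settles for cell `(1, 1)`, the best option it can see. Only with a radius large enough to cover the whole grid does it find the global optimum at `(0, 4)`.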

Agent-based modeling has several epistemic advantages:

- Accessibility: Agent-based methods help researchers and others understand difficult ideas.

- ABM components are usually defined individually, making them easier to understand than complex mathematical abstractions.

- Informing and interpreting ABM does not require ABM expertise.

- Domain experts may ask better model questions than developers.

- Aiding Scientific Discourse: The intuitive representation of complicated processes by ABM can result in enhanced scientific discourse and the formalization of theoretical ideas.

- It can also reveal logical errors and generate new questions and possibilities.

- Simulation Experimentation: ABMs enable experiments that would otherwise be impossible due to financial, ethical, or logistical limitations.

- The number of simulation experiments is limited chiefly by the processing power and time available to researchers.

- Simulation experiments can be performed easily and fast.

- Absolute Control: ABM is an innovative alternative to controlled social experiments.

- Unlike traditional experimentation, ABM lets researchers adjust any number of factors to study dose-response relationships in virtually limitless configurations.

- Absolute Observation: ABMs create a synthetic version of real-world systems in which precise observation and measurement can occur.

- It is possible to collect simulation data sets that describe every action taken by members of a simulated population and their underlying calculations.

- In Situ and In Silico Experimentation: ABM can help identify new empirical avenues of inquiry, while empirical work can improve ABM through parameterization and validation, leading to advancements in the investigation of phenomena.

Agent-Based Modelling of Social Systems and the Generative Explanation

- ABM has been used in the social sciences to suggest a "third way of doing science," distinct from inductive and deductive reasoning.

- The agent-based computational model, often known as an artificial society, is a new scientific tool that allows social macrostructures of interest to be "computed" experimentally.

- Society is viewed as a distributed computing apparatus that computes macrostructures from microaction.

- The generativist believes complex social science phenomena can be comprehended by synthesizing their genesis from lower-order activity and interaction.

- A theory can be tested by building ABMs of a system's proposed microlevel mechanisms and checking whether they generate the target's macroscopic regularities.

- Generative social science evaluates theory by identifying microlevel mechanisms that yield macro-level output patterns that match observable regularities.

- If a microlevel mechanism cannot generate the observed regularities, it may be discarded as a plausible explanation of the target phenomenon.

- ABM helps generative social science find explanations.

- The generativist first eliminates insufficient hypotheses, leaving only sufficient explanations.

- This procedure removes insufficient, implausible, and empirically falsified explanations, but it is unlikely to yield a single valid explanation.

- Generative social science has explored civil violence, epidemic control, and indigenous community cultural change.
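
The screening logic of the generative approach can be sketched as a filter over candidate mechanisms. Everything in this example is hypothetical: the mechanism names are invented, the lambdas are stand-ins for full simulation runs, and their crime counts are made-up illustrative numbers, not results.

```python
def top_place_share(counts):
    """Fraction of all events that occur at the single busiest place."""
    return max(counts) / sum(counts)


def screen_mechanisms(candidates, reproduces_target):
    """Split candidate micro-mechanisms into sufficient and rejected ones."""
    sufficient, rejected = [], []
    for name, simulate in candidates.items():
        if reproduces_target(simulate()):
            sufficient.append(name)
        else:
            rejected.append(name)
    return sufficient, rejected


# Stand-ins for simulation runs: each returns crime counts for ten places.
candidates = {
    "uniform_choice": lambda: [10] * 10,
    "attractor_seeking": lambda: [55, 20, 5, 5, 5, 2, 2, 2, 2, 2],
    "routine_overlap": lambda: [60, 10, 10, 5, 5, 2, 2, 2, 2, 2],
}

# Assumed target regularity: the busiest place holds at least half of all crime.
sufficient, rejected = screen_mechanisms(
    candidates, lambda counts: top_place_share(counts) >= 0.5
)
```

Here one candidate is rejected and two survive, which mirrors the point made above: eliminating insufficient mechanisms narrows the field but need not leave a single explanation.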

Linking Micro-crime Theory and Macro-crime Patterns: Generative Agent-Based Models of the Crime Event

- Using Epstein's generative social science concept, the ABM technique can be used to investigate the propositions of criminological theory.

- Examining the explanatory sufficiency of micro-specifications requires two things: micro-specifications of the crime event and empirically derived macroscopic regularities of crime. Criminology has both.

Micro-specifications of the Crime Event

- %%Routine activity approach and pattern theories of crime%%: These describe how the characteristics and interactions of individual-level activities determine the spatial and temporal distribution of criminal behavior and victimization.

- Agents may be potential offenders, potential victims, and potential guardians who move throughout a geographical area.

- Decision rules explain how various agents react to the presence of one another.

- %%Rational choice perspective%%: This provides a quantifiable offender decision calculus based on boundedly rational evaluations of perceived risks, rewards, and efforts faced in prospective pre-crime circumstances.

- Agents are individual would-be offenders who react to opportunities, weighing the rewards and effort involved in victimizing a target against the associated risks, partly in terms of social (dis)approval by other agents in a social network.

- %%Social learning theory%%: This provides hypotheses concerning mechanisms of offender reinforcement.

- Agents may be repeat offenders whose decision calculus considers past offenses and their success or failure.

- %%Network theory of peer association%%: Here, agents represent individuals who have particular peer relationships.

- The decision rules for engaging in criminal activity, and for (dis)continuing membership of a peer network, are specified in terms of the number of peer agents that commit simulated crimes.

- %%Social disorganization theory%%: This proposes communications of risk and crime control among peer (neighborhood) groups.

- Agents will be individuals in social contexts, which will be modeled via social connections and disconnections between agents.

- The decision rules will be based on risk communication between agents and the likelihood of agents exercising crime control over others.
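
As one example of turning a micro-specification into code, the rational choice calculus of perceived risks, rewards, and efforts might be sketched as below. The functional form (an expected-value calculation), the `penalty` and `peer_disapproval` parameters, and all numeric values are assumptions made for illustration; the source does not specify a formula.

```python
from dataclasses import dataclass


@dataclass
class Opportunity:
    reward: float  # perceived payoff if the offence succeeds
    effort: float  # perceived effort needed to reach and victimize the target
    risk: float    # perceived probability of being caught, between 0 and 1


def perceived_utility(opp, penalty=10.0, peer_disapproval=0.0):
    """Assumed calculus: expected reward minus expected penalty and costs."""
    expected_gain = (1 - opp.risk) * opp.reward
    expected_loss = opp.risk * penalty
    return expected_gain - expected_loss - opp.effort - peer_disapproval


def choose(opportunities, **kwargs):
    """Return the best opportunity, or None if none has positive utility."""
    best = max(
        opportunities,
        key=lambda o: perceived_utility(o, **kwargs),
        default=None,
    )
    if best is None or perceived_utility(best, **kwargs) <= 0:
        return None
    return best


easy_target = Opportunity(reward=8.0, effort=1.0, risk=0.1)
risky_target = Opportunity(reward=20.0, effort=2.0, risk=0.6)
```

Under these assumed values the agent prefers `easy_target`, and raising `peer_disapproval` to 6.0 makes it abstain altogether. The agent is boundedly rational in that it compares only the opportunities passed to `choose`, not every possible target.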

Macrostructures of Crime

- %%Spatial and Temporal Clustering%%

- Research demonstrates that the spatial patterning of crime adheres to the Pareto law of concentration, with the bulk of crime concentrating in a small number of geographic areas, sometimes known as crime hot spots or hot places.

- Crime is not distributed evenly over time.

- Very few locations consistently experience high levels of crime.

- Crime hot spots in time are frequently noted, whether by hour of the day, day of the week, or month of the year, during which the rate of victimization is disproportionately high compared with other periods.

- %%Repeat and Near-Repeat Victimization%%

- Crime is not only spatially and temporally concentrated, but also concentrated on a small number of persons, locations, and targets.

- These persons, locations, and targets, typically referred to as "repeat victims," are victimized at disproportionate rates.

- Recent studies have also repeatedly demonstrated spatiotemporally clustered near-repeat victimization, i.e. the victimization of additional targets in close proximity to previously victimized targets.

- %%Repeat Offending%%

- While research has shown that victimization is concentrated in specific regions, at specific times, and against specific targets, research has also consistently established that the prevalence of criminal activity is similarly disproportionally distributed among offenders.

- A small percentage of offenders is typically responsible for a large percentage of crime.

- %%Journeys to Crime%%

- Many studies analyzing the spatial characteristics of journeys to crime have revealed the existence of a distance decay function, in which the bulk of crime trips occur within a short distance of offenders' residences.

- Similar observations have been made for a variety of crime types, such as residential burglary, robbery, and rape.

- %%The Age-Crime Curve%%

- Research indicates that younger people are more likely to commit crimes than older people.

- After rising through adolescence and peaking in the late teens or early twenties, the frequently cited age-crime curve declines steadily.
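
Several of the regularities above are statements about concentration: crime across places, victimization across targets, and offending across offenders. One simple way to quantify them, sketched here as an assumption rather than a measure prescribed by the source, is the share of events accounted for by the busiest fraction of units. The function name and the sample counts are hypothetical.

```python
def concentration_share(counts, top_fraction=0.2):
    """Share of all events that fall in the busiest `top_fraction` of units.

    `counts` holds one event count per unit (place, target, or offender).
    """
    total = sum(counts)
    if total == 0:
        return 0.0
    # At least one unit is always included in the "top" group.
    k = max(1, round(top_fraction * len(counts)))
    return sum(sorted(counts, reverse=True)[:k]) / total


# Illustrative counts only: ten places with 100 events in total.
concentrated = [50, 30, 5, 5, 3, 3, 2, 1, 1, 0]
even = [10] * 10
```

For the `concentrated` counts the busiest 20% of places hold 80% of events, while for the `even` counts they hold 20%. Applying the same function to simulated and observed counts gives a like-for-like comparison of a macrostructure.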

Computational Experiments

Generative ABM: This is a well-suited instrument for determining whether statements derived from theory represent proximal mechanisms that play a substantial role in the commission of crime.

- To test theories, simulation studies are conducted, and simulated crime patterns are compared to appropriate macroscopic regularities.

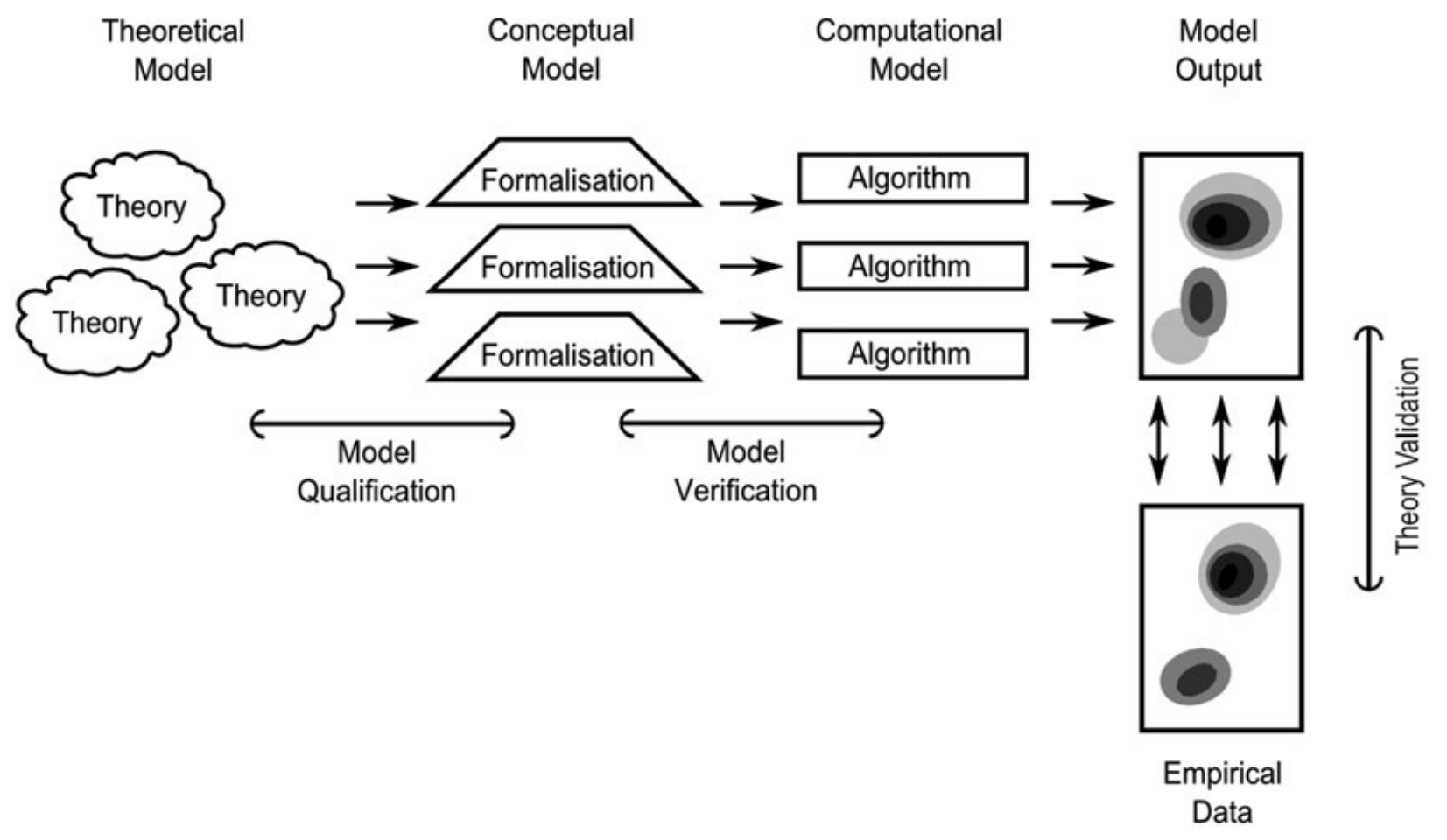

- Model qualification evaluates whether the conceptual model adequately represents the original, frequently "fuzzy," theoretical model.

- The refinement of conceptual formalizations to reflect theoretical notions is followed by the creation of a computational model embodying the conceptual model.

- Model verification determines if these computational constructions adequately replicate the conceptual model from which they were formed.

- Model result validation evaluates the adequacy of theoretically defined computational constructs in representing the output behavior of the target system as a whole; this requires controlled simulation experiments.

Characteristics crucial to the design and execution of such simulation experiments:

- %%Address Theoretical Research Question%%

- Successful simulation experiments must address a particular theoretical research question.

- %%Systematic Control of Experimental Conditions%%

- In silico studies should adhere to stringent design rules and employ counterfactual simulation states in order to evaluate the effects of model modifications.

- To illustrate, agents' behaviors should be designed to reflect both the absence and the presence of the suggested mechanism.

- The results of simulations conducted with agents functioning under both experimental and control settings should be examined.

- %%Simulation Replication%%

- Replicating simulations helps identify implementation-specific issues that may affect observed outcomes.

- It requires little investment besides time and processing power and is quite simple to execute.

- The primary challenge is making sense of the multitude of generated results.

- %%Robustness Testing%%

- The purpose of this simulation testing is to investigate the impact of parameter changes on outcome patterns.

- It entails modifying model parameters, rerunning simulations, and analyzing variations in model results.

- Testing for robustness evaluates distributional equivalency, ensuring that model plausibility does not break down when seemingly harmless adjustments are made.

- It is essential to investigate a number of crucial model parameters within the computational restrictions of a given study, and stochastic models should also be subjected to robustness testing with regard to specified random number seeds.

- %%Levels of In Situ/In Silico Equivalence%%

- Axtell and Epstein (1994) suggest several levels of in situ and in silico system equivalence, ranging from qualitative macro equivalence to quantitative equivalence.

- Although abstract models may not provide quantitative equivalence, they do permit estimates of generative sufficiency and provide insight into the expected dynamics of the underlying processes.

- %%Multiple Output Measures%%

- When evaluating in situ/in silico equivalence, it is crucial to analyze multiple relevant regularities, as the objective of science is to identify descriptions of a system that are compatible with observable occurrences.
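
The experimental design described above, with a control condition, an experimental condition, and replication over random number seeds, can be sketched with a toy model. The "reinforcement" rule below (offenders are more likely to return to places with prior offences) is a hypothetical mechanism chosen for illustration, and all names and parameter values are assumptions.

```python
import random
from statistics import mean


def simulate(reinforcement, seed, n_places=50, n_events=500):
    """Toy crime model: each event picks a place; returns counts per place."""
    rng = random.Random(seed)  # a dedicated generator makes each run repeatable
    counts = [0] * n_places
    for _ in range(n_events):
        if reinforcement:
            # Experimental condition: places with prior events attract more.
            weights = [1 + c for c in counts]
        else:
            # Control condition: every place is equally likely.
            weights = [1] * n_places
        place = rng.choices(range(n_places), weights=weights)[0]
        counts[place] += 1
    return counts


def top_share(counts, top_fraction=0.2):
    """Share of events in the busiest `top_fraction` of places."""
    k = max(1, round(top_fraction * len(counts)))
    return sum(sorted(counts, reverse=True)[:k]) / sum(counts)


def experiment(seeds):
    """Run both conditions once per seed and collect the output measure."""
    results = {}
    for label, flag in (("control", False), ("mechanism", True)):
        results[label] = [top_share(simulate(flag, s)) for s in seeds]
    return results


results = experiment(seeds=range(20))
summary = {label: mean(values) for label, values in results.items()}
```

Comparing `summary["mechanism"]` with `summary["control"]` shows whether switching the mechanism on raises concentration on average across seeds. A robustness test would repeat `experiment` while varying `n_places` or `n_events`, and a fuller study would track several output measures rather than one.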