Critical Appraisal ISD Lecture 3

Critical Appraisal

Critical appraisal is the process of carefully and systematically examining research to judge its validity, trustworthiness, value and relevance.

Prioritise your Reading

Read the title: main theory or variables being investigated

Read the abstract: summary of the journal article

Why is critical appraisal important?

Are the results valid?

Do they apply to me and my patients?

Should I change my practice?

A key part of maintaining up-to-date, evidence-based practice and CPD

Develop an understanding of the research methodology used

How do I do it?

Carefully read the whole article

Why? What are the aims and objectives?

How? Has the right research methodology been used?

When? Is it recent or seminal research?

Where? Has it been published in a recognised, peer-reviewed journal? Consider the impact factor

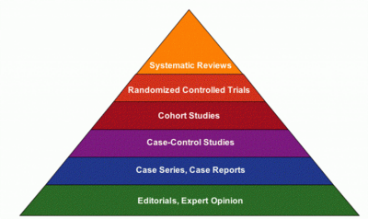

Appraising the Evidence

Is the study valid?

Is the study unbiased? Evaluate methodological quality

What are the results?

Are the results clinically important? Did the experimental group show a significantly better outcome compared to control? How much uncertainty is there about the results?
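One common way to put a number on "how much uncertainty" is a confidence interval around the difference between groups. The sketch below is a minimal illustration, assuming a binary outcome and a simple Wald (normal-approximation) interval; the function name and the example counts are hypothetical, not taken from any study.

```python
from math import sqrt
from statistics import NormalDist


def risk_difference_ci(events_treat, n_treat, events_ctrl, n_ctrl, level=0.95):
    """Risk difference (treatment minus control) with a Wald confidence interval.

    Assumes a binary outcome and groups large enough for the normal
    approximation to be reasonable.
    """
    if n_treat <= 0 or n_ctrl <= 0:
        raise ValueError("group sizes must be positive")
    p_treat = events_treat / n_treat
    p_ctrl = events_ctrl / n_ctrl
    diff = p_treat - p_ctrl
    # Standard error of the difference between two independent proportions
    se = sqrt(p_treat * (1 - p_treat) / n_treat + p_ctrl * (1 - p_ctrl) / n_ctrl)
    # Two-sided critical value, about 1.96 for a 95% interval
    z = NormalDist().inv_cdf(0.5 + level / 2)
    return diff, diff - z * se, diff + z * se


# Hypothetical counts: 30/100 events with the intervention, 45/100 with control
diff, lower, upper = risk_difference_ci(30, 100, 45, 100)
print(f"risk difference {diff:.3f}, 95% CI {lower:.3f} to {upper:.3f}")
```

A narrow interval that excludes zero suggests a more certain effect; a wide interval, or one that crosses zero, means the data are compatible with little or no difference.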

Do the results apply to my needs?

Is your clinical question answered?

Critique

Introduction:

Should state the aim of the research (usually last sentence)

Should discuss why the research is required/novel

Perhaps mention other relevant studies and findings

There may be a hypothesis: do the outcome measures that have been selected help to answer it?

Does it address a clearly focused issue?

Can you identify what type of study it is?

Method:

What kind of study is it and is it appropriate?

Does it make sense? Is it clear?

What is the study population?

Inclusion/exclusion criteria?

Have they considered all the confounding variables?

Do they treat each group the same, and do they have similar baseline demographics?

Inter-/intra-examiner calibration?

Were the participants ‘blind’ to the intervention they were given?

Were the investigators ‘blind’ to the intervention they were giving to participants?

Were the people assessing/analysing outcome/s ‘blinded’?

Were the study groups similar at the start of the research/trial?

CONSIDER: Were the baseline characteristics of each study group (e.g. age, sex, socio-economic group) clearly set out?

Were there any differences between the study groups that could affect the outcome/s?

Power calculation?

Selection, Recruitment, Observer, Participant Biases?
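When checking whether a power calculation is reported, it can help to see what one looks like. The following is a rough sketch, assuming a two-arm trial comparing means with equal group sizes and the standard normal-approximation formula n = 2((z₁₋α/₂ + z₁₋β)σ/δ)² per group; the function name and the example values are illustrative assumptions.

```python
from math import ceil
from statistics import NormalDist


def sample_size_per_group(delta, sigma, alpha=0.05, power=0.80):
    """Approximate participants needed per group to detect a mean difference.

    delta: smallest difference between group means worth detecting
    sigma: assumed common standard deviation of the outcome
    Uses the normal approximation, so it slightly underestimates the
    number a t-test based calculation would give.
    """
    if delta == 0 or sigma <= 0:
        raise ValueError("delta must be non-zero and sigma positive")
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided significance level
    z_beta = NormalDist().inv_cdf(power)           # desired power
    n = 2 * ((z_alpha + z_beta) * sigma / delta) ** 2
    return ceil(n)


# Hypothetical example: detect a difference of half a standard deviation
print(sample_size_per_group(delta=0.5, sigma=1.0))
```

If a paper recruited far fewer participants than a calculation like this would suggest, a non-significant result may reflect low power rather than no effect.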

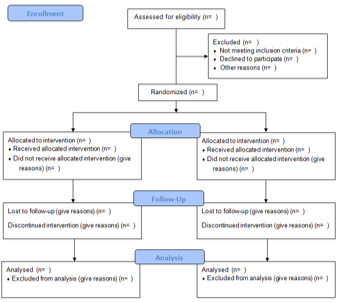

How did they recruit participants, and is the process transparent (e.g. a CONSORT flow diagram for a trial, or a PRISMA flow diagram for a systematic review)?

Is there ethical approval?

Did the patients give informed consent?
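The examiner calibration item in the Method checklist above is often reported as an agreement statistic. Cohen's kappa is one widely used option for two examiners rating the same cases on a categorical scale; the sketch below assumes that setting, and the ratings shown are made up for illustration.

```python
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa: agreement between two raters, corrected for chance."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("both raters must score the same, non-empty set of cases")
    n = len(rater_a)
    # Proportion of cases where the two raters gave the same category
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Agreement expected by chance, from each rater's category frequencies
    counts_a = Counter(rater_a)
    counts_b = Counter(rater_b)
    expected = sum(counts_a[c] * counts_b[c] for c in counts_a) / n ** 2
    if expected == 1:
        return float("nan")  # kappa is undefined if only one category was ever used
    return (observed - expected) / (1 - expected)


# Hypothetical ratings of ten cases (1 = finding present, 0 = absent)
examiner_1 = [1, 1, 0, 0, 1, 0, 1, 0, 1, 0]
examiner_2 = [1, 1, 0, 0, 1, 0, 1, 0, 0, 1]
print(round(cohens_kappa(examiner_1, examiner_2), 2))
```

The same function can be used for intra-examiner calibration by passing one examiner's first and repeat assessments of the same cases.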

Results:

Are statistics used where needed?

What statistics are they using? Are these appropriate?

Are they reporting all the outcomes they said they would measure?

Are the results significant statistically?

Are they significant clinically?

Do the conclusions they make stem from the results (i.e. facts), and not from the discussion (i.e. possible speculation)?
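Statistical and clinical significance can diverge, particularly in large studies. The sketch below illustrates one way to check both, assuming a continuous outcome where a positive difference means benefit, a normal approximation, and a minimal clinically important difference (MCID) chosen by the reader. The function name, the strict "whole interval above the MCID" rule, and the numbers are all assumptions for illustration.

```python
from statistics import NormalDist


def appraise_effect(mean_diff, std_error, mcid, level=0.95):
    """Compare a reported mean difference with zero and with an MCID.

    Assumes positive differences favour the intervention.
    """
    norm = NormalDist()
    z = norm.inv_cdf(0.5 + level / 2)
    lower = mean_diff - z * std_error
    upper = mean_diff + z * std_error
    # Two-sided p-value for the null hypothesis of no difference
    p_value = 2 * (1 - norm.cdf(abs(mean_diff) / std_error))
    return {
        "ci": (lower, upper),
        "p_value": p_value,
        "statistically_significant": p_value < 1 - level,
        # Strict rule (an assumption): the entire interval must reach the MCID
        "clinically_important": lower >= mcid,
    }


# Hypothetical large trial: a precise but small effect, against an MCID of 2.0
result = appraise_effect(mean_diff=0.5, std_error=0.1, mcid=2.0)
print(result["statistically_significant"], result["clinically_important"])
```

In this made-up example the difference is statistically significant, yet the whole confidence interval sits well below the chosen MCID, so it would be unlikely to change practice.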

Discussion & Conclusion:

Relate and compare own results to other studies

Explain new findings and differences

Outline limitations in method

Discuss how this may affect management/clinical treatment in future

Opportunities for further research?

External Validity:

Can the results be applied to your local population?

Do the results have an impact on my patients and my practice?

If you were doing the study, how would you make it better?

Useful Resources

Cochrane Handbook

http://www.slideshare.net/giustinid/wheres-the-best-evidence-2010

NHS Evidence

Clinical Evidence